Analyzers

Analyzer components

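character filter: preprocesses the raw text character by character before it reaches the tokenizer, for example by stripping HTML tags. An analyzer can contain zero or more character filters. When there are several, they are applied in the order they are configured.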

tokenizer: splits the text into tokens. An analyzer must contain exactly one tokenizer.

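token filter: processes the tokens produced by the tokenizer, for example by lowercasing them, removing stop words, or adding synonyms. An analyzer can contain zero or more token filters, applied in the order they are configured.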
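The three stages can be pictured as plain functions chained together. The following is a minimal Python sketch, assuming each component is a simple function. It is only a mental model, not how Elasticsearch (which runs Lucene analyzers in Java) implements analysis. The component functions `strip_bold_tags`, `whitespace`, `lowercase` and `stop`, and the tiny stop-word list, are hypothetical.

```python
from typing import Callable, List

CharFilter = Callable[[str], str]
Tokenizer = Callable[[str], List[str]]
TokenFilter = Callable[[List[str]], List[str]]


def analyze(text: str, char_filters: List[CharFilter], tokenizer: Tokenizer,
            token_filters: List[TokenFilter]) -> List[str]:
    """Mental model of an analyzer: char filters -> one tokenizer -> token filters."""
    for char_filter in char_filters:      # zero or more, in configured order
        text = char_filter(text)
    tokens = tokenizer(text)              # exactly one tokenizer
    for token_filter in token_filters:    # zero or more, in configured order
        tokens = token_filter(tokens)
    return tokens


# Hypothetical components, for illustration only.
def strip_bold_tags(text: str) -> str:
    return text.replace("<b>", "").replace("</b>", "")


def whitespace(text: str) -> List[str]:
    return text.split()


def lowercase(tokens: List[str]) -> List[str]:
    return [t.lower() for t in tokens]


STOP_WORDS = {"the", "a", "an"}  # hypothetical, lowercase stop list


def stop(tokens: List[str]) -> List[str]:
    return [t for t in tokens if t not in STOP_WORDS]


print(analyze("The <b>Quick</b> fox", [strip_bold_tags], whitespace, [lowercase, stop]))
# ['quick', 'fox']
```

Running the same two filters in the opposite order, `[stop, lowercase]`, keeps `the`. The stop list is lowercase and sees `The` before it has been lowercased. This is why the configured order of token filters matters.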

```
POST _analyze
{
  "analyzer": "whitespace",
  "text": "The quick brown fox."
}

POST _analyze
{
  "tokenizer": "standard",
  "filter": [ "lowercase", "asciifolding" ],
  "text": "Is this déja vu?"
}
```
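The two requests can be approximated in plain Python to get a rough idea of the tokens they return. This sketch rests on stated assumptions. `str.split` stands in for the whitespace tokenizer. A `\w+` regex is a crude stand-in for the standard tokenizer, which really follows Unicode UAX #29 word boundaries. The NFKD-based `ascii_fold` helper (a hypothetical name) only removes diacritics, whereas the real `asciifolding` filter covers many more characters.

```python
import re
import unicodedata


def whitespace_tokenizer(text):
    # Split on runs of whitespace only; punctuation stays attached to words.
    return text.split()


def standard_like_tokenizer(text):
    # Crude approximation of the standard tokenizer: runs of word characters.
    return re.findall(r"\w+", text)


def ascii_fold(token):
    # Decompose accented letters (é -> e + combining accent), then drop the accents.
    decomposed = unicodedata.normalize("NFKD", token)
    return "".join(c for c in decomposed if not unicodedata.combining(c))


print(whitespace_tokenizer("The quick brown fox."))
# ['The', 'quick', 'brown', 'fox.']

tokens = [ascii_fold(t.lower()) for t in standard_like_tokenizer("Is this déja vu?")]
print(tokens)
# ['is', 'this', 'deja', 'vu']
```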

Built-in character filters

HTML Strip Character Filter

html_strip: strips HTML tags from the text and decodes HTML entities, for example `&amp;` becomes `&`.

Mapping Character Filter

mapping: replaces each occurrence of a specified string in the text with a specified replacement string.

Pattern Replace Character Filter

pattern_replace: replaces text that matches a regular expression with a replacement string.

```
POST _analyze
{
  "tokenizer": "keyword",
  "char_filter": [ "html_strip" ],
  "text": "<p>I&apos;m so <b>happy</b>!</p>"
}
```

Configuring it in an index:

```
PUT my_index
{
  "settings": {
    "analysis": {
      "analyzer": {
        "my_analyzer": {
          "tokenizer": "keyword",
          "char_filter": [ "my_char_filter" ]
        }
      },
      "char_filter": {
        "my_char_filter": {
          "type": "html_strip",
          "escaped_tags": [ "b" ]
        }
      }
    }
  }
}
```

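escaped_tags lists the HTML tags that should be kept rather than stripped. If you need no such exceptions, there is no need to define a custom character filter here. Just reference html_strip directly in the char_filter list of my_analyzer above.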

```
POST my_index/_analyze
{
  "analyzer": "my_analyzer",
  "text": "<p>I&apos;m so <b>happy</b>!</p>"
}
```

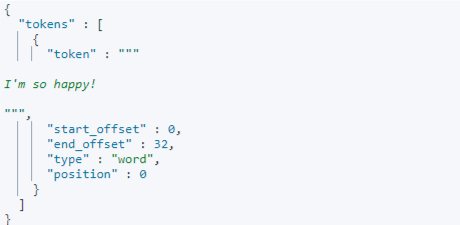

Result:

```
{
  "tokens" : [
    {
      "token" : "\nI'm so <b>happy</b>!\n",
      "start_offset" : 0,
      "end_offset" : 32,
      "type" : "word",
      "position" : 0
    }
  ]
}
```
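A rough Python emulation of html_strip makes the effect of escaped_tags easy to see. This is a sketch under assumptions, not the real filter. Tags are matched with a simple regex. Only a small hypothetical set of block-level tags (`BLOCK_TAGS`) is turned into newlines. Entities are decoded with `html.unescape`. The real html_strip filter handles far more HTML than this.

```python
import html
import re

# Assumption: a few block-level tags become line breaks, loosely mimicking html_strip.
BLOCK_TAGS = {"p", "div", "br", "li"}

# Matches opening or closing tags and captures the tag name.
TAG_RE = re.compile(r"</?([a-zA-Z][a-zA-Z0-9]*)[^>]*>")


def strip_html(text, escaped_tags=()):
    """Rough emulation of html_strip: drop tags, keep escaped_tags, decode entities."""
    def replace(match):
        name = match.group(1).lower()
        if name in escaped_tags:
            return match.group(0)   # tags listed in escaped_tags are kept verbatim
        if name in BLOCK_TAGS:
            return "\n"             # block-level tags become line breaks
        return ""                   # other (inline) tags are removed
    return html.unescape(TAG_RE.sub(replace, text))


text = "<p>I&apos;m so <b>happy</b>!</p>"
print(repr(strip_html(text)))                       # "\nI'm so happy!\n"
print(repr(strip_html(text, escaped_tags={"b"})))   # "\nI'm so <b>happy</b>!\n"
```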

Mapping character filter

Each of these examples creates my_index again. PUT fails when the index already exists, so delete the previous one first with DELETE my_index.

```
PUT my_index
{
  "settings": {
    "analysis": {
      "analyzer": {
        "my_analyzer": {
          "tokenizer": "keyword",
          "char_filter": [ "my_char_filter" ]
        }
      },
      "char_filter": {
        "my_char_filter": {
          "type": "mapping",
          "mappings": [
            "٠ => 0", "١ => 1", "٢ => 2", "٣ => 3", "٤ => 4",
            "٥ => 5", "٦ => 6", "٧ => 7", "٨ => 8", "٩ => 9"
          ]
        }
      }
    }
  }
}
```

Test:

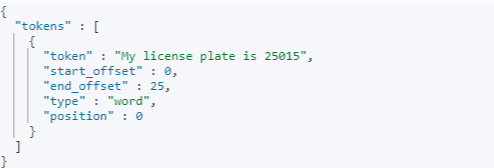

```
POST my_index/_analyze
{
  "analyzer": "my_analyzer",
  "text": "My license plate is ٢٥٠١٥"
}
```

Result:
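The mapping filter can be sketched in Python as a longest-match string replacement. The helper name `mapping_char_filter` is hypothetical. Its parser only understands the simple `"key => value"` rules used above, and trying longer keys first is an assumption modelled on the filter's greedy matching.

```python
import re


def mapping_char_filter(text, mappings):
    """Emulate the 'mapping' char filter: parse "key => value" rules, longest key wins."""
    table = {}
    for rule in mappings:
        key, value = (part.strip() for part in rule.split("=>", 1))
        table[key] = value
    # Longer keys are tried first so they win over shorter prefixes.
    keys = sorted(table, key=len, reverse=True)
    pattern = re.compile("|".join(re.escape(k) for k in keys))
    return pattern.sub(lambda m: table[m.group(0)], text)


# The same rules as the index settings: Arabic-Indic digits U+0660..U+0669 -> 0..9.
ARABIC_INDIC = [f"{chr(0x0660 + d)} => {d}" for d in range(10)]

print(mapping_char_filter("My license plate is ٢٥٠١٥", ARABIC_INDIC))
# My license plate is 25015
```

With the keyword tokenizer, the whole converted string is then emitted as a single token.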

Pattern Replace Character Filter

```
PUT my_index
{
  "settings": {
    "analysis": {
      "analyzer": {
        "my_analyzer": {
          "tokenizer": "standard",
          "char_filter": [ "my_char_filter" ]
        }
      },
      "char_filter": {
        "my_char_filter": {
          "type": "pattern_replace",
          "pattern": "(\\d+)-(?=\\d)",
          "replacement": "$1_"
        }
      }
    }
  }
}
```

Test:

```
POST my_index/_analyze
{
  "analyzer": "my_analyzer",
  "text": "My credit card is 123-456-789"
}
```

Result:

```
{
  "tokens" : [
    { "token" : "My", "start_offset" : 0, "end_offset" : 2, "type" : "<ALPHANUM>", "position" : 0 },
    { "token" : "credit", "start_offset" : 3, "end_offset" : 9, "type" : "<ALPHANUM>", "position" : 1 },
    { "token" : "card", "start_offset" : 10, "end_offset" : 14, "type" : "<ALPHANUM>", "position" : 2 },
    { "token" : "is", "start_offset" : 15, "end_offset" : 17, "type" : "<ALPHANUM>", "position" : 3 },
    { "token" : "123_456_789", "start_offset" : 18, "end_offset" : 29, "type" : "<NUM>", "position" : 4 }
  ]
}
```
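The same regular expression works in Python's `re` module, so you can check the rewrite before putting it into an index. The standard tokenizer splits numbers at hyphens but not at underscores, which is why replacing the hyphens keeps the card number as one token. Note that Python writes the back-reference as `\1` where the Java-style replacement above uses `$1`. The `\w+` split is only an approximation of the standard tokenizer, and `pattern_replace` is a hypothetical helper name.

```python
import re

# Same pattern as the index settings: digits followed by '-' and another digit.
PATTERN = re.compile(r"(\d+)-(?=\d)")


def pattern_replace(text: str) -> str:
    # \1 in Python corresponds to $1 in the Elasticsearch replacement string.
    return PATTERN.sub(r"\1_", text)


filtered = pattern_replace("My credit card is 123-456-789")
print(filtered)                      # My credit card is 123_456_789
print(re.findall(r"\w+", filtered))  # ['My', 'credit', 'card', 'is', '123_456_789']
```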

Built-in tokenizers

Standard Tokenizer

Letter Tokenizer

Lowercase Tokenizer

Whitespace Tokenizer

UAX URL Email Tokenizer

Classic Tokenizer

Thai Tokenizer

NGram Tokenizer

Edge NGram Tokenizer

Keyword Tokenizer

Pattern Tokenizer

Simple Pattern Tokenizer

Simple Pattern Split Tokenizer

Path Hierarchy Tokenizer

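```
POST _analyze
{
  "tokenizer": "standard",
  "text": "张三说的确实在理"
}

POST _analyze
{
  "tokenizer": "ik_smart",
  "text": "张三说的确实在理"
}
```

The standard tokenizer splits Chinese text into single characters. ik_smart, which is provided by the separately installed IK Analysis plugin, segments it into words.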
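A small Python sketch helps show why a dictionary-based Chinese tokenizer gives different results from a per-character split. It swaps in classic forward and backward maximum matching over a tiny hypothetical dictionary. This is not the algorithm the IK plugin actually uses, and both the dictionary and the two-character maximum word length are assumptions. `per_character` mimics what the standard tokenizer does with Chinese text.

```python
# Hypothetical toy dictionary; real segmenters ship much larger dictionaries.
DICTIONARY = {"张三", "说", "的", "的确", "确实", "实在", "在理"}
MAX_WORD_LEN = 2  # assumption: longest dictionary word considered


def per_character(text):
    """Like the standard tokenizer on Chinese: one token per ideograph."""
    return list(text)


def forward_max_match(text, dictionary=DICTIONARY, max_len=MAX_WORD_LEN):
    tokens, i = [], 0
    while i < len(text):
        # Try the longest candidate starting at i; fall back to one character.
        for size in range(min(max_len, len(text) - i), 0, -1):
            word = text[i:i + size]
            if size == 1 or word in dictionary:
                tokens.append(word)
                i += size
                break
    return tokens


def backward_max_match(text, dictionary=DICTIONARY, max_len=MAX_WORD_LEN):
    tokens, j = [], len(text)
    while j > 0:
        # Try the longest candidate ending at j; fall back to one character.
        for size in range(min(max_len, j), 0, -1):
            word = text[j - size:j]
            if size == 1 or word in dictionary:
                tokens.insert(0, word)
                j -= size
                break
    return tokens


sentence = "张三说的确实在理"
print(per_character(sentence))       # ['张', '三', '说', '的', '确', '实', '在', '理']
print(forward_max_match(sentence))   # ['张三', '说', '的确', '实在', '理']
print(backward_max_match(sentence))  # ['张三', '说', '的', '确实', '在理']
```

Forward matching greedily takes 的确 and 实在 and produces a wrong segmentation, while backward matching happens to get it right here. This sentence is a well-known example of segmentation ambiguity, which is why word-level Chinese tokenizers need more than simple greedy matching.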

Built-in token filters

Lowercase Token Filter: lowercase, converts tokens to lowercase

Stop Token Filter: stop, removes stop words

Synonym Token Filter: synonym, adds synonyms for tokens

Note: the Chinese IK Analyzer already has stop-word filtering built in.

Synonym Token Filter

```
PUT /test_index
{
  "settings": {
    "analysis": {
      "analyzer": {
        "my_ik_synonym": {
          "tokenizer": "ik_smart",
          "filter": [ "synonym" ]
        }
      },
      "filter": {
        "synonym": {
          "type": "synonym",
          "synonyms_path": "analysis/synonym.txt"
        }
      }
    }
  }
}
```

synonyms_path points to the synonym file and is given relative to the Elasticsearch config directory.

Examples:

```
POST test_index/_analyze
{
  "analyzer": "my_ik_synonym",
  "text": "张三说的确实在理"
}

POST test_index/_analyze
{
  "analyzer": "my_ik_synonym",
  "text": "我想买个电饭锅和一个电脑"
}
```
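The post does not show the contents of analysis/synonym.txt. Assuming it uses the Solr synonym format (comma-separated equivalent words, or explicit `a => b` mappings), the filter's behaviour can be sketched in Python. Expanded synonyms share the position of the original token. The two rules and the token list below are hypothetical. They are made up for illustration and are not taken from the real file or from actual ik_smart output.

```python
def parse_synonyms(lines):
    """Parse a small subset of the Solr synonym format."""
    rules = {}
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        if "=>" in line:
            # Explicit mapping: words on the left are replaced by the words on the right.
            left, right = line.split("=>", 1)
            targets = [w.strip() for w in right.split(",")]
            for word in left.split(","):
                rules[word.strip()] = targets
        else:
            # Equivalent synonyms: every word expands to the whole group.
            group = [w.strip() for w in line.split(",")]
            for word in group:
                rules[word] = group
    return rules


def synonym_filter(tokens, rules):
    """Return (position, token) pairs; synonyms keep the original token's position."""
    out = []
    for pos, tok in enumerate(tokens):
        for replacement in rules.get(tok, [tok]):
            out.append((pos, replacement))
    return out


# Hypothetical synonym.txt contents and a hypothetical tokenization.
rules = parse_synonyms(["电脑, 计算机", "电饭锅 => 电饭煲"])
tokens = ["我", "想", "买", "个", "电饭锅", "和", "一个", "电脑"]
print(synonym_filter(tokens, rules))
# [(0, '我'), (1, '想'), (2, '买'), (3, '个'), (4, '电饭煲'), (5, '和'), (6, '一个'), (7, '电脑'), (7, '计算机')]
```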

Defining a default analyzer for an index

```
PUT /my_index
{
  "settings": {
    "analysis": {
      "analyzer": {
        "default": {
          "tokenizer": "ik_smart",
          "filter": [ "synonym" ]
        }
      },
      "filter": {
        "synonym": {
          "type": "synonym",
          "synonyms_path": "analysis/synonym.txt"
        }
      }
    }
  },
  "mappings": {
    "properties": {
      "title": { "type": "text" }
    }
  }
}
```

Because the title field specifies no analyzer, it is analyzed with this default analyzer.

Order in which analyzers are chosen

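At index time, Elasticsearch uses the analyzer specified in the field's mapping. If the field specifies none, it uses the analyzer named default in the index settings. If the index settings define no default analyzer, it falls back to the standard analyzer.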

At search time, Elasticsearch chooses the analyzer in the following order:

1. The analyzer defined in the full-text query.
2. The search_analyzer defined in the field mapping.
3. The analyzer defined in the field mapping.
4. An analyzer named default_search in the index settings.
5. An analyzer named default in the index settings.
6. The standard analyzer.
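The two lookup orders above can be written as a pair of small Python functions. The function and parameter names are hypothetical. A `None` argument means "not configured", and `index_analyzers` stands for the set of analyzer names defined in the index settings.

```python
from typing import Collection, Optional


def index_time_analyzer(field_analyzer: Optional[str] = None,
                        index_analyzers: Collection[str] = ()) -> str:
    """Analyzer used when indexing a text field."""
    if field_analyzer:
        return field_analyzer
    if "default" in index_analyzers:
        return "default"
    return "standard"


def search_time_analyzer(query_analyzer: Optional[str] = None,
                         field_search_analyzer: Optional[str] = None,
                         field_analyzer: Optional[str] = None,
                         index_analyzers: Collection[str] = ()) -> str:
    """Analyzer used for a full-text query, following the search-time list."""
    for name in (query_analyzer, field_search_analyzer, field_analyzer):
        if name:
            return name
    for name in ("default_search", "default"):
        if name in index_analyzers:
            return name
    return "standard"


print(search_time_analyzer(field_analyzer="ik_smart", index_analyzers={"default"}))  # ik_smart
print(search_time_analyzer(index_analyzers={"default", "default_search"}))  # default_search
print(index_time_analyzer())  # standard
```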

shingle token filter

```
PUT test12
{
  "settings": {
    "number_of_shards": 1,
    "analysis": {
      "analyzer": {
        "trigram": {
          "type": "custom",
          "tokenizer": "standard",
          "filter": [ "shingle" ]
        },
        "reverse": {
          "type": "custom",
          "tokenizer": "standard",
          "filter": [ "reverse" ]
        }
      },
      "filter": {
        "shingle": {
          "type": "shingle",
          "min_shingle_size": 2,
          "max_shingle_size": 3
        }
      }
    }
  },
  "mappings": {
    "properties": {
      "title": {
        "type": "text",
        "fields": {
          "trigram": { "type": "text", "analyzer": "trigram" },
          "reverse": { "type": "text", "analyzer": "reverse" }
        }
      }
    }
  }
}

POST test12/_doc
{ "title": "noble warriors" }

POST test12/_doc
{ "title": "nobel prize" }

POST test12/_doc
{ "title": "noble hello prize" }

POST test12/_doc
{ "title": "nobel prize hello" }

POST test12/_doc
{ "title": "noebl prize" }

POST test12/_analyze
{
  "field": "title.trigram",
  "text": "nobel prize"
}
```
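The following Python sketch shows how a shingle filter with min_shingle_size 2 and max_shingle_size 3 combines neighboring tokens. It assumes the filter's defaults of also keeping the original single-word tokens (output_unigrams) and joining words with a space. The `shingles` helper is hypothetical and ignores details such as filler tokens for removed stop words.

```python
def shingles(tokens, min_size=2, max_size=3, output_unigrams=True):
    """Return (position, token) pairs: unigrams plus word n-grams of min..max size."""
    out = []
    for start in range(len(tokens)):
        if output_unigrams:
            out.append((start, tokens[start]))
        for size in range(min_size, max_size + 1):
            if start + size <= len(tokens):
                # A shingle starts at the position of its first word.
                out.append((start, " ".join(tokens[start:start + size])))
    return out


print(shingles(["nobel", "prize"]))
# [(0, 'nobel'), (0, 'nobel prize'), (1, 'prize')]
print(shingles(["noble", "hello", "prize"]))
# [(0, 'noble'), (0, 'noble hello'), (0, 'noble hello prize'), (1, 'hello'), (1, 'hello prize'), (2, 'prize')]
```

With only two words in "nobel prize", no three-word shingle can be formed, so max_shingle_size 3 only matters for longer titles such as "noble hello prize".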

浙公网安备 33010602011771号
