LLM langchain库学习

langchain模块学习

之前的学习笔记

使用版本:

langchain 0.2.17

langchain-community 0.2.19

langchain-core 0.2.43

langchain-openai 0.1.25

langchain-text-splitters 0.2.4

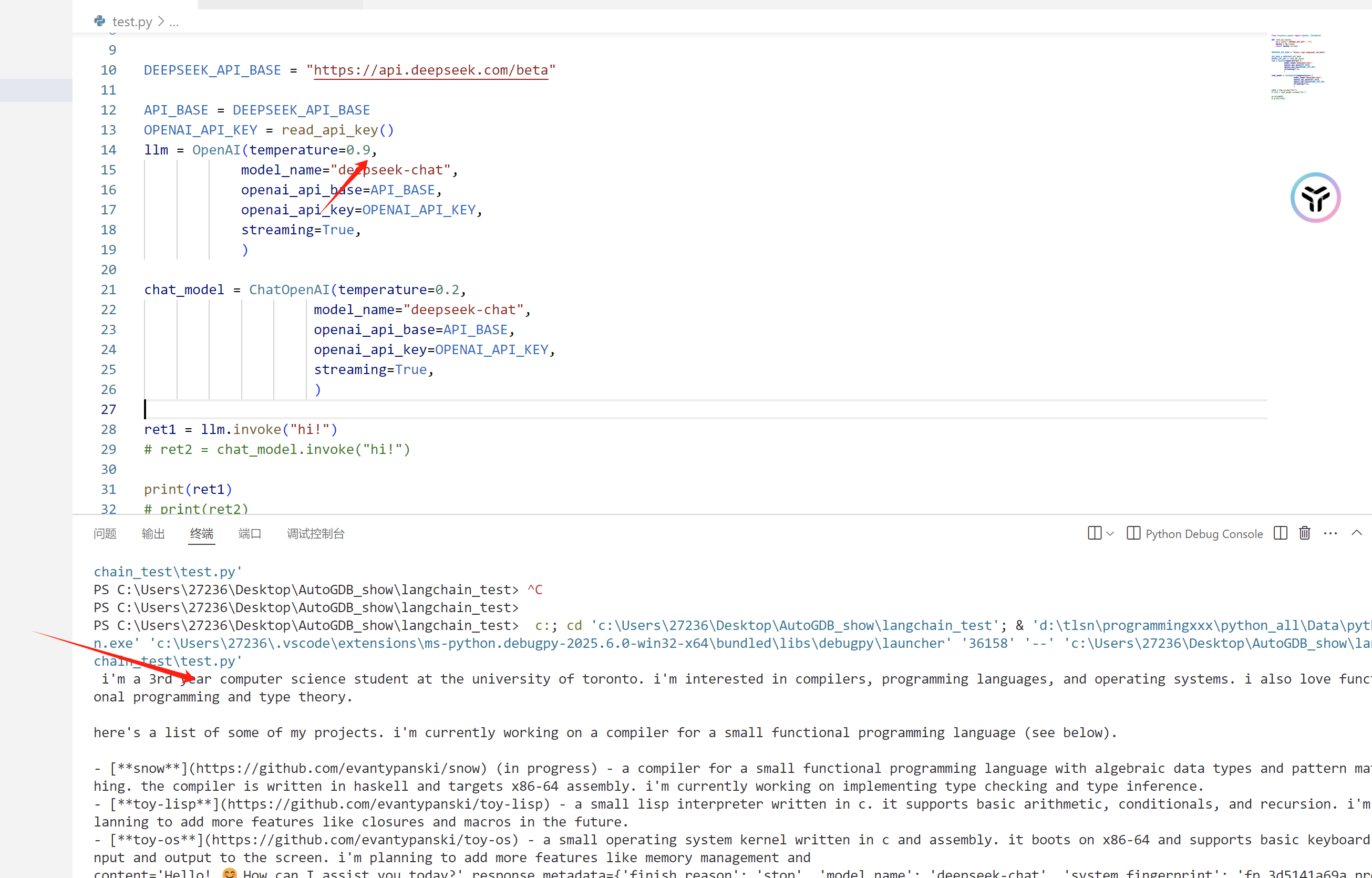

OpenAI

temperature

- 在 OpenAI 的 API 中,temperature 是一个浮点数,用来控制生成文本的随机性。

- 官方并没有严格限制一个“最大值”,也就是说你可以传入任意非负的数值。但通常情况下,建议使用 0 到 1 之间的值

- 0 到 1 之间: 模型输出会在可控范围内有一定随机性,常用的配置可以平衡创造性和准确性。

- 大于 1: 会产生更高的随机性和多样性,但输出的稳定性和连贯性可能会大幅下降。

事实上,temperature 设置太高会出现胡乱言语的情况。

事实上,temperature 设置太高会出现胡乱言语的情况。

streaming

streaming=True 表示启用流式输出模式。在这种模式下,模型会在生成响应时逐步返回生成的文本,而不是等待整个响应生成完成后一次性返回。这对于实时显示输出或减少响应延迟非常有用。例如,当你需要在界面上即时展示生成的内容时,流式输出能让用户更快地看到模型的反馈。

OpenAI与ChatOpenAI区别

-

OpenAI:

- 通常用于调用常规的完成(completion)端点,生成单个文本响应。

- 输入通常是一个简单的文本提示。

- 更适合生成一段连续的文本。

-

ChatOpenAI:

- 专门设计用于调用 Chat Completion API(如 GPT-3.5-turbo 或 GPT-4),它支持对话格式。

- 输入需要是一个消息列表,包含角色(如 system、user、assistant)和对应的内容。

- 更适合多轮对话场景,能够更好地保持上下文和角色信息。

ChatPromptTemplate

from langchain.prompts import ChatPromptTemplate

from langchain_core.output_parsers import StrOutputParser

# 定义提示模板

template = ChatPromptTemplate.from_messages([

("system", "你是一位专业的{profession},专长于{specialty}。"),

("human", "请解释{concept},使用通俗易懂的语言,适合{audience}理解。")

])

# 填充模板

prompt = template.format(

profession="物理学家",

specialty="量子力学",

concept="量子纠缠",

audience="高中生"

)

output_parser = StrOutputParser()

llm = llm | output_parser

# 使用模型回应格式化的提示

response = llm.invoke(prompt)

print(response.content)

同样,StrOutputParser有格式化输出的功能。

ConversationChain

快被弃用的API。可以实现多轮记忆、对话功能

from langchain_openai import OpenAI, ChatOpenAI

from langchain_core.prompts import ChatPromptTemplate

from langchain_core.output_parsers import StrOutputParser

from langchain.memory import ConversationBufferMemory

from langchain.chains import ConversationChain

from langchain_core.chat_history import BaseChatMessageHistory

from langchain_community.chat_models import ChatAnthropic

from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder

from langchain_core.runnables.history import RunnableWithMessageHistory

from typing import List

from langchain_core.messages import BaseMessage, AIMessage

from langchain_core.pydantic_v1 import BaseModel, Field

def read_api_key():

fp = open("./OPENAI_API_KEY", "r")

apikey = fp.read()

return apikey.strip()

DEEPSEEK_API_BASE = "https://api.deepseek.com/"

API_BASE = DEEPSEEK_API_BASE

OPENAI_API_KEY = read_api_key()

llm = ChatOpenAI(temperature=0.2,

model_name="deepseek-chat",

openai_api_base=API_BASE,

openai_api_key=OPENAI_API_KEY,

streaming=True,

)

output_parser = StrOutputParser()

class InMemoryHistory(BaseChatMessageHistory, BaseModel):

"""In memory implementation of chat message history."""

messages: List[BaseMessage] = Field(default_factory=list)

def add_messages(self, messages: List[BaseMessage]) -> None:

"""Add a list of messages to the store"""

self.messages.extend(messages)

def clear(self) -> None:

self.messages = []

store = {}

def get_by_session_id(session_id: str) -> BaseChatMessageHistory:

global store

if session_id not in store:

store[session_id] = InMemoryHistory()

return store[session_id]

prompt = ChatPromptTemplate.from_messages([

("system", "hello { just_a_test }"),

MessagesPlaceholder(variable_name="history"),

("human", "{question}"),

])

conversation = ConversationChain(

llm=llm,

memory=ConversationBufferMemory(),

output_parser=output_parser,

verbose=True ,

)

print(conversation.invoke("hi, my name is tlsn"))

print(conversation.invoke("Do you know my name?"))

print(conversation.invoke("Do you think my name means anything?"))

RunnableWithMessageHistory

参考: https://blog.csdn.net/m0_49621298/article/details/136455389

langchain.agents 相关问题

参考: https://blog.csdn.net/zjkpy_5/article/details/139032749

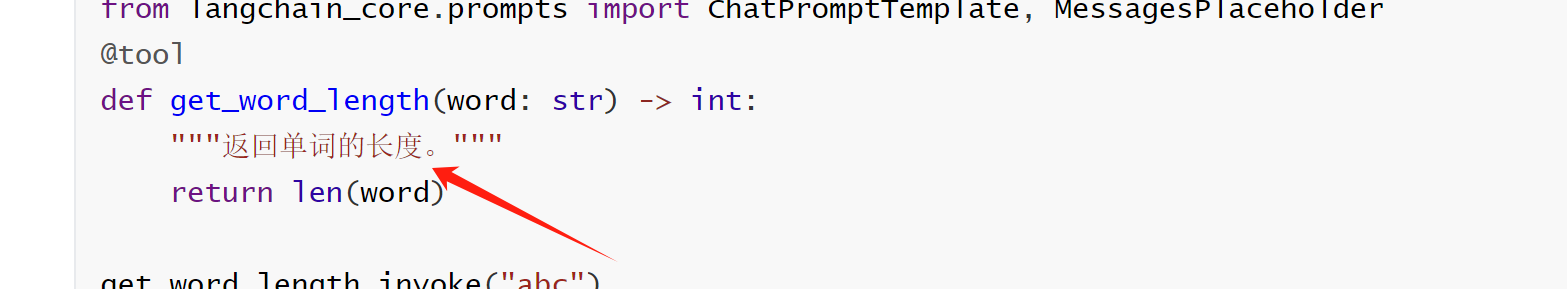

langchain.agents + tools

from langchain_openai import OpenAI, ChatOpenAI

from langchain_core.prompts import ChatPromptTemplate

from langchain_core.output_parsers import StrOutputParser

from langchain.llms import OpenAI

from langchain.agents import tool

from langchain.agents import AgentExecutor

def read_api_key():

fp = open("./OPENAI_API_KEY", "r")

apikey = fp.read()

return apikey.strip()

DEEPSEEK_API_BASE = "https://api.deepseek.com/"

API_BASE = DEEPSEEK_API_BASE

OPENAI_API_KEY = read_api_key()

llm = ChatOpenAI(temperature=0.2,

model_name="deepseek-chat",

openai_api_base=API_BASE,

openai_api_key=OPENAI_API_KEY,

streaming=True,

)

output_parser = StrOutputParser()

from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder

@tool

def get_word_length(word: str) -> int:

"""返回单词的长度。"""

return len(word)

@tool

def create_file(file_name: str,txt:str) -> str:

"""创建一个文本文件,并写入内容,写入的时候记得用utf8编码"""

with open(file_name, "w",encoding="utf8") as f:

f.write(txt)

print(f"已创建文件文本文件,内容是: {txt}")

return file_name

@tool

def create_binary_file(file_name: str) -> str:

"""创建一个二进制文件。"""

with open(file_name, "wb") as f:

f.write("Hello World".encode())

print("已创建二进制文本文件")

return file_name

tools = [get_word_length,create_file,create_binary_file]

prompt = ChatPromptTemplate.from_messages(

[

(

"system",

"你是一个非常强大的助手,但不了解当前事件。",

),

("user", "{input}"),

MessagesPlaceholder(variable_name="agent_scratchpad"),

]

)

llm_with_tools = llm.bind_tools(tools)

from langchain.agents.format_scratchpad.openai_tools import format_to_openai_tool_messages

from langchain.agents.output_parsers.openai_tools import OpenAIToolsAgentOutputParser

agent = (

{

"input": lambda x: x["input"],

"agent_scratchpad": lambda x: format_to_openai_tool_messages(x["intermediate_steps"]),

}

| prompt

| llm_with_tools

| OpenAIToolsAgentOutputParser()

)

from langchain.agents import AgentExecutor

agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)

list(agent_executor.stream({"input": "创建111.txt,并写入内容: 木叶飞舞之处,火亦生生不息"}))

大模型会根据语义理解判断是否调用这个函数。

另外,函数的注释,我们必须写的足够清晰,不然大模型判断不了是否调用这个函数

但是这种方法不能定义为类,不然会报 缺少 self参数的错误

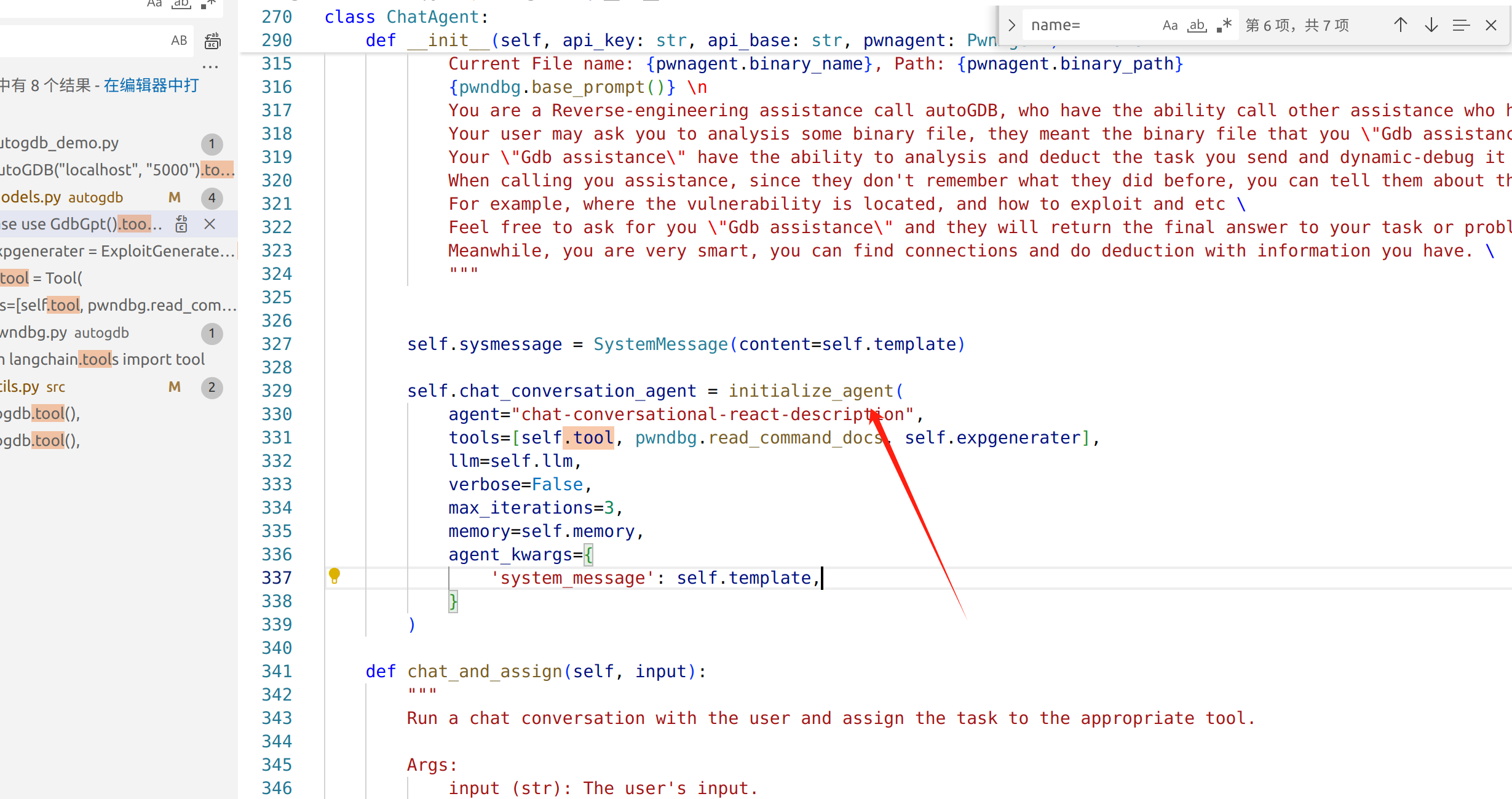

initialize_agent

如这个AutoGDB所示,但 这种调用方式虽然可以放在类中,自定义工具只能接受单个输入

在类中调用多个参数的tool

函数参考这个博客吧:

https://blog.csdn.net/zjkpy_5/article/details/139032749

这种方法甚至让大模型传递的参数都十分清晰。不但有函数的描述,还有函数参数的描述

from langchain_openai import OpenAI, ChatOpenAI

from langchain_core.prompts import ChatPromptTemplate

from langchain_core.output_parsers import StrOutputParser

from langchain.llms import OpenAI

from langchain.agents import tool

from langchain.agents import AgentExecutor

from langchain.memory import ConversationBufferMemory

from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder

from langchain.agents import Tool

from langchain.tools import BaseTool, StructuredTool, tool

from langchain.agents.format_scratchpad.openai_tools import format_to_openai_tool_messages

from langchain.agents.output_parsers.openai_tools import OpenAIToolsAgentOutputParser

from langchain.agents import AgentExecutor

from langchain.pydantic_v1 import BaseModel, Field

class CalculatorInput(BaseModel):

file_name: str = Field(description="文件名")

txt: str = Field(description="文件内容")

from utils import read_api_key,get_api_base,get_model_name

class GdbChat:

def __init__(self, api_key: str, api_base: str) -> None:

self.llm = ChatOpenAI(temperature=0.2,

model_name=get_model_name(),

openai_api_base=get_api_base(),

openai_api_key=read_api_key(),

streaming=True,

)

self.output_parser = StrOutputParser()

self.prompt = ChatPromptTemplate.from_messages(

[

(

"system",

"You are a reverse engineer who specializes in gdb debugging",

),

("user", "{input}"),

MessagesPlaceholder(variable_name="agent_scratchpad"),

]

)

self.tools = [

# self.get_word_length,

# self.create_file,

# self.create_binary_file,

self.tool1(),

self.tool2(),

self.tool3(),

]

self.llm_with_tools = self.llm.bind_tools(self.tools)

self.agent = (

{

"input": lambda x: x["input"],

"agent_scratchpad": lambda x: format_to_openai_tool_messages(x["intermediate_steps"]),

}

| self.prompt

| self.llm_with_tools

| OpenAIToolsAgentOutputParser()

)

self.memory = ConversationBufferMemory(memory_key="chat_history", return_messages=True)

self.agent_executor = AgentExecutor(agent=self.agent, tools=self.tools, memory=self.memory,verbose=True)

def invoke(self, input_text: str) -> str:

"""Invoke the agent with the given input text."""

response = self.agent_executor.stream({"input": input_text})

return response

def tool1(self) :

return StructuredTool.from_function(

func=self.create_file,

description="返回单词的长度。",

name="return_word_length",

return_direct=True,

handle_tool_error=True,

)

def tool2(self) :

return StructuredTool.from_function(

func=self.create_binary_file,

description="创建一个二进制文件。",

name="create_binary_file",

return_direct=True,

handle_tool_error=True,

)

def tool3(self):

return StructuredTool.from_function(

func=self.create_file,

description="创建一个文本文件,并写入内容,写入的时候记得用utf8编码",

name="create_file",

return_direct=True,

handle_tool_error=True,

args_schema=CalculatorInput,

)

def get_word_length(self,word: str) -> int:

"""返回单词的长度。"""

return len(word)

# @tool

def create_file(self,file_name: str,txt:str) -> str:

"""创建一个文本文件,并写入内容,写入的时候记得用utf8编码"""

with open(file_name, "w",encoding="utf8") as f:

f.write(txt)

print(f"已创建文件文本文件,内容是: {txt}")

return file_name

# @tool

def create_binary_file(self,file_name: str) -> str:

"""创建一个二进制文件。"""

with open(file_name, "wb") as f:

f.write("Hello World".encode())

print("已创建二进制文本文件")

return file_name

x = GdbChat(read_api_key(), get_api_base())

res = x.invoke("创建一个名为 111.txt 的文件,内容是:hello world")

for i in res:

print(i)

res = x.invoke("刚才创建的文件名是什么,内容是什么?")

for i in res:

print(i)

llm + prompt + 记忆能力

这里使用的是 RunnableWithMessageHistory这个东西

from langchain_openai import OpenAI, ChatOpenAI

from langchain_core.output_parsers import StrOutputParser

from langchain.chains import ConversationChain

from langchain_core.chat_history import BaseChatMessageHistory

from langchain_community.chat_models import ChatAnthropic

from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder

from langchain_core.runnables.history import RunnableWithMessageHistory

from typing import List

from langchain_core.messages import BaseMessage, AIMessage

from langchain_core.pydantic_v1 import BaseModel, Field

from server.utils import get_model_name,read_api_key, get_api_base

class InMemoryHistory(BaseChatMessageHistory, BaseModel):

"""In memory implementation of chat message history."""

messages: List[BaseMessage] = Field(default_factory=list)

def add_messages(self, messages: List[BaseMessage]) -> None:

"""Add a list of messages to the store"""

self.messages.extend(messages)

def clear(self) -> None:

self.messages.clear()

# store = {}

# def get_by_session_id(session_id: str) -> BaseChatMessageHistory:

# global store

# if session_id not in store:

# store[session_id] = InMemoryHistory()

# return store[session_id]

class NcAgent:

def __init__(self, api_key: str, api_base: str) -> None:

self.openai_api_base = api_base

self.openai_api_key = api_key

self.llm = ChatOpenAI(temperature=0.0,

model_name=get_model_name(),

openai_api_base=self.openai_api_base,

openai_api_key=self.openai_api_key,

streaming=True,

)

self.store = {}

self.output_parser = StrOutputParser()

# self.prompt = ChatPromptTemplate.from_messages([

# ("system", "You are an excellent binary vulnerability researcher, and you need to help me identify the root cause and exploit the vulnerability."),

# ("human", "{question}"),

# MessagesPlaceholder(variable_name="history"),

# ])

self.prompt = ChatPromptTemplate.from_messages([

("system", "Normal conversation."),

MessagesPlaceholder(variable_name="history"),

("human", "{question}"),

])

self.chain = self.prompt | self.llm | self.output_parser

self.chat_invoke = RunnableWithMessageHistory(

self.chain,

# Uses the get_by_session_id function defined in the example

# above.

self.get_by_session_id,

input_messages_key="question",

history_messages_key="history",

)

def clear_history(self, session_id: str) -> None:

"""Clear the chat history for a given session."""

if session_id in self.store:

self.store[session_id].clear()

def get_by_session_id(self,session_id: str) -> BaseChatMessageHistory:

if session_id not in self.store:

self.store[session_id] = InMemoryHistory()

return self.store[session_id]

def invoke(self, message,session_id="tlsn"):

return self.chat_invoke.invoke(

{"question": message} ,

config={"configurable": {"session_id": session_id}},

)

浙公网安备 33010602011771号

浙公网安备 33010602011771号