Sqoop简介和实战 (三) Kettle&Sqoop数据同步框架案例

说明:

etl数据库的control_table表中记录着需要同步到Hive的表的表名

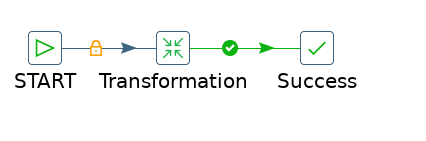

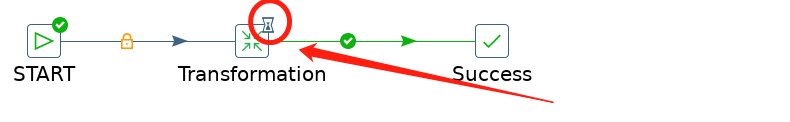

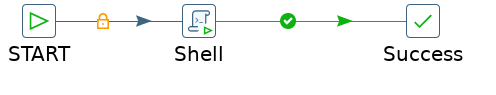

总体用 Kettle的转换-Transformation 来实现数据的同步

转换包括两个分支: 建Hive表(创建和task_name同名的表)

第一阶段(Shell)Sqoop语句的任务--创建表:

将test数据库中需要同步的TABLE_NAME

同步到Hive的DB_NAME库的ODS_TABLE_NAME表中

这3个变量的值从前一步(Table input)的SQL语句中获取

第二阶段(Shell)Sqoop语句的任务--插入数据:

将test数据库中需要同步的TABLE_NAME中的数据

同步到Hive中,目标用路径的方式书写, /user/hive/warehouse/下

${DB_NAME}.db为数据库

${ODS_TABLE_NAME}为表名

这3个变量的值依然是从同分支的前一步(Table input2)的SQL语句中获取

前期准备

--test数据库下:

create table product ( id bigint, product_id bigint, product_name varchar(50), created_at datetime, updated_at datetime ) ; insert into product select 1,1,'name1',now(),now(); commit;

--etl数据库下创建表control_table来存储等待存库的表名:

create table control_table (id int primary key auto_increment, task_name varchar(255), run_flag int, created_at datetime, updated_at datetime ) ;

批量建表:

create table test.product1 as select * from product ; create table test.product2 as select * from product ; create table test.product3 as select * from product ; create table test.product4 as select * from product ; create table test.product5 as select * from product ; create table test.product6 as select * from product ; create table test.product7 as select * from product ; create table test.product8 as select * from product ; create table test.product9 as select * from product ; create table test.product10 as select * from product ; create table test.product11 as select * from product ; create table test.product12 as select * from product ; create table test.product13 as select * from product ; create table test.product14 as select * from product ; create table test.product15 as select * from product ; create table test.product16 as select * from product ; create table test.product17 as select * from product ; create table test.product18 as select * from product ; create table test.product19 as select * from product ; create table test.product20 as select * from product ; create table test.product21 as select * from product ; create table test.product22 as select * from product ; create table test.product23 as select * from product ; create table test.product24 as select * from product ; create table test.product25 as select * from product ; create table test.product26 as select * from product ; create table test.product27 as select * from product ; create table test.product28 as select * from product ; create table test.product29 as select * from product ; create table test.product30 as select * from product ; create table test.product31 as select * from product ; create table test.product32 as select * from product ; create table test.product33 as select * from product ; create table test.product34 as select * from product ; create table test.product35 as select * from product ; create table test.product36 as select * from product ; create table test.product37 as select * from product ; create table test.product38 as select * from product ; create table test.product39 as select * from product ; create table test.product40 as select * from product ; create table test.product41 as select * from product ; create table test.product42 as select * from product ; create table test.product43 as select * from product ; create table test.product44 as select * from product ; create table test.product45 as select * from product ; create table test.product46 as select * from product ; create table test.product47 as select * from product ; create table test.product48 as select * from product ; create table test.product49 as select * from product ; create table test.product50 as select * from product ;

insert into control_table(task_name,run_flag,created_at,updated_at) select 'product1',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product2',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product3',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product4',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product5',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product6',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product7',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product8',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product9',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product10',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product11',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product12',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product13',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product14',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product15',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product16',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product17',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product18',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product19',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product20',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product21',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product22',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product23',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product24',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product25',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product26',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product27',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product28',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product29',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product30',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product31',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product32',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product33',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product34',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product35',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product36',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product37',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product38',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product39',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product40',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product41',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product42',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product43',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product44',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product45',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product46',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product47',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product48',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product49',0,now(),now() from dual; commit; insert into control_table(task_name,run_flag,created_at,updated_at) select 'product50',0,now(),now() from dual; commit;

update control_table set run_flag = 1;

run_flag代表表是否被禁掉,如果值为 0 则被禁掉,为 1 则不是.

一、Kettle整体流程图

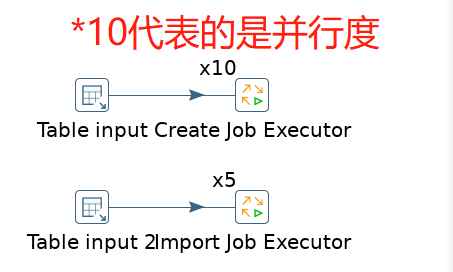

二、转换的详情

并行度为10就是 并行执行10个任务-job

1、第一条线路--创建表

Table input

连接的是etl数据库,JNDI的配置文件jdbc.properties (Linux中的/opt/module/Kettle7.1/data-integration/simple-jndi) 加上:

MYSQL_ETL/type=javax.sql.DataSource

MYSQL_ETL/driver=org.gjt.mm.mysql.Driver

MYSQL_ETL/url=jdbc:mysql://192.168.212.50:3306/etl

MYSQL_ETL/user=root

MYSQL_ETL/password=000000

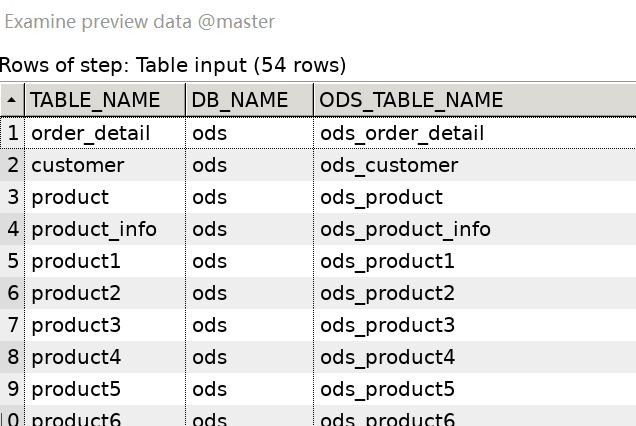

SQL语句 :

SELECT task_name AS TABLE_NAME, 'ods' AS DB_NAME, CONCAT( 'ods_', task_name) AS ODS_TABLE_NAME FROM control_table WHERE run_flag = 1 and 1=1 ;

concat() 返回结果为连接参数产生的字符串。如有任何一个参数为NULL ,则返回值为 NULL。

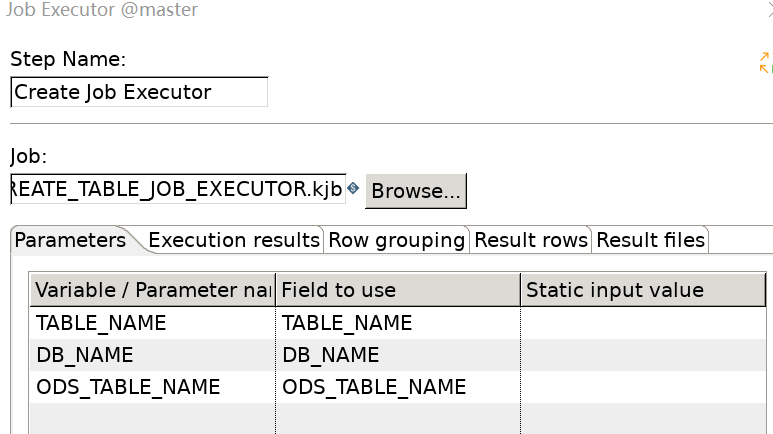

Create Job Executor

2、第二条线路--导入数据

注意,转换中如果没有start的话,多条线路是会并行执行的,

需要先创建表,走完第一个线路才能够走第二个线路,因此需要在不执行的SQL语句中修改限制条件为 1=2

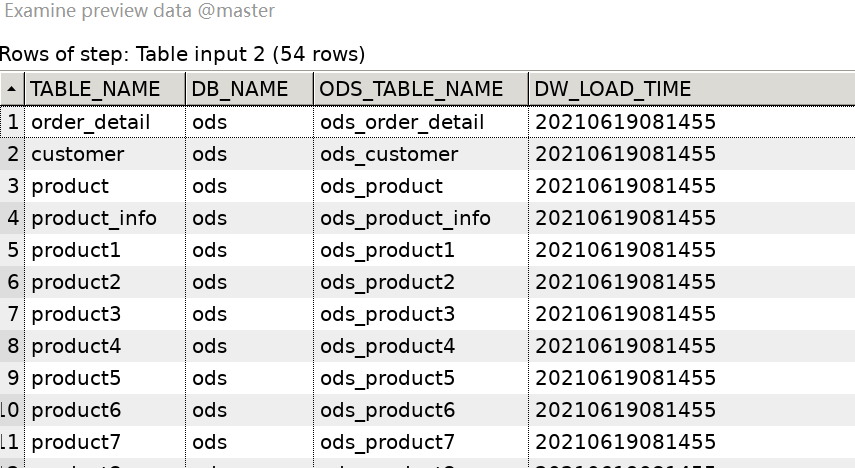

Table input2

同上,连接的是etl数据库, SQL语句为:

SELECT task_name AS TABLE_NAME, 'ods' AS DB_NAME, CONCAT( 'ods_', task_name) AS ODS_TABLE_NAME, DATE_FORMAT(now(),'%Y%m%d%H%i%s') AS DW_LOAD_TIME FROM control_table WHERE run_flag = 1 and 1=1 ;

预览下得到的数据:

Import Job Executor

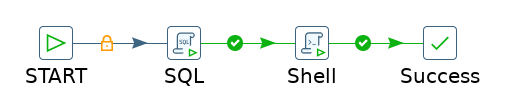

三、第一条线路--Create Job Executor

SQL语句--如果表在Hive中存在就删除掉:

DROP TABLE IF EXISTS ${DB_NAME}.${ODS_TABLE_NAME};

根据前面的Table input的SQL语句,

DB_NAME 变量会变成:ods

ODS_TABLE_NAME 变量会变成:CONCAT( 'ods_', task_name)

Shell语句(Sqoop):

sqoop create-hive-table \ -Dmapreduce.job.name=sqoop_create_table \ -Dmapreduce.job.queuename=root.users.root \ --connect jdbc:mysql://master:3306/test \ --username root \ --password-file /user/root/vingo/sqoop.pwd \ --table '${TABLE_NAME}' \ --hive-database '${DB_NAME}' \ --hive-table '${ODS_TABLE_NAME}' \ --hive-overwrite \ --fields-terminated-by ',' \ --lines-terminated-by '\n' \ --hive-drop-import-delims

hive-drop-import-delims: 删除数据中的分隔符,防止错行

将 test 库中的TABLE_NAME表导入到Hive的 DB_NAME 库的 ODS_TABLE_NAME 表中.

这个过程会有漏斗的标识,证明还在运行,需要再等待一会儿.

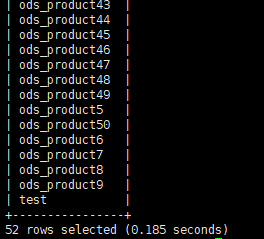

查看Hive中创建的表:

四、第二条线路--Import Job Executor

Shell语句(Sqoop)--增量更新:

sqoop import \ -Dmapreduce.job.name=sqoop_import_${TABLE_NAME}_${DW_LOAD_TIME} \ -Dmapreduce.job.queuename=root.users.root \ --connect jdbc:mysql://master:3306/test \ --username root \ --password-file /user/root/vingo/sqoop.pwd \ --table '${TABLE_NAME}' \ --check-column 'updated_at' \ --incremental lastmodified \ --last-value '2018-01-14 16:40:53.0' \ --target-dir '/user/hive/warehouse/${DB_NAME}.db/${ODS_TABLE_NAME}' \ --merge-key 'id' \ -m 1

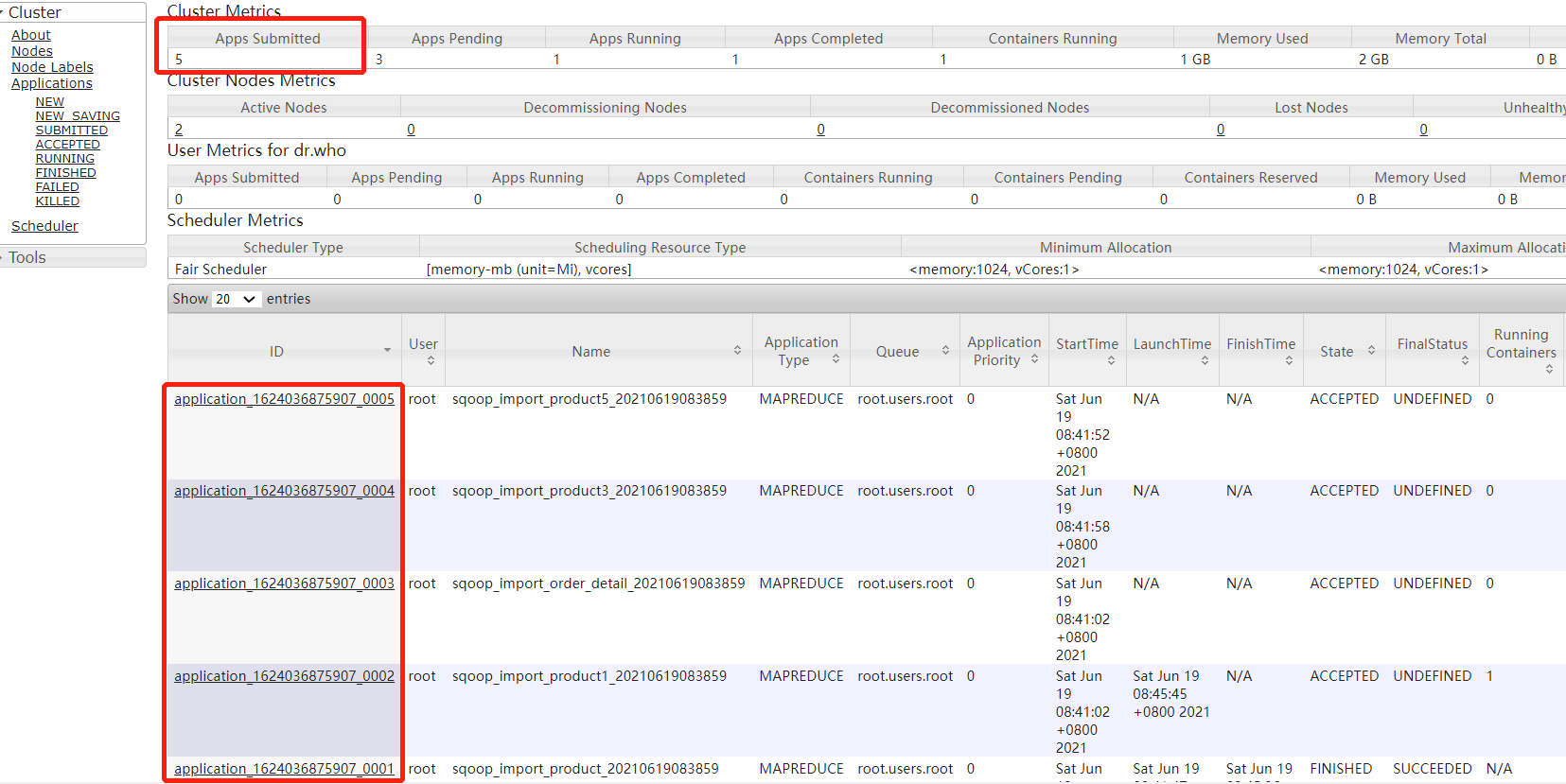

查看Yarn中的Applications:

一共可以有5个Job并行执行

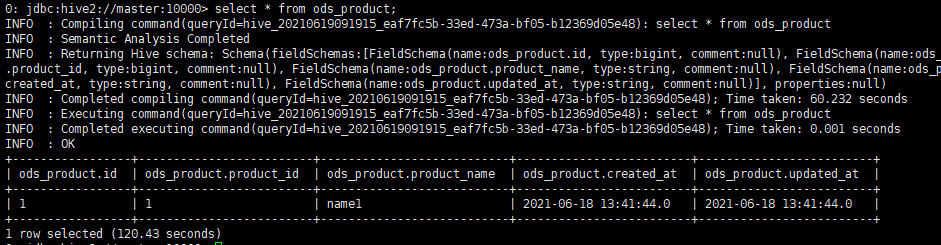

查看引入到表中的数据:

浙公网安备 33010602011771号

浙公网安备 33010602011771号