Movie Recommendation System - Project Setup with Maven (2): Adding Dependencies

1) Add the shared dependencies to the parent project

|

<!-- Declare the shared property settings -->
<properties>
  <log4j.version>1.2.17</log4j.version>
  <slf4j.version>1.7.22</slf4j.version>
  <scala.version>2.11.8</scala.version>
</properties>

<!-- Declare and import the dependencies shared by all sub-projects -->
<dependencies>
  <!-- Logging framework for all sub-projects -->
  <dependency>
    <groupId>org.slf4j</groupId>
    <artifactId>jcl-over-slf4j</artifactId>
    <version>${slf4j.version}</version>
  </dependency>
  <dependency>
    <groupId>org.slf4j</groupId>
    <artifactId>slf4j-api</artifactId>
    <version>${slf4j.version}</version>
  </dependency>
  <dependency>
    <groupId>org.slf4j</groupId>
    <artifactId>slf4j-log4j12</artifactId>
    <version>${slf4j.version}</version>
  </dependency>
  <!-- The concrete logging implementation -->
  <dependency>
    <groupId>log4j</groupId>
    <artifactId>log4j</artifactId>
    <version>${log4j.version}</version>
  </dependency>
  <!-- Logging End -->
</dependencies>

<!-- Only declare (do not import) dependencies shared among sub-projects; a sub-project that needs one must declare it itself and inherits the version from here -->
<dependencyManagement>
  <dependencies>
    <dependency>
      <groupId>org.scala-lang</groupId>
      <artifactId>scala-library</artifactId>
      <version>${scala.version}</version>
    </dependency>
  </dependencies>
</dependencyManagement>

<!-- Build settings; these rarely need to change -->
<build>
  <!-- Declare and apply the plugins shared by all sub-projects -->
  <plugins>
    <plugin>
      <groupId>org.apache.maven.plugins</groupId>
      <artifactId>maven-compiler-plugin</artifactId>
      <version>3.6.1</version>
      <!-- Compile everything at the JDK 1.8 level -->
      <configuration>
        <source>1.8</source>
        <target>1.8</target>
      </configuration>
    </plugin>
  </plugins>

  <!-- Only declare (do not apply) plugins shared among sub-projects; a sub-project that needs one must declare it itself -->
  <pluginManagement>
    <plugins>
      <!-- Compiles Scala code into class files -->
      <plugin>
        <groupId>net.alchim31.maven</groupId>
        <artifactId>scala-maven-plugin</artifactId>
        <version>3.2.2</version>
        <executions>
          <execution>
            <!-- Bound to Maven's compile phases (compile and test-compile) -->
            <goals>
              <goal>compile</goal>
              <goal>testCompile</goal>
            </goals>
          </execution>
        </executions>
      </plugin>

      <!-- Plugin used to package the project -->
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-assembly-plugin</artifactId>
        <version>3.0.0</version>
        <executions>
          <execution>
            <id>make-assembly</id>
            <phase>package</phase>
            <goals>
              <goal>single</goal>
            </goals>
          </execution>
        </executions>
      </plugin>
    </plugins>
  </pluginManagement>
</build> |

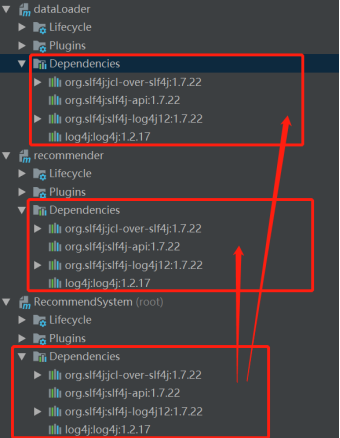

Once the POM has been reloaded, the Maven panel shows that the sub-projects have picked up these dependencies as well:

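Scala is only declared here (under dependencyManagement), so it is not added to any sub-project automatically. A sub-project that needs it has to declare the dependency in its own POM.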

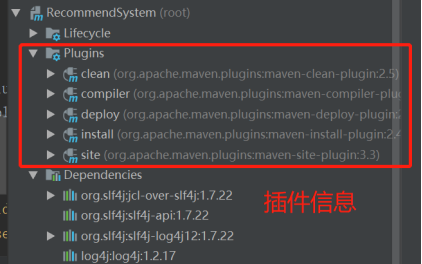

Plugins: plugin information
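Because scala-maven-plugin and maven-assembly-plugin sit under pluginManagement in the parent POM, they stay inactive until a sub-project names them in its own build section. The fragment below is a minimal sketch of what that opt-in could look like in a child pom.xml. The versions and executions are inherited from the parent; the jar-with-dependencies descriptor is an assumption added for illustration, since the assembly plugin's single goal typically needs a descriptor and the parent configuration does not supply one.

```xml
<!-- Hypothetical <build> section of a sub-project's pom.xml -->
<build>
  <plugins>
    <!-- Activates the Scala compiler plugin; version and executions
         come from the parent's pluginManagement -->
    <plugin>
      <groupId>net.alchim31.maven</groupId>
      <artifactId>scala-maven-plugin</artifactId>
    </plugin>
    <!-- Activates the packaging plugin declared in the parent -->
    <plugin>
      <groupId>org.apache.maven.plugins</groupId>
      <artifactId>maven-assembly-plugin</artifactId>
      <configuration>
        <!-- Assumption: bundle the project and its dependencies into one jar -->
        <descriptorRefs>
          <descriptorRef>jar-with-dependencies</descriptorRef>
        </descriptorRefs>
      </configuration>
    </plugin>
  </plugins>
</build>
```

A sub-project that contains only Java code could leave out the scala-maven-plugin entry and still inherit the compiler settings from the parent's plugins section.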

2) Add dependencies to sub-project 2

|

<properties>
  <mongodb-spark.version>2.0.0</mongodb-spark.version>
  <casbah.version>3.1.1</casbah.version>
  <elasticsearch-spark.version>5.6.2</elasticsearch-spark.version>
  <elasticsearch.version>5.6.2</elasticsearch.version>
</properties>

<dependencies>
  <!-- Spark dependencies; no version is given because it is already declared in the parent project -->
  <dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-core_2.11</artifactId>
  </dependency>
  <dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-sql_2.11</artifactId>
  </dependency>
  <!-- Scala; no version is given because it is already declared in the parent project -->
  <dependency>
    <groupId>org.scala-lang</groupId>
    <artifactId>scala-library</artifactId>
  </dependency>

  <!-- MongoDB drivers -->
  <!-- For connecting to MongoDB from code -->
  <dependency>
    <groupId>org.mongodb</groupId>
    <artifactId>casbah-core_2.11</artifactId>
    <version>${casbah.version}</version>
  </dependency>
  <!-- Connector between Spark and MongoDB -->
  <dependency>
    <groupId>org.mongodb.spark</groupId>
    <artifactId>mongo-spark-connector_2.11</artifactId>
    <version>${mongodb-spark.version}</version>
  </dependency>

  <!-- ElasticSearch drivers -->
  <!-- For connecting to ElasticSearch from code -->
  <dependency>
    <groupId>org.elasticsearch.client</groupId>
    <artifactId>transport</artifactId>
    <version>${elasticsearch.version}</version>
  </dependency>
  <!-- Connector between Spark and ElasticSearch -->
  <dependency>
    <groupId>org.elasticsearch</groupId>
    <artifactId>elasticsearch-spark-20_2.11</artifactId>
    <version>${elasticsearch-spark.version}</version>
    <!-- Exclude this transitive package from the dependency path -->
    <exclusions>
      <exclusion>
        <groupId>org.apache.hive</groupId>
        <artifactId>hive-service</artifactId>
      </exclusion>
    </exclusions>
  </dependency>
</dependencies> |

3) Add dependencies to sub-project 1

|

<dependencyManagement>
  <dependencies>
    <!-- Spark-related jars -->
    <!-- Note on the commented-out provided scope below, which applies to every Spark artifact: when it is enabled, the jar is available only at compile (and test) time; Maven does not put it on the runtime classpath or package it into the final release -->
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-core_2.11</artifactId>
      <version>2.1.1</version>
      <!--<scope>provided</scope>-->
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-sql_2.11</artifactId>
      <version>2.1.1</version>
      <!--<scope>provided</scope>-->
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-streaming_2.11</artifactId>
      <version>2.1.1</version>
      <!--<scope>provided</scope>-->
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-mllib_2.11</artifactId>
      <version>2.1.1</version>
      <!--<scope>provided</scope>-->
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-graphx_2.11</artifactId>
      <version>2.1.1</version>
      <!--<scope>provided</scope>-->
    </dependency>
  </dependencies>
</dependencyManagement> |
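The commented-out scope matters mainly at packaging time. As a sketch, assuming the jobs are submitted to a cluster that already ships the Spark jars (an assumption; the original does not say how the jobs are deployed), one managed entry with the scope enabled might look like this:

```xml
<dependency>
  <groupId>org.apache.spark</groupId>
  <artifactId>spark-core_2.11</artifactId>
  <version>2.1.1</version>
  <!-- Assumption: Spark is supplied by the cluster at runtime,
       so it is kept out of the packaged jar -->
  <scope>provided</scope>
</dependency>
```

With the scope enabled, launching a main class directly from the IDE can fail with a missing-class error unless the run configuration is told to include provided dependencies, which is one plausible reason for leaving the line commented out during local development.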

