Flink (5): Setting Up and Testing an IDEA Development Environment for Flink (2) [Developing a Real-Time Program in IDEA: a Stream-Processing Example, WordcountStreaming]

Posted on 2020-08-30 13:50 by MissRong

Developing a real-time program in IDEA: a stream-processing example, WordcountStreaming

(1) Scala code

import org.apache.flink.api.java.utils.ParameterTool
import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment
import org.apache.flink.streaming.api.windowing.time.Time

object SocketWindowWordCountScala {

  def main(args: Array[String]): Unit = {
    // port is the port to connect to
    val port: Int = try {
      ParameterTool.fromArgs(args).getInt("port")
    } catch {
      case e: Exception => {
        System.err.println("No port specified. Please run 'SocketWindowWordCount --port <port>'")
        return
      }
    }

    // Get the execution environment
    val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment

    // Connect to this socket to read the input data
    val text = env.socketTextStream("node21", port, '\n')

    // This implicit-conversion import is required; without it the flatMap call fails to compile
    import org.apache.flink.api.scala._

    // Parse the data, group it, window it, and aggregate with SUM
    val windowCounts = text
      .flatMap { w => w.split("\\s") }
      .map { w => WordWithCount(w, 1) }
      .keyBy("word")
      .timeWindow(Time.seconds(5), Time.seconds(1))
      .sum("count")

    // Print the result with a parallelism of 1
    windowCounts.print().setParallelism(1)

    env.execute("Socket Window WordCount")
  }

  // Data type holding a word and its number of occurrences
  // (declared at object level, outside main)
  case class WordWithCount(word: String, count: Long)
}

---- My own attempt ----

Scala code:

package WordCount

import org.apache.flink.streaming.api.scala._
import org.apache.flink.streaming.api.windowing.time.Time

/**
  * @Author : ASUS and xinrong
  * @Version : 2020/8/29
  *
  * Stream processing: WordCountStreaming
  */
object WordCountStreaming2 {

  def main(args: Array[String]): Unit = {
    // 1. Execution environment
    val environment = StreamExecutionEnvironment.getExecutionEnvironment

    // 2. Connect to the socket
    val text = environment.socketTextStream("192.168.212.111", 9000, '\n')

    // 3. Split into words, key by word, window, and sum the counts
    val windowCounts = text
      .flatMap(w => w.split(" "))
      .map(w => WordWithCounts(w, 1L)) // custom class
      .keyBy("word")
      .timeWindow(Time.seconds(5), Time.seconds(1))
      .sum("count")

    // Print
    windowCounts.print()

    // Execute
    environment.execute("Scala Window")
  }

  case class WordWithCounts(word: String, count: Long)
}

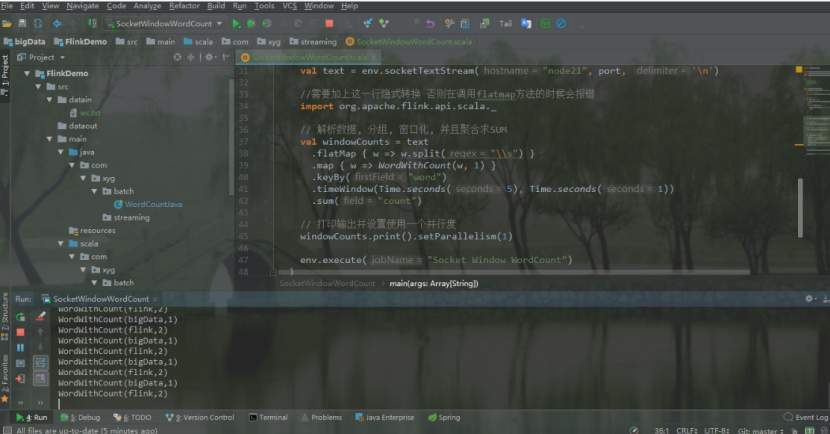

Test:

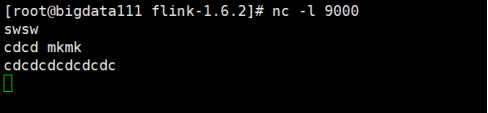

First, start a local listener with the nc command:

[root@bigdata111 flink-1.6.2]# nc -l 9000

Start the program in IDEA.

Input 1:

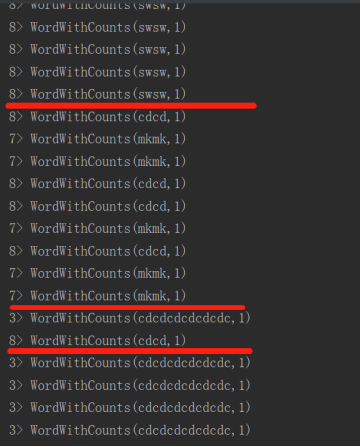

Observation 1:

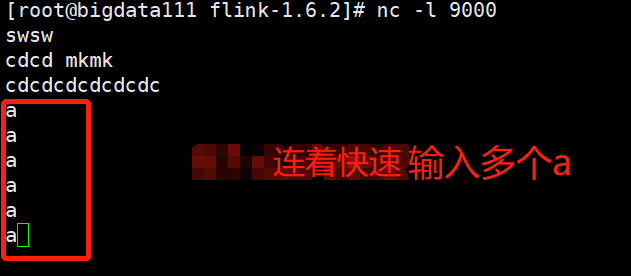

Next, quickly type six a's in a row:

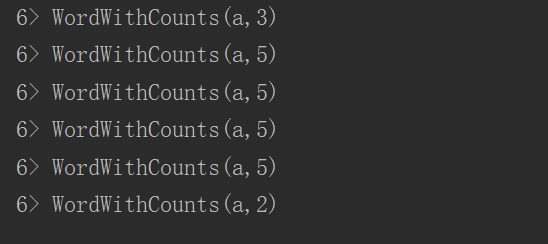

Check the result in IDEA:
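The same count can appear in several consecutive outputs because `timeWindow(Time.seconds(5), Time.seconds(1))` defines a 5-second window that slides every second, so one record belongs to five overlapping windows. The following is a minimal plain-Java sketch of that assignment, with no Flink dependency. The class `SlidingWindowSketch` and its method names are hypothetical and only illustrate the idea; they are not Flink's API, and the sketch assumes non-negative timestamps and no window offset.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration class; not part of Flink.
class SlidingWindowSketch {

    /** Start of the most recent slide-aligned window that contains the timestamp. */
    static long lastWindowStart(long timestampMs, long slideMs) {
        return timestampMs - (timestampMs % slideMs);
    }

    /** Returns the [start, end) bounds of every sliding window containing the timestamp. */
    static List<long[]> assignWindows(long timestampMs, long sizeMs, long slideMs) {
        List<long[]> windows = new ArrayList<>();
        // Walk backwards one slide at a time while the window still covers the timestamp.
        for (long start = lastWindowStart(timestampMs, slideMs);
             start > timestampMs - sizeMs;
             start -= slideMs) {
            windows.add(new long[] {start, start + sizeMs});
        }
        return windows;
    }

    public static void main(String[] args) {
        // A record arriving at t = 12.3 s, with a 5 s window sliding every 1 s.
        for (long[] w : assignWindows(12_300L, 5_000L, 1_000L)) {
            System.out.println("[" + w[0] + ", " + w[1] + ")");
        }
    }
}
```

Under this model, if all six a's arrive within the same second, a count of 6 would be expected in up to five consecutive outputs before the records leave the last window that covers them.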

(2)Java代码

|

import org.apache.flink.api.common.functions.FlatMapFunction; import org.apache.flink.api.java.utils.ParameterTool; import org.apache.flink.streaming.api.datastream.DataStream; import org.apache.flink.streaming.api.datastream.DataStreamSource; import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment; import org.apache.flink.streaming.api.windowing.time.Time; import org.apache.flink.util.Collector;

public class WordCount { //先在虚拟机上打开你的端口号 nc -l 9000 public static void main(String[] args) throws Exception { //定义socket的端口号 int port; try{ ParameterTool parameterTool = ParameterTool.fromArgs(args); port = parameterTool.getInt("port"); }catch (Exception e){ System.err.println("没有指定port参数,使用默认值9000"); port = 9000; }

//获取运行环境 StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

//连接socket获取输入的数据 DataStreamSource<String> text = env.socketTextStream("192.168.1.52", port, "\n");

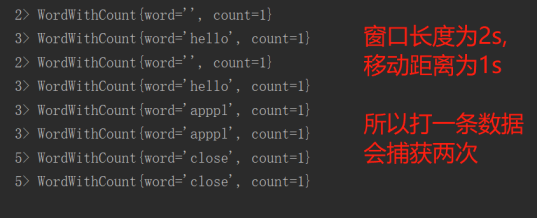

//计算数据 DataStream<WordWithCount> windowCount = text.flatMap(new FlatMapFunction<String, WordWithCount>() { public void flatMap(String value, Collector<WordWithCount> out) throws Exception { String[] splits = value.split("\\s"); for (String word:splits) { out.collect(new WordWithCount(word,1L)); } } })//打平操作,把每行的单词转为<word,count>类型的数据 .keyBy("word")//针对相同的word数据进行分组 .timeWindow(Time.seconds(2),Time.seconds(1))//指定计算数据的窗口大小和滑动窗口大小 .sum("count");

//把数据打印到控制台 windowCount.print() .setParallelism(1);//使用一个并行度 //注意:因为flink是懒加载的,所以必须调用execute方法,上面的代码才会执行 env.execute("streaming word count");

}

/** * 主要为了存储单词以及单词出现的次数 */ public static class WordWithCount{ public String word; public long count; public WordWithCount(){} public WordWithCount(String word, long count) { this.word = word; this.count = count; }

@Override public String toString() { return "WordWithCount{" + "word='" + word + '\'' + ", count=" + count + '}'; } } } |

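Running the test

First, start a local listener with the nc command:

[itstar@node21 ~]$ nc -l 9000

If starting the listener fails with "-bash: nc: command not found", install nc first. The online install command is: yum -y install nc

(Use the netstat command to check port 9000: netstat -anlp | grep 9000)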
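The same reachability check can be made from the development machine, which also confirms that the host name resolves and no firewall blocks the port. This is a minimal sketch, assuming a hypothetical helper class `PortCheck`; the host and port in `main` are the ones used in this post, and the 1-second timeout is an arbitrary choice.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper; not part of Flink or nc.
class PortCheck {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMs. */
    static boolean isListening(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // Connection refused, timeout, or unknown host all count as "not listening".
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("node21:9000 listening? " + isListening("node21", 9000, 1000));
    }
}
```

One caveat: a plain `nc -l` typically serves a single connection and exits when that connection closes, so probing it this way may end the listener. Restart nc before launching the Flink job if that happens.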

Then run the official Flink example program in IDEA.

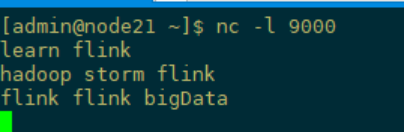

Type the input on node21.

Cluster test

Here the official example is tested on a single machine:

[itstar@node21 flink-1.6.1]$ pwd
/opt/flink-1.6.1
[itstar@node21 flink-1.6.1]$ ./bin/start-cluster.sh
Starting cluster.
Starting standalonesession daemon on host node21.
Starting taskexecutor daemon on host node21.
[itstar@node21 flink-1.6.1]$ jps
StandaloneSessionClusterEntrypoint
TaskManagerRunner
Jps
[itstar@node21 flink-1.6.1]$ ./bin/flink run examples/streaming/SocketWindowWordCount.jar --port 9000

Words are counted in 5-second time windows (processing time, tumbling windows) and printed to stdout. Monitor the TaskManager's output file and type some text into nc (the input is sent to Flink line by line, each time Enter is pressed):

---- My own attempt ----

Java code:

package WordCount;

import org.apache.flink.api.common.functions.FlatMapFunction;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.windowing.time.Time;
import org.apache.flink.util.Collector;

/**
 * @Author : ASUS and xinrong
 * @Version : 2020/8/29 & 1.0
 *
 * Stream processing: WordCountStreaming
 */
public class WordCountStreaming {

    public static void main(String[] args) throws Exception {
        // 1. Port number to connect to
        int port = 9000;

        // 2. Execution environment
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

        // 3. Create the source
        DataStreamSource<String> text = env.socketTextStream("192.168.212.111", port, '\n');

        // 4. Transform the data into the custom result class
        DataStream<WordWithCount> windowCount = text.flatMap(new FlatMapFunction<String, WordWithCount>() {
            @Override
            public void flatMap(String line, Collector<WordWithCount> out) throws Exception {
                for (String word : line.split(" ")) {
                    out.collect(new WordWithCount(word, 1L));
                }
            }
        }).keyBy("word")
                .timeWindow(Time.seconds(2), Time.seconds(1)) // time window (window size, slide interval in seconds)
                .sum("count");

        windowCount.print();

        env.execute("Streaming word Count"); // execute (with a job name)
    }

    /**
     * Custom result class
     */
    public static class WordWithCount {
        public String word;
        public Long count;

        public WordWithCount() {
        }

        public WordWithCount(String word, Long count) {
            this.word = word;
            this.count = count;
        }

        @Override
        public String toString() {
            return "WordWithCount{" +
                    "word='" + word + '\'' +
                    ", count=" + count +
                    '}';
        }
    }
}

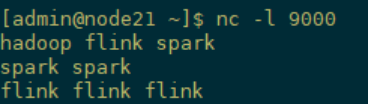

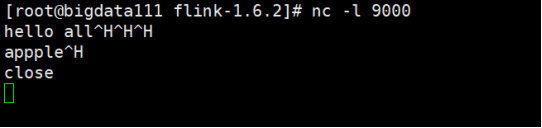
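To make the flatMap / keyBy / sum chain concrete, here is a plain-Java sketch of what it computes for the lines that fall into one window: split each line on single spaces, emit (word, 1), and sum per word. It uses no Flink classes. `WindowWordCountSketch` and `countWords` are hypothetical names, and a `Map` stands in for Flink's keyed, windowed state.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical illustration class; not part of Flink.
class WindowWordCountSketch {

    /** Counts the words of all lines that belong to a single window. */
    static Map<String, Long> countWords(List<String> linesInWindow) {
        Map<String, Long> counts = new LinkedHashMap<>();
        for (String line : linesInWindow) {
            for (String word : line.split(" ")) {      // same split as in the job
                counts.merge(word, 1L, Long::sum);     // plays the role of keyBy("word") + sum("count")
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        // Two lines of three a's each landing in the same window.
        System.out.println(countWords(Arrays.asList("a a a", "a a a"))); // prints {a=6}
    }
}
```

Note that with `split(" ")` two consecutive spaces produce an empty-string word, which is then counted like any other key; splitting on `"\\s+"` would avoid that.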

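Test:

First, start a local listener with the nc command:

[root@bigdata111 flink-1.6.2]# nc -l 9000

If starting the listener fails with "-bash: nc: command not found", install nc first.

Connect the virtual machine to the network, then run yum -y install nc

nc is a tool for opening a listening port.

Then run nc -l 9000 again.

Then run the official Flink example program in IDEA.

Type the input on bigdata111:

After the program runs in IDEA, you can see: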
