Spark 2.x管理与开发-Spark SQL-【Spark SQL案例】(二)多表查询*

Posted on 2020-08-01 12:22 MissRong 阅读(544) 评论(0) 收藏 举报Spark 2.x管理与开发-Spark SQL-【Spark SQL案例】(二)多表查询*

1.将三张表合并实现查询

Scala代码:

package sqlExamples

import org.apache.spark.sql.SparkSession

import org.apache.log4j.Logger

import org.apache.log4j.Level

import java.text.SimpleDateFormat

//注意:在Spark和Hive里面读取进来的原数据尽量用String,这样可以避免很多不必要的问题

//如果后期需要进行运算,再将其进行类型的转换即可

/**

* sno:学号

* sname:姓名

* ssex:性别

* sbirthday:生日

* sclass:班级

*/

case class Students(sno: String, sname: String, ssex: String, sbirthday: String, sclass: String)

/**

* cno:课程号

* cname:课程名

* tno:教工编号

*/

case class Course(cno: String, cname: String, tno: String)

/**

* sno:学号

* cno:课程号

* degree:成绩

*/

case class Score(sno: String, cno: String, degree: String)

/**

* tprof:职称

* tdepart:教工所在部门

*/

case class Teacher(tno: String, tname: String, tsex: String, tbirthday: String, tprof: String, tdepart: String)

object StudentsAndTeachers {

def main(args: Array[String]): Unit = {

//下面的两行代码定义日志级别,可以减少打印出来的日志

Logger.getLogger("org.apache.spark").setLevel(Level.ERROR)

Logger.getLogger("org.eclipse.jetty.server").setLevel(Level.OFF)

//Spark环境

val spark = SparkSession.builder().master("local").appName("SparkSQLExample").getOrCreate()

import spark.sqlContext.implicits._

//读取数据

spark.sparkContext.textFile("D:\\tmp_files\\spark_sql_test_data\\Student.csv")

.map(_.split(",")) //注意:csv格式的文件最好用逗号进行切割

.map(x => Students(x(0), x(1), x(2), x(3), x(4))) //采用case class 创建DataFrame 并关联表结构

.toDF //将RDD转换成DataFrame

.createOrReplaceTempView("student") //创建视图

spark.sparkContext.textFile("D:\\tmp_files\\spark_sql_test_data\\Course.csv")

.map(_.split(","))

.map(x => Course(x(0), x(1), x(2)))

.toDF

.createOrReplaceTempView("course")

spark.sparkContext.textFile("D:\\tmp_files\\spark_sql_test_data\\Score.csv")

.map(_.split(","))

.map(x => Score(x(0), x(1), x(2)))

.toDF

.createOrReplaceTempView("Score")

spark.sparkContext.textFile("D:\\tmp_files\\spark_sql_test_data\\Teacher.csv")

.map(_.split(","))

.map(x => Teacher(x(0), x(1), x(2), x(3), x(4), x(5)))

.toDF

.createOrReplaceTempView("Teacher")

//*********************多表查询***********************

//提示:1) 多表查询可后写select部分,例如下面的:一、

// 2) 需要Join的可考虑先Join,之后再考虑其它复杂的查询条件,例如下面的:三、

//一、将三张表合并实现查询-join on

//查询所有学生的sname,cname degree

spark.sql("select s.sname,c.cname,t.degree from score t " +

"Join student s on t.sno=s.sno " +

"join course c on c.cno=t.cno").show(false)

//关闭Spark

spark.close()

}

}

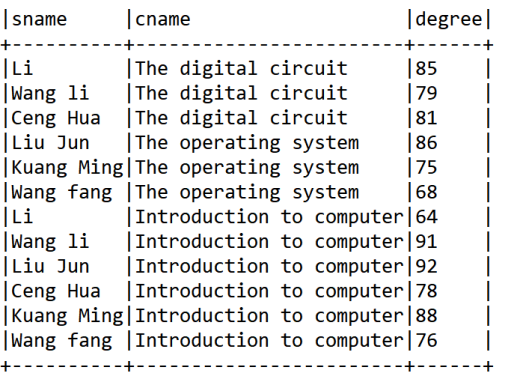

结果:

2.多个子查询

Scala代码:

//二、多个子查询

//1.查询Score中选修多门选修课的学生中,分数为非最高分成绩的记录--max()

spark.sql("select * from score where sno in " +

"(select sno from score t group by t.sno having count(1)>1 )" +

"and degree!=(select max(degree) from score)").show()

//2.查询所有没有讲过课的老师的tname和tdepart --not in()、in()

spark.sql("select tname,tdepart from Teacher t where t.tno not in(select tno from Course c where c.cno in (select cno from Score))").show()

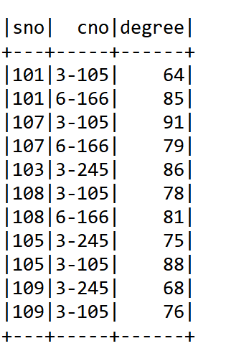

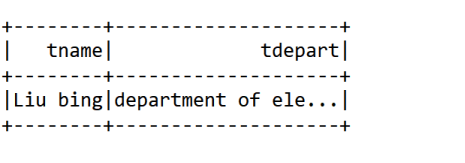

结果:

3.Join自己临时获取的中间表

Scala代码:

//三、join自己临时获取的中间表

//查询选修某课程的同学人数多于5人的教师姓名

spark.sql("select tname from Teacher t " +

"join Course c on c.tno=t.tno " +

"join (select cno from score group by cno having count(1)>5) m on c.cno=m.cno").show()

//注:count(1)换成count(cno)也可以

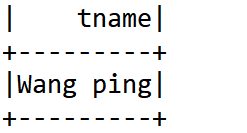

结果:

浙公网安备 33010602011771号

浙公网安备 33010602011771号