YARN-HA Deployment

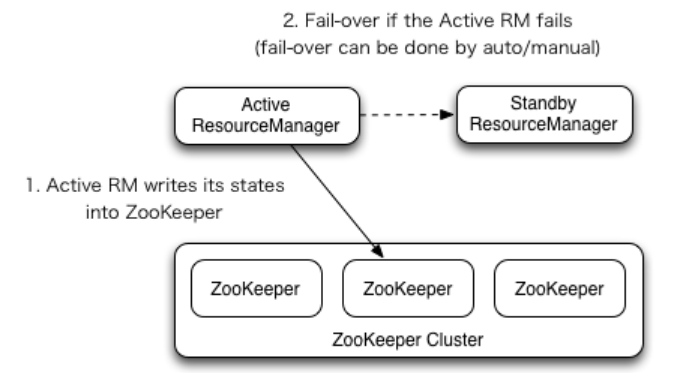

I. How YARN-HA Works

1) Official documentation:

http://hadoop.apache.org/docs/r2.7.2/hadoop-yarn/hadoop-yarn-site/ResourceManagerHA.html

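2) YARN-HA working mechanism

One ResourceManager runs as Active and the other as Standby. The Active ResourceManager stores its state in ZooKeeper, and a ZooKeeper-based elector embedded in the ResourceManagers promotes the Standby when the Active fails.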

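II. Configuring the YARN-HA Cluster

0) Environment preparation

(1) Set the IP address

(2) Set the hostname and the hostname-to-IP mappings

(3) Disable the firewall

(4) Set up passwordless SSH login

(5) Install the JDK, configure environment variables, etc.

(6) Configure the ZooKeeper cluster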
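Item (4) of the checklist above can be spot-checked from one node before continuing. This is a minimal sketch, not part of the original procedure: the helper name `check_ssh` and the `SSH_CMD` override are hypothetical, and the host list you pass in is assumed to match your own cluster.

```shell
# Report which hosts accept key-based (passwordless) SSH.
# SSH_CMD is a hypothetical override so the loop can be exercised without a cluster.
check_ssh() {
  failed=0
  for host in "$@"; do
    # BatchMode=yes makes ssh fail instead of prompting for a password.
    if "${SSH_CMD:-ssh}" -o BatchMode=yes -o ConnectTimeout=5 "$host" true </dev/null >/dev/null 2>&1; then
      echo "$host ok"
    else
      echo "$host FAILED"
      failed=1
    fi
  done
  return "$failed"
}
```

For example, `check_ssh bigdata111 bigdata112` prints one line per host and returns non-zero if any host still asks for a password or is unreachable.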

1) Cluster plan

| bigdata111 | bigdata112 | bigdata113 |
| --- | --- | --- |
| NameNode | NameNode | |
| JournalNode | JournalNode | JournalNode |
| DataNode | DataNode | DataNode |
| ZK | ZK | ZK |
| ResourceManager | ResourceManager | |
| NodeManager | NodeManager | NodeManager |

2)具体配置

(1)yarn-site.xml

|

<configuration> <property> <name>yarn.nodemanager.aux-services</name> <value>mapreduce_shuffle</value> </property>

<!--启用resourcemanager ha--> <property> <name>yarn.resourcemanager.ha.enabled</name> <value>true</value> </property>

<!--声明两台resourcemanager的地址--> <property> <name>yarn.resourcemanager.cluster-id</name> <value>cluster-yarn1</value> </property>

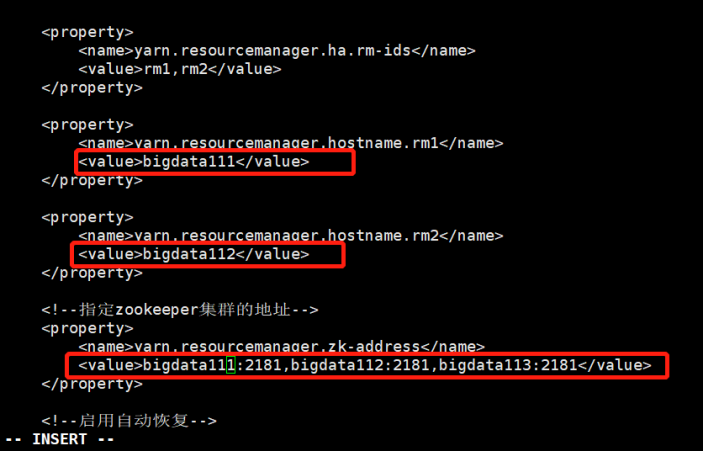

<property> <name>yarn.resourcemanager.ha.rm-ids</name> <value>rm1,rm2</value> </property>

<property> <name>yarn.resourcemanager.hostname.rm1</name> <value>centos1</value> </property>

<property> <name>yarn.resourcemanager.hostname.rm2</name> <value>centos2</value> </property>

<!--指定zookeeper集群的地址--> <property> <name>yarn.resourcemanager.zk-address</name> <value>centos1:2181,centos2:2181,centos3:2181</value> </property>

<!--启用自动恢复--> <property> <name>yarn.resourcemanager.recovery.enabled</name> <value>true</value> </property>

<!--指定resourcemanager的状态信息存储在zookeeper集群--> <property> <name>yarn.resourcemanager.store.class</name> <value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value> </property> </configuration> |

Note: replace the hostnames (centos1, centos2, centos3) with those of your own cluster nodes.
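The hostname replacement can be done with a small filter instead of by hand. This is a sketch under assumptions: the helper name `rename_hosts` is hypothetical, and the mapping from the centos names to the bigdata names is only an illustration, so substitute your own pairs.

```shell
# Rewrite hostnames in a config file read from stdin.
# Each argument is an old=new pair; the mapping itself is an assumption.
rename_hosts() {
  script=""
  for pair in "$@"; do
    old=${pair%%=*}   # text before the first "="
    new=${pair#*=}    # text after the first "="
    script="${script}s/${old}/${new}/g;"
  done
  sed -e "$script"
}
```

A possible invocation is `rename_hosts centos1=bigdata111 centos2=bigdata112 < yarn-site.xml > yarn-site.xml.new`. The substitution is a plain substring replacement, so it is only safe when no hostname is a prefix of another (centos1 versus centos10, for instance).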

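(2) Configure mapred-site.xml (skipped here, because hadoop-2.8.4 was copied over from the earlier fully distributed setup; only the existing file is copied into the HA directory)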

[root@bigdata111 module]# ll

total 12

drwxr-xr-x 3 root root 25 Mar 22 21:41 HA

drwxr-xr-x. 11 1001 1001 4096 Feb 22 14:08 hadoop-2.8.4

drwxr-xr-x. 8 10 143 4096 Jul 22 2017 jdk1.8.0_144

drwxr-xr-x 11 1001 1001 4096 Mar 19 19:29 zookeeper-3.4.10

[root@bigdata111 module]# cp hadoop-2.8.4/etc/hadoop/mapred-site.xml /opt/module/HA/hadoop-2.8.4/etc/hadoop/

Then sync the updated configuration to the other nodes.
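One way to do the sync is a loop over the other hosts with `scp`. This is a sketch, assuming root login (as in the prompts above) and the same install path on every node. The helper name `sync_conf` and the `DRY_RUN` switch are hypothetical.

```shell
# Copy the Hadoop config directory to each listed host.
# With DRY_RUN set, the scp commands are printed instead of executed.
sync_conf() {
  conf_dir=$1
  shift
  for host in "$@"; do
    # ${conf_dir%/*} is the parent directory, so the copy lands at the same path remotely.
    ${DRY_RUN:+echo} scp -r "$conf_dir" "root@${host}:${conf_dir%/*}/"
  done
}
```

Running it first with `DRY_RUN=1` shows the exact commands, for example for `sync_conf /opt/module/HA/hadoop-2.8.4/etc/hadoop bigdata112`, before anything is overwritten on the remote side.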

3) Start HDFS

(1) On each JournalNode host, start the journalnode service:

sbin/hadoop-daemon.sh start journalnode

(2) On [nn1], format the NameNode and start it:

bin/hdfs namenode -format

sbin/hadoop-daemon.sh start namenode

(3) On [nn2], sync the metadata from nn1:

bin/hdfs namenode -bootstrapStandby

(4) Start [nn2]:

sbin/hadoop-daemon.sh start namenode

(5) Start all DataNodes:

sbin/hadoop-daemons.sh start datanode

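(6) Switch [nn1] to Active. This manual switch applies when automatic failover is not enabled; with automatic failover on, the DFSZKFailoverController processes elect the Active NameNode and the command is refused unless --forcemanual is given: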

bin/hdfs haadmin -transitionToActive nn1
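After the sequence above, it helps to confirm which NameNode ended up Active. The sketch below wraps `hdfs haadmin -getServiceState`. The helper name `nn_states` and the `HDFS_CMD` override are hypothetical, and it assumes the function is called from the Hadoop home directory with the NameNode IDs nn1 and nn2 used in the steps.

```shell
# Print the HA state of each NameNode ID, one "id=state" pair per line.
# HDFS_CMD is a hypothetical override; by default bin/hdfs is used.
nn_states() {
  for id in "$@"; do
    state=$("${HDFS_CMD:-bin/hdfs}" haadmin -getServiceState "$id" 2>/dev/null) || state=""
    # An empty result means the NameNode could not be queried.
    echo "${id}=${state:-unknown}"
  done
}
```

Calling `nn_states nn1 nn2` should show exactly one ID as active once the cluster has settled.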

4) Start YARN

(1) On bigdata111, run:

sbin/start-yarn.sh

[root@bigdata111 hadoop-2.8.4]# jps

10465 Jps

3942 QuorumPeerMain

9737 DataNode

10105 DFSZKFailoverController

9931 JournalNode

10429 NodeManager

10335 ResourceManager

[root@bigdata112 hadoop-2.8.4]# jps

8496 DataNode

8593 JournalNode

3444 QuorumPeerMain

8428 NameNode

8908 NodeManager

9020 Jps

8703 DFSZKFailoverController

(2) On bigdata112, run:

sbin/yarn-daemon.sh start resourcemanager

[root@bigdata112 hadoop-2.8.4]# jps

8496 DataNode

8593 JournalNode

3444 QuorumPeerMain

9048 ResourceManager

9080 Jps

8428 NameNode

8908 NodeManager

8703 DFSZKFailoverController

(3) Check the service state (note: automatic YARN failover takes roughly 5 to 10 seconds to complete):

bin/yarn rmadmin -getServiceState rm1

[root@bigdata111 hadoop-2.8.4]# bin/yarn rmadmin -getServiceState rm1

active

[root@bigdata111 hadoop-2.8.4]# bin/yarn rmadmin -getServiceState rm2

standby

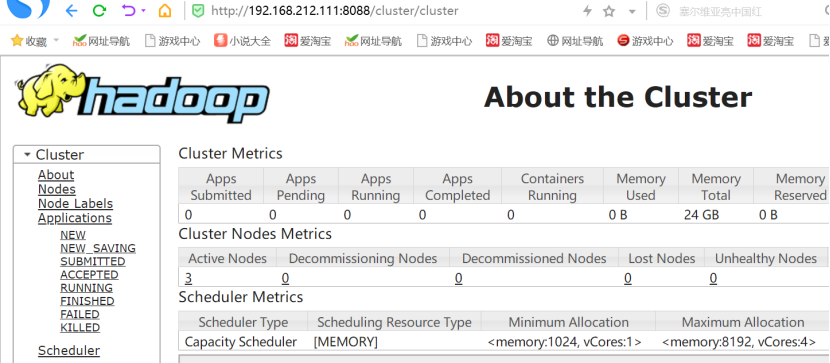

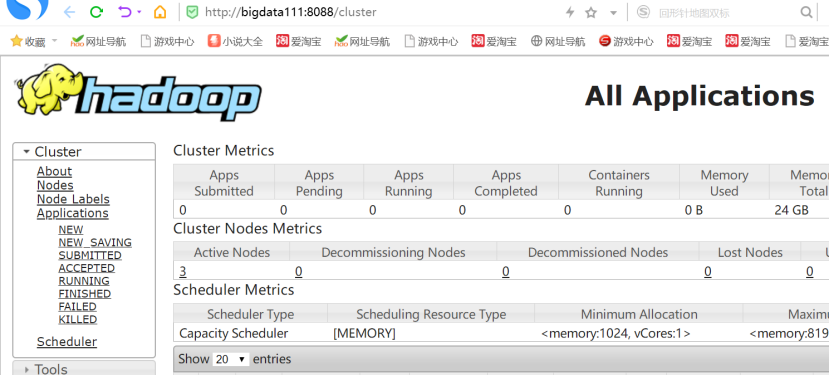

192.168.212.111:8088

192.168.212.112:8088

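Both addresses show the same page, because the Standby ResourceManager redirects web requests to the Active one.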
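Because the switch takes a few seconds, the state check in step (3) can be wrapped in a polling loop rather than rerun by hand. This is a minimal sketch: the helper name `wait_for_active_rm`, the `YARN_CMD` override and the `RM_WAIT_SECS` timeout (default 30) are all hypothetical, and the IDs rm1 and rm2 come from the yarn-site.xml above.

```shell
# Poll the given ResourceManager IDs until one reports "active".
# Prints the active ID, or returns 1 once RM_WAIT_SECS seconds have passed.
wait_for_active_rm() {
  timeout=${RM_WAIT_SECS:-30}
  elapsed=0
  while :; do
    for id in "$@"; do
      state=$("${YARN_CMD:-bin/yarn}" rmadmin -getServiceState "$id" 2>/dev/null) || state=""
      if [ "$state" = "active" ]; then
        echo "$id"
        return 0
      fi
    done
    [ "$elapsed" -ge "$timeout" ] && return 1
    sleep 1
    elapsed=$((elapsed + 1))
  done
}
```

From the Hadoop home directory, `wait_for_active_rm rm1 rm2` prints the ID of whichever ResourceManager currently holds the Active role.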

5) Verification

(1) Kill the ResourceManager process on the active machine, then check whether the Active role has moved to the other ResourceManager:

[root@bigdata112 hadoop-2.8.4]# bin/yarn rmadmin -getServiceState rm2

active
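The kill step in the verification above needs the ResourceManager's process ID, which can be picked out of the `jps` output shown earlier. This is a small sketch, and the helper name `rm_pid` is hypothetical.

```shell
# Extract the ResourceManager PID from jps output read on stdin.
rm_pid() {
  awk '$2 == "ResourceManager" { print $1 }'
}

# Intended use on the active machine (left commented out so that
# sourcing this file never kills anything):
#   kill -9 "$(jps | rm_pid)"
#   bin/yarn rmadmin -getServiceState rm2
```

Once the other ResourceManager reports active, the killed one can be brought back as a Standby with `sbin/yarn-daemon.sh start resourcemanager`, the same command used in step 4).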

