Compiling Doris from Source and Integrating a Vertica Data Source Catalog

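It is recommended to deploy the binary package from doris.apache.org, compile only the files that need to change, and replace them in that deployment.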

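Preparation:

1. A Linux build environment (the more CPU cores and memory, the better) with Docker installed. The build runs inside Docker, and the Linux version of IDEA can run on the same machine.

IDEA official site

Download the Community Edition, copy it to any directory in the Linux virtual machine, and launch it.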

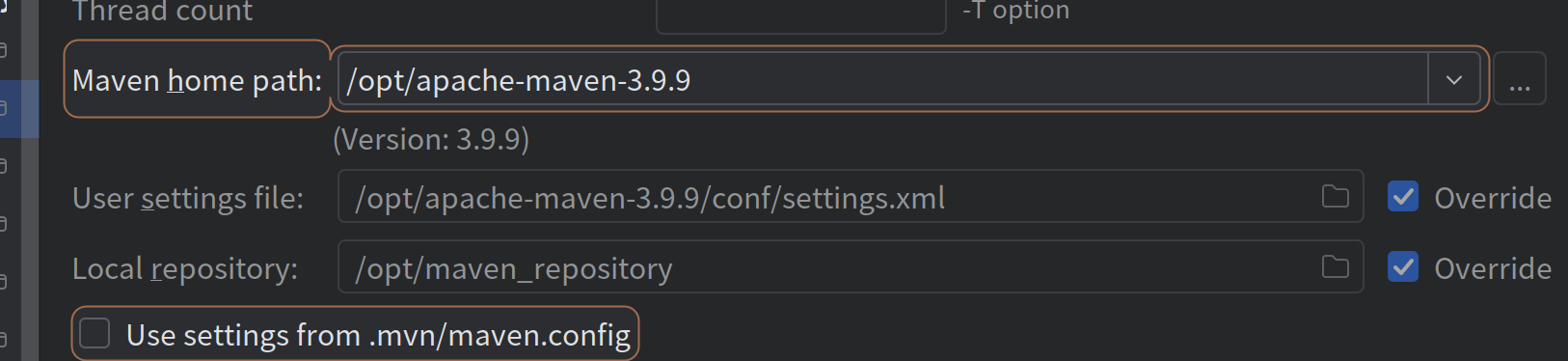

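Maven must also be configured on the Linux machine.

2. Documentation

To build Doris, refer to GitHub and the official documentation.

Doris compilation

Official build documentation 1:

github-doris

Official build documentation 2: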

compilation-with-docker

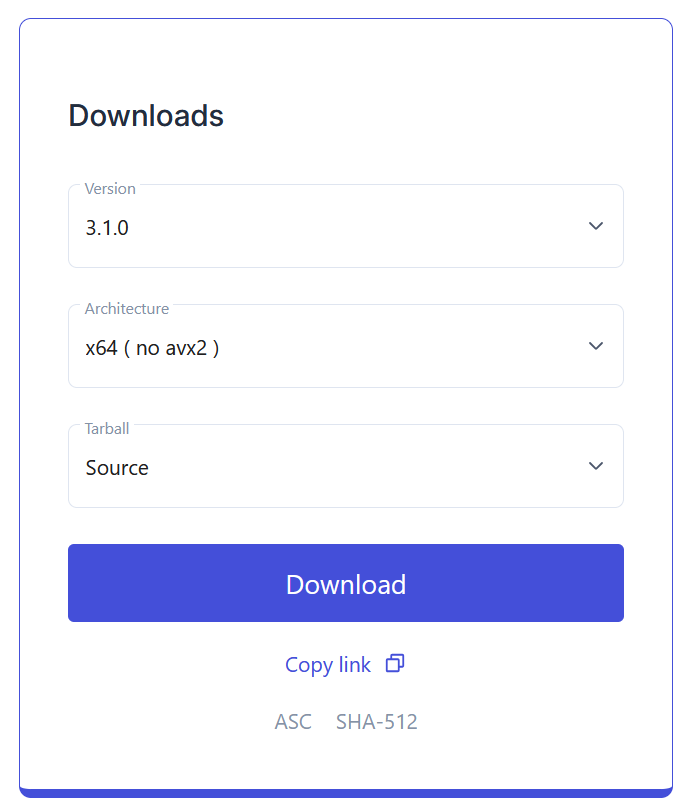

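3. Download the source code:

Notes:

Download address:

https://doris.apache.org/download (under Doris All Releases, choose the version you want, paying attention to the AVX2 variant)

Then upload the downloaded archive to the Linux server.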

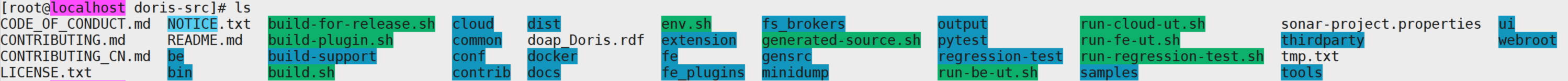

A normal Doris source directory:

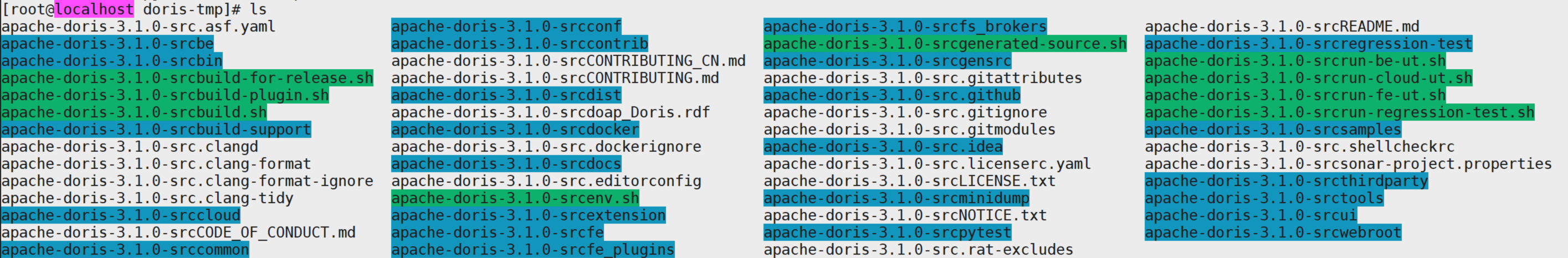

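The source actually obtained from the Doris website looks like this:

The archived source names carry an extra prefix, apache-doris-3.1.0-src, which appears only at the root directory level.

Run the Linux command rename 'apache-doris-3.1.0-src' '' ./* to strip the prefix from all source files.

Note: the branches on GitHub also contain source code, but they are moving branches, not release versions!

For released versions, go to https://github.com/apache/doris/releases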

# For reference, how to clone a branch; not needed in this article

git clone -b branch-2.0 https://github.com/apache/doris.git

Also check whether the hardware of your deployment server supports AVX2, and choose the matching version.
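One way to check for AVX2 on the target server is to look at the CPU flags the Linux kernel exposes. The sketch below assumes an x86 Linux host where `/proc/cpuinfo` contains `flags` lines; the class and method names are made up for illustration.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.Arrays;

class Avx2Check {
    /** Returns true if any "flags" line of the cpuinfo text lists the flag as a whole word. */
    static boolean hasCpuFlag(String cpuInfoText, String flag) {
        for (String line : cpuInfoText.split("\n")) {
            if (!line.startsWith("flags")) {
                continue;
            }
            int colon = line.indexOf(':');
            if (colon < 0) {
                continue;
            }
            // Flags are separated by whitespace after the colon.
            String[] flags = line.substring(colon + 1).trim().split("\\s+");
            if (Arrays.asList(flags).contains(flag)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) throws IOException {
        // Assumption: running on Linux, where /proc/cpuinfo is available.
        byte[] raw = Files.readAllBytes(Paths.get("/proc/cpuinfo"));
        String text = new String(raw, StandardCharsets.UTF_8);
        System.out.println("avx2 supported: " + hasCpuFlag(text, "avx2"));
    }
}
```

Matching whole words matters here, because `avx` is a prefix of `avx2` and a plain substring search would confuse the two.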

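4. Pull the Docker image

Choose the Docker build-environment image that matches the source version. Note: AVX2.

5. Start Docker

# -v local directory:directory inside the container

# Both the source tree and the Maven repository must be mapped into the container

# Only the volume mappings need to be adapted to your own paths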

docker run -it --network=host --name dorisbuild -v /opt/maven_repository:/root/.m2/repository -v /opt/pjs/doris-3.1.0-src:/root/doris-3.1.0-src/ apache/doris:build-env-for-3.1-no-avx2

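# Start the container

docker start dorisbuild

# Enter the container's bash

docker exec -it dorisbuild /bin/bash

# Build the unmodified code first; this trial run is a must:

# -j sets the parallelism, which matters when building the BE

# Build the backend first; the frontend can be built at any time

# Note: source downloaded from the Doris website cannot use the parameter `USE_AVX2=0`; only source pulled from GitHub can use `USE_AVX2=0`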

DISABLE_JAVA_CHECK_STYLE=ON ./build.sh --fe --be -j 6

DISABLE_JAVA_CHECK_STYLE=ON ./build.sh --be -j 6

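# Building the BE is very slow. If it fails, retry a few times; after one successful build, later C++ builds are incremental and much faster.

Remember to make a git commit before modifying the source.

The backend build may fail:

The BE C++ build fails with: error: only virtual member functions can be marked 'override'. See the related issues article. Choosing version 3.0.8 avoids the problem; with 3.1.0 you have to resolve the compile error yourself.

8. Modify the source code

9. Replace files

The classes and the files they belong to are as follows: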

# doris/fe/lib/fe-common-1.2-SNAPSHOT.jar

org/apache/doris/thrift/TOdbcTableType

# doris/fe/lib/doris-fe.jar

org/apache/doris/catalog/JdbcTable

org/apache/doris/catalog/OdbcTable

org/apache/doris/datasource/jdbc/source/JdbcScanNode

org/apache/doris/datasource/jdbc/client/JdbcVerticaClient

org/apache/doris/catalog/JdbcResource

org/apache/doris/datasource/jdbc/client/JdbcClient

# doris/be/lib/java_extensions/jdbc-scanner/jdbc-scanner-jar-with-dependencies.jar

org/apache/doris/jdbc/VerticaJdbcExecutor

org/apache/doris/jdbc/JdbcExecutorFactory

# The following command finds which jar a Java class belongs to

find . -name '*.jar' | while read jarfile; do if jar tf "$jarfile" | grep org/apache/doris/datasource/jdbc/client/JdbcClient -q; then echo "$jarfile"; fi; done
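The same lookup can be sketched in Java with the standard `java.util.jar` API, which is handy when the `jar` tool is not on the PATH. The class name `JarClassFinder` is made up for illustration. Unlike the `grep` above, which matches a path prefix, this sketch looks for one exact entry name including the `.class` suffix.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.jar.JarFile;
import java.util.stream.Collectors;
import java.util.stream.Stream;

class JarClassFinder {
    /** Returns every .jar under root that contains exactly the given entry name. */
    static List<Path> findJarsContaining(Path root, String entryName) throws IOException {
        List<Path> jars;
        try (Stream<Path> paths = Files.walk(root)) {
            jars = paths.filter(Files::isRegularFile)
                    .filter(p -> p.getFileName().toString().endsWith(".jar"))
                    .collect(Collectors.toList());
        }
        List<Path> hits = new ArrayList<>();
        for (Path jar : jars) {
            try (JarFile jarFile = new JarFile(jar.toFile())) {
                if (jarFile.getEntry(entryName) != null) {
                    hits.add(jar);
                }
            }
        }
        return hits;
    }

    public static void main(String[] args) throws IOException {
        // Defaults are illustrative: search the current directory for JdbcClient.
        Path root = Paths.get(args.length > 0 ? args[0] : ".");
        String entry = args.length > 1
                ? args[1]
                : "org/apache/doris/datasource/jdbc/client/JdbcClient.class";
        for (Path jar : findJarsContaining(root, entry)) {
            System.out.println(jar);
        }
    }
}
```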

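Notes:

(1) Replace all packages under doris/be/lib/java_extensions, because they contain the shared class JdbcExecutorFactory.

(2) Adding a JDBC connector does not require changes to any C++ files; Doris's C++ code calls the Java API through JNI.

Restart the BE and FE nodes.

10. Test the Vertica catalog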

-- vertica catalog

CREATE CATALOG vertica_ts PROPERTIES(

'type'='jdbc',

'user'='vertica',

'password'='123456',

'jdbc_url'='jdbc:vertica://192.168.100.100/db',

'driver_url'='file:///opt/doris/fe/plugins/jdbc_drivers/vertica-jdbc-12.0.3-0.jar',

'driver_class'='com.vertica.jdbc.Driver'

);
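A missing quote or comma in the PROPERTIES list is an easy mistake to make when the statement is typed by hand. As a rough sketch, the statement can be generated from an ordered map instead. `CatalogDdlSketch` and `createCatalogSql` are hypothetical names, not Doris APIs, and the sketch rejects values containing a single quote rather than guessing at escaping rules.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

class CatalogDdlSketch {
    /** Renders CREATE CATALOG with every property quoted and comma-separated. */
    static String createCatalogSql(String catalogName, Map<String, String> props) {
        String body = props.entrySet().stream()
                .map(e -> "    '" + check(e.getKey()) + "' = '" + check(e.getValue()) + "'")
                .collect(Collectors.joining(",\n"));
        return "CREATE CATALOG " + catalogName + " PROPERTIES (\n" + body + "\n);";
    }

    // Assumption: no escaping is attempted; quotes in keys or values are refused.
    private static String check(String s) {
        if (s.indexOf('\'') >= 0) {
            throw new IllegalArgumentException("single quote not allowed: " + s);
        }
        return s;
    }

    public static void main(String[] args) {
        Map<String, String> props = new LinkedHashMap<>();
        props.put("type", "jdbc");
        props.put("user", "vertica");
        props.put("password", "123456");
        props.put("jdbc_url", "jdbc:vertica://192.168.100.100/db");
        props.put("driver_url", "file:///opt/doris/fe/plugins/jdbc_drivers/vertica-jdbc-12.0.3-0.jar");
        props.put("driver_class", "com.vertica.jdbc.Driver");
        System.out.println(createCatalogSql("vertica_ts", props));
    }
}
```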

Source code changes:

For the exact directory location, refer to JdbcPostgreSQLClient.

JdbcVerticaClient

package org.apache.doris.datasource.jdbc.client;

import com.google.common.collect.Lists;

import org.apache.doris.catalog.ArrayType;

import org.apache.doris.catalog.ScalarType;

import org.apache.doris.catalog.Type;

import org.apache.doris.common.util.Util;

import org.apache.doris.datasource.jdbc.util.JdbcFieldSchema;

import org.apache.logging.log4j.LogManager;

import org.apache.logging.log4j.Logger;

import java.sql.*;

import java.util.Arrays;

import java.util.Iterator;

import java.util.List;

import java.util.Optional;

import java.util.regex.Matcher;

import java.util.regex.Pattern;

public class JdbcVerticaClient extends JdbcClient {

private static final Logger LOG = LogManager.getLogger(JdbcVerticaClient.class);

private static final String[] supportedInnerType =

new String[]{

"Boolean",

"Integer",

"Float",

"Numeric",

"Date", "Time", "Timestamp", "TimestampTz",

"Char", "Varchar", "Long Varchar",

"Varbinary", "Long Varbinary",

"Uuid"

};

protected JdbcVerticaClient(JdbcClientConfig jdbcClientConfig) {

super(jdbcClientConfig);

}

private static boolean supportInnerType(String innerType) {

if (Arrays.asList(supportedInnerType).contains(innerType)) return true;

else if (innerType.startsWith("Array[")) {

return true;

} else if (innerType.startsWith("Interval ")) {

return true;

} else {

return false;

}

}

private Type createArrayType(JdbcFieldSchema fieldSchema) {

// TODO: TBL,DB acquire?

String remoteDbName=null;

String remoteTableName=null;

String innerTypeName = fieldSchema.getDataTypeName().orElse(null);

if (innerTypeName == null || innerTypeName.isEmpty()) {

LOG.warn(String.format("vertica array type not support inner, inner type must in %s", Arrays.toString(supportedInnerType)));

return Type.UNSUPPORTED;

}

boolean isSupported = supportInnerType(innerTypeName);

if (!isSupported) {

LOG.warn(String.format("vertica array type not support inner, inner type must in %s", Arrays.toString(supportedInnerType)));

return Type.UNSUPPORTED;

}

Connection conn = null;

Statement stmt = null;

ResultSet arrElementRs = null;

JdbcFieldSchema innerSchema = null;

try {

conn = getConnection();

stmt = conn.createStatement();

arrElementRs = stmt.executeQuery(String.format("SELECT %s[0] ITEM FROM %s.%s WHERE 1=0", fieldSchema.getColumnName(), remoteDbName, remoteTableName));

innerSchema = new JdbcFieldSchema(arrElementRs.getMetaData(), 1);

} catch (SQLException ex) {

LOG.warn("Failed to get item type for column {}: {}",

fieldSchema.getColumnName(), Util.getRootCauseMessage(ex));

} finally {

close(arrElementRs, null);

if (stmt != null) {

try {

stmt.close();

} catch (SQLException ex) {

LOG.warn("Failed to close statement: {}", Util.getRootCauseMessage(ex));

}

}

if (conn != null) {

try {

conn.close();

} catch (SQLException ex) {

LOG.warn("Failed to close connection: {}", Util.getRootCauseMessage(ex));

}

}

}

if (innerSchema == null) {

// The element-type probe failed, so the element type is unknown.

return Type.UNSUPPORTED;

}

Type arrayInnerType = jdbcTypeToDoris(innerSchema);

return ArrayType.create(arrayInnerType, true);

}

@Override

public List<JdbcFieldSchema> getJdbcColumnsInfo(String remoteDbName, String remoteTableName) {

Connection conn = null;

ResultSet rs = null;

List<JdbcFieldSchema> tableSchema = Lists.newArrayList();

try {

conn = getConnection();

DatabaseMetaData databaseMetaData = conn.getMetaData();

String catalogName = getCatalogName(conn);

rs = getRemoteColumns(databaseMetaData, catalogName, remoteDbName, remoteTableName);

while (rs.next()) {

JdbcFieldSchema fieldSchema = new JdbcFieldSchema(rs, 0);

tableSchema.add(fieldSchema);

}

Iterator<JdbcFieldSchema> it = tableSchema.iterator();

// Schema dump for troubleshooting; logged at debug level rather than error.

LOG.debug("print table schema: {}.{}", remoteDbName, remoteTableName);

while (it.hasNext()) {

LOG.debug("FieldSchema: {}", it.next());

}

} catch (SQLException e) {

throw new JdbcClientException(

"failed to get jdbc columns info for remote table `%s.%s`: %s",

remoteDbName, remoteTableName, Util.getRootCauseMessage(e));

} finally {

close(rs, conn);

}

return tableSchema;

}

@Override

protected String[] getTableTypes() {

return new String[]{

"TABLE", "PARTITIONED TABLE", "VIEW", "MATERIALIZED VIEW", "FOREIGN TABLE"

};

}

@Override

protected Type jdbcTypeToDoris(JdbcFieldSchema fieldSchema) {

String verticaType = fieldSchema.getDataTypeName().orElse("unknown");

switch (verticaType) {

case "Integer":

return ScalarType.BIGINT;

case "Numeric":

case "Decimal":

case "Number": {

int precision = fieldSchema.getColumnSize().orElse(0);

int scale = fieldSchema.getDecimalDigits().orElse(0);

return createDecimalOrStringType(precision, scale);

}

case "Real":

case "Float":

case "Float8":

case "Double Precision":

return ScalarType.DOUBLE;

case "Timestamp":

case "TimestampTz": {

// vertica can support microsecond

int scale = fieldSchema.getDecimalDigits().orElse(0);

if (scale > 6) {

scale = 6;

}

return ScalarType.createDatetimeV2Type(scale);

}

case "Date":

return ScalarType.DATEV2;

case "Boolean":

return ScalarType.BOOLEAN;

case "Char":

case "Character":

case "Varchar":

case "Character Varying":

case "Long Varchar":

case "Uuid":

case "Binary":

case "Varbinary":

case "Binary Varying":

case "Long Varbinary":

case "Raw":

case "Bytea":

case "Time":

case "TimeTz":

return ScalarType.createStringType();

default:

if (verticaType.startsWith("Interval")) {

return ScalarType.createStringType();

} else if (verticaType.startsWith("Array")) {

LOG.warn(String.format("got array type of Vertica: %s", verticaType));

return ScalarType.createStringType();

// return createArrayType(fieldSchema);

}

LOG.error(String.format("got unsupported type of Vertica: %s", verticaType));

return Type.UNSUPPORTED;

}

}

}
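The mapping rules in jdbcTypeToDoris can be tried without a Doris source tree. The sketch below restates a subset of them with plain strings in place of Doris `Type` objects, so the class, the method, and the returned type names are illustrative only.

```java
class VerticaTypeMappingSketch {
    /** Maps a Vertica type name to the name of the Doris type chosen above. */
    static String toDorisTypeName(String verticaType, int scale) {
        switch (verticaType) {
            case "Integer":
                return "BIGINT";
            case "Float":
            case "Double Precision":
                return "DOUBLE";
            case "Boolean":
                return "BOOLEAN";
            case "Date":
                return "DATEV2";
            case "Timestamp":
            case "TimestampTz":
                // The scale is capped at 6 fractional digits, as in the class above.
                return "DATETIMEV2(" + Math.min(scale, 6) + ")";
            case "Char":
            case "Varchar":
            case "Long Varchar":
            case "Uuid":
            case "Varbinary":
            case "Time":
                return "STRING";
            default:
                // Interval and Array types are read as strings.
                if (verticaType.startsWith("Interval") || verticaType.startsWith("Array")) {
                    return "STRING";
                }
                return "UNSUPPORTED";
        }
    }

    public static void main(String[] args) {
        System.out.println(toDorisTypeName("TimestampTz", 9));
        System.out.println(toDorisTypeName("Array[Int8]", 0));
    }
}
```

Reading arrays and intervals as strings trades type fidelity for simplicity, which matches the commented-out createArrayType call in the class above.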

For the exact directory location, refer to PostgreSQLJdbcExecutor.

Add class: VerticaJdbcExecutor

package org.apache.doris.jdbc;

import com.google.common.collect.Lists;

import org.apache.doris.common.jni.vec.ColumnType;

import org.apache.doris.common.jni.vec.ColumnType.Type;

import org.apache.doris.common.jni.vec.ColumnValueConverter;

import org.apache.doris.common.jni.vec.VectorTable;

import org.apache.log4j.Logger;

import java.io.BufferedInputStream;

import java.io.ByteArrayOutputStream;

import java.io.InputStream;

import java.math.BigDecimal;

import java.sql.Date;

import java.sql.ResultSetMetaData;

import java.sql.SQLException;

import java.sql.Timestamp;

import java.time.LocalDate;

import java.time.LocalDateTime;

import java.time.OffsetDateTime;

import java.util.ArrayList;

import java.util.Arrays;

import java.util.List;

public class VerticaJdbcExecutor extends BaseJdbcExecutor {

private static final Logger LOG = Logger.getLogger(VerticaJdbcExecutor.class);

public VerticaJdbcExecutor(byte[] thriftParams) throws Exception {

super(thriftParams);

}

@Override

protected void initializeBlock(

int columnCount,

String[] replaceStringList,

int batchSizeNum,

VectorTable outputTable) {

for (int i = 0; i < columnCount; ++i) {

if (outputTable.getColumnType(i).getType() == Type.DATETIME

|| outputTable.getColumnType(i).getType() == Type.DATETIMEV2) {

block.add(new Object[batchSizeNum]);

} else if (outputTable.getColumnType(i).getType() == Type.STRING

|| outputTable.getColumnType(i).getType() == Type.ARRAY) {

block.add(new Object[batchSizeNum]);

} else {

block.add(outputTable.getColumn(i).newObjectContainerArray(batchSizeNum));

}

}

}

@Override

protected Object getColumnValue(int columnIndex, ColumnType type, String[] replaceStringList)

throws SQLException {

switch (type.getType()) {

case BOOLEAN:

return resultSet.getObject(columnIndex + 1, Boolean.class);

case TINYINT:

case SMALLINT:

case INT:

return resultSet.getObject(columnIndex + 1, Integer.class);

case BIGINT:

return resultSet.getObject(columnIndex + 1, Long.class);

case FLOAT:

return resultSet.getObject(columnIndex + 1, Float.class);

case DOUBLE:

return resultSet.getObject(columnIndex + 1, Double.class);

case DECIMALV2:

case DECIMAL32:

case DECIMAL64:

case DECIMAL128:

return resultSet.getObject(columnIndex + 1, BigDecimal.class);

case DATE:

case DATEV2:

java.sql.Date date = resultSet.getObject(columnIndex + 1, java.sql.Date.class);

if (date == null) {

return null;

} else {

return date.toLocalDate();

}

case DATETIME:

case DATETIMEV2:

java.sql.Timestamp ts = resultSet.getObject(columnIndex + 1, java.sql.Timestamp.class);

if (ts == null) {

return null;

} else {

return ts.toLocalDateTime();

}

case CHAR:

case VARCHAR:

case STRING:

Object data = resultSet.getObject(columnIndex + 1);

if (data == null) {

return null;

} else if (data instanceof java.sql.Array) {

java.sql.Array array = (java.sql.Array) data;

Object arr = array.getArray();

if (arr instanceof long[]) {

return Arrays.toString((long[]) arr);

} else if (arr instanceof Long[]) {

return Arrays.toString((Long[]) arr);

} else if (arr instanceof String[]) {

return Arrays.toString((String[]) arr);

} else if (arr instanceof double[]) {

return Arrays.toString((double[]) arr);

}else if (arr instanceof Double[]) {

return Arrays.toString((Double[]) arr);

} else if (arr instanceof char[]) {

return Arrays.toString((char[]) arr);

} else if (arr instanceof Character[]) {

return Arrays.toString((Character[]) arr);

} else if (arr instanceof BigDecimal[]) {

return Arrays.toString((BigDecimal[]) arr);

} else {

LOG.error(String.format("unsupported array type of class: %s",arr.getClass().getName()));

return Arrays.toString(new String[]{});

}

} else if (data instanceof java.sql.Time) {

return timeToString((java.sql.Time) data);

} else if (data instanceof byte[]) {

return new String((byte[]) data);

} else {

return data.toString();

}

case ARRAY:

java.sql.Array array = resultSet.getArray(columnIndex + 1);

return array == null ? null : convertArrayToList(array.getArray());

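case BINARY:

// A null stream means SQL NULL; try-with-resources skips closing a null resource.

try (InputStream is = resultSet.getBinaryStream(columnIndex + 1);

ByteArrayOutputStream bs = new ByteArrayOutputStream()) {

if (is == null) {

return null;

}

byte[] buffer = new byte[4096];

int bytesRead;

while ((bytesRead = is.read(buffer)) != -1) {

bs.write(buffer, 0, bytesRead);

}

return bs.toByteArray();

} catch (java.io.IOException ex) {

// Surface read failures instead of silently falling through to the default branch.

throw new SQLException("Failed to read binary column " + (columnIndex + 1), ex);

}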

default:

throw new IllegalArgumentException("Unsupported Runtime column type: " + type.getType());

}

}

@Override

protected ColumnValueConverter getOutputConverter(ColumnType columnType, String replaceString) {

switch (columnType.getType()) {

case DATETIME:

case DATETIMEV2:

return createConverter(

input -> {

if (input instanceof Timestamp) {

return ((Timestamp) input).toLocalDateTime();

} else if (input instanceof OffsetDateTime) {

return ((OffsetDateTime) input).toLocalDateTime();

} else {

return input;

}

},

LocalDateTime.class);

case CHAR:

return createConverter(input -> trimSpaces(input.toString()), String.class);

case VARCHAR:

case STRING:

return createConverter(

input -> {

if (input instanceof java.sql.Time) {

return timeToString((java.sql.Time) input);

} else if (input instanceof byte[]) {

return verticaByteArrayToHexString((byte[]) input);

} else {

return input.toString();

}

},

String.class);

case ARRAY:

return createConverter(

(Object input) ->

convertArray((List<?>) input, columnType.getChildTypes().get(0)),

List.class);

default:

return null;

}

}

private static String verticaByteArrayToHexString(byte[] bytes) {

StringBuilder hexString = new StringBuilder("\\x");

for (byte b : bytes) {

hexString.append(String.format("%02x", b & 0xff));

}

return hexString.toString();

}

private List<Object> convertArrayToList(Object array) {

if (array == null) {

return null;

}

int length = java.lang.reflect.Array.getLength(array);

List<Object> list = new ArrayList<>(length);

for (int i = 0; i < length; i++) {

Object element = java.lang.reflect.Array.get(array, i);

list.add(element);

}

LOG.debug(String.format("got array size: %d", list.size()));

return list;

}

private List<?> convertArray(List<?> array, ColumnType type) {

if (array == null) {

return null;

}

LOG.debug(String.format("starting to convert nonempty array: %s", array));

switch (type.getType()) {

case DATE:

case DATEV2: {

List<LocalDate> result = new ArrayList<>();

for (Object element : array) {

if (element == null) {

result.add(null);

} else {

result.add(((Date) element).toLocalDate());

}

}

return result;

}

case DATETIME:

case DATETIMEV2: {

List<LocalDateTime> result = new ArrayList<>();

for (Object element : array) {

if (element == null) {

result.add(null);

} else {

if (element instanceof Timestamp) {

result.add(((Timestamp) element).toLocalDateTime());

} else if (element instanceof OffsetDateTime) {

result.add(((OffsetDateTime) element).toLocalDateTime());

} else {

result.add((LocalDateTime) element);

}

}

}

return result;

}

case ARRAY:

List<List<?>> resultArray = Lists.newArrayList();

for (Object element : array) {

if (element == null) {

resultArray.add(null);

} else {

List<?> nestedList = convertArrayToList(element);

resultArray.add(convertArray(nestedList, type.getChildTypes().get(0)));

}

}

return resultArray;

default:

return array;

}

}

}
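Two helpers in the executor above are self-contained enough to try in isolation: the hex rendering of binary values and the reflective array-to-list conversion. The sketch below copies their logic into a standalone class (`ExecutorHelpersSketch` is a made-up name) with the logging removed.

```java
import java.lang.reflect.Array;
import java.util.ArrayList;
import java.util.List;

class ExecutorHelpersSketch {
    /** Renders bytes as a backslash-x prefix followed by lowercase hex digits. */
    static String toHexString(byte[] bytes) {
        StringBuilder hex = new StringBuilder("\\x");
        for (byte b : bytes) {
            // Mask to 0..255 so negative bytes do not sign-extend.
            hex.append(String.format("%02x", b & 0xff));
        }
        return hex.toString();
    }

    /** Copies any Java array (primitive or object) into a List, boxing primitives. */
    static List<Object> arrayToList(Object array) {
        if (array == null) {
            return null;
        }
        int length = Array.getLength(array);
        List<Object> list = new ArrayList<>(length);
        for (int i = 0; i < length; i++) {
            list.add(Array.get(array, i));
        }
        return list;
    }

    public static void main(String[] args) {
        System.out.println(toHexString(new byte[]{10, (byte) 255}));
        System.out.println(arrayToList(new long[]{1L, 2L}));
    }
}
```

Using `java.lang.reflect.Array` means one code path covers `long[]`, `Long[]`, `String[]`, and nested arrays alike, at the cost of boxing each primitive element.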

Modify class org.apache.doris.catalog.JdbcResource

public static final String JDBC_VERTICA = "jdbc:vertica";

public static final String VERTICA = "VERTICA";

public static String parseDbType(String url) throws DdlException {

if (url.startsWith(JDBC_MYSQL) || url.startsWith(JDBC_MARIADB)) {

return MYSQL;

}

// add the Vertica URL check

else if (url.startsWith(JDBC_VERTICA)) {

return VERTICA;

}

throw new DdlException("Unsupported jdbc database type, please check jdbcUrl: " + url);

}
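The dispatch in parseDbType is a plain prefix match on the JDBC URL. A minimal standalone sketch of the same idea follows; it is table-driven rather than an if/else chain, and the class name and the use of `IllegalArgumentException` in place of `DdlException` are assumptions made so that it runs without Doris on the classpath.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class DbTypeSketch {
    // URL prefix -> database type name, checked in insertion order.
    private static final Map<String, String> PREFIX_TO_TYPE = new LinkedHashMap<>();

    static {
        PREFIX_TO_TYPE.put("jdbc:mysql", "MYSQL");
        PREFIX_TO_TYPE.put("jdbc:mariadb", "MYSQL");
        PREFIX_TO_TYPE.put("jdbc:vertica", "VERTICA");
    }

    static String parseDbType(String url) {
        for (Map.Entry<String, String> entry : PREFIX_TO_TYPE.entrySet()) {
            if (url.startsWith(entry.getKey())) {
                return entry.getValue();
            }
        }
        throw new IllegalArgumentException(
                "Unsupported jdbc database type, please check jdbcUrl: " + url);
    }

    public static void main(String[] args) {
        System.out.println(parseDbType("jdbc:vertica://192.168.100.100/db"));
    }
}
```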

Modify class org.apache.doris.datasource.jdbc.client.JdbcClient

public static JdbcClient createJdbcClient(JdbcClientConfig jdbcClientConfig) {

String dbType = parseDbType(jdbcClientConfig.getJdbcUrl());

switch (dbType) {

case JdbcResource.MYSQL:

return new JdbcMySQLClient(jdbcClientConfig);

// add the Vertica data source

case JdbcResource.VERTICA:

return new JdbcVerticaClient(jdbcClientConfig);

default:

throw new IllegalArgumentException("Unsupported DB type: " + dbType);

}

}

Modify class org.apache.doris.jdbc.JdbcExecutorFactory

public static String getExecutorClass(TOdbcTableType type) {

switch (type) {

case MYSQL:

case OCEANBASE:

return "org/apache/doris/jdbc/MySQLJdbcExecutor";

// add the class name of the Vertica executor

case VERTICA:

return "org/apache/doris/jdbc/VerticaJdbcExecutor";

default:

throw new IllegalArgumentException("Unsupported jdbc type: " + type);

}

}

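The class org.apache.doris.thrift.TOdbcTableType is Thrift-generated source, as its annotation @javax.annotation.Generated(value = "Autogenerated by Thrift Compiler (0.16.0)", date = "2025-10-16") shows. Its template file is ./gensrc/thrift/Types.thrift. Inside Doris, every class whose name starts with T followed by an uppercase letter is generated by Thrift.

Modify the template file Types.thrift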

enum TOdbcTableType {

MYSQL,

// add vertica

VERTICA

}

Modify class org.apache.doris.datasource.jdbc.source.JdbcScanNode

private String getJdbcQueryStr() {

// Other DataBase use limit do top n

if (shouldPushDownLimit()

&& (jdbcType == TOdbcTableType.MYSQL

// add vertica

|| jdbcType == TOdbcTableType.VERTICA)) {

sql.append(" LIMIT ").append(limit);

}

}
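The excerpt above only shows the condition that gains a VERTICA branch. As a rough standalone illustration of the idea, the sketch below appends a LIMIT clause only for database types that accept that syntax. The method name, the string-typed database names, and the convention that a negative limit means "no limit" are assumptions, not the actual JdbcScanNode logic.

```java
class LimitPushdownSketch {
    /** Appends " LIMIT n" when the remote database understands the LIMIT clause. */
    static String withLimit(String sql, String dbType, long limit) {
        boolean supportsLimitClause = dbType.equals("MYSQL") || dbType.equals("VERTICA");
        // Assumption: a negative limit means no limit was requested.
        if (limit >= 0 && supportsLimitClause) {
            return sql + " LIMIT " + limit;
        }
        return sql;
    }

    public static void main(String[] args) {
        System.out.println(withLimit("SELECT * FROM t", "VERTICA", 10));
    }
}
```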

Modify class org.apache.doris.catalog.JdbcTable

static {

Map<String, TOdbcTableType> tempMap = new CaseInsensitiveMap();

tempMap.put("mysql", TOdbcTableType.MYSQL);

// add vertica

tempMap.put("vertica", TOdbcTableType.VERTICA);

TABLE_TYPE_MAP = Collections.unmodifiableMap(tempMap);

}

public static String databaseProperName(TOdbcTableType tableType, String name) {

switch (tableType) {

case MYSQL:

return formatName(name, "`", "`", false, false);

// add vertica

case VERTICA:

return formatName(name, "\"", "\"", false, false);

default:

return name;

}

}

public static String properNameWithRemoteName(TOdbcTableType tableType, String remoteName) {

switch (tableType) {

case MYSQL:

return formatNameWithRemoteName(remoteName, "`", "`");

// add vertica

case VERTICA:

return formatNameWithRemoteName(remoteName, "\"", "\"");

default:

return remoteName;

}

}
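Both methods above differ per database only in the quote character: backticks for MySQL, double quotes for Vertica. The sketch below illustrates that choice with a hypothetical `quoteIdentifier` helper. It is not the Doris `formatName` implementation, and doubling an embedded quote character is an assumption based on common SQL identifier rules.

```java
class IdentifierQuoteSketch {
    /** Wraps an identifier in the quote character used by the given database type. */
    static String quoteIdentifier(String dbType, String name) {
        switch (dbType) {
            case "MYSQL":
                return wrap(name, "`");
            case "VERTICA":
                return wrap(name, "\"");
            default:
                // Unknown database types are passed through unquoted.
                return name;
        }
    }

    // Assumption: an embedded quote character is escaped by doubling it.
    private static String wrap(String name, String quote) {
        return quote + name.replace(quote, quote + quote) + quote;
    }

    public static void main(String[] args) {
        System.out.println(quoteIdentifier("VERTICA", "order date"));
        System.out.println(quoteIdentifier("MYSQL", "order date"));
    }
}
```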

Modify class org.apache.doris.catalog.OdbcTable

static {

Map<String, TOdbcTableType> tempMap = new HashMap<>();

tempMap.put("oracle", TOdbcTableType.ORACLE);

// add vertica

tempMap.put("vertica", TOdbcTableType.VERTICA);

TABLE_TYPE_MAP = Collections.unmodifiableMap(tempMap);

}
