Training on the torchvision handwritten-digit dataset (the complete training process)

Downloading the MNIST dataset with torchvision

```python
import torchvision
from torchvision.transforms import ToTensor
import torch
from torch import nn  # needed for nn.Module / nn.Linear in the models below
```

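Images are commonly stored as (height, width, channels).

Here we pass `ToTensor()` as the transform. `ToTensor()`:

1. converts the input to a tensor;
2. reorders the image layout to (channel, height, width);
3. scales pixel values to the range [0, 1] (8-bit values are divided by 255).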

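```python
# Training set (60,000 images) and test set (10,000 images), downloaded to ./data
train_ds = torchvision.datasets.MNIST('data',
                                      train=True,
                                      transform=ToTensor(),
                                      download=True)
test_ds = torchvision.datasets.MNIST('data',
                                     train=False,
                                     transform=ToTensor(),
                                     download=True)

# Wrap the datasets in DataLoaders; train_dl and test_dl are used in the rest of the post
train_dl = torch.utils.data.DataLoader(train_ds, batch_size=64, shuffle=True)
test_dl = torch.utils.data.DataLoader(test_ds, batch_size=64)
```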

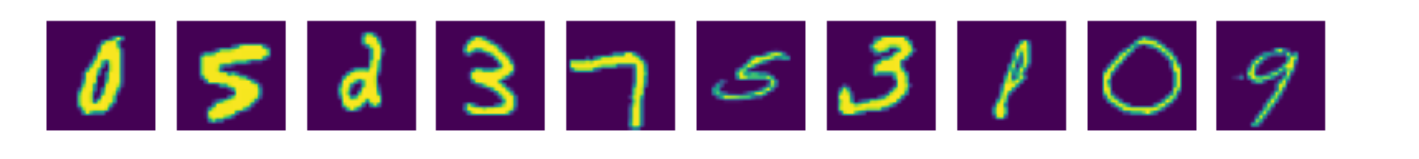

Inspecting the dataset

```python
imgs, labels = next(iter(train_dl))  # take one batch
print(imgs.shape)
# torch.Size([64, 1, 28, 28])

import matplotlib.pyplot as plt
import numpy as np

# Show the first 10 images of the batch in a single row
plt.figure(figsize=(10, 1))
for i, img in enumerate(imgs[:10]):
    npimg = img.numpy()
    npimg = np.squeeze(npimg)  # (1, 28, 28) -> (28, 28)
    plt.subplot(1, 10, i + 1)
    plt.imshow(npimg)
    plt.axis('off')
plt.show()

print(labels[:10])
# tensor([0, 5, 2, 3, 7, 5, 3, 8, 0, 9])
```

Defining the model

```python
class Model(nn.Module):
    def __init__(self):
        super().__init__()
        self.linear_1 = nn.Linear(28*28, 120)
        self.linear_2 = nn.Linear(120, 84)
        self.linear_3 = nn.Linear(84, 10)

    def forward(self, input):
        x = input.view(-1, 1*28*28)  # flatten (b, 1, 28, 28) -> (b, 784)
        x = torch.relu(self.linear_1(x))
        x = torch.relu(self.linear_2(x))
        logits = self.linear_3(x)
        return logits  # raw (un-activated) outputs are called logits
```

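Because we use `CrossEntropyLoss()` as the loss function below, the model must not apply `softmax()` to its output. `CrossEntropyLoss()` computes the cross-entropy loss and applies log-softmax internally. Its input should therefore be raw logits, not already-activated probabilities such as [0.9, 0.04, 0.06].

For example, with `loss_fn = torch.nn.CrossEntropyLoss()` the call is `loss_fn(input, target)`. `input` is the un-activated (pre-softmax) model output of shape (batch, classes). `target` holds class indices such as 0, 1, 2, not one-hot vectors.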
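The following minimal sketch checks two claims from the paragraphs above, using made-up logits for illustration. First, `CrossEntropyLoss` equals log-softmax followed by negative log-likelihood, so it expects raw logits. Second, if you pass it softmax outputs instead, the loss cannot approach 0, even for a confident, correct prediction.

```python
import torch
import torch.nn.functional as F

loss_fn = torch.nn.CrossEntropyLoss()

torch.manual_seed(0)
logits = torch.randn(4, 10)           # raw outputs: batch of 4, 10 classes
target = torch.tensor([3, 0, 9, 1])   # class indices, not one-hot

loss = loss_fn(logits, target)        # argument order: (input, target)
manual = F.nll_loss(F.log_softmax(logits, dim=1), target)
print(torch.allclose(loss, manual))   # True

# A confident, correct prediction for class 0
confident = torch.tensor([[10.0, 0.0, 0.0]])
t0 = torch.tensor([0])
good = loss_fn(confident, t0)                        # near 0
bad = loss_fn(torch.softmax(confident, dim=1), t0)   # softmax effectively applied twice
print(good.item(), bad.item())        # good is near 0, bad stays above 0.5
```

With softmax applied first, every input to the loss lies in [0, 1]. The internal softmax can then never make the correct class dominate, which caps how far the loss can fall.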

Loss function and optimizer

```python
# Define the loss function
loss_fn = torch.nn.CrossEntropyLoss()
```

Moving the model to the GPU (falling back to the CPU if no GPU is available)

```python
device = 'cuda' if torch.cuda.is_available() else 'cpu'
model = Model().to(device)  # initialize the model
opt = torch.optim.SGD(model.parameters(), lr=0.001)
```

Training process

Training function

```python
# Training function: one pass (epoch) over the training set
def train(dl, model, loss_fn, optimizer):
    size = len(dl.dataset)   # number of samples
    num_batches = len(dl)    # number of batches
    train_loss, correct = 0, 0
    model.train()
    for x, y in dl:  # one batch of data
        x, y = x.to(device), y.to(device)
        pred = model(x)
        loss = loss_fn(pred, y)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        with torch.no_grad():
            correct += (pred.argmax(1) == y).type(torch.float).sum().item()
            train_loss += loss.item()  # loss.item() is the mean loss of this batch
    correct /= size            # accuracy over all samples, so divide by the sample count
    train_loss /= num_batches  # average of the per-batch losses
    return correct, train_loss
```
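In `train()` above, the number of correct predictions is summed over batches and then divided by the number of samples. The loss, by contrast, is already a per-batch mean, so it is divided by the number of batches. The sketch below illustrates both. The predictions are made up for illustration, and the batch arithmetic assumes the 60,000 MNIST training images with `batch_size=64`.

```python
import math
import torch

# Made-up logits for 4 samples and 3 classes, plus the true labels
pred = torch.tensor([[2.0, 0.1, 0.3],
                     [0.2, 1.5, 0.1],
                     [0.9, 0.8, 0.1],
                     [0.1, 0.2, 3.0]])
y = torch.tensor([0, 1, 1, 2])

# argmax(1) picks the highest-scoring class per row; compare with the labels and count
n_correct = (pred.argmax(1) == y).type(torch.float).sum().item()
print(n_correct)  # 3.0 (the third sample is predicted as class 0, not 1)

# Batches per epoch for 60,000 training images with batch_size=64
size, batch_size = 60000, 64
num_batches = math.ceil(size / batch_size)          # len(dataloader) when drop_last=False
last_batch = size - (num_batches - 1) * batch_size  # samples in the final, partial batch
print(num_batches, last_batch)  # 938 32
```

The last batch holds only 32 samples. Averaging the per-batch means gives it the same weight as a full batch, so `train_loss` closely approximates the per-sample mean loss but does not equal it exactly.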

Test function

```python
# Test function: evaluate on the test set without updating any parameters
def test(test_dl, model, loss_fn):
    size = len(test_dl.dataset)
    num_batches = len(test_dl)
    test_loss, correct = 0, 0
    model.eval()
    with torch.no_grad():
        for x, y in test_dl:
            x, y = x.to(device), y.to(device)
            pred = model(x)
            loss = loss_fn(pred, y)
            test_loss += loss.item()
            correct += (pred.argmax(1) == y).type(torch.float).sum().item()
    correct /= size
    test_loss /= num_batches
    return correct, test_loss
```
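Two details in `train()` and `test()` are easy to miss. First, gradients accumulate in `.grad` until they are cleared, which is why `optimizer.zero_grad()` runs before every `loss.backward()`. Second, no computation graph is recorded inside `torch.no_grad()`. Here is a minimal sketch using a single toy scalar parameter (an assumption for illustration, not the model above):

```python
import torch

w = torch.tensor(2.0, requires_grad=True)

# Two backward passes without clearing: d(3w)/dw = 3 is added twice
(w * 3).backward()
(w * 3).backward()
accumulated = w.grad.clone()
print(accumulated)  # tensor(6.)

# Clear the gradient; in PyTorch 2.x optimizer.zero_grad() sets .grad to None by default
w.grad = None
(w * 3).backward()
fresh = w.grad.clone()
print(fresh)        # tensor(3.)

# Inside torch.no_grad(), results do not track gradients (as in test())
with torch.no_grad():
    out = w * 3
print(out.requires_grad)  # False
```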

Training with the fit function

```python
def fit(epochs, train_dl, test_dl, model, loss_fn, opt):
    train_loss = []
    train_acc = []
    test_loss = []
    test_acc = []
    for epoch in range(epochs):
        epoch_acc, epoch_loss = train(train_dl, model, loss_fn, opt)
        epoch_test_acc, epoch_test_loss = test(test_dl, model, loss_fn)
        train_acc.append(epoch_acc)
        train_loss.append(epoch_loss)
        test_acc.append(epoch_test_acc)
        test_loss.append(epoch_test_loss)
        template = ("epoch:{:2d}, train_Loss:{:.5f}, train_acc:{:.5f}, "
                    "test_Loss:{:.5f}, test_acc:{:.5f}")
        print(template.format(epoch, epoch_loss, epoch_acc, epoch_test_loss, epoch_test_acc))
    print('Done')
    return train_loss, train_acc, test_loss, test_acc
```


```python
train_loss, train_acc, test_loss, test_acc = fit(10, train_dl, test_dl, model, loss_fn, opt)
```

Results (after 10 epochs of SGD with lr=0.001, the MLP reaches a test accuracy of about 88.6%)

```
epoch: 0, train_Loss:0.81310, train_acc:0.80535, test_Loss:0.74215, test_acc:0.81870
epoch: 1, train_Loss:0.72248, train_acc:0.81923, test_Loss:0.66442, test_acc:0.83260
epoch: 2, train_Loss:0.65554, train_acc:0.83070, test_Loss:0.60734, test_acc:0.84400
epoch: 3, train_Loss:0.60485, train_acc:0.84162, test_Loss:0.56217, test_acc:0.85440
epoch: 4, train_Loss:0.56517, train_acc:0.85003, test_Loss:0.52724, test_acc:0.86180
epoch: 5, train_Loss:0.53357, train_acc:0.85745, test_Loss:0.49885, test_acc:0.86770
epoch: 6, train_Loss:0.50748, train_acc:0.86377, test_Loss:0.47488, test_acc:0.87430
epoch: 7, train_Loss:0.48581, train_acc:0.86872, test_Loss:0.45535, test_acc:0.87790
epoch: 8, train_Loss:0.46758, train_acc:0.87297, test_Loss:0.43883, test_acc:0.88140
epoch: 9, train_Loss:0.45202, train_acc:0.87667, test_Loss:0.42449, test_acc:0.88580
Done
```

Training with a CNN

```python
class Model(nn.Module):
    def __init__(self):  # input: (b, 1, 28, 28)
        super().__init__()
        self.conv1 = nn.Conv2d(1, 16, kernel_size=3, padding=1)   # -> (b, 16, 28, 28)
        self.max1 = nn.MaxPool2d(3, padding=1, stride=2)         # -> (b, 16, 14, 14)
        self.bn1 = nn.BatchNorm2d(16)
        self.conv2 = nn.Conv2d(16, 32, kernel_size=3, padding=1)  # -> (b, 32, 14, 14)
        self.max2 = nn.MaxPool2d(3, padding=1, stride=2)         # -> (b, 32, 7, 7)
        self.bn2 = nn.BatchNorm2d(32)
        self.linear_1 = nn.Linear(32*7*7, 120)
        self.linear_2 = nn.Linear(120, 10)

    def forward(self, input):
        x = torch.relu(self.conv1(input))
        x = self.max1(x)
        # x = self.bn1(x)
        x = torch.relu(self.conv2(x))
        x = self.max2(x)
        # x = self.bn2(x)
        x = x.view(-1, 32*7*7)
        x = torch.relu(self.linear_1(x))
        logits = self.linear_2(x)
        return logits  # raw (un-activated) outputs are called logits
```
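The input size of `linear_1`, `32*7*7`, follows from the two `MaxPool2d(3, padding=1, stride=2)` layers; the `padding=1` convolutions leave 28x28 unchanged. For max pooling (dilation 1, `ceil_mode=False`), output size = floor((size + 2*padding - kernel) / stride) + 1, so 28 becomes 14 and then 7. The minimal sketch below checks this in two ways: with the formula, and by running a dummy batch through the same layer stack. The helper `pool_out` is hypothetical.

```python
import torch
from torch import nn

def pool_out(size, kernel=3, padding=1, stride=2):
    """Hypothetical helper: MaxPool2d output size (dilation=1, ceil_mode=False)."""
    return (size + 2 * padding - kernel) // stride + 1

print(pool_out(28), pool_out(14))  # 14 7  (MNIST, 28x28)
print(pool_out(32), pool_out(16))  # 16 8  (the same arithmetic for 32x32 CIFAR10 images)

# Trace the shapes through the same conv/pool layers with a dummy batch of 2
layers = nn.Sequential(
    nn.Conv2d(1, 16, kernel_size=3, padding=1), nn.ReLU(),
    nn.MaxPool2d(3, padding=1, stride=2),
    nn.Conv2d(16, 32, kernel_size=3, padding=1), nn.ReLU(),
    nn.MaxPool2d(3, padding=1, stride=2),
)
out = layers(torch.zeros(2, 1, 28, 28))
print(out.shape)  # torch.Size([2, 32, 7, 7])
```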

```python
# Define the loss function
loss_fn = torch.nn.CrossEntropyLoss()
device = 'cuda' if torch.cuda.is_available() else 'cpu'
model = Model().to(device)  # initialize the model
print(model)
opt = torch.optim.SGD(model.parameters(), lr=0.001)
```


```python
train_loss, train_acc, test_loss, test_acc = fit(10, train_dl, test_dl, model, loss_fn, opt)
```

Training on CIFAR10

```python
import torchvision
from torchvision.transforms import ToTensor
import torch
import numpy as np
from torch import nn

train_ds = torchvision.datasets.CIFAR10('data',
                                        train=True,
                                        transform=ToTensor(),
                                        download=True)
test_ds = torchvision.datasets.CIFAR10('data',
                                       train=False,
                                       transform=ToTensor(),
                                       download=True)

train_dl = torch.utils.data.DataLoader(train_ds, batch_size=64, shuffle=True)
test_dl = torch.utils.data.DataLoader(test_ds, batch_size=64)

imgs, labels = next(iter(test_dl))
print(imgs.shape)
# torch.Size([64, 3, 32, 32])
```

```python
class Model(nn.Module):
    def __init__(self):  # input: (b, 3, 32, 32)
        super().__init__()
        self.conv1 = nn.Conv2d(3, 16, kernel_size=3, padding=1)
        self.max1 = nn.MaxPool2d(3, padding=1, stride=2)
        self.bn1 = nn.BatchNorm2d(16)
        # (b, 16, 16, 16)
        self.conv2 = nn.Conv2d(16, 32, kernel_size=3, padding=1)
        self.max2 = nn.MaxPool2d(3, padding=1, stride=2)
        self.bn2 = nn.BatchNorm2d(32)
        # (b, 32, 8, 8)
        self.linear_1 = nn.Linear(32*8*8, 1024)
        self.drop_1 = nn.Dropout(0.2)
        self.linear_2 = nn.Linear(1024, 120)
        self.drop_2 = nn.Dropout(0.2)
        self.linear_3 = nn.Linear(120, 10)

    def forward(self, input):
        x = torch.relu(self.conv1(input))
        x = self.max1(x)
        x = self.bn1(x)
        x = torch.relu(self.conv2(x))
        x = self.max2(x)
        x = self.bn2(x)
        x = x.view(-1, 32*8*8)
        x = torch.relu(self.linear_1(x))
        x = self.drop_1(x)
        x = torch.relu(self.linear_2(x))
        x = self.drop_2(x)
        logits = self.linear_3(x)
        return logits  # raw (un-activated) outputs are called logits
```
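This model contains `Dropout` and `BatchNorm2d` layers, which behave differently in training and evaluation mode. That is why `train()` calls `model.train()` and `test()` calls `model.eval()`. The minimal sketch below uses toy tensors, an assumption for illustration. `BatchNorm1d` stands in for `BatchNorm2d`, since the train/eval mechanism is the same.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Dropout: in train mode it zeroes entries with probability p and scales survivors by 1/(1-p)
drop = nn.Dropout(0.2)
x = torch.ones(1, 1000)
drop.train()
y_train = drop(x)    # a mix of 0.0 and 1.25
drop.eval()
y_eval = drop(x)     # identity in eval mode
print(y_train.unique(), torch.equal(y_eval, x))

# BatchNorm: train mode normalizes with batch statistics, eval mode with running estimates
bn = nn.BatchNorm1d(1)
data = torch.tensor([[1.0], [3.0]])
bn.train()
out_train = bn(data)  # (x - 2) / sqrt(1 + eps), about [-1, 1]; also updates running stats
bn.eval()
out_eval = bn(data)   # uses running_mean = 0.2 and running_var = 1.1 (momentum 0.1)
print(out_train.squeeze(), out_eval.squeeze())
```

The running variance is updated with the unbiased batch variance (2.0 here), so 0.9 * 1 + 0.1 * 2 = 1.1. Likewise, the running mean moves from 0 to 0.1 * 2 = 0.2.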

```python
# Define the loss function
loss_fn = torch.nn.CrossEntropyLoss()
device = 'cuda' if torch.cuda.is_available() else 'cpu'
model = Model().to(device)  # initialize the model
opt = torch.optim.Adam(model.parameters(), lr=0.001)
```


```python
train_loss, train_acc, test_loss, test_acc = fit(20, train_dl, test_dl, model, loss_fn, opt)
```

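```python
imgs, labels = next(iter(test_dl))
print(imgs.shape)
imgs = imgs.to(device)
model.eval()              # disable dropout and use the BatchNorm running statistics
with torch.no_grad():
    pred = model(imgs)
print(pred.argmax(1))     # predicted class indices
print(labels)             # ground-truth labels
```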
