2023 Data Collection and Fusion Technology Practice: Assignment 2

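Assignment ①

Requirements:

· Scrape the 7-day weather forecasts for a given set of cities from China Weather (http://www.weather.com.cn) and save them to a database.

Gitee link for Assignment 1

Implementation: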

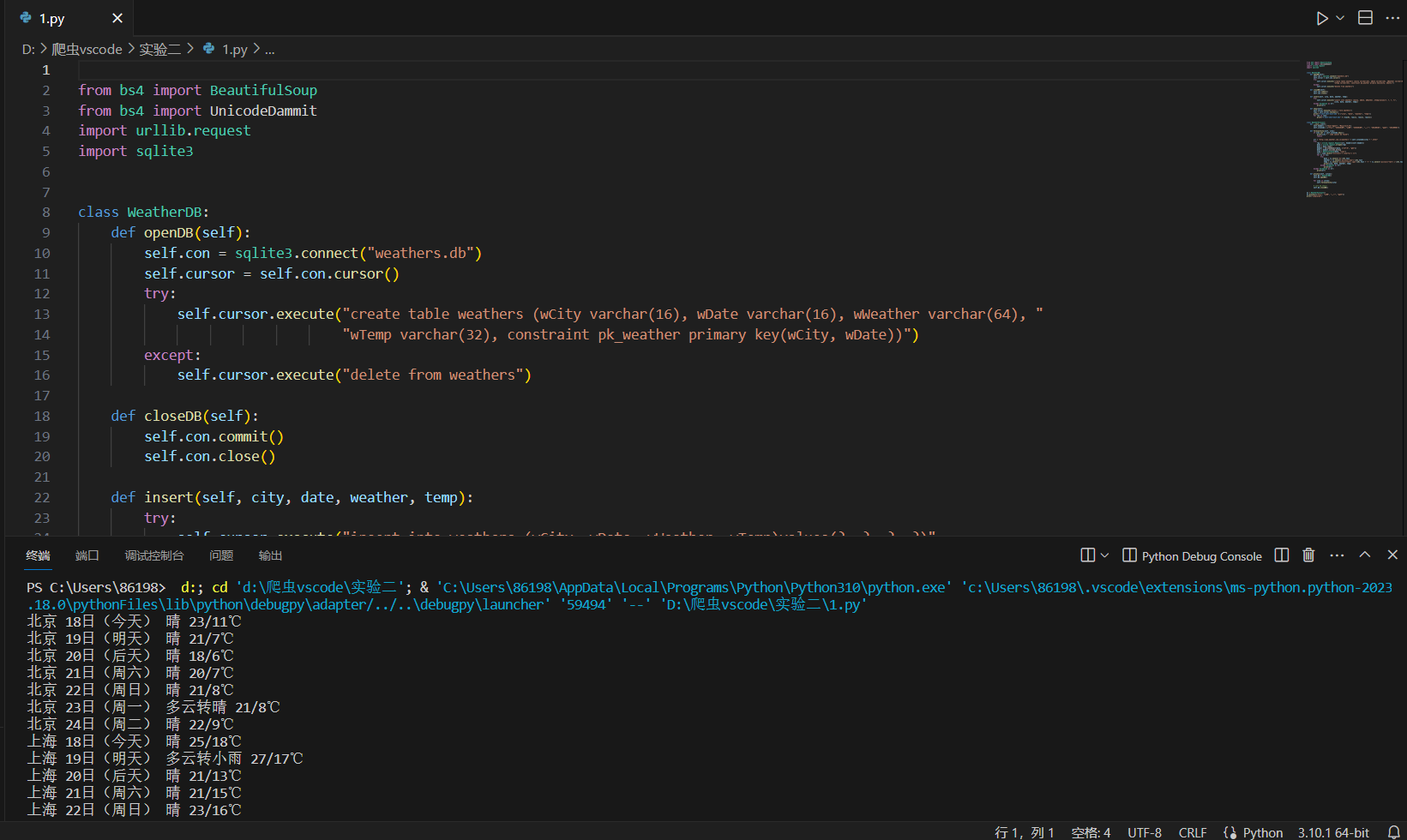

```python
from bs4 import BeautifulSoup
from bs4 import UnicodeDammit
import urllib.request
import sqlite3


class WeatherDB:
    def openDB(self):
        self.con = sqlite3.connect("weathers.db")
        self.cursor = self.con.cursor()
        try:
            # First run: create the table, keyed on (city, date)
            self.cursor.execute("create table weathers (wCity varchar(16), wDate varchar(16), wWeather varchar(64), "
                                "wTemp varchar(32), constraint pk_weather primary key(wCity, wDate))")
        except sqlite3.OperationalError:
            # Table already exists: clear the rows from the previous run
            self.cursor.execute("delete from weathers")

    def closeDB(self):
        self.con.commit()
        self.con.close()

    def insert(self, city, date, weather, temp):
        try:
            self.cursor.execute("insert into weathers (wCity, wDate, wWeather, wTemp) values (?, ?, ?, ?)",
                                (city, date, weather, temp))
        except Exception as err:
            print(err)

    def show(self):
        self.cursor.execute("select * from weathers")  # was misspelled "form"
        rows = self.cursor.fetchall()
        print("%-16s%-16s%-32s%-16s" % ("city", "date", "weather", "temp"))
        for row in rows:
            print("%-16s%-16s%-32s%-16s" % (row[0], row[1], row[2], row[3]))


class WeatherForecast:
    def __init__(self):
        self.headers = {"User-Agent": "Mozilla/5.0"}
        # City codes used in weather.com.cn URLs (Beijing, Shanghai, Guangzhou, Shenzhen)
        self.cityCode = {"北京": "101010100", "上海": "101020100", "广州": "101280101", "深圳": "101280601"}

    def forecastCity(self, city):
        if city not in self.cityCode:
            print(city + " code cannot be found")
            return
        url = "http://www.weather.com.cn/weather/" + self.cityCode[city] + ".shtml"
        try:
            req = urllib.request.Request(url, headers=self.headers)
            data = urllib.request.urlopen(req).read()
            # Let UnicodeDammit choose between utf-8 and gbk
            dammit = UnicodeDammit(data, ["utf-8", "gbk"])
            data = dammit.unicode_markup
            soup = BeautifulSoup(data, "lxml")
            # Each <li> in the 7-day list holds one day's forecast
            lis = soup.select("ul[class='t clearfix'] li")
            for li in lis:
                try:
                    date = li.select('h1')[0].text
                    weather = li.select('p[class="wea"]')[0].text
                    temp = li.select('p[class="tem"] span')[0].text + "/" + li.select('p[class="tem"] i')[0].text
                    print(city, date, weather, temp)
                    self.db.insert(city, date, weather, temp)  # save the row (previously only printed)
                except Exception as err:
                    print(err)
        except Exception as err:
            print(err)

    def process(self, cities):
        self.db = WeatherDB()
        self.db.openDB()
        for city in cities:
            self.forecastCity(city)
        self.db.show()
        self.db.closeDB()


ws = WeatherForecast()
ws.process(["北京", "上海", "广州", "深圳"])
print("completed")
```
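The `weathers` table created in `openDB` declares `(wCity, wDate)` as a composite primary key, so inserting the same city and date twice fails and `insert` only prints the error. The minimal sketch below, using an in-memory SQLite database and made-up placeholder rows, shows that behaviour and one possible alternative, SQLite's `insert or replace`, which overwrites the existing row instead. The helpers `insert_row` and `upsert_row` are hypothetical names for illustration.

```python
import sqlite3

# In-memory database so the sketch leaves no file behind
con = sqlite3.connect(":memory:")
cur = con.cursor()
# Same schema as in openDB: (wCity, wDate) is the composite primary key
cur.execute("create table weathers (wCity varchar(16), wDate varchar(16), wWeather varchar(64), "
            "wTemp varchar(32), constraint pk_weather primary key(wCity, wDate))")


def insert_row(city, date, weather, temp):
    """Plain insert: return False when (city, date) is already stored."""
    try:
        cur.execute("insert into weathers values (?, ?, ?, ?)", (city, date, weather, temp))
        return True
    except sqlite3.IntegrityError:
        return False


def upsert_row(city, date, weather, temp):
    """Insert, or overwrite the row that has the same (city, date)."""
    cur.execute("insert or replace into weathers values (?, ?, ?, ?)", (city, date, weather, temp))


# Placeholder values, not real forecast data
first = insert_row("Beijing", "day 1", "sunny", "25/15")
duplicate = insert_row("Beijing", "day 1", "cloudy", "24/14")
upsert_row("Beijing", "day 1", "cloudy", "24/14")
rows = cur.execute("select * from weathers").fetchall()
con.commit()
```

Clearing the table with `delete from weathers` on every run, as `openDB` does, also avoids the conflict; `insert or replace` is mainly useful if old rows should be kept between runs.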

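Results:

Reflections:

Working through the course example deepened my understanding of the BeautifulSoup (bs4) library, in particular selecting elements with CSS selectors and decoding pages with UnicodeDammit.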

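Assignment ②

Requirements:

· Requirement: use the requests and BeautifulSoup libraries to scrape stock information from a targeted site and store it in a database.

· Tip: open Chrome's F12 developer tools to capture network traffic, find the URL used to load the stock list, and analyze the values the API returns; adjust the API's request parameters as required. The URL shows that parameters such as f1 and f2 select different values, so parameters can be added or removed as needed.

Gitee link for Assignment 2

Implementation: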
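Because the captured URL carries a `cb=jQuery...` parameter, the API responds with JSONP: a JSON object wrapped in a JavaScript callback call. One way to analyze the returned values is to strip that wrapper and parse the whole body with `json.loads`, rather than slicing from the first `[` and splitting with a regex. This is a sketch only: `parse_jsonp` is a hypothetical helper, and the `data` → `diff` layout of the sample string is an assumption that should be checked against the real response in the F12 Network panel.

```python
import json


def parse_jsonp(text):
    """Strip a JSONP wrapper like callback({...}); and parse the JSON inside."""
    start = text.index("(")   # first "(" opens the callback call
    end = text.rindex(")")    # last ")" closes it
    return json.loads(text[start + 1:end])


# Hypothetical response body; field values are placeholders, not market data
sample = ('jQuery1124_1601({"rc":0,"data":{"total":2,"diff":['
          '{"f12":"000001","f14":"A","f2":10.5},'
          '{"f12":"000002","f14":"B","f2":8.1}]}});')

payload = parse_jsonp(sample)
for item in payload["data"]["diff"]:
    print(item["f12"], item["f14"], item["f2"])
```

Parsing the full object also exposes metadata such as a total count, which could be used to decide how many pages to request.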

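```python
import json
import re

import requests


def count(s):
    # Number of CJK characters in s; each one is two columns wide in a terminal
    return len([ch for ch in s if '\u4e00' <= ch <= '\u9fff'])


cnt = 3  # number of pages to fetch (20 stocks per page)
# Columns: No., code, name, latest price, change %, change, volume, turnover,
# amplitude, high, low, open, previous close
print("{:<3} {:<5} {:<6} {:<4} {:<5} {:<5} {:<8} {:<9} {:<4} {:<5} {:<4} {:<5} {:<5}".format(
    "序号", "股票代码", "股票名称", "最新报价", "涨跌幅", "涨跌额", "成交量", "成交额", "振幅", "最高", "最低", "今开", "昨收"))
for i in range(cnt):
    url = "http://41.push2.eastmoney.com/api/qt/clist/get?cb=jQuery112408942134990117723_1601810673881&pn=" + \
          str(i + 1) + "&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&fid=f3&fs=m:0+t:6,m:0+t:13,m:0+t:80,m:1+t:2,m:1+t:23"
    r = requests.get(url)
    r.encoding = "UTF-8"  # was misspelled "encodingn"
    # Skip the JSONP prefix up to the start of the stock list
    text = r.text[r.text.index("["):]
    # Each stock is a flat {...} object, so a non-greedy match splits them
    datas = re.findall("{.*?}", text)
    for j in range(len(datas)):
        data = json.loads(datas[j])
        # Shrink the name column by its CJK character count so columns line up
        temp = "{:<5} {:<8} {:<" + str(10 - count(data['f14'])) + \
               "} {:<7} {:<7} {:<7} {:<8} {:<13} {:<6} {:<6} {:<6} {:<6} {:<6}"
        print(temp.format(i * 20 + j + 1, data['f12'], data['f14'], data['f2'], data['f3'], data['f4'],
                          data['f5'], data['f6'], data['f7'], data['f15'], data['f16'], data['f17'], data['f18']))
```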
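The requirement asks for the stock data to be stored in a database, while the loop above only prints it. A minimal SQLite sketch of that step follows; the table name `stocks`, the column names and the `save_stocks` helper are hypothetical, and the mapping from `f` codes to columns simply follows the printed headers. Inside the page loop it could be called as `save_stocks(con, [json.loads(d) for d in datas], start_id=i * 20 + 1)`.

```python
import sqlite3

# Hypothetical mapping from API field codes to column names
COLUMNS = [("f12", "code"), ("f14", "name"), ("f2", "price"), ("f3", "change_pct"),
           ("f4", "change_amt"), ("f5", "volume"), ("f6", "turnover"), ("f7", "amplitude"),
           ("f15", "high"), ("f16", "low"), ("f17", "open"), ("f18", "prev_close")]


def create_table(con):
    # Code and name are text (keeps leading zeros); the rest are numbers
    cols = ", ".join(f"{name} {'text' if name in ('code', 'name') else 'real'}"
                     for _, name in COLUMNS)
    con.execute(f"create table if not exists stocks (id integer primary key, {cols})")


def save_stocks(con, items, start_id=1):
    """Store one page of parsed stock dicts; missing fields become NULL."""
    placeholders = ", ".join("?" for _ in range(len(COLUMNS) + 1))
    rows = [(start_id + k, *(item.get(f) for f, _ in COLUMNS)) for k, item in enumerate(items)]
    con.executemany(f"insert or replace into stocks values ({placeholders})", rows)
    con.commit()


con = sqlite3.connect(":memory:")
create_table(con)
save_stocks(con, [{"f12": "000001", "f14": "A", "f2": 10.5}])  # placeholder row
```

Using the running number as the primary key with `insert or replace` means re-running the script overwrites the previous snapshot instead of failing.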

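Results:

Reflections:

Assignment 2 gave me more practice in locating the URLs of the data requests that a page's JavaScript makes, analyzing the structure of those URLs, and modifying their parameters to fetch exactly the information I need.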
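Since the returned fields are chosen through URL parameters, building the query from a dict makes it easier to change the page (`pn`), page size (`pz`) or requested fields than editing one long string. The sketch below reuses the parameter values from the URL in the code above; the `fields` parameter (a comma-separated list of `f` codes) and leaving out `cb` are assumptions about this API, so compare the generated URL with the request captured in DevTools. `build_url` is a hypothetical helper.

```python
from urllib.parse import urlencode

BASE = "http://41.push2.eastmoney.com/api/qt/clist/get"


def build_url(page, page_size=20, fields=("f12", "f14", "f2", "f3")):
    """Build the stock-list URL; values other than pn/pz/fields come from the captured URL."""
    params = {
        "pn": page,            # page number
        "pz": page_size,       # stocks per page
        "po": 1,
        "np": 1,
        "ut": "bd1d9ddb04089700cf9c27f6f7426281",
        "fltt": 2,
        "invt": 2,
        "fid": "f3",
        "fs": "m:0+t:6,m:0+t:13,m:0+t:80,m:1+t:2,m:1+t:23",
        "fields": ",".join(fields),  # assumed: selects which f-codes are returned
    }
    # Keep ":", "+" and "," literal, as they appear in the captured URL
    return BASE + "?" + urlencode(params, safe=":+,")


url = build_url(2)
```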

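Assignment ③

Requirements:

· Requirement: scrape all institutions in the 2021 main ranking of Chinese universities (https://www.shanghairanking.cn/rankings/bcur/2021), store them in a database, and record a GIF of the F12 debugging and analysis process for inclusion in the blog post.

· Tip: analyze the requests the site sends to find the API that supplies the data.

Gitee link for Assignment 3

Implementation:

Debugging and analysis process: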

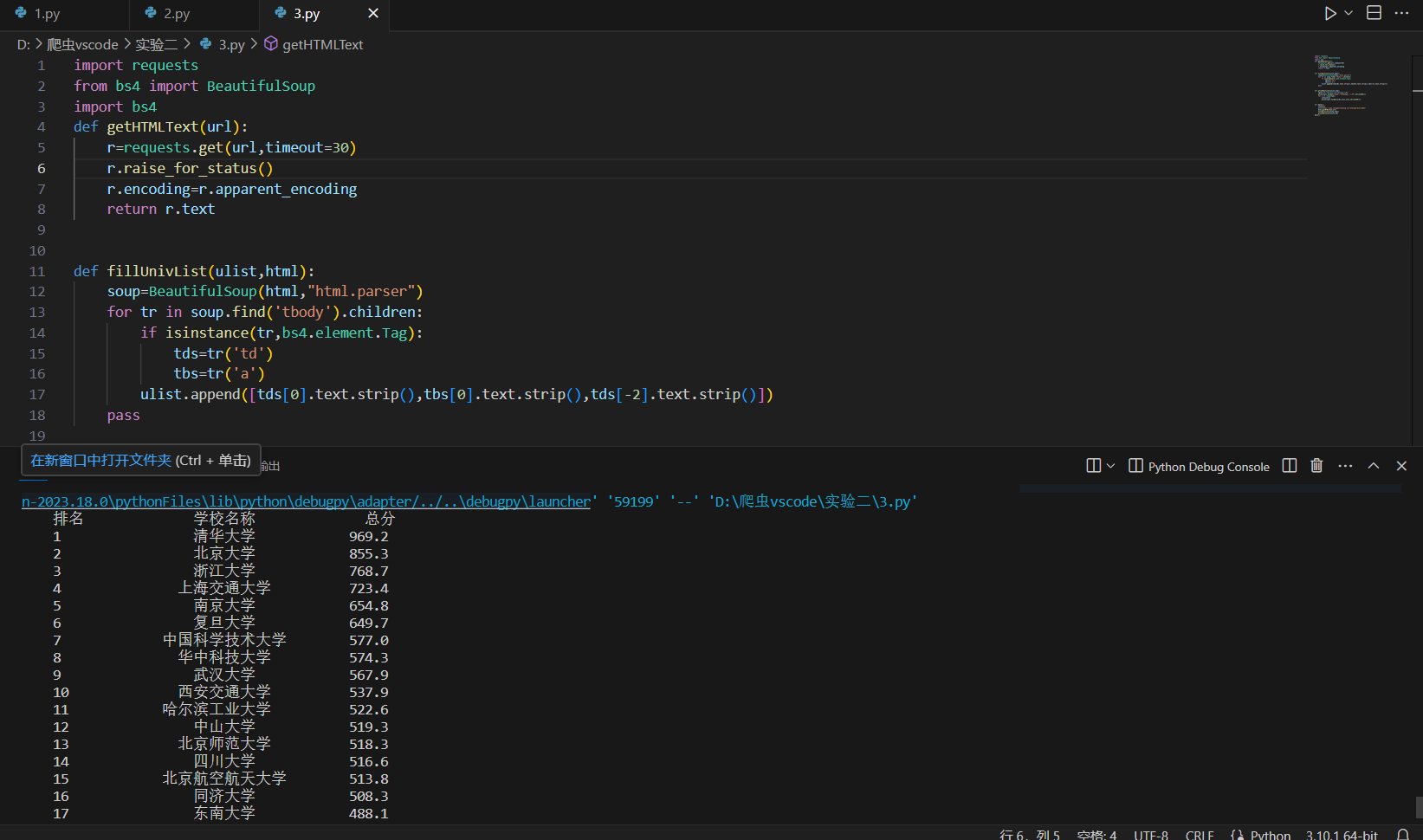

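```python
import bs4
import requests
from bs4 import BeautifulSoup


def getHTMLText(url):
    r = requests.get(url, timeout=30)
    r.raise_for_status()
    r.encoding = r.apparent_encoding
    return r.text


def fillUnivList(ulist, html):
    soup = BeautifulSoup(html, "html.parser")
    for tr in soup.find('tbody').children:
        # Skip the whitespace strings between <tr> tags
        if isinstance(tr, bs4.element.Tag):
            tds = tr('td')  # shorthand for tr.find_all('td')
            tbs = tr('a')
            # rank, university name (first link in the row), total score
            ulist.append([tds[0].text.strip(), tbs[0].text.strip(), tds[-2].text.strip()])


def printUnivList(ulist, num):
    # {3} is the fill character: chr(12288), the full-width space, keeps Chinese names aligned
    tplt = "{0:^10}\t{1:{3}^10}\t{2:^10}"
    print(tplt.format("排名", "学校名称", "总分", chr(12288)))  # rank, university name, total score
    for u in ulist[:num]:  # slicing avoids an IndexError if fewer than num rows were parsed
        print(tplt.format(u[0], u[1], u[2], chr(12288)))


def main():
    uinfo = []
    url = "https://www.shanghairanking.cn/rankings/bcur/2021"
    html = getHTMLText(url)
    fillUnivList(uinfo, html)
    printUnivList(uinfo, 20)


if __name__ == "__main__":
    main()
```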
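Both `count()` in Assignment 2 and the `chr(12288)` fill character (the full-width space, U+3000) in `printUnivList` work around the same problem: Chinese characters take two columns in a typical terminal, but `str.format` widths count characters, so mixed Chinese and ASCII columns drift. A more general alternative, sketched below with the standard `unicodedata` module, measures display width directly. It assumes a monospace terminal that draws East Asian Wide (W) and Fullwidth (F) characters two columns wide; the helper names are hypothetical.

```python
import unicodedata


def display_width(s):
    """Terminal columns needed for s: wide/fullwidth characters count as 2."""
    return sum(2 if unicodedata.east_asian_width(ch) in ("W", "F") else 1 for ch in s)


def ljust_display(s, width):
    """Left-align s in `width` terminal columns, padding with ASCII spaces."""
    return s + " " * max(0, width - display_width(s))


for rank, name in [("1", "清华大学"), ("2", "北京大学")]:
    print(ljust_display(rank, 6) + ljust_display(name, 12) + "|")
```

Unlike `count()`, this also handles full-width punctuation and CJK characters outside the U+4E00 to U+9FFF range.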

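Results:

Reflections:

This experiment taught me how to write the scraped data into a database and inspect it visually in Navicat. It also deepened my understanding of packet capture and of web crawlers in general, and made me more familiar with regular expressions.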
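The requirement for Assignment 3 asks for the ranking to be stored in a database, and the reflection mentions viewing it in Navicat, but the code above only prints the first 20 rows. A minimal sketch of the storage step, assuming SQLite and a hypothetical `universities` table and `save_univ_list` helper, takes the `[rank, name, score]` lists built by `fillUnivList`; the resulting `.db` file can be opened in any SQLite client, Navicat included.

```python
import sqlite3


def save_univ_list(db_path, ulist):
    """Store [rank, name, score] rows in SQLite and return the row count."""
    con = sqlite3.connect(db_path)
    try:
        con.execute("create table if not exists universities "
                    "(rank text, name text primary key, score text)")
        # insert or replace lets the script be re-run without duplicate-key errors
        con.executemany("insert or replace into universities values (?, ?, ?)", ulist)
        con.commit()
        return con.execute("select count(*) from universities").fetchone()[0]
    finally:
        con.close()


# Placeholder rows for illustration; in main() pass the list filled by fillUnivList
n = save_univ_list(":memory:", [["1", "X", "100.0"], ["2", "Y", "90.0"]])
```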