Kubernetes 1.23 environment setup: a hands-on lab (kubeadm and binary methods)

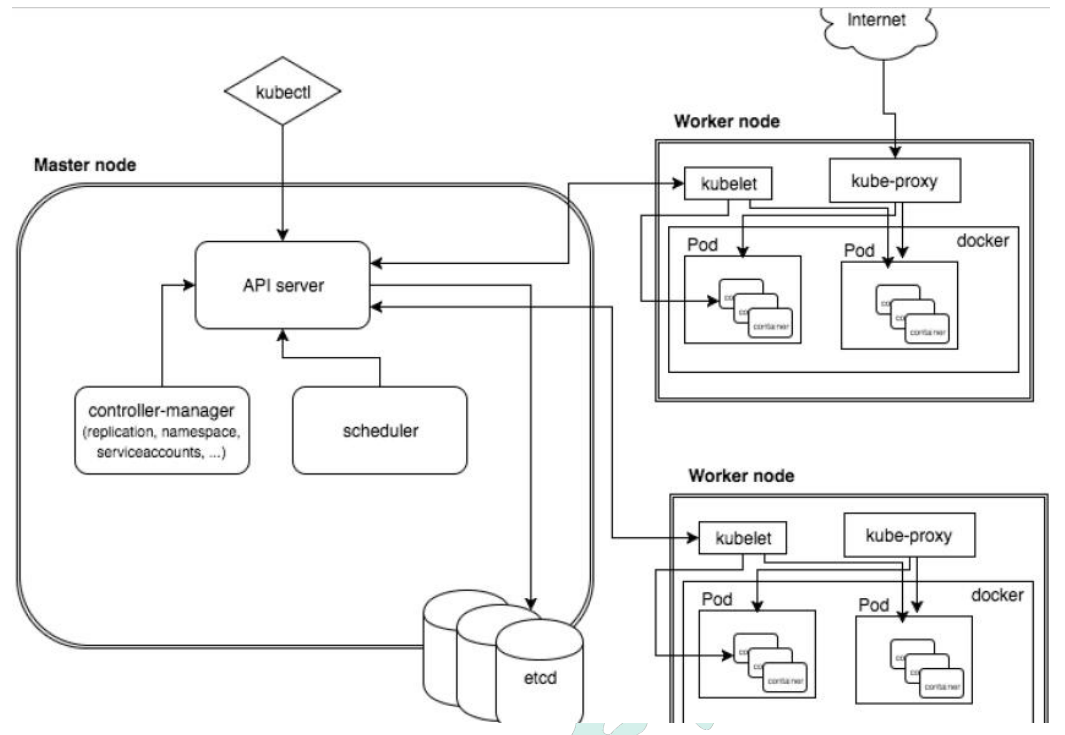

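I. k8s Cluster Architecture Components

Master components

- apiserver: the single entry point to the cluster; it exposes a RESTful API and persists cluster state in etcd

- scheduler: node scheduling; chooses the node on which each application pod is deployed

- controller-manager: runs the routine background tasks of the cluster; each resource type has its own controller

- etcd: the storage system that holds all cluster data

Node components

- kubelet: the master's agent on each node; it manages the containers on that host

- kube-proxy: provides network proxying and implements load balancing for Services

Add-ons

- CoreDNS: resolves names to IPs for the Services (SVC) in the cluster

- dashboard: provides browser-based (B/S) access to the cluster

- Ingress Controller: a Service natively provides only layer-4 proxying; Ingress adds layer-7 (HTTP) proxying

- federation: unified management of multiple k8s clusters across data centers

- prometheus: monitoring for the k8s cluster

- ELK: a unified platform for collecting and analyzing the cluster's logs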

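II. Core k8s Concepts

Containers in the same pod share the network and storage volumes.

2.1 pod

- The smallest deployable unit

- A group of containers

- The containers share one network namespace

- Short-lived (pods are ephemeral)

2.2 controller

- Ensures the desired number of pod replicas

- Deploys applications

- Stateless applications (usable as-is)

- Stateful applications (need specific conditions, e.g. a fixed network identity or persistent storage)

- Ensures that every node runs a copy of the same pod

- One-off tasks and scheduled tasks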

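Pod controller types:

ReplicationController: keeps the number of replicas of a containerized application equal to the user-defined count. If a container exits abnormally, a new pod is created automatically to replace it; if there are more pods than desired, the extra ones are reclaimed. Newer Kubernetes versions recommend ReplicaSet instead of ReplicationController.

ReplicaSet: not fundamentally different from ReplicationController apart from the name, except that ReplicaSet supports set-based selectors.

Deployment: although a ReplicaSet can be used on its own, it is usually managed automatically through a Deployment. This avoids incompatibilities with other mechanisms: for example, a ReplicaSet does not support rolling updates, but a Deployment does.

HPA (HorizontalPodAutoscaler): Horizontal Pod Autoscaling applies to scalable workloads such as Deployments and ReplicaSets. The autoscaling/v1 API scales only on pod CPU utilization. The later v2 API versions (starting with v2alpha1) can also scale on memory and on custom metrics.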
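The HPA controller derives the target replica count from the ratio of the observed metric to its target, as described in the Kubernetes HPA documentation: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The minimal sketch below shows only that arithmetic. The function name is hypothetical. The real controller also applies a tolerance (10% by default) and clamps the result to minReplicas/maxReplicas, and both are omitted here.

```shell
#!/usr/bin/env bash
# Sketch of the HPA scaling formula (integer metrics only):
#   desired = ceil(current_replicas * current_metric / target_metric)
# Tolerance and min/max clamping are deliberately left out.
hpa_desired_replicas() {
  local current=$1 metric=$2 target=$3
  # Integer ceiling division: (a + b - 1) / b
  echo $(( (current * metric + target - 1) / target ))
}

# 4 pods at 90% average CPU with a 50% target -> ceil(7.2) = 8
hpa_desired_replicas 4 90 50
```

For example, 3 pods already at their 50% target stay at 3.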

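StatefulSet: designed for stateful services (Deployment and ReplicaSet are designed for stateless services). Its use cases:

- Stable persistent storage: after a pod is rescheduled, it can still access the same persistent data; implemented with PVCs.

- Stable network identity: after a pod is rescheduled, its pod name and hostname stay the same; implemented with a Headless Service (a Service without a cluster IP).

- Ordered deployment and ordered scale-up: pods are ordered, and deployment or scale-up follows the defined order, from 0 to n-1. Before the next pod starts, all previous pods must be Running and Ready. The StatefulSet controller enforces this through its default OrderedReady pod management policy.

- Ordered scale-down and ordered deletion (from n-1 down to 0).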
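Here is a concrete case of the ordering. A StatefulSet named web with 3 replicas creates pods web-0, web-1 and web-2 in that order, and removes them in reverse when scaled down. The sketch below only prints that order. It illustrates the naming rule and is not something the StatefulSet controller runs; "web" is a hypothetical name.

```shell
#!/usr/bin/env bash
# Print the pod names a StatefulSet creates ("up") or removes ("down"), in order.
statefulset_order() {
  local name=$1 replicas=$2 direction=$3 i
  if [ "$direction" = "up" ]; then
    for ((i = 0; i < replicas; i++)); do echo "${name}-${i}"; done
  else
    for ((i = replicas - 1; i >= 0; i--)); do echo "${name}-${i}"; done
  fi
}

statefulset_order web 3 up     # web-0, web-1, web-2 (one per line)
statefulset_order web 3 down   # web-2, web-1, web-0 (one per line)
```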

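DaemonSet: ensures that all (or some) nodes run a copy of a pod. When a node joins the cluster, a pod is added on it. When a node is removed from the cluster, that pod is garbage-collected. Deleting a DaemonSet deletes all the pods it created.

Typical uses of a DaemonSet:

- Running a cluster storage daemon on every node, such as glusterd or ceph;

- Running a log collection daemon on every node, such as fluentd or logstash;

- Running a monitoring daemon on every node, such as the Prometheus node exporter.

Job: handles batch tasks, i.e. tasks that run only once. It ensures that one or more pods of the batch task terminate successfully.

CronJob: manages time-based Jobs, which either (1) run once at a given point in time, or (2) run periodically at given points in time.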

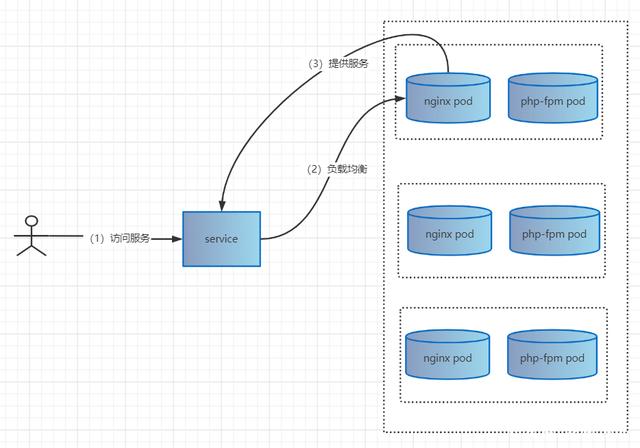

2.3 service

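- Defines the access rules for a group of pods. A Service gives those pods a stable virtual IP and DNS name and load-balances traffic across them.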
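As an illustration, a Service selects its pods by label and forwards a port to them. The helper below is a hypothetical sketch: the function name and the app=<name> label convention are assumptions. It prints a minimal NodePort Service manifest, which can be piped into kubectl apply -f - on a live cluster.

```shell
#!/usr/bin/env bash
# Print a minimal NodePort Service selecting pods labelled app=<name>.
service_manifest() {
  local name=$1 port=$2 target_port=$3
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: ${name}
spec:
  type: NodePort
  selector:
    app: ${name}
  ports:
  - port: ${port}
    targetPort: ${target_port}
    protocol: TCP
EOF
}

service_manifest nginx 80 80
# On a live cluster: service_manifest nginx 80 80 | kubectl apply -f -
```

kubectl create deployment nginx labels its pods app=nginx, so this selector would match the nginx test Deployment created later in this article.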

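III. Kubernetes Network Communication

The Kubernetes network model assumes that all pods live in a flat network space where every pod can reach any other pod "directly" through its IP. On GCE (Google Compute Engine) this network model is available out of the box, and Kubernetes simply assumes the network already exists. In a private cloud, that assumption cannot be made. We have to implement the network ourselves: first connect the Docker containers on different nodes to each other, then run Kubernetes on top.

- Between containers in the same pod: lo (the loopback interface)

- Between pods: an overlay network

- Between pods and Services: iptables rules on each node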

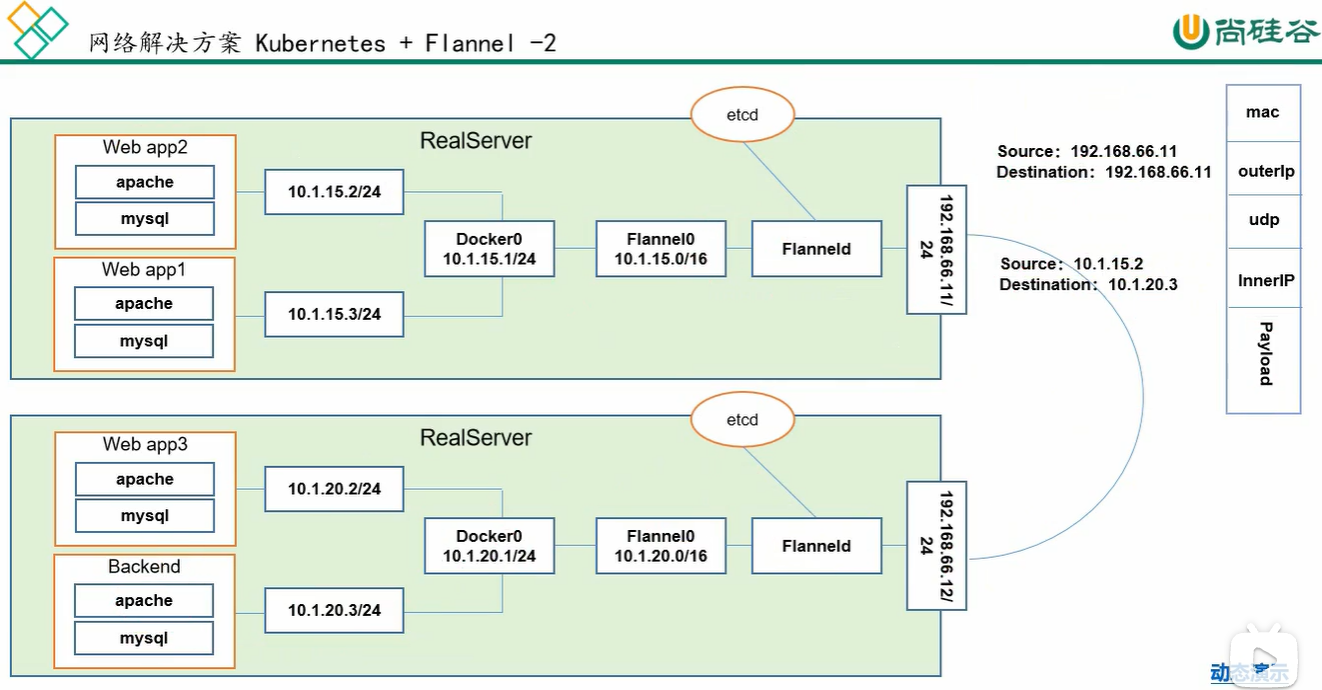

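flannel is a network fabric designed by the CoreOS team for Kubernetes. In short, it gives the Docker containers created on different nodes virtual IP addresses that are unique across the whole cluster. It also builds an overlay network between these addresses, and packets travel through it unchanged to the target container.

What etcd provides for Flannel:

- Stores and manages the IP address ranges that Flannel can allocate

- Lets Flannel watch the actual address of each pod in etcd and build and maintain a pod-to-node routing table in memory

Summary:

Communication inside one pod: containers in the same pod share one network namespace and therefore one Linux network stack, so they communicate over localhost.

Pod1 to Pod2:

- Pod1 and Pod2 on different hosts: a pod's address is in the same subnet as docker0, but the docker0 subnet and the host NIC are in two completely different IP subnets. Traffic between nodes can only pass through the hosts' physical NICs. Flannel therefore associates each pod IP with the IP of the node it runs on, and this association lets the pods reach each other;

- Pod1 and Pod2 on the same host: the docker0 bridge forwards the request directly to Pod2 without going through flannel.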
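With flannel's default settings, each node leases one /24 subnet out of the cluster network (10.244.0.0/16 in this article). The first three octets of a pod IP therefore identify the node that hosts it. The sketch below assumes that default subnet length of 24, and its function names are hypothetical. It only compares addresses and reports which path a packet between two pods would take. The local bridge is docker0 or, with the CNI plugin, cni0.

```shell
#!/usr/bin/env bash
# Assumes flannel's default per-node subnet length of /24.
node_subnet() {
  # 10.244.1.7 -> 10.244.1.0/24
  echo "${1%.*}.0/24"
}

pod_path() {
  if [ "$(node_subnet "$1")" = "$(node_subnet "$2")" ]; then
    echo "same node: forwarded by the local bridge (docker0/cni0)"
  else
    echo "different nodes: encapsulated by flannel"
  fi
}

pod_path 10.244.1.7 10.244.1.9
pod_path 10.244.1.7 10.244.2.3
```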

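Pod to Service: for performance reasons, this traffic is currently maintained and forwarded entirely by iptables (or by LVS, i.e. IPVS mode).

Pod to the external network: the pod sends the request, the routing table is consulted, and the packet is forwarded to the host's NIC. After the host NIC completes route selection, iptables performs MASQUERADE, changing the source IP to the host NIC's IP, and the request is sent on to the external server.

External network to pod: through a Service.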

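IV. Building the k8s Cluster

4.1 Cluster topology planning

- Single-master cluster (prone to a single point of failure)

- Multi-master cluster

4.2 Server hardware requirements

- Test

  - master: 2 CPU cores, 4 GB RAM, 20 GB disk

  - node: 4 CPU cores, 8 GB RAM, 40 GB disk

- Production

  - Higher specifications

4.3 Building the k8s cluster

(1) kubeadm deployment

Introduction (kubeadm)

kubeadm is the official tool for bootstrapping a cluster with kubeadm init and kubeadm join. Reference documentation:

https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm/

Installation requirements (kubeadm)

- One or more machines running CentOS 7 or later

- Minimum hardware: 2 GB RAM, 2 CPUs, 30 GB disk

- Full network connectivity between all machines in the cluster

- Internet access, to pull images

- Swap disabled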

Server plan (kubeadm)

| role | ip (public / private) |
|---|---|
| master | 123.60.82.54/192.168.0.171 |
| node1 | 121.37.138.144/192.168.0.128 |
| node2 | 124.70.138.160/192.168.0.118 |

System initialization (kubeadm)

- Upgrade the kernel (all three machines)

# Import the ELRepo public key
rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
# Install the ELRepo repository
yum install -y https://www.elrepo.org/elrepo-release-7.el7.elrepo.noarch.rpm
# List the available kernel-lt (long-term support) versions
yum --disablerepo=\* --enablerepo=elrepo-kernel list --showduplicates | grep kernel-lt
# Install the new kernel
yum --disablerepo=\* --enablerepo=elrepo-kernel install -y kernel-lt-5.4.161-1.el7.elrepo
# Remove the old kernel-related packages (exact versions differ per machine; check with: rpm -qa | grep kernel)
yum remove kernel-headers-3.10.0-1160.15.2.el7.x86_64 kernel-tools-libs-3.10.0-1062.el7.x86_64 kernel-tools-3.10.0-1062.el7.x86_64
# Install the matching new kernel packages
yum --disablerepo=\* --enablerepo=elrepo-kernel install -y kernel-lt-tools-5.4.161-1.el7.elrepo kernel-lt-tools-libs-5.4.161-1.el7.elrepo kernel-lt-headers-5.4.161-1.el7.elrepo --skip-broken
# Make the new kernel the default boot entry
grub2-set-default 0
# Reboot the server
reboot
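grub2-set-default 0 assumes that the new kernel is the first GRUB menu entry. A common way to confirm this before rebooting is to list the menu entries with their indices. The sketch below wraps that awk one-liner in a hypothetical function. It assumes the BIOS configuration path /etc/grub2.cfg; on UEFI systems the file is usually /etc/grub2-efi.cfg.

```shell
#!/usr/bin/env bash
# List GRUB2 menu entries with their index, e.g. "0 : CentOS Linux (5.4.161-...) 7 (Core)".
list_grub_entries() {
  local cfg=${1:-/etc/grub2.cfg}
  # Split on single quotes: $1 is "menuentry ", $2 is the entry title
  awk -F"'" '$1 == "menuentry " { print i++ " : " $2 }' "$cfg"
}

# Usage: list_grub_entries            (UEFI: list_grub_entries /etc/grub2-efi.cfg)
# After the reboot, confirm the running kernel with: uname -r
```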

- Disable the firewall (all three machines)

systemctl stop firewalld
systemctl disable firewalld

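- Disable SELinux (all three machines)

# Disable permanently by editing the SELinux config file (takes effect after a reboot)
sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
# Disable for the current boot (switches to permissive mode)
setenforce 0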

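- Disable swap (all three machines)

# Disable for the current boot
swapoff -a
# Disable permanently by commenting out the swap entries in /etc/fstab
sed -i 's/.*swap.*/#&/' /etc/fstab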
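By default, kubelet refuses to start while swap is on, so it is worth confirming that no swap area is still active. /proc/swaps contains one header line plus one line per active swap area. The hypothetical helper below counts the latter; its path argument exists only to make it testable.

```shell
#!/usr/bin/env bash
# Count active swap areas: /proc/swaps = 1 header line + 1 line per device.
active_swap_count() {
  local swaps=${1:-/proc/swaps}
  awk 'NR > 1' "$swaps" | wc -l
}

# Usage: [ "$(active_swap_count)" -eq 0 ] && echo "swap is off"
```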

- Set the hostname (all three machines; use node1 and node2 on the worker machines)

hostnamectl set-hostname master

- Add the hostnames and IPs of master, node1 and node2 to /etc/hosts (on the master)

cat >> /etc/hosts << EOF
192.168.0.171 master
192.168.0.128 node1
192.168.0.118 node2
EOF
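cat >> appends the entries again every time it runs. The sketch below is an idempotent variant built around a hypothetical helper. It skips a hostname that already appears as a whole word in the file, whatever IP it is mapped to.

```shell
#!/usr/bin/env bash
# Append "IP NAME" to a hosts file unless NAME is already present as a whole word.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  if ! grep -qw -- "$name" "$file"; then
    echo "$ip $name" >> "$file"
  fi
}

# Usage on the master:
# add_host_entry /etc/hosts 192.168.0.171 master
# add_host_entry /etc/hosts 192.168.0.128 node1
# add_host_entry /etc/hosts 192.168.0.118 node2
```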

- Load the IPVS kernel modules (all three machines)

cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack
modprobe -- overlay
modprobe -- br_netfilter
EOF
# Since kernel 4.19, nf_conntrack_ipv4 has been merged into nf_conntrack; on an older kernel (e.g. the stock 3.10) load nf_conntrack_ipv4 instead
chmod 755 /etc/sysconfig/modules/ipvs.modules
bash /etc/sysconfig/modules/ipvs.modules
lsmod | grep -e ip_vs -e nf_conntrack
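The same script may have to run on machines with both old and new kernels. In that case, the conntrack module name can be chosen from the kernel version. The sketch below uses GNU sort -V for the version comparison. The helper name is hypothetical, and 4.19 is the cut-over version mentioned above.

```shell
#!/usr/bin/env bash
# Print the conntrack module name for a kernel release string (default: running kernel).
conntrack_module() {
  local release=${1:-$(uname -r)}
  local version=${release%%-*}   # "5.4.161-1.el7.elrepo.x86_64" -> "5.4.161"
  # If 4.19 sorts first (or is equal), the kernel is >= 4.19
  if [ "$(printf '%s\n' 4.19 "$version" | sort -V | head -n1)" = "4.19" ]; then
    echo nf_conntrack
  else
    echo nf_conntrack_ipv4
  fi
}

# Usage: modprobe -- "$(conntrack_module)"
conntrack_module 5.4.161-1.el7.elrepo.x86_64
conntrack_module 3.10.0-1160.el7.x86_64
```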

- Set up time synchronization (all three machines)

# Synchronize the clock once against a public NTP server
yum install ntpdate -y
ntpdate time.windows.com

- Adjust kernel parameters

cat > /etc/sysctl.d/k8s.conf <<EOF
net.bridge.bridge-nf-call-iptables=1
net.bridge.bridge-nf-call-ip6tables=1
net.ipv4.ip_forward=1
vm.swappiness=0
vm.overcommit_memory=1
vm.panic_on_oom=0
fs.file-max=52706963
fs.nr_open=52706963
net.netfilter.nf_conntrack_max=2310720
EOF
# vm.swappiness=0: avoid swap; only use it when the system is about to run out of memory
# vm.overcommit_memory=1: do not check whether enough physical memory is available
# vm.panic_on_oom=0: on out-of-memory, run the OOM killer instead of panicking the kernel
# fs.file-max=52706963: system-wide maximum number of open file handles
# fs.nr_open=52706963: maximum number of file descriptors a single process can open
# net.netfilter.nf_conntrack_max=2310720: maximum number of tracked connections
sysctl -p /etc/sysctl.d/k8s.conf
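Every sysctl key corresponds to a file under /proc/sys, with the dots replaced by slashes: net.ipv4.ip_forward maps to /proc/sys/net/ipv4/ip_forward. The hypothetical checker below builds on that mapping. It prints every key whose live value differs from the file, and it only handles single-value keys like the ones above. The SYSCTL_ROOT override exists only for testing.

```shell
#!/usr/bin/env bash
# Map a sysctl key to its /proc/sys file: net.ipv4.ip_forward -> /proc/sys/net/ipv4/ip_forward
sysctl_path() {
  echo "${SYSCTL_ROOT:-/proc/sys}/$(echo "$1" | tr . /)"
}

# Print "key: expected X, actual Y" for every mismatch in a key=value file.
check_sysctl_file() {
  local conf=$1 key value path actual
  while IFS='=' read -r key value || [ -n "$key" ]; do
    key=${key//[[:space:]]/}
    value=${value//[[:space:]]/}
    # Skip blank lines and comments
    [ -z "$key" ] && continue
    [ "${key:0:1}" = "#" ] && continue
    path=$(sysctl_path "$key")
    if [ ! -r "$path" ]; then
      echo "$key: not available on this kernel"
      continue
    fi
    actual=$(<"$path")
    [ "$actual" = "$value" ] || echo "$key: expected $value, actual $actual"
  done < "$conf"
}

# Usage after sysctl -p (no output means every value matches):
# check_sysctl_file /etc/sysctl.d/k8s.conf
```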

Install Docker (all three machines) (kubeadm)

[root@master ~]$ wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

[root@master ~]$ yum -y install docker-ce-18.06.1.ce-3.el7

[root@master ~]$ mkdir /etc/docker

# Configure a registry mirror (a purchased Huawei Cloud SWR accelerator is used here)

cat > /etc/docker/daemon.json <<EOF

{

"insecure-registries": ["registry.access.redhat.com","quay.io","harbor.likf.space"],

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": ["https://7b0266c80f7c42b4b1a61185aaf53494.mirror.swr.myhuaweicloud.com"],

"log-driver": "json-file"

}

EOF

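# Docker only reads daemon.json at startup, so restart it after any change; since Docker is not running yet, enabling and starting it here is enough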

[root@master ~]$ systemctl enable docker --now

Install the Kubernetes components (all three machines) (kubeadm)

# Configure the Alibaba Cloud mirror repository

# https://developer.aliyun.com/mirror/kubernetes?spm=a2c6h.13651102.0.0.20dd1b11Ftu9TX

[root@master ~]$ cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

# Install kubeadm, kubelet and kubectl

# Versions change frequently, so pin the version explicitly

[root@master ~]$ yum -y install kubelet-1.18.0 kubeadm-1.18.0 kubectl-1.18.0

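# Enable kubelet at boot (until kubeadm init/join has configured it, kubelet restarts in a loop; this is expected)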

[root@master ~]$ systemctl enable kubelet && systemctl start kubelet

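Deploy the master and worker nodes (kubeadm)

# Run on the master:

# The default image registry k8s.gcr.io is not reachable from mainland China, so use the Alibaba Cloud mirror instead

# On Huawei Cloud, set --apiserver-advertise-address to the private IP

# --service-cidr can be any range, as long as it does not overlap --pod-network-cidr

# --pod-network-cidr is set to 10.244.0.0/16, which matches flannel's default network and simplifies deploying flannel later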

[root@master ~]$ kubeadm init --apiserver-advertise-address=192.168.0.171 --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.18.0 --service-cidr=10.192.0.0/12 --pod-network-cidr=10.244.0.0/16
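The only rule given above for --service-cidr is that it must not overlap --pod-network-cidr. The bash sketch below checks two IPv4 CIDRs for overlap using shell integer arithmetic. The function names are hypothetical, and IPv6 is not handled.

```shell
#!/usr/bin/env bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print "first last" (as integers) for a CIDR such as 10.244.0.0/16.
cidr_range() {
  local len=${1#*/} start size
  size=$(( 1 << (32 - len) ))
  start=$(( $(ip_to_int "${1%/*}") & ~(size - 1) ))
  echo "$start $(( start + size - 1 ))"
}

# Succeed (exit status 0) if the two CIDRs share at least one address.
cidr_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_range "$1")"
  read -r s2 e2 <<< "$(cidr_range "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}

if cidr_overlap 10.192.0.0/12 10.244.0.0/16; then
  echo "service and pod CIDRs overlap"
else
  echo "no overlap"
fi
```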

# Configure kubectl for the current user

[root@master ~]$mkdir -p $HOME/.kube

[root@master ~]$sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]$sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Run the following on each node to join it to the master (the token and hash come from the output of your own kubeadm init)

[root@node1 ~]$ kubeadm join 192.168.0.171:6443 --token x55nve.wcdpivqw2cqxjrkw --discovery-token-ca-cert-hash sha256:c5c1967d3521371e5a5890d9a5e0209fb7232ccd5e5cec0b7fd553868b6719af
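By default, the bootstrap token created by kubeadm init is valid for 24 hours. If it has expired or the join command was lost, kubeadm token create --print-join-command on the master prints a fresh one. The --discovery-token-ca-cert-hash value is the SHA-256 of the CA certificate's DER-encoded public key. The openssl pipeline below is the one given in the kubeadm documentation, wrapped in a hypothetical function. It assumes an RSA CA key, which is what kubeadm generates by default.

```shell
#!/usr/bin/env bash
# Compute the value for --discovery-token-ca-cert-hash (without the "sha256:" prefix).
ca_cert_hash() {
  local cert=${1:-/etc/kubernetes/pki/ca.crt}
  openssl x509 -pubkey -noout -in "$cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Usage on the master:
# echo "sha256:$(ca_cert_hash)"
# kubeadm token create --print-join-command
```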

# Check the status (nodes stay NotReady until a CNI network plugin is deployed)

[root@master ~]$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady master 7m18s v1.18.0

node1 NotReady <none> 30s v1.18.0

node2 NotReady <none> 21s v1.18.0

[root@master ~]$

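Deploy the CNI network plugin (kubeadm)

The image registry referenced by the default flannel manifest is not reachable, so change it to a Docker Hub registry with sed before applying the manifest.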
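The exact sed command is not shown in the original. The hedged sketch below assumes that the manifest references the quay.io/coreos/flannel image, as the v0.12-era kube-flannel.yml that creates kube-flannel-ds-amd64 does. FLANNEL_IMAGE is a hypothetical placeholder for whichever Docker Hub repository you use. The image tag is kept.

```shell
#!/usr/bin/env bash
# Replace the flannel image repository in a manifest, keeping the tag.
rewrite_flannel_image() {
  local manifest=$1 new_repo=$2
  sed -i "s#quay.io/coreos/flannel#${new_repo}#g" "$manifest"
  # Show the resulting image lines so the change can be checked
  grep -n 'image:' "$manifest"
}

# Usage (FLANNEL_IMAGE is a placeholder, e.g. a Docker Hub repository name):
# rewrite_flannel_image ../kube-flannel.yaml "$FLANNEL_IMAGE"
```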

# Apply the flannel plugin

[root@master ~]$ kubectl apply -f ../kube-flannel.yaml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

# Once all of these pods are Running, the network plugin is deployed

[root@master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7ff77c879f-22q2x 1/1 Running 0 11m

coredns-7ff77c879f-7c8gj 1/1 Running 0 11m

etcd-master 1/1 Running 0 12m

kube-apiserver-master 1/1 Running 0 12m

kube-controller-manager-master 1/1 Running 0 12m

kube-flannel-ds-amd64-2ndxd 1/1 Running 0 2m7s

kube-flannel-ds-amd64-5c8nz 1/1 Running 0 107s

kube-flannel-ds-amd64-mvp4v 1/1 Running 0 9m9s

kube-proxy-22wx5 1/1 Running 0 2m7s

kube-proxy-bwnrg 1/1 Running 0 11m

kube-proxy-wfltc 1/1 Running 0 107s

kube-scheduler-master 1/1 Running 0 12m

[root@master ~]#

# Check the nodes again on the master

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 10m v1.18.0

node1 Ready <none> 40s v1.18.0

node2 Ready <none> 20s v1.18.0

[root@master ~]#
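In a larger cluster, the STATUS column can be checked with a short filter instead of reading the table by eye. The sketch below uses a hypothetical function name. It reads kubectl get nodes output on stdin and prints the nodes whose STATUS does not start with Ready.

```shell
#!/usr/bin/env bash
# Print the names of nodes whose STATUS column does not start with "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 !~ /^Ready/ { print $1 }'
}

# Usage on the master (no output means every node is Ready):
# kubectl get nodes | not_ready_nodes
```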

Test the k8s cluster (kubeadm)

Create an nginx Deployment (and therefore a pod) in the cluster and expose it as a NodePort Service

[root@master ~]# kubectl create deployment nginx --image=nginx

[root@master ~]# kubectl expose deployment nginx --port=80 --type=NodePort

[root@master ~]# kubectl get pod,svc
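To reach nginx from outside, look up the NodePort that was allocated (from the 30000-32767 range by default) and request it on any node's IP. kubectl can print the port directly with a JSONPath query. The hypothetical helper below instead extracts it from the PORT(S) column shown by kubectl get svc, e.g. 80:31234/TCP, taking the first port when several are listed.

```shell
#!/usr/bin/env bash
# Extract the node port from a PORT(S) value such as "80:31234/TCP".
nodeport_from_ports() {
  local ports=${1%%/*}   # "80:31234/TCP" -> "80:31234"
  echo "${ports#*:}"     # -> "31234"
}

# Usage on the master, using kubectl's JSONPath output:
# NODE_PORT=$(kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}')
# curl "http://192.168.0.171:${NODE_PORT}"
nodeport_from_ports "80:31234/TCP"
```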

(2) Binary deployment

Download the binary packages from GitHub and deploy each component manually.

Server plan (binary)

| role | ip | components |
|---|---|---|
| master | 192.168.0.171 | kube-apiserver, kube-controller-manager, kube-scheduler, docker, etcd, harbor |
| node1 | 192.168.0.128 | kubelet, kube-proxy, docker, etcd, flannel |
| node2 | 192.168.0.118 | kubelet, kube-proxy, docker, etcd, flannel |

Operating system initialization (binary)

Same as for kubeadm.

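Self-signed certificates with cfssl (binary)

The components inside a k8s cluster also communicate using certificates; without a valid certificate, access is restricted. Both access within the cluster and access from outside it require issued certificates.

With the binary deployment method, you have to issue these certificates yourself.

Self-signed certificates: imagine working at a company that issues access cards. There are usually two kinds, employee cards and visitor cards, and they carry different permissions. An employee card opens every door in the company, while a visitor card is restricted. These access cards play the role of the self-signed certificates.

(1) The cfssl certificate tool

cfssl is an open-source certificate management tool. It generates certificates from JSON files and is more convenient than openssl. Run the following on any one server; the master node is used here.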

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64

mv cfssl_linux-amd64 /usr/local/bin/cfssl

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

chmod +x /usr/local/bin/cfssl*

(2) Create directories for the certificate files

# Run on the master (in /opt)

[root@master opt]$ mkdir -p TLS/{ca,etcd,k8s}

[root@master opt]$ cd TLS/ca

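(3) Create a self-signed CA

##############################################

# ca.expiry: validity period of the CA certificate

# CN: common name; kube-apiserver extracts this field from a client certificate as the request's user name, and browsers use it to check whether a website is legitimate

# O: organization; kube-apiserver extracts this field as the group the requesting user belongs to

# C: country

# ST: state or province

# L: locality (city)

##############################################

# Create the CA certificate signing request (CSR) configuration

[root@master ca]$ cat > ca-csr.json <<EOF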

{

"CN": "etcd CA",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing"

}

]

}

EOF

# Generate the CA's certificate signing request (ca.csr), private key (ca-key.pem) and certificate (ca.pem)

[root@master ca]$ cfssl gencert -initca ca-csr.json | cfssljson -bare ca

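# Signing configuration the CA uses when issuing certificates

###########################################################

# signing.default.expiry: global default; issued certificates are valid for 10 years (87600h)

# profiles: several profiles can be defined; one named kubernetes is defined here and selected at signing time, e.g. -config=ca-config.json -profile=kubernetes

##########################################################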

[root@master ca]$ cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

(4) Use the self-signed CA to issue the etcd HTTPS certificate

[root@master ca]$ cd /opt/TLS/etcd

# Create the certificate signing request configuration

[root@master etcd]$ cat > etcd-csr.json <<EOF

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"192.168.0.171",

"192.168.0.128",

"192.168.0.118"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Shanghai",

"L": "Shanghai",

"O": "k8s",

"OU": "System"

}

]

}

EOF

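## Note: the hosts field above must contain the internal cluster IPs of all etcd nodes; using domain names instead would be more flexible

# Generate the certificate: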

[root@master etcd]$ cfssl gencert -ca /opt/TLS/ca/ca.pem -ca-key /opt/TLS/ca/ca-key.pem -config /opt/TLS/ca/ca-config.json --profile kubernetes etcd-csr.json | cfssljson -bare etcd

# If cfssl prints [WARNING] This certificate lacks a "hosts" field., it can be ignored here
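Before copying the certificate around, it can be checked with openssl. The check confirms that the certificate chains to the CA and shows which IPs its Subject Alternative Name contains. The sketch below uses a hypothetical function name and the paths from this section.

```shell
#!/usr/bin/env bash
# Verify a certificate against a CA and print its Subject Alternative Names.
check_cert() {
  local ca=$1 cert=$2
  openssl verify -CAfile "$ca" "$cert" || return 1
  # etcd.pem should list 127.0.0.1 and the three node IPs here
  openssl x509 -in "$cert" -noout -text | grep -A1 'Subject Alternative Name' || true
}

# Usage:
# check_cert /opt/TLS/ca/ca.pem /opt/TLS/etcd/etcd.pem
```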

Deploy the etcd cluster (binary)

(1) Download the binaries

URL: https://github.com/etcd-io/etcd/releases

(2) Issue the HTTPS certificate for etcd

This is identical to step (4) of the cfssl section above; the etcd.pem and etcd-key.pem generated there in /opt/TLS/etcd are used here.

(3) Deploy the etcd cluster

All of the following is done on the master node.

# Put the downloaded etcd tarball in /opt/src/etcd

[root@master etcd]# pwd

/opt/src/etcd

[root@master etcd]# ls

etcd-v3.4.14-linux-amd64.tar.gz

[root@master etcd]#

# Create the working directory and extract the tarball

[root@master etcd]$ tar zxvf etcd-v3.4.14-linux-amd64.tar.gz -C /opt/

[root@master etcd]$ cd /opt/

[root@master etcd]$ mv etcd-v3.4.14-linux-amd64/ etcd-v3.4.14

[root@master etcd]$ ln -s etcd-v3.4.14/ etcd

[root@master opt]# ll

total 12

lrwxrwxrwx 1 root root 13 Feb 19 18:25 etcd -> etcd-v3.4.14/

drwxr-xr-x 5 630384594 600260513 4096 Feb 19 18:36 etcd-v3.4.14

drwxr-xr-x 5 root root 4096 Feb 19 17:51 src

drwxr-xr-x 4 root root 4096 Feb 19 17:57 TLS

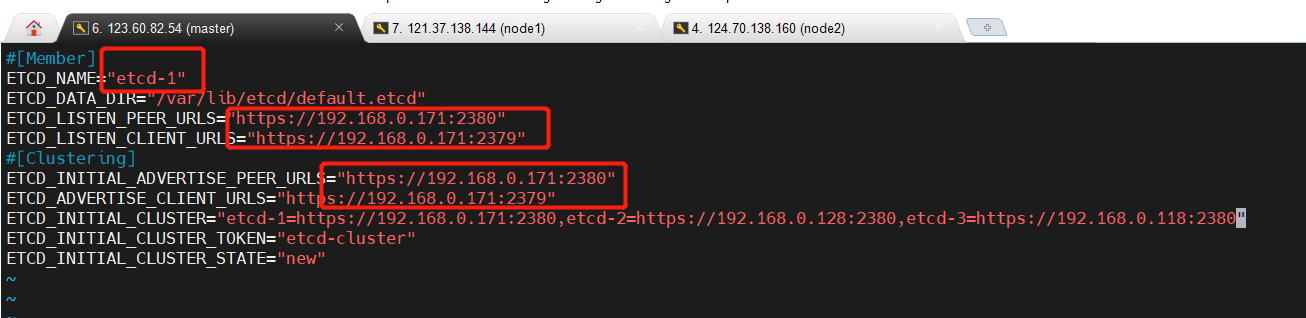

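# Create the config and certificate directories, then etcd.conf (adjust the IPs for each machine)

[root@master opt]$ mkdir -p /opt/etcd/{cfg,ssl}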

[root@master opt]$ cat > /opt/etcd/cfg/etcd.conf << EOF

#[Member]

ETCD_NAME="etcd-1"

ETCD_DATA_DIR="/data/etcd/etcd-server"

ETCD_LISTEN_PEER_URLS="https://192.168.0.171:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.0.171:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.0.171:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.0.171:2379"

ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.0.171:2380,etcd-2=https://192.168.0.118:2380,etcd-3=https://192.168.0.128:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

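EOF

# Create the data directory referenced above

[root@master opt]$ mkdir -p /data/etcd/etcd-server

# Notes

# ETCD_NAME: member name, unique within the cluster

# ETCD_DATA_DIR: data directory

# ETCD_LISTEN_PEER_URLS: listen address for peer (cluster) traffic

# ETCD_LISTEN_CLIENT_URLS: listen address for client access

# ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the cluster

# ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients

# ETCD_INITIAL_CLUSTER: addresses of all cluster members

# ETCD_INITIAL_CLUSTER_TOKEN: cluster token

# ETCD_INITIAL_CLUSTER_STATE: state when joining; new means a new cluster, existing means joining an existing cluster

# Create the systemd unit etcd.service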

[root@master opt]$ cat > /usr/lib/systemd/system/etcd.service << EOF

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/opt/etcd/cfg/etcd.conf

ExecStart=/opt/etcd/etcd \

--cert-file=/opt/etcd/ssl/server.pem \

--key-file=/opt/etcd/ssl/server-key.pem \

--peer-cert-file=/opt/etcd/ssl/server.pem \

--peer-key-file=/opt/etcd/ssl/server-key.pem \

--trusted-ca-file=/opt/etcd/ssl/ca.pem \

--peer-trusted-ca-file=/opt/etcd/ssl/ca.pem \

--logger=zap

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF
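etcd.service expects ca.pem, server.pem and server-key.pem in /opt/etcd/ssl. The steps above, however, produced ca.pem in /opt/TLS/ca and etcd.pem / etcd-key.pem in /opt/TLS/etcd. The original does not show the copy step. The sketch below fills it in, assuming exactly those paths and that the etcd certificate is simply renamed to server*.pem. Run it before the scp below, so that the certificates are copied along with /opt/etcd. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Copy the CA and etcd certificates into the file names etcd.service expects.
install_etcd_certs() {
  local ca_dir=$1 etcd_dir=$2 dest=$3
  mkdir -p "$dest"
  cp "$ca_dir/ca.pem"         "$dest/ca.pem"
  cp "$etcd_dir/etcd.pem"     "$dest/server.pem"
  cp "$etcd_dir/etcd-key.pem" "$dest/server-key.pem"
  chmod 600 "$dest/server-key.pem"   # private key readable by root only
}

# Usage on the master:
# install_etcd_certs /opt/TLS/ca /opt/TLS/etcd /opt/etcd/ssl
```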

# Copy all the files to node1 and node2

[root@master opt]$ scp -r /opt/etcd/ root@node1:/opt/

[root@master opt]$ scp /usr/lib/systemd/system/etcd.service root@node1:/usr/lib/systemd/system/

[root@master opt]$ scp -r /opt/etcd/ root@node2:/opt/

[root@master opt]$ scp /usr/lib/systemd/system/etcd.service root@node2:/usr/lib/systemd/system/

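# Note: after copying, edit /opt/etcd/cfg/etcd.conf on each node and create the data directory /data/etcd/etcd-server there as well

# Finally, start etcd and enable it at boot (on all three nodes)

[root@master opt]$ systemctl daemon-reload

# (Start etcd on all members at about the same time: on a new cluster, systemctl start etcd on the first member waits until enough peers are up)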

[root@master opt]$ systemctl start etcd

[root@master opt]$ systemctl enable etcd

# Check the cluster status

[root@master etcd]$ /opt/etcd/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.0.171:2379,https://192.168.0.128:2379,https://192.168.0.118:2379" endpoint status --write-out=table

+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |

+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| https://192.168.0.171:2379 | 7ec2542a2723e9e3 | 3.4.14 | 20 kB | true | false | 13 | 8 | 8 | |

| https://192.168.0.128:2379 | a25c294d3a391c7c | 3.4.14 | 20 kB | false | false | 13 | 8 | 8 | |

| https://192.168.0.118:2379 | 486e0511355102be | 3.4.14 | 20 kB | false | false | 13 | 8 | 8 | |

+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

[root@master etcd]$ ETCDCTL_API=3 /opt/etcd/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.0.171:2379,https://192.168.0.128:2379,https://192.168.0.118:2379" endpoint health --write-out=table

+----------------------------+--------+-------------+-------+

| ENDPOINT | HEALTH | TOOK | ERROR |

+----------------------------+--------+-------------+-------+

| https://192.168.0.171:2379 | true | 12.577721ms | |

| https://192.168.0.128:2379 | true | 11.207208ms | |

| https://192.168.0.118:2379 | true | 13.157181ms | |

+----------------------------+--------+-------------+-------+

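When copying the files to the other nodes, change the following in /opt/etcd/cfg/etcd.conf:

- ETCD_NAME: it must match ETCD_INITIAL_CLUSTER, i.e. etcd-3 on node1 (192.168.0.128) and etcd-2 on node2 (192.168.0.118).

- The local IP in ETCD_LISTEN_PEER_URLS, ETCD_LISTEN_CLIENT_URLS, ETCD_INITIAL_ADVERTISE_PEER_URLS and ETCD_ADVERTISE_CLIENT_URLS.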
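These edits can be scripted with GNU sed. The sketch below uses a hypothetical function and assumes the etcd.conf layout shown above. It sets ETCD_NAME and replaces the IP in the four local URL settings, leaving ETCD_INITIAL_CLUSTER untouched.

```shell
#!/usr/bin/env bash
# Rewrite a copied etcd.conf for the local member (GNU sed: \| alternation).
localize_etcd_conf() {
  local conf=$1 name=$2 ip=$3
  sed -i \
    -e "s#^ETCD_NAME=.*#ETCD_NAME=\"${name}\"#" \
    -e "s#^\(ETCD_LISTEN_PEER_URLS\|ETCD_LISTEN_CLIENT_URLS\|ETCD_INITIAL_ADVERTISE_PEER_URLS\|ETCD_ADVERTISE_CLIENT_URLS\)=\"https://[0-9.]*:#\1=\"https://${ip}:#" \
    "$conf"
}

# Usage (names must match ETCD_INITIAL_CLUSTER):
# localize_etcd_conf /opt/etcd/cfg/etcd.conf etcd-3 192.168.0.128   # on node1
# localize_etcd_conf /opt/etcd/cfg/etcd.conf etcd-2 192.168.0.118   # on node2
```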

Install Docker

Same as in the kubeadm method.

Install Harbor

Deploy the master components (binary)

(1) Download the binaries

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/README.md

This walkthrough uses v1.20.1 (the version in the directory names below)

(2) Deploy kube-apiserver

Issue certificates

# 1. Create the CA (run in /opt/TLS/k8s)

cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat > ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

[root@master k8s]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca

# 2. Issue the kube-apiserver certificate

cat > server-csr.json << EOF

{

"CN": "kubernetes",

"hosts": [

"10.192.0.1",

"127.0.0.1",

"192.168.0.171",

"192.168.0.118",

"192.168.0.128",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

[root@master k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server
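The first address in the hosts list, 10.192.0.1, is the ClusterIP that Kubernetes assigns to the built-in kubernetes Service. It is the first usable address of --service-cluster-ip-range, which is 10.192.0.0/24 in the apiserver configuration below. If you choose a different range, this SAN must change too. The bash sketch below derives the address; the helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Print the first usable address of an IPv4 CIDR (network address + 1).
first_service_ip() {
  local cidr=$1 ip len a b c d n
  ip=${cidr%/*}; len=${cidr#*/}
  IFS=. read -r a b c d <<< "$ip"
  # Clear the host bits to get the network address, then add 1
  n=$(( ((a << 24) | (b << 16) | (c << 8) | d) & ~((1 << (32 - len)) - 1) ))
  n=$(( n + 1 ))
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

first_service_ip 10.192.0.0/24
```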

Deploy kube-apiserver

# 1. Download the binary tarball to the local machine

[root@master V1.20.1]# pwd

/opt/src/kubernetes/V1.20.1

[root@master V1.20.1]# ls

kubernetes-server-linux-amd64.tar.gz

# 2. Create the working directory and extract the tarball

[root@master opt]# tar zxvf /opt/src/kubernetes/V1.20.1/kubernetes-server-linux-amd64.tar.gz -C /opt/

[root@master opt]# cd /opt/

[root@master opt]# mv kubernetes/ kubernetes-V1.20.1/

[root@master opt]# ln -s kubernetes-V1.20.1/ kubernetes

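# 3. Remove files that are not needed (the source tarball, plus the image tarballs and *.docker_tag files in server/bin)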

[root@master opt]# cd kubernetes

[root@master kubernetes]# rm -rf kubernetes-src.tar.gz

[root@master kubernetes]# cd /opt/kubernetes/server/bin/

[root@master bin]# rm -rf *.tar *tag

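# 4. Create the configuration file /opt/kubernetes/server/bin/cfg/kube-apiserver.conf with the content shown below (first create the directories it uses: mkdir -p /opt/kubernetes/server/bin/{cfg,ssl} /data/k8s/kube-apiserver/logs)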

[root@master cfg]# cat kube-apiserver.conf

KUBE_APISERVER_OPTS="--logtostderr=false \

--v=2 \

--log-dir=/data/k8s/kube-apiserver/logs \

--etcd-servers=https://192.168.0.171:2379,https://192.168.0.118:2379,https://192.168.0.128:2379 \

--bind-address=192.168.0.171 \

--secure-port=6443 \

--advertise-address=192.168.0.171 \

--allow-privileged=true \

--service-cluster-ip-range=10.192.0.0/24 \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \

--authorization-mode=RBAC,Node \

--enable-bootstrap-token-auth=true \

--token-auth-file=/opt/kubernetes/server/bin/cfg/token.csv \

--service-node-port-range=30000-32767 \

--kubelet-client-certificate=/opt/kubernetes/server/bin/ssl/server.pem \

--kubelet-client-key=/opt/kubernetes/server/bin/ssl/server-key.pem \

--tls-cert-file=/opt/kubernetes/server/bin/ssl/server.pem \

--tls-private-key-file=/opt/kubernetes/server/bin/ssl/server-key.pem \

--client-ca-file=/opt/kubernetes/server/bin/ssl/ca.pem \

--service-account-key-file=/opt/kubernetes/server/bin/ssl/ca-key.pem \

--service-account-issuer=api \

--service-account-signing-key-file=/opt/kubernetes/server/bin/ssl/ca-key.pem \

--etcd-cafile=/opt/etcd/ssl/ca.pem \

--etcd-certfile=/opt/etcd/ssl/server.pem \

--etcd-keyfile=/opt/etcd/ssl/server-key.pem \

--requestheader-client-ca-file=/opt/kubernetes/server/bin/ssl/ca.pem \

--proxy-client-cert-file=/opt/kubernetes/server/bin/ssl/server.pem \

--proxy-client-key-file=/opt/kubernetes/server/bin/ssl/server-key.pem \

--requestheader-allowed-names=kubernetes \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-group-headers=X-Remote-Group \

--requestheader-username-headers=X-Remote-User \

--enable-aggregator-routing=true \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/data/k8s/kube-apiserver/logs/audit.log"

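# 5. Copy the certificate files to the ssl directory

[root@master k8s]# cp /opt/TLS/k8s/ca*.pem /opt/TLS/k8s/server*.pem /opt/kubernetes/server/bin/ssl/

# 6. Enable TLS bootstrapping: a kubelet first authenticates with a low-privilege bootstrap token and requests its client certificate from the apiserver; once the certificate is issued, the node connects to the apiserver with that certificate

# Generate a random token

[root@master bin]# head -c 16 /dev/urandom | od -An -t x | tr -d ' '

03b327f4b2581410778681b0636346c0

## Create the token file and put the token generated above into it; each line has the format token,user,uid,"group"

[root@master bin]# cat > /opt/kubernetes/server/bin/cfg/token.csv << EOF
03b327f4b2581410778681b0636346c0,kubelet-bootstrap,10001,"system:node-bootstrapper"
EOF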
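The two steps above, generating a token and writing token.csv, can be combined. The sketch below writes the file in the token,user,uid,"group" format expected by --token-auth-file. The function name is hypothetical; the user, UID and group are the values used in this article. The nodes will need the same token when they bootstrap.

```shell
#!/usr/bin/env bash
# Generate a random 32-hex-character token and write it to a static token file.
write_bootstrap_token() {
  local file=$1 token
  token=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')
  echo "${token},kubelet-bootstrap,10001,\"system:node-bootstrapper\"" > "$file"
  echo "$token"
}

# Usage on the master:
# write_bootstrap_token /opt/kubernetes/server/bin/cfg/token.csv
```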

# 7. Manage kube-apiserver with systemd

[root@master bin]# cat > /usr/lib/systemd/system/kube-apiserver.service << EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/server/bin/cfg/kube-apiserver.conf

ExecStart=/opt/kubernetes/server/bin/kube-apiserver \$KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

# Start kube-apiserver and enable it at boot

[root@master cfg]$ systemctl enable kube-apiserver --now

[root@master cfg]$ systemctl status kube-apiserver

(3) kubectl client configuration and kubeconfig

Official documentation:

https://kubernetes.io/zh/docs/concepts/configuration/organize-cluster-access-kubeconfig/

https://kubernetes.io/docs/reference/generated/kubectl/kubectl-commands

Install kubectl:

[root@master bin]# pwd

/opt/kubernetes/server/bin

[root@master bin]# ll

total 585128

-rwxr-xr-x 1 root root 46665728 Dec 18 2020 apiextensions-apiserver

drwxr-xr-x 2 root root 4096 Feb 20 00:11 cfg

-rwxr-xr-x 1 root root 39219200 Dec 18 2020 kubeadm

-rwxr-xr-x 1 root root 44658688 Dec 18 2020 kube-aggregator

-rwxr-xr-x 1 root root 118128640 Dec 18 2020 kube-apiserver

-rwxr-xr-x 1 root root 112312320 Dec 18 2020 kube-controller-manager

-rwxr-xr-x 1 root root 40230912 Dec 18 2020 kubectl

-rwxr-xr-x 1 root root 113982312 Dec 18 2020 kubelet

-rwxr-xr-x 1 root root 39485440 Dec 18 2020 kube-proxy

-rwxr-xr-x 1 root root 42848256 Dec 18 2020 kube-scheduler

-rwxr-xr-x 1 root root 1630208 Dec 18 2020 mounter

drwxr-xr-x 2 root root 4096 Feb 19 23:21 ssl

[root@master bin]# cp kubectl /usr/local/bin/

# Enable bash completion for kubectl

# Install bash-completion first (yum install bash-completion -y)

[root@master bin]# echo 'source <(kubectl completion bash)' >> ~/.bashrc

[root@master bin]# source ~/.bashrc

(4) Deploy kube-controller-manager

# 1. Create the configuration file

[root@master cfg]# pwd

/opt/kubernetes/server/bin/cfg

[root@master cfg]# vim kube-controller-manager.conf

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \

--v=2 \

--log-dir=/data/k8s/kube-controller-manager/logs \

--leader-elect=true \

--kubeconfig=/opt/kubernetes/server/bin/cfg/kube-controller-manager.kubeconfig \

--bind-address=127.0.0.1 \

--allocate-node-cidrs=true \

--cluster-cidr=10.244.0.0/16 \

--service-cluster-ip-range=10.192.0.0/24 \

--cluster-signing-cert-file=/opt/kubernetes/server/bin/ssl/ca.pem \

--cluster-signing-key-file=/opt/kubernetes/server/bin/ssl/ca-key.pem \

--root-ca-file=/opt/kubernetes/server/bin/ssl/ca.pem \

--service-account-private-key-file=/opt/kubernetes/server/bin/ssl/ca-key.pem \

--cluster-signing-duration=87600h0m0s"

# 2. Generate the kube-controller-manager certificate

[root@master bin]# cd /opt/TLS/k8s

[root@master k8s]# cat > kube-controller-manager-csr.json << EOF

{

"CN": "system:kube-controller-manager",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

[root@master k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

[root@master k8s]# cp kube-controller-manager*pem /opt/kubernetes/server/bin/ssl/

# 3. Generate the kubeconfig file

[root@master bin]# KUBE_CONFIG="/opt/kubernetes/server/bin/cfg/kube-controller-manager.kubeconfig"

[root@master bin]# KUBE_APISERVER="https://192.168.0.171:6443"

## Set the cluster entry

[root@master bin]# kubectl config set-cluster kubernetes \

--certificate-authority=/opt/kubernetes/server/bin/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=${KUBE_CONFIG}

## Set the user credentials

[root@master bin]# kubectl config set-credentials kube-controller-manager \

--client-certificate=/opt/kubernetes/server/bin/ssl/kube-controller-manager.pem \

--client-key=/opt/kubernetes/server/bin/ssl/kube-controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=${KUBE_CONFIG}

## Set the context, which binds the user to the cluster

[root@master bin]# kubectl config set-context default \

--cluster=kubernetes \

--user=kube-controller-manager \

--kubeconfig=${KUBE_CONFIG}

## Make it the default context of this kubeconfig

[root@master bin]# kubectl config use-context default --kubeconfig=${KUBE_CONFIG}
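The same four kubectl config commands are repeated below for kube-scheduler and for the admin user; only the file name, user name and certificate change. The hedged sketch below wraps them in one function. The function name, argument order and the CA_CERT variable are hypothetical. The kubectl config subcommands and flags are exactly the ones used above.

```shell
#!/usr/bin/env bash
# Build a kubeconfig with embedded certificates for one component or user.
#   $1 kubeconfig path   $2 user name   $3 client cert   $4 client key
make_kubeconfig() {
  local config=$1 user=$2 cert=$3 key=$4
  local ca=${CA_CERT:-/opt/kubernetes/server/bin/ssl/ca.pem}
  local server=${KUBE_APISERVER:-https://192.168.0.171:6443}

  kubectl config set-cluster kubernetes --certificate-authority="$ca" \
    --embed-certs=true --server="$server" --kubeconfig="$config"
  kubectl config set-credentials "$user" --client-certificate="$cert" \
    --client-key="$key" --embed-certs=true --kubeconfig="$config"
  kubectl config set-context default --cluster=kubernetes --user="$user" \
    --kubeconfig="$config"
  kubectl config use-context default --kubeconfig="$config"
}

# Usage, equivalent to the commands above:
# make_kubeconfig /opt/kubernetes/server/bin/cfg/kube-controller-manager.kubeconfig \
#   kube-controller-manager \
#   /opt/kubernetes/server/bin/ssl/kube-controller-manager.pem \
#   /opt/kubernetes/server/bin/ssl/kube-controller-manager-key.pem
```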

# 4. Manage kube-controller-manager with systemd

[root@master bin]# vim /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/server/bin/cfg/kube-controller-manager.conf

ExecStart=/opt/kubernetes/server/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

# 5. Start it and enable it at boot

[root@master kubecm-conf]$ systemctl daemon-reload

[root@master kubecm-conf]$ systemctl start kube-controller-manager

[root@master kubecm-conf]$ systemctl enable kube-controller-manager

(5) Deploy kube-scheduler

# 1. Create the configuration file first

[root@master k8s]# vim /opt/kubernetes/server/bin/cfg/kube-scheduler.conf

KUBE_SCHEDULER_OPTS="--logtostderr=false \

--v=2 \

--log-dir=/data/k8s/kube-scheduler/logs \

--leader-elect \

--kubeconfig=/opt/kubernetes/server/bin/cfg/kube-scheduler.kubeconfig \

--bind-address=127.0.0.1"

# 2. Create the kube-scheduler certificate signing request

[root@master k8s]# cd /opt/TLS/k8s

[root@master k8s]# cat > kube-scheduler-csr.json << EOF

{

"CN": "system:kube-scheduler",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

## Generate the certificate

[root@master k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

2021/04/11 17:40:41 [INFO] generate received request

2021/04/11 17:40:41 [INFO] received CSR

2021/04/11 17:40:41 [INFO] generating key: rsa-2048

2021/04/11 17:40:41 [INFO] encoded CSR

2021/04/11 17:40:41 [INFO] signed certificate with serial number 350742079432942409840395888625637132125203998882

2021/04/11 17:40:41 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@master k8s]# cp kube-scheduler*pem /opt/kubernetes/server/bin/ssl/

# 3. Generate the kubeconfig file

[root@master k8s]# KUBE_CONFIG="/opt/kubernetes/server/bin/cfg/kube-scheduler.kubeconfig"

[root@master k8s]# KUBE_APISERVER="https://192.168.0.171:6443"

[root@master k8s]# kubectl config set-cluster kubernetes \

--certificate-authority=/opt/kubernetes/server/bin/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=${KUBE_CONFIG}

[root@master k8s]# kubectl config set-credentials kube-scheduler \

--client-certificate=/opt/kubernetes/server/bin/ssl/kube-scheduler.pem \

--client-key=/opt/kubernetes/server/bin/ssl/kube-scheduler-key.pem \

--embed-certs=true \

--kubeconfig=${KUBE_CONFIG}

[root@master k8s]# kubectl config set-context default \

--cluster=kubernetes \

--user=kube-scheduler \

--kubeconfig=${KUBE_CONFIG}

[root@master k8s]# kubectl config use-context default --kubeconfig=${KUBE_CONFIG}

Switched to context "default".

# 4. Manage kube-scheduler with systemd (the unit file has the following content)

[root@master k8s]# cat /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/server/bin/cfg/kube-scheduler.conf

ExecStart=/opt/kubernetes/server/bin/kube-scheduler $KUBE_SCHEDULER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

# Start it and enable it at boot

[root@master k8s]# systemctl daemon-reload

[root@master k8s]# systemctl start kube-scheduler

[root@master k8s]# systemctl enable kube-scheduler

(6) Check the cluster status

# 1. Generate the certificate kubectl uses to connect to the cluster

[root@master k8s]# cat > admin-csr.json <<EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

[root@master k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

2021/04/11 17:42:48 [INFO] generate received request

2021/04/11 17:42:48 [INFO] received CSR

2021/04/11 17:42:48 [INFO] generating key: rsa-2048

2021/04/11 17:42:49 [INFO] encoded CSR

2021/04/11 17:42:49 [INFO] signed certificate with serial number 392352199304023135205029573074747070915819946890

2021/04/11 17:42:49 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@master k8s]# cp admin*.pem /opt/kubernetes/server/bin/ssl/

# 2. Generate the kubeconfig file

[root@master k8s]# mkdir /root/.kube

[root@master k8s]# KUBE_CONFIG="/root/.kube/config"

[root@master k8s]# KUBE_APISERVER="https://192.168.0.171:6443"

[root@master k8s]# kubectl config set-cluster kubernetes \

--certificate-authority=/opt/kubernetes/server/bin/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=${KUBE_CONFIG}

[root@master k8s]# kubectl config set-credentials cluster-admin \

--client-certificate=/opt/kubernetes/server/bin/ssl/admin.pem \

--client-key=/opt/kubernetes/server/bin/ssl/admin-key.pem \

--embed-certs=true \

--kubeconfig=${KUBE_CONFIG}

[root@master k8s]# kubectl config set-context default \

--cluster=kubernetes \

--user=cluster-admin \

--kubeconfig=${KUBE_CONFIG}

[root@master k8s]# kubectl config use-context default --kubeconfig=${KUBE_CONFIG}

Switched to context "default".
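The four kubectl config commands above only assemble one file. With --embed-certs=true, the PEM file contents are base64-encoded into the *-data fields. Below is a rough Python sketch of the resulting structure, assuming the certificate bytes have already been read from ca.pem, admin.pem and admin-key.pem. The function name build_kubeconfig is hypothetical. It emits a dict that can be dumped as JSON, which kubectl should accept because kubeconfig files are parsed as YAML.

```python
import base64


def _b64(data: bytes) -> str:
    # --embed-certs=true stores the file content base64-encoded
    return base64.b64encode(data).decode("ascii")


def build_kubeconfig(server: str, ca_pem: bytes, cert_pem: bytes, key_pem: bytes,
                     cluster: str = "kubernetes", user: str = "cluster-admin",
                     context: str = "default") -> dict:
    return {
        "apiVersion": "v1",
        "kind": "Config",
        # kubectl config set-cluster
        "clusters": [{
            "name": cluster,
            "cluster": {"server": server,
                        "certificate-authority-data": _b64(ca_pem)},
        }],
        # kubectl config set-credentials
        "users": [{
            "name": user,
            "user": {"client-certificate-data": _b64(cert_pem),
                     "client-key-data": _b64(key_pem)},
        }],
        # kubectl config set-context
        "contexts": [{
            "name": context,
            "context": {"cluster": cluster, "user": user},
        }],
        # kubectl config use-context
        "current-context": context,
        "preferences": {},
    }
```

Seen this way, a kubeconfig is just three named lists plus a pointer (current-context) that selects one cluster/user pair.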

# 3. Use kubectl to check the status of the cluster components

[root@master k8s]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+ # this warning will be explained later

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

## Authorize the kubelet-bootstrap user to request certificates (bind it to the system:node-bootstrapper ClusterRole)

[root@master k8s]# kubectl create clusterrolebinding kubelet-bootstrap \

--clusterrole=system:node-bootstrapper \

--user=kubelet-bootstrap

clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

[root@master cfg]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-2 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

Deploy the node components (binary)

(1) Install kubelet

# 1. Prepare the directory layout

# Run on the node

[root@node1 bin]# pwd

/opt/kubernetes/server/bin

[root@node1 bin]# ls -lh

total 572M

-rwxr-xr-x 1 root root 45M Feb 21 09:10 apiextensions-apiserver

drwxr-xr-x 2 root root 4.0K Feb 21 09:20 cfg

-rwxr-xr-x 1 root root 38M Feb 21 09:10 kubeadm

-rwxr-xr-x 1 root root 43M Feb 21 09:10 kube-aggregator

-rwxr-xr-x 1 root root 113M Feb 21 09:10 kube-apiserver

-rwxr-xr-x 1 root root 108M Feb 21 09:10 kube-controller-manager

-rwxr-xr-x 1 root root 39M Feb 21 09:10 kubectl

-rwxr-xr-x 1 root root 109M Feb 21 09:10 kubelet

-rwxr-xr-x 1 root root 38M Feb 21 09:10 kube-proxy

-rwxr-xr-x 1 root root 41M Feb 21 09:10 kube-scheduler

-rwxr-xr-x 1 root root 1.6M Feb 21 09:10 mounter

drwxr-xr-x 2 root root 4.0K Feb 21 09:21 ssl

# 2. Create the kubelet options file

[root@node1 bin]# cd cfg

[root@node1 cfg]# vim /opt/kubernetes/server/bin/cfg/kubelet.conf

KUBELET_OPTS="--logtostderr=false \

--v=2 \

--log-dir=/data/k8s/kubelet/logs \

--hostname-override=node1 \

--network-plugin=cni \

--kubeconfig=/opt/kubernetes/server/bin/cfg/kubelet.kubeconfig \

--bootstrap-kubeconfig=/opt/kubernetes/server/bin/cfg/bootstrap.kubeconfig \

--config=/opt/kubernetes/server/bin/cfg/kubelet-config.yml \

--cert-dir=/opt/kubernetes/server/bin/cfg/ssl \

--pod-infra-container-image=lizhenliang/pause-amd64:3.0"

# 3. Create the kubelet parameter file (KubeletConfiguration)

[root@node1 cfg]# vim /opt/kubernetes/server/bin/cfg/kubelet-config.yml

kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
cgroupDriver: systemd
clusterDNS:
- 10.192.0.2
clusterDomain: cluster.local
failSwapOn: false
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /opt/kubernetes/server/bin/ssl/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
maxOpenFiles: 1000000
maxPods: 110
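clusterDNS must be an address inside the Service CIDR that kube-apiserver allocates from (its --service-cluster-ip-range, which this section does not show). It must also equal the clusterIP of the kube-dns Service created later. The first address in that range is taken by the kubernetes Service (10.192.0.1 in the DNS test at the end); using .2 for DNS is only a convention. Below is a small Python check using the standard ipaddress module. It assumes a Service CIDR of 10.192.0.0/16, inferred from the Service IPs in this article; the real value may differ. check_cluster_dns is a hypothetical helper.

```python
import ipaddress


def check_cluster_dns(service_cidr: str, cluster_dns: str) -> list:
    """Return a list of problems; an empty list means clusterDNS looks consistent."""
    net = ipaddress.ip_network(service_cidr)
    dns = ipaddress.ip_address(cluster_dns)
    problems = []
    if dns not in net:
        problems.append(f"{dns} is outside the Service CIDR {net}")
    # kube-apiserver gives the first usable address to the "kubernetes" Service
    first = next(net.hosts())
    if dns == first:
        problems.append(f"{dns} collides with the kubernetes Service IP")
    return problems


# Assumption: replace with your kube-apiserver --service-cluster-ip-range value.
SERVICE_CIDR = "10.192.0.0/16"
```

For example, check_cluster_dns(SERVICE_CIDR, "10.192.0.2") returns an empty list, while a copied default such as 10.96.0.10 would be reported as outside the range.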

# 4. Generate the bootstrap kubeconfig kubelet uses when it first joins the cluster

[root@node1 cfg]# KUBE_CONFIG="/opt/kubernetes/server/bin/cfg/bootstrap.kubeconfig"

[root@node1 cfg]# KUBE_APISERVER="https://192.168.0.171:6443"

[root@node1 cfg]# TOKEN="03b327f4b2581410778681b0636346c0"

## Set the cluster entry

[root@node1 cfg]# kubectl config set-cluster kubernetes \

--certificate-authority=/opt/kubernetes/server/bin/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=${KUBE_CONFIG}

Cluster "kubernetes" set.

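## Set the user credentials. The admin kubeconfig used a client certificate; here a token is used instead (this token is later granted only the permission to create CSRs), and the kubelet's own certificate is then issued through the apiserver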

[root@node1 cfg]# kubectl config set-credentials "kubelet-bootstrap" \

--token=${TOKEN} \

--kubeconfig=${KUBE_CONFIG}

User "kubelet-bootstrap" set.

## Set the context

[root@node1 cfg]# kubectl config set-context default \

--cluster=kubernetes \

--user="kubelet-bootstrap" \

--kubeconfig=${KUBE_CONFIG}

Context "default" created.

## Make it the default context

[root@node1 cfg]# kubectl config use-context default --kubeconfig=${KUBE_CONFIG}

Switched to context "default".
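The TOKEN above only works if kube-apiserver knows it. This is typically done with a static token file passed via --token-auth-file, but the master-side configuration is not part of this section. Each line of that file has the form token,user,uid,"group1,group2". The Python sketch below generates a random 32-hex-character token of the same shape and the matching line. The uid 10001 and the group system:node-bootstrapper are assumptions, so match them to your apiserver setup. Both helper names are hypothetical.

```python
import secrets


def new_bootstrap_token() -> str:
    # 16 random bytes -> 32 lowercase hex characters, same shape as TOKEN above
    return secrets.token_hex(16)


def token_csv_line(token: str, user: str = "kubelet-bootstrap",
                   uid: str = "10001",
                   groups: tuple = ("system:node-bootstrapper",)) -> str:
    # Static token file format: token,user,uid,"group1,group2,..."
    group_field = ",".join(groups)
    return f'{token},{user},{uid},"{group_field}"'
```

The same token string must appear both in the apiserver's token file and in TOKEN on every node; otherwise the bootstrap request is rejected as unauthorized.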

# 5. Manage kubelet with systemd

[root@node1 cfg]# vim /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

After=docker.service

[Service]

EnvironmentFile=/opt/kubernetes/server/bin/cfg/kubelet.conf

ExecStart=/opt/kubernetes/server/bin/kubelet $KUBELET_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

# 6. Start the service and enable it at boot

[root@node1 cfg]# systemctl daemon-reload

[root@node1 cfg]# systemctl start kubelet

[root@node1 cfg]# systemctl enable kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

# Repeat steps 1 to 6 on node2, setting --hostname-override=node2 in kubelet.conf

# 7. Approve the kubelet certificate requests so the nodes join the cluster

[root@master ~]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-od3kcZBecLZOwXjIOBMrBE0Te4ONK2YtmjGHSLglaXk 52s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

## 批准申请

[root@master ~]# kubectl certificate approve node-csr-od3kcZBecLZOwXjIOBMrBE0Te4ONK2YtmjGHSLglaXk

certificatesigningrequest.certificates.k8s.io/node-csr-od3kcZBecLZOwXjIOBMrBE0Te4ONK2YtmjGHSLglaXk approved

[root@master ~]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-od3kcZBecLZOwXjIOBMrBE0Te4ONK2YtmjGHSLglaXk 92s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued
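When several nodes join at once, approving CSRs one by one gets tedious. The Python sketch below picks the Pending requests out of kubectl get csr output so they can be passed to kubectl certificate approve. It assumes the default column layout shown above (NAME first, CONDITION last). Both function names are hypothetical, and approve_pending needs kubectl with admin credentials on the PATH.

```python
import subprocess


def pending_csrs(table: str) -> list:
    """Return the names of CSRs whose CONDITION column is Pending."""
    names = []
    for line in table.splitlines()[1:]:  # skip the header row
        cols = line.split()
        if cols and cols[-1] == "Pending":
            names.append(cols[0])
    return names


def approve_pending() -> None:
    # Run on the master, where /root/.kube/config holds admin credentials.
    out = subprocess.run(["kubectl", "get", "csr"], capture_output=True,
                         text=True, check=True).stdout
    for name in pending_csrs(out):
        subprocess.run(["kubectl", "certificate", "approve", name], check=True)
```

Only requests whose last column is exactly Pending are selected, so already approved ones (Approved,Issued) are skipped.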

## Check the nodes (they stay NotReady until the network plugin is deployed)

[root@master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

node1 NotReady <none> 11s v1.20.1

node2 NotReady <none> 59s v1.20.1

(2) Install kube-proxy

# 1. Create the kube-proxy options file (kube-proxy.conf)

[root@node1 cfg]# vim /opt/kubernetes/server/bin/cfg/kube-proxy.conf

KUBE_PROXY_OPTS="--logtostderr=false \

--v=2 \

--log-dir=/data/k8s/kube-proxy/logs \

--config=/opt/kubernetes/server/bin/cfg/kube-proxy-config.yml"

# 2. Create the kube-proxy parameter file (KubeProxyConfiguration)

[root@node1 cfg]# vim /opt/kubernetes/server/bin/cfg/kube-proxy-config.yml

kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
metricsBindAddress: 0.0.0.0:10249
clientConnection:
  kubeconfig: /opt/kubernetes/server/bin/cfg/kube-proxy.kubeconfig
hostnameOverride: node1   # must match the kubelet --hostname-override on this node
clusterCIDR: 10.244.0.0/16
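clusterCIDR (the Pod network) should not overlap the Service CIDR or the node network, otherwise traffic gets routed to the wrong place. The Python check below uses the standard ipaddress module. The Service CIDR 10.192.0.0/16 and the node network 192.168.0.0/24 are assumptions inferred from addresses in this article. The second usage line shows why Calico's default pool of 192.168.0.0/16 (as commonly shipped in its manifest) would clash with these nodes. overlapping is a hypothetical helper.

```python
import ipaddress
from itertools import combinations


def overlapping(**cidrs: str) -> list:
    """Return (name_a, name_b) pairs whose networks overlap."""
    nets = {name: ipaddress.ip_network(c) for name, c in cidrs.items()}
    return [(a, b) for a, b in combinations(nets, 2)
            if nets[a].overlaps(nets[b])]


# Values from this article (Service and node ranges are assumptions):
# overlapping(pods="10.244.0.0/16", services="10.192.0.0/16", nodes="192.168.0.0/24")
# An unchanged Calico default pool instead of 10.244.0.0/16:
# overlapping(pods="192.168.0.0/16", services="10.192.0.0/16", nodes="192.168.0.0/24")
```

The first call returns an empty list; the second reports the ("pods", "nodes") pair.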

# 3. Generate the kube-proxy.kubeconfig file

## 3.1 List the existing certificate files on the master

[root@master ~]# cd /opt/TLS/k8s/

[root@master k8s]# ll

total 84

-rw-r--r-- 1 root root 1009 Feb 20 01:27 admin.csr

-rw-r--r-- 1 root root 229 Feb 20 01:27 admin-csr.json

-rw------- 1 root root 1679 Feb 20 01:27 admin-key.pem

-rw-r--r-- 1 root root 1399 Feb 20 01:27 admin.pem

-rw-r--r-- 1 root root 284 Feb 19 22:58 ca-config.json

-rw-r--r-- 1 root root 1001 Feb 19 22:50 ca.csr

-rw-r--r-- 1 root root 208 Feb 19 22:49 ca-csr.json

-rw------- 1 root root 1671 Feb 19 22:50 ca-key.pem

-rw-r--r-- 1 root root 1359 Feb 19 22:50 ca.pem

-rw-r--r-- 1 root root 1045 Feb 20 00:24 kube-controller-manager.csr

-rw-r--r-- 1 root root 254 Feb 20 00:21 kube-controller-manager-csr.json

-rw------- 1 root root 1675 Feb 20 00:24 kube-controller-manager-key.pem

-rw-r--r-- 1 root root 1436 Feb 20 00:24 kube-controller-manager.pem

-rw-r--r-- 1 root root 1029 Feb 20 01:15 kube-scheduler.csr

-rw-r--r-- 1 root root 245 Feb 20 01:14 kube-scheduler-csr.json

-rw------- 1 root root 1679 Feb 20 01:15 kube-scheduler-key.pem

-rw-r--r-- 1 root root 1424 Feb 20 01:15 kube-scheduler.pem

-rw-r--r-- 1 root root 1261 Feb 19 22:58 server.csr

-rw-r--r-- 1 root root 477 Feb 19 22:58 server-csr.json

-rw------- 1 root root 1679 Feb 19 22:58 server-key.pem

-rw-r--r-- 1 root root 1627 Feb 19 22:58 server.pem

## 3.2 Generate the kube-proxy certificate

[root@master k8s]# vim kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

[root@master k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
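With x509 client certificates, kube-apiserver takes the user name from the subject CN and the groups from the subject O fields. That is why admin-csr.json uses O=system:masters, a group with full rights by default. It is also why CN=system:kube-proxy needs no extra RBAC here: the default ClusterRoleBinding system:node-proxier already binds that user. The Python sketch below applies this mapping to a cfssl CSR file. identity_from_csr is a hypothetical helper, and OU is deliberately ignored because it does not become a group.

```python
import json


def identity_from_csr(csr_json: str) -> tuple:
    """Map a cfssl CSR request to the (user, groups) kube-apiserver will see."""
    csr = json.loads(csr_json)
    user = csr["CN"]  # subject CN -> Kubernetes user name
    # each O entry in the subject -> one Kubernetes group
    groups = [n["O"] for n in csr.get("names", []) if "O" in n]
    return user, groups
```

Applied to kube-proxy-csr.json this gives ("system:kube-proxy", ["k8s"]); applied to admin-csr.json it gives ("admin", ["system:masters"]).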

## 3.3 Distribute the certificate to the nodes

[root@master k8s]# scp kube-proxy*pem root@node1:/opt/kubernetes/server/bin/ssl/

[root@master k8s]# scp kube-proxy*pem root@node2:/opt/kubernetes/server/bin/ssl/

## 3.4 Generate the kubeconfig file (on each node)

[root@node1 cfg]# KUBE_CONFIG="/opt/kubernetes/server/bin/cfg/kube-proxy.kubeconfig"

[root@node1 cfg]# KUBE_APISERVER="https://192.168.0.171:6443"

[root@node1 cfg]# kubectl config set-cluster kubernetes \

--certificate-authority=/opt/kubernetes/server/bin/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=${KUBE_CONFIG}

Cluster "kubernetes" set.

[root@node1 cfg]# kubectl config set-credentials kube-proxy \

--client-certificate=/opt/kubernetes/server/bin/ssl/kube-proxy.pem \

--client-key=/opt/kubernetes/server/bin/ssl/kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=${KUBE_CONFIG}

User "kube-proxy" set.

[root@node1 cfg]# kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=${KUBE_CONFIG}

Context "default" created.

[root@node1 cfg]# kubectl config use-context default --kubeconfig=${KUBE_CONFIG}

Switched to context "default".

# 4. Manage kube-proxy with systemd

[root@node1 cfg]# vim /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=/opt/kubernetes/server/bin/cfg/kube-proxy.conf

ExecStart=/opt/kubernetes/server/bin/kube-proxy $KUBE_PROXY_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

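WantedBy=multi-user.target

# 5. Start kube-proxy and enable it at boot

[root@node1 cfg]# systemctl daemon-reload

[root@node1 cfg]# systemctl start kube-proxy

[root@node1 cfg]# systemctl enable kube-proxy

# Repeat on node2, setting hostnameOverride: node2 in kube-proxy-config.yml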

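(3) Install Calico

Download the manifest from https://docs.projectcalico.org/manifests/calico.yaml. Before applying it, make sure the Pod CIDR in calico.yaml (the CALICO_IPV4POOL_CIDR variable) matches the clusterCIDR 10.244.0.0/16 used by kube-proxy. The manifest's default pool, 192.168.0.0/16, would overlap the 192.168.0.x node network used here.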

[root@master k8s]# kubectl apply -f calico.yaml

(4) Authorize the apiserver to access the kubelet

[root@master cfg]# vim apiserver-to-kubelet-rbac.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
      - pods/log
    verbs:
      - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kubernetes

[root@master cfg]# kubectl apply -f apiserver-to-kubelet-rbac.yaml

clusterrole.rbac.authorization.k8s.io/system:kube-apiserver-to-kubelet created

clusterrolebinding.rbac.authorization.k8s.io/system:kube-apiserver created
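Because kubelet-config.yml sets authorization mode Webhook, the kubelet asks the apiserver whether each caller may use resources such as nodes/proxy or nodes/stats. When the apiserver itself calls the kubelet (for kubectl logs or exec), it authenticates as the user kubernetes. That name is assumed here to be the CN of the apiserver's kubelet client certificate, because those apiserver flags are not shown in this section. The binding above grants that user the role. Below is a simplified Python model of how a ClusterRole rule matches a request: any rule whose apiGroups, resources and verbs all match allows it, and "*" matches everything. It ignores resourceNames and nonResourceURLs and is not the apiserver's actual implementation; RULES mirrors the YAML above.

```python
RULES = [
    {
        "apiGroups": [""],
        "resources": ["nodes/proxy", "nodes/stats", "nodes/log",
                      "nodes/spec", "nodes/metrics", "pods/log"],
        "verbs": ["*"],
    }
]


def _matches(values: list, wanted: str) -> bool:
    return "*" in values or wanted in values


def allowed(rules: list, api_group: str, resource: str, verb: str) -> bool:
    """Simplified RBAC check: one rule matching group, resource and verb allows."""
    return any(
        _matches(r.get("apiGroups", []), api_group)
        and _matches(r.get("resources", []), resource)
        and _matches(r.get("verbs", []), verb)
        for r in rules
    )
```

For instance, allowed(RULES, "", "nodes/proxy", "get") is true, while a resource not listed, or the same resource under another API group, is denied.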

(5) Check the node status

[root@master ssl]# kubectl get node

NAME STATUS ROLES AGE VERSION

node1 Ready <none> 5h39m v1.20.1

node2 Ready <none> 5h39m v1.20.1

[root@master ssl]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-97769f7c7-4hqpr 1/1 Running 0 3h59m

calico-node-cqzdj 1/1 Running 65 3h59m

calico-node-m9p6z 1/1 Running 67 3h59m

Deploy Dashboard and CoreDNS

(1) Deploy the Dashboard

# (1) Prepare the YAML directory

[root@master k8s-yaml]# pwd

/opt/kubernetes/k8s-yaml

[root@master k8s-yaml]# ll

total 16

-rw-r--r-- 1 root root 4279 Feb 21 20:20 coredns.yaml

-rw-r--r-- 1 root root 7591 Feb 21 20:20 kubernetes-dashboard.yaml

# (2) Apply the manifest

[root@master k8s-yaml]# kubectl apply -f kubernetes-dashboard.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

[root@master k8s-yaml]#

[root@master k8s-yaml]# kubectl get pod,svc -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

pod/dashboard-metrics-scraper-7b59f7d4df-fzckz 1/1 Running 0 91s

pod/kubernetes-dashboard-74d688b6bc-cnx5t 1/1 Running 0 91s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/dashboard-metrics-scraper ClusterIP 10.192.0.169 <none> 8000/TCP 91s

service/kubernetes-dashboard NodePort 10.192.0.224 <none> 443:30001/TCP 91s

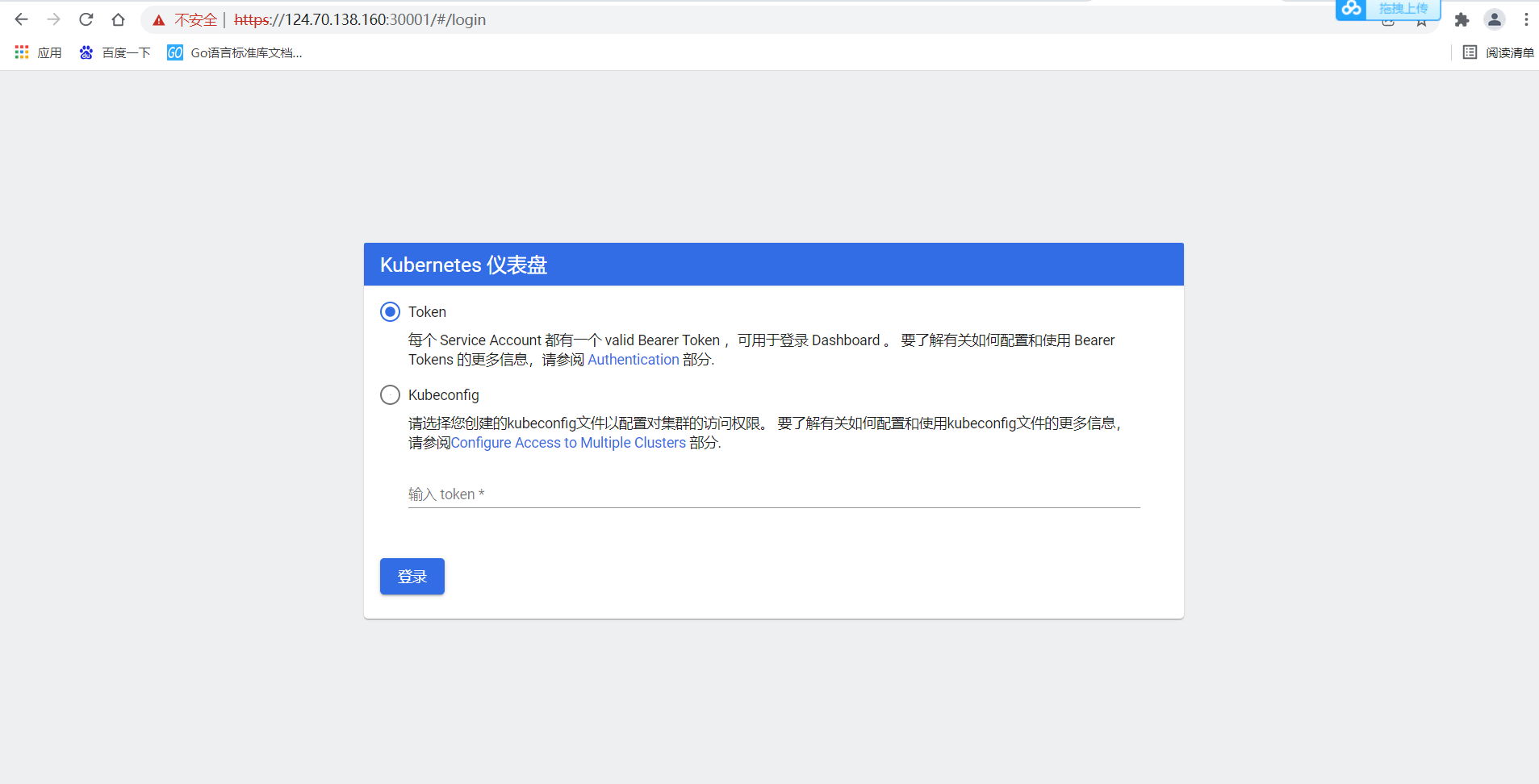

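Try logging in at https://<node-IP>:30001 (the NodePort of the kubernetes-dashboard Service shown above):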

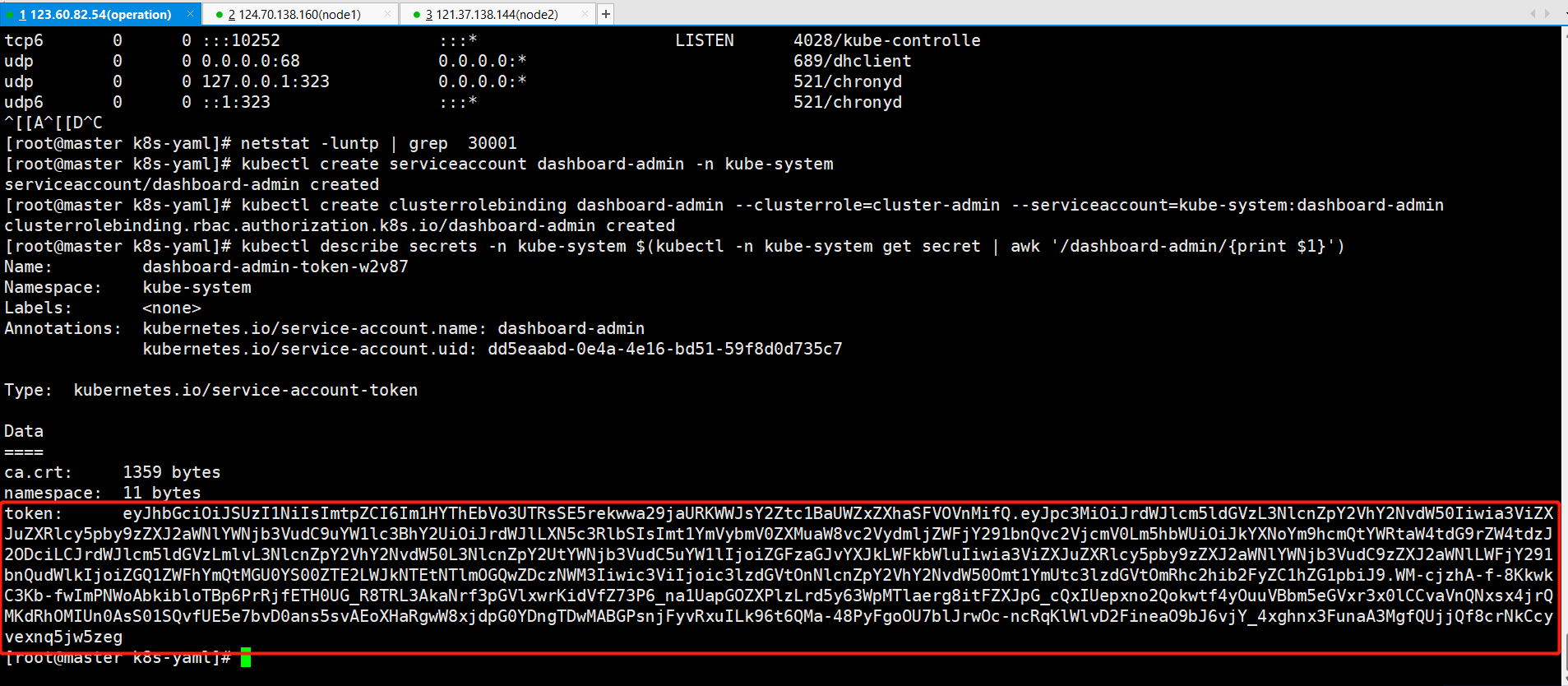

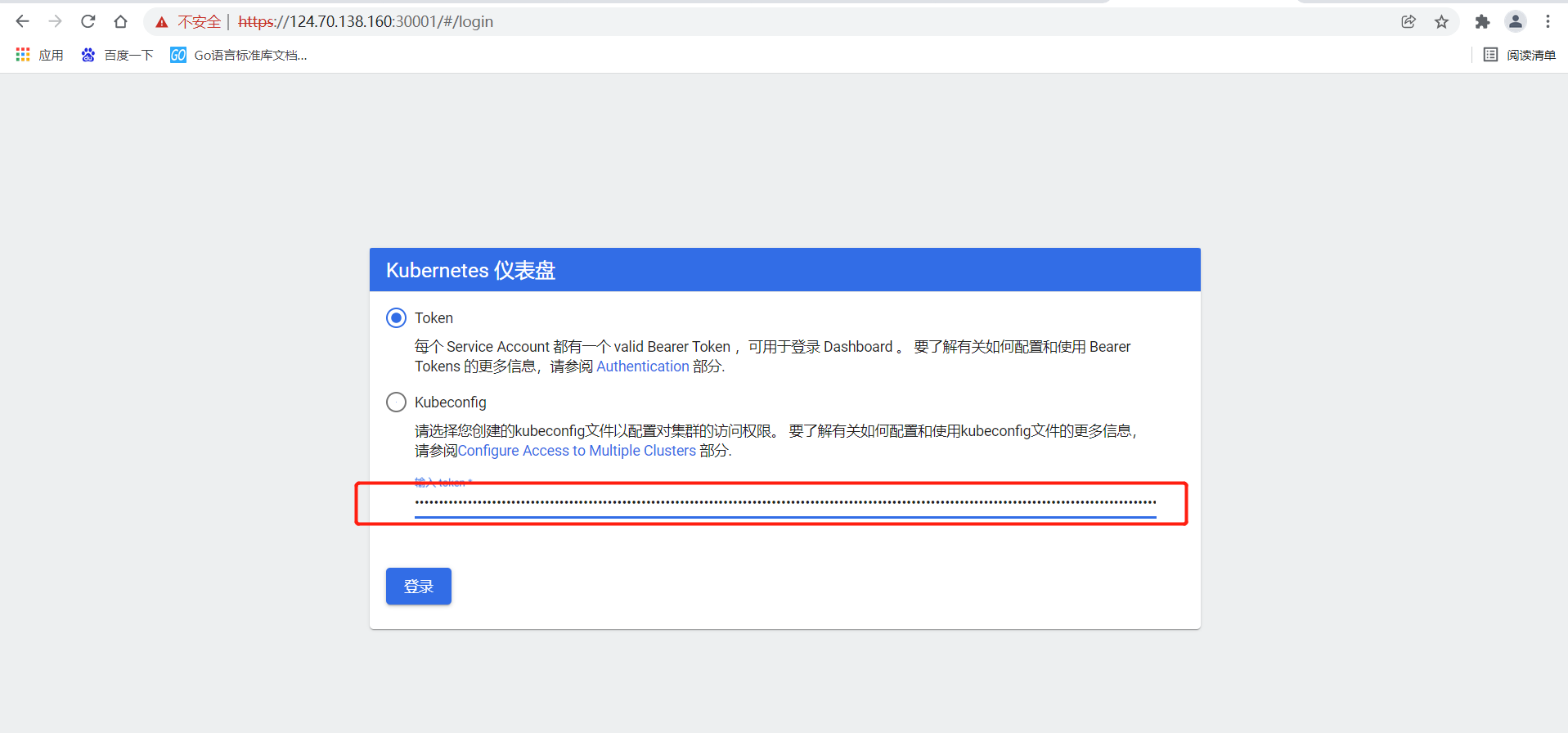

Create a service account and bind it to the built-in cluster-admin ClusterRole:

kubectl create serviceaccount dashboard-admin -n kube-system

kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')
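The token field printed by the last command is a JWT. To confirm that you copied the right one, base64url-decode its middle segment; for this account the sub claim should read system:serviceaccount:kube-system:dashboard-admin. The Python sketch below only decodes the payload and does not verify the signature. jwt_claims is a hypothetical helper name.

```python
import base64
import json


def jwt_claims(token: str) -> dict:
    """Decode (WITHOUT verifying) the payload segment of a JWT."""
    payload = token.strip().split(".")[1]
    payload += "=" * (-len(payload) % 4)  # restore the stripped base64 padding
    return json.loads(base64.urlsafe_b64decode(payload))
```

Usage: print(jwt_claims(copied_token)["sub"]). A different namespace or account name in that claim means the wrong secret was picked.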

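Copy the value of the token field from the output above and paste it into the Dashboard login page in the browser.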

(2) Deploy CoreDNS

CoreDNS resolves Service names inside the cluster.

[root@master k8s-yaml]# pwd

/opt/kubernetes/k8s-yaml

[root@master k8s-yaml]# ls -lh

total 16K

-rw-r--r-- 1 root root 4.2K Feb 25 10:32 coredns.yaml

-rw-r--r-- 1 root root 7.5K Feb 21 20:20 kubernetes-dashboard.yaml

# Note: set the clusterIP of the kube-dns Service in coredns.yaml to the clusterDNS address in kubelet-config.yml (10.192.0.2 here)

[root@master k8s-yaml]#

[root@master k8s-yaml]# kubectl create -f coredns.yaml

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

configmap/coredns created

deployment.apps/coredns created

service/kube-dns created

[root@master k8s-yaml]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-97769f7c7-4hqpr 1/1 Running 0 3d18h

calico-node-cqzdj 1/1 Running 65 3d18h

calico-node-m9p6z 1/1 Running 67 3d18h

coredns-6d8f96d957-vxzgv 1/1 Running 0 20s

#################################

# DNS resolution test #

#################################

[root@master k8s-yaml]# kubectl run -it --rm dns-test --image=busybox:1.28.4 sh

If you don't see a command prompt, try pressing enter.

/ # nslookup kubernetes

Server: 10.192.0.2

Address 1: 10.192.0.2 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.192.0.1 kubernetes.default.svc.cluster.local

/ # exit

Session ended, resume using 'kubectl attach dns-test -c dns-test -i -t' command when the pod is running

pod "dns-test" deleted

[root@master k8s-yaml]#

## Test passed
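nslookup kubernetes resolves because of the default ClusterFirst DNS policy. Under it, kubelet writes a resolv.conf into each Pod whose nameserver is clusterDNS and whose search list expands short names within the cluster domain. The Python sketch below reproduces that file and the order in which a glibc-style resolver tries names for a query from a Pod in the default namespace. It mirrors, rather than calls, kubelet's behavior, and it leaves out any search domains inherited from the node. Both helper names are hypothetical.

```python
def _search_list(namespace: str, domain: str) -> list:
    return [f"{namespace}.svc.{domain}", f"svc.{domain}", domain]


def pod_resolv_conf(namespace: str, cluster_dns: str,
                    domain: str = "cluster.local") -> str:
    return "\n".join([
        "search " + " ".join(_search_list(namespace, domain)),
        f"nameserver {cluster_dns}",
        "options ndots:5",
    ]) + "\n"


def candidates(name: str, namespace: str, domain: str = "cluster.local",
               ndots: int = 5) -> list:
    """Names tried, in order, for a query issued from inside a Pod."""
    if name.endswith("."):  # already fully qualified: no search expansion
        return [name]
    expanded = [f"{name}.{s}" for s in _search_list(namespace, domain)]
    # fewer dots than ndots: the search list is tried before the bare name
    return expanded + [name] if name.count(".") < ndots else [name] + expanded
```

For kubernetes in the default namespace, the first candidate is kubernetes.default.svc.cluster.local, which matches the name nslookup printed above.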
