LLM Agentic Memory Systems

https://zhuanlan.zhihu.com/p/18413217342

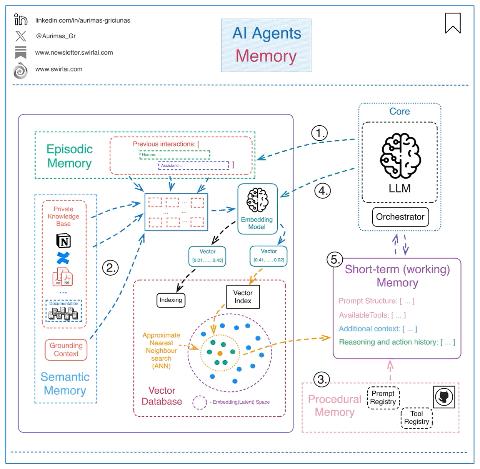

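The core idea behind AI agent memory is simple: it lets the agent plan and respond more intelligently by remembering past interactions or by drawing on data that is not immediately available. For LLMs (large language models), memory is usually passed to the model through the prompt, which helps the agent perform better on a given task.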

To make this more concrete, an AI agent's memory can be divided into four types:

Episodic Memory (#1 in the figure)

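Stores the agent's past interactions and the actions it has taken. Whenever the agent completes a task or finishes a conversation with a user, that information is written to persistent storage such as a vector database. A vector database captures the semantic meaning of these interactions, so the agent can retrieve them quickly when needed. It acts as the agent's "logbook", helping it remember "what happened yesterday".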
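As a rough illustration of storing interactions and retrieving them by meaning, the following is a minimal sketch, not a production design. The `embed` function is a toy bag-of-words stand-in for a real embedding model, and `EpisodicStore` is a hypothetical in-memory substitute for a vector database; neither name comes from the original articles.

```python
import math
from collections import Counter
from dataclasses import dataclass, field


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding' (assumption: a real system calls an embedding model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


@dataclass
class EpisodicStore:
    """Hypothetical in-memory stand-in for a vector database."""
    episodes: list = field(default_factory=list)  # (text, vector) pairs

    def add(self, text: str) -> None:
        self.episodes.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> list:
        q = embed(query)
        ranked = sorted(self.episodes, key=lambda e: cosine(q, e[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


store = EpisodicStore()
store.add("user asked about flights to Tokyo in April")
store.add("agent booked a hotel in Paris")
store.add("user prefers window seats on flights")
top = store.search("flights to Tokyo", k=1)
print(top)
```

Swapping `embed` for a real embedding model and `EpisodicStore` for an actual vector database would keep the same add/search shape.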

Semantic Memory (#2 in the figure)

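The agent's knowledge base, and the foundation of its understanding of the outside world and of itself. It covers all the external information the agent can access, such as answers fetched from the internet or a more focused dataset (like the context in a RAG application). This memory can be the agent's own "private knowledge", or it can be "grounding context" designed to filter complex information and improve the accuracy of answers.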

Procedural Memory (#3 in the figure)

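This part stores "rules" rather than "content": for example, the structure of the system prompt, the list of available tools, and the model's guardrails. This information usually lives in Git repositories, prompt registries, and tool registries, and serves as the "operating manual" for running the AI system.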

Short-Term Memory / Working Memory (#5 in the figure)

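During task execution, the agent combines what it has retrieved from long-term memory with information relevant to the current task to form short-term memory. Like human working memory, this helps the agent complete the task within the current context. The combined information is packed into the prompt and passed to the LLM, which then returns the next action to take.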
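The packing step described above can be sketched as a small prompt builder. This is only an illustration under stated assumptions: `build_prompt` and its section labels are hypothetical names, and a real agent would also need to respect the model's context-window limit.

```python
def build_prompt(system_prompt, retrieved_memories, user_input):
    """Combine procedural rules, retrieved long-term memories, and the current input."""
    sections = [system_prompt]
    if retrieved_memories:
        bullet_list = "\n".join(f"- {m}" for m in retrieved_memories)
        sections.append(f"Relevant memories:\n{bullet_list}")
    sections.append(f"User input: {user_input}")
    return "\n\n".join(sections)


prompt = build_prompt(
    "You are a personal AI assistant.",
    ["User prefers window seats", "User visited Tokyo last April"],
    "Book me a flight to Osaka.",
)
print(prompt)
```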

LLM Agentic Memory Systems

https://kickitlikeshika.github.io/2025/03/22/agentic-memory.html#1-working-memory

Introduction

Current AI systems, particularly those built around Large Language Models (LLMs), face a fundamental limitation: they lack true memory. While they can process information provided in their immediate context, they cannot naturally accumulate experiences over time. This creates several problems:

- Contextual Amnesia: Agents forget previous interactions with users, forcing repetitive explanations

- Inability to Learn: Without recording successes and failures, agents repeat the same mistakes

- Personalization Gaps: Agents struggle to adapt to individual users’ preferences and needs over time

- Efficiency Barriers: Valuable insights from past interactions are lost, requiring “reinvention of the wheel”

To address these limitations, we need to equip our AI agents with memory systems that capture not just what was said, but what was learned.

Types of Agentic Memory

Agentic memory systems can be categorized into several distinct types, each serving different purposes in enhancing agent capabilities:

1. Working Memory

Working memory represents the short-term, immediate context an agent uses for the current task. It’s analogous to human short-term memory or a computer’s RAM.

Characteristics:

- Temporarily holds information needed for the current conversation

- Limited in size due to context window constraints

- Cleared or reset between different sessions or tasks

Example: When a user asks a series of related questions about a topic, working memory helps the agent maintain coherence throughout that specific conversation without requiring repetition of context.
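One simple way to model these characteristics is a bounded buffer of recent turns. The sketch below is an assumption-laden illustration: `WorkingMemory` and `max_turns` are hypothetical names, and counting turns is a crude proxy for a real token budget.

```python
from collections import deque


class WorkingMemory:
    """Keeps only the most recent turns; older turns fall out automatically."""

    def __init__(self, max_turns: int = 4):
        self.turns = deque(maxlen=max_turns)

    def add(self, role: str, content: str) -> None:
        self.turns.append({"role": role, "content": content})

    def context(self) -> list:
        """Return the turns that would be sent to the model."""
        return list(self.turns)

    def reset(self) -> None:
        """Clear everything between sessions or tasks."""
        self.turns.clear()


wm = WorkingMemory(max_turns=3)
for i in range(5):
    wm.add("user", f"message {i}")
print([t["content"] for t in wm.context()])
```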

2. Episodic Memory

Episodic memory stores specific interactions or “episodes” that the agent has experienced. These are concrete, instance-based memories of conversations, including what was discussed and how the interaction unfolded.

Characteristics:

- Records complete or summarized conversations

- Includes metadata about the interaction (time, user, topic)

- Searchable by semantic similarity to current context

- Contains information about what worked well and what didn’t

Example: An agent remembers that when discussing transformers with a particular user last week, visual explanations were particularly effective, while mathematical formulas caused confusion.

3. Semantic Memory

Semantic memory stores general knowledge extracted from experiences, rather than the experiences themselves. It represents the “lessons learned” across many interactions.

Characteristics:

- Abstracts patterns across multiple episodes

- Represents generalized knowledge rather than specific instances

- Often organized in structured forms (rules, principles, facts)

- Evolves over time as more experiences accumulate

Example: After numerous interactions explaining technical concepts, an agent develops general principles about how to adapt explanations based on the user’s background.
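A hedged sketch of how patterns might be abstracted from episodes: count which explanation style succeeded for each user background, and promote a pair to a general rule once it has enough support. The record fields (`background`, `style`, `success`) and the `min_support` threshold are illustrative assumptions, not part of the original article.

```python
from collections import Counter, defaultdict


def distill_rules(episodes, min_support=2):
    """Promote the most successful style per background if seen at least min_support times."""
    wins = defaultdict(Counter)
    for ep in episodes:
        if ep["success"]:
            wins[ep["background"]][ep["style"]] += 1

    rules = {}
    for background, styles in wins.items():
        style, count = styles.most_common(1)[0]
        if count >= min_support:
            rules[background] = style
    return rules


episodes = [
    {"background": "beginner", "style": "visual", "success": True},
    {"background": "beginner", "style": "visual", "success": True},
    {"background": "beginner", "style": "math", "success": False},
    {"background": "expert", "style": "math", "success": True},
]
rules = distill_rules(episodes)
print(rules)
```

In an LLM-based system the distillation step would more likely be a model call that summarizes episodes into principles; the counting here only makes the episode-to-rule direction concrete.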

4. Procedural Memory

Procedural memory captures how to perform actions or processes. For AI agents, this translates to remembering effective strategies for solving problems.

Characteristics:

- Stores successful action sequences and approaches

- Focuses on “how” rather than “what”

- Can be applied across different but similar situations

Example: An agent remembers the effective sequence of steps for debugging code issues, starting with checking syntax, then examining logic, and finally testing edge cases.
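As a minimal sketch of storing and reusing successful action sequences, the class below keys step sequences by task type and recalls the one that has succeeded most often. `ProceduralMemory`, `record_success`, and `recall` are hypothetical names chosen for illustration.

```python
from collections import Counter, defaultdict


class ProceduralMemory:
    """Remembers which step sequences have worked for each kind of task."""

    def __init__(self):
        self._successes = defaultdict(Counter)  # task type -> Counter of step tuples

    def record_success(self, task_type: str, steps: list) -> None:
        self._successes[task_type][tuple(steps)] += 1

    def recall(self, task_type: str) -> list:
        """Return the most frequently successful sequence, or [] if none is known."""
        if task_type not in self._successes:
            return []
        steps, _ = self._successes[task_type].most_common(1)[0]
        return list(steps)


memory = ProceduralMemory()
memory.record_success("debug", ["check syntax", "examine logic", "test edge cases"])
memory.record_success("debug", ["check syntax", "examine logic", "test edge cases"])
memory.record_success("debug", ["rewrite from scratch"])
plan = memory.recall("debug")
print(plan)
```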

mem0

https://docs.mem0.ai/examples/personal-travel-assistant

https://github.com/mem0ai/mem0/tree/main

    import os
    from openai import OpenAI
    from mem0 import Memory

    # Set the OpenAI API key
    os.environ['OPENAI_API_KEY'] = "sk-xxx"

    config = {
        "llm": {
            "provider": "openai",
            "config": {
                "model": "gpt-4o",
                "temperature": 0.1,
                "max_tokens": 2000,
            }
        },
        "embedder": {
            "provider": "openai",
            "config": {
                "model": "text-embedding-3-large"
            }
        },
        "vector_store": {
            "provider": "qdrant",
            "config": {
                "collection_name": "test",
                "embedding_model_dims": 3072,
            }
        },
        "version": "v1.1",
    }


    class PersonalTravelAssistant:
        def __init__(self):
            self.client = OpenAI()
            self.memory = Memory.from_config(config)
            self.messages = [{"role": "system", "content": "You are a personal AI Assistant."}]

        def ask_question(self, question, user_id):
            # Fetch previous related memories
            previous_memories = self.search_memories(question, user_id=user_id)

            # Build the prompt
            system_message = "You are a personal AI Assistant."
            if previous_memories:
                prompt = f"{system_message}\n\nUser input: {question}\nPrevious memories: {', '.join(previous_memories)}"
            else:
                prompt = f"{system_message}\n\nUser input: {question}"

            # Generate response using Responses API
            response = self.client.responses.create(
                model="gpt-4o",
                input=prompt
            )

            # Extract answer from the response
            answer = response.output[0].content[0].text

            # Store the question in memory
            self.memory.add(question, user_id=user_id)
            return answer

        def get_memories(self, user_id):
            memories = self.memory.get_all(user_id=user_id)
            return [m['memory'] for m in memories['results']]

        def search_memories(self, query, user_id):
            memories = self.memory.search(query, user_id=user_id)
            return [m['memory'] for m in memories['results']]


    # Usage example
    user_id = "traveler_123"
    ai_assistant = PersonalTravelAssistant()


    def main():
        while True:
            question = input("Question: ")
            if question.lower() in ['q', 'exit']:
                print("Exiting...")
                break

            answer = ai_assistant.ask_question(question, user_id=user_id)
            print(f"Answer: {answer}")
            memories = ai_assistant.get_memories(user_id=user_id)
            print("Memories:")
            for memory in memories:
                print(f"- {memory}")
            print("-----")


    if __name__ == "__main__":
        main()

Implementation

Use a vector database: generate memory prompts or preference-bearing memory entries, then store them.

https://github.com/KickItLikeShika/agentic-memory/blob/main/agentic-memory.ipynb

A ReAct prompt is a form of procedural prompt.

https://zhuanlan.zhihu.com/p/1931154686532105460

Memory as a tool

https://python.langchain.com/docs/versions/migrating_memory/long_term_memory_agent/

summarization

https://github.com/fanqingsong/langgraph-memory-example

    from typing import Literal

    from langchain_core.messages import SystemMessage, HumanMessage, RemoveMessage
    from langchain_openai import ChatOpenAI
    from langgraph.graph import START, StateGraph, MessagesState, END
    from langgraph.prebuilt import tools_condition, ToolNode
    from langchain_community.tools.tavily_search import TavilySearchResults

    tavily_tool = TavilySearchResults(max_results=10)
    tools = [tavily_tool]

    # Define LLM with bound tools
    llm = ChatOpenAI(model="gpt-4o")
    llm_with_tools = llm.bind_tools(tools)
    model = llm_with_tools


    # State class to store messages and summary
    class State(MessagesState):
        summary: str


    # Define the logic to call the model
    def call_model(state: State):
        # Get summary if it exists
        summary = state.get("summary", "")

        # If there is summary, then we add it to messages
        if summary:
            # Add summary to system message
            system_message = f"Summary of conversation earlier: {summary}"
            # Append summary to any newer messages
            messages = [SystemMessage(content=system_message)] + state["messages"]
        else:
            messages = state["messages"]

        response = model.invoke(messages)
        return {"messages": response}


    # Custom routing function from assistant
    def route_assistant(state: State) -> Literal["tools", "summarize_conversation", "__end__"]:
        """Route from assistant based on tool calls and message count."""
        messages = state["messages"]
        last_message = messages[-1]

        # Check if the assistant called any tools
        if hasattr(last_message, "tool_calls") and len(last_message.tool_calls) > 0:
            return "tools"

        # No tools called - check if we should summarize
        if len(messages) > 6:
            return "summarize_conversation"

        # Otherwise end
        return END


    # Routing function after tools
    def route_after_tools(state: State) -> Literal["summarize_conversation", "assistant"]:
        """Route after tools execution."""
        messages = state["messages"]

        # If there are more than six messages, summarize
        if len(messages) > 6:
            return "summarize_conversation"

        # Otherwise go back to assistant
        return "assistant"


    def summarize_conversation(state: State):
        # First get the summary if it exists
        summary = state.get("summary", "")

        # Create our summarization prompt
        if summary:
            # If a summary already exists, add it to the prompt
            summary_message = (
                f"This is summary of the conversation to date: {summary}\n\n"
                "Extend the summary by taking into account the new messages above:"
            )
        else:
            # If no summary exists, just create a new one
            summary_message = "Create a summary of the conversation above:"

        # Add prompt to our history
        messages = state["messages"] + [HumanMessage(content=summary_message)]
        response = model.invoke(messages)

        # Delete all but the 2 most recent messages and add our summary to the state
        delete_messages = [RemoveMessage(id=m.id) for m in state["messages"][:-2]]
        return {"summary": response.content, "messages": delete_messages}


    # Build graph
    builder = StateGraph(State)  # Note: using State instead of MessagesState
    builder.add_node("assistant", call_model)
    builder.add_node("tools", ToolNode(tools))
    builder.add_node("summarize_conversation", summarize_conversation)

    # Add edges
    builder.add_edge(START, "assistant")

    # Route from assistant based on tool calls and message count
    builder.add_conditional_edges("assistant", route_assistant)

    # After tools, check if we should summarize or go back to assistant
    builder.add_conditional_edges("tools", route_after_tools)

    # After summarization, go back to assistant
    builder.add_edge("summarize_conversation", "assistant")

    # Compile graph
    graph = builder.compile()

mem0 integration

    import os
    from typing import Literal

    from langchain_core.messages import SystemMessage, HumanMessage, RemoveMessage
    from langchain_openai import ChatOpenAI
    from langgraph.graph import START, StateGraph, MessagesState, END
    from langgraph.prebuilt import tools_condition, ToolNode
    from langchain_community.tools.tavily_search import TavilySearchResults
    from mem0 import MemoryClient

    mem0 = MemoryClient(api_key=os.getenv("MEM0_API_KEY"))

    tavily_tool = TavilySearchResults(max_results=10)
    tools = [tavily_tool]

    # Define LLM with bound tools
    llm = ChatOpenAI(model="gpt-4o")
    llm_with_tools = llm.bind_tools(tools)
    model = llm_with_tools


    # State class that carries the Mem0 user id alongside the messages
    class State(MessagesState):
        mem0_user_id: str


    # Define the logic to call the model
    def call_model(state: State):
        messages = state["messages"]
        user_id = state.get("mem0_user_id", "default_user_1")

        # Get only the last message (current user input).
        # Note: when this node is re-entered after the tools node,
        # messages[-1] is a ToolMessage rather than the user input.
        current_message = messages[-1]

        # Retrieve relevant memories based on the current message
        memories = mem0.search(current_message.content, user_id=user_id)

        context = "Relevant information from previous conversations:\n"
        for memory in memories:
            context += f"- {memory['memory']}\n"

        system_message = SystemMessage(content=f"""You are a helpful Assistant. Use the provided context to personalize your responses and remember user preferences and past interactions.
    {context}""")

        # Only send system message + current user message to LLM (no history)
        full_messages = [system_message, current_message]
        response = llm_with_tools.invoke(full_messages)

        # Store the interaction in Mem0 - use a list of messages
        mem0.add(
            messages=[
                {"role": "user", "content": current_message.content},
                {"role": "assistant", "content": response.content}
            ],
            user_id=user_id
        )

        # Clear all previous messages except the current response
        # so we don't have to store all the messages in the context
        messages_to_delete = [RemoveMessage(id=m.id) for m in messages]
        return {"messages": messages_to_delete + [response]}


    # Build graph (use State, not MessagesState, so mem0_user_id is part of the graph state)
    builder = StateGraph(State)
    builder.add_node("assistant", call_model)
    builder.add_node("tools", ToolNode(tools))

    # Add edges
    builder.add_edge(START, "assistant")

    # Use tools_condition to route from assistant
    builder.add_conditional_edges(
        "assistant",
        tools_condition,
        {
            "tools": "tools",  # If tools are called, go to tools
            END: END,  # If no tools, end
        }
    )

    # After tools execution, go back to assistant
    builder.add_edge("tools", "assistant")

    # Compile graph
    graph = builder.compile()

https://github.com/fanqingsong/langgraph-mem0-agent

