A Long-Term Memory Agent: a vector store as memory

https://python.langchain.com/docs/versions/migrating_memory/long_term_memory_agent/

This tutorial shows how to implement an agent with long-term memory capabilities using LangGraph. The agent can store, retrieve, and use memories to enhance its interactions with users.

Inspired by papers like MemGPT and distilled from our own work on long-term memory, the graph extracts memories from chat interactions and persists them to a database. "Memory" in this tutorial is represented in two ways:

- a piece of text information that is generated by the agent

- structured information about entities extracted by the agent, in the shape of (subject, predicate, object) knowledge triples.

This information can later be read or queried semantically to provide personalized context when your bot is responding to a particular user.
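The two memory shapes above can be sketched in plain Python. This is a minimal illustration, not the tutorial's implementation: `KnowledgeTriple` and `triples_about` are hypothetical names, and the exact-match lookup only stands in for the semantic (embedding-based) search a vector store would provide.

```python
from typing import NamedTuple


class KnowledgeTriple(NamedTuple):
    """Hypothetical (subject, predicate, object) record extracted by the agent."""
    subject: str
    predicate: str
    obj: str  # the "object" of the triple

    def as_text(self) -> str:
        # Render the triple as one line of text that can be placed in a prompt.
        return f"{self.subject} {self.predicate} {self.obj}"


# Memory form 1: free-text notes written by the agent.
text_memories = ["User prefers concise answers."]

# Memory form 2: structured triples about entities.
triple_memories = [
    KnowledgeTriple("John", "likes", "pizza"),
    KnowledgeTriple("John", "works_at", "Acme"),
    KnowledgeTriple("Mary", "likes", "sushi"),
]


def triples_about(subject: str, triples: list[KnowledgeTriple]) -> list[str]:
    # Exact-match filter; a stand-in for semantic retrieval from a vector store.
    return [t.as_text() for t in triples if t.subject == subject]


print(triples_about("John", triple_memories))
```

Keeping triples as typed records rather than free text makes it possible to filter on a field (here, the subject) before or after a semantic search.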

The KEY idea is that by saving memories, the agent persists information about users that is SHARED across multiple conversations (threads). This is different from the memory of a single conversation, which LangGraph's persistence already provides.
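To make the distinction concrete, here is a hedged sketch in which long-term memories are keyed by a user ID while message history is keyed by a thread ID. `UserMemoryStore` and the `"user-1"` / `"thread-1"` identifiers are hypothetical; in LangGraph, per-thread history is handled by the checkpointer (such as the `MemorySaver` used when compiling the graph), not by a dict like this one.

```python
from collections import defaultdict


class UserMemoryStore:
    """Hypothetical store: memories are scoped to a user, not to a thread."""

    def __init__(self) -> None:
        self._memories: dict[str, list[str]] = defaultdict(list)

    def save(self, user_id: str, memory: str) -> None:
        self._memories[user_id].append(memory)

    def recall(self, user_id: str) -> list[str]:
        # Return a copy so callers cannot mutate the stored list.
        return list(self._memories.get(user_id, []))


# Per-thread message history, roughly what a checkpointer keeps per thread.
threads: dict[str, list[str]] = defaultdict(list)
store = UserMemoryStore()

# Thread 1: the user shares a fact and the agent saves it as a memory.
threads["thread-1"].append("user: I love pizza")
store.save("user-1", "User loves pizza.")

# Thread 2: a brand-new conversation with the same user.
threads["thread-2"].append("user: What should I eat tonight?")

print(threads["thread-2"])     # no copy of thread-1's messages here
print(store.recall("user-1"))  # but the user's memories are still available
```

The design choice is simply which key the data hangs off: thread-scoped state disappears when a new thread starts, while user-scoped memories follow the user into every thread.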

You can also check out a full implementation of this agent in this repo.

```python
from langchain_core.prompts import ChatPromptTemplate

# Define the prompt template for the agent
prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You are a helpful assistant with advanced long-term memory"
            " capabilities. Powered by a stateless LLM, you must rely on"
            " external memory to store information between conversations."
            " Utilize the available memory tools to store and retrieve"
            " important details that will help you better attend to the user's"
            " needs and understand their context.\n\n"
            "Memory Usage Guidelines:\n"
            "1. Actively use memory tools (save_core_memory, save_recall_memory)"
            " to build a comprehensive understanding of the user.\n"
            "2. Make informed suppositions and extrapolations based on stored"
            " memories.\n"
            "3. Regularly reflect on past interactions to identify patterns and"
            " preferences.\n"
            "4. Update your mental model of the user with each new piece of"
            " information.\n"
            "5. Cross-reference new information with existing memories for"
            " consistency.\n"
            "6. Prioritize storing emotional context and personal values"
            " alongside facts.\n"
            "7. Use memory to anticipate needs and tailor responses to the"
            " user's style.\n"
            "8. Recognize and acknowledge changes in the user's situation or"
            " perspectives over time.\n"
            "9. Leverage memories to provide personalized examples and"
            " analogies.\n"
            "10. Recall past challenges or successes to inform current"
            " problem-solving.\n\n"
            "## Recall Memories\n"
            "Recall memories are contextually retrieved based on the current"
            " conversation:\n{recall_memories}\n\n"
            "## Instructions\n"
            "Engage with the user naturally, as a trusted colleague or friend."
            " There's no need to explicitly mention your memory capabilities."
            " Instead, seamlessly incorporate your understanding of the user"
            " into your responses. Be attentive to subtle cues and underlying"
            " emotions. Adapt your communication style to match the user's"
            " preferences and current emotional state. Use tools to persist"
            " information you want to retain in the next conversation. If you"
            " do call tools, all text preceding the tool call is an internal"
            " message. Respond AFTER calling the tool, once you have"
            " confirmation that the tool completed successfully.\n\n",
        ),
        # The conversation so far is spliced in here at invocation time.
        ("placeholder", "{messages}"),
    ]
)
```
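Conceptually, invoking this template fills the `{recall_memories}` slot in the system message and splices the conversation in at the `{messages}` placeholder. The stdlib sketch below mimics that data flow with `str.format` and plain `(role, content)` tuples. `render_prompt` is a hypothetical helper and the system text is abbreviated, so this illustrates what the model ends up seeing, not how `ChatPromptTemplate` is implemented internally.

```python
# Abbreviated version of the system text; only the slot that gets filled matters here.
SYSTEM_TEMPLATE = (
    "You are a helpful assistant with advanced long-term memory capabilities.\n\n"
    "## Recall Memories\n"
    "Recall memories are contextually retrieved based on the current"
    " conversation:\n{recall_memories}\n\n"
)


def render_prompt(
    recall_memories: str, messages: list[tuple[str, str]]
) -> list[tuple[str, str]]:
    # Fill the system slot, then append the conversation where the placeholder sits.
    system = ("system", SYSTEM_TEMPLATE.format(recall_memories=recall_memories))
    return [system, *messages]


prompt_messages = render_prompt(
    "<recall_memory>\nUser loves pizza.\n</recall_memory>",
    [("user", "What should I eat tonight?")],
)
for role, content in prompt_messages:
    print(f"[{role}] {content}")
```

Because the memories are re-rendered into the system message on every turn, the model always sees the latest retrieval results without them being stored in the thread's message history.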

```python
import tiktoken
from langchain_core.messages import get_buffer_string
from langchain_core.runnables import RunnableConfig
from langchain_openai import ChatOpenAI
from langgraph.graph import END

# `State`, `tools`, and `search_recall_memories` are defined earlier in the
# full tutorial (not shown in this section); `prompt` is the template above.

model = ChatOpenAI(model_name="gpt-4o")
model_with_tools = model.bind_tools(tools)

tokenizer = tiktoken.encoding_for_model("gpt-4o")


def agent(state: State) -> State:
    """Process the current state and generate a response using the LLM.

    Args:
        state (schemas.State): The current state of the conversation.

    Returns:
        schemas.State: The updated state with the agent's response.
    """
    bound = prompt | model_with_tools
    # Wrap the retrieved memories in tags so the model can tell them apart.
    recall_str = (
        "<recall_memory>\n" + "\n".join(state["recall_memories"]) + "\n</recall_memory>"
    )
    prediction = bound.invoke(
        {
            "messages": state["messages"],
            "recall_memories": recall_str,
        }
    )
    return {
        "messages": [prediction],
    }


def load_memories(state: State, config: RunnableConfig) -> State:
    """Load memories for the current conversation.

    Args:
        state (schemas.State): The current state of the conversation.
        config (RunnableConfig): The runtime configuration for the agent.

    Returns:
        State: The updated state with loaded memories.
    """
    convo_str = get_buffer_string(state["messages"])
    # Cap the search query at the first 2048 tokens of the conversation.
    convo_str = tokenizer.decode(tokenizer.encode(convo_str)[:2048])
    recall_memories = search_recall_memories.invoke(convo_str, config)
    return {
        "recall_memories": recall_memories,
    }


def route_tools(state: State):
    """Determine whether to use tools or end the conversation based on the last message.

    Args:
        state (schemas.State): The current state of the conversation.

    Returns:
        Literal["tools", "__end__"]: The next step in the graph.
    """
    msg = state["messages"][-1]
    if msg.tool_calls:
        return "tools"
    return END
```
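`load_memories` caps the search query at 2048 tokens before searching, and `agent` wraps the retrieved memories in a `<recall_memory>` block. Both steps are sketched below without external dependencies. The whitespace split is only a stand-in for the `tiktoken` encoder the real code uses, so its counts will not match real model tokens; `truncate_to_budget` and `format_recall` are hypothetical helpers.

```python
def truncate_to_budget(text: str, max_tokens: int = 2048) -> str:
    # Stand-in tokenizer: whitespace-separated words instead of tiktoken tokens.
    tokens = text.split()
    return " ".join(tokens[:max_tokens])


def format_recall(memories: list[str]) -> str:
    # Same wrapper the agent node builds around retrieved memories.
    return "<recall_memory>\n" + "\n".join(memories) + "\n</recall_memory>"


conversation = "Human: hi there " * 1000  # 3000 whitespace-separated tokens
query = truncate_to_budget(conversation)
print(len(query.split()))
print(format_recall(["User loves pizza.", "User lives in Paris."]))
```

Truncating the query keeps the retrieval call bounded in cost regardless of how long the thread grows; note that keeping the *first* 2048 tokens, as the original does, favors the start of the conversation.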

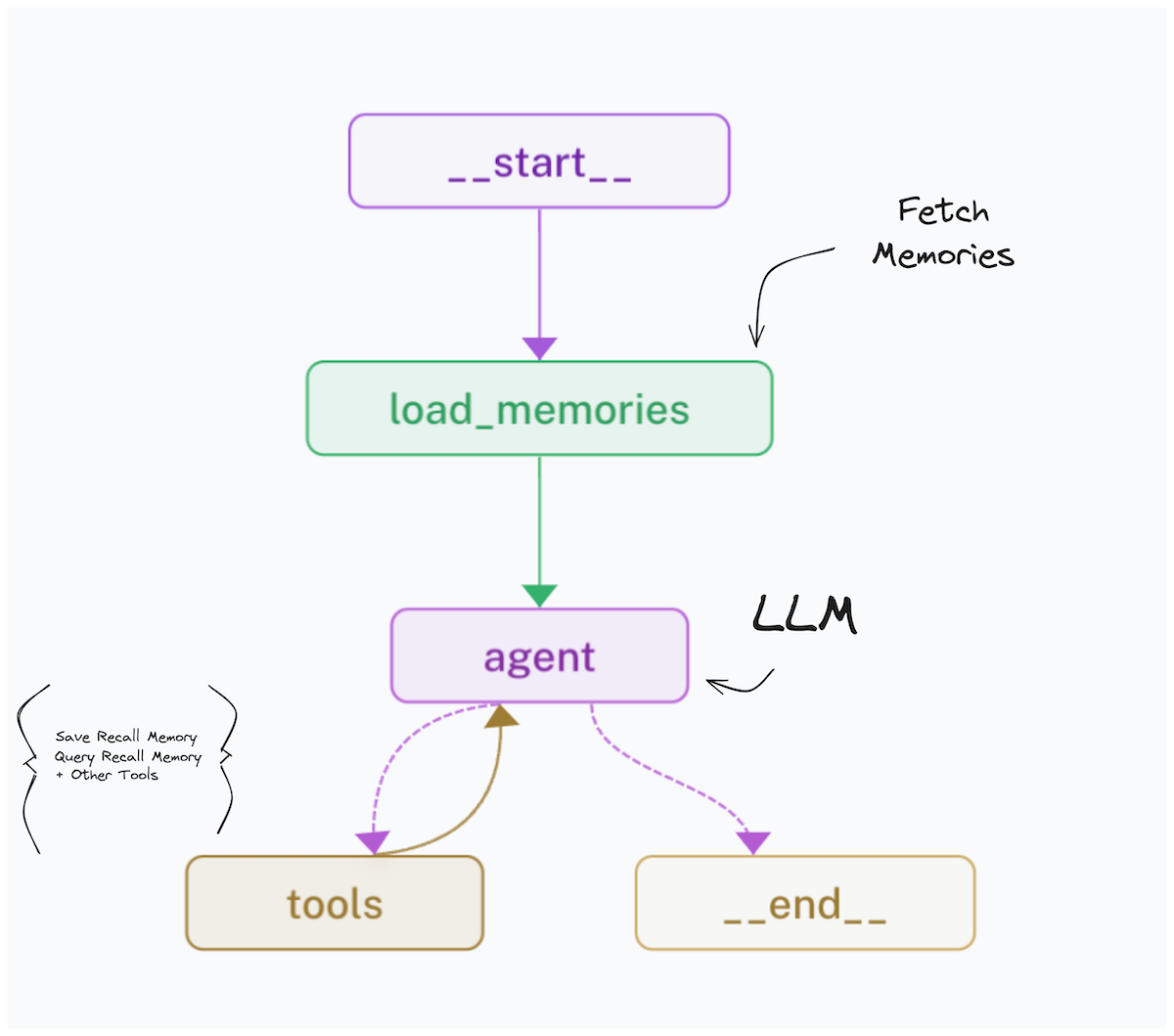

```python
from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import END, START, StateGraph
from langgraph.prebuilt import ToolNode

# Create the graph and add nodes
builder = StateGraph(State)
builder.add_node(load_memories)
builder.add_node(agent)
builder.add_node("tools", ToolNode(tools))

# Add edges to the graph
builder.add_edge(START, "load_memories")
builder.add_edge("load_memories", "agent")
builder.add_conditional_edges("agent", route_tools, ["tools", END])
builder.add_edge("tools", "agent")

# Compile the graph with an in-memory checkpointer for per-thread state
memory = MemorySaver()
graph = builder.compile(checkpointer=memory)
```
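The wiring above gives the control flow START → load_memories → agent, followed by a loop between agent and tools until the model stops requesting tool calls. The plain-Python sketch below traces that loop with a scripted fake model. `run_graph` and the dict-based message format are hypothetical; they mirror only the routing logic of `route_tools`, not LangGraph's execution engine.

```python
END = "__end__"


def route_tools(message: dict) -> str:
    # Mirrors the tutorial's router: go to "tools" if the last message requests tools.
    return "tools" if message.get("tool_calls") else END


def run_graph(replies: list[dict]) -> list[str]:
    """Trace node visits for a scripted sequence of model replies (hypothetical)."""
    visited = ["load_memories"]  # START always leads here first
    it = iter(replies)
    while True:
        visited.append("agent")
        last = next(it)
        if route_tools(last) == END:
            return visited
        visited.append("tools")  # the tool node runs, then control returns to agent


trace = run_graph([
    {"content": "", "tool_calls": [{"name": "save_recall_memory"}]},
    {"content": "Noted, you love pizza!", "tool_calls": []},
])
print(trace)
```

Memories are loaded once per user turn, before the first agent call; the tools/agent loop then runs as many times as the model keeps calling tools.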

浙公网安备 33010602011771号
