Making it easier to build human-in-the-loop agents with interrupt

https://blog.langchain.dev/making-it-easier-to-build-human-in-the-loop-agents-with-interrupt/

While agents can be powerful, they are not perfect, so it is often important to keep a human “in the loop” when building them. For example, in our fireside chat with Michele Catasta (President of Replit) about Replit Agent, he says several times that the human-in-the-loop component is crucial to their agent design.

From the start, we designed LangGraph with this in mind, and it’s one of the key reasons many companies choose to build on LangGraph. Today, we’re excited to announce a new way to include human-in-the-loop steps in your LangGraph agents more easily: interrupt.

How we built LangGraph for human-in-the-loop workflows

One of the differentiating aspects of LangGraph is that we built it for human-in-the-loop workflows. We think these workflows are incredibly important when building agents, so we built first-class support for them into LangGraph.

We did this by making persistence a first-class citizen in LangGraph. At every step, the graph reads from and then writes to a checkpoint of its state. That state stores everything the agent needs to do its work.

This makes it possible to pause execution of the graph halfway through and resume after some time: the checkpoint is there, so we can pick right back up from it.

It also makes it possible to pause, let the human edit the checkpoint, and then resume from that new updated checkpoint.

In some ways, you can think of this persistence layer as a scratchpad for human/agent collaboration.
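
To make the checkpoint idea concrete, here is a toy sketch in plain Python. It is not LangGraph’s implementation: `checkpoints`, `run`, and the two step functions are hypothetical names made up for illustration. Each step starts from the latest saved state and writes a new checkpoint when it finishes, so execution can stop, a human can edit the saved state, and a later call can pick up where the first one left off.

```python
import copy
from typing import Optional

# Toy model only: a dict of thread_id -> saved state stands in for the persistence layer.
checkpoints = {}


def draft(state: dict) -> dict:
    return {**state, "draft": f"Dear {state['recipient']}, ..."}


def send(state: dict) -> dict:
    return {**state, "sent": state["draft"]}


STEPS = [draft, send]


def run(thread_id: str, initial: Optional[dict] = None, pause_before: Optional[str] = None) -> dict:
    """Run the remaining steps for a thread, writing a checkpoint after each one."""
    # Read: start from the saved checkpoint if there is one, otherwise from the input.
    state = copy.deepcopy(checkpoints.get(thread_id, {"next_step": 0, **(initial or {})}))
    checkpoints[thread_id] = copy.deepcopy(state)
    while state["next_step"] < len(STEPS):
        step = STEPS[state["next_step"]]
        if step.__name__ == pause_before:
            break  # stop here; the checkpoint holds everything needed to resume
        state = step(state)
        state["next_step"] += 1
        checkpoints[thread_id] = copy.deepcopy(state)  # write after every step
    return state


# Pause before sending, let a human edit the saved draft, then resume.
run("thread-1", {"recipient": "Ada"}, pause_before="send")
checkpoints["thread-1"]["draft"] = "Dear Ada, (edited by a human)"
final = run("thread-1")
print(final["sent"])  # Dear Ada, (edited by a human)
```

The second call to `run` needs no input beyond the thread ID, which is the property that lets a paused run resume later or on another machine, provided the checkpoint store is shared.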

interrupt: a new developer experience for human-in-the-loop

We’ve had a few ways of building human-in-the-loop interactions before (breakpoints, NodeInterrupt). Over the past few months, we’ve seen developers want to do increasingly complicated things, so we’ve added a new tool to help.

When building human-in-the-loop into Python programs, one common approach is the input function. With this, your program pauses, a prompt appears in your terminal (or a text box in a notebook), and whatever you type is returned from the function. You use it like this:

response = input("Your question here")

That is a pretty easy and intuitive way to add human-in-the-loop functionality. The downside is that it is synchronous: it blocks the process and doesn’t really work outside the command line (or notebooks). So it won’t work in production.

We’ve tried to emulate this developer experience by adding a new function to LangGraph: interrupt. You can use this in much the same way as input:

response = interrupt("Your question here")

This is designed to work in production settings. Calling it pauses execution of the graph, marks the thread you are running as interrupted, and stores whatever you passed to interrupt in the persistence layer. You can then check the thread status, see that it’s interrupted, read the message, and invoke the graph again with a Command that passes your response back in:

graph.invoke(Command(resume="Your response here"), thread)

Note that it doesn’t function exactly the same as input: on resume, the node that called interrupt runs again from its beginning, so any work done in that node before the call is repeated, but previous nodes are not rerun. This ensures interrupted threads don’t take up any resources (beyond storage space) and can be resumed many months later, on a different machine, etc.
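
The re-execution behaviour is easiest to see in a toy model. The sketch below is plain Python written for illustration, not LangGraph’s implementation; `toy_interrupt`, `run_node`, and `Paused` are hypothetical names. It pauses by raising an exception and resumes by calling the node again from its first line, so code placed before the interrupt call runs twice.

```python
from typing import Any, Callable

_NO_RESUME = object()   # sentinel: no resume value has been supplied
_pending = _NO_RESUME   # resume value for the node call currently in progress


class Paused(Exception):
    """Raised to unwind the node when no resume value is available yet."""

    def __init__(self, payload: Any):
        super().__init__(payload)
        self.payload = payload


def toy_interrupt(payload: Any) -> Any:
    """Return the resume value if one was supplied; otherwise pause by raising."""
    if _pending is _NO_RESUME:
        raise Paused(payload)
    return _pending


def run_node(node: Callable[[dict], dict], state: dict, resume: Any = _NO_RESUME):
    """Call the node from its first line; return ("paused", payload) or ("done", update)."""
    global _pending
    _pending = resume
    try:
        return ("done", node(state))
    except Paused as pause:
        return ("paused", pause.payload)
    finally:
        _pending = _NO_RESUME


calls_before_interrupt = []


def review_node(state: dict) -> dict:
    calls_before_interrupt.append("expensive_lookup")  # side effect before the interrupt
    answer = toy_interrupt({"question": "Approve?", "draft": state["draft"]})
    return {"approved": answer == "yes"}


first = run_node(review_node, {"draft": "hello"})
second = run_node(review_node, {"draft": "hello"}, resume="yes")
print(first)                        # ('paused', {'question': 'Approve?', 'draft': 'hello'})
print(second)                       # ('done', {'approved': True})
print(len(calls_before_interrupt))  # 2
```

Because the lookup ran once per call, side effects placed before an interrupt call are best kept idempotent, or moved after the call or into a separate node.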

For more information, see the Python and JavaScript documentation.

Common human-in-the-loop workflows

There are a few different human-in-the-loop workflows that we see being implemented.

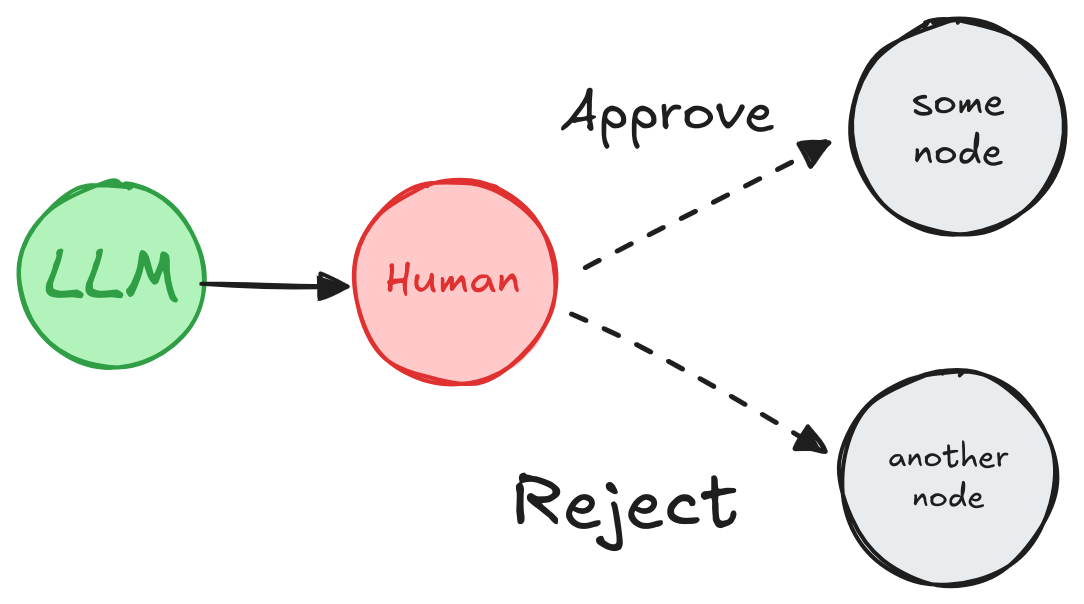

Pause the graph before a critical step, such as an API call, to review and approve the action. If the action is rejected, you can prevent the graph from executing the step, and potentially take an alternative action.

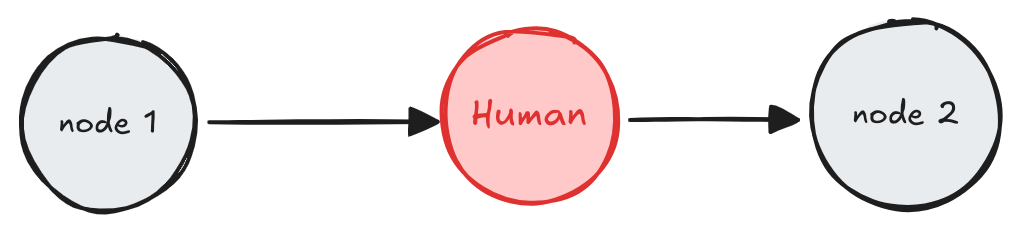

A human can review and edit the state of the graph. This is useful for correcting mistakes or updating the state with additional information.

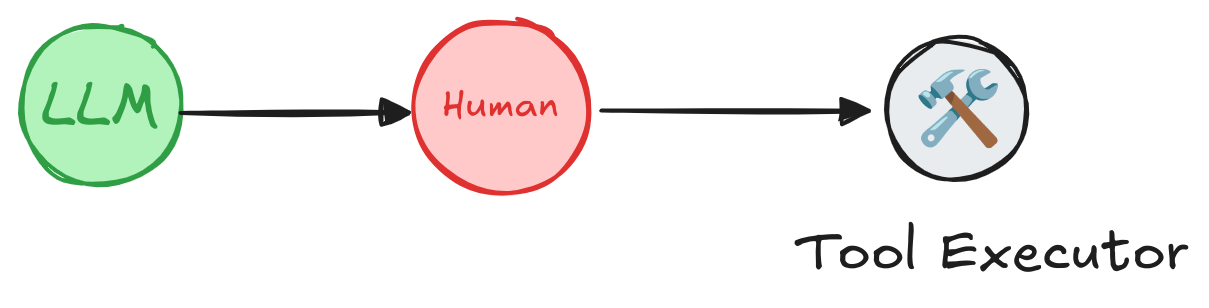

A human can review and edit the output from the LLM before proceeding. This is particularly critical in applications where the tool calls requested by the LLM may be sensitive or require human oversight.

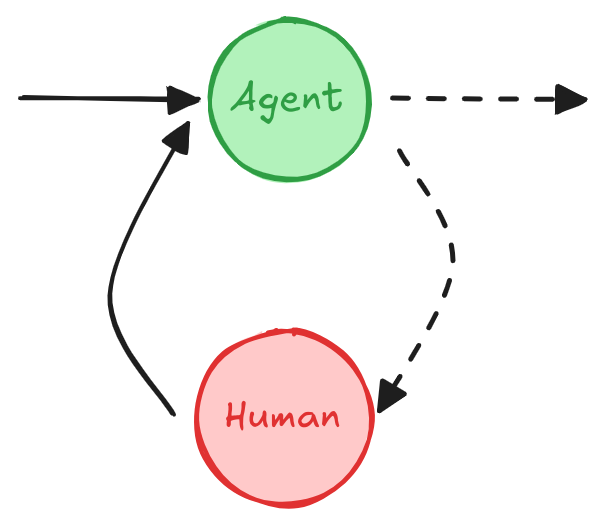

Multi-turn conversation in a multi-agent setup

A multi-turn conversation involves multiple back-and-forth interactions between an agent and a human, which can allow the agent to gather additional information from the human in a conversational manner.

This design pattern is useful in an LLM application consisting of multiple agents. One or more agents may need to carry out multi-turn conversations with a human, where the human provides input or feedback at different stages of the conversation. For simplicity, the agent implementation below is illustrated as a single node, but in reality it may be part of a larger graph consisting of multiple nodes and include a conditional edge.

Conclusion

We are building LangGraph to be the best agent framework for human-in-the-loop interaction patterns. We think interrupt makes this easier than ever. We’ve updated all of our examples that use human-in-the-loop to use this new functionality. We hope to release some more end-to-end projects that demonstrate this in real-world action soon.

See our YouTube walkthrough for more information.

We’re excited to see what you build!

https://langchain-ai.github.io/langgraph/concepts/human_in_the_loop/?ref=blog.langchain.dev#interrupt

https://juejin.cn/post/7457184920303468584

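1. Approve-or-reject design pattern

A human-in-the-loop workflow can involve three different actions:

- Approve or reject: Pause the graph before a critical step, such as an API call, to review and approve the action. If the action is rejected, you can prevent the graph from executing the step and potentially take an alternative action. This pattern typically routes the graph based on the human’s input.

- Edit graph state: Pause the graph to review and edit its state. This is useful for correcting mistakes or updating the state with additional information. This pattern typically updates the state with the human’s input.

- Get input: Explicitly request human input at a particular step in the graph. This is useful for collecting additional information or context to inform the agent’s decision-making, or for supporting multi-turn conversations.

Depending on whether the human approves or rejects, the graph either proceeds with the action or takes an alternative path, as the figure below shows:

We pause the graph before a critical step so that a human can step in to review and approve the action. If the action is rejected, the graph can be prevented from executing the step and may take an alternative action. The code is as follows: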

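2. Review-and-edit-state design pattern

A human can review and edit the state of the graph. This is useful for correcting mistakes or updating the state with additional information. The code is as follows: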

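    from langgraph.types import Command, interrupt

    def human_editing(state: State):
        ...
        result = interrupt(
            # Payload surfaced to the human reviewer.
            {
                "task": "Review the output from the LLM and make any necessary edits.",
                "llm_generated_summary": state["messages"]
            }
        )

        # Update the state with the edited text.
        # The key must match the one passed in Command(resume=...) below.
        return {
            "messages": result["edited_text"]
        }

    # Add the node to the graph in an appropriate location
    # and connect it to the relevant nodes.
    graph_builder.add_node("human_editing", human_editing)
    graph = graph_builder.compile(checkpointer=checkpointer)

    ...

    # After running the graph and hitting the interrupt, the graph will pause.
    # Resume it with the edited text.
    thread_config = {"configurable": {"thread_id": "some_id"}}
    graph.invoke(
        Command(resume={"edited_text": "The edited text"}),
        config=thread_config
    )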

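3. Review-tool-calls design pattern

A human can review and edit the output from the LLM before proceeding. Human oversight is needed when the tool calls requested by the LLM are sensitive. This is shown in the figure below, and the code is as follows: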

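    from typing import Literal

    from langgraph.types import Command, interrupt

    # get_updated_msg and get_feedback_msg are helper functions not shown here.

    def human_review_node(state) -> Command[Literal["call_llm", "run_tool"]]:
        # Assumption: the last message is the AI message carrying the tool call to review.
        tool_call = state["messages"][-1].tool_calls[-1]

        # This is the value we'll be providing via Command(resume=<human_review>).
        human_review = interrupt(
            {
                "question": "Is this correct?",
                # Surface the tool call for review.
                "tool_call": tool_call
            }
        )

        review_action, review_data = human_review

        # Approve the tool call and continue.
        if review_action == "continue":
            return Command(goto="run_tool")

        # Modify the tool call manually and then continue.
        elif review_action == "update":
            updated_msg = get_updated_msg(review_data)
            # To modify an existing message, we need to pass a message with a matching ID.
            return Command(goto="run_tool", update={"messages": [updated_msg]})

        # Give natural-language feedback, then pass it back to the agent.
        elif review_action == "feedback":
            feedback_msg = get_feedback_msg(review_data)
            return Command(goto="call_llm", update={"messages": [feedback_msg]})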
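
The comment about matching IDs deserves a concrete illustration. The sketch below uses plain dicts in place of LangChain message objects. `build_updated_msg` is a hypothetical helper, and it assumes the `messages` key is managed by a reducer (such as LangGraph’s `add_messages`) that replaces an existing message when a new one arrives with the same ID.

```python
def build_updated_msg(last_message: dict, new_args: dict) -> dict:
    """Copy the AI message, swapping in human-edited arguments for its last tool call."""
    *earlier_calls, tool_call = last_message["tool_calls"]
    edited_call = {**tool_call, "args": new_args}  # keep the tool call's own id and name
    return {
        **last_message,  # same message "id", so an ID-aware reducer replaces instead of appending
        "tool_calls": [*earlier_calls, edited_call],
    }


original = {
    "role": "ai",
    "id": "msg-1",
    "content": "",
    "tool_calls": [{"id": "call-1", "name": "search", "args": {"query": "wether in SF"}}],
}
updated = build_updated_msg(original, {"query": "weather in SF"})
print(updated["id"], updated["tool_calls"][0]["args"])
```

The original message is left untouched, so the reviewer’s edit only takes effect once the updated message is written back to the state.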

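4. Multi-turn conversation design pattern

In this design, the agent node and the human node loop back and forth until the agent decides to hand the conversation off to another agent or to another part of the system.

A multi-turn conversation involves multiple back-and-forth interactions between an agent and a human, which lets the agent gather additional information from the human conversationally.

This design pattern is useful in an LLM application made up of multiple agents. One or more agents may need to carry out multi-turn conversations with a human, who provides input or feedback at different stages of the conversation. For simplicity, the agent implementation below is shown as a single node, but in practice it may be part of a larger graph consisting of multiple nodes and may include a conditional edge.

There are two ways to design multi-turn conversations. The first: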

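    from langgraph.types import Command, interrupt

    def human_input(state: State):
        human_message = interrupt("human_input")
        return {
            "messages": [
                {
                    "role": "human",
                    "content": human_message
                }
            ]
        }

    def agent(state: State):
        # Agent logic goes here.
        ...

    graph_builder.add_node("human_input", human_input)
    graph_builder.add_node("agent", agent)
    graph_builder.add_edge("human_input", "agent")
    graph = graph_builder.compile(checkpointer=checkpointer)

    # After running the graph and hitting the interrupt, the graph will pause.
    # Resume it with the human's input. thread_config is the same
    # {"configurable": {"thread_id": ...}} dict used to start the run.
    graph.invoke(
        Command(resume="hello!"),
        config=thread_config
    )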

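In this pattern, each agent has its own human node for collecting user input. This can be done either by giving the human nodes unique names (for example, “human for agent 1”, “human for agent 2”) or by using subgraphs, where each subgraph contains a human node and an agent node.

The second: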

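    from typing import Literal

    from langgraph.graph import MessagesState
    from langgraph.types import Command, interrupt

    def human_node(state: MessagesState) -> Command[Literal["agent_1", "agent_2", ...]]:
        """A node for collecting user input."""
        user_input = interrupt(value="Ready for user input.")

        # Determine the active agent from the state so that we can route to the
        # correct agent after collecting input. For example, add a field to the
        # state, use the last active agent, or fill in the `name` attribute of
        # the AI messages generated by the agents.
        active_agent = ...

        return Command(
            update={
                "messages": [{
                    "role": "human",
                    "content": user_input,
                }]
            },
            goto=active_agent,
        )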

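In this pattern, a single human node collects user input for multiple agents. The active agent is determined from the state, so after the human input is collected the graph can route to the correct agent.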
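
One way to fill in `active_agent` is sketched below in plain Python. It assumes messages are stored as dicts and that each agent sets a `name` field on the AI messages it produces. `find_active_agent` and its fallback value are hypothetical, not part of LangGraph, and with LangChain message objects the same idea would read attributes instead of dict keys.

```python
from typing import List


def find_active_agent(messages: List[dict], default: str = "agent_1") -> str:
    """Return the name on the most recent AI message, or the default if none is named."""
    for message in reversed(messages):
        if message.get("role") in ("ai", "assistant") and message.get("name"):
            return message["name"]
    return default


history = [
    {"role": "human", "content": "I need a hotel in Lisbon."},
    {"role": "ai", "name": "agent_1", "content": "Handing you to the hotel agent."},
    {"role": "ai", "name": "agent_2", "content": "Which dates?"},
    {"role": "human", "content": "Next weekend."},
]
print(find_active_agent(history))  # agent_2
print(find_active_agent([]))       # agent_1
```

Storing the active agent in a dedicated state field, the other option the code comment mentions, avoids scanning the message history at the cost of one more field to keep up to date.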

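5. Summary

The four design patterns above show that LangGraph’s interrupt feature gives LLM applications strong human-in-the-loop capabilities, making it possible to bring human review and intervention into critical steps. With a well-designed workflow, developers can help ensure that LLM-generated output and tool calls are accurate and reliable in sensitive or critical scenarios. Whether the need is approval, editing, or multi-turn conversation, LangGraph’s design patterns provide flexible building blocks for complex human-in-the-loop systems.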
