Multi-agent network

https://langchain-ai.github.io/langgraph/tutorials/multi_agent/multi-agent-collaboration/

A single agent can usually operate effectively using a handful of tools within a single domain, but even with powerful models like gpt-4, it can be less effective at using many tools.

One way to approach complicated tasks is through a "divide-and-conquer" approach: create a specialized agent for each task or domain and route tasks to the correct "expert". This is an example of a multi-agent network architecture.
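To make the routing idea concrete before any LLM is involved, here is a minimal sketch in plain Python. It is not LangGraph code: the stub functions `researcher` and `chart_generator`, the `run_network` helper, and the convention of returning the next expert's name (or `None` to stop) are all assumptions made for illustration.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical convention: an expert reads the shared history and returns
# (its reply, the name of the expert to hand off to, or None when done).
Expert = Callable[[List[str]], Tuple[str, Optional[str]]]


def researcher(history: List[str]) -> Tuple[str, Optional[str]]:
    # Stub standing in for an LLM agent that has a search tool.
    return "researcher: collected the requested figures", "chart_generator"


def chart_generator(history: List[str]) -> Tuple[str, Optional[str]]:
    # Stub standing in for an LLM agent that has a code-execution tool.
    return "chart_generator: FINAL ANSWER, chart saved", None


def run_network(
    experts: Dict[str, Expert], start: str, task: str, max_steps: int = 10
) -> List[str]:
    """Pass the shared history from expert to expert until one of them stops."""
    history = [task]
    current: Optional[str] = start
    for _ in range(max_steps):  # hard cap so a routing loop cannot run forever
        if current is None:
            break
        reply, current = experts[current](history)
        history.append(reply)
    return history


history = run_network(
    {"researcher": researcher, "chart_generator": chart_generator},
    start="researcher",
    task="user: plot the UK's GDP",
)
```

Each expert sees the whole history, so whoever picks up the task can continue where the previous one left off. The `max_steps` cap plays the same role as a recursion limit on a graph.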

This notebook (inspired by the paper AutoGen: Enabling Next-Gen LLM Applications via Multi-Agent Conversation, by Wu et al.) shows one way to do this using LangGraph.

The resulting graph will look something like the following diagram:

Before we get started, a quick note: this and other multi-agent notebooks are designed to show how you can implement certain design patterns in LangGraph. If the pattern suits your needs, we recommend combining it with some of the other fundamental patterns described elsewhere in the docs for best performance.

code

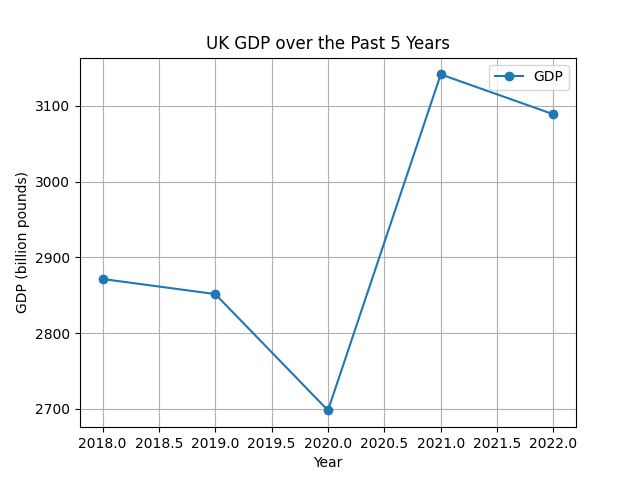

```python
import os
import re
import uuid
from typing import Annotated, Literal

from dotenv import load_dotenv
from langchain_community.tools.tavily_search import TavilySearchResults
from langchain_core.messages import BaseMessage, HumanMessage
from langchain_core.tools import tool
from langchain_experimental.utilities import PythonREPL
from langchain_openai import ChatOpenAI
from langgraph.graph import END, START, MessagesState, StateGraph
from langgraph.prebuilt import create_react_agent
from langgraph.types import Command

load_dotenv(".env")

# Single output path shared by the tool and the chart agent's prompt.
# (The original listing used uk_gdp.png in the tool and usa_gdp.png in the prompt.)
CHART_PATH = "/home/song/workspace/me/LangGraphChatBot/08_multi_agent_network/uk_gdp.png"

tavily_tool = TavilySearchResults(max_results=5)

# Warning: This executes code locally, which can be unsafe when not sandboxed
repl = PythonREPL()


@tool
def python_repl_tool(
    code: Annotated[str, "The python code to execute to generate your chart."],
):
    """Use this to execute python code. If you want to see the output of a value,
    you should print it out with `print(...)`. This is visible to the user."""
    # Extract the Python code from the code block
    match = re.search(r'```python\n(.*?)\n```', code, re.DOTALL)
    if match:
        code = match.group(1)
    # Replace plt.show() with plt.savefig() so the chart is written to disk
    code = code.replace("plt.show()", f'plt.savefig("{CHART_PATH}")')
    print(f"Executing code: {code}")
    try:
        result = repl.run(code)
        print(f"Execution result: {result}")
    except BaseException as e:
        error_msg = f"Failed to execute. Error: {repr(e)}"
        print(error_msg)
        return error_msg
    result_str = f"Successfully executed:\n```python\n{code}\n```\nStdout: {result}"
    return (
        result_str + "\n\nIf you have completed all tasks, respond with FINAL ANSWER."
    )


def make_system_prompt(suffix: str) -> str:
    return (
        "You are a helpful AI assistant, collaborating with other assistants."
        " Use the provided tools to progress towards answering the question."
        " If you are unable to fully answer, that's OK, another assistant with different tools"
        " will help where you left off. Execute what you can to make progress."
        " If you or any of the other assistants have the final answer or deliverable,"
        " prefix your response with FINAL ANSWER so the team knows to stop."
        f"\n{suffix}"
    )


# Model configuration dictionary.
# API keys are read from the environment instead of being hard-coded; the
# variable names for the first three entries are placeholders, adjust as needed.
MODEL_CONFIGS = {
    "openai": {
        "base_url": "https://nangeai.top/v1",
        "api_key": os.getenv("OPENAI_API_KEY", ""),
        "chat_model": "gpt-4o-mini",
        "embedding_model": "text-embedding-3-small",
    },
    "oneapi": {
        "base_url": "http://139.224.72.218:3000/v1",
        "api_key": os.getenv("ONEAPI_API_KEY", ""),
        "chat_model": "qwen-max",
        "embedding_model": "text-embedding-v1",
    },
    "qwen": {
        "base_url": "https://dashscope.aliyuncs.com/compatible-mode/v1",
        "api_key": os.getenv("DASHSCOPE_API_KEY", ""),
        "chat_model": "qwen-max",
        "embedding_model": "text-embedding-v1",
    },
    "ollama": {
        "base_url": "http://localhost:11434/v1",
        "api_key": "ollama",
        "chat_model": "deepseek-r1:14b",
        "embedding_model": "nomic-embed-text:latest",
    },
    "siliconflow": {
        "base_url": os.getenv("SILICONFLOW_API_URL", "https://api.siliconflow.cn/v1"),
        "api_key": os.getenv("SILICONFLOW_API_KEY", ""),
        "chat_model": os.getenv("SILICONFLOW_API_MODEL", "Qwen/Qwen2.5-7B-Instruct"),
        "embedding_model": os.getenv("SILICONFLOW_API_EMBEDDING_MODEL"),
    },
    "zhipu": {
        "base_url": os.getenv("ZHIPU_API_URL", "https://api.siliconflow.cn/v1"),
        "api_key": os.getenv("ZHIPU_API_KEY", ""),
        "chat_model": os.getenv("ZHIPU_API_MODEL", "Qwen/Qwen2.5-7B-Instruct"),
        "embedding_model": os.getenv("ZHIPU_API_EMBEDDING_MODEL"),
    },
}

DEFAULT_LLM_TYPE = "zhipu"
DEFAULT_TEMPERATURE = 0

model_config = MODEL_CONFIGS[DEFAULT_LLM_TYPE]

# llm = ChatOpenAI(model="gpt-3.5-turbo")
llm = ChatOpenAI(
    base_url=model_config["base_url"],
    api_key=model_config["api_key"],
    model=model_config["chat_model"],
    temperature=DEFAULT_TEMPERATURE,
    timeout=30,  # request timeout, in seconds
    max_retries=2,  # number of retries on failure
)
# Alternative:
# from langchain_anthropic import ChatAnthropic
# llm = ChatAnthropic(model="claude-3-5-sonnet-latest")


def get_next_node(last_message: BaseMessage, goto: str):
    if "FINAL ANSWER" in last_message.content or "Final ANSWER" in last_message.content:
        # Any agent decided the work is done
        return END
    return goto


# Research agent and node
research_agent = create_react_agent(
    llm,
    tools=[tavily_tool],
    prompt=make_system_prompt(
        "You can only do research. You are working with a chart generator colleague."
    ),
)


def research_node(
    state: MessagesState,
) -> Command[Literal["chart_generator", END]]:
    result = research_agent.invoke(state)
    goto = get_next_node(result["messages"][-1], "chart_generator")
    # wrap in a human message, as not all providers allow
    # AI message at the last position of the input messages list
    result["messages"][-1] = HumanMessage(
        content=result["messages"][-1].content, name="researcher"
    )
    return Command(
        update={
            # share internal message history of research agent with other agents
            "messages": result["messages"],
        },
        goto=goto,
    )


# Chart generator agent and node
# NOTE: THIS PERFORMS ARBITRARY CODE EXECUTION, WHICH CAN BE UNSAFE WHEN NOT SANDBOXED
chart_agent = create_react_agent(
    llm,
    [python_repl_tool],
    prompt=make_system_prompt(
        f"You can only generate charts, and you must save them to '{CHART_PATH}'."
        " You are working with a researcher colleague."
    ),
)


def chart_node(state: MessagesState) -> Command[Literal["researcher", END]]:
    result = chart_agent.invoke(state)
    # Inspect the generated code content
    last_message = result["messages"][-1].content
    # print(f"Generated code in chart agent: {last_message}")
    goto = get_next_node(result["messages"][-1], "researcher")
    # wrap in a human message, as not all providers allow
    # AI message at the last position of the input messages list
    result["messages"][-1] = HumanMessage(
        content=result["messages"][-1].content, name="chart_generator"
    )
    return Command(
        update={
            # share internal message history of chart agent with other agents
            "messages": result["messages"],
        },
        goto=goto,
    )


workflow = StateGraph(MessagesState)
workflow.add_node("researcher", research_node)
workflow.add_node("chart_generator", chart_node)
workflow.add_edge(START, "researcher")
graph = workflow.compile()

config = {"configurable": {"thread_id": str(uuid.uuid4())}}

events = graph.stream(
    {
        "messages": [
            (
                "user",
                "First, get the UK's GDP over the past 5 years, then make a line chart of it. "
                "Once you make the chart, finish.",
            )
        ],
    },
    # Maximum number of steps to take in the graph
    # {"recursion_limit": 150},
    config=config,
    stream_mode="updates",
)
for s in events:
    last_message = next(iter(s.values()))["messages"][-1]
    last_message.pretty_print()
    print("----")
```
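The stop check in `get_next_node` matches only two exact spellings of the marker and assumes the message content is a plain string. Below is a slightly more tolerant variant, offered as a sketch rather than as part of the original tutorial. The helper names `message_text` and `next_node` are made up for this example, `END` is a local stand-in so the snippet runs without LangGraph installed, and the handling of list-style content assumes blocks are either strings or dicts with a `"text"` key.

```python
from typing import Union

# Local stand-in for langgraph.graph.END so this sketch has no dependencies.
END = "__end__"


def message_text(content: Union[str, list]) -> str:
    """Flatten message content to plain text.

    Assumption: list-style content holds strings or dicts with a "text" key;
    any other block type is ignored.
    """
    if isinstance(content, str):
        return content
    parts = []
    for block in content:
        if isinstance(block, str):
            parts.append(block)
        elif isinstance(block, dict) and isinstance(block.get("text"), str):
            parts.append(block["text"])
    return "".join(parts)


def next_node(content: Union[str, list], goto: str) -> str:
    """Return END if the stop marker appears in any casing, otherwise `goto`."""
    if "FINAL ANSWER" in message_text(content).upper():
        return END
    return goto
```

To try it in the graph above, the body of `get_next_node` could call `next_node(last_message.content, goto)`. Note that a case-insensitive match also fires when an agent merely mentions the phrase in passing, so a stricter prefix check may suit some prompts better.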
