Build a Customer Support Bot

https://langchain-ai.github.io/langgraph/tutorials/customer-support/customer-support/

Customer support bots can free up teams' time by handling routine issues, but it can be hard to build a bot that reliably handles diverse tasks in a way that doesn't leave the user pulling their hair out.

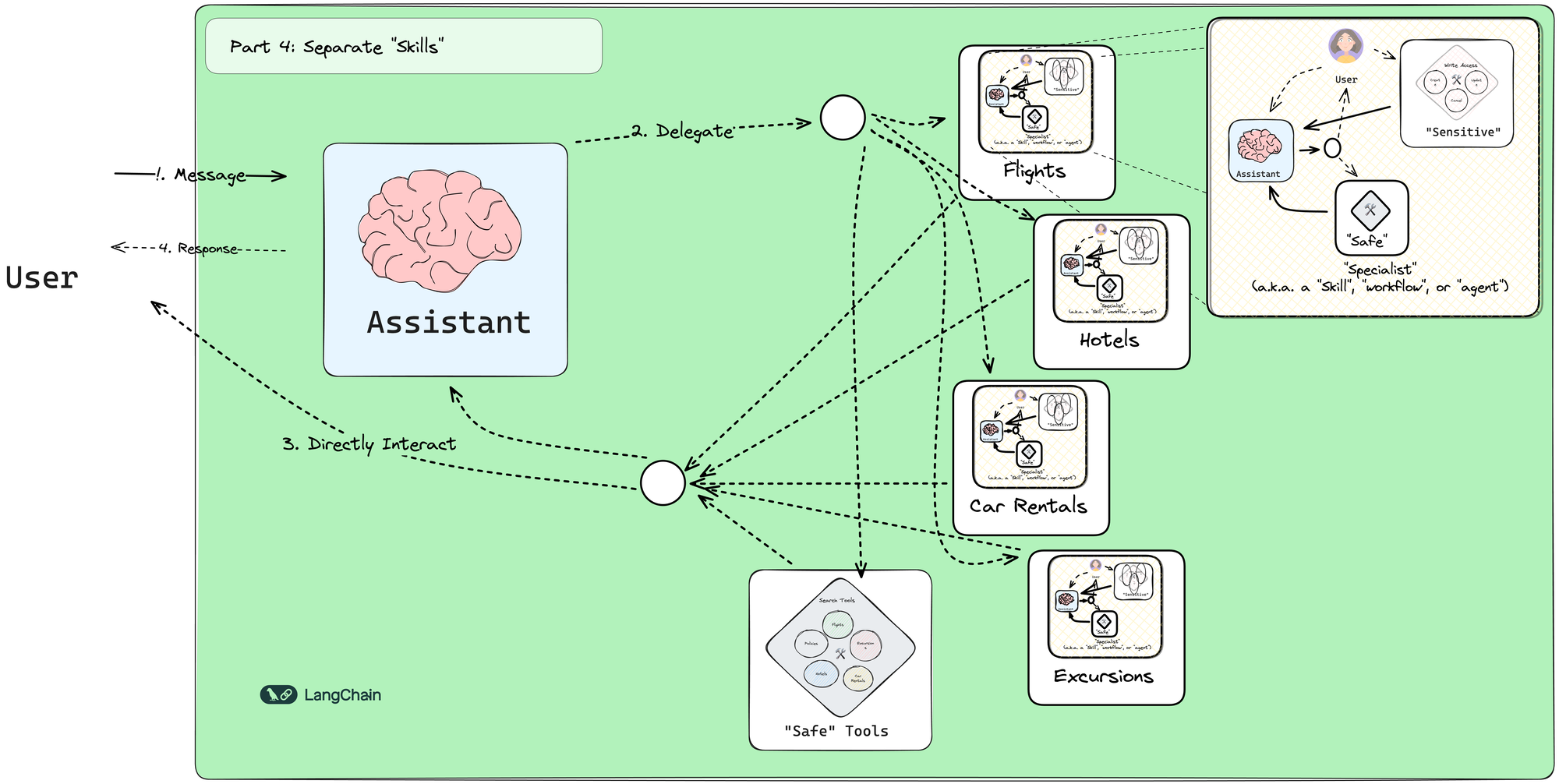

In this tutorial, you will build a customer support bot for an airline to help users research and make travel arrangements. You'll learn to use LangGraph's interrupts, checkpointers, and more complex state to organize your assistant's tools and manage a user's flight bookings, hotel reservations, car rentals, and excursions. This tutorial assumes you are familiar with the concepts presented in the LangGraph introductory tutorial.
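One piece of that more complex state is a dialog stack that records which assistant currently has control. The tested code in this post implements it with the `update_dialog_stack` reducer. The sketch below repeats that function on its own, outside LangGraph, to show how successive updates push and pop entries. The sequence of updates is made up for illustration.

```python
from typing import Optional


def update_dialog_stack(left: list[str], right: Optional[str]) -> list[str]:
    """Push or pop the dialog stack (same logic as the reducer in the tested code)."""
    if right is None:
        return left  # no update: keep the stack unchanged
    if right == "pop":
        return left[:-1]  # leave the current assistant
    return left + [right]  # enter a specialized assistant


# Illustrative sequence of updates (an assumption, not taken from a real run).
stack: list[str] = []
for update in ["update_flight", None, "pop", "book_hotel"]:
    stack = update_dialog_stack(stack, update)
    print(repr(update), "->", stack)
```

When a node returns a value for `dialog_state`, LangGraph combines it with the stored value through this reducer, so a node can return a single name to push or the string `"pop"` to go back.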

By the end, you'll have built a working bot and gained an understanding of LangGraph's key concepts and architectures. You'll be able to apply these design patterns to your other AI projects.

Your final chat bot will look something like the following diagram:

Let's start!

Tutorial

https://www.bilibili.com/video/BV124sMe9E8N?spm_id_from=333.788.videopod.sections&vd_source=41b9bfb5ef0a4175a4cb4170a475f680

https://github.com/langchain-ai/langgraph/blob/main/docs/docs/tutorials/customer-support/customer-support.ipynb

Code Tested

https://github.com/fanqingsong/LangGraphChatBot/tree/main/06_customer_support/disections

import uuid
from datetime import datetime
from pprint import pprint
from typing import Annotated, Callable, Literal, Optional

# from langchain_anthropic import ChatAnthropic
# from langchain_community.tools.tavily_search import TavilySearchResults
from langchain_core.messages import ToolMessage
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.runnables import Runnable, RunnableConfig
from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import END, START, StateGraph
from langgraph.graph.message import AnyMessage, add_messages
from langgraph.prebuilt import tools_condition
from pydantic import BaseModel, Field
from typing_extensions import TypedDict

# Project-local modules from the tested repository linked above.
# The star imports are expected to provide `llm`, the booking tools, and
# `create_tool_node_with_fallback`.
from db.sqlite_db import SQLiteTool
# from db.vector_db import *
from LLM import *
from tools import *
from utils import *
from utils.tools import _print_event


def update_dialog_stack(left: list[str], right: Optional[str]) -> list[str]:
    """Push or pop the state."""
    if right is None:
        return left
    if right == "pop":
        return left[:-1]
    return left + [right]


class State(TypedDict):
    messages: Annotated[list[AnyMessage], add_messages]
    user_info: str
    dialog_state: Annotated[
        list[
            Literal[
                "assistant",
                "update_flight",
                "book_car_rental",
                "book_hotel",
                "book_excursion",
            ]
        ],
        update_dialog_stack,
    ]


class Assistant:
    def __init__(self, runnable: Runnable):
        self.runnable = runnable

    def __call__(self, state: State, config: RunnableConfig):
        while True:
            result = self.runnable.invoke(state)

            # Re-prompt if the model returned neither a tool call nor any text.
            if not result.tool_calls and (
                not result.content
                or isinstance(result.content, list)
                and not result.content[0].get("text")
            ):
                messages = state["messages"] + [("user", "Respond with a real output.")]
                state = {**state, "messages": messages}
            else:
                break
        return {"messages": result}


class CompleteOrEscalate(BaseModel):
    """A tool to mark the current task as completed and/or to escalate control of the dialog to the main assistant,
    who can re-route the dialog based on the user's needs."""

    cancel: bool = True
    reason: str

    class Config:
        json_schema_extra = {
            "example": {
                "cancel": True,
                "reason": "User changed their mind about the current task.",
            },
            "example 2": {
                "cancel": True,
                "reason": "I have fully completed the task.",
            },
            "example 3": {
                "cancel": False,
                "reason": "I need to search the user's emails or calendar for more information.",
            },
        }


# Flight booking assistant

flight_booking_prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You are a specialized assistant for handling flight updates. "
            " The primary assistant delegates work to you whenever the user needs help updating their bookings. "
            "Confirm the updated flight details with the customer and inform them of any additional fees. "
            " When searching, be persistent. Expand your query bounds if the first search returns no results. "
            "If you need more information or the customer changes their mind, escalate the task back to the main assistant."
            " Remember that a booking isn't completed until after the relevant tool has successfully been used."
            "\n\nCurrent user flight information:\n<Flights>\n{user_info}\n</Flights>"
            "\nCurrent time: {time}."
            "\n\nIf the user needs help, and none of your tools are appropriate for it, then"
            ' "CompleteOrEscalate" the dialog to the host assistant. Do not waste the user\'s time. Do not make up invalid tools or functions.',
        ),
        ("placeholder", "{messages}"),
    ]
).partial(time=datetime.now)

update_flight_safe_tools = [search_flights]
update_flight_sensitive_tools = [update_ticket_to_new_flight, cancel_ticket]
update_flight_tools = update_flight_safe_tools + update_flight_sensitive_tools
update_flight_runnable = flight_booking_prompt | llm.bind_tools(
    update_flight_tools + [CompleteOrEscalate]
)

# Hotel Booking Assistant

book_hotel_prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You are a specialized assistant for handling hotel bookings. "
            "The primary assistant delegates work to you whenever the user needs help booking a hotel. "
            "Search for available hotels based on the user's preferences and confirm the booking details with the customer. "
            " When searching, be persistent. Expand your query bounds if the first search returns no results. "
            "If you need more information or the customer changes their mind, escalate the task back to the main assistant."
            " Remember that a booking isn't completed until after the relevant tool has successfully been used."
            "\nCurrent time: {time}."
            '\n\nIf the user needs help, and none of your tools are appropriate for it, then "CompleteOrEscalate" the dialog to the host assistant.'
            " Do not waste the user's time. Do not make up invalid tools or functions."
            "\n\nSome examples for which you should CompleteOrEscalate:\n"
            " - 'what's the weather like this time of year?'\n"
            " - 'nevermind i think I'll book separately'\n"
            " - 'i need to figure out transportation while i'm there'\n"
            " - 'Oh wait i haven't booked my flight yet i'll do that first'\n"
            " - 'Hotel booking confirmed'",
        ),
        ("placeholder", "{messages}"),
    ]
).partial(time=datetime.now)

book_hotel_safe_tools = [search_hotels]
book_hotel_sensitive_tools = [book_hotel, update_hotel, cancel_hotel]
book_hotel_tools = book_hotel_safe_tools + book_hotel_sensitive_tools
book_hotel_runnable = book_hotel_prompt | llm.bind_tools(
    book_hotel_tools + [CompleteOrEscalate]
)

# Car Rental Assistant

book_car_rental_prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You are a specialized assistant for handling car rental bookings. "
            "The primary assistant delegates work to you whenever the user needs help booking a car rental. "
            "Search for available car rentals based on the user's preferences and confirm the booking details with the customer. "
            " When searching, be persistent. Expand your query bounds if the first search returns no results. "
            "If you need more information or the customer changes their mind, escalate the task back to the main assistant."
            " Remember that a booking isn't completed until after the relevant tool has successfully been used."
            "\nCurrent time: {time}."
            "\n\nIf the user needs help, and none of your tools are appropriate for it, then "
            '"CompleteOrEscalate" the dialog to the host assistant. Do not waste the user\'s time. Do not make up invalid tools or functions.'
            "\n\nSome examples for which you should CompleteOrEscalate:\n"
            " - 'what's the weather like this time of year?'\n"
            " - 'What flights are available?'\n"
            " - 'nevermind i think I'll book separately'\n"
            " - 'Oh wait i haven't booked my flight yet i'll do that first'\n"
            " - 'Car rental booking confirmed'",
        ),
        ("placeholder", "{messages}"),
    ]
).partial(time=datetime.now)

book_car_rental_safe_tools = [search_car_rentals]
book_car_rental_sensitive_tools = [
    book_car_rental,
    update_car_rental,
    cancel_car_rental,
]
book_car_rental_tools = book_car_rental_safe_tools + book_car_rental_sensitive_tools
book_car_rental_runnable = book_car_rental_prompt | llm.bind_tools(
    book_car_rental_tools + [CompleteOrEscalate]
)

# Excursion Assistant

book_excursion_prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You are a specialized assistant for handling trip recommendations. "
            "The primary assistant delegates work to you whenever the user needs help booking a recommended trip. "
            "Search for available trip recommendations based on the user's preferences and confirm the booking details with the customer. "
            "If you need more information or the customer changes their mind, escalate the task back to the main assistant."
            " When searching, be persistent. Expand your query bounds if the first search returns no results. "
            " Remember that a booking isn't completed until after the relevant tool has successfully been used."
            "\nCurrent time: {time}."
            '\n\nIf the user needs help, and none of your tools are appropriate for it, then "CompleteOrEscalate" the dialog to the host assistant. Do not waste the user\'s time. Do not make up invalid tools or functions.'
            "\n\nSome examples for which you should CompleteOrEscalate:\n"
            " - 'nevermind i think I'll book separately'\n"
            " - 'i need to figure out transportation while i'm there'\n"
            " - 'Oh wait i haven't booked my flight yet i'll do that first'\n"
            " - 'Excursion booking confirmed!'",
        ),
        ("placeholder", "{messages}"),
    ]
).partial(time=datetime.now)

book_excursion_safe_tools = [search_trip_recommendations]
book_excursion_sensitive_tools = [book_excursion, update_excursion, cancel_excursion]
book_excursion_tools = book_excursion_safe_tools + book_excursion_sensitive_tools
book_excursion_runnable = book_excursion_prompt | llm.bind_tools(
    book_excursion_tools + [CompleteOrEscalate]
)


# Primary Assistant
class ToFlightBookingAssistant(BaseModel):
    """Transfers work to a specialized assistant to handle flight updates and cancellations."""

    request: str = Field(
        description="Any necessary followup questions the update flight assistant should clarify before proceeding."
    )


class ToBookCarRental(BaseModel):
    """Transfers work to a specialized assistant to handle car rental bookings."""

    location: str = Field(
        description="The location where the user wants to rent a car."
    )
    start_date: str = Field(description="The start date of the car rental.")
    end_date: str = Field(description="The end date of the car rental.")
    request: str = Field(
        description="Any additional information or requests from the user regarding the car rental."
    )

    class Config:
        json_schema_extra = {
            "example": {
                "location": "Basel",
                "start_date": "2023-07-01",
                "end_date": "2023-07-05",
                "request": "I need a compact car with automatic transmission.",
            }
        }


class ToHotelBookingAssistant(BaseModel):
    """Transfer work to a specialized assistant to handle hotel bookings."""

    location: str = Field(
        description="The location where the user wants to book a hotel."
    )
    checkin_date: str = Field(description="The check-in date for the hotel.")
    checkout_date: str = Field(description="The check-out date for the hotel.")
    request: str = Field(
        description="Any additional information or requests from the user regarding the hotel booking."
    )

    class Config:
        json_schema_extra = {
            "example": {
                "location": "Zurich",
                "checkin_date": "2023-08-15",
                "checkout_date": "2023-08-20",
                "request": "I prefer a hotel near the city center with a room that has a view.",
            }
        }


class ToBookExcursion(BaseModel):
    """Transfers work to a specialized assistant to handle trip recommendation and other excursion bookings."""

    location: str = Field(
        description="The location where the user wants to book a recommended trip."
    )
    request: str = Field(
        description="Any additional information or requests from the user regarding the trip recommendation."
    )

    class Config:
        json_schema_extra = {
            "example": {
                "location": "Lucerne",
                "request": "The user is interested in outdoor activities and scenic views.",
            }
        }


# The top-level assistant performs general Q&A and delegates specialized tasks to other assistants.
# The task delegation is a simple form of semantic routing / does simple intent detection
# llm = ChatAnthropic(model="claude-3-haiku-20240307")
# llm = ChatAnthropic(model="claude-3-sonnet-20240229", temperature=1)

primary_assistant_prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You are a helpful customer support assistant for Swiss Airlines. "
            "Your primary role is to search for flight information and company policies to answer customer queries. "
            "If a customer requests to update or cancel a flight, book a car rental, book a hotel, or get trip recommendations, "
            "delegate the task to the appropriate specialized assistant by invoking the corresponding tool. You are not able to make these types of changes yourself."
            " Only the specialized assistants are given permission to do this for the user."
            "The user is not aware of the different specialized assistants, so do not mention them; just quietly delegate through function calls. "
            "Provide detailed information to the customer, and always double-check the sqlite_db before concluding that information is unavailable. "
            " When searching, be persistent. Expand your query bounds if the first search returns no results. "
            " If a search comes up empty, expand your search before giving up."
            "\n\nCurrent user flight information:\n<Flights>\n{user_info}\n</Flights>"
            "\nCurrent time: {time}.",
        ),
        ("placeholder", "{messages}"),
    ]
).partial(time=datetime.now)

primary_assistant_tools = [
    # TavilySearchResults(max_results=1),
    search_flights,
    # lookup_policy,
]
assistant_runnable = primary_assistant_prompt | llm.bind_tools(
    primary_assistant_tools
    + [
        ToFlightBookingAssistant,
        ToBookCarRental,
        ToHotelBookingAssistant,
        ToBookExcursion,
    ]
)


def create_entry_node(assistant_name: str, new_dialog_state: str) -> Callable:
    def entry_node(state: State) -> dict:
        tool_call_id = state["messages"][-1].tool_calls[0]["id"]
        return {
            "messages": [
                ToolMessage(
                    content=f"The assistant is now the {assistant_name}. Reflect on the above conversation between the host assistant and the user."
                    f" The user's intent is unsatisfied. Use the provided tools to assist the user. Remember, you are {assistant_name},"
                    " and the booking, update, or other action is not complete until after you have successfully invoked the appropriate tool."
                    " If the user changes their mind or needs help for other tasks, call the CompleteOrEscalate function to let the primary host assistant take control."
                    " Do not mention who you are - just act as the proxy for the assistant.",
                    tool_call_id=tool_call_id,
                )
            ],
            "dialog_state": new_dialog_state,
        }

    return entry_node


builder = StateGraph(State)


def user_info(state: State):
    return {"user_info": fetch_user_flight_information.invoke({})}


builder.add_node("fetch_user_info", user_info)
builder.add_edge(START, "fetch_user_info")

# Flight booking assistant
builder.add_node(
    "enter_update_flight",
    create_entry_node("Flight Updates & Booking Assistant", "update_flight"),
)
builder.add_node("update_flight", Assistant(update_flight_runnable))
builder.add_edge("enter_update_flight", "update_flight")
builder.add_node(
    "update_flight_sensitive_tools",
    create_tool_node_with_fallback(update_flight_sensitive_tools),
)
builder.add_node(
    "update_flight_safe_tools",
    create_tool_node_with_fallback(update_flight_safe_tools),
)


def route_update_flight(
    state: State,
):
    route = tools_condition(state)
    if route == END:
        return END
    tool_calls = state["messages"][-1].tool_calls
    did_cancel = any(tc["name"] == CompleteOrEscalate.__name__ for tc in tool_calls)
    if did_cancel:
        return "leave_skill"
    safe_toolnames = [t.name for t in update_flight_safe_tools]
    if all(tc["name"] in safe_toolnames for tc in tool_calls):
        return "update_flight_safe_tools"
    return "update_flight_sensitive_tools"


builder.add_edge("update_flight_sensitive_tools", "update_flight")
builder.add_edge("update_flight_safe_tools", "update_flight")
builder.add_conditional_edges(
    "update_flight",
    route_update_flight,
    ["update_flight_sensitive_tools", "update_flight_safe_tools", "leave_skill", END],
)


# This node will be shared for exiting all specialized assistants
def pop_dialog_state(state: State) -> dict:
    """Pop the dialog stack and return to the main assistant.

    This lets the full graph explicitly track the dialog flow and delegate control
    to specific sub-graphs.
    """
    messages = []
    if state["messages"][-1].tool_calls:
        # Note: Doesn't currently handle the edge case where the llm performs parallel tool calls
        messages.append(
            ToolMessage(
                content="Resuming dialog with the host assistant. Please reflect on the past conversation and assist the user as needed.",
                tool_call_id=state["messages"][-1].tool_calls[0]["id"],
            )
        )
    return {
        "dialog_state": "pop",
        "messages": messages,
    }


builder.add_node("leave_skill", pop_dialog_state)
builder.add_edge("leave_skill", "primary_assistant")

# Car rental assistant
builder.add_node(
    "enter_book_car_rental",
    create_entry_node("Car Rental Assistant", "book_car_rental"),
)
builder.add_node("book_car_rental", Assistant(book_car_rental_runnable))
builder.add_edge("enter_book_car_rental", "book_car_rental")
builder.add_node(
    "book_car_rental_safe_tools",
    create_tool_node_with_fallback(book_car_rental_safe_tools),
)
builder.add_node(
    "book_car_rental_sensitive_tools",
    create_tool_node_with_fallback(book_car_rental_sensitive_tools),
)


def route_book_car_rental(
    state: State,
):
    route = tools_condition(state)
    if route == END:
        return END
    tool_calls = state["messages"][-1].tool_calls
    did_cancel = any(tc["name"] == CompleteOrEscalate.__name__ for tc in tool_calls)
    if did_cancel:
        return "leave_skill"
    safe_toolnames = [t.name for t in book_car_rental_safe_tools]
    if all(tc["name"] in safe_toolnames for tc in tool_calls):
        return "book_car_rental_safe_tools"
    return "book_car_rental_sensitive_tools"


builder.add_edge("book_car_rental_sensitive_tools", "book_car_rental")
builder.add_edge("book_car_rental_safe_tools", "book_car_rental")
builder.add_conditional_edges(
    "book_car_rental",
    route_book_car_rental,
    [
        "book_car_rental_safe_tools",
        "book_car_rental_sensitive_tools",
        "leave_skill",
        END,
    ],
)

# Hotel booking assistant
builder.add_node(
    "enter_book_hotel", create_entry_node("Hotel Booking Assistant", "book_hotel")
)
builder.add_node("book_hotel", Assistant(book_hotel_runnable))
builder.add_edge("enter_book_hotel", "book_hotel")
builder.add_node(
    "book_hotel_safe_tools",
    create_tool_node_with_fallback(book_hotel_safe_tools),
)
builder.add_node(
    "book_hotel_sensitive_tools",
    create_tool_node_with_fallback(book_hotel_sensitive_tools),
)


def route_book_hotel(
    state: State,
):
    route = tools_condition(state)
    if route == END:
        return END
    tool_calls = state["messages"][-1].tool_calls
    did_cancel = any(tc["name"] == CompleteOrEscalate.__name__ for tc in tool_calls)
    if did_cancel:
        return "leave_skill"
    tool_names = [t.name for t in book_hotel_safe_tools]
    if all(tc["name"] in tool_names for tc in tool_calls):
        return "book_hotel_safe_tools"
    return "book_hotel_sensitive_tools"


builder.add_edge("book_hotel_sensitive_tools", "book_hotel")
builder.add_edge("book_hotel_safe_tools", "book_hotel")
builder.add_conditional_edges(
    "book_hotel",
    route_book_hotel,
    ["leave_skill", "book_hotel_safe_tools", "book_hotel_sensitive_tools", END],
)

# Excursion assistant
builder.add_node(
    "enter_book_excursion",
    create_entry_node("Trip Recommendation Assistant", "book_excursion"),
)
builder.add_node("book_excursion", Assistant(book_excursion_runnable))
builder.add_edge("enter_book_excursion", "book_excursion")
builder.add_node(
    "book_excursion_safe_tools",
    create_tool_node_with_fallback(book_excursion_safe_tools),
)
builder.add_node(
    "book_excursion_sensitive_tools",
    create_tool_node_with_fallback(book_excursion_sensitive_tools),
)


def route_book_excursion(
    state: State,
):
    route = tools_condition(state)
    if route == END:
        return END
    tool_calls = state["messages"][-1].tool_calls
    did_cancel = any(tc["name"] == CompleteOrEscalate.__name__ for tc in tool_calls)
    if did_cancel:
        return "leave_skill"
    tool_names = [t.name for t in book_excursion_safe_tools]
    if all(tc["name"] in tool_names for tc in tool_calls):
        return "book_excursion_safe_tools"
    return "book_excursion_sensitive_tools"


builder.add_edge("book_excursion_sensitive_tools", "book_excursion")
builder.add_edge("book_excursion_safe_tools", "book_excursion")
builder.add_conditional_edges(
    "book_excursion",
    route_book_excursion,
    ["book_excursion_safe_tools", "book_excursion_sensitive_tools", "leave_skill", END],
)

# Primary assistant
builder.add_node("primary_assistant", Assistant(assistant_runnable))
builder.add_node(
    "primary_assistant_tools", create_tool_node_with_fallback(primary_assistant_tools)
)


def route_primary_assistant(
    state: State,
):
    route = tools_condition(state)
    if route == END:
        return END
    tool_calls = state["messages"][-1].tool_calls
    if tool_calls:
        # Default to the primary assistant's own tools. If the first tool call is a
        # delegation request, route to the matching specialized assistant instead.
        router_target = "primary_assistant_tools"
        if tool_calls[0]["name"] == ToFlightBookingAssistant.__name__:
            router_target = "enter_update_flight"
        elif tool_calls[0]["name"] == ToBookCarRental.__name__:
            router_target = "enter_book_car_rental"
        elif tool_calls[0]["name"] == ToHotelBookingAssistant.__name__:
            router_target = "enter_book_hotel"
        elif tool_calls[0]["name"] == ToBookExcursion.__name__:
            router_target = "enter_book_excursion"
        print(f"==== router_target = {router_target} ======")
        return router_target
    raise ValueError("Invalid route")


# The assistant can route to one of the delegated assistants,
# directly use a tool, or directly respond to the user
builder.add_conditional_edges(
    "primary_assistant",
    route_primary_assistant,
    [
        "enter_update_flight",
        "enter_book_car_rental",
        "enter_book_hotel",
        "enter_book_excursion",
        "primary_assistant_tools",
        END,
    ],
)
builder.add_edge("primary_assistant_tools", "primary_assistant")


# Each delegated workflow can directly respond to the user
# When the user responds, we want to return to the currently active workflow
def route_to_workflow(
    state: State,
) -> Literal[
    "primary_assistant",
    "update_flight",
    "book_car_rental",
    "book_hotel",
    "book_excursion",
]:
    """If we are in a delegated state, route directly to the appropriate assistant."""
    dialog_state = state.get("dialog_state")
    if not dialog_state:
        return "primary_assistant"
    return dialog_state[-1]


builder.add_conditional_edges("fetch_user_info", route_to_workflow)

# Compile graph
memory = MemorySaver()
part_4_graph = builder.compile(
    checkpointer=memory,
    # Let the user approve or deny the use of sensitive tools
    interrupt_before=[
        "update_flight_sensitive_tools",
        "book_car_rental_sensitive_tools",
        "book_hotel_sensitive_tools",
        "book_excursion_sensitive_tools",
    ],
)

# Update with the backup file so we can restart from the original place in each section
SQLiteTool().update_dates()
thread_id = str(uuid.uuid4())

config = {
    "configurable": {
        # The passenger_id is used in our flight tools to
        # fetch the user's flight information
        "passenger_id": "3442 587242",
        # Checkpoints are accessed by thread_id
        "thread_id": thread_id,
    }
}

tutorial_questions = [
    "Hi there, what time is my flight?",
    "Am i allowed to update my flight to something sooner? I want to leave later today.",
    "Update my flight to sometime next week then",
    "The next available option is great",
    "what about lodging and transportation?",
    "Yeah i think i'd like an affordable hotel for my week-long stay (7 days). And I'll want to rent a car.",
    "OK could you place a reservation for your recommended hotel? It sounds nice.",
    "yes go ahead and book anything that's moderate expense and has availability.",
    "Now for a car, what are my options?",
    "Awesome let's just get the cheapest option. Go ahead and book for 7 days",
    "Cool so now what recommendations do you have on excursions?",
    "Are they available while I'm there?",
    "interesting - i like the museums, what options are there? ",
    "OK great pick one and book it for my second day there.",
]

_printed = set()
# We can reuse the tutorial questions from part 1 to see how it does.
# for question in tutorial_questions:
while True:
    try:
        user = input("User (q/Q to quit): ")
    except EOFError:
        # No more input (for example, a non-interactive run): quit instead of
        # looping forever on an empty string.
        user = "q"
    print(f"User (q/Q to quit): {user}")
    if user in {"q", "Q"}:
        print("AI: Byebye")
        break
    question = user
    if question == "":
        continue

    events = part_4_graph.stream(
        {"messages": ("user", question)}, config, stream_mode="values"
    )
    for event in events:
        _print_event(event, _printed)
    snapshot = part_4_graph.get_state(config)
    pprint(snapshot)
    while snapshot.next:
        # We have an interrupt! The agent is trying to use a tool, and the user can approve or deny it
        # Note: This code is all outside of your graph. Typically, you would stream the output to a UI.
        # Then, you would have the frontend trigger a new run via an API call when the user has provided input.
        try:
            user_input = input(
                "Do you approve of the above actions? Type 'y' to continue;"
                " otherwise, explain your requested changes.\n\n"
            )
        except EOFError:
            # Non-interactive run: approve by default.
            user_input = "y"
        if user_input.strip() == "y":
            # Just continue
            result = part_4_graph.invoke(
                None,
                config,
            )
        else:
            # Satisfy the tool invocation by
            # providing instructions on the requested changes / change of mind.
            # Read the pending tool call from the current snapshot so the id is
            # still correct after an earlier approval in this loop.
            pending_message = snapshot.values["messages"][-1]
            result = part_4_graph.invoke(
                {
                    "messages": [
                        ToolMessage(
                            tool_call_id=pending_message.tool_calls[0]["id"],
                            content=f"API call denied by user. Reasoning: '{user_input}'. Continue assisting, accounting for the user's input.",
                        )
                    ]
                },
                config,
            )
        snapshot = part_4_graph.get_state(config)
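The four `route_*` functions for the specialized assistants above share one decision pattern: no tool call ends the turn, a `CompleteOrEscalate` call returns control to the primary assistant, a turn made up only of safe tools runs immediately, and anything else goes to the sensitive-tools node, which the compiled graph pauses before via `interrupt_before`. The following is a minimal plain-Python sketch of that decision, without LangGraph. The function names `route_tool_calls` and `needs_approval` and the short route labels are hypothetical, chosen for illustration only.

```python
# Tool names follow the flight assistant in this post; the route labels
# ("end", "leave_skill", ...) are simplified stand-ins for the graph's node names.
SAFE_TOOLS = {"search_flights"}
ESCALATE = "CompleteOrEscalate"


def route_tool_calls(tool_names: list[str]) -> str:
    """Pick the next step for one assistant turn, given the names of its tool calls."""
    if not tool_names:
        return "end"  # plain reply: hand the turn back to the user
    if ESCALATE in tool_names:
        return "leave_skill"  # pop the dialog stack, return to the primary assistant
    if all(name in SAFE_TOOLS for name in tool_names):
        return "safe_tools"  # read-only lookups run without confirmation
    return "sensitive_tools"  # at least one call would change a booking


def needs_approval(route: str) -> bool:
    """Only the sensitive branch is paused for user confirmation."""
    return route == "sensitive_tools"


examples = (
    [],
    ["search_flights"],
    ["search_flights", "cancel_ticket"],
    ["CompleteOrEscalate"],
)
for calls in examples:
    route = route_tool_calls(calls)
    print(calls, "->", route, "| approval needed:", needs_approval(route))
```

Note that a turn mixing safe and sensitive calls is treated as sensitive as a whole, which matches the `all(...)` check in the route functions.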
