Deploying a K8s cluster with KubeKey, using containerd as the container runtime

Reference link: https://blog.csdn.net/weixin_40579389/article/details/128207113

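I. Introduction

KubeKey is an efficient cluster deployment tool open-sourced by the KubeSphere community. It uses Docker as the default runtime and can also work with CRI runtimes such as containerd, CRI-O, and iSula. The etcd cluster runs independently and can be deployed separately from K8s, which makes environment deployment more flexible.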

II. Resources

CPU: 4, memory: 8 GB, system disk: 100 GB

Three hosts:

192.168.30.11 k8s-master

192.168.30.12 k8s-node01

192.168.30.13 k8s-node02

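III. Preparation

1. Disable SELinux, firewalld, and swap

2. Install socat and conntrack

3. Set the environment variable export KKZONE=cn, so that KubeKey downloads from mirrors reachable in mainland China

4. Download the KubeKey package

5. Add hosts entries for name resolution and set up passwordless SSH login, including from each host to itself (passwordless login is optional)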
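The preparation steps above could be scripted roughly as follows. This is a minimal sketch, assuming a RHEL/CentOS-family host with yum and systemd (suggested by the shell prompts in this post, not stated explicitly). The package name conntrack-tools, the sed patterns, and the DRY_RUN switch are illustrative assumptions. With DRY_RUN=1 (the default here) the script only prints the commands; run it as root with DRY_RUN=0 to apply them.

```shell
# Sketch of the node preparation steps; run on every host.
# DRY_RUN=1 (default) prints each command instead of executing it.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

prepare_node() {
  # 1. Disable SELinux (now and after reboot), firewalld, and swap
  run setenforce 0
  run sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config
  run systemctl disable --now firewalld
  run swapoff -a
  # Comment out swap entries so swap stays off after a reboot
  run sed -i '/\sswap\s/ s/^[^#]/#&/' /etc/fstab
  # 2. Install socat and conntrack (package name is an assumption)
  run yum install -y socat conntrack-tools
}

prepare_node

# 3. Tell KubeKey to use the China download mirrors
export KKZONE=cn
```

Reviewing the printed commands before switching to DRY_RUN=0 is deliberate: the sed edits to /etc/selinux/config and /etc/fstab are not easily undone.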

IV. Installing KubeKey

1. Download the release from GitHub and extract it. Download command:

wget https://github.com/kubesphere/kubekey/releases/download/v3.1.5/kubekey-v3.1.5-linux-amd64.tar.gz
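After the download, the archive still has to be unpacked. A minimal sketch, assuming the release tarball contains the kk binary at its top level; the KK_VERSION variable and the kk_tarball helper are illustrative names, not part of KubeKey:

```shell
# Hypothetical helper: build the release tarball name for a KubeKey version.
KK_VERSION="${KK_VERSION:-v3.1.5}"

kk_tarball() {
  printf 'kubekey-%s-linux-amd64.tar.gz\n' "$1"
}

tarball="$(kk_tarball "$KK_VERSION")"

# Unpack only if the download is present, then make the binary executable.
if [ -f "$tarball" ]; then
  tar -zxvf "$tarball"
  chmod +x kk
else
  echo "missing $tarball; download it first" >&2
fi
```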

2. Check which K8s versions KubeKey supports

[root@kubekey ~]# ./kk version --show-supported-k8s

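3. Create the K8s cluster

a. Generate a configuration file template. The default file name is config-sample.yaml, and the default is usually fine.

./kk create config --with-kubernetes v1.30.4 # Pick the version you need. Note that for v1.30.4 the generated configuration already defaults to containerd

b. Edit the template file. My modified template is shown below; the parts I changed were highlighted in red in the original post.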

apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Cluster
metadata:
  name: sample
spec:
  hosts:
  - {name: k8s-master, address: 192.168.30.11, internalAddress: 192.168.30.11, user: root, password: "123456"}
  - {name: k8s-node01, address: 192.168.30.12, internalAddress: 192.168.30.12, user: root, password: "123456"}
  - {name: k8s-node02, address: 192.168.30.13, internalAddress: 192.168.30.13, user: root, password: "123456"}
  roleGroups:
    etcd:
    - k8s-master
    control-plane:
    - k8s-master
    worker:
    - k8s-node01
    - k8s-node02
  controlPlaneEndpoint:
    ## Internal loadbalancer for apiservers
    # internalLoadbalancer: haproxy
    domain: lb.kubesphere.local
    address: "192.168.30.11"
    port: 6443
  kubernetes:
    version: v1.30.4
    clusterName: cluster.local
    autoRenewCerts: true
    containerManager: containerd
  etcd:
    type: kubekey
  network:
    plugin: calico
    kubePodsCIDR: 10.233.64.0/18
    kubeServiceCIDR: 10.233.0.0/18
    ## multus support. https://github.com/k8snetworkplumbingwg/multus-cni
    multusCNI:
      enabled: false
  registry:
    privateRegistry: ""
    namespaceOverride: ""
    registryMirrors: []
    insecureRegistries: []
  addons: []
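A mistyped address in the hosts list tends to surface only as a connection failure during installation. As a minimal sketch (the is_ipv4 helper is a hypothetical name, not a KubeKey feature), each address could be checked before the cluster is created:

```shell
# Hypothetical helper: succeed only if $1 is a dotted-quad IPv4 address
# with every octet in the range 0-255.
is_ipv4() {
  case "$1" in
    # Reject empty input, any character other than digits and dots,
    # leading or trailing dots, and consecutive dots.
    ''|*[!0-9.]*|.*|*.|*..*) return 1 ;;
  esac
  # Split on dots into the function's positional parameters.
  old_ifs="$IFS"; IFS=.
  set -- $1
  IFS="$old_ifs"
  [ "$#" -eq 4 ] || return 1
  for octet in "$@"; do
    [ "${#octet}" -le 3 ] && [ "$octet" -le 255 ] || return 1
  done
  return 0
}

# The node addresses used in this post
for addr in 192.168.30.11 192.168.30.12 192.168.30.13; do
  if is_ipv4 "$addr"; then echo "ok:  $addr"; else echo "bad: $addr"; fi
done
```

The check is purely syntactic; it does not confirm that the host is reachable or that the SSH credentials are valid.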

c. Create the cluster from the template file

./kk create cluster -f config-sample.yaml
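Once the installer finishes, it is worth confirming that all three nodes report Ready. A minimal sketch, assuming kubectl is configured on the control-plane node; count_ready is a hypothetical helper that reads the output of kubectl get nodes --no-headers from stdin:

```shell
# Hypothetical helper: count nodes whose STATUS column is exactly "Ready".
# Expects `kubectl get nodes --no-headers` output on stdin.
count_ready() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Compare against the three hosts defined in config-sample.yaml.
if command -v kubectl >/dev/null 2>&1; then
  ready="$(kubectl get nodes --no-headers | count_ready)"
  echo "Ready nodes: ${ready}/3"
fi
```

Nodes in states such as NotReady or Ready,SchedulingDisabled are intentionally not counted.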

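4. Redeploying

To redeploy the cluster, first delete the existing cluster and then deploy again.

Delete the cluster:

./kk delete cluster -f config-sample.yaml

Clean up leftover files:

# Only the etcd certificates under /etc/ssl/etcd are removed here;
# deleting all of /etc/ssl would also remove the system's own TLS files.
rm -rf /etc/kubernetes/manifests \
  /var/lib/kubelet \
  /etc/kubernetes/pki \
  /etc/kubernetes/admin.conf \
  /etc/kubernetes/kubelet.conf \
  /etc/kubernetes/bootstrap-kubelet.conf \
  /etc/kubernetes/controller-manager.conf \
  /etc/kubernetes/scheduler.conf \
  /etc/cni/net.d \
  /etc/ssl/etcd \
  /var/lib/etcd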

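5. Configuring a Harbor private registry for containerd

# Generate the default configuration

mkdir -p /etc/containerd

containerd config default | tee /etc/containerd/config.toml

Then make the following changes in /etc/containerd/config.toml:

#sandbox_image = "k8s.gcr.io/pause:3.8"
# Comment out the line above and add the line below
sandbox_image = "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.8"

# Have containerd use the systemd cgroup driver
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
# Set the line below to true (add it if it is missing)
SystemdCgroup = true

[plugins."io.containerd.grpc.v1.cri".registry] # edit under this line
config_path = "/etc/containerd/certs.d" # change to this path; a hosts.toml file holding the mirror settings must then be created in a per-registry subdirectory of it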

# Create the hosts.toml file under /etc/containerd/certs.d

mkdir -p /etc/containerd/certs.d/docker.io

vim /etc/containerd/certs.d/docker.io/hosts.toml

File content:

server = "https://docker.io"

[host."https://docker.1panel.live"]
  capabilities = ["pull", "resolve"]

Add the Harbor registry:

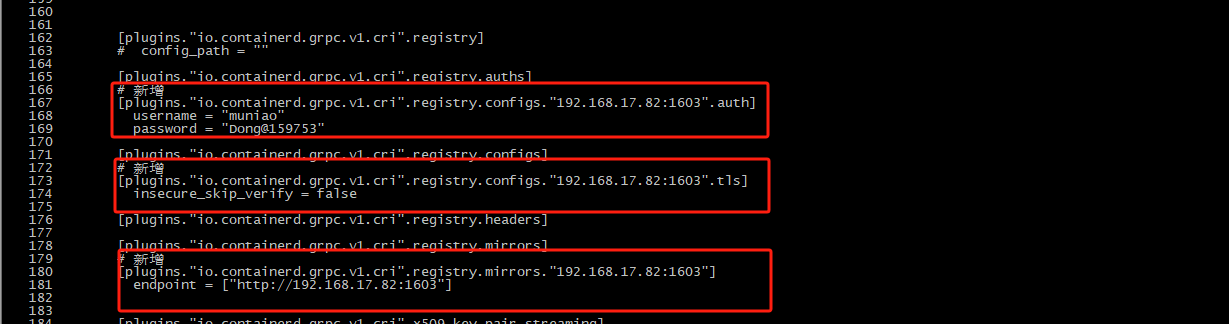

[plugins."io.containerd.grpc.v1.cri".registry.auths] # add the following below this line:

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."192.168.30.14"]
  endpoint = ["http://192.168.30.14"]

[plugins."io.containerd.grpc.v1.cri".registry.configs]

[plugins."io.containerd.grpc.v1.cri".registry.configs."192.168.30.14".auth]
  username = "admin"
  password = "Harbor123456"

[plugins."io.containerd.grpc.v1.cri".registry.configs."192.168.30.14".tls]
  insecure_skip_verify = true
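Recent containerd 1.x releases treat registry.mirrors and registry.configs.*.tls as deprecated and, to my understanding, reject them when config_path is also set. If the CRI plugin fails to load with the configuration above, one option is to describe the Harbor endpoint in a hosts.toml file instead and keep only the auth section in config.toml. A minimal sketch under those assumptions (the write_harbor_hosts helper and its arguments are hypothetical; the directory name must match the registry host as it appears in image references):

```shell
# Hypothetical helper: write <certs_dir>/<registry>/hosts.toml for a
# plain-HTTP registry. $1 = certs.d directory, $2 = registry host[:port].
write_harbor_hosts() {
  certs_dir="$1"
  registry="$2"
  mkdir -p "${certs_dir}/${registry}"
  cat > "${certs_dir}/${registry}/hosts.toml" <<EOF
server = "http://${registry}"

[host."http://${registry}"]
  capabilities = ["pull", "resolve", "push"]
EOF
}

# Write into a scratch directory first so the result can be reviewed;
# on a real node the target would be /etc/containerd/certs.d.
scratch="$(mktemp -d)"
write_harbor_hosts "$scratch" 192.168.30.14
cat "${scratch}/192.168.30.14/hosts.toml"
```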

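Change the image storage path:

root = "/home/containerd" # change this to your own path

Then restart containerd (for example with systemctl restart containerd).

Note: images pulled when pods start are stored in containerd's k8s.io namespace, so they only show up with the crictl images command.

Pulling images:

To pull an image from the registry on a worker node, use: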

crictl pull 192.168.17.82:1603/local.muniao.microservice/newapi:jenkins_v1.8.2.0.1_149

The following command, however, reports an error:

[root@mn-test-knode02-18-35 containerd]# ctr images pull 192.168.17.82:1603/local.muniao.microservice/newapi:jenkins_v1.8.2.0.1_149

INFO[0000] trying next host error="failed to do request: Head \"https://192.168.17.82:1603/v2/local.muniao.microservice/newapi/manifests/jenkins_v1.8.2.0.1_149\": http: server gave HTTP response to HTTPS client" host="192.168.17.82:1603"

ctr: failed to resolve reference "192.168.17.82:1603/local.muniao.microservice/newapi:jenkins_v1.8.2.0.1_149": failed to do request: Head "https://192.168.17.82:1603/v2/local.muniao.microservice/newapi/manifests/jenkins_v1.8.2.0.1_149": http: server gave HTTP response to HTTPS client

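This error can be ignored: in the actual deployment, images are already pulled normally. The likely cause is that ctr talks to containerd directly and does not apply the CRI plugin's registry settings, so it defaults to HTTPS while this registry serves plain HTTP.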
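If ctr itself is needed for a manual pull from an HTTP registry, its --plain-http flag can be passed explicitly, and -n k8s.io places the image in the namespace that the kubelet uses. A minimal sketch (the ctr_pull_cmd helper is hypothetical and only prints the command so that it can be reviewed before running):

```shell
# Hypothetical helper: print a ctr pull command for a plain-HTTP registry.
# -n k8s.io    : use the containerd namespace that CRI/kubelet images live in
# --plain-http : talk HTTP instead of the default HTTPS
ctr_pull_cmd() {
  printf 'ctr -n k8s.io images pull --plain-http %s\n' "$1"
}

ctr_pull_cmd 192.168.17.82:1603/local.muniao.microservice/newapi:jenkins_v1.8.2.0.1_149
```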

