Conversation Modeling on Reddit Using a Graph-Structured LSTM

published in: Transactions of the Association for Computational Linguistics, 2016

tasks: predicting popularity of comments in Reddit discussions

contributions:

1. A graph-structured bidirectional LSTM (long short-term memory) that represents both hierarchical and temporal conversation structure.

The LSTM units include both hierarchical and temporal components in the update, which distinguishes this work from prior tree-structured LSTM models.

2. The proposed model outperforms a node-independent architecture for different sets of input features. Analyses show a benefit to the model over the full course of the discussion, improving detection in both early and late stages.

The model relies on bidirectional tree state updates.

Methods:

1) Modeling the graph structure:

When the comment-response links are preserved, those conversations can be represented in a tree structure where comments represent nodes, the root is the original post, and each new reply to a previous comment is added as a child of that comment.

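When timestamps are available for the contributions, the nodes of the tree can be ordered and annotated with that information.

The tree structure is useful for seeing how a discussion unfolds into different subtopics and for showing differences in the level of activity across branches of the discussion.

The paper attempts to use the tree structure to capture the flow of information, in order to better model the context in which a comment is submitted, including the history it responds to and the subsequent responses to that comment.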

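The model assumes a tree-structured response network and takes the relative order of the comments into account.

Each comment in the conversation corresponds to a node in the tree. Its parent is the comment it responds to, and its children are the responses it elicits, in chronological order.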
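The tree described above can be sketched in a few lines of Python. This is only an illustration: the `Comment` class, its field names, and the `build_tree` helper are hypothetical and do not come from the paper.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Comment:
    # Hypothetical record layout, assumed for illustration only.
    id: str
    parent_id: Optional[str]  # None marks the original post (the root)
    timestamp: float
    children: List["Comment"] = field(default_factory=list)


def build_tree(comments: List[Comment]) -> Comment:
    """Link comments to their parents and sort each reply list by time."""
    by_id: Dict[str, Comment] = {c.id: c for c in comments}
    root = None
    for c in comments:
        if c.parent_id is None:
            root = c
        else:
            by_id[c.parent_id].children.append(c)
    if root is None:
        raise ValueError("no root post found")
    for c in comments:
        c.children.sort(key=lambda child: child.timestamp)
    return root


thread = [
    Comment("post", None, 0.0),
    Comment("c2", "post", 5.0),
    Comment("c1", "post", 2.0),
    Comment("c3", "c1", 7.0),
]
root = build_tree(thread)
print([c.id for c in root.children])  # ['c1', 'c2']
```

Sorting the children by timestamp is what gives each node a well-defined previous and next sibling, which the temporal part of the model depends on.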

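Prior work has found that both response structure and timing matter for predicting popularity. The LSTM units include both hierarchical and temporal components in the update, which distinguishes this work from earlier tree-structured LSTM models. The model uses the full discussion thread to predict popularity.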

By introducing a forward-backward tree-structured model, we provide a mechanism for leveraging early responses in predicting popularity, as well as a framework for better understanding the relative importance of these responses.

2) Proposed model:

The proposed model is a bidirectional graph LSTM that characterizes a full threaded discussion, assuming a tree-structured response network and accounting for the relative order of the comments.

Each node in the tree is represented with a single recurrent neural network (RNN) unit that outputs a vector (embedding) characterizing the interim state of the discussion, analogous to the vector output of an RNN unit that characterizes the word history in a sentence.

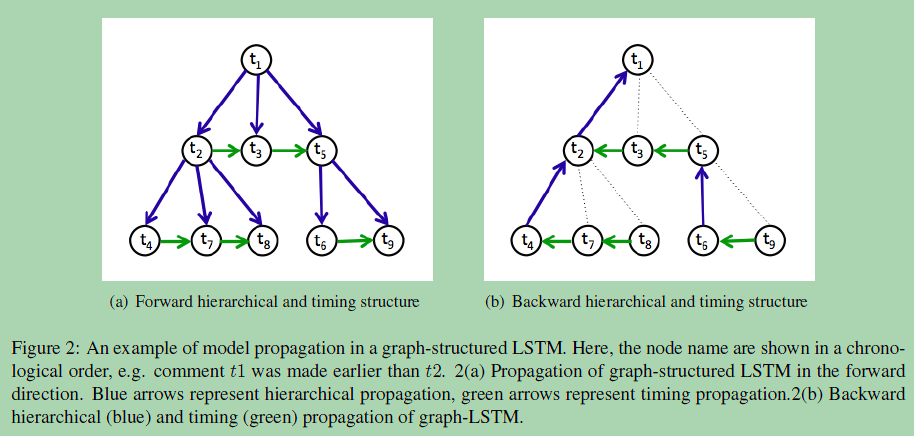

The RNN updates, both forward and backward, incorporate both temporal and hierarchical (tree-structured) dependencies, since commenters typically consider what has already been said in response to a parent comment. Hence, we refer to it as a graph-structured RNN rather than a tree-structured RNN.

In the forward direction, the state vector can be thought of as a summary of the discussion pursued in a particular branch of the tree, while in the backward direction the state vector summarizes the full response subtree that followed a particular comment.
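One way to picture the two directions is as two visiting orders over the same tree. The sketch below is an assumption about how such a schedule could be built, not the paper's implementation: the toy `children` map and both function names are hypothetical. The forward order visits a node only after its parent and earlier siblings, and the backward order visits it only after its whole response subtree and later siblings.

```python
from typing import Dict, List

# Hypothetical toy thread: each key maps to its replies in chronological order.
children: Dict[str, List[str]] = {
    "post": ["c1", "c2"],
    "c1": ["c3", "c4"],
    "c2": [],
    "c3": [],
    "c4": [],
}


def forward_order(root: str) -> List[str]:
    """Pre-order walk: a node appears after its parent and earlier siblings."""
    order, stack = [], [root]
    while stack:
        node = stack.pop()
        order.append(node)
        # Push replies in reverse so the earliest reply is visited first.
        stack.extend(reversed(children[node]))
    return order


def backward_order(root: str) -> List[str]:
    """Reversed pre-order: a node appears after its subtree and later siblings."""
    return forward_order(root)[::-1]


print(forward_order("post"))   # ['post', 'c1', 'c3', 'c4', 'c2']
print(backward_order("post"))  # ['c2', 'c4', 'c3', 'c1', 'post']
```

With these orders, every state a node needs has already been computed by the time the node is reached, in either direction.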

The state vectors for the forward and backward directions are concatenated for the purpose of predicting comment karma.

We anticipate that the forward state will capture relevance and informativeness of the comment, and the backward process will capture sentiment and richness of the ensuing discussion.

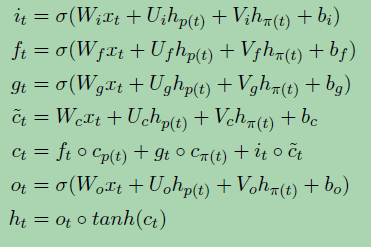

Components of the LSTM unit: input gate, temporal forget gate, hierarchical forget gate, cell, and output.
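To make the role of the two forget gates concrete, here is a minimal NumPy sketch of a single forward update. It assumes a standard LSTM-style parameterization in which the temporal forget gate scales the previous sibling's cell and the hierarchical forget gate scales the parent's cell; the exact equations should be taken from the paper. The function name, the `params` dictionary, and the stacked weight layout are all hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def graph_lstm_step(x, h_sib, c_sib, h_par, c_par, params):
    """One forward update for a comment with input features x.

    h_sib, c_sib: state of the previous sibling (temporal context).
    h_par, c_par: state of the parent comment (hierarchical context).
    """
    z = np.concatenate([x, h_sib, h_par])
    i = sigmoid(params["W_i"] @ z + params["b_i"])  # input gate
    f = sigmoid(params["W_f"] @ z + params["b_f"])  # temporal forget gate
    g = sigmoid(params["W_g"] @ z + params["b_g"])  # hierarchical forget gate
    o = sigmoid(params["W_o"] @ z + params["b_o"])  # output gate
    c_new = np.tanh(params["W_c"] @ z + params["b_c"])  # candidate cell
    # Assumed combination: new content plus gated sibling and parent memory.
    c = i * c_new + f * c_sib + g * c_par
    h = o * np.tanh(c)
    return h, c


rng = np.random.default_rng(0)
d_in, d_h = 4, 3  # arbitrary toy sizes
params = {}
for name in "ifgoc":
    params[f"W_{name}"] = rng.normal(scale=0.1, size=(d_h, d_in + 2 * d_h))
    params[f"b_{name}"] = np.zeros(d_h)

zeros = np.zeros(d_h)
h, c = graph_lstm_step(rng.normal(size=d_in), zeros, zeros, zeros, zeros, params)
print(h.shape, c.shape)  # (3,) (3,)
```

For a first reply, the sibling state can be passed as zeros, as in the usage line above; the backward direction would reuse the same kind of update with the roles of the neighbors reversed.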

When the whole tree structure is known, the full response subtree can be used to better represent the state of a node.
