Python Requests

Notes: requests in Detail (Part 2)

A First Example

```python
import requests

response = requests.get('https://www.baidu.com/')
print(type(response))
print(response.status_code)
print(type(response.text))
print(response.text)
print(response.cookies)
```

Other HTTP Methods

```python
import requests

requests.post('http://httpbin.org/post')
requests.put('http://httpbin.org/put')
requests.delete('http://httpbin.org/delete')
requests.head('http://httpbin.org/get')
requests.options('http://httpbin.org/get')
```

Making Requests

Basic GET Requests

Basic usage

```python
import requests

response = requests.get('http://httpbin.org/get')
print(response.text)
```

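GET Requests with Parameters

```python
import requests

# parameters written into the URL by hand
response = requests.get("http://httpbin.org/get?name=germey&age=22")
print(response.text)
```

```python
import requests

# let requests build the query string from a dict
data = {
    'name': 'germey',
    'age': 22
}
response = requests.get("http://httpbin.org/get", params=data)
print(response.text)
```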
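To see what params= turns into without sending anything, you can build and prepare the request locally. A minimal sketch; the list value and the None value are illustrative additions, not from the examples above:

```python
import requests

# Build the request without sending it, then prepare it to see the final URL.
req = requests.Request('GET', 'http://httpbin.org/get',
                       params={'name': 'germey', 'age': 22})
url = req.prepare().url
print(url)

# A list value repeats the key; a None value is left out of the query string.
req2 = requests.Request('GET', 'http://httpbin.org/get',
                        params={'tag': ['a', 'b'], 'page': None})
url2 = req2.prepare().url
print(url2)
```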

Parsing JSON

```python
import json

import requests

response = requests.get("http://httpbin.org/get")
print(type(response.text))
print(response.json())
print(json.loads(response.text))  # same result as response.json()
print(type(response.json()))
```

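Getting Binary Data

```python
import requests

response = requests.get("https://github.com/favicon.ico")
print(type(response.text), type(response.content))
print(response.text)     # binary data decoded as text: unreadable
print(response.content)  # the raw bytes
```

```python
import requests

response = requests.get("https://github.com/favicon.ico")
# the with block closes the file automatically, so no f.close() is needed
with open('favicon.ico', 'wb') as f:
    f.write(response.content)
```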

Adding Headers

```python
import requests

# without a browser-like User-Agent, Zhihu may refuse to serve the page
response = requests.get("https://www.zhihu.com/explore")
print(response.text)
```

```python
import requests

headers = {
    'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_4) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36'
}
response = requests.get("https://www.zhihu.com/explore", headers=headers)
print(response.text)
```
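The difference between the two requests above is the User-Agent header: by default requests identifies itself as python-requests, which some sites treat differently from a browser. A minimal sketch that prepares both requests through a Session without sending them, so you can compare the headers offline:

```python
import requests

url = "https://www.zhihu.com/explore"
session = requests.Session()

# Prepare (but do not send) a request carrying the session's default headers.
default_req = session.prepare_request(requests.Request('GET', url))
print(default_req.headers['User-Agent'])  # e.g. python-requests/2.x.y

# A header passed with the request overrides the session default of the same name.
browser_ua = ('Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_4) AppleWebKit/537.36 '
              '(KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36')
custom_req = session.prepare_request(
    requests.Request('GET', url, headers={'User-Agent': browser_ua}))
print(custom_req.headers['User-Agent'])
```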

Basic POST Requests

```python
import requests

data = {'name': 'germey', 'age': '22'}
response = requests.post("http://httpbin.org/post", data=data)
print(response.text)
```

```python
import requests

data = {'name': 'germey', 'age': '22'}
headers = {
    'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_4) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36'
}
response = requests.post("http://httpbin.org/post", data=data, headers=headers)
print(response.json())
```
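With data= set to a dict, requests sends an HTML-form style body. A minimal sketch that prepares the POST locally (nothing is sent) to show the body and the Content-Type it gets:

```python
import requests

data = {'name': 'germey', 'age': '22'}
# Prepare the POST without sending it, to inspect what data= produces.
prepared = requests.Request('POST', 'http://httpbin.org/post', data=data).prepare()
print(prepared.headers['Content-Type'])  # application/x-www-form-urlencoded
print(prepared.body)                     # name=germey&age=22
```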

Responses

Response attributes

```python
import requests

response = requests.get('http://www.jianshu.com')
print(type(response.status_code), response.status_code)
print(type(response.headers), response.headers)
print(type(response.cookies), response.cookies)
print(type(response.url), response.url)
print(type(response.history), response.history)
```

```python
import requests

response = requests.get('http://www.jianshu.com/hello.html')
# requests.codes.not_found is 404
if response.status_code == requests.codes.not_found:
    print('404 Not Found')
else:
    exit()
```

```python
import requests

response = requests.get('http://www.jianshu.com')
if response.status_code == 200:
    print('Request Successfully')
else:
    exit()
```
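Instead of comparing status codes by hand, Response.raise_for_status() raises requests.exceptions.HTTPError for any 4xx or 5xx response. A minimal sketch; check_response is a hypothetical helper, and the Response objects are filled in by hand only so the example runs without network access:

```python
import requests


def check_response(response):
    """Hypothetical helper: return a short status message for a response."""
    try:
        response.raise_for_status()  # raises HTTPError for 4xx/5xx codes
    except requests.exceptions.HTTPError as e:
        return f'failed: {e}'
    return 'Request Successfully'


# Hand-built responses, standing in for what requests.get() would return.
ok = requests.Response()
ok.status_code = requests.codes.ok  # 200
missing = requests.Response()
missing.status_code = requests.codes.not_found  # 404
missing.url = 'http://www.jianshu.com/hello.html'

print(check_response(ok))
print(check_response(missing))
```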

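Advanced Usage

File Upload

```python
import requests

# the with block closes the file once the upload is done
with open('favicon.ico', 'rb') as f:
    files = {'file': f}
    response = requests.post("http://httpbin.org/post", files=files)
print(response.text)
```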
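files= also accepts a (filename, file object, content type) tuple, which controls the filename and type the server sees. A minimal sketch; io.BytesIO with a few made-up bytes stands in for the real favicon.ico, and the request is only prepared, not sent:

```python
import io

import requests

# (filename, file object, content type); the bytes are placeholder data
files = {'file': ('favicon.ico', io.BytesIO(b'\x00\x00\x01\x00'), 'image/x-icon')}
prepared = requests.Request('POST', 'http://httpbin.org/post', files=files).prepare()

print(prepared.headers['Content-Type'])          # multipart/form-data; boundary=...
print(b'filename="favicon.ico"' in prepared.body)  # the part header carries the filename
```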

Getting Cookies

```python
import requests

response = requests.get("https://www.baidu.com")
print(response.cookies)
for key, value in response.cookies.items():
    print(key + '=' + value)
```

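Maintaining a Session

Simulating a login

```python
import requests

# each requests.get() call is independent, so the cookie set by the
# first call is not sent with the second one
requests.get('http://httpbin.org/cookies/set/number/123456789')
response = requests.get('http://httpbin.org/cookies')
print(response.text)
```

```python
import requests

# a Session keeps cookies between requests, so the second call sees number=123456789
s = requests.Session()
s.get('http://httpbin.org/cookies/set/number/123456789')
response = s.get('http://httpbin.org/cookies')
print(response.text)
```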

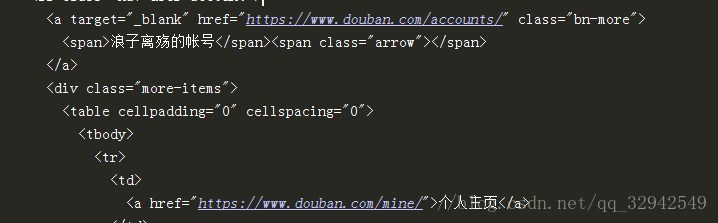

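```python
import requests

s = requests.session()
data = {'form_email': 'your_account', 'form_password': 'your_password'}
# replace with the login URL you are posting to
s.post('https://www.douban.com/accounts/login', data=data)
# replace with the page you want to crawl
response = s.get('https://movie.douban.com/top250')
# if the printed page shows your logged-in information, the login succeeded
print(response.text)
```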

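Certificate Verification

```python
import requests

# raises requests.exceptions.SSLError if the certificate cannot be verified
response = requests.get('https://www.12306.cn')
print(response.status_code)
```

```python
import requests
from requests.packages import urllib3

urllib3.disable_warnings()  # suppress the InsecureRequestWarning
response = requests.get('https://www.12306.cn', verify=False)  # skip certificate verification
print(response.status_code)
```

```python
import requests

# cert: a client-side certificate and its private key (the paths are placeholders)
response = requests.get('https://www.12306.cn', cert=('/path/server.crt', '/path/key'))
print(response.status_code)
```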

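Proxy Settings

```python
import requests

proxies = {
    "http": "http://127.0.0.1:9743",
    "https": "https://127.0.0.1:9743",
}
response = requests.get("https://www.taobao.com", proxies=proxies)
print(response.status_code)
```

```python
import requests

# proxy that needs a username and password; the "https" key is required here
# because the target URL is https (an "http" key alone would not be used for it)
proxies = {
    "http": "http://user:password@127.0.0.1:9743/",
    "https": "http://user:password@127.0.0.1:9743/",
}
response = requests.get("https://www.taobao.com", proxies=proxies)
print(response.status_code)
```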
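requests chooses a proxy by the scheme of the target URL, which is why the "https" key matters for https://www.taobao.com. The helper requests.utils.select_proxy, which requests uses internally, makes this visible offline; a sketch (in a real request, an HTTPS_PROXY environment variable could still supply a proxy):

```python
from requests.utils import get_auth_from_url, select_proxy

url = "https://www.taobao.com"
http_only = {"http": "http://user:password@127.0.0.1:9743/"}
both = {
    "http": "http://user:password@127.0.0.1:9743/",
    "https": "http://user:password@127.0.0.1:9743/",
}

# With only an "http" entry, no proxy is selected for an https:// URL.
print(select_proxy(url, http_only))  # None
# With an "https" entry, that proxy is selected.
proxy = select_proxy(url, both)
print(proxy)
# The credentials embedded in the proxy URL end up in a Proxy-Authorization header.
print(get_auth_from_url(proxy))  # ('user', 'password')
```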

Timeout Settings

```python
import requests
from requests.exceptions import ReadTimeout

try:
    response = requests.get("http://httpbin.org/get", timeout=0.5)
    print(response.status_code)
except ReadTimeout:
    print('Timeout')
```

Authentication

```python
import requests
from requests.auth import HTTPBasicAuth

r = requests.get('http://120.27.34.24:9001', auth=HTTPBasicAuth('user', '123'))
print(r.status_code)
```

```python
import requests

# a (username, password) tuple is shorthand for HTTPBasicAuth
r = requests.get('http://120.27.34.24:9001', auth=('user', '123'))
print(r.status_code)
```
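Both forms produce the same Authorization header. A minimal sketch that prepares the two requests locally (nothing is sent) and compares them; note that Basic auth is only base64 encoding, not encryption:

```python
import base64

import requests
from requests.auth import HTTPBasicAuth

url = 'http://120.27.34.24:9001'
# Prepare both variants locally and compare the Authorization headers.
explicit = requests.Request('GET', url, auth=HTTPBasicAuth('user', '123')).prepare()
shorthand = requests.Request('GET', url, auth=('user', '123')).prepare()

print(explicit.headers['Authorization'])  # Basic dXNlcjoxMjM=
print(explicit.headers['Authorization'] == shorthand.headers['Authorization'])  # True
# The header is "Basic " followed by base64("username:password").
print(base64.b64encode(b'user:123').decode())
```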

Exception Handling

```python
import requests
from requests.exceptions import ReadTimeout, ConnectionError, RequestException

try:
    # timeout is not a limit on the whole response download: an exception is raised
    # if the connection is not made, or no bytes arrive on the underlying socket,
    # within timeout seconds (0.5 s here)
    response = requests.get("http://httpbin.org/get", timeout=0.5)
    print(response.status_code)
except ReadTimeout:
    print('Timeout')
except ConnectionError:
    print('Connection error')
except RequestException:
    print('Error')
```
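The order of the except clauses matters because the exceptions form a hierarchy, and one subtlety is that ConnectTimeout is a subclass of both ConnectionError and Timeout. A minimal sketch; classify is a hypothetical helper that mirrors the clauses above. To catch both kinds of timeout in a single clause, catch requests.exceptions.Timeout.

```python
from requests.exceptions import (ConnectionError, ConnectTimeout, HTTPError,
                                 ReadTimeout, RequestException, Timeout)


def classify(exc):
    """Hypothetical helper: same except clauses as above; the first match wins."""
    try:
        raise exc
    except ReadTimeout:
        return 'Timeout'
    except ConnectionError:
        return 'Connection error'
    except RequestException:
        return 'Error'


# A connect timeout therefore lands in the ConnectionError clause, not in 'Timeout'.
print(issubclass(ConnectTimeout, ConnectionError), issubclass(ConnectTimeout, Timeout))
print(classify(ReadTimeout()))     # Timeout
print(classify(ConnectTimeout()))  # Connection error
print(classify(HTTPError()))       # Error
```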

Nested JSON Request Bodies

Option 1: serialize the body yourself with json.dumps and pass it as data=

```python
import json

import requests

url = "http://xxx"
headers = {"Content-Type": "application/json", "Authorization": "Bearer <token>"}
data1 = {"key1": "value1", "key2": "value2"}
data = {"params": data1}
r = requests.post(url=url, data=json.dumps(data), headers=headers)
```

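Option 2: pass the dict as json=

```python
import requests

url = "http://xxx"
headers = {"Content-Type": "application/json", "Authorization": "Bearer <token>"}
data1 = {"key1": "value1", "key2": "value2"}
data = {"params": data1}
# json= serializes the dict; it also sets Content-Type: application/json if you don't
r = requests.post(url=url, json=data, headers=headers)
```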
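The two options send the same JSON document. A minimal sketch that prepares both locally and compares the bodies; the URL is a hypothetical placeholder and nothing is sent:

```python
import json

import requests

url = "http://example.com/api"  # hypothetical URL; the requests are only prepared
data = {"params": {"key1": "value1", "key2": "value2"}}

# Option 1: serialize yourself and set Content-Type by hand.
opt1 = requests.Request('POST', url, data=json.dumps(data),
                        headers={"Content-Type": "application/json"}).prepare()
# Option 2: json= serializes the dict and sets Content-Type for you.
opt2 = requests.Request('POST', url, json=data).prepare()

print(opt2.headers['Content-Type'])                   # application/json
print(json.loads(opt1.body) == json.loads(opt2.body))  # True
```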

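Python Requests: Handy Tips

1: Redirects

A request may be redirected. When you need to handle each request individually, you can turn off automatic redirect following:

```python
self.session.post(url, data, allow_redirects=False)
```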
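To see what allow_redirects=False changes, here is a sketch against a throwaway local server, so it runs without network access. The handler and the /target path are made up for the demo:

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

import requests


class RedirectHandler(BaseHTTPRequestHandler):
    """Local stand-in server: '/' redirects to '/target', which returns 200."""

    def do_GET(self):
        if self.path == '/':
            self.send_response(302)
            self.send_header('Location', '/target')
            self.send_header('Content-Length', '0')
            self.end_headers()
        else:
            body = b'arrived'
            self.send_response(200)
            self.send_header('Content-Length', str(len(body)))
            self.end_headers()
            self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the console quiet


server = HTTPServer(('127.0.0.1', 0), RedirectHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
base = f'http://127.0.0.1:{server.server_address[1]}'

s = requests.Session()
s.trust_env = False  # ignore proxy environment variables for the local server

# With allow_redirects=False we get the 302 itself and can handle it ourselves.
first = s.get(base + '/', allow_redirects=False)
print(first.status_code, first.is_redirect, first.headers['Location'])

# By default requests follows the redirect; the 302 ends up in history.
followed = s.get(base + '/')
print(followed.status_code, [r.status_code for r in followed.history], followed.content)

server.shutdown()
server.server_close()
```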

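2: When writing API requests, you often want to see exactly what your code sent while debugging. You can use Fiddler for that, but you can also get debug output directly in code:

```python
import http.client as http_client  # Python 3 name of Python 2's httplib
import logging

import requests

http_client.HTTPConnection.debuglevel = 1  # print the raw request and response headers
logging.basicConfig()
logging.getLogger().setLevel(logging.DEBUG)
requests_log = logging.getLogger("urllib3")
requests_log.setLevel(logging.DEBUG)
requests_log.propagate = True

requests.get('https://www.baidu.com')
# A better approach is to wrap this in your own decorator, so any request function can get debug output.
```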
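The decorator suggested in the last comment could look like this. A minimal sketch; http_debug is a hypothetical name, and because it changes a class-level attribute it affects every thread while the wrapped function runs:

```python
import functools
import http.client
import logging


def http_debug(func):
    """Hypothetical decorator: enable wire-level debug output only while func runs."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        urllib3_log = logging.getLogger('urllib3')
        old_debuglevel = http.client.HTTPConnection.debuglevel
        old_log_level = urllib3_log.level
        http.client.HTTPConnection.debuglevel = 1  # http.client prints headers
        urllib3_log.setLevel(logging.DEBUG)        # urllib3 logs connection details
        try:
            return func(*args, **kwargs)
        finally:
            # restore the previous settings even if the request raised
            http.client.HTTPConnection.debuglevel = old_debuglevel
            urllib3_log.setLevel(old_log_level)
    return wrapper

# Usage (not run here, since it needs network access):
# logging.basicConfig()  # a handler is needed to see urllib3's log records
# @http_debug
# def fetch(url):
#     return requests.get(url)
```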

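3: To measure how long an API request took, use the response's elapsed attribute. It is a datetime.timedelta covering the time from sending the request until the response headers have been parsed, so it does not include downloading the body:

```python
self.session.get(url).elapsed
```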

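4: Sending custom cookies

We use a Session instance to keep cookies between requests, but in some special cases you need to send custom cookies. You can do it like this:

```python
import requests

session = requests.Session()
# custom cookies
cookie = {'guid': '5BF0FAB4-A7CF-463E-8C17-C1576fc7a9a8', 'uuid': '3ff5f4091f35a467'}
session.post('http://wikipedia.org', cookies=cookie)
```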
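Cookies passed with cookies= apply to that one request only and are not stored in the session, while cookies added to session.cookies go out with every later request. A minimal sketch that checks this with prepared requests, so nothing is sent:

```python
import requests

session = requests.Session()
url = 'http://wikipedia.org'

# Per-request cookies go out with this request only...
cookie = {'guid': '5BF0FAB4-A7CF-463E-8C17-C1576fc7a9a8'}
one_off = session.prepare_request(requests.Request('POST', url, cookies=cookie))
print(one_off.headers['Cookie'])
# ...and are not stored in the session's cookie jar.
stored_before = len(session.cookies)
print(stored_before)  # 0

# Cookies added to session.cookies are sent with every later request.
session.cookies.update({'uuid': '3ff5f4091f35a467'})
later = session.prepare_request(requests.Request('GET', url))
print(later.headers['Cookie'])  # uuid=3ff5f4091f35a467
```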

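Making Good Use of requests.Session in Python

In API testing we call several endpoints and send multiple requests, and these requests sometimes need to share data such as cookies.

1. A requests Session object persists certain parameters across requests, and it keeps cookies across all requests sent from the same Session instance:

```python
import json

import requests

s = requests.session()
req_param = '{"belongId": "300001312","userName": "alitestss003","password":"pxkj88","captcha":"pxpx","captchaKey":"59675w1v8kdbpxv"}'
res = s.post('http://test.e.fanxiaojian.cn/metis-in-web/auth/login', json=json.loads(req_param))
# later requests through s carry the login cookies automatically
res1 = s.get("http://test.e.fanxiaojian.cn/eos--web/analysis/briefing")
print(res.cookies.values())  # values of all cookies set by the login response
```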

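2. A Session object can also supply default data for request methods; you set it through attributes of the Session object.

For example:

```python
import requests

# create a Session object
s = requests.Session()
# set the Session's auth attribute; it is used as the default auth for every request
s.auth = ('user', 'pass')
# update the Session's headers; they are merged with the headers passed to each request method
s.headers.update({'x-test': 'true'})
# no auth is passed here, so the Session's auth is used; the headers are merged with the Session's headers
r = s.get('http://httpbin.org/headers', headers={'x-test2': 'true'})
```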

Besides the default headers, the request above therefore sends: {'Authorization': 'Basic dXNlcjpwYXNz', 'x-test': 'true', 'x-test2': 'true'}

```python
# inspect the request headers that were actually sent
print(r.request.headers)  # all headers of the request behind this response
```
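Session.prepare_request applies the same merging that s.get() does, so you can check the result without sending anything. A minimal sketch, which also shows that passing None for a header at request level removes the session-level value:

```python
import requests

s = requests.Session()
s.auth = ('user', 'pass')
s.headers.update({'x-test': 'true'})

# Merge session defaults with request-level headers, without sending.
merged = s.prepare_request(requests.Request(
    'GET', 'http://httpbin.org/headers', headers={'x-test2': 'true'}))
print(merged.headers['Authorization'])                      # Basic dXNlcjpwYXNz
print(merged.headers['x-test'], merged.headers['x-test2'])  # true true

# A request-level None drops the session-level header for this request.
dropped = s.prepare_request(requests.Request(
    'GET', 'http://httpbin.org/headers', headers={'x-test': None}))
print('x-test' in dropped.headers)  # False
```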

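```python
# s is the Session above; cookie is a dict of custom cookies like the one in tip 4
res3 = s.get("http://pre.n.cn/irs-web/sso/login", cookies=cookie)
# the Cookie header that was sent, keeping only the text after "IRSSID="
print(res3.request.headers.get("Cookie").split("IRSSID=")[-1])
print(type(res3.request.headers.get("Cookie").split("IRSSID=")[-1]))
# the cookie jar attached to the prepared request (_cookies is a private attribute)
print(res3.request._cookies)
```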
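split("IRSSID=")[-1] returns everything after IRSSID=, including any cookies that follow it. Parsing the header with the standard library's http.cookies.SimpleCookie returns just that one value. A minimal sketch; cookie_value and the sample header are made up for illustration, and SimpleCookie is strict, so values with unusual characters may be skipped:

```python
from http.cookies import SimpleCookie


def cookie_value(cookie_header, name):
    """Hypothetical helper: pull one cookie's value out of a Cookie request header."""
    jar = SimpleCookie()
    jar.load(cookie_header)
    return jar[name].value if name in jar else None


header = 'IRSSID=abc123; uuid=3ff5f4091f35a467'  # made-up header for illustration
print(header.split("IRSSID=")[-1])     # abc123; uuid=3ff5f4091f35a467
print(cookie_value(header, 'IRSSID'))  # abc123
```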

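Python requests: Timeouts and Retries Explained

Timeouts are unavoidable in network requests. In requests, if you don't set a timeout, your program may hang forever.

Timeouts come in two kinds: connect timeouts and read timeouts.

Connect timeout

The connect timeout is the number of seconds requests will wait for your client to establish a connection to the remote machine (corresponding to the connect() call on the socket).

```python
import time

import requests

url = 'http://www.google.com.hk'
print(time.strftime('%Y-%m-%d %H:%M:%S'))
try:
    html = requests.get(url, timeout=5).text
    print('success')
except requests.exceptions.RequestException as e:
    print(e)
print(time.strftime('%Y-%m-%d %H:%M:%S'))
```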

Because Google is blocked in mainland China, the connection cannot be established, and the error message reports a connect timeout:

```
2019-10-25 15:03:40
HTTPConnectionPool(host='www.google.com.hk', port=80): Max retries exceeded with url: / (Caused by ConnectTimeoutError(<urllib3.connection.HTTPConnection object at 0x02DC4650>, 'Connection to www.google.com.hk timed out. (connect timeout=5)'))
2019-10-25 15:03:46
```

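Even if you don't set a timeout, the connection attempt does not hang forever: requests itself has no default, but the operating system's TCP stack eventually gives up (in my test, after about 21 seconds).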

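Read timeout

The read timeout is the number of seconds the client will wait for the server to send a response. (Specifically, it is the number of seconds the client waits between bytes sent from the server; in 99.9% of cases, this is the time before the server sends the first byte.)

In short, the connect timeout is the longest time allowed from starting the connection until it is established, and the read timeout is the longest time to wait, once connected, for the server to send data.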

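If you set a single value as the timeout, like this:

```python
r = requests.get('https://github.com', timeout=5)
```

that value is used as both the connect and the read timeout. To set them separately, pass a (connect, read) tuple:

```python
r = requests.get('https://github.com', timeout=(3.05, 27))
```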
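A read timeout can be reproduced offline with a local server that accepts the connection at once but waits before answering. A minimal sketch; SlowHandler and its 1-second delay are made up for the demo:

```python
import threading
import time
from http.server import BaseHTTPRequestHandler, HTTPServer

import requests


class SlowHandler(BaseHTTPRequestHandler):
    """Local stand-in for a slow server: waits 1 s before sending anything."""

    def do_GET(self):
        time.sleep(1)
        try:
            self.send_response(200)
            self.end_headers()
            self.wfile.write(b'late')
        except OSError:
            pass  # the client has already given up and closed the socket

    def log_message(self, *args):
        pass


server = HTTPServer(('127.0.0.1', 0), SlowHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
url = f'http://127.0.0.1:{server.server_address[1]}/'

session = requests.Session()
session.trust_env = False  # ignore proxy environment variables for the local server

start = time.monotonic()
try:
    session.get(url, timeout=(3.05, 0.2))  # connects at once, but reads for at most 0.2 s
    outcome = 'success'
except requests.exceptions.ReadTimeout as e:
    outcome = 'read timeout'
    print(e)
waited = time.monotonic() - start
print(outcome, round(waited, 1))

server.shutdown()
server.server_close()
```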

Level 4 of the Heibanke crawler challenge deliberately makes the server wait 15 seconds before responding, which makes it a perfect illustration.

```python
#!/usr/bin/python
# coding=utf-8
import time

import requests

url_login = 'http://www.heibanke.com/accounts/login/?next=/lesson/crawler_ex03/'
session = requests.Session()
session.get(url_login)
token = session.cookies['csrftoken']
session.post(url_login, data={'csrfmiddlewaretoken': token, 'username': 'xx', 'password': 'xx'})
print(time.strftime('%Y-%m-%d %H:%M:%S'))

url_pw = 'http://www.heibanke.com/lesson/crawler_ex03/pw_list/'
try:
    html = session.get(url_pw, timeout=(5, 10)).text
    # html = session.get(url_pw, timeout=(5, 1)).text
    print('success')
except requests.exceptions.RequestException as e:
    print(e)
print(time.strftime('%Y-%m-%d %H:%M:%S'))
```

With timeout=(5, 1) (the commented-out line), the error message reports a read timeout:

```
2019-10-25 15:07:46
HTTPConnectionPool(host='www.heibanke.com', port=80): Read timed out. (read timeout=1)
2019-10-25 15:07:48
```

The read timeout has no default: if you don't set one, the program waits indefinitely. This is why crawlers often hang without any error message.
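Since there is no default, one way to avoid forgetting the timeout is to give every request in a session a default one. A minimal sketch; TimeoutSession is a hypothetical subclass, and the (3.05, 27) default is simply the tuple from the example above:

```python
import requests


class TimeoutSession(requests.Session):
    """Hypothetical Session subclass that applies a default timeout to every request."""

    def __init__(self, timeout=(3.05, 27)):
        super().__init__()
        self.default_timeout = timeout

    def request(self, method, url, **kwargs):
        # an explicit timeout=... on a single call still wins over the default
        kwargs.setdefault('timeout', self.default_timeout)
        return super().request(method, url, **kwargs)


session = TimeoutSession()
# session.get(url) now behaves like session.get(url, timeout=(3.05, 27))
```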

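Retrying on timeout

Usually we don't give up on the first timeout; instead, we retry up to three times.

```python
import requests


def gethtml(url):
    i = 0
    while i < 3:
        try:
            html = requests.get(url, timeout=5).text
            return html
        except requests.exceptions.RequestException:
            i += 1
    # after three failed attempts the loop ends and the function returns None
```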
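A slightly more defensive variant waits longer between attempts and re-raises the last error instead of silently returning None. A minimal sketch; get_with_retries and its backoff schedule are assumptions for illustration, not a requests API:

```python
import time

import requests


def get_with_retries(session, url, tries=3, timeout=5, backoff=0.5):
    """Hypothetical helper: retry a GET on any RequestException, waiting longer each time."""
    for attempt in range(1, tries + 1):
        try:
            return session.get(url, timeout=timeout)
        except requests.exceptions.RequestException:
            if attempt == tries:
                raise  # out of attempts: let the caller see the real error
            time.sleep(backoff * 2 ** (attempt - 1))  # 0.5 s, then 1 s, ...

# Usage: get_with_retries(requests.Session(), 'http://www.google.com.hk')
```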

In fact, requests already has this built in through HTTPAdapter (although the code seems to get longer...):

```python
import time

import requests
from requests.adapters import HTTPAdapter

s = requests.Session()
# allow up to 3 retries per request, for both http:// and https:// URLs
s.mount('http://', HTTPAdapter(max_retries=3))
s.mount('https://', HTTPAdapter(max_retries=3))
print(time.strftime('%Y-%m-%d %H:%M:%S'))
try:
    r = s.get('http://www.google.com.hk', timeout=5)
    print(r.text)
except requests.exceptions.RequestException as e:
    print(e)
print(time.strftime('%Y-%m-%d %H:%M:%S'))
```

max_retries is the maximum number of retries: 3 retries plus the initial request make 4 attempts in total, so the code above takes 20 seconds (4 × 5 s) rather than 15:

```
2019-10-25 15:12:36
HTTPConnectionPool(host='www.google.com.hk', port=80): Max retries exceeded with url: / (Caused by ConnectTimeoutError(<urllib3.connection.HTTPConnection object at 0x02DA4B50>, 'Connection to www.google.com.hk timed out. (connect timeout=5)'))
2019-10-25 15:12:56
```
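max_retries also accepts a urllib3 Retry object, which adds a backoff between attempts and can retry on selected HTTP status codes. A minimal sketch with example values, not recommendations; by default urllib3 retries only idempotent methods such as GET on read errors and status codes, and when status retries run out, requests raises requests.exceptions.RetryError:

```python
import requests
from requests.adapters import HTTPAdapter
from urllib3.util.retry import Retry

# Up to 3 retries with exponential backoff, also retrying on these status codes.
retry = Retry(total=3, backoff_factor=0.5, status_forcelist=[500, 502, 503, 504])
adapter = HTTPAdapter(max_retries=retry)

s = requests.Session()
s.mount('http://', adapter)
s.mount('https://', adapter)

# Every http(s) URL now goes through the adapter and its Retry policy.
print(s.get_adapter('https://www.google.com.hk') is adapter)  # True
print(adapter.max_retries.total)  # 3
```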

Concurrent HTTP Requests with grequests

Motivation

I needed to probe whether services were available by sending HTTP requests. Multiple processes or threads seemed unnecessary, so I looked for a coroutine-based solution.

1. Basic usage

grequests is a thin wrapper around requests and gevent, and it is very convenient to use:

```python
import grequests  # imported first: grequests applies gevent's monkey patching on import
import time
import requests

urls = [
    'https://docs.python.org/2.7/library/index.html',
    'https://docs.python.org/2.7/library/dl.html',
    'http://www.iciba.com/partial',
    'http://2489843.blog.51cto.com/2479843/1407808',
    'http://blog.csdn.net/woshiaotian/article/details/61027814',
    'https://docs.python.org/2.7/library/unix.html',
    'http://2489843.blog.51cto.com/2479843/1386820',
    'http://www.bazhuayu.com/tutorial/extract_loop_url.aspx?t=0',
]


def method1():
    # plain requests, one URL after another
    t1 = time.time()
    for url in urls:
        res = requests.get(url)
        # print(res.status_code)
    t2 = time.time()
    print('method1', t2 - t1)


def method2():
    # grequests with at most 3 requests in flight
    tasks = [grequests.get(u) for u in urls]
    t1 = time.time()
    res = grequests.map(tasks, size=3)
    # print(res)
    t2 = time.time()
    print('method2', t2 - t1)


def method3():
    # grequests with at most 6 requests in flight
    tasks = [grequests.get(u) for u in urls]
    t1 = time.time()
    res = grequests.map(tasks, size=6)
    # print(res)
    t2 = time.time()
    print('method3', t2 - t1)


if __name__ == '__main__':
    method1()
    method2()
    method3()
```

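Output:

```
method1 8.51106119156
method2 5.77834510803
method3 2.55373907089
```

As you can see, with coroutines the total running time drops sharply, and the pool size (the size argument) also affects the overall time.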

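2. Important parameters

A few grequests parameters are worth adding here. The signature of grequests.map is:

```python
grequests.map(requests, stream=False, size=None, exception_handler=None, gtimeout=None)
```

requests: the collection of unsent requests, for example built with grequests.get

stream: if True, response bodies are not downloaded immediately

size: how many requests run at the same time; None means no limit

exception_handler: a callback called as exception_handler(request, exception) when a request fails; its return value takes that request's place in the result list (without a handler, failed requests appear as None)

gtimeout: a gevent timeout in seconds for the whole batch, unrelated to the timeout argument of requests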

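Also, because grequests uses requests underneath, it supports all the usual HTTP methods, such as GET, OPTIONS, HEAD, POST, PUT and DELETE.

So all of the following requests are supported:

```python
grequests.post(url, json={"name": "zhangsan"})
grequests.delete(url)
```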

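3. Event hooks

grequests is built on requests, so it supports event hooks as well:

```python
import grequests  # import first so gevent's monkey patching happens early
import requests


def print_url(r, *args, **kwargs):
    print(r.url)


url = "http://www.baidu.com"

# 1. a response hook with plain requests
res = requests.get(url, hooks={"response": print_url})

# 2. with grequests, callback= is registered as the response hook
tasks = []
req = grequests.get(url, callback=print_url)
tasks.append(req)
res = grequests.map(tasks)
```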
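In plain requests, hooks can also be registered once on a Session, so they run for every response it receives. A minimal sketch; check_status is a hypothetical hook, and requests' internal helper requests.hooks.dispatch_hook is called directly on a hand-built 404 response only so the example runs offline:

```python
import requests
from requests.hooks import dispatch_hook


def print_url(r, *args, **kwargs):
    print(r.url)


def check_status(r, *args, **kwargs):
    r.raise_for_status()  # turn 4xx/5xx responses into HTTPError


s = requests.Session()
# Session-level hooks run for every response received through this session.
s.hooks['response'].append(print_url)
s.hooks['response'].append(check_status)

# Offline check: run the session's hooks on a hand-built 404 response.
fake = requests.Response()
fake.status_code = 404
fake.url = 'http://www.baidu.com/missing'
raised = None
try:
    dispatch_hook('response', s.hooks, fake)
except requests.exceptions.HTTPError as e:
    raised = e
print('hook raised:', raised)
```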

Zhejiang Public Security Internet Filing No. 33010602011771
