OpenStack High-Availability Cluster Deployment (Distributed Routing) (Train Release)

- 一、规划

- 二、基础服务

- 三、Keystone集群部署

- 四、Glance集群部署

- 五、Placement服务部署

- 六、Nova控制节点集群部署

- 七、Nova计算节点集群部署

- 八、Neutron控制+网络节点集群部署(openvswitch方式)

- 九、Neutron计算节点(openvswitch方式)

- 十、Horazion仪表盘集群部署

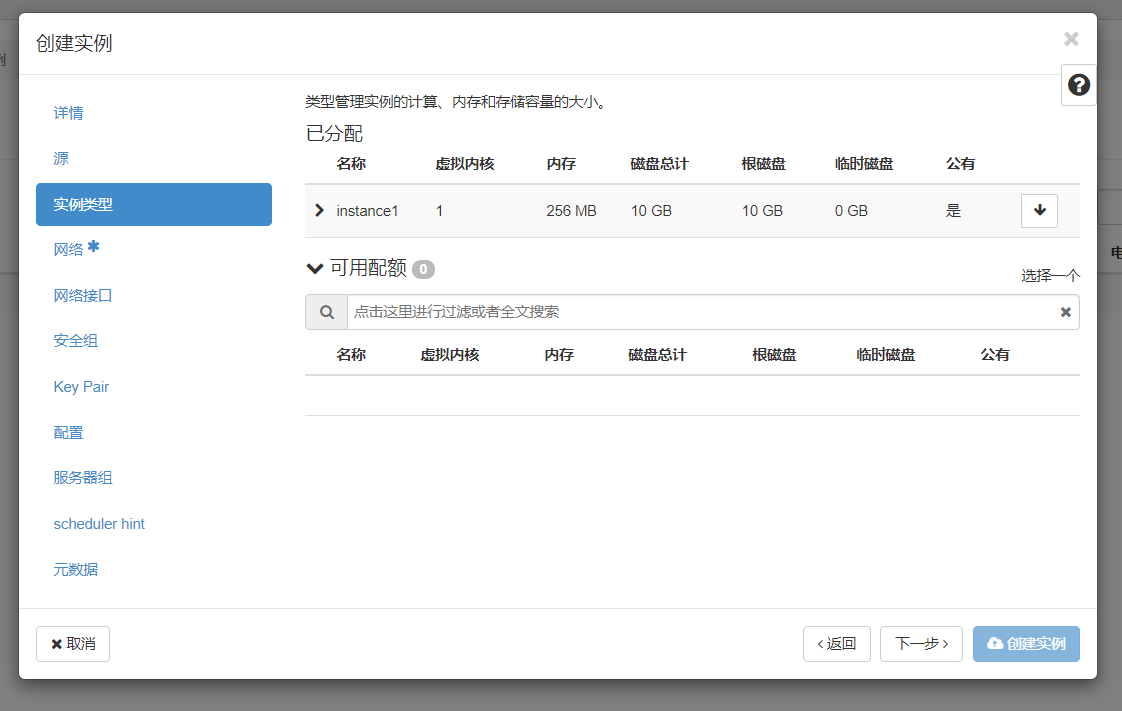

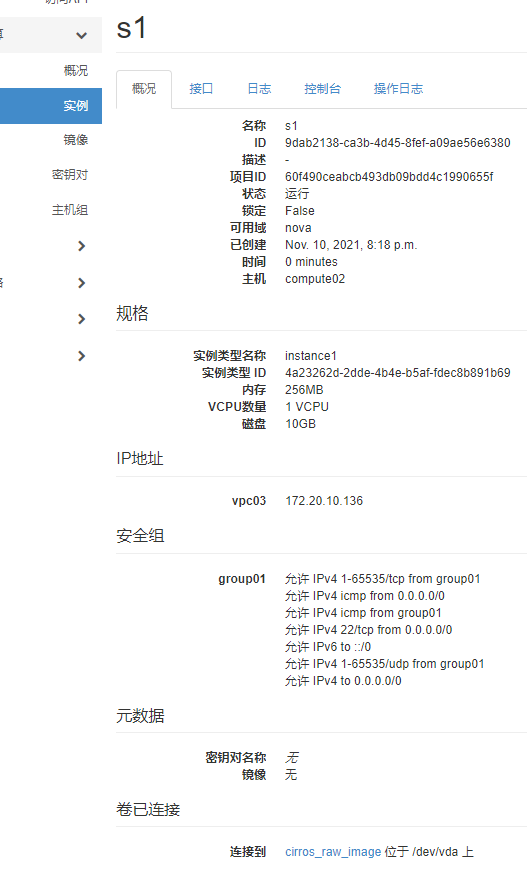

- 十一、创建虚拟网络并启动实例操作

- 十二、Ceph集群部署

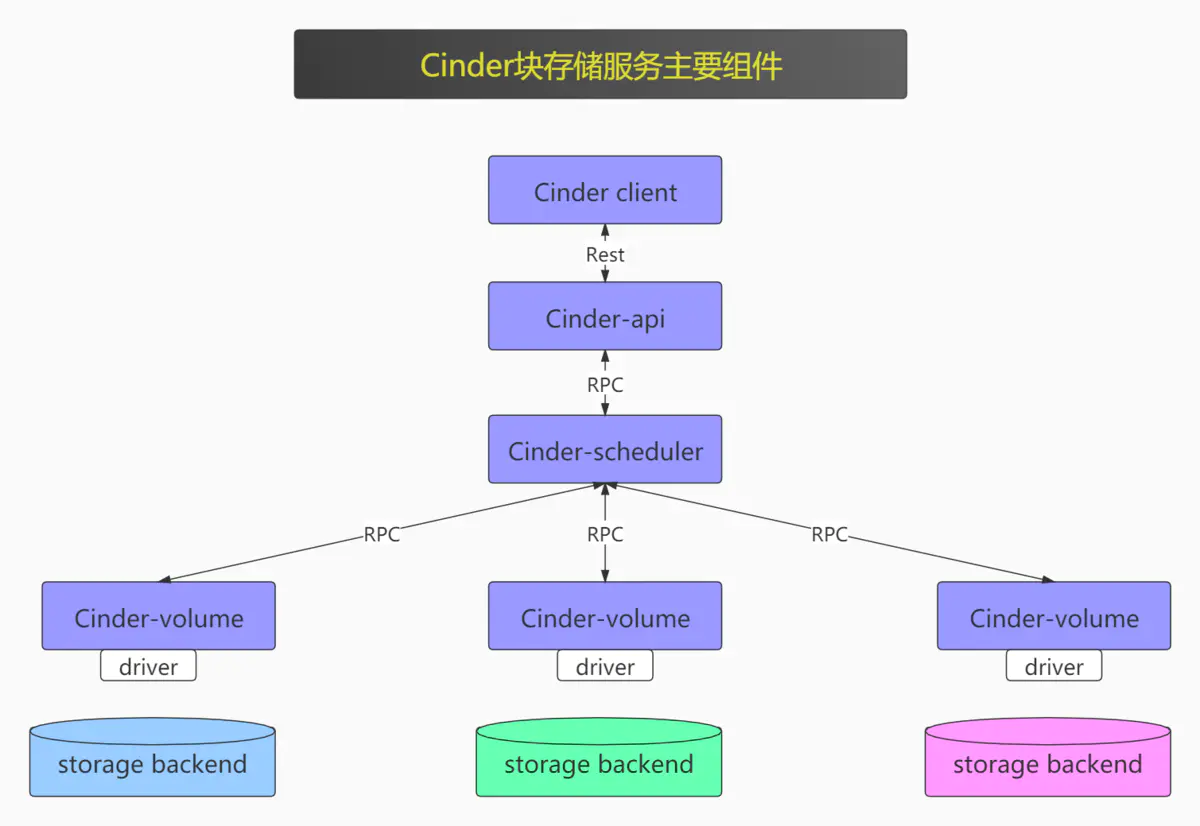

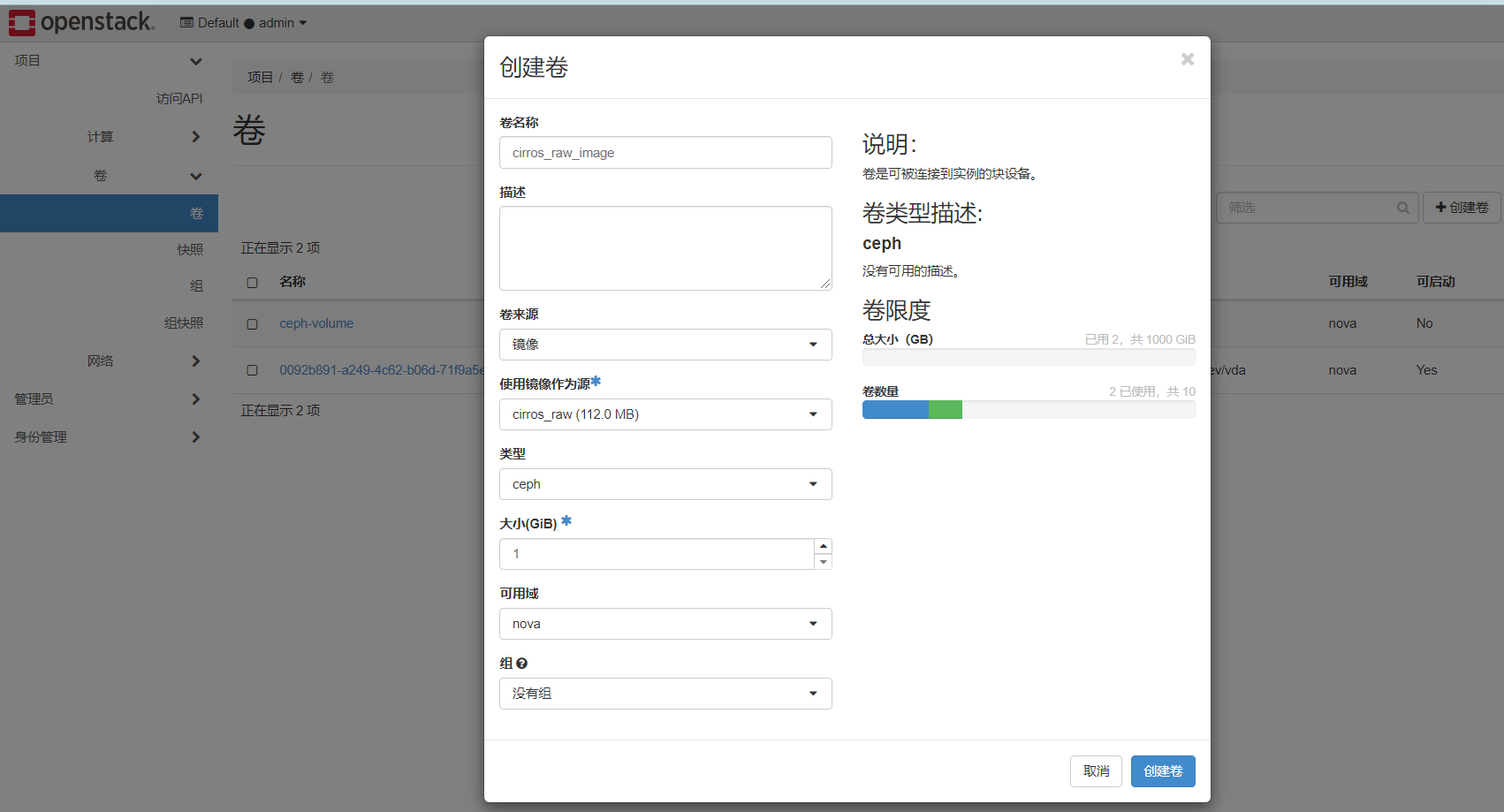

- 十三、Cinder部署

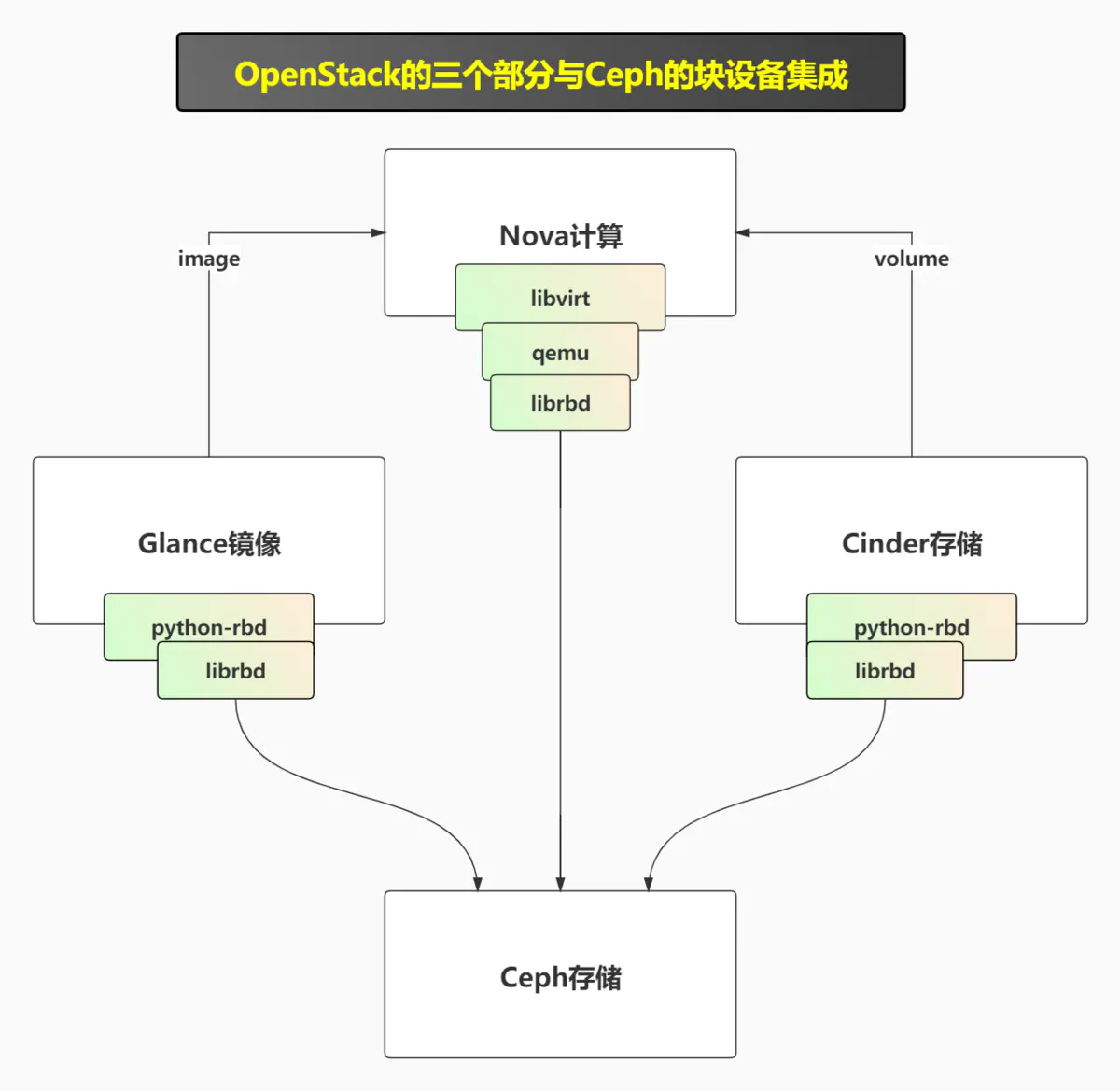

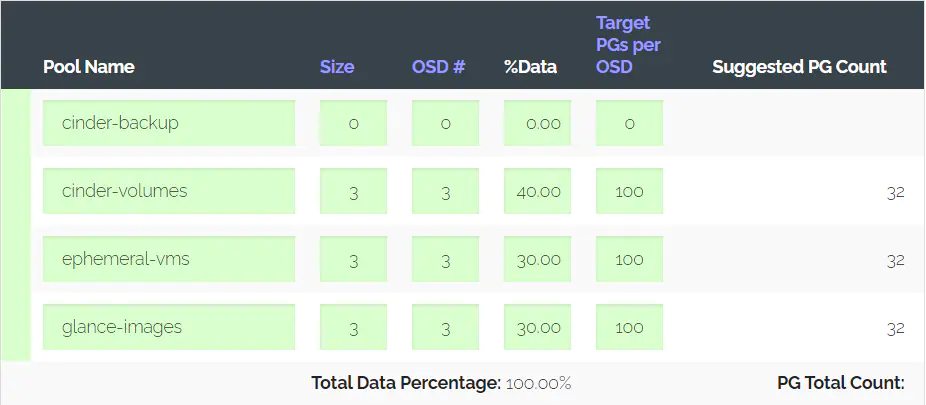

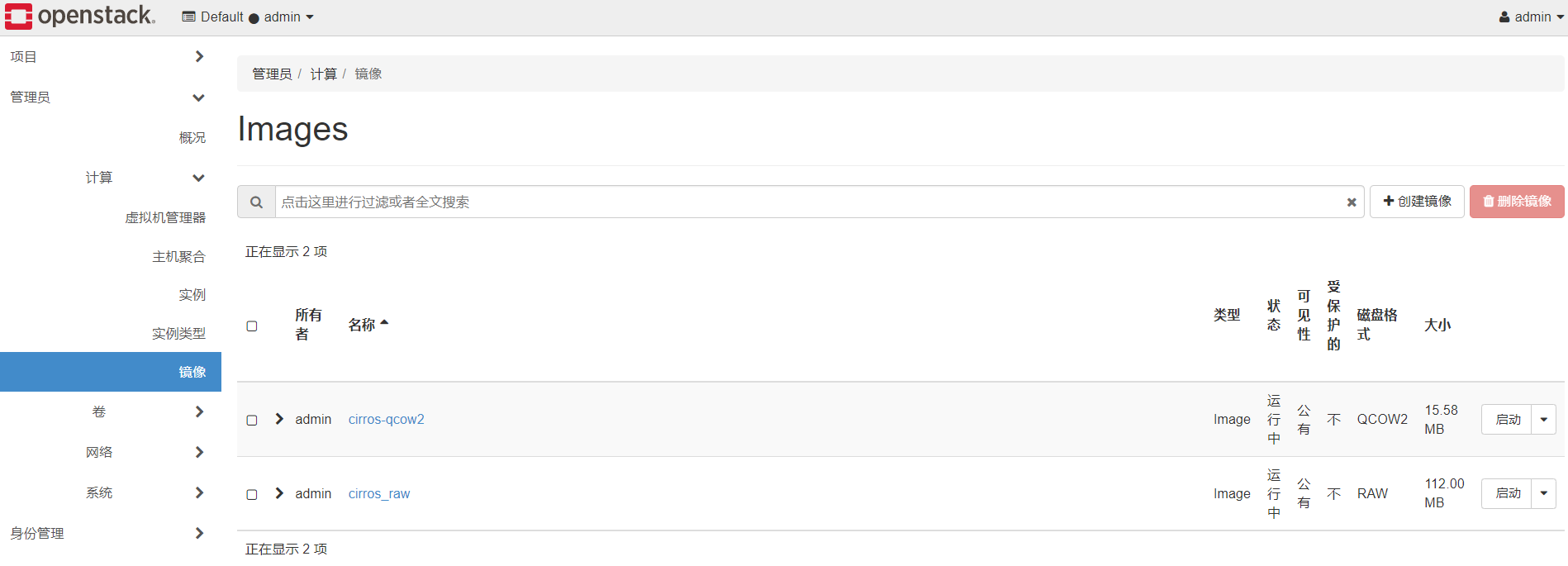

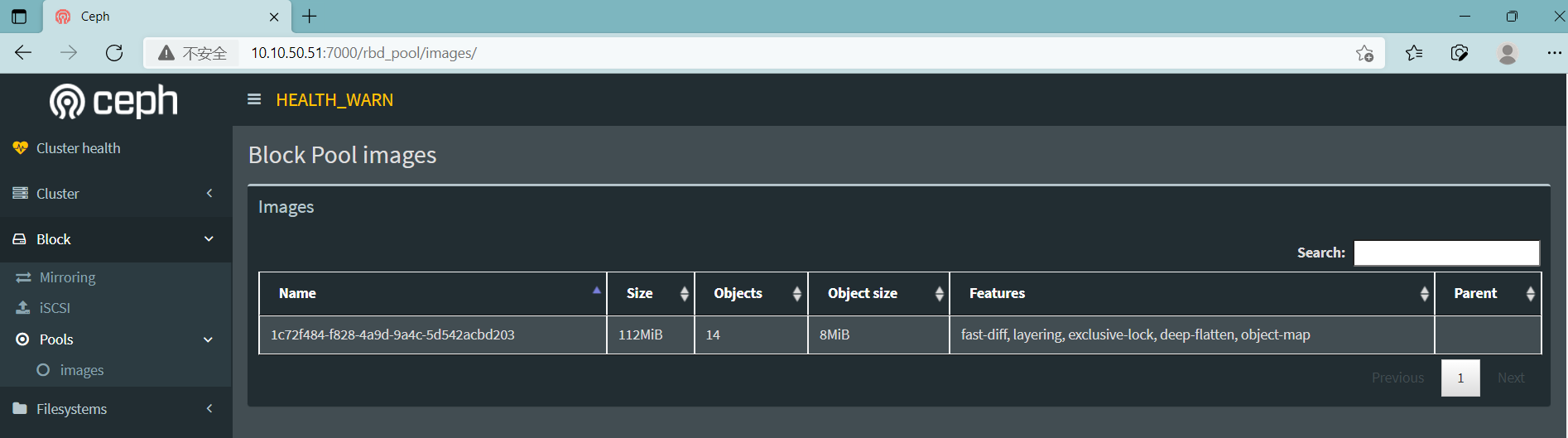

- 十四、对接Ceph存储

- 十五、负载均衡Octavia部署

- 其他组件

- 错误记录

I. Planning

Host planning

# ha-node

10.10.10.21 ha01

10.10.10.22 ha02

# controller-node

10.10.10.31 controller01

10.10.10.32 controller02

10.10.10.33 controller03

# compute-node

10.10.10.41 compute01

10.10.10.42 compute02

# ceph-node

10.10.10.51 ceph01

10.10.10.52 ceph02

10.10.10.53 ceph03

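- ha-node uses haproxy + keepalived for high availability; the VIP is 10.10.10.10

- controller-node hosts the control-plane components plus the Neutron network-node components (server and agents)

- OS: CentOS 7.9, kernel: 5.4.152-1.el7.elrepo.x86_64

System topology

- Controller nodes run keystone, glance, horizon, the management components of nova, neutron and cinder, and the base services OpenStack depends on;

- Compute nodes run nova-compute, neutron-openvswitch-agent (only openvswitch supports distributed routing), cinder-volume, and so on. (Later testing showed that with a shared-storage backend, cinder-volume is better deployed on the controller nodes, where pacemaker can control its run mode; when this document was written, however, cinder-volume in this test environment ran on the compute nodes.)

- Controller + network nodes

- The controller and network roles are deployed on the same machines; they can also be separated (neutron-server on the controller nodes, the neutron agents on the network nodes)

- Management network (red): host OS management, API traffic and so on. If the production environment allows, give each logical network its own physical network, split the API into admin/internal/public endpoints, and expose only the public endpoint to clients;

- External network (blue, External Network): mainly for guest OS access to the internet and for external floating IPs;

- Tenant (VM) tunnel network (coexists with the VLAN network, or pick one of the two) (purple): traffic between guest OSes, using vxlan/gre or similar;

- Tenant (VM) VLAN network (coexists with the tunnel network, or pick one of the two) (yellow, no IP required): traffic between guest OSes, using VLANs (planned here, but not used later when instances are created);

- Storage network (green): communication with the storage cluster; here it lets glance talk to ceph;

- Compute node networks

- Management network (red): host OS management, API traffic and so on;

- External network (blue, External Network): mainly for guest OS access to the internet and for external floating IPs;

- Storage network (green): communication with the storage cluster;

- Tenant (VM) tunnel network (coexists with the VLAN network, or pick one of the two) (purple): traffic between guest OSes, using vxlan/gre or similar;

- Tenant (VM) VLAN network (coexists with the tunnel network, or pick one of the two) (yellow, no IP required): traffic between guest OSes, using VLANs (planned here, but not used later when instances are created);

- Storage nodes

- Management network (red): host OS management, API traffic and so on;

- Storage network (green): communication with external storage clients;

- Storage cluster network (black): internal storage cluster traffic and data replication, with no direct connection to the outside;

- Stateless services such as xxx-api run in active/active mode. Stateful services such as neutron-xxx-agent and cinder-volume are best run in active/passive mode (because haproxy sits in front, successive requests from one client may be forwarded to different controller nodes, and a request that lands on a controller node without the relevant state may fail). Services with their own clustering mechanism, such as rabbitmq and memcached, simply use it;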

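VMware virtual machine network configuration

Virtual network settings

- The external network interface ens34 is attached to VMnet2, so strictly speaking VMnet2 should be the NAT network (VMware allows only one NAT network). However, every host needs yum to install software, so the management network VMnet1 is set to NAT for now; VMnet2 is switched to NAT later, when the external network is tested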

ha node

controller + network node

compute node

ceph node

Overall plan

| host | ip | service | remark |
|---|---|---|---|
| ha01-02 | ens33:10.10.10.21-22 | 1. haproxy 2. keepalived | 1. High availability, vip: 10.10.10.10 |
| controller01-03 | ens33:10.10.10.31-33 ens34:10.10.20.31-33 ens35:10.10.30.31-33 ens36:Vlan Tenant Network ens37:10.10.50.31-33 | 1. keystone 2. glance-api, glance-registry 3. nova-api, nova-conductor, nova-consoleauth, nova-scheduler, nova-novncproxy 4. neutron-api, neutron-openvswitch-agent, neutron-dhcp-agent, neutron-metadata-agent, neutron-l3-agent 5. cinder-api, cinder-scheduler 6. dashboard 7. mariadb, rabbitmq, memcached, etc. | 1. Controller node: keystone, glance, horizon, nova & neutron management components; 2. Network node: VM networking, L2 (virtual switch) / L3 (virtual router), dhcp, route, nat, etc.; 3. OpenStack base services |
| compute01-02 | ens33:10.10.10.41-42 ens34:10.10.20.41-42 ens35:10.10.30.41-42 ens36:Vlan Tenant Network ens37:10.10.50.41-42 | 1. nova-compute 2. neutron-openvswitch-agent, neutron-metadata-agent, neutron-l3-agent 3. cinder-volume (with a shared-storage backend, deploying it on the controller nodes is recommended) | 1. Compute node: hypervisor (kvm); 2. Network node: VM networking, L2 (virtual switch) / L3 (virtual router), etc. |
| ceph01-03 | ens33:10.10.10.51-53 ens34:10.10.50.51-53 ens35:10.10.60.51-53 | 1. ceph-mon, ceph-mgr 2. ceph-osd | 1. Storage node: scheduling and monitoring (ceph) components; 2. Storage node: volume service components |

NIC configuration reference

[root@controller01 ~]# tail /etc/sysconfig/network-scripts/ifcfg-ens*

==> /etc/sysconfig/network-scripts/ifcfg-ens33 <==

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

UUID=7fff7303-8b35-4728-a4f2-f33d20aefdf4

DEVICE=ens33

ONBOOT=yes

IPADDR=10.10.10.31

NETMASK=255.255.255.0

GATEWAY=10.10.10.2

DNS1=10.10.10.2

==> /etc/sysconfig/network-scripts/ifcfg-ens34 <==

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens34

UUID=8f98810c-a504-4d16-979d-4829501a8c7c

DEVICE=ens34

ONBOOT=yes

IPADDR=10.10.20.31

NETMASK=255.255.255.0

==> /etc/sysconfig/network-scripts/ifcfg-ens35 <==

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens35

UUID=ba3ac372-df26-4226-911e-4a48031f80a8

DEVICE=ens35

ONBOOT=yes

IPADDR=10.10.30.31

NETMASK=255.255.255.0

==> /etc/sysconfig/network-scripts/ifcfg-ens36 <==

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens36

UUID=d7ab5617-a38f-4c28-b30a-f49a1cfd0060

DEVICE=ens36

ONBOOT=yes

==> /etc/sysconfig/network-scripts/ifcfg-ens37 <==

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens40

UUID=662b80cb-31f1-386d-b293-c86cfe98d755

ONBOOT=yes

IPADDR=10.10.50.31

NETMASK=255.255.255.0

- Only one of the NICs is configured with the default gateway and DNS

Upgrading the kernel

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

# Install ELRepo

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

# Load the elrepo-kernel metadata

yum --disablerepo=\* --enablerepo=elrepo-kernel repolist

# List the available rpm packages

yum --disablerepo=\* --enablerepo=elrepo-kernel list kernel*

# Install the long-term support kernel

yum --disablerepo=\* --enablerepo=elrepo-kernel install -y kernel-lt.x86_64

# Remove the old tools packages

yum remove kernel-tools-libs.x86_64 kernel-tools.x86_64 -y

# Install the new tools package

yum --disablerepo=\* --enablerepo=elrepo-kernel install -y kernel-lt-tools.x86_64

# Show the boot menu entries in order

awk -F\' '$1=="menuentry " {print $2}' /etc/grub2.cfg

# Boot entries are numbered from 0 and a new kernel is inserted at the top (the new kernel is at position 0, the previous one at 1), so select 0.

grub2-set-default 0

- Kernel after rebooting: 5.4.152-1.el7.elrepo.x86_64

Configuring firewalld, selinux, NTP time synchronization, hostname, and the hosts file

echo "# ha-node

10.10.10.21 ha01

10.10.10.22 ha02

# controller-node

10.10.10.31 controller01

10.10.10.32 controller02

10.10.10.33 controller03

# compute-node

10.10.10.41 compute01

10.10.10.42 compute02

# ceph-node

10.10.10.51 ceph01

10.10.10.52 ceph02

10.10.10.53 ceph03

" >> /etc/hosts

Configuring SSH trust across the cluster

# Generate a key pair

ssh-keygen -t rsa -P ''

# Copy the public key to the local host

ssh-copy-id -i .ssh/id_rsa.pub root@localhost

# Copy everything in the .ssh directory to the other cluster nodes

scp -rp .ssh/ root@ha01:/root

scp -rp .ssh/ root@ha02:/root

scp -rp .ssh/ root@controller01:/root

scp -rp .ssh/ root@controller02:/root

scp -rp .ssh/ root@controller03:/root

scp -rp .ssh/ root@compute01:/root

scp -rp .ssh/ root@compute02:/root

scp -rp .ssh/ root@ceph01:/root

scp -rp .ssh/ root@ceph02:/root

scp -rp .ssh/ root@ceph03:/root

Once this is done, every host in the cluster can log in to every other host without a password
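The ten scp commands above can be collapsed into a loop. This is a minimal sketch, assuming the hostnames from the host plan; the function names `cluster_hosts` and `distribute_ssh_dir` and the `DRY_RUN` variable are hypothetical helpers, not part of the original procedure.

```shell
#!/bin/bash
# Hypothetical helper: print every cluster hostname from the host plan.
cluster_hosts() {
    printf '%s\n' ha01 ha02 \
        controller01 controller02 controller03 \
        compute01 compute02 \
        ceph01 ceph02 ceph03
}

# Copy ~/.ssh to every host; with DRY_RUN=1 only print the commands.
distribute_ssh_dir() {
    local host
    for host in $(cluster_hosts); do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "scp -rp $HOME/.ssh/ root@${host}:/root"
        else
            scp -rp "$HOME/.ssh/" "root@${host}:/root"
        fi
    done
}
```

Running `DRY_RUN=1 distribute_ssh_dir` first shows what would be copied; calling `distribute_ssh_dir` without it performs the copies and prompts for each root password once.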

Speeding up SSH logins

sed -i 's/#UseDNS yes/UseDNS no/g' /etc/ssh/sshd_config

systemctl restart sshd

Kernel parameter tuning

All nodes

echo 'modprobe br_netfilter' >> /etc/rc.d/rc.local

chmod 755 /etc/rc.d/rc.local

modprobe br_netfilter

echo 'net.ipv4.ip_forward = 1' >> /etc/sysctl.conf

echo 'net.bridge.bridge-nf-call-iptables=1' >> /etc/sysctl.conf

echo 'net.bridge.bridge-nf-call-ip6tables=1' >>/etc/sysctl.conf

sysctl -p

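On ha01 and ha02, also add the following. It allows binding to an IP address that is not present locally, so that a running HAProxy instance can bind its ports to the VIP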

echo 'net.ipv4.ip_nonlocal_bind = 1' >> /etc/sysctl.conf

sysctl -p

Installing the base packages

All nodes

yum install epel-release -y

yum install centos-release-openstack-train -y

yum clean all

yum makecache

yum install python-openstackclient -y

- Not required on the ha nodes

openstack-utils makes the installation easier by letting you edit configuration files directly from the command line (all nodes)

mkdir -p /opt/tools

yum install wget crudini -y

wget --no-check-certificate -P /opt/tools https://cbs.centos.org/kojifiles/packages/openstack-utils/2017.1/1.el7/noarch/openstack-utils-2017.1-1.el7.noarch.rpm

rpm -ivh /opt/tools/openstack-utils-2017.1-1.el7.noarch.rpm

- Not required on the ha nodes

二、基础服务

MariaDB集群

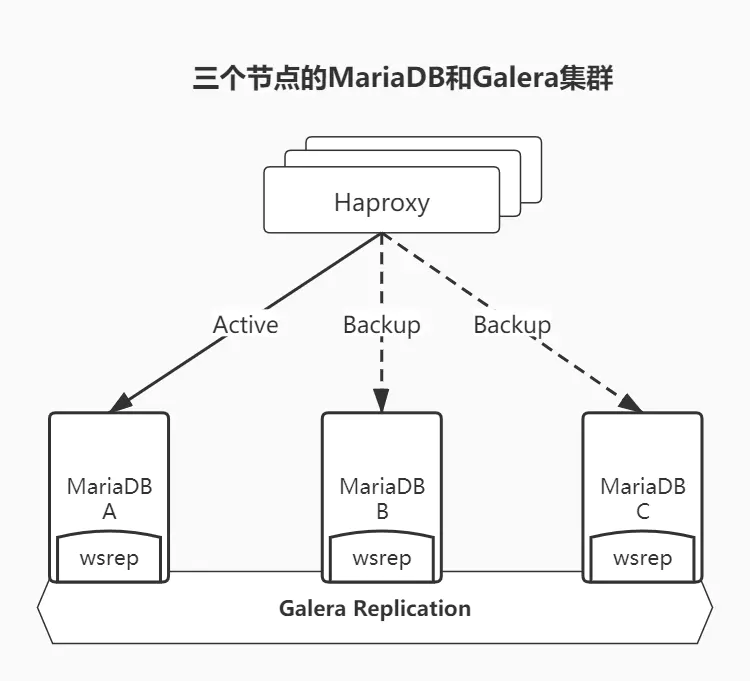

采用MariaDB + Galera组成三个Active节点,外部访问通过Haproxy的active + backend方式代理。平时主库为A,当A出现故障,则切换到B或C节点。目前测试将MariaDB三个节点部署到了控制节点上。

官方推荐:三个节点的MariaDB和Galera集群,建议每个集群具有4个vCPU和8 GB RAM

安装与配置修改

在全部controller节点安装mariadb,以controller01节点为例

yum install mariadb mariadb-server python2-PyMySQL -y

Install the galera plugins on all controller nodes; galera is used to build the cluster

yum install mariadb-server-galera mariadb-galera-common galera xinetd rsync -y

systemctl restart mariadb.service

systemctl enable mariadb.service

Initialize the mariadb root password on all controller nodes (controller01 shown as the example)

[root@controller01 ~]# mysql_secure_installation

# Enter the current root password (none is set, so just press Enter)

Enter current password for root (enter for none):

# Set the root password?

Set root password? [Y/n] y

# New password:

New password:

# Re-enter the new password:

Re-enter new password:

# Remove anonymous users?

Remove anonymous users? [Y/n] y

# Disallow remote root login?

Disallow root login remotely? [Y/n] n

# Remove the test database and access to it?

Remove test database and access to it? [Y/n] y

# Reload the privilege tables now?

Reload privilege tables now? [Y/n] y

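Editing the mariadb configuration file

On all controller nodes, add an openstack.cnf file under /etc/my.cnf.d/. It mainly sets the cluster replication parameters. controller01 is shown as the example; adjust the parameters that contain an IP address or hostname to match the current node

Create and edit /etc/my.cnf.d/openstack.cnf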

[server]

[mysqld]

bind-address = 10.10.10.31

max_connections = 1000

datadir=/var/lib/mysql

socket=/var/lib/mysql/mysql.sock

log-error=/var/log/mariadb/mariadb.log

pid-file=/run/mariadb/mariadb.pid

max_allowed_packet = 500M

net_read_timeout = 120

net_write_timeout = 300

thread_pool_idle_timeout = 300

[galera]

wsrep_on=ON

wsrep_provider=/usr/lib64/galera/libgalera_smm.so

wsrep_cluster_name="mariadb_galera_cluster"

wsrep_cluster_address="gcomm://controller01,controller02,controller03"

wsrep_node_name="controller01"

wsrep_node_address="10.10.10.31"

binlog_format=ROW

default_storage_engine=InnoDB

innodb_autoinc_lock_mode=2

wsrep_slave_threads=4

innodb_flush_log_at_trx_commit=2

innodb_buffer_pool_size=1024M

wsrep_sst_method=rsync

[embedded]

[mariadb]

[mariadb-10.3]
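Only three values in this file differ between nodes: `bind-address`, `wsrep_node_name` and `wsrep_node_address`. A minimal sketch of adjusting them after copying the file from controller01, assuming GNU sed (as shipped with CentOS 7); the function name `set_galera_node` is a hypothetical helper.

```shell
# Hypothetical helper: rewrite the node-specific values in openstack.cnf.
# Usage: set_galera_node <cnf-file> <node-name> <node-ip>
set_galera_node() {
    local cnf="$1" name="$2" ip="$3"
    sed -i \
        -e "s|^bind-address *=.*|bind-address = ${ip}|" \
        -e "s|^wsrep_node_name=.*|wsrep_node_name=\"${name}\"|" \
        -e "s|^wsrep_node_address=.*|wsrep_node_address=\"${ip}\"|" \
        "$cnf"
}
```

On controller02, for example, this would be called as `set_galera_node /etc/my.cnf.d/openstack.cnf controller02 10.10.10.32`.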

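Building the cluster

Stop the mariadb service on all controller nodes (controller01 shown as the example)

systemctl stop mariadb

On controller01, start mariadb as follows to bootstrap a new cluster

/usr/libexec/mysqld --wsrep-new-cluster --user=root &

Join the other controller nodes to the mariadb cluster

systemctl start mariadb.service

systemctl status mariadb.service

- After starting, the node joins the cluster and syncs its data from controller01; check the mariadb log at /var/log/mariadb/mariadb.log

Back on controller01, reconfigure mariadb

# Restart mariadb on controller01, deleting its previous data before starting

pkill -9 mysqld

rm -rf /var/lib/mysql/*

# Mind the file ownership when starting mariadb as a systemd unit

chown mysql:mysql /var/run/mariadb/mariadb.pid

## After starting, check the service status; if all is well, controller01 syncs its data from controller02

systemctl start mariadb.service

systemctl status mariadb.service

Checking the cluster status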

[root@controller01 ~]# mysql -uroot -p123456

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 13

Server version: 10.3.20-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> show status like "wsrep_cluster_size";

+--------------------+-------+

| Variable_name | Value |

+--------------------+-------+

| wsrep_cluster_size | 3 |

+--------------------+-------+

1 row in set (0.001 sec)

MariaDB [(none)]> show status LIKE 'wsrep_ready';

+---------------+-------+

| Variable_name | Value |

+---------------+-------+

| wsrep_ready | ON |

+---------------+-------+

1 row in set (0.000 sec)

MariaDB [(none)]>

Create a database on controller01, then check on the other two nodes that it has been replicated

[root@controller01 ~]# mysql -uroot -p123456

MariaDB [(none)]> create database cluster_test charset utf8mb4;

Query OK, 1 row affected (0.005 sec)

MariaDB [(none)]> show databases;

+--------------------+

| Database |

+--------------------+

| cluster_test |

| information_schema |

| mysql |

| performance_schema |

+--------------------+

Check on the other two nodes

[root@controller02 ~]# mysql -uroot -p123456 -e 'show databases;'

+--------------------+

| Database |

+--------------------+

| cluster_test | √

| information_schema |

| mysql |

| performance_schema |

+--------------------+

[root@controller03 ~]# mysql -uroot -p123456 -e 'show databases;'

+--------------------+

| Database |

+--------------------+

| cluster_test | √

| information_schema |

| mysql |

| performance_schema |

+--------------------+

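Setting up the clustercheck heartbeat

Download the clustercheck script on all controller nodes, then edit it

wget -P /extend/shell/ https://raw.githubusercontent.com/olafz/percona-clustercheck/master/clustercheck

In the database on any one controller node, create the clustercheck user and grant it privileges; the other two nodes replicate it automatically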

mysql -uroot -p123456

GRANT PROCESS ON *.* TO 'clustercheck'@'localhost' IDENTIFIED BY '123456';

flush privileges;

exit;

Edit the clustercheck script on all controller nodes; the username and password must match the account created in the previous step

$ vi /extend/shell/clustercheck

MYSQL_USERNAME="clustercheck"

MYSQL_PASSWORD="123456"

MYSQL_HOST="localhost"

MYSQL_PORT="3306"

...

# Make it executable and copy it to /usr/bin/

$ chmod +x /extend/shell/clustercheck

$ cp /extend/shell/clustercheck /usr/bin/

- The most recently downloaded clustercheck script does not seem to need the MYSQL_HOST and MYSQL_PORT parameters

- /usr/bin/clustercheck for reference

#!/bin/bash

#

# Script to make a proxy (ie HAProxy) capable of monitoring Percona XtraDB Cluster nodes properly

#

# Author: Olaf van Zandwijk <olaf.vanzandwijk@nedap.com>

# Author: Raghavendra Prabhu <raghavendra.prabhu@percona.com>

#

# Documentation and download: https://github.com/olafz/percona-clustercheck

#

# Based on the original script from Unai Rodriguez

#

if [[ $1 == '-h' || $1 == '--help' ]];then

echo "Usage: $0 <user> <pass> <available_when_donor=0|1> <log_file> <available_when_readonly=0|1> <defaults_extra_file>"

exit

fi

# if the disabled file is present, return 503. This allows

# admins to manually remove a node from a cluster easily.

if [ -e "/var/tmp/clustercheck.disabled" ]; then

# Shell return-code is 1

echo -en "HTTP/1.1 503 Service Unavailable\r\n"

echo -en "Content-Type: text/plain\r\n"

echo -en "Connection: close\r\n"

echo -en "Content-Length: 51\r\n"

echo -en "\r\n"

echo -en "Percona XtraDB Cluster Node is manually disabled.\r\n"

sleep 0.1

exit 1

fi

set -e

if [ -f /etc/sysconfig/clustercheck ]; then

. /etc/sysconfig/clustercheck

fi

MYSQL_USERNAME="clustercheck"

MYSQL_PASSWORD="123456"

MYSQL_HOST="localhost"

MYSQL_PORT="3306"

AVAILABLE_WHEN_DONOR=${AVAILABLE_WHEN_DONOR:-0}

ERR_FILE="${ERR_FILE:-/dev/null}"

AVAILABLE_WHEN_READONLY=${AVAILABLE_WHEN_READONLY:-1}

DEFAULTS_EXTRA_FILE=${DEFAULTS_EXTRA_FILE:-/etc/my.cnf}

#Timeout exists for instances where mysqld may be hung

TIMEOUT=10

EXTRA_ARGS=""

if [[ -n "$MYSQL_USERNAME" ]]; then

EXTRA_ARGS="$EXTRA_ARGS --user=${MYSQL_USERNAME}"

fi

if [[ -n "$MYSQL_PASSWORD" ]]; then

EXTRA_ARGS="$EXTRA_ARGS --password=${MYSQL_PASSWORD}"

fi

if [[ -r $DEFAULTS_EXTRA_FILE ]];then

MYSQL_CMDLINE="mysql --defaults-extra-file=$DEFAULTS_EXTRA_FILE -nNE --connect-timeout=$TIMEOUT \

${EXTRA_ARGS}"

else

MYSQL_CMDLINE="mysql -nNE --connect-timeout=$TIMEOUT ${EXTRA_ARGS}"

fi

#

# Perform the query to check the wsrep_local_state

#

WSREP_STATUS=$($MYSQL_CMDLINE -e "SHOW STATUS LIKE 'wsrep_local_state';" \

2>${ERR_FILE} | tail -1 2>>${ERR_FILE})

if [[ "${WSREP_STATUS}" == "4" ]] || [[ "${WSREP_STATUS}" == "2" && ${AVAILABLE_WHEN_DONOR} == 1 ]]

then

# Check only when set to 0 to avoid latency in response.

if [[ $AVAILABLE_WHEN_READONLY -eq 0 ]];then

READ_ONLY=$($MYSQL_CMDLINE -e "SHOW GLOBAL VARIABLES LIKE 'read_only';" \

2>${ERR_FILE} | tail -1 2>>${ERR_FILE})

if [[ "${READ_ONLY}" == "ON" ]];then

# Percona XtraDB Cluster node local state is 'Synced', but it is in

# read-only mode. The variable AVAILABLE_WHEN_READONLY is set to 0.

# => return HTTP 503

# Shell return-code is 1

echo -en "HTTP/1.1 503 Service Unavailable\r\n"

echo -en "Content-Type: text/plain\r\n"

echo -en "Connection: close\r\n"

echo -en "Content-Length: 43\r\n"

echo -en "\r\n"

echo -en "Percona XtraDB Cluster Node is read-only.\r\n"

sleep 0.1

exit 1

fi

fi

# Percona XtraDB Cluster node local state is 'Synced' => return HTTP 200

# Shell return-code is 0

echo -en "HTTP/1.1 200 OK\r\n"

echo -en "Content-Type: text/plain\r\n"

echo -en "Connection: close\r\n"

echo -en "Content-Length: 40\r\n"

echo -en "\r\n"

echo -en "Percona XtraDB Cluster Node is synced.\r\n"

sleep 0.1

exit 0

else

# Percona XtraDB Cluster node local state is not 'Synced' => return HTTP 503

# Shell return-code is 1

echo -en "HTTP/1.1 503 Service Unavailable\r\n"

echo -en "Content-Type: text/plain\r\n"

echo -en "Connection: close\r\n"

echo -en "Content-Length: 44\r\n"

echo -en "\r\n"

echo -en "Percona XtraDB Cluster Node is not synced.\r\n"

sleep 0.1

exit 1

fi

Creating the heartbeat check service

On all controller nodes, add the heartbeat service configuration file /etc/xinetd.d/galera-monitor (controller01 shown as the example)

$ vi /etc/xinetd.d/galera-monitor

# default:on

# description: galera-monitor

service galera-monitor

{

port = 9200

disable = no

socket_type = stream

protocol = tcp

wait = no

user = root

group = root

groups = yes

server = /usr/bin/clustercheck

type = UNLISTED

per_source = UNLIMITED

log_on_success =

log_on_failure = HOST

flags = REUSE

}

Edit /etc/services

...

#wap-wsp 9200/tcp # WAP connectionless session service

galera-monitor 9200/tcp # galera-monitor

...

Starting the xinetd service

# Must be started on all controller nodes

systemctl daemon-reload

systemctl enable xinetd

systemctl start xinetd

systemctl status xinetd

Testing the heartbeat check script

Verify on all controller nodes (controller01 shown as the example)

$ /usr/bin/clustercheck

HTTP/1.1 200 OK

Content-Type: text/plain

Connection: close

Content-Length: 40

Percona XtraDB Cluster Node is synced.
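Because xinetd serves the script on port 9200, the same check can also be made over the network, which is closer to what a proxy health check sees. A minimal sketch, assuming curl is installed and that the xinetd service answers a plain HTTP request; the function name `is_synced_response` is a hypothetical helper.

```shell
# Return 0 when an HTTP response read from stdin starts with a 200 status line.
is_synced_response() {
    head -n 1 | grep -q '^HTTP/1\.[01] 200'
}
```

A possible use is `curl -si http://controller01:9200 | is_synced_response && echo synced`, repeated for controller02 and controller03.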

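Recovery after an abnormal shutdown or power loss

If power is lost suddenly and all galera hosts shut down uncleanly, the galera cluster service will not start normally once the hosts are powered back on. Handle it as follows.

Step 1: start the mariadb service on the bootstrap (primary) host of the galera cluster.

Step 2: start the mariadb service on the member hosts of the galera cluster.

Troubleshooting: what if the mysql service will not start on either the bootstrap host or the member hosts?

# Solution 1:

Step 1: delete the state file /var/lib/mysql/grastate.dat on the galera bootstrap host

Start the service with /bin/galera_new_cluster. It should start normally. Log in and check the wsrep status.

Step 2: delete the state file /var/lib/mysql/grastate.dat on the galera member hosts

Restart the service with systemctl restart mariadb. It should start normally. Log in and check the wsrep status.

# Solution 2:

Step 1: in the state file /var/lib/mysql/grastate.dat on the galera bootstrap host, change the 0 to 1 (presumably the safe_to_bootstrap value)

Start the service with /bin/galera_new_cluster. It should start normally. Log in and check the wsrep status.

Step 2: in the state file /var/lib/mysql/grastate.dat on the galera member hosts, change the 0 to 1

Restart the service with systemctl restart mariadb. It should start normally. Log in and check the wsrep status.

In practice, the following steps were also found to work:

Step 1: in the state file /var/lib/mysql/grastate.dat on the galera bootstrap host, change the 0 to 1

Restart the service with systemctl restart mariadb

Step 2: on the galera member hosts, simply restart the service with systemctl restart mariadb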
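Before editing or deleting grastate.dat, it can help to look at what each node recorded. A minimal sketch, assuming the usual `key: value` layout of grastate.dat with `seqno` and `safe_to_bootstrap` fields; the function name `grastate_summary` is a hypothetical helper. When the seqno values differ, the node with the highest one is generally the safest to bootstrap from.

```shell
# Print "<seqno> <safe_to_bootstrap>" parsed from a grastate.dat file.
# Defaults to the path used in this guide when no argument is given.
grastate_summary() {
    local file="${1:-/var/lib/mysql/grastate.dat}"
    awk -F': *' '
        $1 == "seqno"             { seqno = $2 }
        $1 == "safe_to_bootstrap" { safe = $2 }
        END { print seqno, safe }
    ' "$file"
}
```

Running `grastate_summary` on each controller node gives a quick side-by-side view of the recorded state.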

RabbitMQ集群

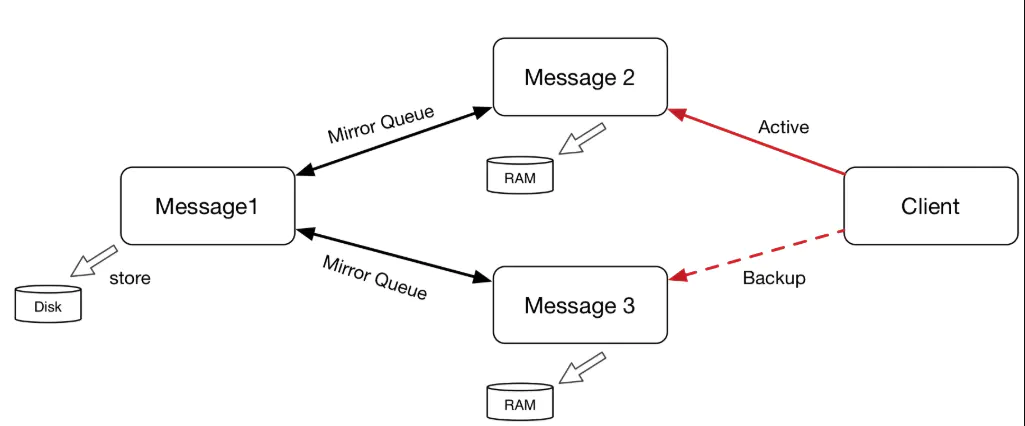

RabbitMQ采用原生Cluster集群,所有节点同步镜像队列。三台物理机,其中2个Mem节点主要提供服务,1个Disk节点用于持久化消息,客户端根据需求分别配置主从策略。

目前测试将RabbitMQ三个节点部署到了控制节点上。

下载相关软件包(所有控制节点)

以controller01节点为例,RabbbitMQ基与erlang开发,首先安装erlang,采用yum方式

yum install erlang rabbitmq-server -y

systemctl enable rabbitmq-server.service

Building the rabbitmq cluster

Start the rabbitmq service first on any one controller node

controller01 is used here

systemctl start rabbitmq-server.service

rabbitmqctl cluster_status

Distribute .erlang.cookie to the other controller nodes

scp -p /var/lib/rabbitmq/.erlang.cookie controller02:/var/lib/rabbitmq/

scp -p /var/lib/rabbitmq/.erlang.cookie controller03:/var/lib/rabbitmq/

Set the owner and group of .erlang.cookie on controller02 and controller03

[root@controller02 ~]# chown rabbitmq:rabbitmq /var/lib/rabbitmq/.erlang.cookie

[root@controller03 ~]# chown rabbitmq:rabbitmq /var/lib/rabbitmq/.erlang.cookie

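- Note: check the permissions of .erlang.cookie on all controller nodes; the default is 400, which can be left unchanged

Start the rabbitmq service on controller02 and controller03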

[root@controller02 ~]# systemctl start rabbitmq-server

[root@controller03 ~]# systemctl start rabbitmq-server

Build the cluster: controller02 and controller03 join as RAM nodes

controller02

rabbitmqctl stop_app

rabbitmqctl join_cluster --ram rabbit@controller01

rabbitmqctl start_app

controller03

rabbitmqctl stop_app

rabbitmqctl join_cluster --ram rabbit@controller01

rabbitmqctl start_app

Check the RabbitMQ cluster status from any controller node

$ rabbitmqctl cluster_status

Cluster status of node rabbit@controller01

[{nodes,[{disc,[rabbit@controller01]},

{ram,[rabbit@controller03,rabbit@controller02]}]},

{running_nodes,[rabbit@controller03,rabbit@controller02,rabbit@controller01]},

{cluster_name,<<"rabbit@controller01">>},

{partitions,[]},

{alarms,[{rabbit@controller03,[]},

{rabbit@controller02,[]},

{rabbit@controller01,[]}]}]

Creating the rabbitmq administrator account

# Create the account and set its password on any node (controller01 shown as the example)

[root@controller01 ~]# rabbitmqctl add_user openstack 123456

Creating user "openstack"

# Set the tags (role) of the new account

[root@controller01 ~]# rabbitmqctl set_user_tags openstack administrator

Setting tags for user "openstack" to [administrator]

# Set the permissions of the new account

[root@controller01 ~]# rabbitmqctl set_permissions -p "/" openstack ".*" ".*" ".*"

Setting permissions for user "openstack" in vhost "/"

# List the accounts

[root@controller01 ~]# rabbitmqctl list_users

Listing users

openstack [administrator]

guest [administrator]

HA for mirrored queues

Enable high availability for mirrored queues

[root@controller01 ~]# rabbitmqctl set_policy ha-all "^" '{"ha-mode":"all"}'

Setting policy "ha-all" for pattern "^" to "{\"ha-mode\":\"all\"}" with priority "0"

Check the mirrored-queue policy from any controller node

[root@controller01 ~]# rabbitmqctl list_policies

Listing policies

/ ha-all all ^ {"ha-mode":"all"} 0

Installing the web management plugin

Install the web management plugin on all controller nodes (controller01 shown as the example)

[root@controller01 ~]# rabbitmq-plugins enable rabbitmq_management

The following plugins have been enabled:

amqp_client

cowlib

cowboy

rabbitmq_web_dispatch

rabbitmq_management_agent

rabbitmq_management

Applying plugin configuration to rabbit@controller01... started 6 plugins.

[root@controller01 ~]# ss -ntlp|grep 5672

LISTEN 0 128 *:25672 *:* users:(("beam",pid=2222,fd=42))

LISTEN 0 1024 *:15672 *:* users:(("beam",pid=2222,fd=54))

LISTEN 0 128 [::]:5672 [::]:* users:(("beam",pid=2222,fd=53))

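- Open any controller node, for example http://10.10.10.31:15672

Memcached cluster

Memcached is stateless. Each controller node runs its own instance, and the OpenStack services simply reference the memcached instances on all controller nodes.

Installing the memcached packages

Install on all controller nodes

yum install memcached python-memcached -y

Configuring memcached

On every node where memcached is installed, set the service to listen on all addresses (0.0.0.0)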

sed -i 's|127.0.0.1,::1|0.0.0.0|g' /etc/sysconfig/memcached

Starting the service

systemctl enable memcached.service

systemctl start memcached.service

systemctl status memcached.service

ss -tnlp|grep memcached

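High availability: haproxy + keepalived

The official OpenStack documentation uses the open-source pacemaker cluster stack as the cluster resource manager for high availability. I have no experience with it and did not want to dig into it, so this guide uses a familiar recipe instead: haproxy + keepalived.

VIP plan: 10.10.10.10

Installing the software

Run on both ha nodes

yum install haproxy keepalived -y

Configuring keepalived

Edit the keepalived configuration /etc/keepalived/keepalived.conf on ha01: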

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

#vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_script chk_haproxy {

script "/data/sh/check_haproxy.sh"

interval 2

weight 2

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.10.10.10

}

track_script {

chk_haproxy

}

}

- Mind the NIC name and the VIP

Edit the keepalived configuration /etc/keepalived/keepalived.conf on ha02:

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

#vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_script chk_haproxy {

script "/data/sh/check_haproxy.sh"

interval 2

weight 2

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 51

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.10.10.10

}

track_script {

chk_haproxy

}

}

Add the haproxy check script on ha01 and ha02:

$ mkdir -p /data/sh/

$ vi /data/sh/check_haproxy.sh

#!/bin/bash

# Check whether the haproxy process is running; stop keepalived if it is not

haproxy_process_count=$(ps aux|grep haproxy|grep -v check_haproxy|grep -v grep|wc -l)

if [[ $haproxy_process_count == 0 ]];then

systemctl stop keepalived

fi

$ chmod 755 /data/sh/check_haproxy.sh

Starting haproxy and keepalived

systemctl enable haproxy

systemctl start haproxy

systemctl status haproxy

systemctl enable keepalived

systemctl start keepalived

systemctl status keepalived

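Once both have started normally, the VIP 10.10.10.10 should be visible on ha01

Testing high availability

Stop haproxy on ha01; if all is well, the VIP moves to ha02

After haproxy and keepalived are started again on ha01, the VIP moves back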
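To see which node currently holds the VIP during this test, the output of `ip` can be filtered on each ha node. A minimal sketch, assuming the interface is ens33 as in the keepalived configuration; the function name `holds_vip` is a hypothetical helper.

```shell
# Return 0 when the given address appears in `ip -4 -o addr show` output on stdin.
# The trailing slash keeps 10.10.10.10 from matching 10.10.10.100.
holds_vip() {
    local vip="$1"
    grep -qF "inet ${vip}/"
}
```

On each ha node this could be run as `ip -4 -o addr show dev ens33 | holds_vip 10.10.10.10 && echo "VIP is on $(hostname)"`.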

Configuring haproxy

Enabling haproxy logging is recommended; it makes later troubleshooting easier

mkdir /var/log/haproxy

chmod a+w /var/log/haproxy

Edit the following entries in the rsyslog configuration

# Uncomment these lines

$ vi /etc/rsyslog.conf

$ModLoad imudp

$UDPServerRun 514

$ModLoad imtcp

$InputTCPServerRun 514

# Append the haproxy log rules at the end of the file

local0.=info -/var/log/haproxy/haproxy-info.log

local0.=err -/var/log/haproxy/haproxy-err.log

local0.notice;local0.!=err -/var/log/haproxy/haproxy-notice.log

# Restart rsyslog

$ systemctl restart rsyslog

The haproxy configuration covers many services; all the OpenStack services involved are configured here in one go

Configure every ha node; the configuration file is /etc/haproxy/haproxy.cfg

global

log 127.0.0.1 local0

chroot /var/lib/haproxy

daemon

group haproxy

user haproxy

maxconn 4000

pidfile /var/run/haproxy.pid

stats socket /var/lib/haproxy/stats

defaults

mode http

log global

maxconn 4000 # maximum number of connections

option httplog

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout check 10s

# haproxy statistics page

listen stats

bind 0.0.0.0:1080

mode http

stats enable

stats uri /

stats realm OpenStack\ Haproxy

stats auth admin:123456

stats refresh 30s

stats show-node

stats show-legends

stats hide-version

# horizon service

listen dashboard_cluster

bind 10.10.10.10:80

balance source

option tcpka

option httpchk

option tcplog

server controller01 10.10.10.31:80 check inter 2000 rise 2 fall 5

server controller02 10.10.10.32:80 check inter 2000 rise 2 fall 5

server controller03 10.10.10.33:80 check inter 2000 rise 2 fall 5

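# mariadb service;

# controller01 is the master and controller02/03 are backups; a one-master, multiple-backup layout avoids data inconsistency;

# The official example health-checks port 9200 (the heartbeat). In testing with mariadb down, the "/usr/bin/clustercheck" script could no longer detect the service, but port 9200 (controlled by xinetd) still responded, so haproxy kept forwarding requests to the node whose mariadb was down; for now the check is switched to port 3306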

listen galera_cluster

bind 10.10.10.10:3306

balance source

mode tcp

server controller01 10.10.10.31:3306 check inter 2000 rise 2 fall 5

server controller02 10.10.10.32:3306 backup check inter 2000 rise 2 fall 5

server controller03 10.10.10.33:3306 backup check inter 2000 rise 2 fall 5

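# Provide an HA access port for the rabbitmq cluster, used by the OpenStack services;

# If the OpenStack services connect to the rabbitmq cluster directly, this rabbitmq load-balancing section can be omitted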

listen rabbitmq_cluster

bind 10.10.10.10:5672

mode tcp

option tcpka

balance roundrobin

timeout client 3h

timeout server 3h

option clitcpka

server controller01 10.10.10.31:5672 check inter 10s rise 2 fall 5

server controller02 10.10.10.32:5672 check inter 10s rise 2 fall 5

server controller03 10.10.10.33:5672 check inter 10s rise 2 fall 5

# glance_api service

listen glance_api_cluster

bind 10.10.10.10:9292

balance source

option tcpka

option httpchk

option tcplog

timeout client 3h

timeout server 3h

server controller01 10.10.10.31:9292 check inter 2000 rise 2 fall 5

server controller02 10.10.10.32:9292 check inter 2000 rise 2 fall 5

server controller03 10.10.10.33:9292 check inter 2000 rise 2 fall 5

# keystone_public_api service

listen keystone_public_cluster

bind 10.10.10.10:5000

balance source

option tcpka

option httpchk

option tcplog

server controller01 10.10.10.31:5000 check inter 2000 rise 2 fall 5

server controller02 10.10.10.32:5000 check inter 2000 rise 2 fall 5

server controller03 10.10.10.33:5000 check inter 2000 rise 2 fall 5

listen nova_compute_api_cluster

bind 10.10.10.10:8774

balance source

option tcpka

option httpchk

option tcplog

server controller01 10.10.10.31:8774 check inter 2000 rise 2 fall 5

server controller02 10.10.10.32:8774 check inter 2000 rise 2 fall 5

server controller03 10.10.10.33:8774 check inter 2000 rise 2 fall 5

listen nova_placement_cluster

bind 10.10.10.10:8778

balance source

option tcpka

option tcplog

server controller01 10.10.10.31:8778 check inter 2000 rise 2 fall 5

server controller02 10.10.10.32:8778 check inter 2000 rise 2 fall 5

server controller03 10.10.10.33:8778 check inter 2000 rise 2 fall 5

listen nova_metadata_api_cluster

bind 10.10.10.10:8775

balance source

option tcpka

option tcplog

server controller01 10.10.10.31:8775 check inter 2000 rise 2 fall 5

server controller02 10.10.10.32:8775 check inter 2000 rise 2 fall 5

server controller03 10.10.10.33:8775 check inter 2000 rise 2 fall 5

listen nova_vncproxy_cluster

bind 10.10.10.10:6080

balance source

option tcpka

option tcplog

server controller01 10.10.10.31:6080 check inter 2000 rise 2 fall 5

server controller02 10.10.10.32:6080 check inter 2000 rise 2 fall 5

server controller03 10.10.10.33:6080 check inter 2000 rise 2 fall 5

listen neutron_api_cluster

bind 10.10.10.10:9696

balance source

option tcpka

option httpchk

option tcplog

server controller01 10.10.10.31:9696 check inter 2000 rise 2 fall 5

server controller02 10.10.10.32:9696 check inter 2000 rise 2 fall 5

server controller03 10.10.10.33:9696 check inter 2000 rise 2 fall 5

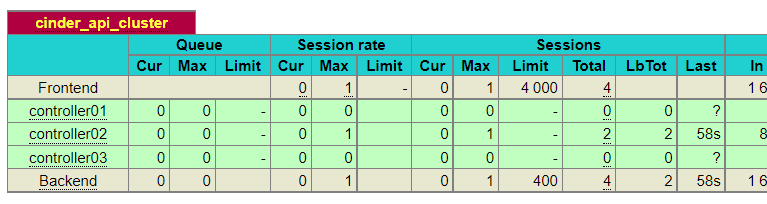

listen cinder_api_cluster

bind 10.10.10.10:8776

balance source

option tcpka

option httpchk

option tcplog

server controller01 10.10.10.31:8776 check inter 2000 rise 2 fall 5

server controller02 10.10.10.32:8776 check inter 2000 rise 2 fall 5

server controller03 10.10.10.33:8776 check inter 2000 rise 2 fall 5

- Set the bind IP to the VIP
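With this many listen sections it is easy to leave one bound to the wrong address. A minimal sketch that lists each section with its bind address, assuming the one-`bind`-per-`listen` layout used in the configuration above; the function name `list_haproxy_binds` is a hypothetical helper.

```shell
# Print "<section> <bind-address>" for every listen block in a haproxy.cfg.
list_haproxy_binds() {
    awk '
        $1 == "listen" { section = $2 }
        $1 == "bind"   { print section, $2 }
    ' "$1"
}
```

For example, `list_haproxy_binds /etc/haproxy/haproxy.cfg | grep -v ' 10.10.10.10:'` should then show only the stats page, which binds to 0.0.0.0 on purpose.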

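Restart haproxy

systemctl restart haproxy

systemctl status haproxy

Open the built-in haproxy web management page:

http://10.10.10.10:1080/ or http://10.10.10.21:1080/ or http://10.10.10.22:1080/

Username admin, password 123456

The status of every entry is clearly visible. Many entries show red, which is expected because those services are not installed yet.

At this point, the base services that OpenStack depends on are essentially deployed.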

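III. Keystone Cluster Deployment

Main functions of Keystone:

- Manage users and their permissions;

- Maintain the endpoints of the OpenStack services;

- Authentication and authorization.

Creating the keystone database

Create the database on any controller node; it is replicated to the others automatically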

mysql -uroot -p123456

create database keystone;

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY '123456';

flush privileges;

exit;

Installing keystone

Install keystone on all controller nodes

wget ftp://ftp.pbone.net/mirror/archive.fedoraproject.org/epel/testing/6.2019-05-29/x86_64/Packages/p/python2-qpid-proton-0.28.0-1.el7.x86_64.rpm

wget ftp://ftp.pbone.net/mirror/vault.centos.org/7.8.2003/messaging/x86_64/qpid-proton/Packages/q/qpid-proton-c-0.28.0-1.el7.x86_64.rpm

rpm -ivh qpid-proton-c-0.28.0-1.el7.x86_64.rpm

rpm -ivh python2-qpid-proton-0.28.0-1.el7.x86_64.rpm

# yum install openstack-keystone httpd python3-mod_wsgi mod_ssl -y # centos 8

yum install openstack-keystone httpd mod_wsgi mod_ssl -y

# Back up the Keystone configuration file

cp /etc/keystone/keystone.conf{,.bak}

egrep -v '^$|^#' /etc/keystone/keystone.conf.bak > /etc/keystone/keystone.conf

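- To use HTTPS access, mod_ssl must be installed

- The bundled python2-qpid-proton is version 0.26, which does not meet the version requirement and must be upgraded

Configuring Keystone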

openstack-config --set /etc/keystone/keystone.conf cache backend oslo_cache.memcache_pool

openstack-config --set /etc/keystone/keystone.conf cache enabled true

openstack-config --set /etc/keystone/keystone.conf cache memcache_servers controller01:11211,controller02:11211,controller03:11211

openstack-config --set /etc/keystone/keystone.conf database connection mysql+pymysql://keystone:123456@10.10.10.10/keystone

openstack-config --set /etc/keystone/keystone.conf token provider fernet

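- The configuration is identical on all three controller nodes

Initializing the keystone database

Run on any one controller node

# Initialize the database as the keystone user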

$ su -s /bin/sh -c "keystone-manage db_sync" keystone

# Verify the database

$ mysql -uroot -p123456 keystone -e "show tables";

+------------------------------------+

| Tables_in_keystone |

+------------------------------------+

| access_rule |

| access_token |

| application_credential |

| application_credential_access_rule |

| application_credential_role |

| assignment |

| config_register |

| consumer |

| credential |

| endpoint |

| endpoint_group |

| federated_user |

| federation_protocol |

| group |

| id_mapping |

| identity_provider |

| idp_remote_ids |

| implied_role |

| limit |

| local_user |

| mapping |

| migrate_version |

| nonlocal_user |

| password |

| policy |

| policy_association |

| project |

| project_endpoint |

| project_endpoint_group |

| project_option |

| project_tag |

| region |

| registered_limit |

| request_token |

| revocation_event |

| role |

| role_option |

| sensitive_config |

| service |

| service_provider |

| system_assignment |

| token |

| trust |

| trust_role |

| user |

| user_group_membership |

| user_option |

| whitelisted_config |

+------------------------------------+

Initialize the Fernet key repositories; the absence of errors means success.

# Generate the keys and their directories under /etc/keystone/

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

# Copy the initialized keys to the other controller nodes

scp -rp /etc/keystone/fernet-keys /etc/keystone/credential-keys controller02:/etc/keystone/

scp -rp /etc/keystone/fernet-keys /etc/keystone/credential-keys controller03:/etc/keystone/

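# After copying, fix the ownership of the key directories on the other two controller nodes (run on controller02 and controller03)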

chown -R keystone:keystone /etc/keystone/credential-keys/

chown -R keystone:keystone /etc/keystone/fernet-keys/
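All Keystone nodes must hold the same Fernet keys, otherwise a token issued by one node cannot be validated by another. As a rough check after the copy, the sketch below prints one digest per key directory so the values can be compared between controllers; the function name `key_dir_digest` is an assumption made for illustration.

```shell
#!/usr/bin/env bash
# key_dir_digest DIR: print a single SHA-256 digest covering every file
# under DIR (hypothetical helper name).
key_dir_digest() {
    local dir="$1"
    # Hash the files in a stable order, with paths relative to DIR,
    # so the result is comparable between hosts.
    ( cd "$dir" && find . -type f -print0 | LC_ALL=C sort -z \
        | xargs -0 sha256sum | sha256sum | cut -d' ' -f1 )
}
```

Running `key_dir_digest /etc/keystone/fernet-keys` and `key_dir_digest /etc/keystone/credential-keys` on each controller should print the same values everywhere; a differing digest suggests the copy has to be repeated.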

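Bootstrap the Identity service

Run on any one controller node. This initializes the admin user and its password, the three API endpoints (admin, internal, public), the service entity, the region, and so on.

Note: the VIP is used here.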

keystone-manage bootstrap --bootstrap-password 123456 \

--bootstrap-admin-url http://10.10.10.10:5000/v3/ \

--bootstrap-internal-url http://10.10.10.10:5000/v3/ \

--bootstrap-public-url http://10.10.10.10:5000/v3/ \

--bootstrap-region-id RegionOne

Configure the HTTP server

Configure this on all controller nodes; controller01 is used as the example.

Configure httpd.conf

# Set ServerName to the host name

cp /etc/httpd/conf/httpd.conf{,.bak}

sed -i "s/#ServerName www.example.com:80/ServerName ${HOSTNAME}/" /etc/httpd/conf/httpd.conf

# Use each node's own IP address

# controller01

sed -i "s/Listen\ 80/Listen\ 10.10.10.31:80/g" /etc/httpd/conf/httpd.conf

# controller02

sed -i "s/Listen\ 80/Listen\ 10.10.10.32:80/g" /etc/httpd/conf/httpd.conf

# controller03

sed -i "s/Listen\ 80/Listen\ 10.10.10.33:80/g" /etc/httpd/conf/httpd.conf

Configure wsgi-keystone.conf

# Create a symbolic link to wsgi-keystone.conf

ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

# Use each node's own IP address

##controller01

sed -i "s/Listen\ 5000/Listen\ 10.10.10.31:5000/g" /etc/httpd/conf.d/wsgi-keystone.conf

sed -i "s#*:5000#10.10.10.31:5000#g" /etc/httpd/conf.d/wsgi-keystone.conf

##controller02

sed -i "s/Listen\ 5000/Listen\ 10.10.10.32:5000/g" /etc/httpd/conf.d/wsgi-keystone.conf

sed -i "s#*:5000#10.10.10.32:5000#g" /etc/httpd/conf.d/wsgi-keystone.conf

##controller03

sed -i "s/Listen\ 5000/Listen\ 10.10.10.33:5000/g" /etc/httpd/conf.d/wsgi-keystone.conf

sed -i "s#*:5000#10.10.10.33:5000#g" /etc/httpd/conf.d/wsgi-keystone.conf
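The per-node sed commands above differ only in the IP address. A minimal sketch of the same edit as a function follows, so that one call per file is enough on each node; the name `bind_listen` and its argument order are illustrative. It assumes GNU sed and a file that contains a bare `Listen PORT` line and `*:PORT` virtual host addresses.

```shell
#!/usr/bin/env bash
# bind_listen CONF IP PORT: make Apache listen on IP:PORT instead of on
# every address (hypothetical helper name).
bind_listen() {
    local conf="$1" ip="$2" port="$3"
    # First expression: "Listen 5000"          -> "Listen IP:5000"
    # Second expression: "<VirtualHost *:5000>" -> "<VirtualHost IP:5000>"
    sed -i \
        -e "s/^Listen ${port}[[:space:]]*\$/Listen ${ip}:${port}/" \
        -e "s/\*:${port}/${ip}:${port}/g" \
        "$conf"
}
```

On controller01 this would be called as `bind_listen /etc/httpd/conf.d/wsgi-keystone.conf 10.10.10.31 5000`. Running it a second time leaves the file unchanged, because neither pattern matches the rewritten lines.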

Start the service

systemctl restart httpd.service

systemctl enable httpd.service

systemctl status httpd.service

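Create the admin environment script

An OpenStack client environment script defines the environment variables that the client uses to call the OpenStack API, so they do not have to be passed on every command line. The official documentation writes the variables for the admin user and the demo tenant into the home directory. A separate script is needed for each user and role, and the scripts are normally created in the user's home directory.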

admin-openrc

$ cat >> ~/admin-openrc << EOF

# admin-openrc

export OS_USERNAME=admin

export OS_PASSWORD=123456

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://10.10.10.10:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

EOF

$ source ~/admin-openrc

# Verify

$ openstack domain list

+---------+---------+---------+--------------------+

| ID | Name | Enabled | Description |

+---------+---------+---------+--------------------+

| default | Default | True | The default domain |

+---------+---------+---------+--------------------+

# The following command can also be used

$ openstack token issue

# Copy to the other controller nodes

scp -rp ~/admin-openrc controller02:~/

scp -rp ~/admin-openrc controller03:~/

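Create a new domain, project, user, and role

The Identity service provides authentication for every OpenStack service, using a combination of domains, projects, users, and roles.

Run on any one controller node.

Create a domain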

$ openstack domain create --description "An Example Domain" example

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | An Example Domain |

| enabled | True |

| id | 4a208138a0004bb1a05d6c61e14f47dc |

| name | example |

| options | {} |

| tags | [] |

+-------------+----------------------------------+

$ openstack domain list

+----------------------------------+---------+---------+--------------------+

| ID | Name | Enabled | Description |

+----------------------------------+---------+---------+--------------------+

| 4a208138a0004bb1a05d6c61e14f47dc | example | True | An Example Domain |

| default | Default | True | The default domain |

+----------------------------------+---------+---------+--------------------+

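Create the demo project

The admin project, role, and user already exist, so a new demo project, user, and role are created here.

The demo project is created in the "default" domain.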

openstack project create --domain default --description "demo Project" demo

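Create the demo user

A password is required for the new user.

--password-prompt asks for it interactively; --password followed by the password sets it non-interactively.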

openstack user create --domain default --password 123456 demo

Create the user role

openstack role create user

List the roles

openstack role list

Assign the user role to the demo user on the demo project

openstack role add --project demo --user demo user

Create the demo environment script

cat >> ~/demo-openrc << EOF

#demo-openrc

export OS_USERNAME=demo

export OS_PASSWORD=123456

export OS_PROJECT_NAME=demo

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://10.10.10.10:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

EOF

source ~/demo-openrc

openstack token issue

# Copy to the other controller nodes

scp -rp ~/demo-openrc controller02:~/

scp -rp ~/demo-openrc controller03:~/
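The admin and demo scripts differ only in user, project, and password. A minimal sketch of a generator follows; the function name `write_openrc` is illustrative, while the domain names and the auth URL are the values used in this guide. It overwrites the file instead of appending, so running it twice does not duplicate lines, and it restricts the file to its owner because the password is stored in clear text. The password is written unquoted, so this sketch assumes it contains no shell metacharacters.

```shell
#!/usr/bin/env bash
# write_openrc FILE USER PROJECT PASSWORD: (re)create an environment
# script for one user (hypothetical helper name).
write_openrc() {
    local file="$1" user="$2" project="$3" password="$4"
    # '>' overwrites the file, unlike '>>', which appends on every run.
    cat > "$file" << EOF
export OS_USERNAME=${user}
export OS_PASSWORD=${password}
export OS_PROJECT_NAME=${project}
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_DOMAIN_NAME=Default
export OS_AUTH_URL=http://10.10.10.10:5000/v3
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
EOF
    # The file holds a clear-text password; restrict it to the owner.
    chmod 600 "$file"
}
```

For the demo user the call would be `write_openrc ~/demo-openrc demo demo 123456`.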

Verify Keystone

On any one controller node, request an authentication token as the admin user, using the admin environment variables.

$ source admin-openrc

$ openstack --os-auth-url http://10.10.10.10:5000/v3 \

--os-project-domain-name Default --os-user-domain-name Default \

--os-project-name admin --os-username admin token issue

On any one controller node, request an authentication token as the demo user, using the demo environment variables.

$ source demo-openrc

$ openstack --os-auth-url http://10.10.10.10:5000/v3 \

--os-project-domain-name Default --os-user-domain-name Default \

--os-project-name demo --os-username demo token issue

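4. Glance Cluster Deployment

Glance provides the following functions:

- Provides a RESTful API that lets users query and retrieve image metadata and the images themselves;

- Supports several image storage back ends, including an ordinary file system, Swift, and Ceph;

- Creates new images by taking snapshots of instances.

Create the glance database

Create the database on any one controller node; it is replicated to the other nodes automatically. controller01 is used as the example.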

mysql -u root -p123456

CREATE DATABASE glance;

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY '123456';

flush privileges;

exit;

Create the glance-api service credentials

Run on any one controller node; controller01 is used as the example.

source ~/admin-openrc

# Create the service project

openstack project create --domain default --description "Service Project" service

# Create the glance user

openstack user create --domain default --password 123456 glance

# Grant the admin role to the glance user on the service project

openstack role add --project service --user glance admin

# Create the glance service entity

openstack service create --name glance --description "OpenStack Image" image

# Create the glance-api endpoints

openstack endpoint create --region RegionOne image public http://10.10.10.10:9292

openstack endpoint create --region RegionOne image internal http://10.10.10.10:9292

openstack endpoint create --region RegionOne image admin http://10.10.10.10:9292

# List the endpoints after creation

openstack endpoint list

Deploy and configure Glance

Install Glance

Install Glance on all controller nodes; controller01 is used as the example.

yum install openstack-glance python-glance python-glanceclient -y

# Back up the Glance configuration file

cp /etc/glance/glance-api.conf{,.bak}

egrep -v '^$|^#' /etc/glance/glance-api.conf.bak >/etc/glance/glance-api.conf

Configure glance-api.conf

Note the bind_host parameter, which must be set to each node's own address; controller01 is used as the example.

openstack-config --set /etc/glance/glance-api.conf DEFAULT bind_host 10.10.10.31

openstack-config --set /etc/glance/glance-api.conf database connection mysql+pymysql://glance:123456@10.10.10.10/glance

openstack-config --set /etc/glance/glance-api.conf glance_store stores file,http

openstack-config --set /etc/glance/glance-api.conf glance_store default_store file

openstack-config --set /etc/glance/glance-api.conf glance_store filesystem_store_datadir /var/lib/glance/images/

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken www_authenticate_uri http://10.10.10.10:5000

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_url http://10.10.10.10:5000

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken memcached_servers controller01:11211,controller02:11211,controller03:11211

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_type password

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken project_domain_name Default

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken user_domain_name Default

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken project_name service

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken username glance

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken password 123456

openstack-config --set /etc/glance/glance-api.conf paste_deploy flavor keystone

Create the image storage directory and set its ownership

/var/lib/glance/images is the default storage directory; create it on all controller nodes.

mkdir -p /var/lib/glance/images/

chown glance:nobody /var/lib/glance/images

Initialize the glance database

Run on any one controller node.

su -s /bin/sh -c "glance-manage db_sync" glance

Verify that the glance database was populated

$ mysql -uglance -p123456 -e "use glance;show tables;"

+----------------------------------+

| Tables_in_glance |

+----------------------------------+

| alembic_version |

| image_locations |

| image_members |

| image_properties |

| image_tags |

| images |

| metadef_namespace_resource_types |

| metadef_namespaces |

| metadef_objects |

| metadef_properties |

| metadef_resource_types |

| metadef_tags |

| migrate_version |

| task_info |

| tasks |

+----------------------------------+

Start the service

Do this on all controller nodes.

systemctl enable openstack-glance-api.service

systemctl restart openstack-glance-api.service

systemctl status openstack-glance-api.service

sleep 3s

lsof -i:9292

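Download the cirros image to verify the Glance service

On any one controller node, download the cirros image, upload it with disk format qcow2 and container format bare, and make it public.

Once the image has been created, a file named after the image ID appears in the configured storage directory.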

$ source ~/admin-openrc

$ wget -c http://download.cirros-cloud.net/0.5.2/cirros-0.5.2-x86_64-disk.img

$ openstack image create --file ~/cirros-0.5.2-x86_64-disk.img --disk-format qcow2 --container-format bare --public cirros-qcow2

$ openstack image list

+--------------------------------------+--------------+--------+

| ID | Name | Status |

+--------------------------------------+--------------+--------+

| 1c66cd7e-b6d9-4e70-a3d4-f73b27a84230 | cirros-qcow2 | active |

+--------------------------------------+--------------+--------+

Check the image files

[root@controller01 ~]# ls -l /var/lib/glance/images/

total 0

[root@controller02 ~]# ls -l /var/lib/glance/images/

total 15956

-rw-r----- 1 glance glance 16338944 Nov 4 17:25 1c66cd7e-b6d9-4e70-a3d4-f73b27a84230

[root@controller03 ~]# ls -l /var/lib/glance/images/

total 0

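# On controller02, which holds the image file, copy it to the other controller nodes

scp -pr /var/lib/glance/images/* controller01:/var/lib/glance/images/

scp -pr /var/lib/glance/images/* controller03:/var/lib/glance/images/

# On controller01 and controller03, fix the ownership of the copied files

chown -R glance:glance /var/lib/glance/images/*

As the listing shows, only one Glance node holds the image after the upload. A request that lands on a node without the file cannot find the image, which is why the file is copied by hand here. Production deployments normally use shared storage instead, such as NFS, Swift, or Ceph; that setup is covered later.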

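5. Placement Service Deployment

Placement provides the following functions:

- Tracks and filters resources through HTTP requests

- Stores its data in a local database

- Offers rich resource management and filtering policies

Create the Placement database

Create the database on any one controller node.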

mysql -u root -p123456

CREATE DATABASE placement;

GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY '123456';

flush privileges;

exit;

Create placement-api

Run on any one controller node.

Create the Placement service user

openstack user create --domain default --password=123456 placement

Add the placement user to the service project with the admin role

openstack role add --project service --user placement admin

Create the Placement API service entity

openstack service create --name placement --description "Placement API" placement

Create the Placement API service endpoints

openstack endpoint create --region RegionOne placement public http://10.10.10.10:8778

openstack endpoint create --region RegionOne placement internal http://10.10.10.10:8778

openstack endpoint create --region RegionOne placement admin http://10.10.10.10:8778

- The VIP is used.

Install the Placement package

Do this on all controller nodes.

yum install openstack-placement-api -y

Edit the configuration file

Do this on all controller nodes.

# Back up the Placement configuration

cp /etc/placement/placement.conf /etc/placement/placement.conf.bak

grep -Ev '^$|^#' /etc/placement/placement.conf.bak > /etc/placement/placement.conf

openstack-config --set /etc/placement/placement.conf placement_database connection mysql+pymysql://placement:123456@10.10.10.10/placement

openstack-config --set /etc/placement/placement.conf api auth_strategy keystone

openstack-config --set /etc/placement/placement.conf keystone_authtoken auth_url http://10.10.10.10:5000/v3

openstack-config --set /etc/placement/placement.conf keystone_authtoken memcached_servers controller01:11211,controller02:11211,controller03:11211

openstack-config --set /etc/placement/placement.conf keystone_authtoken auth_type password

openstack-config --set /etc/placement/placement.conf keystone_authtoken project_domain_name Default

openstack-config --set /etc/placement/placement.conf keystone_authtoken user_domain_name Default

openstack-config --set /etc/placement/placement.conf keystone_authtoken project_name service

openstack-config --set /etc/placement/placement.conf keystone_authtoken username placement

openstack-config --set /etc/placement/placement.conf keystone_authtoken password 123456

Initialize the placement database

Run on any one controller node.

su -s /bin/sh -c "placement-manage db sync" placement

mysql -uroot -p123456 placement -e " show tables;"

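Configure 00-placement-api.conf

Modify the Apache configuration file for Placement

Do this on all controller nodes; controller01 is used as the example. Adjust the listen address for each node. The official documentation does not mention this step, but without the change the compute service check reports an error.

# Back up the 00-placement-api configuration

# On controller01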

cp /etc/httpd/conf.d/00-placement-api.conf{,.bak}

sed -i "s/Listen\ 8778/Listen\ 10.10.10.31:8778/g" /etc/httpd/conf.d/00-placement-api.conf

sed -i "s/*:8778/10.10.10.31:8778/g" /etc/httpd/conf.d/00-placement-api.conf

# On controller02

cp /etc/httpd/conf.d/00-placement-api.conf{,.bak}

sed -i "s/Listen\ 8778/Listen\ 10.10.10.32:8778/g" /etc/httpd/conf.d/00-placement-api.conf

sed -i "s/*:8778/10.10.10.32:8778/g" /etc/httpd/conf.d/00-placement-api.conf

# On controller03

cp /etc/httpd/conf.d/00-placement-api.conf{,.bak}

sed -i "s/Listen\ 8778/Listen\ 10.10.10.33:8778/g" /etc/httpd/conf.d/00-placement-api.conf

sed -i "s/*:8778/10.10.10.33:8778/g" /etc/httpd/conf.d/00-placement-api.conf

Enable access to the Placement API

Do this on all controller nodes.

$ vi /etc/httpd/conf.d/00-placement-api.conf   # around line 15 (15gg in vi)

...

#SSLCertificateKeyFile

#SSLCertificateKeyFile ...

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

...

Restart the Apache service

Do this on all controller nodes; restarting httpd brings up the placement-api listening port.

systemctl restart httpd.service

ss -tnlp|grep 8778

lsof -i:8778

# Check with curl that the address returns JSON

$ curl http://10.10.10.10:8778

{"versions": [{"id": "v1.0", "max_version": "1.36", "min_version": "1.0", "status": "CURRENT", "links": [{"rel": "self", "href": ""}]}]}
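The curl check above goes through the VIP, so it only shows that at least one backend answers. A rough sketch for checking each controller separately follows; it extracts `max_version` from the version document with plain text matching. The function name `placement_max_version` is illustrative, and this is not a real JSON parser.

```shell
#!/usr/bin/env bash
# placement_max_version: read a Placement version document on stdin and
# print its max_version field (hypothetical helper; text matching only).
placement_max_version() {
    sed -n 's/.*"max_version": *"\([^"]*\)".*/\1/p'
}
```

For example, `curl -s http://10.10.10.31:8778 | placement_max_version` could be repeated for 10.10.10.32 and 10.10.10.33; an empty result suggests that the backend did not return the version document.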

Check the health of Placement

$ placement-status upgrade check

+----------------------------------+

| Upgrade Check Results |

+----------------------------------+

| Check: Missing Root Provider IDs |

| Result: Success |

| Details: None |

+----------------------------------+

| Check: Incomplete Consumers |

| Result: Success |

| Details: None |

+----------------------------------+

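6. Nova Controller Node Cluster Deployment

Nova provides the following functions:

- Instance lifecycle management

- Management of compute resources

- Network and authentication management

- A REST-style API

- Asynchronous, eventually consistent communication

- Hypervisor agnostic: supports Xen, XenServer/XCP, KVM, UML, VMware vSphere, and Hyper-V

Create the Nova databases

Create the databases on any one controller node.

# Create the nova_api, nova, and nova_cell0 databases and grant privileges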

mysql -uroot -p123456

CREATE DATABASE nova_api;

CREATE DATABASE nova;

CREATE DATABASE nova_cell0;

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY '123456';

flush privileges;

exit;
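The same CREATE and GRANT pattern repeats for every service database in this guide. A minimal sketch that generates the statements follows; the function name `service_db_sql` is illustrative, and `IF NOT EXISTS` is an addition that is not in the statements above, so that a repeated run does not fail on an existing database.

```shell
#!/usr/bin/env bash
# service_db_sql DB USER PASSWORD: print the SQL that creates one service
# database and grants access to it (hypothetical helper name).
service_db_sql() {
    local db="$1" user="$2" password="$3"
    # IF NOT EXISTS is an assumption added for repeatability.
    printf 'CREATE DATABASE IF NOT EXISTS %s;\n' "$db"
    printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'localhost' IDENTIFIED BY '%s';\n" \
        "$db" "$user" "$password"
    # '%%' prints a literal '%', the wildcard host.
    printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%%' IDENTIFIED BY '%s';\n" \
        "$db" "$user" "$password"
}
```

The output can be reviewed first and then piped into the client, for example `for db in nova_api nova nova_cell0; do service_db_sql "$db" nova 123456; done | mysql -uroot -p123456`.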

Create the Nova service credentials

Run on any one controller node.

Create the nova user

source ~/admin-openrc

openstack user create --domain default --password 123456 nova

Grant the admin role to the nova user

openstack role add --project service --user nova admin

Create the nova service entity

openstack service create --name nova --description "OpenStack Compute" compute

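Create the Compute API service endpoints

All API addresses use the VIP; if public, internal, and admin are designed to use different VIPs, take care to distinguish them.

--region must match the region created when the admin user was bootstrapped.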

openstack endpoint create --region RegionOne compute public http://10.10.10.10:8774/v2.1

openstack endpoint create --region RegionOne compute internal http://10.10.10.10:8774/v2.1

openstack endpoint create --region RegionOne compute admin http://10.10.10.10:8774/v2.1
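Each service in this guide registers the same URL for the public, internal, and admin interfaces. A small sketch that prints the three commands for review follows; the function name `endpoint_cmds` is illustrative. It only prints text and does not call the OpenStack client itself.

```shell
#!/usr/bin/env bash
# endpoint_cmds REGION SERVICE_TYPE URL: print one "openstack endpoint
# create" command per interface (hypothetical helper name).
endpoint_cmds() {
    local region="$1" service="$2" url="$3" iface
    for iface in public internal admin; do
        printf 'openstack endpoint create --region %s %s %s %s\n' \
            "$region" "$service" "$iface" "$url"
    done
}
```

`endpoint_cmds RegionOne compute http://10.10.10.10:8774/v2.1` prints the three commands shown above; once checked, the output can be piped to `sh` in a shell where admin-openrc has been sourced. If the interfaces later use different VIPs, the URL has to be chosen per interface instead.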

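Install the Nova packages

Install the Nova services on all controller nodes; controller01 is used as the example.

- nova-api (the main Nova service)

- nova-scheduler (the Nova scheduling service)

- nova-conductor (the Nova database service, which mediates database access)

- nova-novncproxy (the Nova VNC service, which provides instance consoles)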

yum install openstack-nova-api openstack-nova-conductor openstack-nova-novncproxy openstack-nova-scheduler -y

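Deploy and configure

Configure the Nova services on all controller nodes; controller01 is used as the example.

Note the my_ip parameter, which must be changed for each node; also note the ownership of nova.conf: root:nova.

# Back up the configuration file /etc/nova/nova.conf

cp -a /etc/nova/nova.conf{,.bak}

grep -Ev '^$|^#' /etc/nova/nova.conf.bak > /etc/nova/nova.conf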

openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata

openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 10.10.10.31

openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron true

openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

# Do not go through the HAProxy front end for RabbitMQ for now; connect to the RabbitMQ cluster directly

#openstack-config --set /etc/nova/nova.conf DEFAULT transport_url rabbit://openstack:123456@10.10.10.10:5672

openstack-config --set /etc/nova/nova.conf DEFAULT transport_url rabbit://openstack:123456@controller01:5672,openstack:123456@controller02:5672,openstack:123456@controller03:5672

# Discover Nova compute nodes automatically

openstack-config --set /etc/nova/nova.conf scheduler discover_hosts_in_cells_interval 600

openstack-config --set /etc/nova/nova.conf DEFAULT osapi_compute_listen_port 8774

openstack-config --set /etc/nova/nova.conf DEFAULT metadata_listen_port 8775

openstack-config --set /etc/nova/nova.conf DEFAULT metadata_listen '$my_ip'

openstack-config --set /etc/nova/nova.conf DEFAULT osapi_compute_listen '$my_ip'

openstack-config --set /etc/nova/nova.conf api auth_strategy keystone

openstack-config --set /etc/nova/nova.conf api_database connection mysql+pymysql://nova:123456@10.10.10.10/nova_api

openstack-config --set /etc/nova/nova.conf cache backend oslo_cache.memcache_pool

openstack-config --set /etc/nova/nova.conf cache enabled True

openstack-config --set /etc/nova/nova.conf cache memcache_servers controller01:11211,controller02:11211,controller03:11211

openstack-config --set /etc/nova/nova.conf database connection mysql+pymysql://nova:123456@10.10.10.10/nova

openstack-config --set /etc/nova/nova.conf keystone_authtoken www_authenticate_uri http://10.10.10.10:5000/v3

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://10.10.10.10:5000/v3

openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers controller01:11211,controller02:11211,controller03:11211

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name Default

openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name Default

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service

openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova

openstack-config --set /etc/nova/nova.conf keystone_authtoken password 123456

openstack-config --set /etc/nova/nova.conf vnc enabled true

openstack-config --set /etc/nova/nova.conf vnc server_listen '$my_ip'

openstack-config --set /etc/nova/nova.conf vnc server_proxyclient_address '$my_ip'

openstack-config --set /etc/nova/nova.conf vnc novncproxy_host '$my_ip'

openstack-config --set /etc/nova/nova.conf vnc novncproxy_port 6080

openstack-config --set /etc/nova/nova.conf glance api_servers http://10.10.10.10:9292

openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp

openstack-config --set /etc/nova/nova.conf placement region_name RegionOne

openstack-config --set /etc/nova/nova.conf placement project_domain_name Default

openstack-config --set /etc/nova/nova.conf placement project_name service

openstack-config --set /etc/nova/nova.conf placement auth_type password

openstack-config --set /etc/nova/nova.conf placement user_domain_name Default

openstack-config --set /etc/nova/nova.conf placement auth_url http://10.10.10.10:5000/v3

openstack-config --set /etc/nova/nova.conf placement username placement

openstack-config --set /etc/nova/nova.conf placement password 123456

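Note:

When the services reach RabbitMQ through the HAProxy front end, connections time out and reconnect; this can be seen in the logs of each service and of RabbitMQ:

transport_url=rabbit://openstack:123456@10.10.10.10:5672

RabbitMQ has its own clustering mechanism, and the official documentation recommends connecting to the RabbitMQ cluster directly. With that approach the services sometimes report errors at startup, for reasons that are unclear. If that does not happen, connect the services directly to the cluster rather than through the HAProxy VIP and port: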

openstack-config --set /etc/nova/nova.conf DEFAULT transport_url rabbit://openstack:123456@controller01:5672,openstack:123456@controller02:5672,openstack:123456@controller03:5672
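The direct-cluster URL is a comma-separated list with one `user:password@host:port` entry per RabbitMQ node. A minimal sketch that assembles it from a host list follows, which helps when controllers are added or renamed; the function name `build_transport_url` and its argument order are illustrative.

```shell
#!/usr/bin/env bash
# build_transport_url USER PASSWORD PORT HOST...: print a transport_url
# that lists every RabbitMQ node (hypothetical helper name).
build_transport_url() {
    local user="$1" pass="$2" port="$3"
    shift 3
    local url="rabbit://" sep="" host
    for host in "$@"; do
        url="${url}${sep}${user}:${pass}@${host}:${port}"
        sep=","
    done
    printf '%s\n' "$url"
}
```

With the values from this guide, `build_transport_url openstack 123456 5672 controller01 controller02 controller03` prints the URL used in the command above, and the result can be passed to `openstack-config --set` in its place.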

Initialize the Nova databases and verify them

Run on any one controller node.

# Initialize the nova-api database (no output)

su -s /bin/sh -c "nova-manage api_db sync" nova

# Register the cell0 database (no output)

su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

# Create the cell1 cell

su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

# Initialize the nova database

su -s /bin/sh -c "nova-manage db sync" nova

Verify that cell0 and cell1 are registered correctly

$ su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

+-------+--------------------------------------+-------------------------------------------+--------------------------------------------------+----------+

| Name | UUID | Transport URL | Database Connection | Disabled |

+-------+--------------------------------------+-------------------------------------------+--------------------------------------------------+----------+

| cell0 | 00000000-0000-0000-0000-000000000000 | none:/ | mysql+pymysql://nova:****@10.10.10.10/nova_cell0 | False |

| cell1 | 3e74f43a-74db-4eba-85ee-c8330f906b1b | rabbit://openstack:****@controller03:5672 | mysql+pymysql://nova:****@10.10.10.10/nova | False |

+-------+--------------------------------------+-------------------------------------------+--------------------------------------------------+----------+

Verify that the Nova databases were populated

mysql -unova -p123456 -e "use nova_api;show tables;"

mysql -unova -p123456 -e "use nova;show tables;"

mysql -unova -p123456 -e "use nova_cell0;show tables;"

Start the Nova services

Do this on all controller nodes; controller01 is used as the example.

systemctl enable openstack-nova-api.service

systemctl enable openstack-nova-scheduler.service

systemctl enable openstack-nova-conductor.service

systemctl enable openstack-nova-novncproxy.service

systemctl restart openstack-nova-api.service

systemctl restart openstack-nova-scheduler.service

systemctl restart openstack-nova-conductor.service

systemctl restart openstack-nova-novncproxy.service

systemctl status openstack-nova-api.service

systemctl status openstack-nova-scheduler.service

systemctl status openstack-nova-conductor.service

systemctl status openstack-nova-novncproxy.service

ss -tlnp | egrep '8774|8775|8778|6080'

curl http://10.10.10.10:8774

Verify

List the control service components and check their state.

$ source ~/admin-openrc

$ openstack compute service list

+----+----------------+--------------+----------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+----------------+--------------+----------+---------+-------+----------------------------+

| 21 | nova-scheduler | controller01 | internal | enabled | up | 2021-11-04T10:24:01.000000 |

| 24 | nova-conductor | controller01 | internal | enabled | up | 2021-11-04T10:24:05.000000 |

| 27 | nova-scheduler | controller02 | internal | enabled | up | 2021-11-04T10:24:13.000000 |

| 30 | nova-scheduler | controller03 | internal | enabled | up | 2021-11-04T10:24:05.000000 |

| 33 | nova-conductor | controller02 | internal | enabled | up | 2021-11-04T10:24:07.000000 |

| 36 | nova-conductor | controller03 | internal | enabled | up | 2021-11-04T10:24:10.000000 |

+----+----------------+--------------+----------+---------+-------+----------------------------+

Show the API endpoints

$ openstack catalog list

+-----------+-----------+------------------------------------------+

| Name | Type | Endpoints |

+-----------+-----------+------------------------------------------+

| placement | placement | RegionOne |

| | | admin: http://10.10.10.10:8778 |

| | | RegionOne |

| | | internal: http://10.10.10.10:8778 |

| | | RegionOne |

| | | public: http://10.10.10.10:8778 |

| | | |

| glance | image | RegionOne |

| | | public: http://10.10.10.10:9292 |

| | | RegionOne |

| | | internal: http://10.10.10.10:9292 |

| | | RegionOne |

| | | admin: http://10.10.10.10:9292 |

| | | |

| keystone | identity | RegionOne |

| | | internal: http://10.10.10.10:5000/v3/ |

| | | RegionOne |

| | | admin: http://10.10.10.10:5000/v3/ |

| | | RegionOne |

| | | public: http://10.10.10.10:5000/v3/ |

| | | |

| nova | compute | RegionOne |

| | | public: http://10.10.10.10:8774/v2.1 |

| | | RegionOne |

| | | internal: http://10.10.10.10:8774/v2.1 |

| | | RegionOne |

| | | admin: http://10.10.10.10:8774/v2.1 |

| | | |

+-----------+-----------+------------------------------------------+

Check the cells and the Placement API; every result should be Success.

$ nova-status upgrade check

+--------------------------------------------------------------------+

| Upgrade Check Results |

+--------------------------------------------------------------------+

| Check: Cells v2 |

| Result: Success |

| Details: No host mappings or compute nodes were found. Remember to |

| run command 'nova-manage cell_v2 discover_hosts' when new |

| compute hosts are deployed. |

+--------------------------------------------------------------------+

| Check: Placement API |

| Result: Success |

| Details: None |

+--------------------------------------------------------------------+

| Check: Ironic Flavor Migration |

| Result: Success |

| Details: None |

+--------------------------------------------------------------------+

| Check: Cinder API |

| Result: Success |

| Details: None |

+--------------------------------------------------------------------+

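7. Nova Compute Node Cluster Deployment

Install nova-compute

Install the nova-compute service on all compute nodes; compute01 is used as the example.

# The OpenStack repository and the required dependencies were set up during the base configuration, so only the needed service packages have to be installed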

wget ftp://ftp.pbone.net/mirror/archive.fedoraproject.org/epel/testing/6.2019-05-29/x86_64/Packages/p/python2-qpid-proton-0.28.0-1.el7.x86_64.rpm

wget ftp://ftp.pbone.net/mirror/vault.centos.org/7.8.2003/messaging/x86_64/qpid-proton/Packages/q/qpid-proton-c-0.28.0-1.el7.x86_64.rpm

rpm -ivh qpid-proton-c-0.28.0-1.el7.x86_64.rpm

rpm -ivh python2-qpid-proton-0.28.0-1.el7.x86_64.rpm

yum install openstack-nova-compute -y

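Deploy and configure

Configure the nova-compute service on all compute nodes; compute01 is used as the example.

Note the my_ip parameter, which must be changed for each node; also note the ownership of nova.conf: root:nova.

# Back up the configuration file /etc/nova/nova.conf

cp /etc/nova/nova.conf{,.bak}

grep -Ev '^$|^#' /etc/nova/nova.conf.bak > /etc/nova/nova.conf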

Determine whether the compute node supports hardware acceleration for virtual machines

$ egrep -c '(vmx|svm)' /proc/cpuinfo

4

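# If this command returns a non-zero value, the compute node supports hardware acceleration and no additional configuration is needed.

# If it returns 0, the compute node does not support hardware acceleration, and libvirt must be configured to use QEMU instead of KVM

# by editing the [libvirt] section of /etc/nova/nova.conf. On VMware, hardware acceleration can be enabled in the virtual machine settings.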
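The decision described above can be scripted so that each compute node picks its own value. A minimal sketch follows; the function name `detect_virt_type` and its optional file argument are illustrative, and the file argument exists only so that the logic can be tried against a sample file.

```shell
#!/usr/bin/env bash
# detect_virt_type [CPUINFO]: print "kvm" when the CPU flags include
# vmx (Intel) or svm (AMD), otherwise "qemu" (hypothetical helper name).
detect_virt_type() {
    local cpuinfo="${1:-/proc/cpuinfo}"
    if grep -Eq '(vmx|svm)' "$cpuinfo"; then
        echo kvm
    else
        echo qemu
    fi
}
```

The result could then replace the fixed value in the libvirt setting, for example `openstack-config --set /etc/nova/nova.conf libvirt virt_type "$(detect_virt_type)"`.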

Edit the nova.conf configuration file

openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata

openstack-config --set /etc/nova/nova.conf DEFAULT transport_url rabbit://openstack:123456@10.10.10.10

openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 10.10.10.41

openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron true

openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

openstack-config --set /etc/nova/nova.conf api auth_strategy keystone

openstack-config --set /etc/nova/nova.conf keystone_authtoken www_authenticate_uri http://10.10.10.10:5000

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://10.10.10.10:5000

openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers controller01:11211,controller02:11211,controller03:11211

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name Default

openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name Default

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service

openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova

openstack-config --set /etc/nova/nova.conf keystone_authtoken password 123456

openstack-config --set /etc/nova/nova.conf libvirt virt_type kvm

openstack-config --set /etc/nova/nova.conf vnc enabled true

openstack-config --set /etc/nova/nova.conf vnc server_listen 0.0.0.0

openstack-config --set /etc/nova/nova.conf vnc server_proxyclient_address '$my_ip'

openstack-config --set /etc/nova/nova.conf vnc novncproxy_base_url http://10.10.10.10:6080/vnc_auto.html

openstack-config --set /etc/nova/nova.conf glance api_servers http://10.10.10.10:9292

openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp

openstack-config --set /etc/nova/nova.conf placement region_name RegionOne

openstack-config --set /etc/nova/nova.conf placement project_domain_name Default

openstack-config --set /etc/nova/nova.conf placement project_name service

openstack-config --set /etc/nova/nova.conf placement auth_type password

openstack-config --set /etc/nova/nova.conf placement user_domain_name Default

openstack-config --set /etc/nova/nova.conf placement auth_url http://10.10.10.10:5000/v3

openstack-config --set /etc/nova/nova.conf placement username placement

openstack-config --set /etc/nova/nova.conf placement password 123456

Start the Nova services on the compute nodes

Do this on all compute nodes.

systemctl restart libvirtd.service openstack-nova-compute.service

systemctl enable libvirtd.service openstack-nova-compute.service

systemctl status libvirtd.service openstack-nova-compute.service

Add the compute nodes to the cell database

Run on any one controller node; list the compute nodes.

$ source ~/admin-openrc

$ openstack compute service list --service nova-compute

+----+--------------+-----------+------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+--------------+-----------+------+---------+-------+----------------------------+

| 39 | nova-compute | compute01 | nova | enabled | up | 2021-11-04T10:45:46.000000 |

| 42 | nova-compute | compute02 | nova | enabled | up | 2021-11-04T10:45:48.000000 |

+----+--------------+-----------+------+---------+-------+----------------------------+

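Discover the compute hosts from a controller node

This must be run on a controller node every time a new compute node is added.

Discover compute nodes manually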

$ su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

Found 2 cell mappings.

Skipping cell0 since it does not contain hosts.

Getting computes from cell 'cell1': 3e74f43a-74db-4eba-85ee-c8330f906b1b

Checking host mapping for compute host 'compute01': a476abf2-030f-4943-b8a7-167d4a65a393

Creating host mapping for compute host 'compute01': a476abf2-030f-4943-b8a7-167d4a65a393

Checking host mapping for compute host 'compute02': ed0a899f-d898-4a73-9100-a69a26edb932

Creating host mapping for compute host 'compute02': ed0a899f-d898-4a73-9100-a69a26edb932

Found 2 unmapped computes in cell: 3e74f43a-74db-4eba-85ee-c8330f906b1b

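Discover compute nodes automatically

To avoid running nova-manage cell_v2 discover_hosts by hand each time a compute node is added, the controller nodes can be set to discover hosts periodically; this involves the [scheduler] section of nova.conf on the controller nodes.

Do this on all controller nodes; the discovery interval is set to 10 minutes here and can be adjusted to suit the environment.

openstack-config --set /etc/nova/nova.conf scheduler discover_hosts_in_cells_interval 600

systemctl restart openstack-nova-api.service openstack-nova-scheduler.service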

Verify

List the service components to verify that each process started and registered successfully.

$ source ~/admin-openrc

$ openstack compute service list

+----+----------------+--------------+----------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+----------------+--------------+----------+---------+-------+----------------------------+

| 21 | nova-scheduler | controller01 | internal | enabled | up | 2021-11-04T10:49:48.000000 |

| 24 | nova-conductor | controller01 | internal | enabled | up | 2021-11-04T10:49:42.000000 |

| 27 | nova-scheduler | controller02 | internal | enabled | up | 2021-11-04T10:49:43.000000 |

| 30 | nova-scheduler | controller03 | internal | enabled | up | 2021-11-04T10:49:45.000000 |

| 33 | nova-conductor | controller02 | internal | enabled | up | 2021-11-04T10:49:47.000000 |

| 36 | nova-conductor | controller03 | internal | enabled | up | 2021-11-04T10:49:50.000000 |

| 39 | nova-compute | compute01 | nova | enabled | up | 2021-11-04T10:49:46.000000 |

| 42 | nova-compute | compute02 | nova | enabled | up | 2021-11-04T10:49:48.000000 |

+----+----------------+--------------+----------+---------+-------+----------------------------+
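With three controllers and two compute nodes the table is long enough that a "down" row is easy to miss. The sketch below filters for such rows. It assumes the input has exactly three whitespace-separated columns (Binary, Host, State); list_down_services is a hypothetical name used only here.

```shell
# Print "binary@host" for every service whose State is not "up".
# Returns non-zero if at least one such service is found.
# Input on stdin: one "Binary Host State" triple per line.
list_down_services() {
    awk '$3 != "up" { print $1 "@" $2; bad = 1 } END { exit (bad ? 1 : 0) }'
}

# Usage on a controller node, after sourcing ~/admin-openrc:
#   openstack compute service list -f value -c Binary -c Host -c State | list_down_services
```

The -f value and -c options restrict the client output to the three columns the helper expects. An empty result with exit status 0 means every service reported "up".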

List the API endpoints in the Identity service to verify connectivity with the Identity service.

$ openstack catalog list

+-----------+-----------+------------------------------------------+

| Name | Type | Endpoints |

+-----------+-----------+------------------------------------------+

| placement | placement | RegionOne |

| | | admin: http://10.10.10.10:8778 |

| | | RegionOne |

| | | internal: http://10.10.10.10:8778 |

| | | RegionOne |

| | | public: http://10.10.10.10:8778 |

| | | |

| glance | image | RegionOne |

| | | public: http://10.10.10.10:9292 |

| | | RegionOne |

| | | internal: http://10.10.10.10:9292 |

| | | RegionOne |

| | | admin: http://10.10.10.10:9292 |

| | | |

| keystone | identity | RegionOne |

| | | internal: http://10.10.10.10:5000/v3/ |

| | | RegionOne |

| | | admin: http://10.10.10.10:5000/v3/ |

| | | RegionOne |

| | | public: http://10.10.10.10:5000/v3/ |

| | | |

| nova | compute | RegionOne |

| | | public: http://10.10.10.10:8774/v2.1 |

| | | RegionOne |

| | | internal: http://10.10.10.10:8774/v2.1 |

| | | RegionOne |

| | | admin: http://10.10.10.10:8774/v2.1 |

| | | |

+-----------+-----------+------------------------------------------+

List the images in the Image service along with their status.

$ openstack image list

+--------------------------------------+--------------+--------+

| ID | Name | Status |

+--------------------------------------+--------------+--------+

| 1c66cd7e-b6d9-4e70-a3d4-f73b27a84230 | cirros-qcow2 | active |

+--------------------------------------+--------------+--------+

Check that Cells v2 and the Placement API are working properly.

$ nova-status upgrade check

+--------------------------------+

| Upgrade Check Results |

+--------------------------------+

| Check: Cells v2 |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: Placement API |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: Ironic Flavor Migration |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: Cinder API |

| Result: Success |

| Details: None |

+--------------------------------+
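Besides the table, nova-status upgrade check also reports its result through the exit status, which is convenient in scripts. The mapping below follows the documented return codes of the command; describe_upgrade_check_rc is a hypothetical helper name used only for this sketch.

```shell
# Translate the exit status of "nova-status upgrade check" into a short label.
describe_upgrade_check_rc() {
    case "$1" in
        0) echo "success" ;;            # all checks passed
        1) echo "warning" ;;            # at least one check needs investigation
        2) echo "failure" ;;            # at least one check failed
        *) echo "unexpected error" ;;   # e.g. 255
    esac
}

# Usage on a controller node:
#   nova-status upgrade check; describe_upgrade_check_rc $?
```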

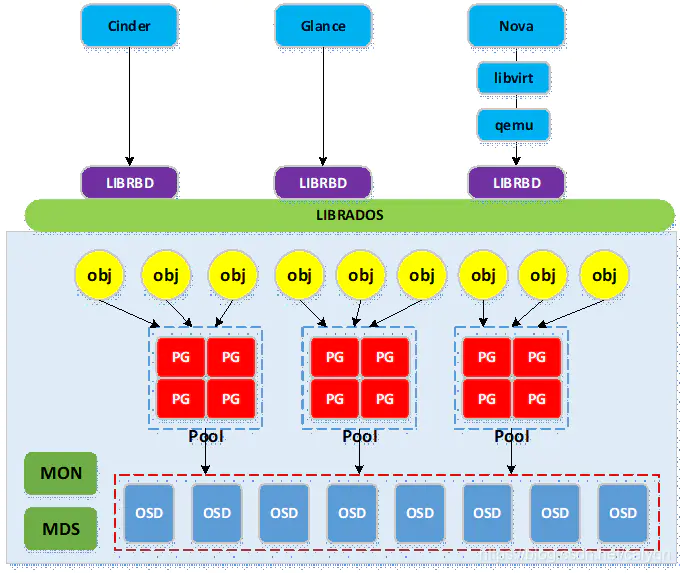

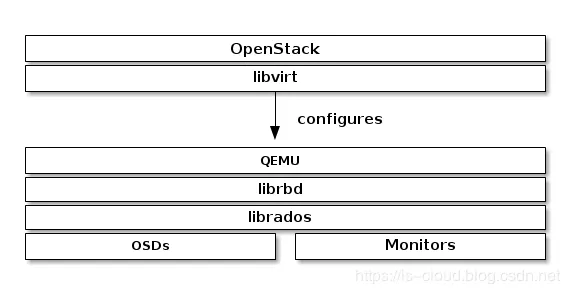

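Appendix: how OpenStack (Nova), KVM, QEMU, and libvirtd relate

1: QEMU

QEMU is an emulator. It uses dynamic binary translation to emulate the CPU and a range of other hardware, so the guest OS believes it is talking to real hardware while it is actually interacting with hardware emulated by QEMU. In this mode the guest OS can still reach the host's hardware, but every instruction has to be translated by QEMU, so performance is poor.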

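2: KVM

KVM is the virtualization infrastructure provided by the Linux kernel. It requires CPU hardware support, namely hardware-assisted virtualization such as Intel VT-x or AMD-V.

KVM implements its core virtualization functions in the kernel module kvm.ko, plus a processor-specific module, kvm-intel.ko or kvm-amd.ko. KVM itself does no device emulation; it only exposes the /dev/kvm interface. User-space programs issue ioctl calls on this interface to create vCPUs and to allocate the guest's virtual memory address space.

With KVM, the guest OS's CPU instructions run without being translated by QEMU, which greatly improves execution speed.

However, KVM virtualizes only the CPU and memory and cannot emulate other devices, which led to the combined technology described next: qemu-kvm.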
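Whether a host meets the hardware requirement described above can be checked from the CPU flags. The sketch below counts logical CPUs that advertise vmx (Intel) or svm (AMD). count_virt_cpus is a hypothetical helper name, and the pattern matches only lines that begin with "flags", so the separate "vmx flags" line printed by newer kernels is not counted twice.

```shell
# Count logical CPUs whose "flags" line advertises vmx (Intel) or svm (AMD).
# $1 = cpuinfo file to read (defaults to /proc/cpuinfo).
count_virt_cpus() {
    grep -Ec '^flags[[:space:]]*:.*[[:space:]](vmx|svm)([[:space:]]|$)' \
        "${1:-/proc/cpuinfo}" || true
}

# Usage on a compute node:
#   count_virt_cpus        # 0 means no hardware-assisted virtualization is visible
#   ls -l /dev/kvm         # present once the kvm modules are loaded
#   lsmod | grep kvm       # shows kvm plus kvm_intel or kvm_amd
```

A result of 0 usually means the host can only run guests under plain QEMU emulation, with the performance cost described in the QEMU section.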

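3: QEMU-KVM

qemu-kvm is a branch of QEMU built specifically around the KVM acceleration module.

QEMU integrates KVM by calling /dev/kvm through ioctl and handing CPU-related instructions to the kernel module. Because KVM only virtualizes the CPU and memory, QEMU still has to emulate the remaining devices (disks, network cards, and so on). QEMU plus KVM together form a complete server virtualization stack.

In summary, QEMU-KVM serves two main purposes:

- Virtualizing the CPU and memory (handled by KVM) and I/O devices (handled by QEMU)

- Managing the creation and invocation of the various virtual devices (handled by QEMU)

The qemu-kvm architecture is as follows: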

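4: libvirtd

libvirt is currently the most widely used tool and API for managing KVM virtual machines. libvirtd is its daemon process: it can be called by a local virsh or by a remote virsh, and it in turn calls qemu-kvm to control the virtual machines.

libvirt consists of several parts: an application programming interface (API) library, a daemon (libvirtd), and a default command-line tool (virsh). The libvirtd daemon is responsible for managing the virtual machines, so make sure it is running.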

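5: The relationship between OpenStack (Nova), KVM, qemu-kvm, and libvirtd

KVM is the lowest-level VMM. It can virtualize the CPU and memory but lacks support for networking, I/O, and peripheral devices, so it cannot be used on its own.

qemu-kvm is built on top of KVM and provides a complete virtualization solution.

The core function of OpenStack (Nova) is to manage a large number of virtual machines. These can be of many kinds (KVM, QEMU, Xen, VMware...), and they can be managed in many ways (libvirt, xenapi, vmwareapi...). The API Nova uses by default to manage virtual machines is libvirt.

In short, OpenStack does not control qemu-kvm directly; it controls qemu-kvm indirectly through the libvirt library.

libvirt also works across hypervisor platforms: besides QEMU it can control VMware, VirtualBox, Xen, and others. To keep that hypervisor independence, OpenStack talks only to libvirt rather than to qemu-kvm directly.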

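8. Neutron controller + network node cluster deployment (Open vSwitch)

Neutron provides the following functions:

- Neutron provides networking for the whole OpenStack environment, including layer-2 switching, layer-3 routing, load balancing, firewalls, and VPN.

- Neutron provides a flexible framework in which, through configuration, either open-source or commercial software can implement these functions.

Create the Neutron database (controller node)

Create the database on any one controller node;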

$ mysql -uroot -p123456

CREATE DATABASE neutron;

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY '123456';

flush privileges;

exit;

Create the Neutron service credentials

Run on any one controller node.

Create the neutron user

source ~/admin-openrc

openstack user create --domain default --password 123456 neutron

Grant the admin role to the neutron user

openstack role add --project service --user neutron admin

Create the neutron service entity

openstack service create --name neutron --description "OpenStack Networking" network

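Create the Neutron API service endpoints

All API addresses use the VIP. If public/internal/admin are designed to use different VIPs, take care to distinguish them.

--region must match the region created when the admin user was initialized. The service type of neutron-api is network.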

openstack endpoint create --region RegionOne network public http://10.10.10.10:9696

openstack endpoint create --region RegionOne network internal http://10.10.10.10:9696

openstack endpoint create --region RegionOne network admin http://10.10.10.10:9696
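The three commands above differ only in the interface name, so they can be generated in a loop. The sketch below prints the commands instead of running them, which lets them be reviewed first. print_endpoint_cmds is a hypothetical helper, and the region, service type, and URL are the values used in this section.

```shell
# Print (not run) the endpoint-create commands for one service.
# $1 = region, $2 = service type, $3 = endpoint URL
print_endpoint_cmds() {
    for iface in public internal admin; do
        printf 'openstack endpoint create --region %s %s %s %s\n' \
            "$1" "$2" "$iface" "$3"
    done
}

print_endpoint_cmds RegionOne network http://10.10.10.10:9696
```

If public, internal, and admin use different VIPs, call the helper once per URL and keep only the matching line. After the endpoints exist, openstack endpoint list --service network should show all three.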

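Installation and configuration

- openstack-neutron: the neutron-server package

- openstack-neutron-ml2: the ML2 plugin package

- openstack-neutron-openvswitch: the Open vSwitch related package

- ebtables: firewall-related package

- conntrack-tools: enables stateful packet inspection for iptables

Install the packages

Install the Neutron services on all controller nodes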

yum install openstack-neutron openstack-neutron-ml2 ebtables conntrack-tools openstack-neutron-openvswitch libibverbs net-tools -y

Configure the Neutron services on all controller nodes, using controller01 as the example.

Kernel configuration

Run on all controller nodes

echo '

net.ipv4.conf.all.rp_filter=0

net.ipv4.conf.default.rp_filter=0

' >> /etc/sysctl.conf

sysctl -p
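Appending to /etc/sysctl.conf adds duplicate lines if the step is run more than once. An alternative, shown here only as a sketch, is to write the same two settings to a drop-in file that is overwritten on every run. The file name 90-neutron-rp-filter.conf and the helper name write_rp_filter_conf are assumptions made for this example.

```shell
# Write the two rp_filter settings to a drop-in file, replacing any earlier copy.
# $1 = target file (the default name is an assumption made for this sketch).
write_rp_filter_conf() {
    target="${1:-/etc/sysctl.d/90-neutron-rp-filter.conf}"
    printf '%s\n' \
        'net.ipv4.conf.all.rp_filter=0' \
        'net.ipv4.conf.default.rp_filter=0' > "$target"
}

# Usage as root on each controller node:
#   write_rp_filter_conf && sysctl --system
#   sysctl net.ipv4.conf.all.rp_filter net.ipv4.conf.default.rp_filter
```

sysctl --system reloads every configuration file, including those under /etc/sysctl.d, and the last line prints the active values so they can be compared with the intended 0.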

Configure neutron.conf

Note the my_ip parameter and change it per node. Note the ownership of neutron.conf: root:neutron

Note the bind_host parameter and change it per node.
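Because bind_host differs on every controller, the value can be looked up from the hostname rather than edited by hand. The sketch below hard-codes the controller addresses from the host plan in section 1; bind_host_for is a hypothetical helper name.

```shell
# Map a controller hostname to its management IP, following the host plan.
bind_host_for() {
    case "$1" in
        controller01) echo 10.10.10.31 ;;
        controller02) echo 10.10.10.32 ;;
        controller03) echo 10.10.10.33 ;;
        *) echo "unknown controller: $1" >&2; return 1 ;;
    esac
}

# Usage on each controller node:
#   openstack-config --set /etc/neutron/neutron.conf DEFAULT bind_host "$(bind_host_for "$(hostname -s)")"
```

The usage line assumes the short hostname of each node matches the names in the host plan.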

# Back up the configuration file /etc/neutron/neutron.conf

cp -a /etc/neutron/neutron.conf{,.bak}

grep -Ev '^$|#' /etc/neutron/neutron.conf.bak > /etc/neutron/neutron.conf

openstack-config --set /etc/neutron/neutron.conf DEFAULT bind_host 10.10.10.31

openstack-config --set /etc/neutron/neutron.conf DEFAULT core_plugin ml2

openstack-config --set /etc/neutron/neutron.conf DEFAULT service_plugins router

openstack-config --set /etc/neutron/neutron.conf DEFAULT allow_overlapping_ips true

# Connect directly to the RabbitMQ cluster