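VictoriaMetrics: From Getting Started to Practical Use

1: Introduction to VictoriaMetrics

VictoriaMetrics (VM) is a highly available, cost-effective, and scalable monitoring solution and time series database. It can serve as long-term remote storage for Prometheus monitoring data.

Official site: `https://victoriametrics.com`

1: It exposes `Prometheus`-compatible APIs, so it can be added to `Grafana` directly as a `Prometheus` data source

2: Metric ingestion and querying are fast and scale well; performance is reported to be up to 20x higher than `InfluxDB` and `TimescaleDB`

3: Memory usage is optimized for high-cardinality time series: about 10x less than `InfluxDB` and up to 7x less than `Prometheus`, `Thanos`, or `Cortex`

4: Efficient data compression: up to 70x more data points fit into the same limited storage compared with `TimescaleDB`, and about 7x less storage space is needed compared with `Prometheus`, `Thanos`, or `Cortex`

5: It is optimized for storage with high-latency `IO` and low `IOPS`

6: It provides a global query view: multiple `Prometheus` instances or any other data sources can ingest data into `VictoriaMetrics`

7: It is simple to operate:

1: `VictoriaMetrics` consists of a single small executable with no external dependencies

2: All configuration is done through explicit command-line flags with reasonable defaults

3: All data is stored in the directory pointed to by the `-storageDataPath` command-line flag

4: The `vmbackup` / `vmrestore` tools make it quick and easy to back up instant snapshots to `S3` or `GCS` object storage

8: It can take third-party time series databases as data sources

9: Thanks to its storage architecture, stored data is protected from corruption on unclean shutdown (OOM, hardware reset, or `kill -9`)

10: It also supports `relabel` operations on metrics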

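2: VictoriaMetrics Architecture

VM comes in two flavors, single-node and cluster; choose according to your needs. The single-node version just runs a single binary. The official recommendation is to use the single-node version when the ingestion rate is below 1 million data points per second: it is simple and easy to maintain, but it does not include alerting. The cluster version supports horizontal sharding of data. Let's look at the official architecture diagram for the cluster version.

It mainly consists of the following components:

1: vmstorage: stores the data and returns query results; default port 8482; it is a stateful application

2: vminsert: ingests data and can provide sharding-like distribution and replication; default port 8480

3: vmselect: queries data, merging and de-duplicating results; default port 8481

4: vmagent: scrapes metrics, supports multiple storage backends, and uses local disk as a buffer; default port 8429

5: vmalert: the alerting component; it can be left out if alerting is not needed; default port 8880

The cluster setup splits functionality across the vmstorage, vminsert, and vmselect components. To replace Prometheus you also need vmagent and vmalert. As the diagram above shows, vminsert and vmselect are stateless, so scaling them is simple; only vmstorage is stateful.

The main purpose of vmagent is to collect metrics and store them in VM or any other Prometheus-compatible storage system (anything that supports remote_write).

Now let's look at a simple architecture diagram of vmagent. It supports both pulling (scraping) metrics and receiving pushed metrics, and it has many other features:

1: It can replace Prometheus for scraping targets

2: It can add, remove, and modify labels using Prometheus-style relabeling, which makes it easy to filter data before it is sent to remote storage

3: It accepts multiple data protocols: Influx line protocol, Graphite plaintext protocol, OpenTSDB protocol, Prometheus remote write protocol, JSON lines, and CSV data

4: It can replicate collected data to multiple remote storage systems at the same time

5: It tolerates unreliable remote storage by buffering locally (`--remoteWrite.tmpDataPath`), with a configurable limit on disk usage

6: It uses less memory, CPU, disk IO, and network bandwidth than Prometheus

Next, let's walk through the single-node and cluster setups in turn.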

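3: VictoriaMetrics Single Node

Here we again use node-exporter as the scrape target. First we collect data with Prometheus, then remote-write the Prometheus data into VM storage. Since VM ships the vmagent component, we finally replace Prometheus with VM entirely, which makes the architecture simpler and reduces resource usage.

# Here we use Kubernetes as the platform

1: Create the namespace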

[root@k-m-1 ~]# kubectl create ns kube-vm

namespace/kube-vm created

2: The first thing to deploy is node-exporter, as a DaemonSet so that it runs on every node

# vm-node-exporter

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: node-exporter

namespace: kube-vm

labels:

app: node-exporter

spec:

selector:

matchLabels:

app: node-exporter

template:

metadata:

labels:

app: node-exporter

spec:

hostPID: true

hostIPC: true

hostNetwork: true

nodeSelector:

kubernetes.io/os: linux

containers:

- name: node-exporter

image: prom/node-exporter:latest

args:

- --web.listen-address=$(HOSTIP):9100

- --path.procfs=/host/proc

- --path.sysfs=/host/sys

- --path.rootfs=/host/root

- --no-collector.hwmon

- --no-collector.nfs

- --no-collector.nfsd

- --no-collector.nvme

- --no-collector.dmi

- --no-collector.arp

- --collector.filesystem.ignored-mount-points=^/(dev|proc|sys|var/lib/containerd/.+|var/lib/docker/.+|var/lib/kubelet/pods/.+)($|/)

- --collector.filesystem.ignored-fs-types=^(autofs|binfmt_misc|cgroup|configfs|debugfs|devpts|devtmpfs|fusectl|hugetlbfs|mqueue|overlay|proc|procfs|pstore|rpc_pipefs|securityfs|sysfs|tracefs)$

ports:

- containerPort: 9100

env:

- name: HOSTIP

valueFrom:

fieldRef:

fieldPath: status.hostIP

resources:

requests:

cpu: 150m

memory: 180Mi

limits:

cpu: 150m

memory: 180Mi

securityContext:

runAsNonRoot: true

runAsUser: 65534

volumeMounts:

- name: proc

mountPath: /host/proc

- name: sys

mountPath: /host/sys

- name: root

mountPath: /host/root

mountPropagation: HostToContainer

readOnly: true

tolerations:

- operator: "Exists"

volumes:

- name: proc

hostPath:

path: /proc

- name: dev

hostPath:

path: /dev

- name: sys

hostPath:

path: /sys

- name: root

hostPath:

path: /

# Check the deployment status

[root@k-m-1 kube-vm]# kubectl get pod -n kube-vm

NAME READY STATUS RESTARTS AGE

node-exporter-j62mm 1/1 Running 0 16s

node-exporter-lbx4b 1/1 Running 0 16s

# Check that node-exporter is listening on port 9100

[root@k-m-1 kube-vm]# curl 10.0.0.11:9100 -I

HTTP/1.1 200 OK

Date: Sat, 15 Apr 2023 09:03:25 GMT

Content-Length: 150

Content-Type: text/html; charset=utf-8

[root@k-m-1 kube-vm]# curl 10.0.0.12:9100 -I

HTTP/1.1 200 OK

Date: Sat, 15 Apr 2023 09:03:28 GMT

Content-Length: 150

Content-Type: text/html; charset=utf-8

# Next we deploy Prometheus. To keep things simple, we use static_configs to scrape the node-exporter metrics

# vm-prom-config.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: prometheus-config

namespace: kube-vm

data:

prometheus.yml: |

global:

scrape_interval: 15s

scrape_timeout: 15s

scrape_configs:

- job_name: 'prometheus'

static_configs:

- targets: ['localhost:9090']

- job_name: 'nodes'

static_configs:

- targets: ['10.0.0.11:9100','10.0.0.12:9100']

relabel_configs:

- source_labels: [__address__]

regex: "(.*):(.*)"

replacement: "${1}"

target_label: 'ip'

action: replace

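The relabel rule here simply makes the IP easier to get at; it is optional. Its logic is straightforward: a regular expression splits `__address__` into two groups, the IP and the port. We take the first group and write it into a new label named `ip`.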
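The relabel rule above can be mimicked in a few lines of Python. This is only a sketch of the matching logic, not how Prometheus implements relabeling; the function name `relabel_ip` is made up for illustration. It assumes the regex is anchored at both ends, as Prometheus does for relabel rules.

```python
import re
from typing import Dict


def relabel_ip(labels: Dict[str, str]) -> Dict[str, str]:
    """Copy the host part of __address__ into a new `ip` label."""
    # Relabel regexes are fully anchored, so fullmatch is used here.
    match = re.fullmatch(r"(.*):(.*)", labels.get("__address__", ""))
    updated = dict(labels)
    if match is None:
        # With action "replace", a non-matching regex leaves the labels unchanged.
        return updated
    # replacement "${1}" refers to the first capture group (the IP).
    updated["ip"] = match.group(1)
    return updated


if __name__ == "__main__":
    print(relabel_ip({"__address__": "10.0.0.11:9100", "job": "nodes"}))
```

The second capture group (the port) is matched but not used, which mirrors the rule in the ConfigMap.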

# Apply the configuration

[root@k-m-1 kube-vm]# kubectl apply -f vm-prom-config.yaml

configmap/prometheus-config created

Now we deploy Prometheus itself

# vm-local-storage.yaml

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:

name: local-storage

provisioner: kubernetes.io/no-provisioner

volumeBindingMode: WaitForFirstConsumer

# vm-prom-pv.yaml

kind: PersistentVolume

apiVersion: v1

metadata:

name: prometheus-data

spec:

accessModes:

- ReadWriteOnce

capacity:

storage: 10Gi

storageClassName: local-storage

local:

path: /data/

persistentVolumeReclaimPolicy: Retain

nodeAffinity:

required:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/hostname

operator: In

values:

- k-w-1

# vm-prom-pvc.yaml

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: prometheus-data

namespace: kube-vm

spec:

volumeName: prometheus-data

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 10Gi

storageClassName: local-storage

# vm-prom.yaml

kind: Deployment

apiVersion: apps/v1

metadata:

name: prometheus

namespace: kube-vm

labels:

app: prometheus

spec:

selector:

matchLabels:

app: prometheus

template:

metadata:

labels:

app: prometheus

spec:

containers:

- name: prometheus

image: prom/prometheus:latest

args:

- "--config.file=/etc/prometheus/prometheus.yml"

- "--storage.tsdb.path=/prometheus"

- "--storage.tsdb.retention.time=24h"

- "--web.enable-admin-api"

- "--web.enable-lifecycle"

ports:

- name: http

containerPort: 9090

securityContext:

runAsUser: 0

volumeMounts:

- mountPath: "/etc/prometheus"

name: config-volume

- mountPath: "/prometheus"

name: data

resources:

requests:

cpu: 200m

memory: 1024Mi

limits:

cpu: 200m

memory: 1024Mi

volumes:

- name: data

persistentVolumeClaim:

claimName: prometheus-data

- configMap:

name: prometheus-config

name: config-volume

# vm-prom-svc.yaml

kind: Service

apiVersion: v1

metadata:

name: prometheus

namespace: kube-vm

labels:

app: prometheus

spec:

selector:

app: prometheus

type: NodePort

ports:

- name: web

port: 9090

targetPort: http

Let's look at the result of the deployment

[root@k-m-1 kube-vm]# kubectl get pod,svc,pvc -n kube-vm

NAME READY STATUS RESTARTS AGE

pod/node-exporter-j62mm 1/1 Running 0 32m

pod/node-exporter-lbx4b 1/1 Running 0 32m

pod/prometheus-855c7c4755-572hb 1/1 Running 0 32s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/prometheus NodePort 10.96.1.12 <none> 9090:30270/TCP 15s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/prometheus-data Bound prometheus-data 10Gi RWO local-storage 4m43s

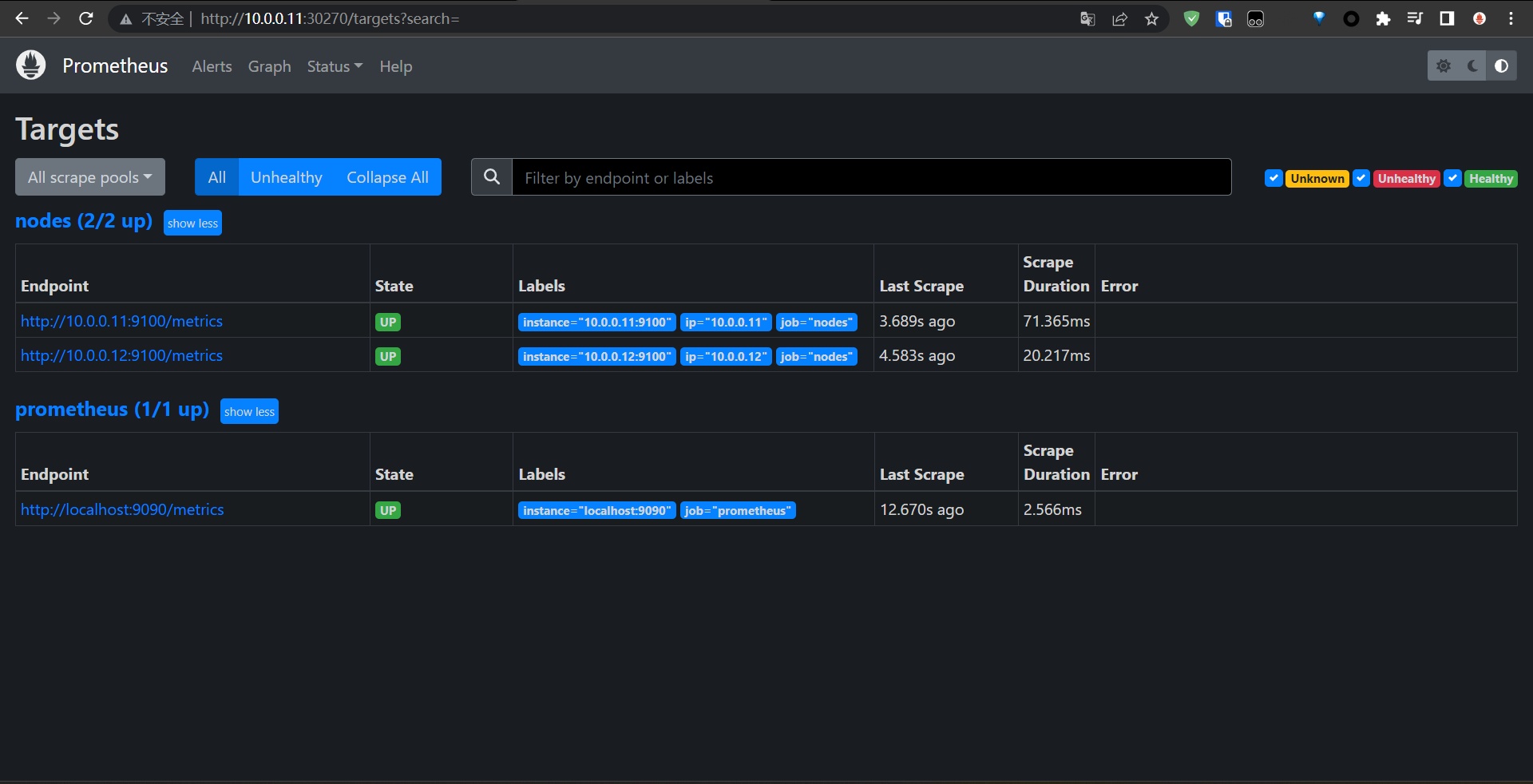

Open Prometheus and check whether it is monitoring the targets we defined

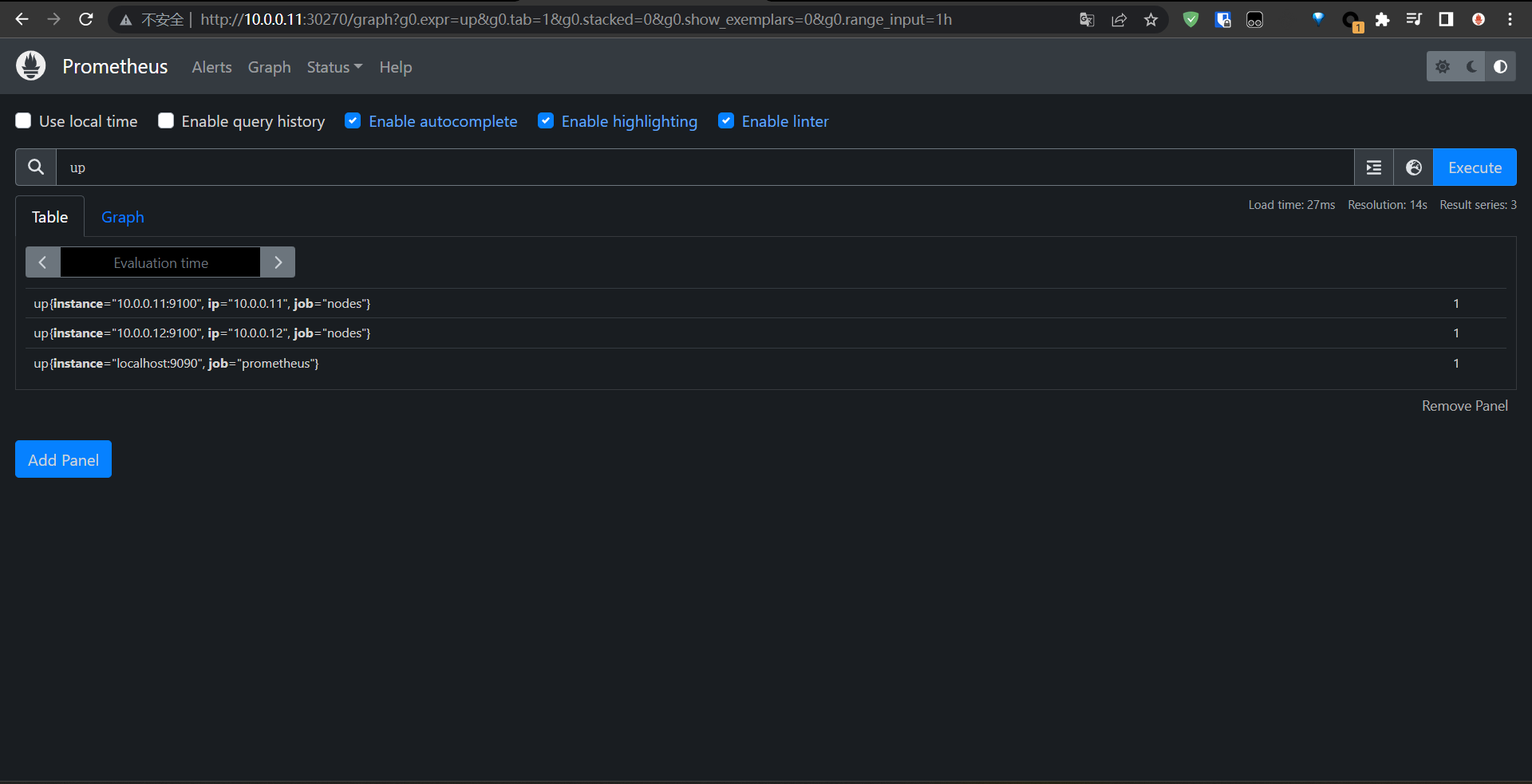

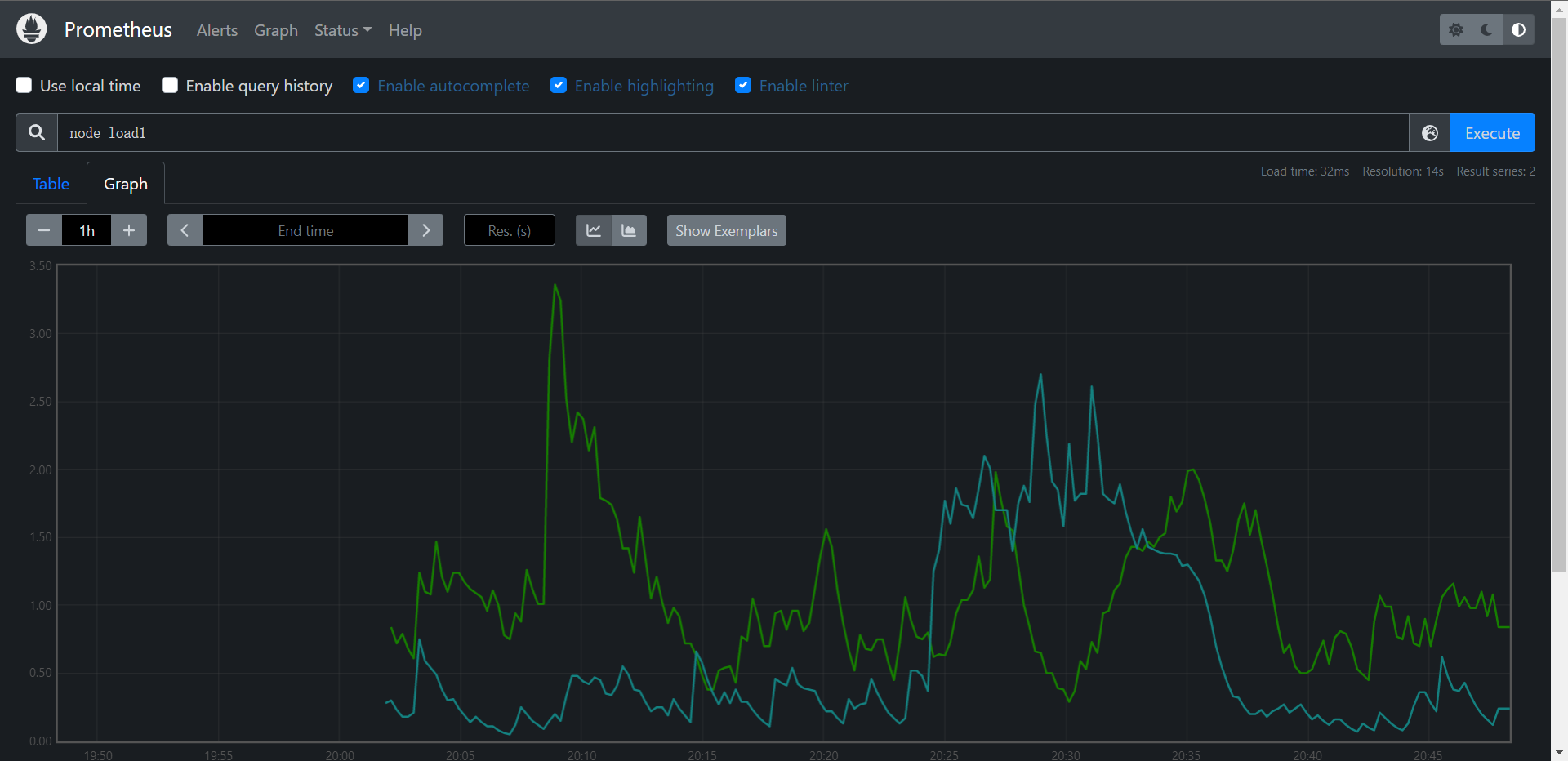

The data looks fine, and our custom label appears as well, which shows that the configuration took effect

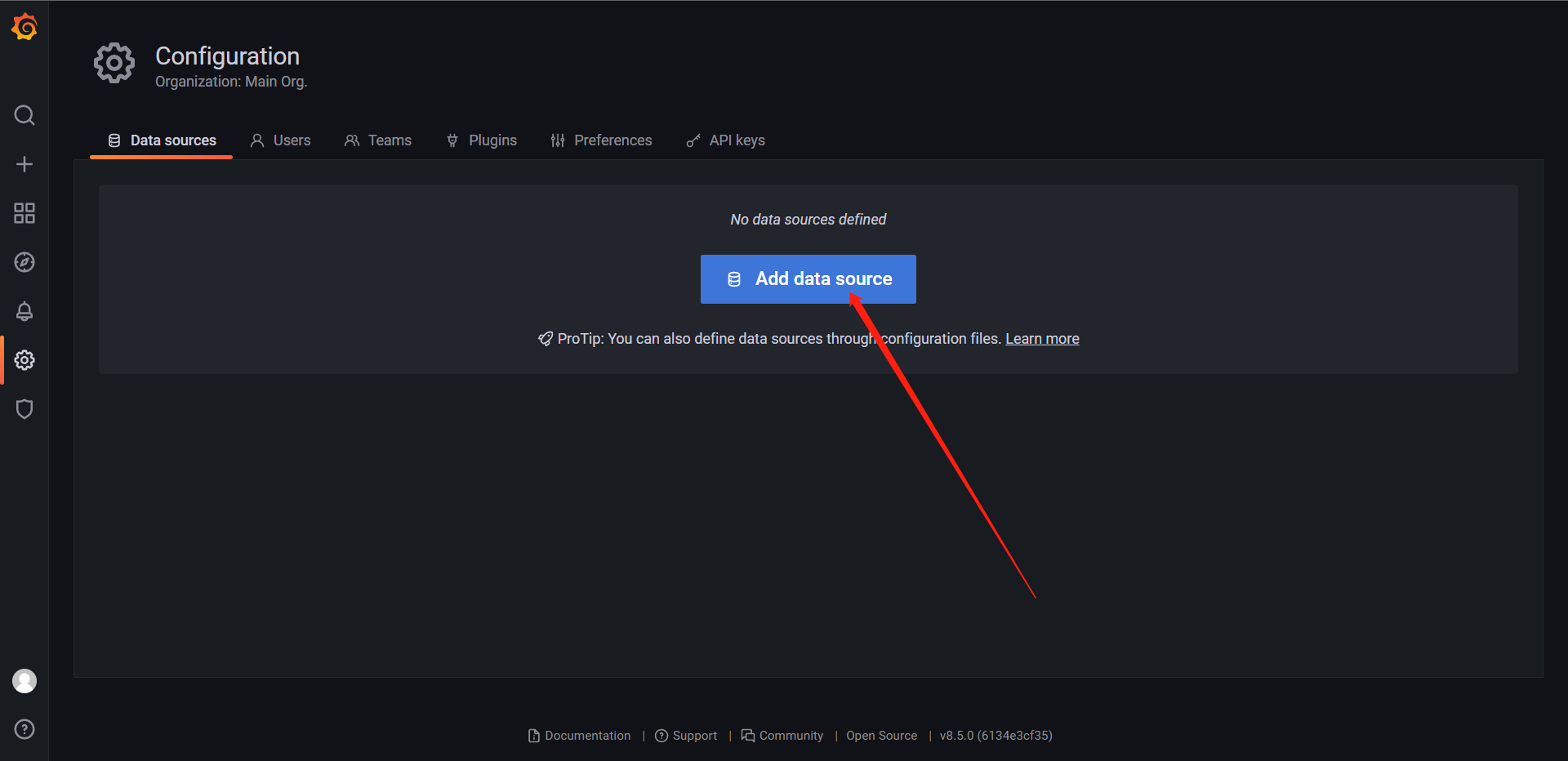

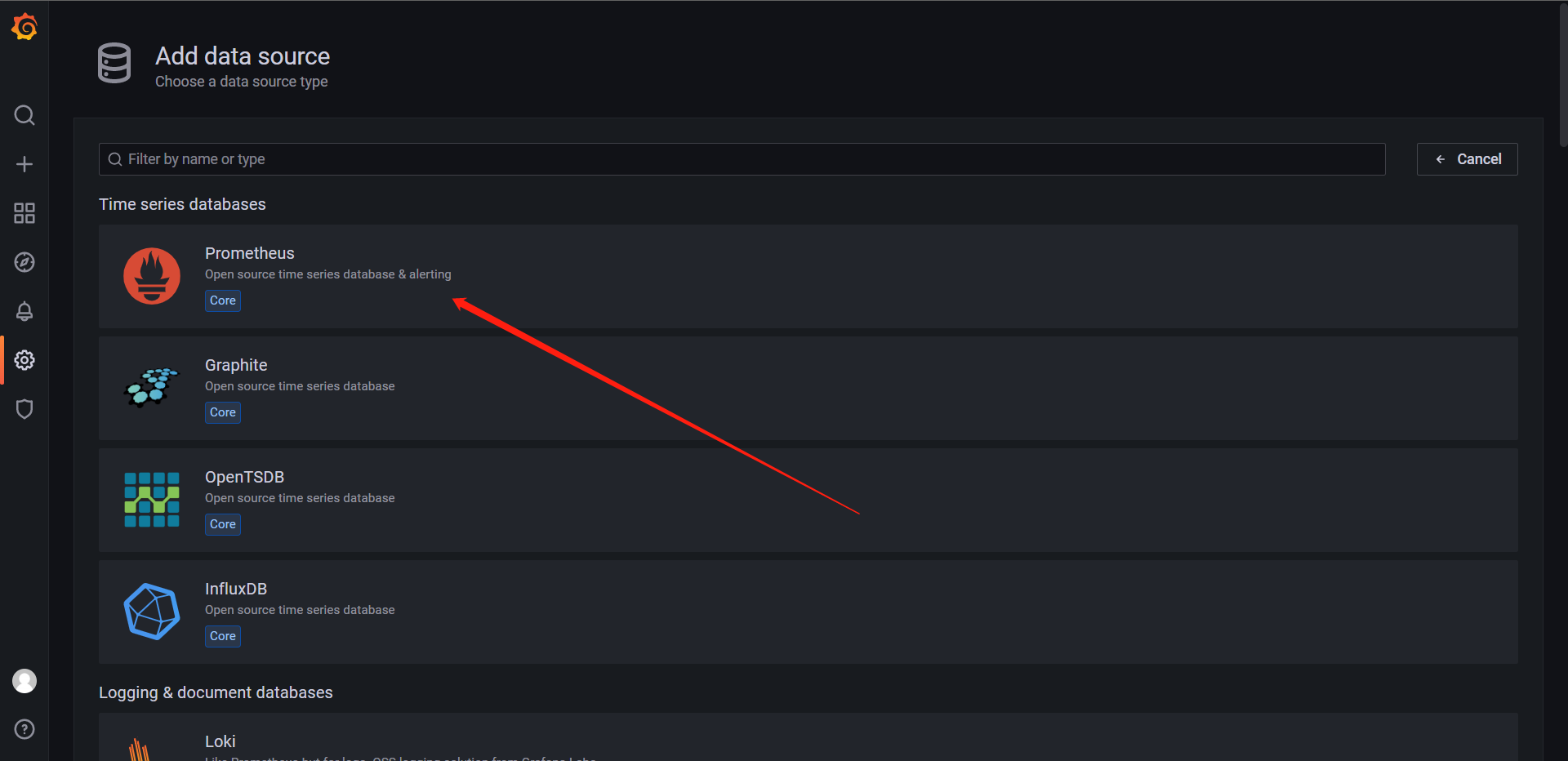

The data here is fine too. Next we deploy Grafana, since we want to visualize the data

# vm-grafana-pv.yaml

kind: PersistentVolume

apiVersion: v1

metadata:

name: grafana-data

labels:

app: grafana

spec:

accessModes:

- ReadWriteOnce

capacity:

storage: 10Gi

storageClassName: local-storage

local:

path: /data/grafana

persistentVolumeReclaimPolicy: Retain

nodeAffinity:

required:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/hostname

operator: In

values:

- k-w-1

# vm-grafana-pvc.yaml

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: grafana-data

namespace: kube-vm

spec:

volumeName: grafana-data

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 10Gi

storageClassName: local-storage

# vm-grafana.yaml

kind: Deployment

apiVersion: apps/v1

metadata:

name: grafana

namespace: kube-vm

labels:

app: grafana

spec:

selector:

matchLabels:

app: grafana

template:

metadata:

labels:

app: grafana

spec:

volumes:

- name: storage

persistentVolumeClaim:

claimName: grafana-data

# Option 1 of 2 (choose either one)

initContainers:

- name: fix-permissions

image: busybox

command: [chown, -R, "472:472", "/var/lib/grafana"]

volumeMounts:

- mountPath: /var/lib/grafana

name: storage

containers:

- name: grafana

image: grafana/grafana:8.5.0

ports:

- containerPort: 3000

name: grafana

# Option 2 of 2 (choose either one)

# securityContext:

# runAsUser: 0

env:

- name: GF_SECURITY_ADMIN_USER

value: admin

- name: GF_SECURITY_ADMIN_PASSWORD

value: admin123

readinessProbe:

failureThreshold: 10

httpGet:

path: /api/health

port: 3000

scheme: HTTP

initialDelaySeconds: 60

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 30

livenessProbe:

failureThreshold: 10

httpGet:

path: /api/health

port: 3000

scheme: HTTP

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

resources:

limits:

cpu: 150m

memory: 512Mi

requests:

cpu: 150m

memory: 512Mi

volumeMounts:

- mountPath: /var/lib/grafana

name: storage

# vm-grafana-svc.yaml

kind: Service

apiVersion: v1

metadata:

name: grafana

namespace: kube-vm

spec:

type: NodePort

ports:

- name: http

port: 3000

targetPort: grafana

selector:

app: grafana

[root@k-m-1 kube-vm]# kubectl get pod,svc,pv,pvc -n kube-vm

NAME READY STATUS RESTARTS AGE

pod/grafana-7dcb9dd5f4-kbg8h 1/1 Running 0 91s

pod/node-exporter-j62mm 1/1 Running 0 93m

pod/node-exporter-lbx4b 1/1 Running 0 93m

pod/prometheus-855c7c4755-572hb 1/1 Running 0 61m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/grafana NodePort 10.96.2.58 <none> 3000:32102/TCP 10s

service/prometheus NodePort 10.96.1.12 <none> 9090:30270/TCP 61m

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/grafana-data 10Gi RWO Retain Bound kube-vm/grafana-data local-storage 2m17s

persistentvolume/prometheus-data 10Gi RWO Retain Bound kube-vm/prometheus-data local-storage 69m

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/grafana-data Bound grafana-data 10Gi RWO local-storage 2m14s

persistentvolumeclaim/prometheus-data Bound prometheus-data 10Gi RWO local-storage 66m

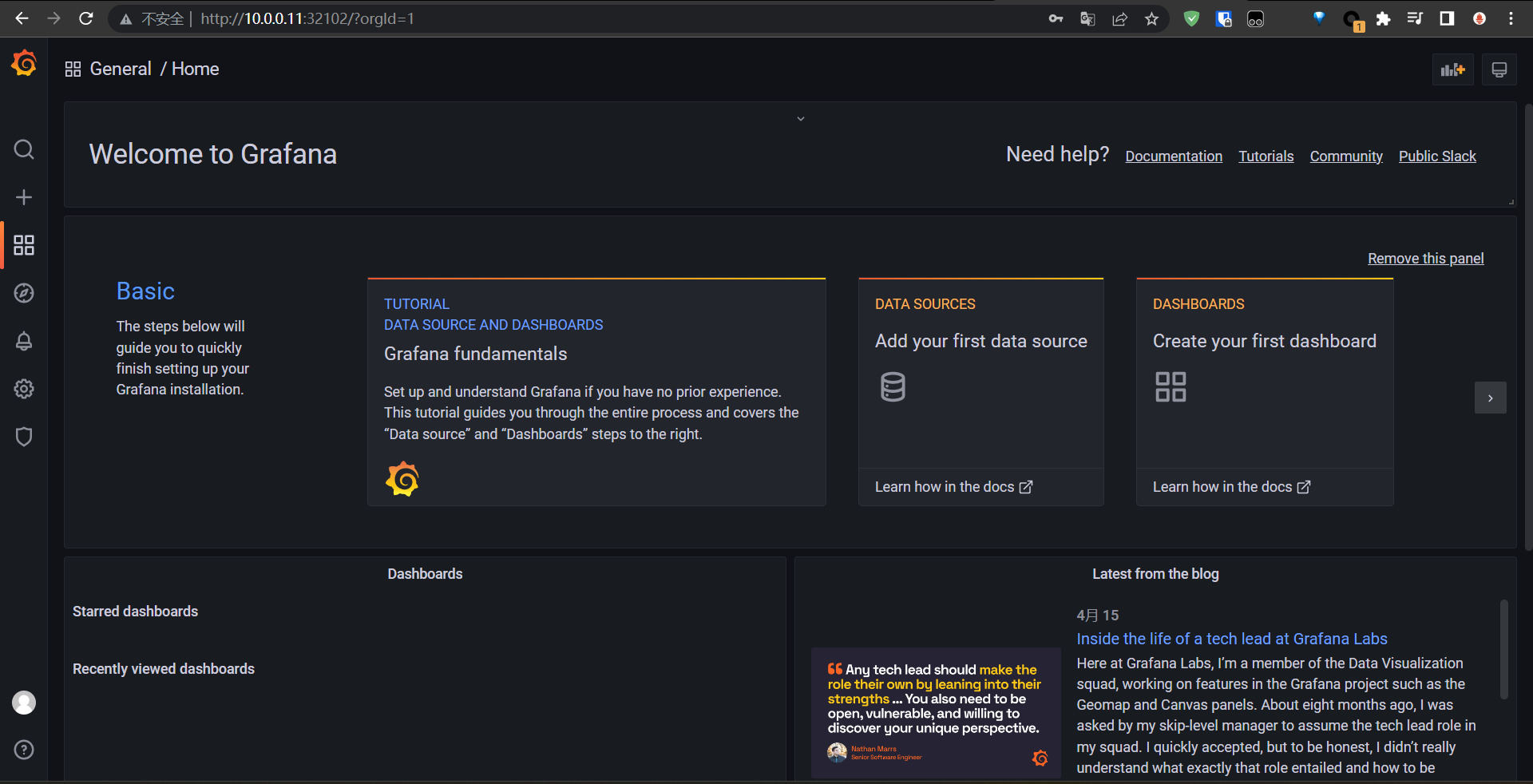

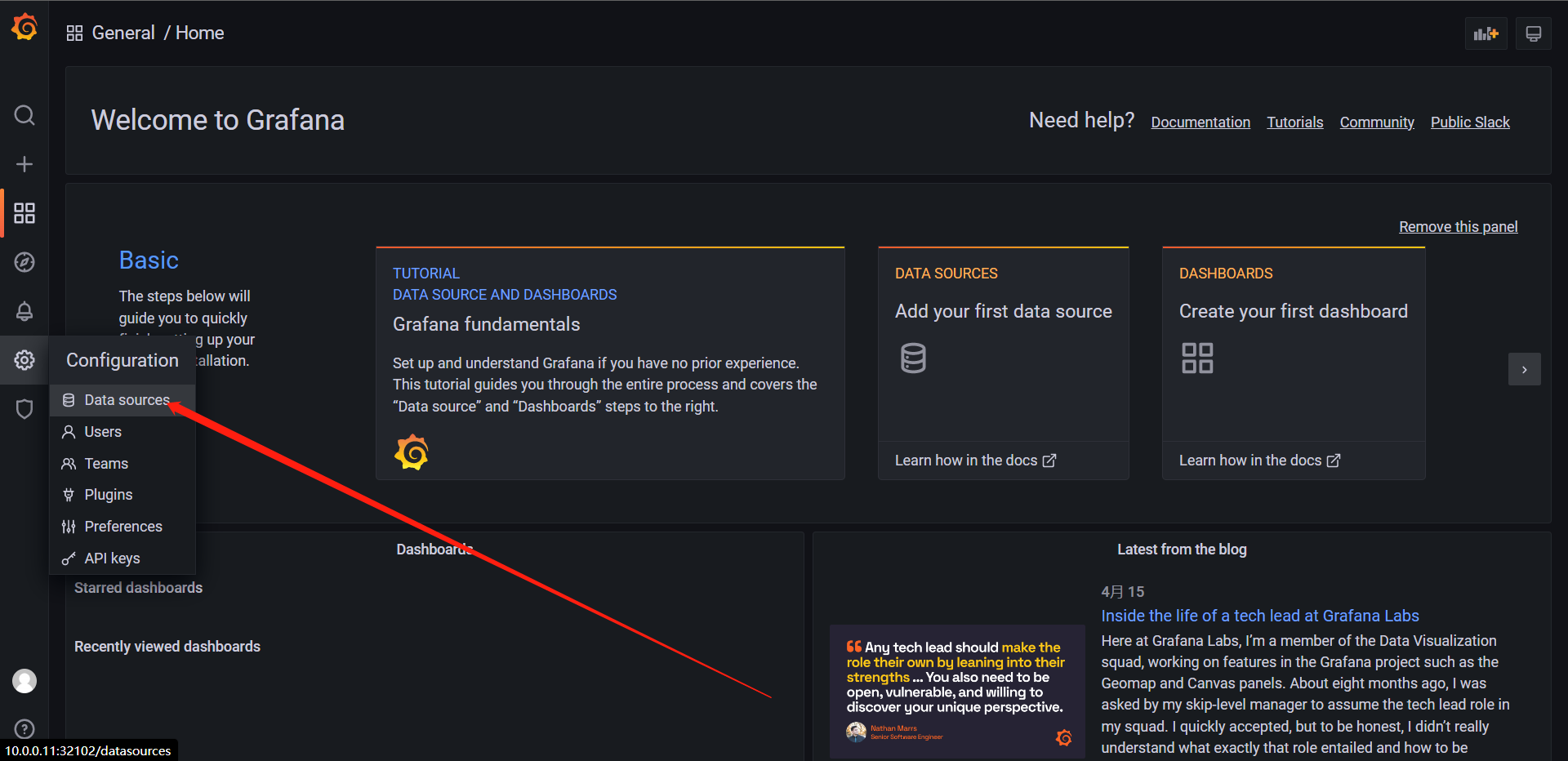

Once the deployment is done, we can open the web UI

# Note: the YAML above sets the admin username and password, so you can log in directly

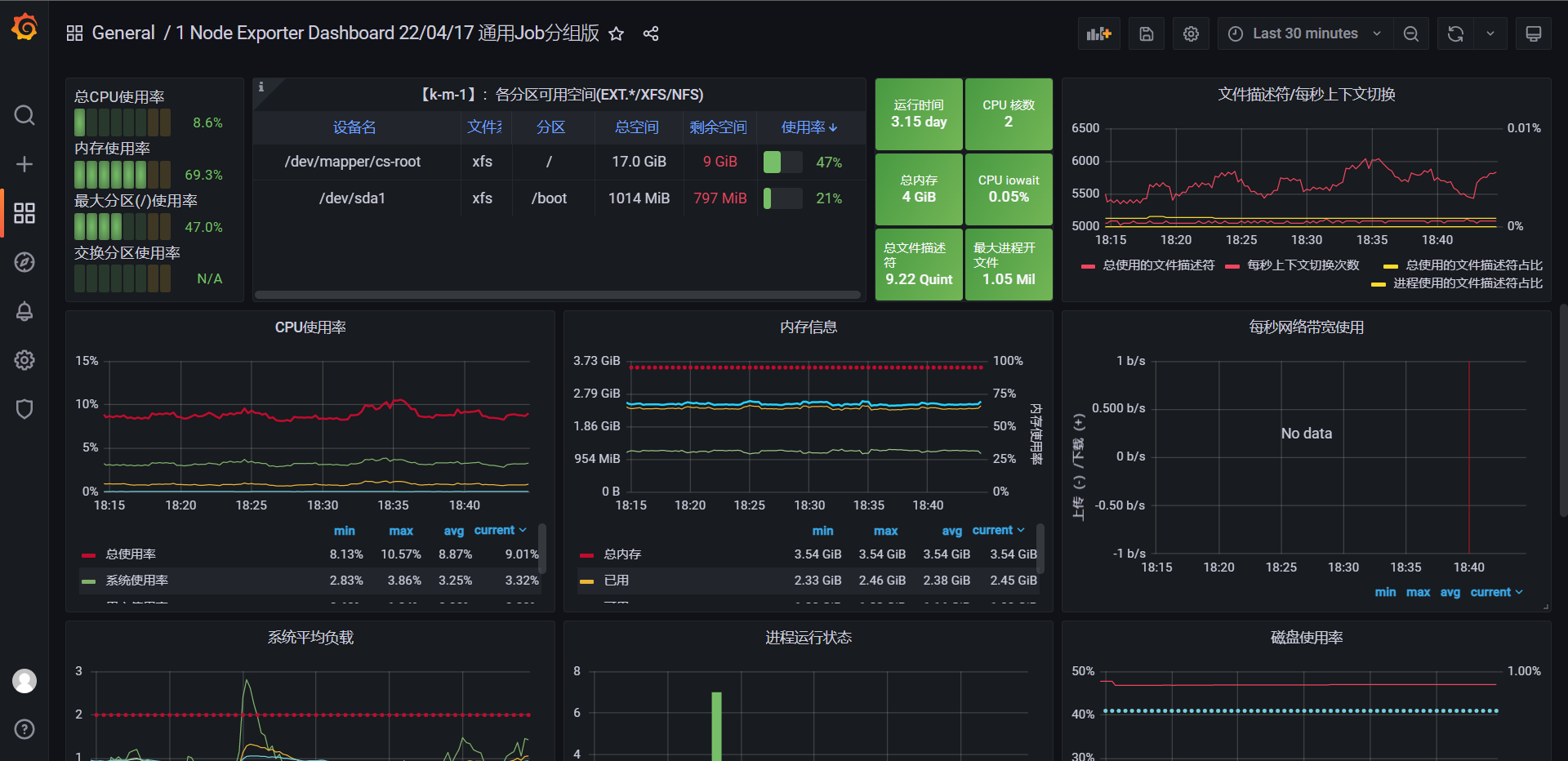

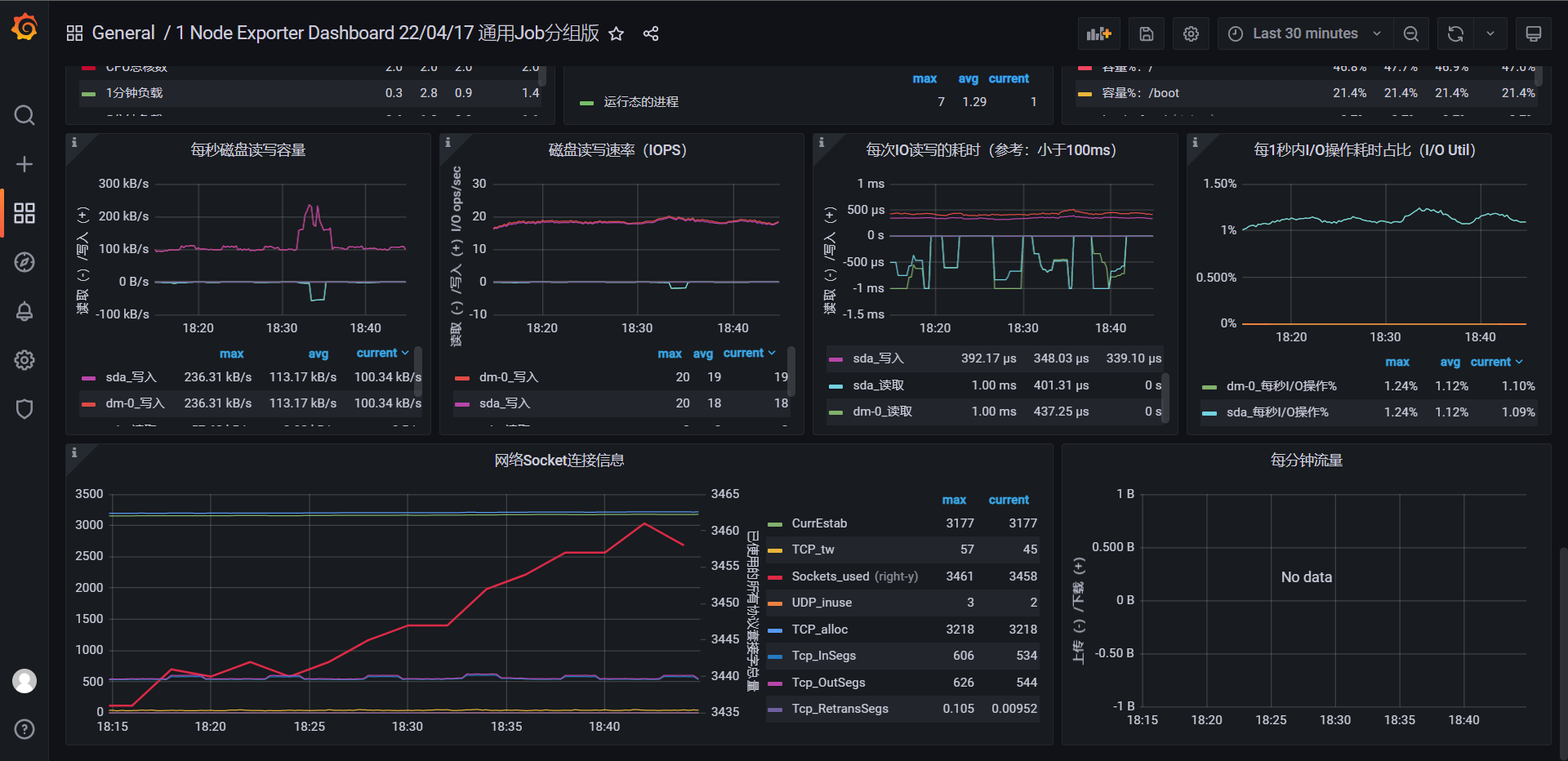

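As you can see, this dashboard is very comprehensive. The next question is how to remote-write the Prometheus data into VM. For that we need to deploy VM; here we use the single-node setup

First we need a VM instance in single-node mode. Running VM is simple: you can download the binary and start it, or start it from the Docker image. We again deploy it on Kubernetes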

# vm-victoria-metrics-pv.yaml

kind: PersistentVolume

apiVersion: v1

metadata:

name: victoria-metrics-data

spec:

accessModes:

- ReadWriteOnce

capacity:

storage: 10Gi

storageClassName: local-storage

local:

path: /data/vm

persistentVolumeReclaimPolicy: Retain

nodeAffinity:

required:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/hostname

operator: In

values:

- k-w-1

# vm-victoria-metrics-pvc.yaml

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: victoria-metrics-data

namespace: kube-vm

spec:

volumeName: victoria-metrics-data

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 10Gi

storageClassName: local-storage

# vm-victoria-metrics.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: victoria-metrics

namespace: kube-vm

spec:

selector:

matchLabels:

app: victoria-metrics

template:

metadata:

labels:

app: victoria-metrics

spec:

volumes:

- name: storage

persistentVolumeClaim:

claimName: victoria-metrics-data

containers:

- name: vm

image: victoriametrics/victoria-metrics:stable

args:

- "-storageDataPath=/var/lib/victoria-metrics-data"

- "-retentionPeriod=1w"

ports:

- name: http

containerPort: 8428

volumeMounts:

- mountPath: /var/lib/victoria-metrics-data

name: storage

# vm-victoria-metrics-svc.yaml

apiVersion: v1

kind: Service

metadata:

name: victoria-metrics

namespace: kube-vm

spec:

type: NodePort

ports:

- name: http

port: 8428

targetPort: http

selector:

app: victoria-metrics

[root@k-m-1 kube-vm]# kubectl get pv,pvc,pod,svc -n kube-vm

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/grafana-data 10Gi RWO Retain Bound kube-vm/grafana-data local-storage 3h

persistentvolume/prometheus-data 10Gi RWO Retain Bound kube-vm/prometheus-data local-storage 4h7m

persistentvolume/victoria-metrics-data 10Gi RWO Retain Bound kube-vm/victoria-metrics-data local-storage 115s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/grafana-data Bound grafana-data 10Gi RWO local-storage 3h

persistentvolumeclaim/prometheus-data Bound prometheus-data 10Gi RWO local-storage 4h4m

persistentvolumeclaim/victoria-metrics-data Bound victoria-metrics-data 10Gi RWO local-storage 112s

NAME READY STATUS RESTARTS AGE

pod/grafana-7dcb9dd5f4-kbg8h 1/1 Running 0 179m

pod/node-exporter-j62mm 1/1 Running 0 4h31m

pod/node-exporter-lbx4b 1/1 Running 0 4h31m

pod/prometheus-855c7c4755-572hb 1/1 Running 0 3h59m

pod/victoria-metrics-598849c7c8-ct5br 1/1 Running 0 31s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/grafana NodePort 10.96.2.58 <none> 3000:32102/TCP 178m

service/prometheus NodePort 10.96.1.12 <none> 9090:30270/TCP 3h59m

service/victoria-metrics NodePort 10.96.3.77 <none> 8428:31135/TCP 14s

# At this point Prometheus and VM are not connected at all. We need to change the Prometheus configuration so that it writes its data to VM

# vm-prom-config.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: prometheus-config

namespace: kube-vm

data:

prometheus.yml: |

global:

scrape_interval: 15s

scrape_timeout: 15s

remote_write:

- url: http://victoria-metrics:8428/api/v1/write

scrape_configs:

- job_name: 'prometheus'

static_configs:

- targets: ['localhost:9090']

- job_name: 'nodes'

static_configs:

- targets: ['10.0.0.11:9100','10.0.0.12:9100']

relabel_configs:

- source_labels: [__address__]

regex: "(.*):(.*)"

replacement: "${1}"

target_label: 'ip'

action: replace

[root@k-m-1 kube-vm]# kubectl apply -f vm-prom-config.yaml

configmap/prometheus-config configured

# Reload the Prometheus configuration

[root@k-m-1 kube-vm]# curl -X POST "http://10.0.0.11:30270/-/reload"

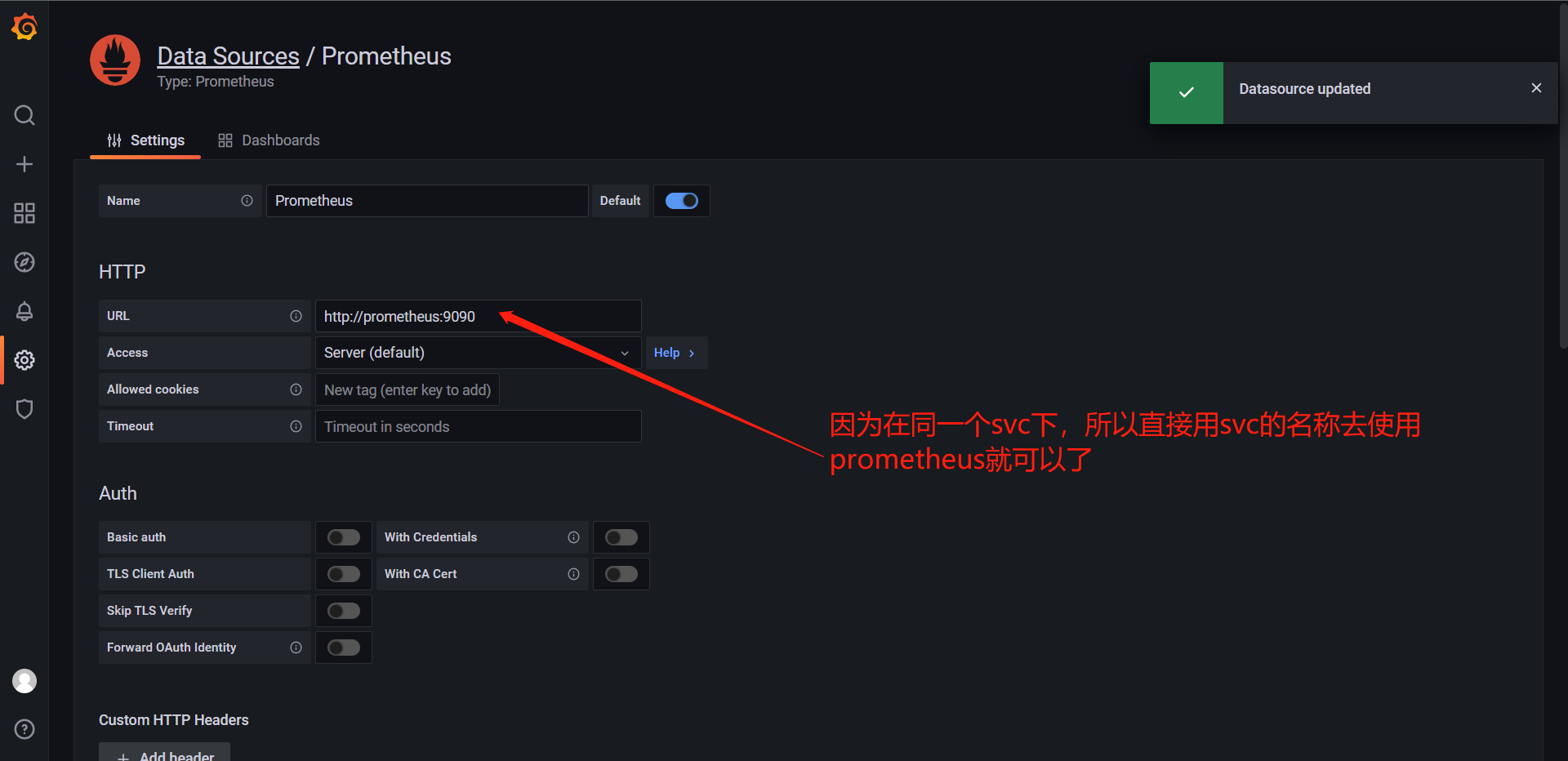

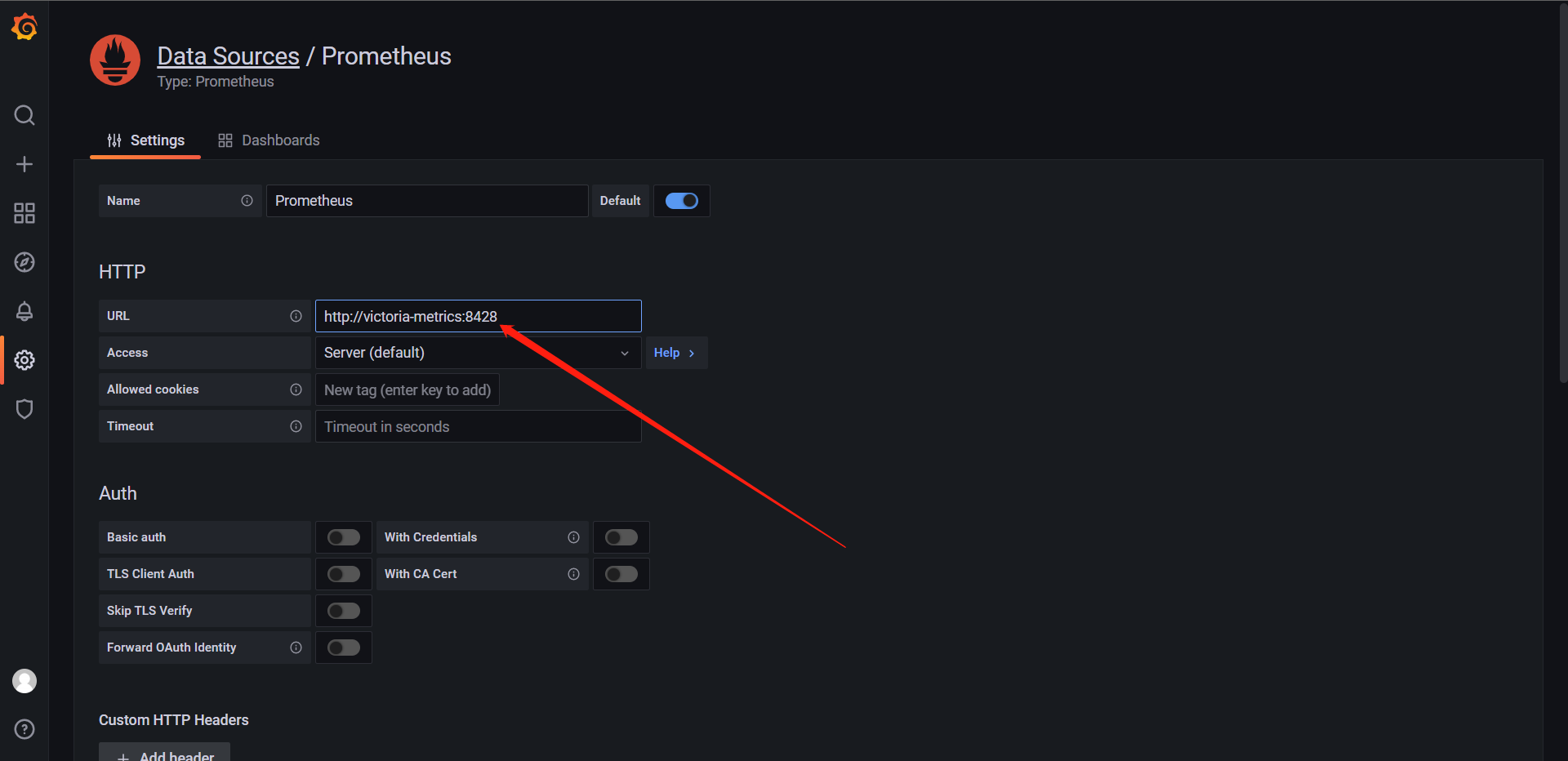

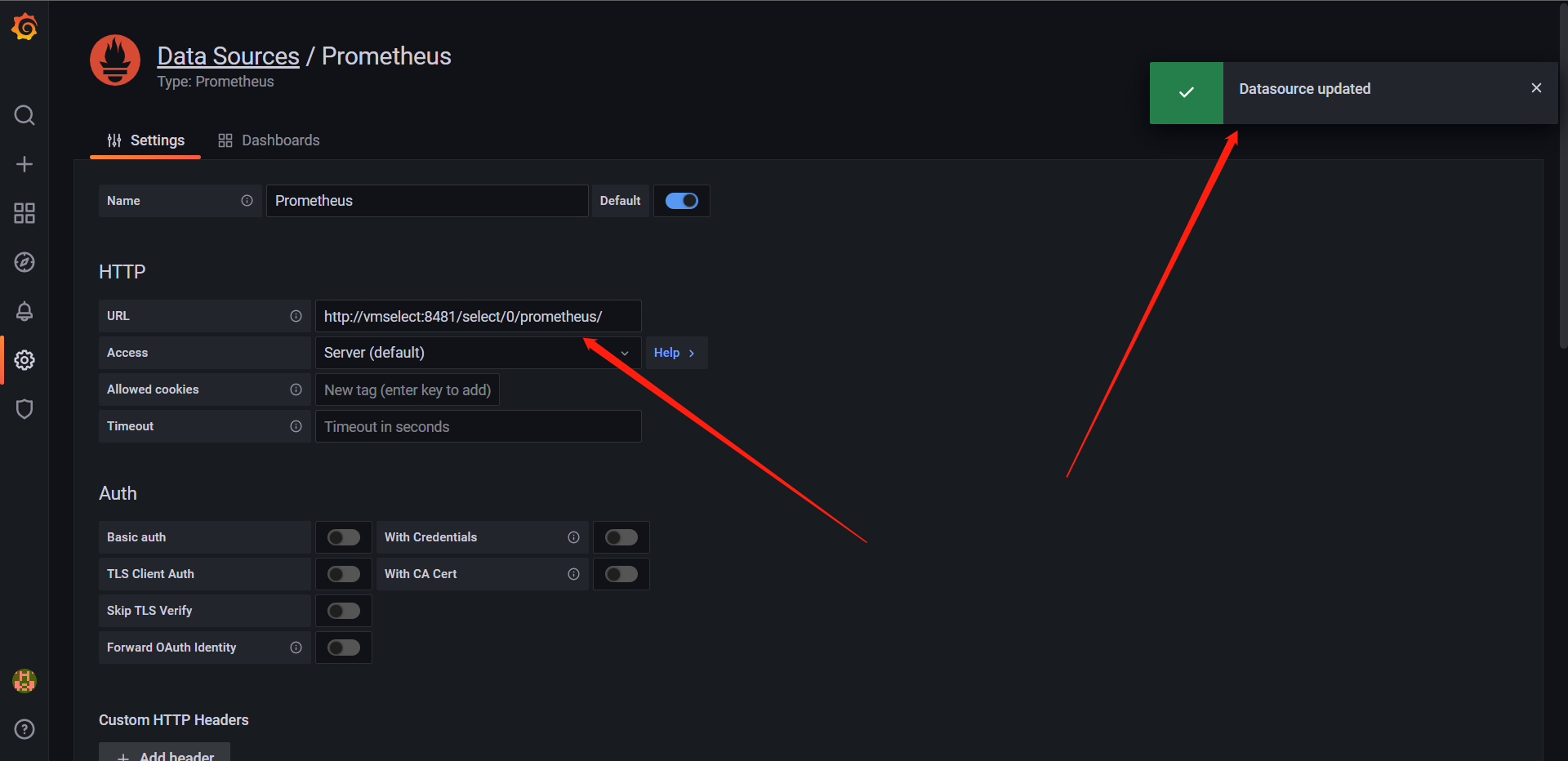

# Data is now being written to VM. Since Grafana is already deployed, we just switch the data source

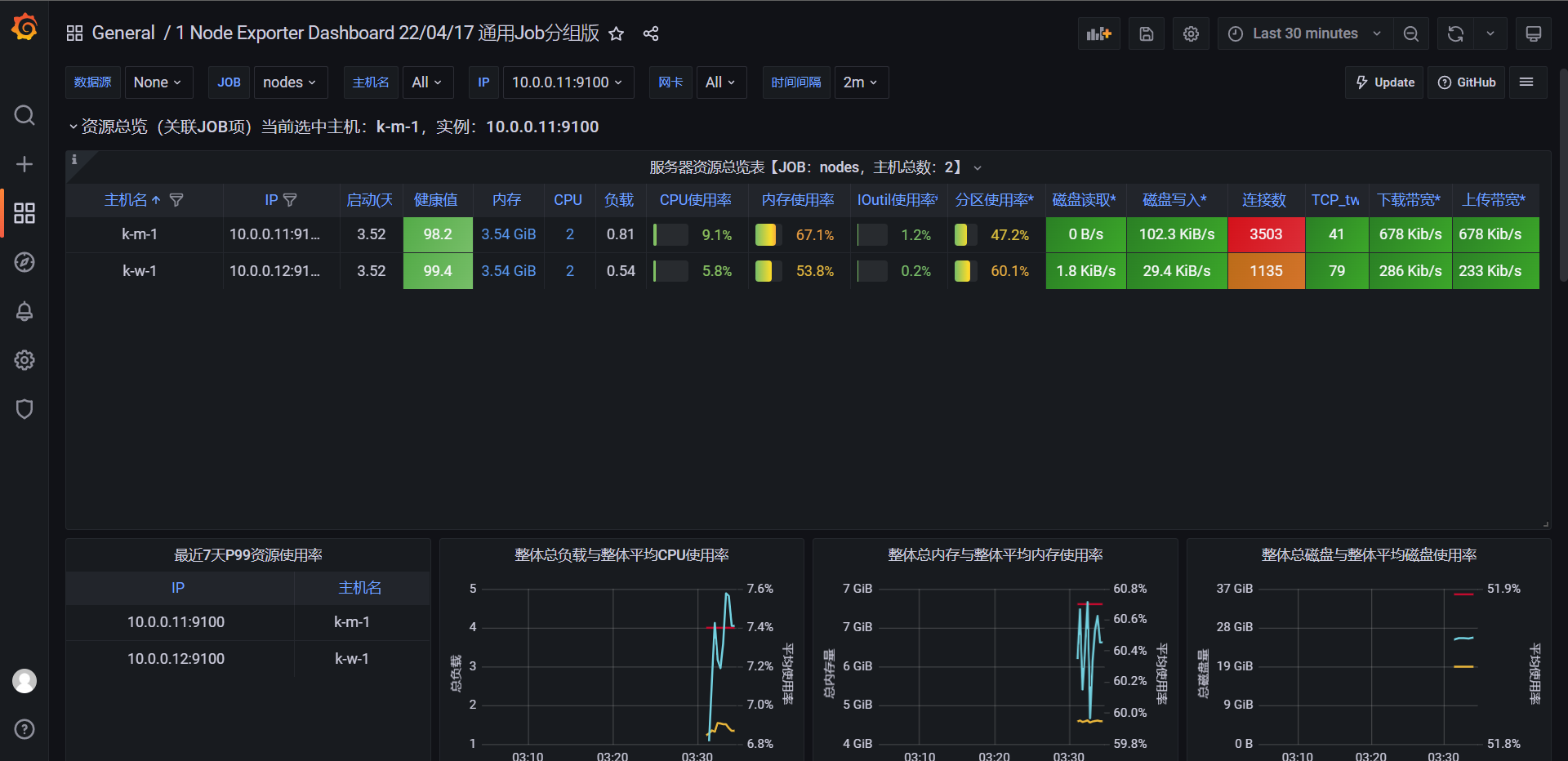

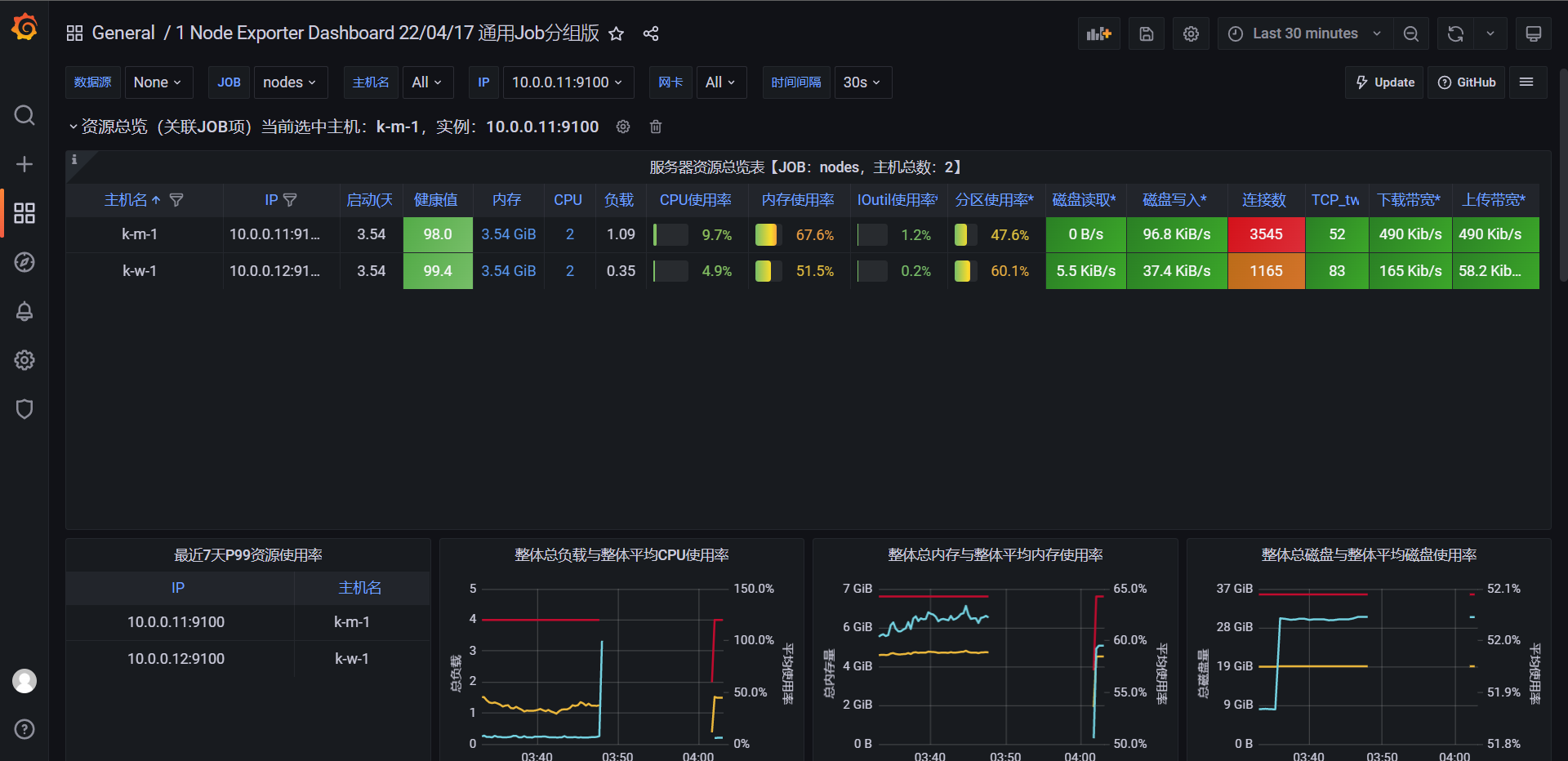

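As you can see, the Prometheus configuration took effect at around 3:30, and the data on the VM side also starts from 3:30.

With Prometheus remote-writing to VM, let's check whether data has appeared in VM's persistent storage directory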

[root@k-w-1 ~]# ls /data/vm/data/

big flock.lock small

# Data is present. Next we consider replacing Prometheus: VM can scrape metrics itself, so we can start the replacement here

# First, stop the Prometheus service

[root@k-m-1 kube-vm]# kubectl scale deployment -n kube-vm prometheus --replicas 0

deployment.apps/prometheus scaled

# Then mount the Prometheus config file into the VM container and point to it with the -promscrape.config flag

# vm-victoria-metrics.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: victoria-metrics

namespace: kube-vm

spec:

selector:

matchLabels:

app: victoria-metrics

template:

metadata:

labels:

app: victoria-metrics

spec:

volumes:

- name: storage

persistentVolumeClaim:

claimName: victoria-metrics-data

- name: prometheus-config

configMap:

name: prometheus-config

containers:

- name: vm

image: victoriametrics/victoria-metrics:stable

args:

- "-storageDataPath=/var/lib/victoria-metrics-data"

- "-retentionPeriod=1w"

- "-promscrape.config=/etc/prometheus/prometheus.yml"

ports:

- name: http

containerPort: 8428

volumeMounts:

- mountPath: /var/lib/victoria-metrics-data

name: storage

- mountPath: /etc/prometheus

name: prometheus-config

# Remember to remove the remote_write section from the Prometheus config and re-apply the ConfigMap

[root@k-m-1 kube-vm]# kubectl apply -f vm-prom-config.yaml

configmap/prometheus-config configured

# Update VM

[root@k-m-1 kube-vm]# kubectl apply -f vm-victoria-metrics.yaml

deployment.apps/victoria-metrics unchanged

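You can see a gap in the data. It comes from switching from Prometheus to VM, which took about ten minutes because I had to troubleshoot an issue. At this point Prometheus has been essentially fully replaced: its Pod is gone, and both the data source and the storage are now VM. Only the collection side still uses the exporter, and the data looks fine.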

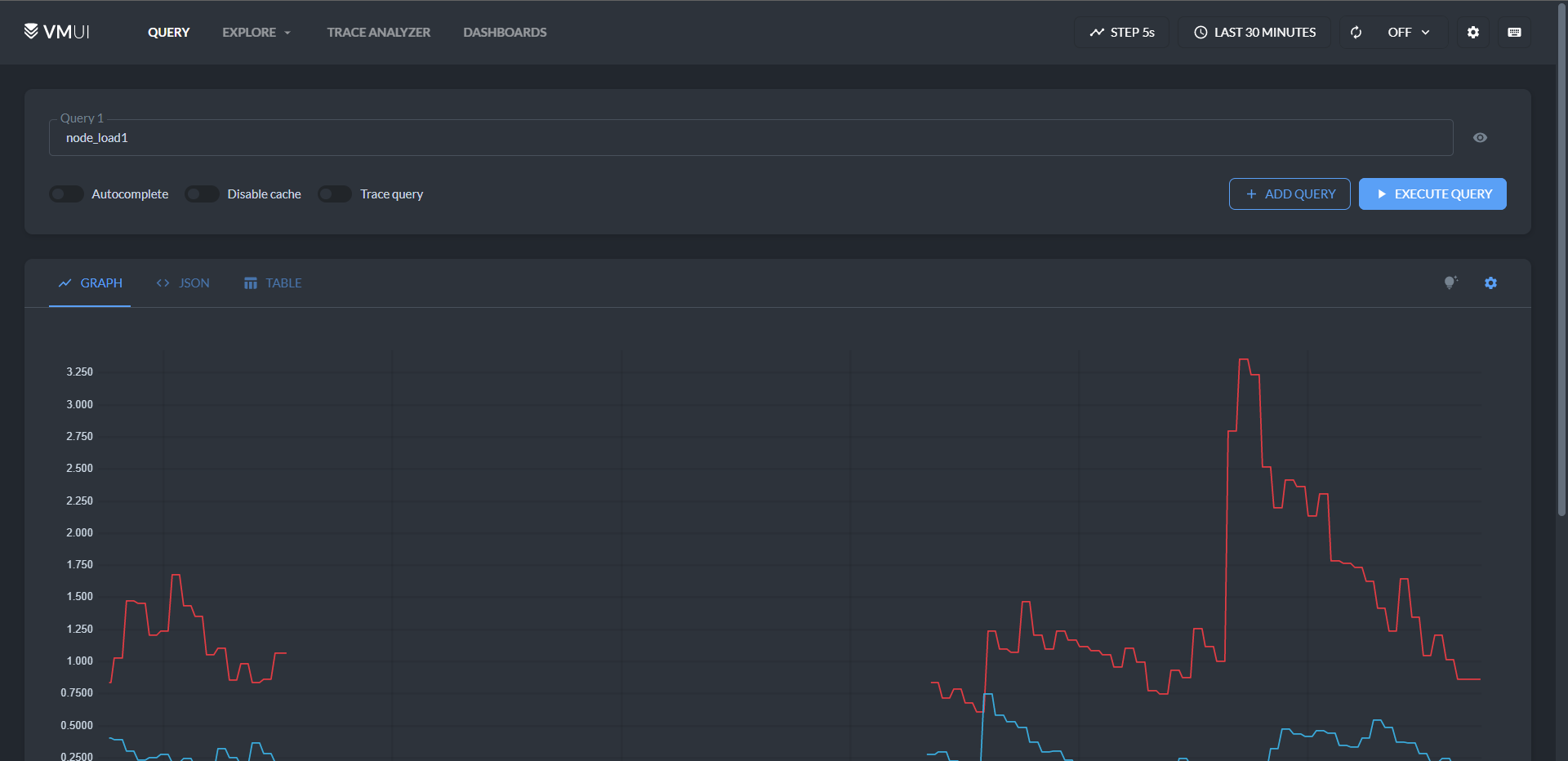

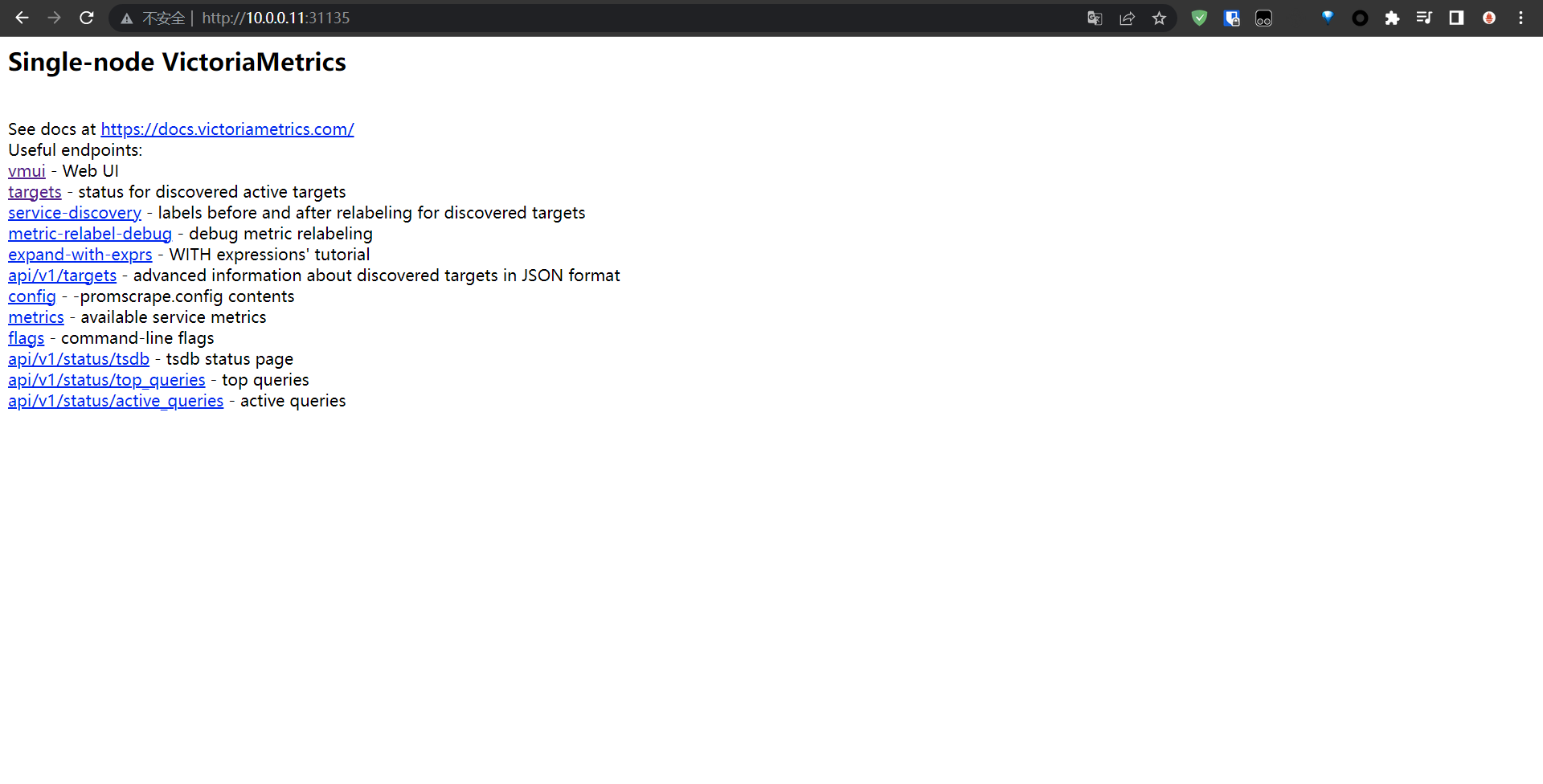

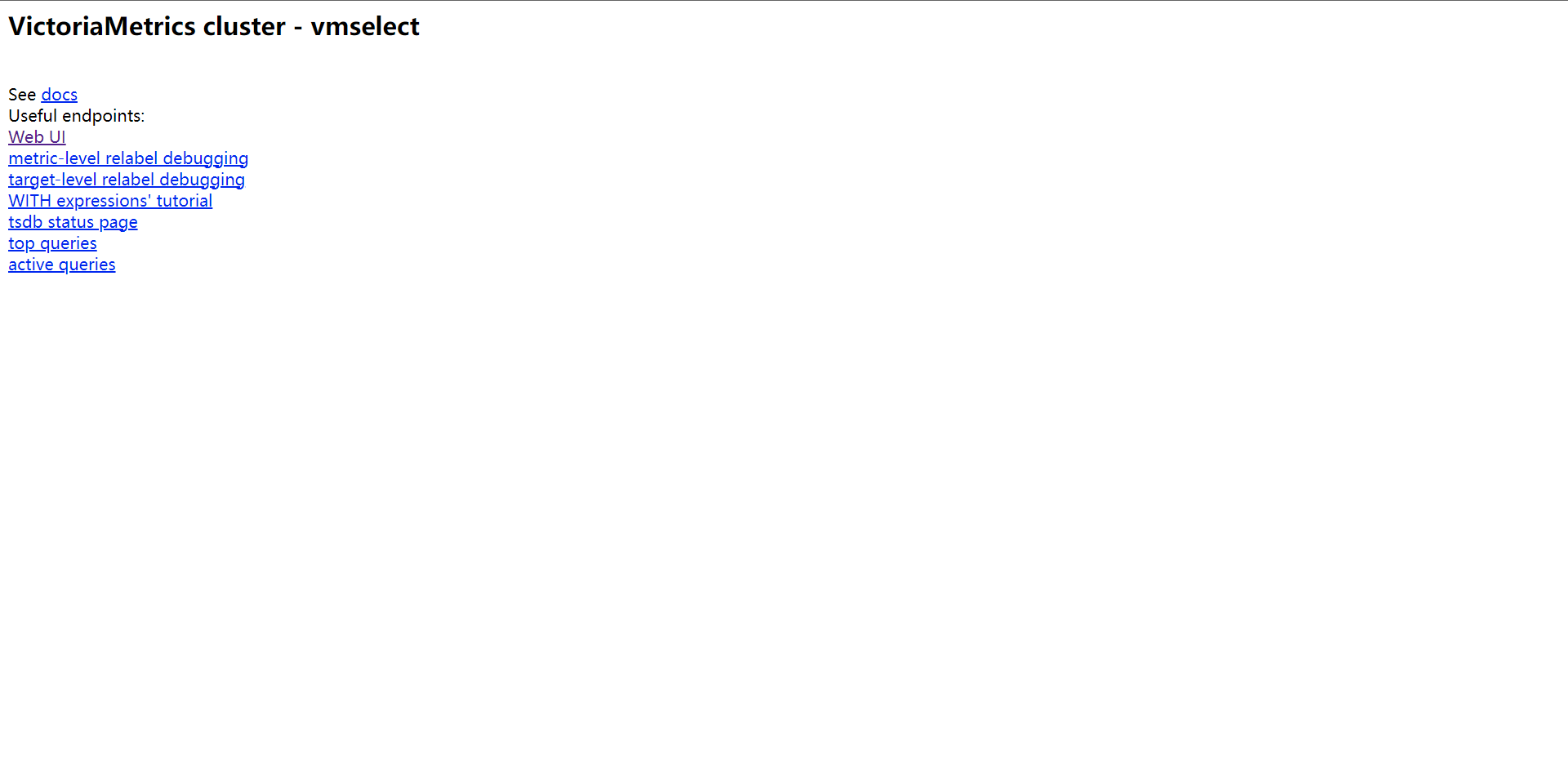

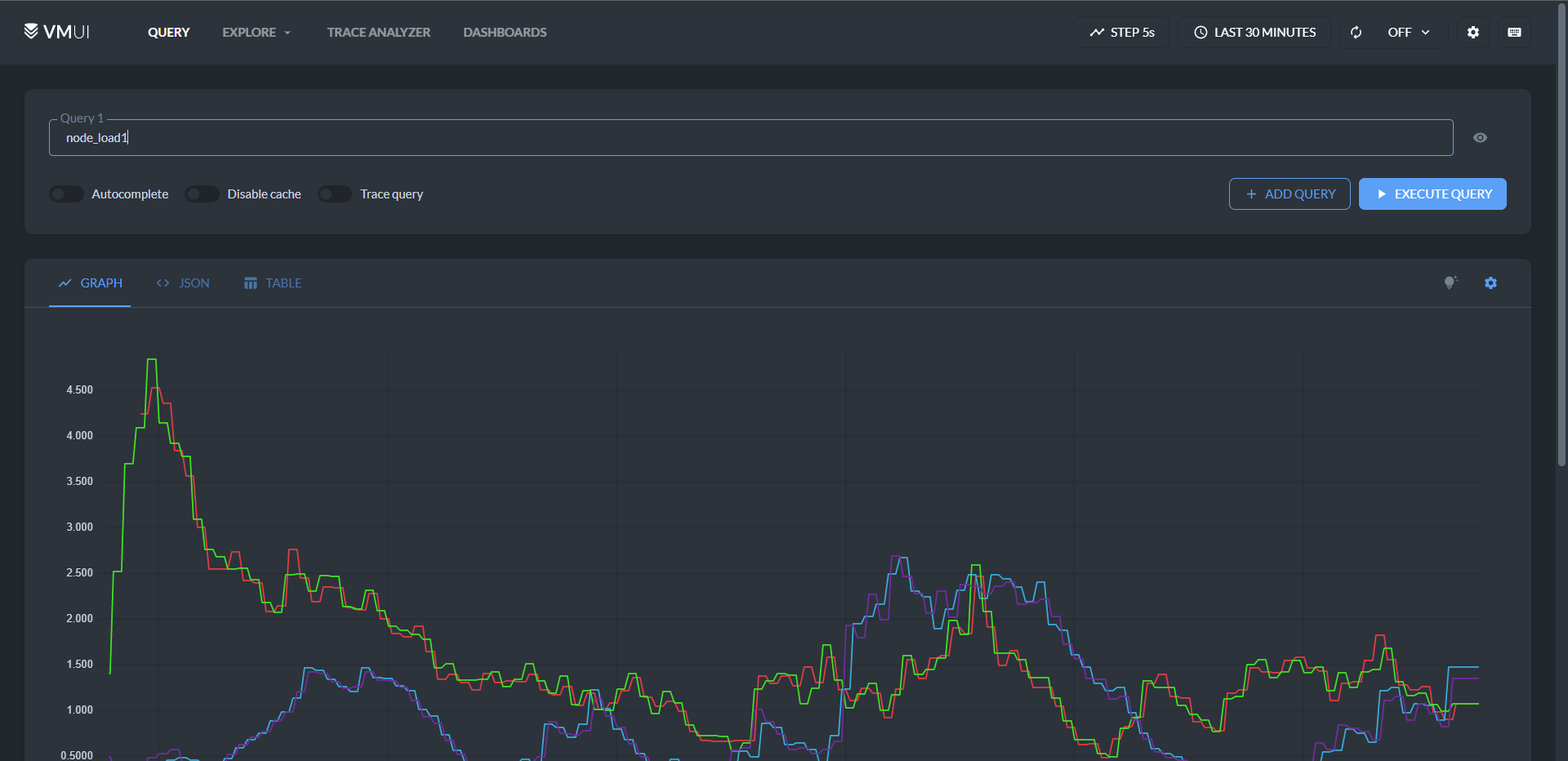

VM also has its own UI, at: http://10.0.0.11:31135/vmui

# It supports PromQL as well; the screenshot below shows our data

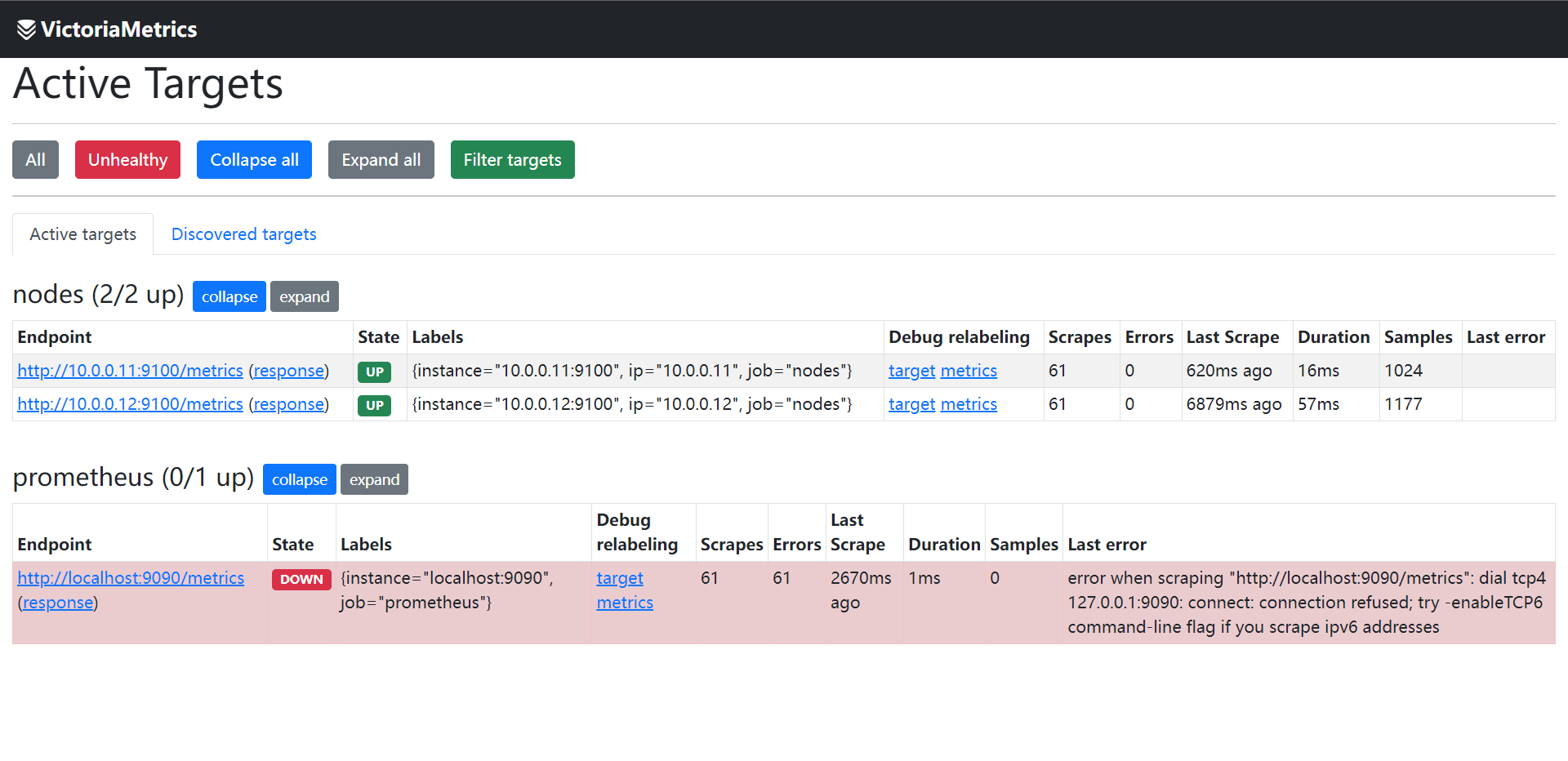

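Its other pages can be reached from http://10.0.0.11:31135, for example targets

I won't go into the error shown here. It comes from the `localhost` target that was configured for the Prometheus Pod, which no longer exists; removing the `prometheus` job with the localhost target from the Prometheus ConfigMap fixes it

That said, VM's web UI is not especially practical. If you prefer the Prometheus UI, you can deploy promxy to aggregate multiple VM instances, view targets, and so on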

# vm-promxy-config.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: promxy-config

namespace: kube-vm

data:

config.yaml: |

promxy:

server_groups:

- static_configs:

- targets: [victoria-metrics:8428]

path_prefix: /prometheus

# vm-promxy.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: promxy

namespace: kube-vm

spec:

selector:

matchLabels:

app: promxy

template:

metadata:

labels:

app: promxy

spec:

volumes:

- configMap:

name: promxy-config

name: promxy-config

containers:

- name: promxy

image: quay.io/jacksontj/promxy:latest

ports:

- name: web

containerPort: 8082

env:

- name: ROLE

value: "1"

args:

- "--config=/etc/promxy/config.yaml"

- "--web.enable-lifecycle"

- "--log-level=trace"

command:

- "/bin/promxy"

volumeMounts:

- mountPath: "/etc/promxy/"

name: promxy-config

readOnly: true

- name: promxy-server-configmaps-reload

image: jimmidyson/configmap-reload:v0.1

args:

- "--volume-dir=/etc/promxy"

- "--webhook-url=http://localhost:8082/-/reload"

volumeMounts:

- mountPath: "/etc/promxy"

name: promxy-config

readOnly: true

# vm-promxy-svc.yaml

apiVersion: v1

kind: Service

metadata:

name: promxy

namespace: kube-vm

spec:

type: NodePort

ports:

- name: web

port: 8082

targetPort: web

selector:

app: promxy

[root@k-m-1 kube-vm]# kubectl get pod,svc -n kube-vm

NAME READY STATUS RESTARTS AGE

pod/grafana-7dcb9dd5f4-kbg8h 1/1 Running 0 10h

pod/node-exporter-j62mm 1/1 Running 0 11h

pod/node-exporter-lbx4b 1/1 Running 0 11h

pod/promxy-c74446bc8-tqqxf 1/1 Running 0 12s

pod/victoria-metrics-797575b5ff-rsw79 1/1 Running 0 36m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/grafana NodePort 10.96.2.58 <none> 3000:32102/TCP 10h

service/prometheus NodePort 10.96.1.12 <none> 9090:30270/TCP 11h

service/promxy NodePort 10.96.0.251 <none> 8082:32757/TCP 3m15s

service/victoria-metrics NodePort 10.96.3.77 <none> 8428:31135/TCP 7h6m

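# Keep in mind that this is still not the same as Prometheus: it provides only part of Prometheus's functionality. Currently only querying works the Prometheus way; the rest is not compatible.

That covers deploying single-node VM and replacing Prometheus with it. Next we introduce the VM cluster version and how to use it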

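4: Introduction to the VictoriaMetrics Cluster

For ingestion rates below 1 million data points per second, the single-node version is recommended over the cluster version. The single-node version scales with the number of CPU cores, the amount of RAM, and the available storage space, and it is easier to configure and operate than the cluster version, so think carefully before choosing the cluster version. Above we used the single-node version as storage and also used it to replace Prometheus; next let's see how to use the cluster version.

Main features of the cluster version:

1: Supports all features of the single-node version

2: Performance and capacity scale horizontally

3: Supports multiple independent namespaces for time series data (multi-tenancy)

4: Supports replication

Component services

We already covered VM's basic architecture above. In cluster mode it mainly consists of the following services:

1: vmstorage: stores the raw data and returns the queried data for the given label filters within the given time range. When the directory pointed to by `-storageDataPath` has less free space than `-storage.minFreeDiskSpaceBytes`, `vmstorage` switches to read-only mode; `vminsert` nodes then stop sending data to such nodes and re-route it to the remaining `vmstorage` nodes

2: vminsert: accepts ingested data and spreads it across `vmstorage` nodes using consistent hashing over the metric name and all of its labels

3: vmselect: executes queries by fetching the required data from all configured `vmstorage` nodes

Each service can be scaled independently. `vmstorage` nodes do not know about each other, do not communicate with each other, and do not share any data. This improves cluster availability and simplifies cluster maintenance and scaling.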
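To make the idea of hashing a series onto a storage node more concrete, here is a small sketch. It is not VictoriaMetrics' actual algorithm: it uses rendezvous (highest-random-weight) hashing, swapped in as a simple stand-in for consistent hashing, and the names `series_key` and `pick_node` are hypothetical. It shows two properties described above: the same series always lands on the same node, and only series owned by an unavailable node are re-routed.

```python
import hashlib
from typing import Dict, Iterable, Sequence


def series_key(metric_name: str, labels: Dict[str, str]) -> bytes:
    """Build a canonical key from the metric name and all of its labels."""
    # Sorting the labels makes the key independent of label order.
    parts = [metric_name] + [f"{name}={value}" for name, value in sorted(labels.items())]
    return ";".join(parts).encode("utf-8")


def pick_node(key: bytes, nodes: Sequence[str], unavailable: Iterable[str] = ()) -> str:
    """Pick the available node with the highest hash score for this key."""
    down = set(unavailable)
    candidates = [node for node in nodes if node not in down]
    if not candidates:
        raise RuntimeError("no vmstorage node available")
    # Each (node, key) pair gets its own score; the best-scoring node owns the key.
    return max(
        candidates,
        key=lambda node: hashlib.sha256(node.encode("utf-8") + b"|" + key).digest(),
    )


if __name__ == "__main__":
    nodes = ["vmstorage-0", "vmstorage-1", "vmstorage-2"]
    key = series_key("node_load1", {"job": "nodes", "ip": "10.0.0.11"})
    print(pick_node(key, nodes))
```

Because every node is scored independently, taking one node out only moves the series that node owned; all other series keep their owner.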

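A minimal cluster must contain the following nodes:

1: A single `vmstorage` node with the `-retentionPeriod` and `-storageDataPath` flags

2: A single `vminsert` node with `-storageNode=<vmstorage_host>`

3: A single `vmselect` node with `-storageNode=<vmstorage_host>`

For production use, however, it is recommended to run at least two nodes of each service for high availability, combined with Kubernetes features such as affinity, so that the whole cluster does not go down when one node crashes. For larger clusters it is better to run many small vmstorage nodes, because this reduces the additional workload on the remaining vmstorage nodes when some of them are temporarily unavailable.

In addition to flags, each service can also be configured through environment variables:

1: The `-envflag.enable` flag must be set

2: Each `.` in a flag name must be replaced with `_`; for example, `-insert.maxQueueDuration <duration>` becomes the environment variable `insert_maxQueueDuration=<duration>`

3: For repeated flags an alternative syntax is available: join the values with `,` as the separator; for example, `-storageNode <node1> -storageNode <node2>` becomes `storageNode=<node1>,<node2>`

4: `-envflag.prefix` sets a prefix for environment variables; for example, with `-envflag.prefix=VM_` every environment variable name must start with `VM_`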
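The flag-to-environment-variable rules above are mechanical, so they can be sketched as a small helper. This is an illustration of the naming rules only, assuming the prefix is simply prepended to the converted flag name; the function names are made up for this example and are not part of any VictoriaMetrics tool.

```python
from typing import Dict, Iterable, Tuple


def flag_to_env_name(flag: str, prefix: str = "") -> str:
    """Convert a flag such as -insert.maxQueueDuration to an env variable name."""
    # Drop leading dashes, replace every "." with "_", then prepend the prefix.
    return prefix + flag.lstrip("-").replace(".", "_")


def flags_to_env(flags: Iterable[Tuple[str, str]], prefix: str = "") -> Dict[str, str]:
    """Convert (flag, value) pairs; values of repeated flags are joined with commas."""
    env: Dict[str, str] = {}
    for flag, value in flags:
        name = flag_to_env_name(flag, prefix)
        env[name] = f"{env[name]},{value}" if name in env else value
    return env


if __name__ == "__main__":
    pairs = [
        ("-storageNode", "vmstorage-0:8400"),
        ("-storageNode", "vmstorage-1:8400"),
        ("-insert.maxQueueDuration", "1m"),
    ]
    for name, value in flags_to_env(pairs, prefix="VM_").items():
        print(f"{name}={value}")
```

The resulting names only take effect when the service is started with `-envflag.enable`, and the prefix must match the value of `-envflag.prefix`.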

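Multi-tenancy

VM clusters also support multiple isolated tenants (also called namespaces). A tenant is identified by `accountID` or `accountID:projectID`, which is placed in the request URL

1: Each accountID and projectID is an arbitrary 32-bit integer in the range [0 .. 2^32). If projectID is missing, it is automatically set to 0. Other tenant information, such as authentication tokens, tenant names, limits, and billing, is expected to be stored in a separate relational database. That database must be managed by a separate service sitting in front of the VictoriaMetrics cluster, such as vmauth or vmgateway

2: A tenant is created automatically when the first data point is written to it

3: Data for all tenants is spread evenly among the available vmstorage nodes. This keeps the load balanced across vmstorage nodes even when tenants have different data volumes and query loads

4: Database performance and resource usage do not depend on tenant configuration. They depend mainly on the total number of active time series across all tenants. A time series is considered active if it received at least one sample during the last hour or was queried during the last hour

5: VictoriaMetrics does not support querying multiple tenants in a single request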
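As a sketch of how the tenant identifier ends up in the URL, the helper below builds insert and select URLs for a tenant. The host names `vminsert` and `vmselect` and the ports 8480 and 8481 are taken from this article; the `/insert/<tenant>/prometheus/` layout matches the remote_write URL used later in the cluster deployment, and the `/select/<tenant>/prometheus/` layout is assumed to mirror it. The function names are hypothetical.

```python
from typing import Optional

MAX_TENANT_ID = 2**32 - 1  # IDs are 32-bit integers in [0 .. 2^32)


def tenant_token(account_id: int, project_id: Optional[int] = None) -> str:
    """Return "accountID" or "accountID:projectID" after range-checking both IDs."""
    for value in (account_id, 0 if project_id is None else project_id):
        if not 0 <= value <= MAX_TENANT_ID:
            raise ValueError(f"tenant ID out of 32-bit range: {value}")
    if project_id is None:
        return str(account_id)
    return f"{account_id}:{project_id}"


def insert_url(host: str, account_id: int, project_id: Optional[int] = None) -> str:
    """URL that a remote_write client would use for this tenant."""
    return f"http://{host}:8480/insert/{tenant_token(account_id, project_id)}/prometheus/"


def select_url(host: str, account_id: int, project_id: Optional[int] = None) -> str:
    """Base URL that a Prometheus-style data source would use for this tenant."""
    return f"http://{host}:8481/select/{tenant_token(account_id, project_id)}/prometheus/"


if __name__ == "__main__":
    print(insert_url("vminsert", 0))
    print(select_url("vmselect", 1, 2))
```

Since a tenant is created on first write, no extra provisioning step is needed before using a new `accountID` in these URLs.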

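Cluster resizing and scalability

The performance and capacity of a VM cluster can be scaled in two ways:

1: By adding resources (CPU, RAM, disk IO, disk space, network bandwidth) to existing nodes in the cluster; this is called vertical scalability

2: By adding more nodes to the cluster; this is called horizontal scalability

Some general recommendations for scaling a cluster:

1: Adding more CPU and memory to all vmselect nodes improves the performance of heavy queries, which process large numbers of time series and raw samples

2: Adding more vmstorage nodes increases the number of active time series the cluster can handle. It also improves query performance for time series with a high churn rate. Cluster stability improves as the number of vmstorage nodes grows, because when some vmstorage nodes become unavailable, each remaining node has to absorb less additional workload.

3: Adding more CPU and memory to existing vmstorage nodes increases the number of active time series the cluster can handle. Still, adding more vmstorage nodes is preferable to adding CPU and memory to existing ones, because more vmstorage nodes improve cluster stability and query performance for time series with a high churn rate.

4: Adding more vminsert nodes increases the maximum ingestion rate, because ingested data can be split across more vminsert nodes.

Cluster availability:

1: The HTTP load balancer must stop routing requests to unavailable vminsert and vmselect nodes

2: The cluster remains available as long as at least one vmstorage node exists:

1: vminsert re-routes incoming data from unavailable vmstorage nodes to healthy vmstorage nodes

2: vmselect keeps returning partial responses as long as at least one vmstorage node is available. If consistency matters more than availability, pass the `-search.denyPartialResponse` flag to vmselect or add the `deny_partial_response=1` query parameter to requests sent to vmselect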

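Deduplication

If the `-dedup.minScrapeInterval` command-line flag is set to a duration greater than 0, VictoriaMetrics de-duplicates data points. For example, `-dedup.minScrapeInterval=60s` de-duplicates data points of the same time series that fall into the same discrete 60-second bucket. The earliest data point is kept; when timestamps are equal, an arbitrary data point is kept.

The recommended value of `-dedup.minScrapeInterval` is the value of scrape_interval in the Prometheus configuration. It is recommended to use a single scrape_interval across all scrape targets.

If multiple identically configured vmagent or Prometheus instances in an HA setup write data to the same VictoriaMetrics instance, deduplication reduces disk space usage. These vmagent or Prometheus instances must have the same external_labels section in their configuration, so that they write data to the same time series.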

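Capacity planning

According to case studies, VictoriaMetrics uses less CPU, memory, and storage space on production workloads than competing solutions (Prometheus, Thanos, Cortex, TimescaleDB, InfluxDB, QuestDB, M3DB)

Each node type (vminsert, vmselect, and vmstorage) can run on the hardware that suits it best. Cluster capacity scales linearly with available resources. The CPU and memory needed per node type depend heavily on the workload: the number of active time series, series churn rate, query types, query QPS, and so on. It is recommended to deploy a test VictoriaMetrics cluster for each production workload and iteratively tune per-node resources and the number of nodes per type until the cluster becomes stable. It is also recommended to set up monitoring for the cluster, which helps identify bottlenecks in the cluster setup.

The storage space needed for a given retention (set with the `-retentionPeriod` flag on vmstorage) can be extrapolated from disk usage during a test run. For example, if storage usage is 10GB after one day of running the production workload, then `-retentionPeriod=100d` (100 days of retention) needs at least 10GB*100=1TB of disk space. Storage usage can be monitored with the official Grafana dashboard for the VictoriaMetrics cluster.

It is recommended to keep the following spare resources:

1: 50% free memory on all node types, to reduce the chance of OOM crashes during temporary workload spikes

2: 50% spare CPU on all node types, to reduce the chance of slowdowns during temporary workload spikes

3: At least 30% free storage space in the directory pointed to by the `-storageDataPath` flag on vmstorage nodes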
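The storage estimate above is simple arithmetic, sketched here so it can be reused with other numbers. The function names are illustrative only. The second function applies the 30% free-space recommendation on top of the retained data; that combination is this sketch's own reading, not an official formula.

```python
def retained_data_gb(daily_usage_gb: float, retention_days: int) -> float:
    """Disk space used by the data itself after the full retention period."""
    return daily_usage_gb * retention_days


def provisioned_disk_gb(
    daily_usage_gb: float, retention_days: int, free_fraction: float = 0.30
) -> float:
    """Disk size to provision so that `free_fraction` of it stays free."""
    if not 0 <= free_fraction < 1:
        raise ValueError("free_fraction must be in [0, 1)")
    # The data may only occupy (1 - free_fraction) of the provisioned disk.
    return retained_data_gb(daily_usage_gb, retention_days) / (1.0 - free_fraction)


if __name__ == "__main__":
    # 10 GB per day with -retentionPeriod=100d, as in the example above.
    print(retained_data_gb(10, 100))
    print(round(provisioned_disk_gb(10, 100), 1))
```

For the 10 GB per day example, the data itself needs 1000 GB, and keeping 30% of the disk free raises the provisioned size above that.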

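Some capacity planning tips for VictoriaMetrics clusters:

1: Replication increases the resources required by the cluster by up to N times, where N is the replication factor

2: The cluster's capacity for active time series can be increased by adding more vmstorage nodes and/or by increasing the memory and CPU of each vmstorage node.

3: Query latency can be reduced by increasing the number of vmstorage nodes and/or by increasing the memory and CPU of each vmselect node

4: The total number of CPU cores needed by all vminsert nodes can be calculated from the ingestion rate: CPUs = ingestion_rate / 100K.

5: The `-rpc.disableCompression` flag on vminsert nodes can increase ingestion capacity, at the cost of higher network bandwidth usage between vminsert and vmstorage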
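The vminsert CPU rule of thumb from the list above (CPUs = ingestion_rate / 100K) can be written as a short helper. The function name and the optional `spare_fraction` parameter are assumptions of this sketch; the parameter simply applies the 50% spare CPU recommendation from the previous section.

```python
import math


def vminsert_cpu_cores(ingestion_rate: float, spare_fraction: float = 0.0) -> int:
    """Total CPU cores across all vminsert nodes for a given samples/sec rate."""
    if not 0 <= spare_fraction < 1:
        raise ValueError("spare_fraction must be in [0, 1)")
    base_cores = ingestion_rate / 100_000  # CPUs = ingestion_rate / 100K
    # Leave `spare_fraction` of the total capacity idle, then round up.
    return math.ceil(base_cores / (1.0 - spare_fraction))


if __name__ == "__main__":
    print(vminsert_cpu_cores(1_000_000))
    print(vminsert_cpu_cores(1_000_000, spare_fraction=0.5))
```

The result is a total across all vminsert nodes, so it can be split over several replicas of the Deployment.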

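Replication and data safety

By default, VM relies on the underlying storage pointed to by `-storageDataPath` for data replication.

We can, however, enable replication manually by passing `-replicationFactor=N` to vminsert. This guarantees that all data remains available for querying even if up to N-1 vmstorage nodes are unavailable. The cluster must contain at least 2*N-1 vmstorage nodes, where N is the replication factor, so that the specified replication factor can be maintained for newly ingested data when N-1 storage nodes are lost.

For example, when `-replicationFactor=3` is passed to vminsert, it replicates all ingested data to 3 different vmstorage nodes, so up to 2 vmstorage nodes can be lost without losing data. The minimum number of vmstorage nodes should be 2*3-1=5, so that when 2 vmstorage nodes are lost, the remaining 3 can still serve newly ingested data.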
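The node-count rule above is easy to check for other replication factors. A minimal sketch, with hypothetical function names:

```python
def tolerated_node_losses(replication_factor: int) -> int:
    """Number of vmstorage nodes that may be lost without losing data (N-1)."""
    if replication_factor < 1:
        raise ValueError("replication factor must be at least 1")
    return replication_factor - 1


def min_vmstorage_nodes(replication_factor: int) -> int:
    """Minimum vmstorage nodes needed to keep replicating after N-1 losses (2*N-1)."""
    # After losing N-1 nodes, N nodes must remain to hold N copies of new data.
    return tolerated_node_losses(replication_factor) + replication_factor


if __name__ == "__main__":
    for n in (1, 2, 3):
        print(n, tolerated_node_losses(n), min_vmstorage_nodes(n))
```

With a replication factor of 1 (no replication) the formula gives a single node and zero tolerated losses, which matches the minimal cluster described earlier.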

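Once replication is enabled, the `-dedup.minScrapeInterval=1ms` flag must be passed to the vmselect nodes. The optional `-replicationFactor=N` flag can also be passed to vmselect to improve query performance when up to N-1 vmstorage nodes are slow and/or temporarily unavailable, because vmselect then does not wait for responses from up to N-1 vmstorage nodes. In some cases `-replicationFactor` on vmselect nodes can lead to partial responses. `-dedup.minScrapeInterval=1ms` de-duplicates the replicated data at query time. If duplicate data is pushed to VM from identically configured vmagent or Prometheus instances, `-dedup.minScrapeInterval` must be set to a larger value as described in the deduplication documentation.

Note that replication does not protect against disasters, so regular backups are still recommended. Replication also increases resource usage (CPU, memory, disk space, network bandwidth) by up to `-replicationFactor` times. For that reason, replication can instead be delegated to the underlying storage pointed to by `-storageDataPath`, for example Google Compute Engine persistent disks, which protect against data loss and data corruption, deliver consistently high performance, and can be resized without downtime. HDD-based persistent disks should be enough for most use cases.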

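Backups

It is recommended to take regular backups from instant snapshots to protect against mistakes such as accidental data deletion. The following steps must be performed on every vmstorage node to create a backup: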

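1: Create an instant snapshot by requesting the `/snapshot/create` HTTP handler; it creates the snapshot and returns its name

2: Archive the created snapshot from the `<-storageDataPath>/snapshots/<snapshot_name>` folder with the vmbackup tool. Archiving does not interfere with vmstorage, so it can be done at any time

3: Delete unused snapshots via `/snapshot/delete?snapshot=<snapshot_name>` or `/snapshot/delete_all` to free the occupied storage space

4: There is no need to synchronize backups across vmstorage nodes.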

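Restoring from backup

1: Stop the vmstorage node with `kill -INT`

2: Restore the data from the backup into the `-storageDataPath` directory with the vmrestore tool

3: Start the vmstorage node

Now that we have covered the configuration details of a VM cluster, the next step is to deploy one

5: VictoriaMetrics Cluster Deployment

If you are familiar with Helm, you can deploy the cluster with the Helm chart for a one-step installation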

helm repo add vm https://victoriametrics.github.io/helm-charts/

helm repo update

# Export the default values to values.yaml

helm show values vm/victoria-metrics-cluster > values.yaml

# Adjust the configuration to your own needs

helm install victoria-metrics vm/victoria-metrics-cluster -f values.yaml -n NAMESPACE

# Get the Pod information

kubectl get pods -A | grep 'victoria-metrics'

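Here, however, we deploy it manually, mainly to gain a deeper understanding of VM's cluster mode

Since vmstorage is stateful, we deploy it with a StatefulSet. The component can be scaled out, so we start with two replicas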

# cluster-storage-svc.yaml

apiVersion: v1

kind: Service

metadata:

name: vmstorage

namespace: kube-vm

labels:

app: vmstorage

spec:

clusterIP: None

ports:

- name: http

port: 8482

targetPort: http

- name: vmselect

port: 8401

targetPort: vmselect

- name: vminsert

port: 8400

targetPort: vminsert

selector:

app: vmstorage

# cluster-storage.yaml

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: vmstorage

namespace: kube-vm

labels:

app: vmstorage

spec:

serviceName: vmstorage

selector:

matchLabels:

app: vmstorage

replicas: 2

podManagementPolicy: OrderedReady

template:

metadata:

labels:

app: vmstorage

spec:

containers:

- name: vmstorage

image: victoriametrics/vmstorage:cluster-stable

ports:

- name: http

containerPort: 8482

- name: vminsert

containerPort: 8400

- name: vmselect

containerPort: 8401

args:

- "--retentionPeriod=1"

- "--storageDataPath=/storage"

- --envflag.enable=true

- --envflag.prefix=VM_

- --loggerFormat=json

livenessProbe:

failureThreshold: 10

initialDelaySeconds: 30

periodSeconds: 30

tcpSocket:

port: http

timeoutSeconds: 5

readinessProbe:

failureThreshold: 3

initialDelaySeconds: 5

periodSeconds: 15

httpGet:

path: /health

port: http

timeoutSeconds: 5

volumeMounts:

- name: storage

mountPath: /storage

volumeClaimTemplates:

- metadata:

name: storage

spec:

storageClassName: managed-nfs-storage

accessModes:

- ReadWriteOnce

resources:

requests:

storage: "5Gi"

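The first thing to create is the headless Service, because the other components need to reach each individual Pod. In the vmstorage startup arguments, `--retentionPeriod` sets how long metric data is retained; 1 means one month, which is also the default. `--storageDataPath` sets the data storage path; remember to persist this directory.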

[root@k-m-1 kube-vm-cluster]# kubectl get pv,pvc,pod,svc -n kube-vm

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pvc-a88869e2-1210-448f-900f-20d5bc0a3fa0 5Gi RWO Delete Bound kube-vm/storage-vmstorage-1 managed-nfs-storage 28s

persistentvolume/pvc-adcbc6c9-4e5d-4f21-a85a-7f4790fbd660 5Gi RWO Delete Bound kube-vm/storage-vmstorage-0 managed-nfs-storage 3m28s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/storage-vmstorage-0 Bound pvc-adcbc6c9-4e5d-4f21-a85a-7f4790fbd660 5Gi RWO managed-nfs-storage 3m28s

persistentvolumeclaim/storage-vmstorage-1 Bound pvc-a88869e2-1210-448f-900f-20d5bc0a3fa0 5Gi RWO managed-nfs-storage 28s

NAME READY STATUS RESTARTS AGE

pod/node-exporter-8fqx8 1/1 Running 0 47m

pod/node-exporter-prw95 1/1 Running 0 47m

pod/vmstorage-0 1/1 Running 0 44s

pod/vmstorage-1 1/1 Running 0 28s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/vmstorage ClusterIP None <none> 8482/TCP,8401/TCP,8400/TCP 14m

Next we can deploy the vmselect component. Since it is stateless, we can manage it with a Deployment

# cluster-vmselect-svc.yaml

apiVersion: v1

kind: Service

metadata:

name: vmselect

namespace: kube-vm

labels:

app: vmselect

spec:

ports:

- name: http

port: 8481

targetPort: http

selector:

app: vmselect

# cluster-vmselect.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: vmselect

namespace: kube-vm

labels:

app: vmselect

spec:

selector:

matchLabels:

app: vmselect

template:

metadata:

labels:

app: vmselect

spec:

volumes:

- name: cache-volume

emptyDir: {}

containers:

- name: vmselect

image: victoriametrics/vmselect:cluster-stable

ports:

- name: http

containerPort: 8481

args:

- "--cacheDataPath=/cache"

- "--storageNode=vmstorage-0.vmstorage.kube-vm.svc.cluster.local:8401"

- "--storageNode=vmstorage-1.vmstorage.kube-vm.svc.cluster.local:8401"

- --envflag.enable=true

- --envflag.prefix=VM_

- --loggerFormat=json

livenessProbe:

failureThreshold: 10

initialDelaySeconds: 30

periodSeconds: 30

tcpSocket:

port: http

timeoutSeconds: 5

readinessProbe:

failureThreshold: 3

initialDelaySeconds: 5

periodSeconds: 15

httpGet:

path: /health

port: http

timeoutSeconds: 5

volumeMounts:

- name: cache-volume

mountPath: /cache

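The most important part here is passing the addresses of all vmstorage nodes via the `--storageNode` flag. Since vmstorage is deployed with a StatefulSet, its Pods can be reached directly by FQDN. Apply the objects above: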

[root@k-m-1 kube-vm-cluster]# kubectl apply -f cluster-vmselect.yaml -f cluster-vmselect-svc.yaml

deployment.apps/vmselect created

service/vmselect created

[root@k-m-1 kube-vm-cluster]# kubectl get pod,svc -n kube-vm

NAME READY STATUS RESTARTS AGE

pod/node-exporter-8fqx8 1/1 Running 0 141m

pod/node-exporter-prw95 1/1 Running 0 141m

pod/vmselect-74f6d996c7-prrrq 1/1 Running 0 30s

pod/vmstorage-0 1/1 Running 0 94m

pod/vmstorage-1 1/1 Running 0 94m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/vmselect ClusterIP 10.96.0.174 <none> 8481/TCP 31s

service/vmstorage ClusterIP None <none> 8482/TCP,8401/TCP,8400/TCP 107m
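The `--storageNode` addresses passed to vmselect follow the StatefulSet DNS pattern `<pod>.<service>.<namespace>.svc.cluster.local`. When the number of vmstorage replicas changes, the list has to be regenerated, which a small helper can do. This is only a convenience sketch; the function name is made up, and the defaults are the names used in this article (StatefulSet and headless Service `vmstorage`, namespace `kube-vm`).

```python
from typing import List


def storage_node_args(
    replicas: int,
    port: int,
    statefulset: str = "vmstorage",
    service: str = "vmstorage",
    namespace: str = "kube-vm",
) -> List[str]:
    """Build one --storageNode argument per StatefulSet Pod."""
    # StatefulSet Pods are named <statefulset>-<ordinal>, starting at 0.
    return [
        f"--storageNode={statefulset}-{i}.{service}.{namespace}.svc.cluster.local:{port}"
        for i in range(replicas)
    ]


if __name__ == "__main__":
    # vmselect talks to vmstorage on port 8401, vminsert on port 8400.
    for arg in storage_node_args(replicas=2, port=8401):
        print(arg)
```

The same helper covers vminsert by passing port 8400 instead of 8401.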

# I deleted Grafana earlier, so here we reinstall it

[root@k-m-1 kube-vm-cluster]# kubectl get pv,pvc,pod,svc -n kube-vm

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pvc-a88869e2-1210-448f-900f-20d5bc0a3fa0 5Gi RWO Delete Bound kube-vm/storage-vmstorage-1 managed-nfs-storage 124m

persistentvolume/pvc-adcbc6c9-4e5d-4f21-a85a-7f4790fbd660 5Gi RWO Delete Bound kube-vm/storage-vmstorage-0 managed-nfs-storage 127m

persistentvolume/pvc-e350f2d3-c8af-49fa-b65e-d365c7182e8e 5Gi RWX Delete Bound kube-vm/grafana-data managed-nfs-storage 96s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/grafana-data Bound pvc-e350f2d3-c8af-49fa-b65e-d365c7182e8e 5Gi RWX managed-nfs-storage 96s

persistentvolumeclaim/storage-vmstorage-0 Bound pvc-adcbc6c9-4e5d-4f21-a85a-7f4790fbd660 5Gi RWO managed-nfs-storage 127m

persistentvolumeclaim/storage-vmstorage-1 Bound pvc-a88869e2-1210-448f-900f-20d5bc0a3fa0 5Gi RWO managed-nfs-storage 124m

NAME READY STATUS RESTARTS AGE

pod/grafana-7dcb9dd5f4-csmsg 1/1 Running 0 72s

pod/node-exporter-8fqx8 1/1 Running 0 171m

pod/node-exporter-prw95 1/1 Running 0 171m

pod/vmselect-74f6d996c7-prrrq 1/1 Running 0 31m

pod/vmstorage-0 1/1 Running 0 124m

pod/vmstorage-1 1/1 Running 0 124m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/grafana NodePort 10.96.2.32 <none> 3000:31213/TCP 52s

service/vmselect ClusterIP 10.96.0.174 <none> 8481/TCP 31m

service/vmstorage ClusterIP None <none> 8482/TCP,8401/TCP,8400/TCP 138m

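# Then switch the data source

Next we deploy the vminsert component, which receives ingested metric data. It is also stateless, and again the important part is passing all vmstorage nodes via the `--storageNode` flag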

# cluster-vminsert-svc.yaml

apiVersion: v1

kind: Service

metadata:

name: vminsert

namespace: kube-vm

labels:

app: vminsert

spec:

ports:

- name: http

port: 8480

targetPort: http

selector:

app: vminsert

# cluster-vminsert.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: vminsert
  namespace: kube-vm
  labels:
    app: vminsert
spec:
  selector:
    matchLabels:
      app: vminsert
  template:
    metadata:
      labels:
        app: vminsert
    spec:
      containers:
        - name: vminsert
          image: victoriametrics/vminsert:cluster-stable
          ports:
            - name: http
              containerPort: 8480
          args:
            - "--storageNode=vmstorage-0.vmstorage.kube-vm.svc.cluster.local:8400"
            - "--storageNode=vmstorage-1.vmstorage.kube-vm.svc.cluster.local:8400"
            - --envflag.enable=true
            - --envflag.prefix=VM_
            - --loggerFormat=json
          livenessProbe:
            failureThreshold: 10
            initialDelaySeconds: 30
            periodSeconds: 30
            tcpSocket:
              port: http
            timeoutSeconds: 5
          readinessProbe:
            failureThreshold: 3
            initialDelaySeconds: 5
            periodSeconds: 15
            httpGet:
              path: /health
              port: http
            timeoutSeconds: 5

[root@k-m-1 kube-vm-cluster]# kubectl get pod,svc -n kube-vm

NAME READY STATUS RESTARTS AGE

pod/grafana-7dcb9dd5f4-csmsg 1/1 Running 0 23m

pod/node-exporter-8fqx8 1/1 Running 0 3h14m

pod/node-exporter-prw95 1/1 Running 0 3h14m

pod/vminsert-68db99c457-q4xz6 1/1 Running 0 2m46s

pod/vmselect-74f6d996c7-prrrq 1/1 Running 0 53m

pod/vmstorage-0 1/1 Running 0 147m

pod/vmstorage-1 1/1 Running 0 147m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/grafana NodePort 10.96.2.32 <none> 3000:31213/TCP 23m

service/vminsert ClusterIP 10.96.3.161 <none> 8480/TCP 4m28s

service/vmselect ClusterIP 10.96.0.174 <none> 8481/TCP 53m

service/vmstorage ClusterIP None <none> 8482/TCP,8401/TCP,8400/TCP 161m

# At this point the VM cluster is actually deployed, but one question remains: where does the data come from? For now we keep using Prometheus.

# cluster-prom-config.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: kube-vm
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      scrape_timeout: 15s
    remote_write:
      - url: http://vminsert:8480/insert/0/prometheus/
    scrape_configs:
      - job_name: 'nodes'
        static_configs:
          - targets: ['10.0.0.11:9100','10.0.0.12:9100']
        relabel_configs:
          - source_labels: [__address__]
            regex: "(.*):(.*)"
            replacement: "${1}"
            target_label: 'ip'
            action: replace

# cluster-prom-pvc.yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: prometheus-data
  namespace: kube-vm
spec:
  storageClassName: "managed-nfs-storage"
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 5Gi

# cluster-prom.yaml
kind: Deployment
apiVersion: apps/v1
metadata:
  name: prometheus
  namespace: kube-vm
  labels:
    app: prometheus
spec:
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      containers:
        - name: prometheus
          image: prom/prometheus:latest
          args:
            - "--config.file=/etc/prometheus/prometheus.yml"
            - "--storage.tsdb.path=/prometheus"
            - "--storage.tsdb.retention.time=24h"
            - "--web.enable-admin-api"
            - "--web.enable-lifecycle"
          ports:
            - name: http
              containerPort: 9090
          securityContext:
            runAsUser: 0
          volumeMounts:
            - mountPath: "/etc/prometheus"
              name: config-volume
            - mountPath: "/prometheus"
              name: data
          resources:
            requests:
              cpu: 200m
              memory: 1024Mi
            limits:
              cpu: 200m
              memory: 1024Mi
      volumes:
        - name: data
          persistentVolumeClaim:
            claimName: prometheus-data
        - configMap:
            name: prometheus-config
          name: config-volume

# cluster-prom-svc.yaml
kind: Service
apiVersion: v1
metadata:
  name: prometheus
  namespace: kube-vm
  labels:
    app: prometheus
spec:
  selector:
    app: prometheus
  type: NodePort
  ports:
    - name: web
      port: 9090
      targetPort: http

[root@k-m-1 kube-vm-cluster]# kubectl get cm,pvc,pod,svc -n kube-vm

NAME DATA AGE

configmap/kube-root-ca.crt 1 2d5h

configmap/peomxy-config 1 41h

configmap/prometheus-config 1 96s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/grafana-data Bound pvc-e350f2d3-c8af-49fa-b65e-d365c7182e8e 5Gi RWX managed-nfs-storage 60m

persistentvolumeclaim/prometheus-data Bound pvc-e3ff64d6-c211-4115-92a4-94a1d2c5813c 5Gi RWX managed-nfs-storage 93s

persistentvolumeclaim/storage-vmstorage-0 Bound pvc-adcbc6c9-4e5d-4f21-a85a-7f4790fbd660 5Gi RWO managed-nfs-storage 3h6m

persistentvolumeclaim/storage-vmstorage-1 Bound pvc-a88869e2-1210-448f-900f-20d5bc0a3fa0 5Gi RWO managed-nfs-storage 3h3m

NAME READY STATUS RESTARTS AGE

pod/grafana-7dcb9dd5f4-csmsg 1/1 Running 0 60m

pod/node-exporter-8fqx8 1/1 Running 0 3h51m

pod/node-exporter-prw95 1/1 Running 0 3h51m

pod/prometheus-855c7c4755-xbkxf 1/1 Running 0 89s

pod/vminsert-68db99c457-q4xz6 1/1 Running 0 39m

pod/vmselect-74f6d996c7-prrrq 1/1 Running 0 90m

pod/vmstorage-0 1/1 Running 0 3h4m

pod/vmstorage-1 1/1 Running 0 3h3m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/grafana NodePort 10.96.2.32 <none> 3000:31213/TCP 60m

service/prometheus NodePort 10.96.0.71 <none> 9090:31864/TCP 12s

service/vminsert ClusterIP 10.96.3.161 <none> 8480/TCP 41m

service/vmselect ClusterIP 10.96.0.174 <none> 8481/TCP 90m

service/vmstorage ClusterIP None <none> 8482/TCP,8401/TCP,8400/TCP 3h17m

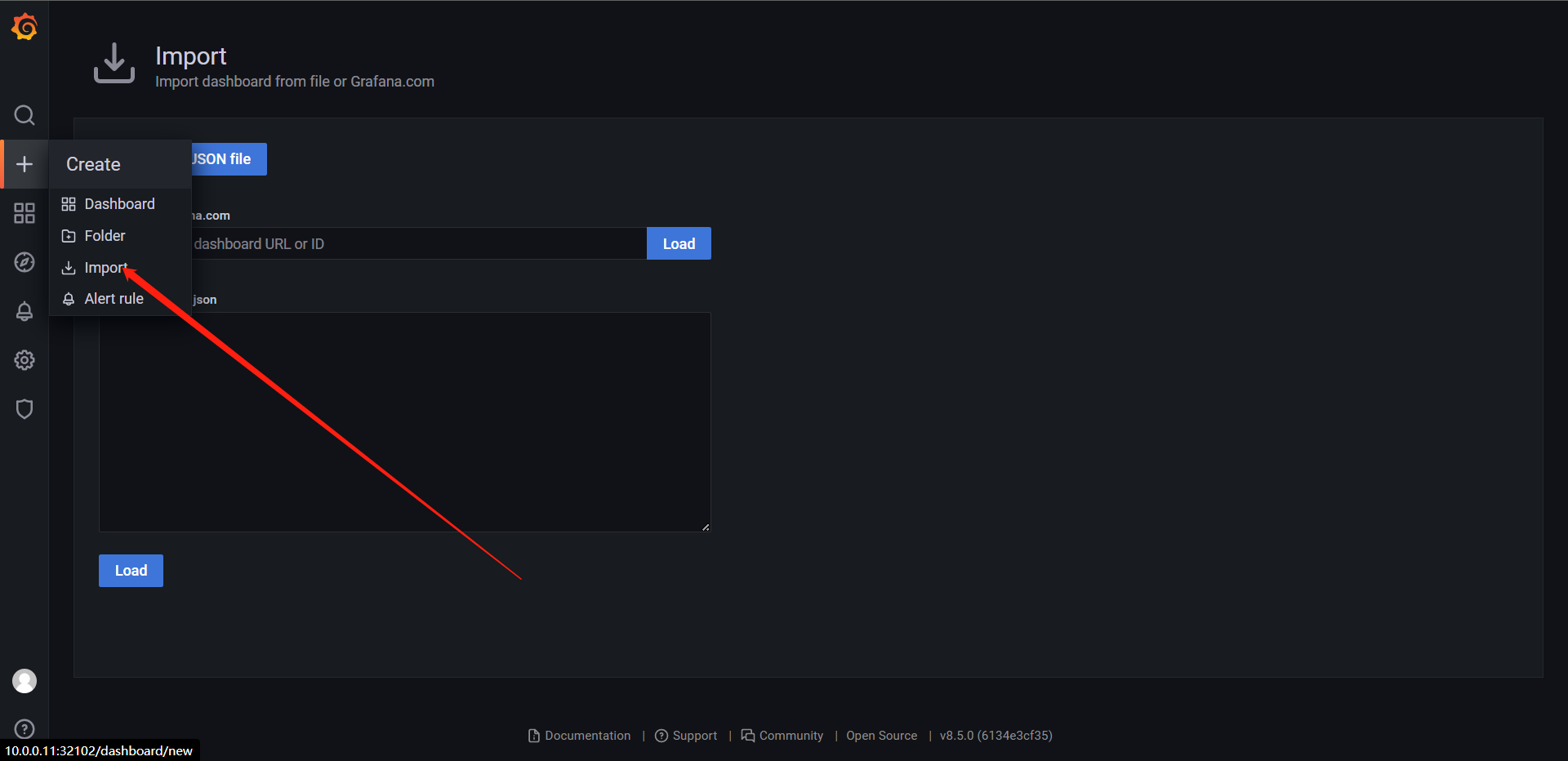

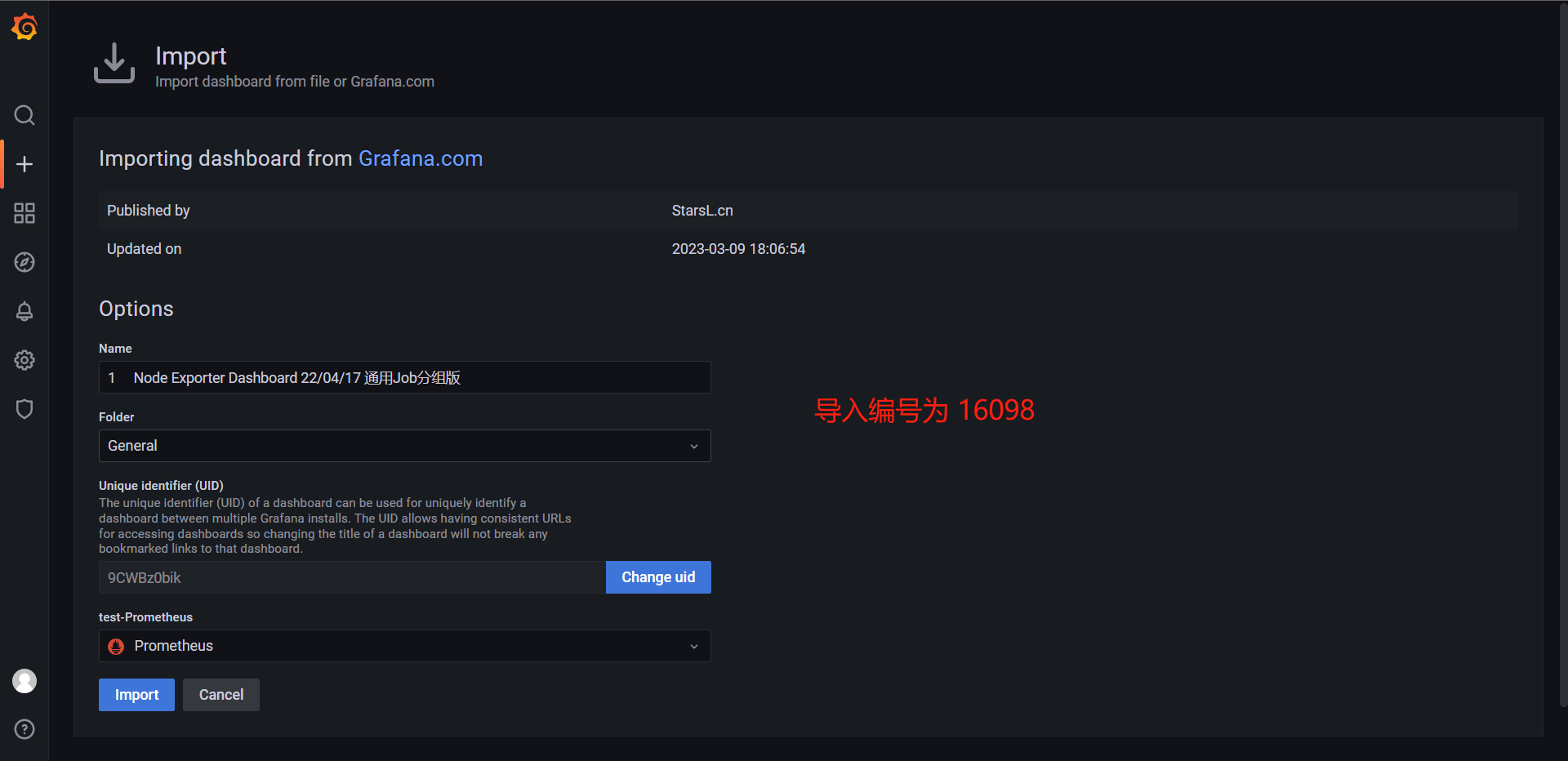

# After making the change, import the same dashboard into Grafana again and check the data; you can see it is still there.

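6:vmagent overview and deployment

vmagent collects metrics from a variety of sources and stores them in VM or in any other Prometheus-compatible storage system that supports the remote write protocol. Compared with Prometheus, vmagent is more flexible when scraping metrics: besides pulling metrics it can also accept pushed metrics, and it has many other features.

1:Can replace Prometheus for scraping targets

2:Supports reading data from and writing data to Kafka

3:Supports adding, removing and modifying labels via Prometheus-style relabeling, so data can be filtered before it is sent to remote storage

4:Accepts data via many protocols: Influx line protocol, Graphite plaintext protocol, OpenTSDB protocol, Prometheus remote write protocol, JSON lines, CSV, and others

5:Can replicate collected data to multiple remote storage systems at the same time

6:Tolerates unreliable remote storage: if the remote storage is unavailable, collected metrics are buffered at `-remoteWrite.tmpDataPath`; once the connection to the remote storage is restored, the buffered metrics are sent to it. The maximum disk usage of the buffer can be limited with `-remoteWrite.maxDiskUsagePerURL`.

7:Uses less memory, CPU, disk, I/O and network bandwidth than Prometheus

8:When a large number of targets must be scraped, the targets can be spread across multiple vmagent instances

9:Can handle high cardinality and high churn rate by limiting the number of unique time series at scrape time and before sending them to remote storage

10:Can load scrape configs from multiple files

Next we use scraping Kubernetes cluster metrics as an example of how to use vmagent. We configure it through service discovery; vmagent is compatible with the kubernetes_sd_configs section of Prometheus, so we can use it in the same way.

For vmagent to discover the resource objects to monitor, it needs to query the API server, so RBAC permissions must be configured first.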

# vmagent-rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: vmagent
  namespace: kube-vm
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: vmagent
rules:
  - apiGroups: ["", "networking.k8s.io", "extensions"]
    resources:
      - nodes
      - nodes/metrics
      - services
      - endpoints
      - endpointslices
      - pods
      - app
      - ingresses
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources:
      - namespaces
      - configmaps
    verbs: ["get"]
  - nonResourceURLs: ["/metrics", "/metrics/resources"]
    verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: vmagent
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: vmagent
subjects:
  - kind: ServiceAccount
    name: vmagent
    namespace: kube-vm

# Deploy the RBAC resources

[root@k-m-1 kube-vm-cluster]# kubectl apply -f vmagent-rbac.yaml

serviceaccount/vmagent created

clusterrole.rbac.authorization.k8s.io/vmagent created

clusterrolebinding.rbac.authorization.k8s.io/vmagent created

Next comes the vmagent configuration. We start with a job that auto-discovers the Kubernetes nodes; create the following ConfigMap.

# vmagent-config.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: vmagent-config
  namespace: kube-vm
data:
  scrape.yml: |
    global:
      scrape_interval: 15s
      scrape_timeout: 15s
    scrape_configs:
      - job_name: nodes
        kubernetes_sd_configs:
          - role: node
        relabel_configs:
          - source_labels: [__address__]
            regex: "(.*):10250"
            replacement: "${1}:9100"
            target_label: __address__
            action: replace
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)

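Here we obtain node metrics by auto-discovering the Kubernetes nodes. Note that with the node role, service discovery returns each node's port 10250 by default, so we use relabeling to replace it with 9100.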
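To see what that relabel rule does to a single address, here is a minimal sketch that mimics it with `sed`. The function name `rewrite_node_address` is hypothetical, and this only imitates the effect of the rule; it is not how vmagent evaluates relabeling internally. The pattern is anchored on both ends because Prometheus-style relabel regexes must match the whole value.

```shell
# Hypothetical helper: imitate the relabel rule
#   regex: "(.*):10250"  ->  replacement: "${1}:9100"
# Addresses that do not match the regex are printed unchanged.
rewrite_node_address() {
  printf '%s\n' "$1" | sed -E 's/^(.*):10250$/\1:9100/'
}
```

For example, `rewrite_node_address 10.0.0.11:10250` prints `10.0.0.11:9100`, while an address that already uses another port is left untouched.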

[root@k-m-1 kube-vm-cluster]# kubectl apply -f vmagent-config.yaml

configmap/vmagent-config created

Then add the resources needed to deploy vmagent.

# vmagent-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: vmagent-data
  namespace: kube-vm
spec:
  storageClassName: "managed-nfs-storage"
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi

# vmagent.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: vmagent
  namespace: kube-vm
  labels:
    app: vmagent
spec:
  selector:
    matchLabels:
      app: vmagent
  template:
    metadata:
      labels:
        app: vmagent
    spec:
      serviceAccountName: vmagent
      containers:
        - name: vmagent
          image: victoriametrics/vmagent:stable
          ports:
            - name: http
              containerPort: 8429
          args:
            - -promscrape.config=/config/scrape.yml
            - -remoteWrite.tmpDataPath=/tmpData
            - -remoteWrite.url=http://vminsert:8480/insert/0/prometheus
            - -envflag.enable=true
            - -envflag.prefix=VM_
            - -loggerFormat=json
          volumeMounts:
            - name: tmpdata
              mountPath: /tmpData
            - name: config
              mountPath: /config
      volumes:
        - name: tmpdata
          persistentVolumeClaim:
            claimName: vmagent-data
        - name: config
          configMap:
            name: vmagent-config

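The vmagent configuration is mounted from the ConfigMap into the container at /config/scrape.yml. The `-remoteWrite.url=http://vminsert:8480/insert/0/prometheus` flag sets the remote write address; here we write to the vminsert service deployed earlier. The `-remoteWrite.tmpDataPath` flag points at the directory where collected metrics are buffered while the remote storage is unavailable. Once the remote storage recovers, the buffered metrics are sent to it as usual, so it is best to persist this directory.

A single vmagent instance can scrape tens of thousands of targets, but sometimes that is not enough because of CPU, network or memory limits. In that case the scrape targets can be split across multiple vmagent instances. Every vmagent instance in the cluster must use the same `-promscrape.config` file with a distinct `-promscrape.cluster.memberNum` value in the range 0 ... N-1, where N is the number of vmagent instances in the cluster. N itself must be passed via the `-promscrape.cluster.membersCount` command-line flag. For example, the following commands spread scrape targets across a cluster of two vmagent instances: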

vmagent -promscrape.cluster.membersCount=2 -promscrape.cluster.memberNum=0 -promscrape.config=/path/config.yml ...

vmagent -promscrape.cluster.membersCount=2 -promscrape.cluster.memberNum=1 -promscrape.config=/path/config.yml ...

When vmagent runs in Kubernetes, `-promscrape.cluster.memberNum` can be set to the StatefulSet Pod name. The Pod name must end with a number in the range 0 ... `-promscrape.cluster.membersCount`-1, for example: `-promscrape.cluster.memberNum=vmagent-0`
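The mapping from a StatefulSet Pod name to a member number is just the trailing ordinal. The sketch below shows that mapping for illustration; `member_num_from_pod` is a hypothetical helper and is not vmagent's own parsing code.

```shell
# Hypothetical helper: extract the trailing ordinal from a StatefulSet
# Pod name such as "vmagent-1". Fails if the name has no numeric suffix.
member_num_from_pod() {
  ordinal=${1##*-}
  case $ordinal in
    ''|*[!0-9]*)
      echo "pod name '$1' does not end with a number" >&2
      return 1
      ;;
  esac
  printf '%s\n' "$ordinal"
}
```

With two replicas named `vmagent-0` and `vmagent-1`, the helper yields 0 and 1, which is exactly the 0 ... N-1 range required for two members.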

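By default each scrape target is scraped by exactly one vmagent instance in the cluster. If targets need to be replicated across several vmagent instances, set `-promscrape.cluster.replicationFactor` to the desired number of replicas. For example, the following commands start a cluster of three vmagent instances in which every target is scraped by two of them: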

vmagent -promscrape.cluster.membersCount=3 -promscrape.cluster.replicationFactor=2 -promscrape.cluster.memberNum=0 -promscrape.config=/path/to/config.yml ...

vmagent -promscrape.cluster.membersCount=3 -promscrape.cluster.replicationFactor=2 -promscrape.cluster.memberNum=1 -promscrape.config=/path/to/config.yml ...

vmagent -promscrape.cluster.membersCount=3 -promscrape.cluster.replicationFactor=2 -promscrape.cluster.memberNum=2 -promscrape.config=/path/to/config.yml ...
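The three command lines above differ only in `-promscrape.cluster.memberNum`, so they can be generated with a small loop. This is a sketch for illustration; the function name `vmagent_cluster_cmds` is hypothetical, and it only prints the commands rather than starting anything.

```shell
# Hypothetical helper: print one vmagent command line per cluster member.
# $1 = number of members, $2 = replication factor, $3 = scrape config path
vmagent_cluster_cmds() {
  members=$1
  replication=$2
  config=$3
  i=0
  while [ "$i" -lt "$members" ]; do
    printf '%s\n' "vmagent -promscrape.cluster.membersCount=${members} -promscrape.cluster.replicationFactor=${replication} -promscrape.cluster.memberNum=${i} -promscrape.config=${config}"
    i=$((i + 1))
  done
}
```

Running `vmagent_cluster_cmds 3 2 /path/to/config.yml` reproduces the three commands shown above, minus the trailing `...` placeholder for further flags.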

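Note that if each target is scraped by multiple vmagent instances, deduplication must be enabled on the remote storage that `-remoteWrite.url` points to.

If the number of targets you need to scrape is very large, we recommend running vmagent in cluster mode, which can be deployed as a StatefulSet.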

# vmagent-sts-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: vmagent
  namespace: kube-vm
  labels:
    app: vmagent
  annotations:
    prometheus.io/scrape: "true"
    prometheus.io/port: "8429"
spec:
  selector:
    app: vmagent
  clusterIP: None
  ports:
    - name: http
      port: 8429
      targetPort: http

# vmagent-sts.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: vmagent
  namespace: kube-vm
  labels:
    app: vmagent
spec:
  replicas: 2
  serviceName: vmagent
  selector:
    matchLabels:
      app: vmagent
  template:
    metadata:
      labels:
        app: vmagent
    spec:
      serviceAccountName: vmagent
      containers:
        - name: vmagent
          image: victoriametrics/vmagent:stable
          ports:
            - name: http
              containerPort: 8429
          args:
            - -promscrape.config=/config/scrape.yml
            - -remoteWrite.tmpDataPath=/tmpData
            - -promscrape.cluster.membersCount=2
            - -promscrape.cluster.memberNum=$(POD_NAME)
            - -remoteWrite.url=http://vminsert:8480/insert/0/prometheus
            - -envflag.enable=true
            - -envflag.prefix=VM_
            - -loggerFormat=json
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
          volumeMounts:
            - name: tmpdata
              mountPath: /tmpData
            - name: config
              mountPath: /config
      volumes:
        - name: config
          configMap:
            name: vmagent-config
  volumeClaimTemplates:
    - metadata:
        name: tmpdata
      spec:
        storageClassName: managed-nfs-storage
        accessModes:
          - ReadWriteOnce
        resources:
          requests:
            storage: "1Gi"

Here we manage vmagent with a StatefulSet.

[root@k-m-1 kube-vm-cluster]# kubectl get pod,svc -n kube-vm

NAME READY STATUS RESTARTS AGE

pod/grafana-7dcb9dd5f4-csmsg 1/1 Running 0 21h

pod/node-exporter-8fqx8 1/1 Running 0 24h

pod/node-exporter-prw95 1/1 Running 0 24h

pod/prometheus-855c7c4755-xbkxf 1/1 Running 0 20h

pod/vmagent-0 1/1 Running 0 38s

pod/vmagent-1 1/1 Running 0 17s

pod/vminsert-68db99c457-q4xz6 1/1 Running 0 21h

pod/vmselect-74f6d996c7-prrrq 1/1 Running 0 21h

pod/vmstorage-0 1/1 Running 0 23h

pod/vmstorage-1 1/1 Running 0 23h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/grafana NodePort 10.96.2.32 <none> 3000:31213/TCP 21h

service/prometheus NodePort 10.96.0.71 <none> 9090:31864/TCP 20h

service/vmagent ClusterIP None <none> 8429/TCP 5m48s

service/vminsert ClusterIP 10.96.3.161 <none> 8480/TCP 21h

service/vmselect ClusterIP 10.96.0.174 <none> 8481/TCP 21h

service/vmstorage ClusterIP None <none> 8482/TCP,8401/TCP,8400/TCP 23h

# Once these are deployed, if you want to use the VM UI, change the vmselect Service type to NodePort

[root@k-m-1 kube-vm-cluster]# kubectl get svc -n kube-vm

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

grafana NodePort 10.96.2.32 <none> 3000:31213/TCP 22h

prometheus NodePort 10.96.0.71 <none> 9090:31864/TCP 21h

vmagent ClusterIP None <none> 8429/TCP 49m

vminsert ClusterIP 10.96.3.161 <none> 8480/TCP 21h

vmselect NodePort 10.96.0.174 <none> 8481:31515/TCP 22h

vmstorage ClusterIP None <none> 8482/TCP,8401/TCP,8400/TCP 24h

Access URL: http://10.0.0.11:31515/select/0/

# Because of multi-tenancy, the URL has to point at a specific tenant
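The tenant ID appears in every cluster URL used in this tutorial, for both writes through vminsert and reads through vmselect. The sketch below assembles those URLs; `vm_tenant_url` is a hypothetical helper, and it only covers the `/prometheus` path suffix used in this article.

```shell
# Hypothetical helper: build a tenant-scoped cluster URL.
# $1 = component ("insert" or "select"), $2 = host:port, $3 = tenant accountID
vm_tenant_url() {
  case $1 in
    insert|select) ;;
    *)
      echo "component must be 'insert' or 'select'" >&2
      return 1
      ;;
  esac
  printf 'http://%s/%s/%s/prometheus\n' "$2" "$1" "$3"
}
```

For tenant 0, `vm_tenant_url insert vminsert:8480 0` gives the remote write address used by vmagent, and `vm_tenant_url select vmselect:8481 0` gives the address to configure as a Prometheus data source in Grafana.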

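By now this should all look very familiar. If you want to auto-discover all Kubernetes components, you can extend the vmagent configuration accordingly; I won't do that here. One thing worth pointing out, though, is that vmagent's relabeling syntax is more powerful than that of Prometheus, for example: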

1:keep_if_equal

2:drop_if_equal

3:if: '<metric>{label=~"x|y"}'

......
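To make these extensions more concrete, the sketch below prints a small relabeling fragment that uses all three. The function name `print_relabel_example`, the labels `label_a` and `label_b`, and the metric selector are illustrative assumptions rather than part of the configuration deployed in this article.

```shell
# Hypothetical helper: print an illustrative relabeling fragment that uses
# the vmagent-specific extensions keep_if_equal, drop_if_equal and if.
print_relabel_example() {
  cat <<'EOF'
relabel_configs:
  # keep the target only if both labels hold the same value
  - action: keep_if_equal
    source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_port, __meta_kubernetes_pod_container_port_number]
  # drop the target if both (placeholder) labels hold the same value
  - action: drop_if_equal
    source_labels: [label_a, label_b]
metric_relabel_configs:
  # apply the rule only to series matching the selector
  - if: 'node_cpu_seconds_total{mode=~"idle|iowait"}'
    action: drop
EOF
}
```

The printed fragment would sit under a scrape job in the vmagent scrape config; plain Prometheus would reject these actions and the `if` key.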

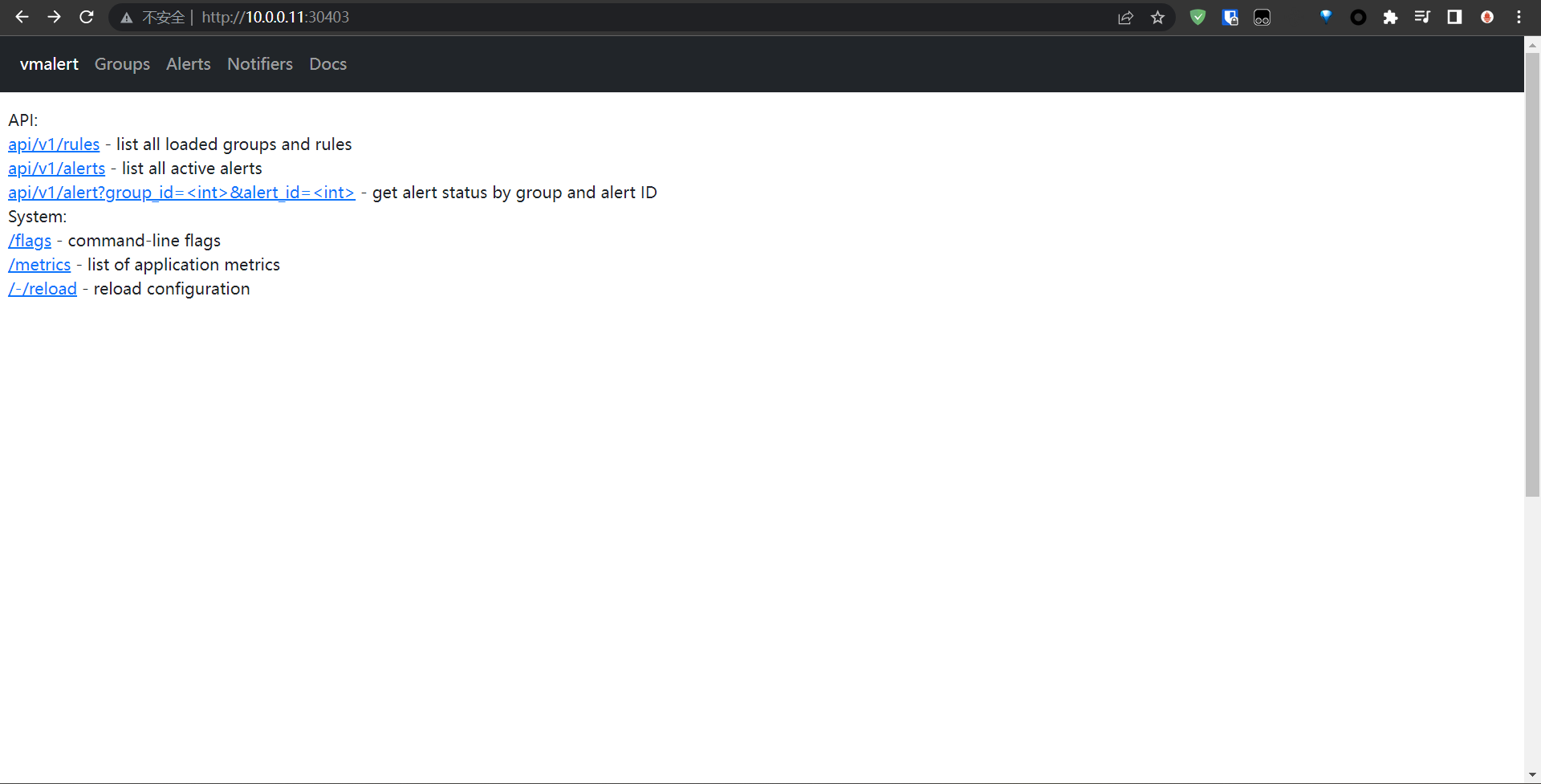

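7:Alerting with vmalert

As mentioned earlier, the VM stack can replace the whole Prometheus stack. To replace Prometheus completely, the most important remaining piece is alerting. Previously we configured alerting rules in Prometheus, which evaluated them and pushed alerts to Alertmanager; the corresponding component in VM is vmalert.

vmalert executes the configured alerting and recording rules against the `-datasource.url` address and can then send alerts to the Alertmanager configured via `-notifier.url`. Recording rule results are persisted through the remote write protocol, which requires `-remoteWrite.url`.

Features of vmalert:

1:Integrates with the VictoriaMetrics TSDB

2:Supports VictoriaMetrics MetricsQL, including expression validation

3:Supports the Prometheus alerting rule definition format

4:Integrates with Alertmanager

5:Can keep alert state across restarts

6:Graphite data sources can be used for alerting and recording rules

7:Supports replaying recording and alerting rules

8:Very lightweight, with no extra dependencies

To start using vmalert, the following are required:

1:A list of rules: the PromQL/MetricsQL expressions to execute

2:A data source address: a reachable VictoriaMetrics instance against which the rules are executed

3:A notifier address: a reachable Alertmanager instance that processes and aggregates alerts and sends notifications

Installation

First install Alertmanager to receive the alerts. Alertmanager itself has already been covered elsewhere on my blog.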

# vmalert-alertmanager-config.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: alert-config
  namespace: kube-vm
data:
  config.yml: |-
    global:
      resolve_timeout: 5m
      smtp_smarthost: 'smtp.163.com:465'
      smtp_from: 'xxx@163.com' # your own mailbox
      smtp_auth_username: 'xxx@163.com' # mailbox account
      smtp_auth_password: '<auth code>' # authorization code issued when SMTP is enabled for the mailbox
      smtp_hello: '163.com'
    route:
      group_by: ['severity', 'source']
      group_wait: 30s
      group_interval: 5m
      repeat_interval: 24h
      receiver: email
    receivers:
      - name: 'email'
        email_configs:
          - to: '2054076300@qq.com'
            send_resolved: true

# vmalert-alertmanager-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: alertmanager
  namespace: kube-vm
  labels:
    app: alertmanager
spec:
  type: NodePort
  selector:
    app: alertmanager
  ports:
    - name: web
      port: 9093
      targetPort: http

# vmalert-alertmanager.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: alertmanager
  namespace: kube-vm
  labels:
    app: alertmanager
spec:
  selector:
    matchLabels:
      app: alertmanager
  template:
    metadata:
      labels:
        app: alertmanager
    spec:
      volumes:
        - name: cfg
          configMap:
            name: alert-config
      containers:
        - name: alertmanager
          image: prom/alertmanager:latest
          ports:
            - name: http
              containerPort: 9093
          args:
            - "--config.file=/etc/alertmanager/config.yml"
          volumeMounts:
            - name: cfg
              mountPath: "/etc/alertmanager"

[root@k-m-1 kube-vm-cluster]# kubectl get cm,svc,pod -n kube-vm -l app=alertmanager

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/alertmanager NodePort 10.96.0.227 <none> 9093:32165/TCP 5m1s

NAME READY STATUS RESTARTS AGE

pod/alertmanager-67dd5ccc88-z294f 1/1 Running 0 4m58s

# Next we configure the rules

# vmalert-config.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: vmalert-config

namespace: kube-vm

data:

record.yaml: |

groups:

- name: record

rules:

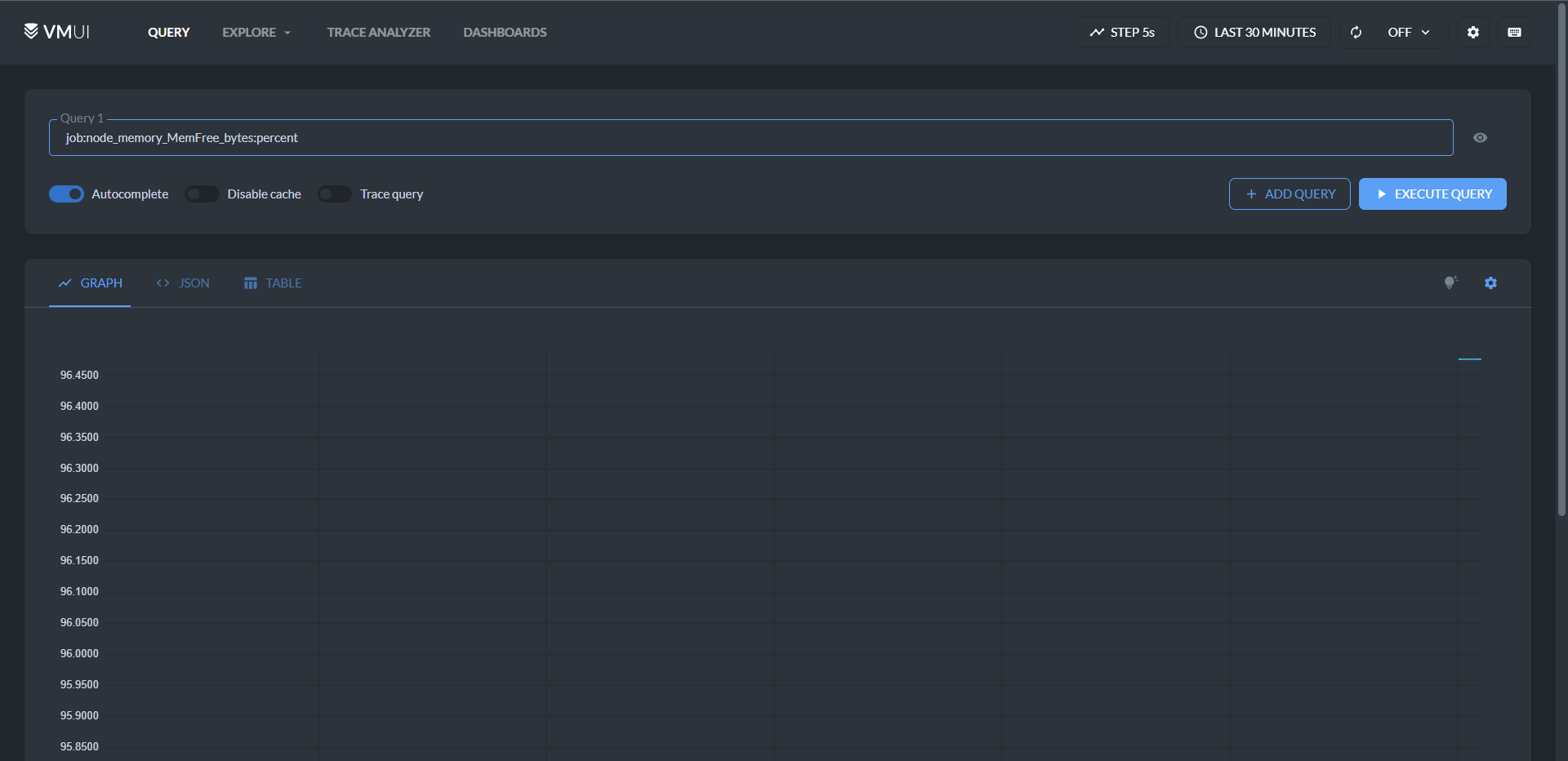

- record: job:node_memory_MemFree_bytes:percent

expr: 100 - (100 * node_memory_MemFree_bytes / node_memory_MemTotal_bytes)
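As a quick sanity check of the recording rule's arithmetic, the sketch below evaluates the same expression for a single pair of sample values. `mem_used_percent` is a hypothetical helper and the byte counts are made-up inputs. Note that, despite the `MemFree` in the rule name, the expression yields the share of memory that is not free.

```shell
# Hypothetical helper: evaluate 100 - (100 * free / total) for one sample,
# mirroring the recording rule expression.
# $1 = free bytes, $2 = total bytes
mem_used_percent() {
  awk -v free="$1" -v total="$2" 'BEGIN { printf "%.1f\n", 100 - (100 * free / total) }'
}
```

With 2 GiB free out of 8 GiB total, `mem_used_percent 2147483648 8589934592` prints `75.0`.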

# vmalert-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: vmalert
  namespace: kube-vm
  labels:
    app: vmalert
spec:
  type: NodePort
  selector:
    app: vmalert
  ports:
    - name: vmalert
      port: 8080
      targetPort: 8080

# vmalert.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: vmalert
  namespace: kube-vm
  labels:
    app: vmalert
spec:
  selector:
    matchLabels:
      app: vmalert
  template:
    metadata:
      labels:
        app: vmalert
    spec:
      containers:
        - name: vmalert
          image: victoriametrics/vmalert:stable
          args:
            - -rule=/etc/ruler/*.yaml
            - -datasource.url=http://vmselect.kube-vm.svc.cluster.local:8481/select/0/prometheus
            - -notifier.url=http://alertmanager.kube-vm.svc.cluster.local:9093
            - -remoteWrite.url=http://vminsert.kube-vm.svc.cluster.local:8480/insert/0/prometheus
            - -evaluationInterval=15s
            - -httpListenAddr=0.0.0.0:8080
          volumeMounts:
            - name: ruler
              mountPath: /etc/ruler/
              readOnly: true
      volumes:
        - name: ruler
          configMap:
            name: vmalert-config

[root@k-m-1 kube-vm-cluster]# kubectl get cm,svc,pod -n kube-vm

NAME DATA AGE

configmap/alert-config 1 54m

configmap/kube-root-ca.crt 1 3d5h

configmap/peomxy-config 1 2d17h

configmap/prometheus-config 1 24h

configmap/vmagent-config 1 3h43m

configmap/vmalert-config 1 3m2s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/alertmanager NodePort 10.96.0.227 <none> 9093:32165/TCP 54m

service/grafana NodePort 10.96.2.32 <none> 3000:31213/TCP 25h

service/prometheus NodePort 10.96.0.71 <none> 9090:31864/TCP 24h

service/vmagent ClusterIP None <none> 8429/TCP 3h48m

service/vmalert NodePort 10.96.0.242 <none> 8080:30403/TCP 2m4s

service/vminsert ClusterIP 10.96.3.161 <none> 8480/TCP 24h

service/vmselect NodePort 10.96.0.174 <none> 8481:31515/TCP 25h

service/vmstorage ClusterIP None <none> 8482/TCP,8401/TCP,8400/TCP 27h

NAME READY STATUS RESTARTS AGE

pod/alertmanager-67dd5ccc88-z294f 1/1 Running 0 54m

pod/grafana-7dcb9dd5f4-csmsg 1/1 Running 0 25h

pod/node-exporter-8fqx8 1/1 Running 0 27h

pod/node-exporter-prw95 1/1 Running 0 27h

pod/vmagent-0 1/1 Running 0 3h25m

pod/vmagent-1 1/1 Running 0 3h25m

pod/vmalert-54ff577cf-7fwjl 1/1 Running 0 17s

pod/vminsert-68db99c457-q4xz6 1/1 Running 0 24h

pod/vmselect-74f6d996c7-prrrq 1/1 Running 0 25h

pod/vmstorage-0 1/1 Running 0 27h

pod/vmstorage-1 1/1 Running 0 27h

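# Now let's check whether the recording rule shows up in the UI

We can see that the recording rule shows up in the UI, which means the vmalert rule has taken effect and its results have been written to vmstorage, since vmselect can already query them.

This is vmalert's own web UI, where you can inspect the rules you configured as well as the alerts that are currently firing. It essentially covers a subset of what Prometheus offers.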

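8:Managing a VM cluster with vm-operator

As everyone knows, Operators are a powerful tool in Kubernetes that greatly simplify installing, configuring and managing applications. VictoriaMetrics also ships an official Operator for this purpose, vm-operator. Its design and implementation are inspired by prometheus-operator, and it is an excellent tool for managing application monitoring configuration.

vm-operator defines the following CRDs, among others:

1:VMServiceScrape: defines how to scrape metrics from the Pods backing a Service

2:VMPodScrape: defines how to scrape metrics from Pods

3:VMRule: defines alerting and recording rules

4:VMProbe: defines probing of targets through blackbox exporter

......

In addition, by default the Operator recognizes the ServiceMonitor, PodMonitor, PrometheusRule and Probe objects from prometheus-operator, and it lets you manage VM applications inside the Kubernetes cluster through CRD objects.

Installation

vm-operator provides a Helm chart, so it can be installed directly with Helm. The Helm repository is the same one used earlier.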

[root@k-m-1 vm-operator]# helm repo add vm https://victoriametrics.github.io/helm-charts/

[root@k-m-1 vm-operator]# helm repo update

Customize the values as needed; the default values.yaml can be exported with the following command and then edited.

[root@k-m-1 vm-operator]# helm show values vm/victoria-metrics-operator > values.yaml

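# Basically, set the PSP-related options to false. As for the webhook, enabling it is recommended in production, but since this is a test environment we leave it disabled.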

[root@k-m-1 vm-operator]# helm upgrade --install victoria-metrics-operator vm/victoria-metrics-operator -f values.yaml -n vm-operator --create-namespace

Release "victoria-metrics-operator" does not exist. Installing it now.

NAME: victoria-metrics-operator

LAST DEPLOYED: Wed Apr 19 00:23:47 2023

NAMESPACE: vm-operator

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

victoria-metrics-operator has been installed. Check its status by running:

kubectl --namespace vm-operator get pods -l "app.kubernetes.io/instance=victoria-metrics-operator"

Get more information on https://github.com/VictoriaMetrics/helm-charts/tree/master/charts/victoria-metrics-operator.

See "Getting started guide for VM Operator" on https://docs.victoriametrics.com/guides/getting-started-with-vm-operator.html .

[root@k-m-1 vm-operator]# kubectl --namespace vm-operator get pods -l "app.kubernetes.io/instance=victoria-metrics-operator"

NAME READY STATUS RESTARTS AGE

victoria-metrics-operator-7cb9d4685d-lzdv4 1/1 Running 0 71s

[root@k-m-1 vm-operator]# kubectl get crd | grep victoriametrics

vmagents.operator.victoriametrics.com 2023-04-18T16:23:48Z

vmalertmanagerconfigs.operator.victoriametrics.com 2023-04-18T16:23:48Z

vmalertmanagers.operator.victoriametrics.com 2023-04-18T16:23:48Z

vmalerts.operator.victoriametrics.com 2023-04-18T16:23:48Z

vmauths.operator.victoriametrics.com 2023-04-18T16:23:48Z

vmclusters.operator.victoriametrics.com 2023-04-18T16:23:48Z

vmnodescrapes.operator.victoriametrics.com 2023-04-18T16:23:48Z

vmpodscrapes.operator.victoriametrics.com 2023-04-18T16:23:48Z

vmprobes.operator.victoriametrics.com 2023-04-18T16:23:48Z

vmrules.operator.victoriametrics.com 2023-04-18T16:23:48Z

vmservicescrapes.operator.victoriametrics.com 2023-04-18T16:23:48Z

vmsingles.operator.victoriametrics.com 2023-04-18T16:23:48Z

vmstaticscrapes.operator.victoriametrics.com 2023-04-18T16:23:48Z

vmusers.operator.victoriametrics.com 2023-04-18T16:23:48Z

[root@k-m-1 vm-operator]# kubectl api-resources | grep victoriametrics

vmagents operator.victoriametrics.com/v1beta1 true VMAgent

vmalertmanagerconfigs operator.victoriametrics.com/v1beta1 true VMAlertmanagerConfig

vmalertmanagers vma operator.victoriametrics.com/v1beta1 true VMAlertmanager

vmalerts operator.victoriametrics.com/v1beta1 true VMAlert

vmauths operator.victoriametrics.com/v1beta1 true VMAuth

vmclusters operator.victoriametrics.com/v1beta1 true VMCluster

vmnodescrapes operator.victoriametrics.com/v1beta1 true VMNodeScrape

vmpodscrapes operator.victoriametrics.com/v1beta1 true VMPodScrape

vmprobes operator.victoriametrics.com/v1beta1 true VMProbe

vmrules operator.victoriametrics.com/v1beta1 true VMRule

vmservicescrapes operator.victoriametrics.com/v1beta1 true VMServiceScrape

vmsingles operator.victoriametrics.com/v1beta1 true VMSingle

vmstaticscrapes operator.victoriametrics.com/v1beta1 true VMStaticScrape

vmusers operator.victoriametrics.com/v1beta1 true VMUser

# As you can see, it supports quite a few resource types, and we have only covered a small part of them so far. So how do we create a VMCluster? Let's do it hands-on.

# vmcluster.yaml
apiVersion: operator.victoriametrics.com/v1beta1
kind: VMCluster
metadata:
  name: vm-cluster
spec:
  retentionPeriod: 1w
  replicationFactor: 2
  vminsert:
    replicaCount: 2
  vmselect:
    replicaCount: 2
    cacheMountPath: /cache
    storage:
      volumeClaimTemplate:
        spec:
          storageClassName: managed-nfs-storage
          accessModes:
            - ReadWriteOnce
          resources:
            requests:
              storage: "5Gi"
  vmstorage:
    replicaCount: 2
    storageDataPath: /vm-data
    storage:
      volumeClaimTemplate:
        spec:
          storageClassName: managed-nfs-storage
          accessModes:
            - ReadWriteOnce
          resources:
            requests:
              storage: "5Gi"

[root@k-m-1 vm-operator]# kubectl apply -f vmcluster.yaml

vmcluster.operator.victoriametrics.com/vm-cluster created

[root@k-m-1 vm-operator]# kubectl get vmclusters

NAME INSERT COUNT STORAGE COUNT SELECT COUNT AGE STATUS

vm-cluster 2 2 2 24s expanding

[root@k-m-1 vm-operator]# kubectl get pod

NAME READY STATUS RESTARTS AGE

vminsert-vm-cluster-74b8895bdf-6pg54 1/1 Running 0 81s

vminsert-vm-cluster-74b8895bdf-lhfjt 1/1 Running 0 81s

vmselect-vm-cluster-0 1/1 Running 0 81s

vmselect-vm-cluster-1 1/1 Running 0 81s

vmstorage-vm-cluster-0 1/1 Running 0 81s

vmstorage-vm-cluster-1 1/1 Running 0 81s

[root@k-m-1 vm-operator]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 12d

vminsert-vm-cluster ClusterIP 10.96.1.162 <none> 8480/TCP 8m21s

vmselect-vm-cluster ClusterIP None <none> 8481/TCP 8m21s

vmstorage-vm-cluster ClusterIP None <none> 8482/TCP,8400/TCP,8401/TCP 8m21s

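# As you can see, the cluster has been created

9:Scraping metrics with vm-operator

The cluster is installed, but how do we scrape data? Here we use vmagent; as we saw in the CRD list, the Operator supports creating a vmagent.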

# vmagent.yaml
apiVersion: operator.victoriametrics.com/v1beta1
kind: VMAgent
metadata:
  name: vmagent
spec:
  serviceScrapeNamespaceSelector: {}
  podScrapeNamespaceSelector: {}
  podScrapeSelector: {}
  serviceScrapeSelector: {}
  nodeScrapeSelector: {}
  nodeScrapeNamespaceSelector: {}
  staticScrapeSelector: {}
  staticScrapeNamespaceSelector: {}
  replicaCount: 1
  remoteWrite:
    - url: "http://vminsert-vm-cluster.default.svc.cluster.local:8480/insert/0/prometheus/api/v1/write" # address the data is written to

[root@k-m-1 vm-operator]# kubectl apply -f vmagent.yaml

vmagent.operator.victoriametrics.com/vmagent created

[root@k-m-1 vm-operator]# kubectl get pod

NAME READY STATUS RESTARTS AGE

vmagent-vmagent-78855bc6cc-mqq7m 2/2 Running 0 3s

vminsert-vm-cluster-74b8895bdf-6pg54 1/1 Running 0 27m

vminsert-vm-cluster-74b8895bdf-lhfjt 1/1 Running 0 27m

vmselect-vm-cluster-0 1/1 Running 0 27m

vmselect-vm-cluster-1 1/1 Running 0 27m

vmstorage-vm-cluster-0 1/1 Running 0 27m

vmstorage-vm-cluster-1 1/1 Running 0 27m

[root@k-m-1 vm-operator]# kubectl get vmagents

NAME SHARDS COUNT REPLICA COUNT

vmagent 0 1

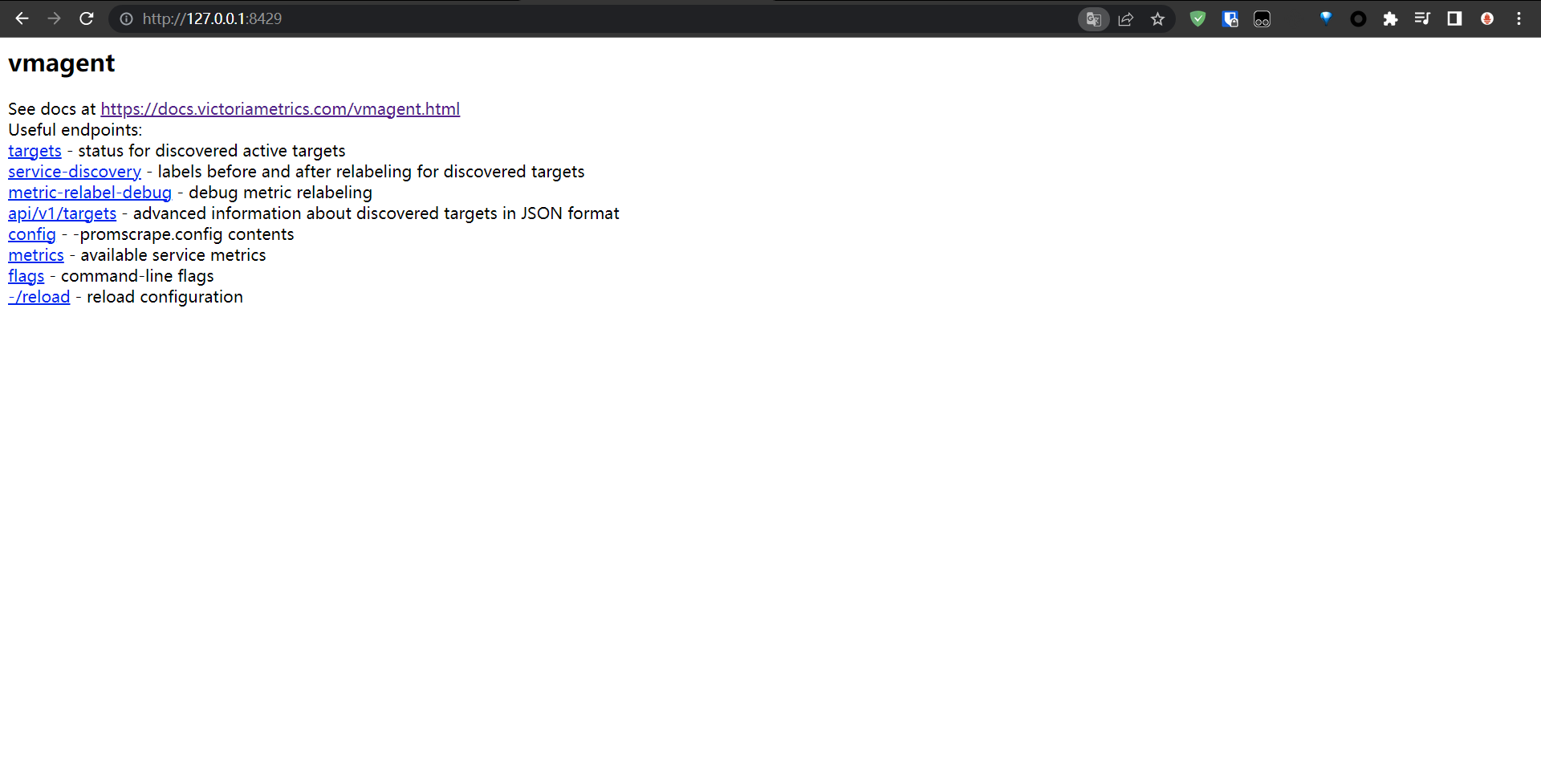

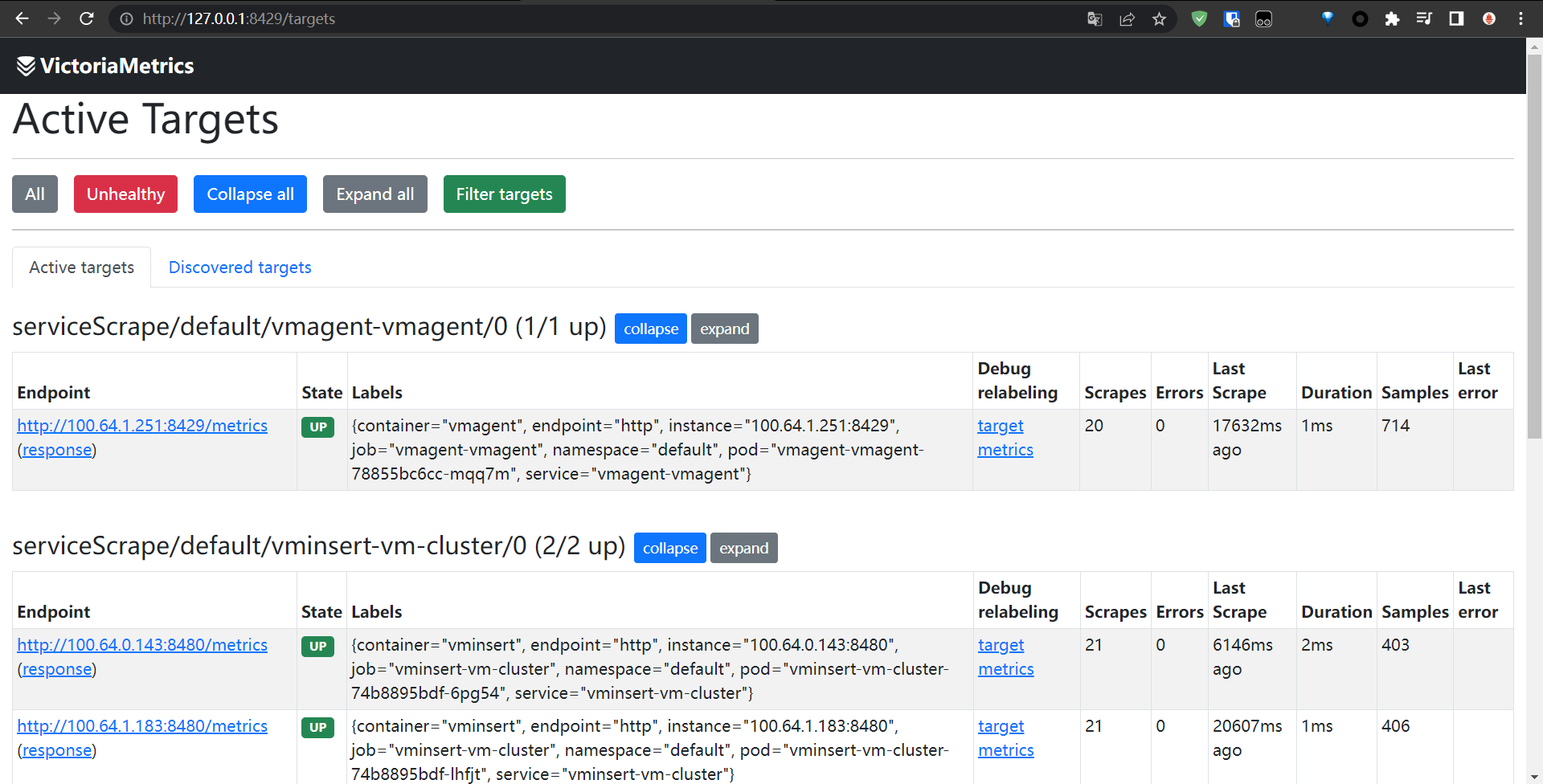

# I'm on Windows here, so I use kubectl to set up a port-forward to access vmagent

kubectl port-forward svc/vmagent-vmagent 8429:8429

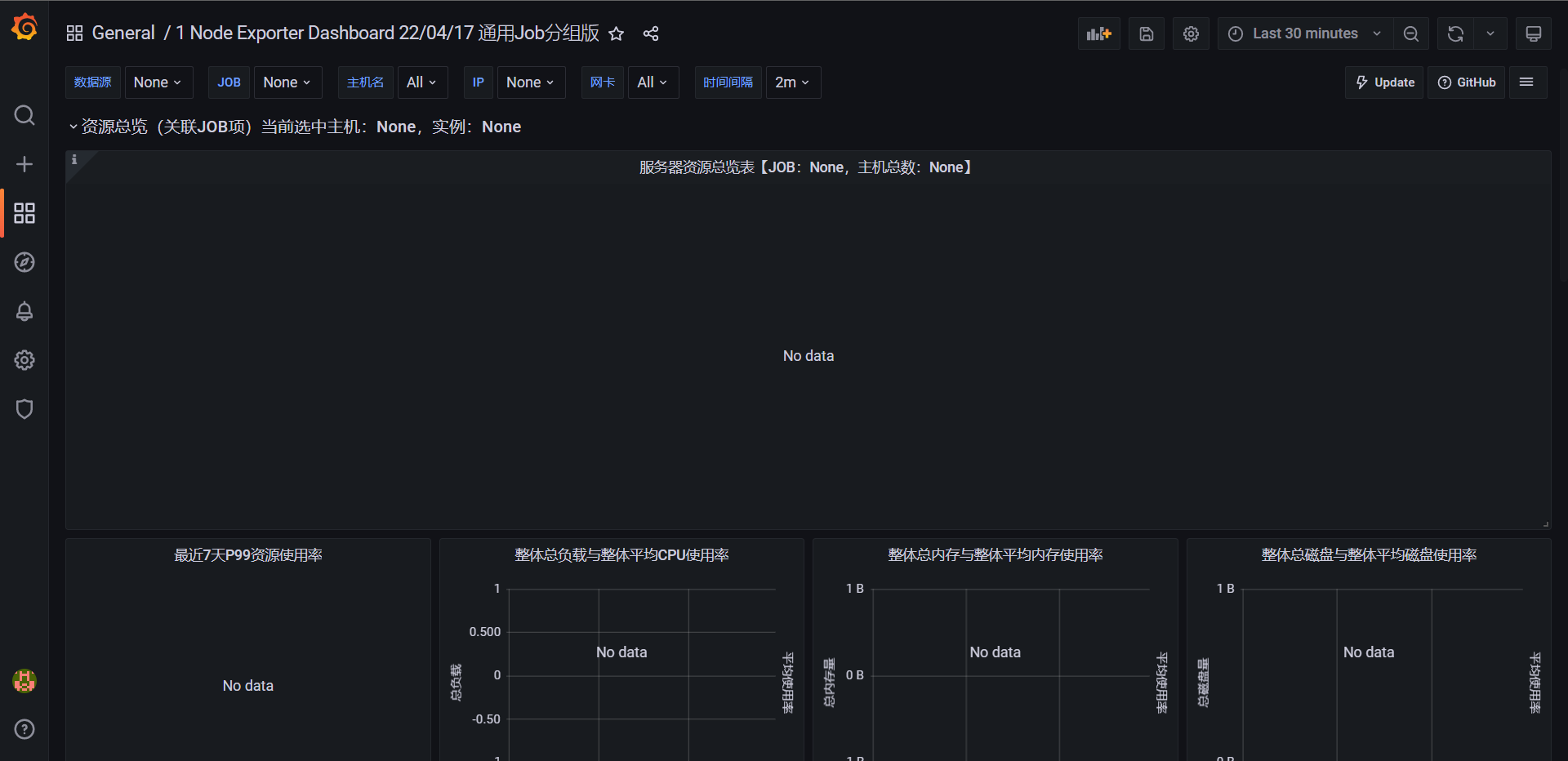

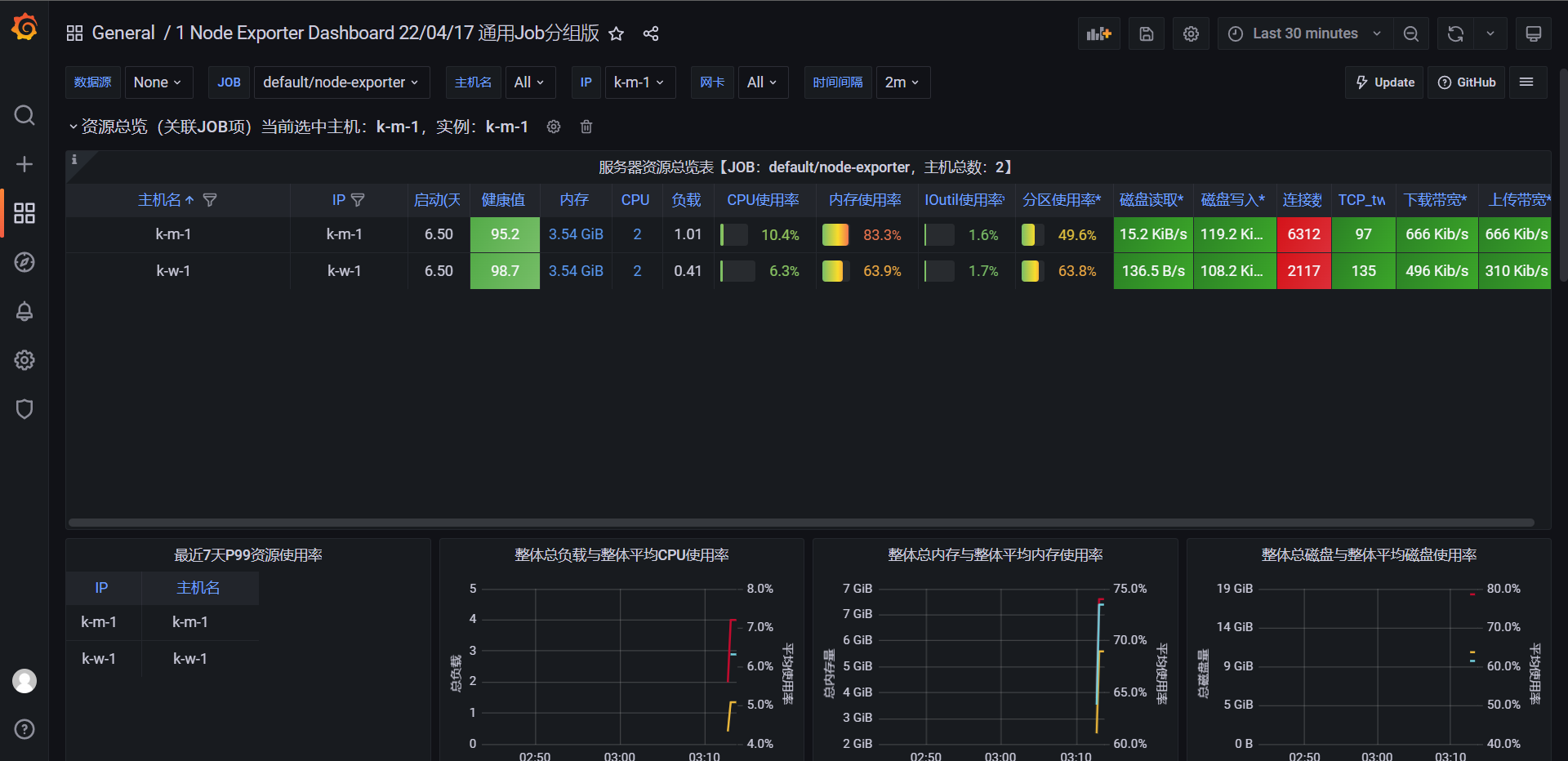

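As you can see, quite a few scrape jobs are already built in; at a glance, it is monitoring all of its own cluster components. Let's go back to Grafana and look at the data.

Configure the data source: http://vmselect-vm-cluster.default.svc.cluster.local:8481/select/0/prometheus

Import the following dashboards: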

1:11176

2:12683

3:14205

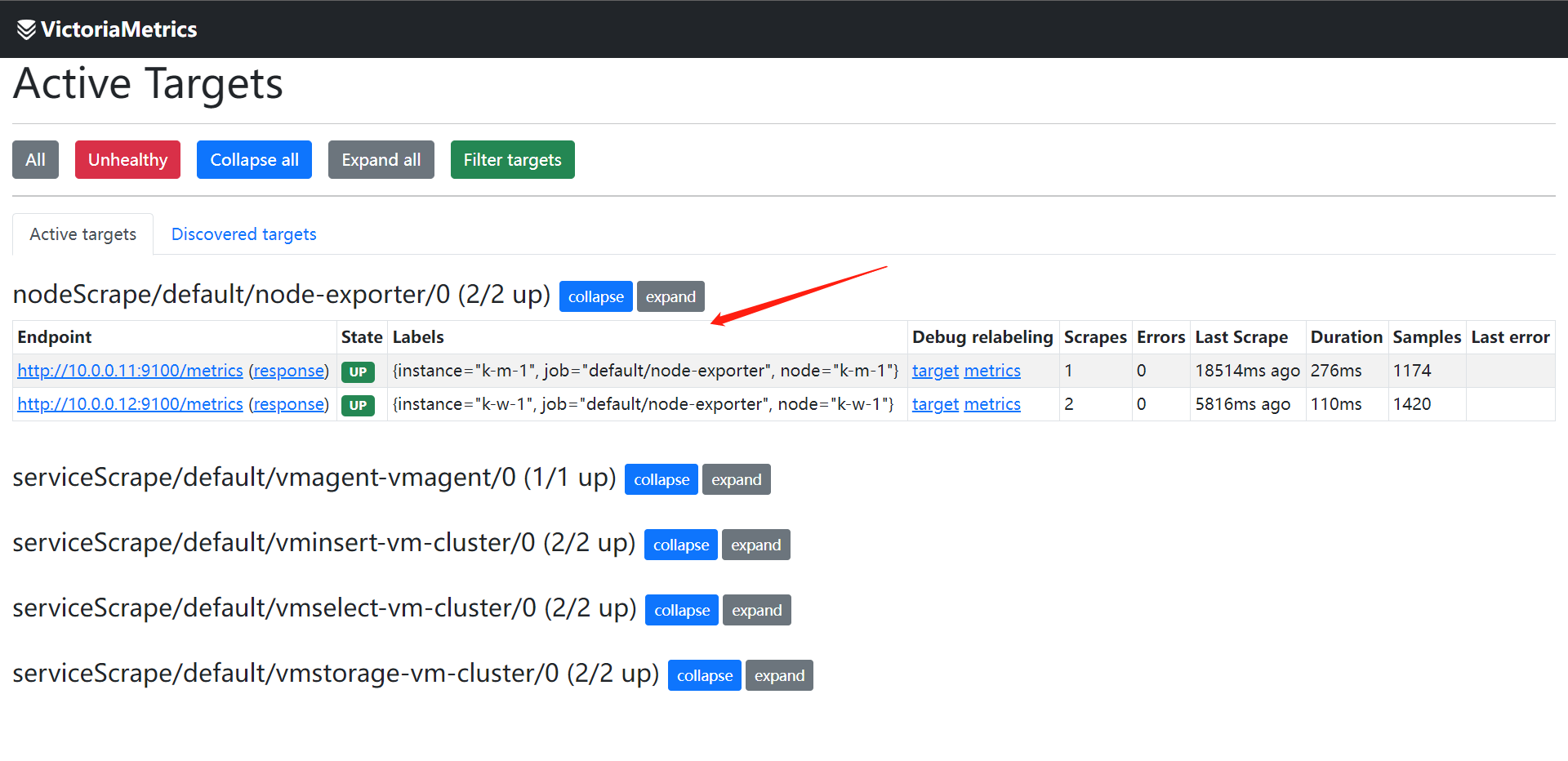

The problem above occurs because we are not scraping node-exporter yet; we can use a vm-operator CRD to scrape it.

# vmnodescrape.yaml
apiVersion: operator.victoriametrics.com/v1beta1
kind: VMNodeScrape
metadata:
  name: node-exporter
spec:
  port: "9100"
  path: /metrics
  scheme: http

[root@k-m-1 vm-operator]# kubectl apply -f vmnodescrape.yaml

vmnodescrape.operator.victoriametrics.com/node-exporter created

[root@k-m-1 vm-operator]# kubectl get vmnodescrapes

NAME AGE

node-exporter 16s

# Check the data in vmagent and Grafana

I won't go through the other CRDs here; feel free to explore them on your own. The basic pattern is the same and usage is largely similar.
