Kubernetes Cluster Deployment + Argo CD Deployment

Kubernetes Cluster Deployment

Versions used: Kubernetes 1.23.1, CRI: containerd 1.4.4

| OS | HostName | IP | Config (CPU/RAM) |
|---|---|---|---|
| CentOS 7.9 | kubernetes-master-01 | 10.0.0.10 | 2C4G |
| CentOS 7.9 | kubernetes-worker-01 | 10.0.0.11 | 2C2G |
| CentOS 7.9 | kubernetes-worker-02 | 10.0.0.12 | 2C2G |

1: Basic environment setup (run every step on all three hosts)

1: Disable the firewall

systemctl stop firewalld && systemctl disable firewalld

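2: Disable SELinux (setenforce 0 switches it to permissive mode right away; the config file change disables it permanently from the next reboot)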

setenforce 0

sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

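3: Disable swap

swapoff -a # disable swap now (until the next reboot)

# permanently: comment out the swap entries in /etc/fstab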

sed -i 's/.*swap.*/#&/g' /etc/fstab
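Both halves of this step can be checked with a small sketch, assuming a Linux host with GNU tools. The helper names `fstab_has_active_swap` and `swap_is_off` are hypothetical: the first looks for an uncommented swap entry in an fstab file, the second reads /proc/swaps, which contains only its header line when no swap is active.

```shell
# Hypothetical helper: exit 0 if the given fstab still has an uncommented
# line whose filesystem type (3rd field) is "swap".
fstab_has_active_swap() {
    awk '$1 !~ /^#/ && $3 == "swap" { found = 1 } END { exit !found }' "$1"
}

# Hypothetical helper: exit 0 when no swap is active in the current boot
# (/proc/swaps then holds only its header line).
swap_is_off() {
    [ "$(wc -l < /proc/swaps)" -le 1 ]
}
```

Usage: `fstab_has_active_swap /etc/fstab && echo "swap is still enabled in /etc/fstab"` and `swap_is_off || echo "swap is still active"`.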

4: Set the hostname (run the matching command on each of the three hosts)

hostnamectl set-hostname kubernetes-master-01

hostnamectl set-hostname kubernetes-worker-01

hostnamectl set-hostname kubernetes-worker-02
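Because each host runs only its own command, a small IP-to-hostname mapping lets one script run unchanged on all three nodes. This is a sketch: `hostname_for_ip` is a hypothetical name, and the mapping simply mirrors the table at the top.

```shell
# Hypothetical helper: map this guide's node IPs to their hostnames.
hostname_for_ip() {
    case "$1" in
        10.0.0.10) echo kubernetes-master-01 ;;
        10.0.0.11) echo kubernetes-worker-01 ;;
        10.0.0.12) echo kubernetes-worker-02 ;;
        *) return 1 ;;  # unknown IP: print nothing, signal failure
    esac
}

# Usage on each node, assuming its first address from `hostname -I` is the
# one listed in the table:
#   ip=$(hostname -I | awk '{print $1}')
#   name=$(hostname_for_ip "$ip") && hostnamectl set-hostname "$name"
```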

5: Add hosts entries (all three hosts)

cat >> /etc/hosts << EOF

10.0.0.10 kubernetes-master-01

10.0.0.11 kubernetes-worker-01

10.0.0.12 kubernetes-worker-02

EOF
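Running the heredoc above twice appends the entries twice. A hedged, idempotent alternative appends an entry only when that exact line is missing; `add_host_entry` is a hypothetical helper.

```shell
# Hypothetical helper: append "IP NAME" to FILE unless that exact line exists.
# -x matches whole lines, -F treats the dots in the IP literally.
add_host_entry() {
    grep -qxF "$2 $3" "$1" 2>/dev/null || printf '%s %s\n' "$2" "$3" >> "$1"
}

# Usage on every host:
#   for entry in "10.0.0.10 kubernetes-master-01" \
#                "10.0.0.11 kubernetes-worker-01" \
#                "10.0.0.12 kubernetes-worker-02"; do
#       add_host_entry /etc/hosts $entry   # unquoted on purpose: splits into IP and name
#   done
```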

6: Synchronize the system time (all three hosts)

yum install chrony -y

systemctl enable chronyd

systemctl start chronyd

chronyc sources -v

2: Deploy containerd

1: Install dependencies and common tools (all three hosts)

yum install -y yum-utils device-mapper-persistent-data lvm2 wget vim net-tools epel-release

2: Configure the kernel modules to load at boot (all three hosts)

cat <<EOF >> /etc/modules-load.d/containerd.conf

overlay

br_netfilter

EOF

3: Load the kernel modules now (all three hosts)

modprobe overlay

modprobe br_netfilter

4: Set the kernel parameters (all three hosts)

cat <<EOF >>/etc/sysctl.d/99-kubernetes-cri.conf

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

5: Apply the kernel parameters (all three hosts)

sysctl --system
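To check that the parameters took effect, one option is to read them back from /proc/sys, where each sysctl key maps to a file path with the dots replaced by slashes. The helper names are hypothetical; `sysctl net.ipv4.ip_forward` gives the same answer for a single key. If the net.bridge.* entries are missing, br_netfilter is not loaded (`lsmod | grep br_netfilter`).

```shell
# Hypothetical helper: net.ipv4.ip_forward -> /proc/sys/net/ipv4/ip_forward
sysctl_path() {
    printf '/proc/sys/%s\n' "$(printf '%s' "$1" | tr . /)"
}

# Hypothetical helper: print each parameter set above with its live value;
# all three should be 1.
check_k8s_sysctls() {
    for key in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward; do
        path=$(sysctl_path "$key")
        if [ -r "$path" ]; then
            printf '%s = %s\n' "$key" "$(cat "$path")"
        else
            printf '%s = (missing)\n' "$key"
        fi
    done
}
```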

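6: Add the Docker repository (all three hosts, as are steps 7 to 10)

# the containerd.io package is published in the Docker repository

# official Docker repository

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

# or the Aliyun mirror (use only one of the two)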

cat <<EOF >> /etc/yum.repos.d/docker-ce.repo

[docker]

name=docker-ce

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/x86_64/stable/

enabled=1

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

EOF

7: Install containerd

yum -y install containerd.io-1.4.4-3.1.el7.x86_64

8: Configure containerd

mkdir -p /etc/containerd

containerd config default | sudo tee /etc/containerd/config.toml

# switch runc to the systemd cgroup driver (it must match cgroupDriver: systemd in the kubelet configuration below)

sed -i '/runc.options/a\ SystemdCgroup = true' /etc/containerd/config.toml && \

grep 'SystemdCgroup = true' -B 7 /etc/containerd/config.toml
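`grep -B 7` shows the context, but it does not prove the line landed in the right TOML table. A stricter check is sketched below with awk, assuming (as the sed command above also does) that the default config contains a `...runtimes.runc.options]` table. The function name is hypothetical.

```shell
# Hypothetical helper: exit 0 only if "SystemdCgroup = true" appears inside
# the [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
# table of the given containerd config file.
runc_uses_systemd_cgroup() {
    awk '
        # every table header resets the flag; set it only for runc.options
        /^[ \t]*\[/ { in_opts = ($0 ~ /runtimes\.runc\.options/) }
        in_opts && /^[ \t]*SystemdCgroup[ \t]*=[ \t]*true/ { found = 1 }
        END { exit !found }
    ' "$1"
}
```

Usage: `runc_uses_systemd_cgroup /etc/containerd/config.toml && echo "systemd cgroup driver configured"`.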

9: Registry mirror

# add the Aliyun mirror to the endpoint list for docker.io

$ vim /etc/containerd/config.toml

[plugins."io.containerd.grpc.v1.cri".registry]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]

endpoint = ["https://registry.cn-hangzhou.aliyuncs.com" ,"https://registry-1.docker.io"]

# change sandbox_image

$ vim /etc/containerd/config.toml

...

[plugins."io.containerd.grpc.v1.cri"]

disable_tcp_service = true

stream_server_address = "127.0.0.1"

stream_server_port = "0"

stream_idle_timeout = "4h0m0s"

enable_selinux = false

selinux_category_range = 1024

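# point this at the Aliyun mirror (kubeadm 1.23 may expect a newer pause tag; check with: kubeadm config images list)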

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.2"

...
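The sandbox_image edit can also be made without an editor. The sketch below rewrites only the quoted value and keeps the indentation; `set_sandbox_image` is a hypothetical helper and GNU sed is assumed. Restart containerd after changing the file.

```shell
# Hypothetical helper: set sandbox_image in a containerd config file.
# IMAGE defaults to the Aliyun pause image used above.
set_sandbox_image() {
    file=$1
    image=${2:-registry.aliyuncs.com/google_containers/pause:3.2}
    # \1 keeps the indentation and "sandbox_image = ", only the value changes
    sed -i "s|^\([[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*\).*|\1\"${image}\"|" "$file"
}
```

Usage: `set_sandbox_image /etc/containerd/config.toml && systemctl restart containerd`. To see which pause tag kubeadm itself wants, `kubeadm config images list --config kubeadm-init.yaml` can be run once that file exists.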

10: Start the service

systemctl enable containerd && systemctl start containerd

# Optional: if your hosts reach the internet through a proxy, containerd needs its own proxy settings

mkdir -p /etc/systemd/system/containerd.service.d

cat > /etc/systemd/system/containerd.service.d/http_proxy.conf << EOF

[Service]

Environment="HTTP_PROXY=http://<proxy_ip>:<proxy_port>/"

Environment="HTTPS_PROXY=http://<proxy_ip>:<proxy_port>/"

Environment="NO_PROXY=x.x.x.x,x.x.x.x"

EOF

# reload the systemd units and restart containerd

systemctl daemon-reload && systemctl restart containerd
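The same drop-in can be generated from variables, which makes it easier to keep NO_PROXY complete. In this sketch `write_containerd_proxy` is hypothetical and the proxy address is a placeholder you supply. NO_PROXY typically needs localhost, the node IPs and the pod and service ranges, so that cluster-internal traffic bypasses the proxy.

```shell
# Hypothetical helper: write http_proxy.conf into DIR
# (normally /etc/systemd/system/containerd.service.d).
write_containerd_proxy() {
    dir=$1 proxy=$2 no_proxy=$3
    mkdir -p "$dir"
    cat > "$dir/http_proxy.conf" <<EOF
[Service]
Environment="HTTP_PROXY=${proxy}"
Environment="HTTPS_PROXY=${proxy}"
Environment="NO_PROXY=${no_proxy}"
EOF
}

# Usage (addresses from this guide; replace the proxy placeholder):
#   write_containerd_proxy /etc/systemd/system/containerd.service.d \
#       http://<proxy_ip>:<proxy_port>/ \
#       localhost,127.0.0.1,10.0.0.10,10.0.0.11,10.0.0.12,100.244.0.0/16,200.96.0.0/12
#   systemctl daemon-reload && systemctl restart containerd
#   systemctl show --property=Environment containerd   # what systemd actually loaded
```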

3: Deploy Kubernetes

1: Add the Kubernetes repository (all three hosts)

# upstream Kubernetes repository (Google)

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-\$basearch

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

exclude=kubelet kubeadm kubectl

EOF

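# or the Aliyun mirror; use only one of the two, because both define a [kubernetes] section (> overwrites the file instead of appending)

cat << EOF > /etc/yum.repos.d/kubernetes.repo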

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

2: Install the required components (all three hosts)

yum install -y kubelet-1.23.1 kubeadm-1.23.1 kubectl-1.23.1 --disableexcludes=kubernetes

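3: Enable and start kubelet (it restarts in a loop until kubeadm init or kubeadm join gives it a configuration; this is expected)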

systemctl enable --now kubelet

4: Configure crictl

cat << EOF >> /etc/crictl.yaml

runtime-endpoint: unix:///var/run/containerd/containerd.sock

image-endpoint: unix:///var/run/containerd/containerd.sock

timeout: 10

debug: false

EOF

5: Generate the default kubeadm configuration (master only)

kubeadm config print init-defaults > kubeadm-init.yaml

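6: Edit the file

# In kubeadm-init.yaml, set advertiseAddress, criSocket, name, imageRepository and kubernetesVersion, add podSubnet, and adjust serviceSubnet if needed

# Then append the following after scheduler: {}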

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: ipvs

---

apiVersion: kubelet.config.k8s.io/v1beta1

kind: KubeletConfiguration

cgroupDriver: systemd
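`mode: ipvs` only works when the IPVS kernel modules are available on every node; otherwise kube-proxy cannot use IPVS mode. A sketch for loading them at boot, assuming CentOS 7's 3.10 kernel (where conntrack is `nf_conntrack_ipv4`; kernels 4.19 and later call it `nf_conntrack`) and that the ipset and ipvsadm packages are installed. The function name is hypothetical.

```shell
# Hypothetical helper: write a modules-load.d file for the IPVS modules.
write_ipvs_modules_conf() {
    dir=${1:-/etc/modules-load.d}
    mkdir -p "$dir"
    printf '%s\n' ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4 > "$dir/ipvs.conf"
}

# On each node, as root:
#   yum install -y ipset ipvsadm
#   write_ipvs_modules_conf
#   for m in $(cat /etc/modules-load.d/ipvs.conf); do modprobe "$m"; done
#   lsmod | grep -e ip_vs -e nf_conntrack
```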

#########################################################################################

vi kubeadm-init.yaml

#########################################################################################

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.0.0.10
  bindPort: 6443
nodeRegistration:
  criSocket: /run/containerd/containerd.sock
  name: kubernetes-master-01
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.23.1
networking:
  dnsDomain: cluster.local
  # 100.244.0.0/16 and 200.96.0.0/12 are public address ranges: pods cannot reach real
  # internet hosts inside them. Private ranges such as 10.244.0.0/16 and 10.96.0.0/12
  # (kubeadm's default service range) avoid that conflict.
  podSubnet: 100.244.0.0/16
  serviceSubnet: 200.96.0.0/12
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd

#############################################################################
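The step-6 edits can also be scripted instead of made in vi. The sketch below assumes GNU sed, the default two-space indentation produced by `kubeadm config print init-defaults`, and this guide's values; `edit_kubeadm_init` is a hypothetical name. Afterwards, `kubeadm init --config=kubeadm-init.yaml --dry-run` can catch mistakes before anything changes on the host.

```shell
# Hypothetical helper: apply this guide's edits to a default kubeadm config.
# Safe to run more than once.
edit_kubeadm_init() {
    f=$1
    sed -i \
        -e 's|^\([[:space:]]*advertiseAddress:\).*|\1 10.0.0.10|' \
        -e 's|^\([[:space:]]*criSocket:\).*|\1 /run/containerd/containerd.sock|' \
        -e 's|^\(  name:\).*|\1 kubernetes-master-01|' \
        -e 's|^\(imageRepository:\).*|\1 registry.cn-hangzhou.aliyuncs.com/google_containers|' \
        -e 's|^\(kubernetesVersion:\).*|\1 v1.23.1|' \
        -e 's|^\([[:space:]]*serviceSubnet:\).*|\1 200.96.0.0/12|' \
        "$f"
    # add podSubnet right after dnsDomain with the same indentation (GNU sed \n)
    grep -q 'podSubnet:' "$f" ||
        sed -i 's|^\([[:space:]]*\)dnsDomain:.*|&\n\1podSubnet: 100.244.0.0/16|' "$f"
    # append the kube-proxy and kubelet documents only once
    grep -q 'kind: KubeProxyConfiguration' "$f" || cat >> "$f" <<'EOF'
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
EOF
}
```

Usage: `kubeadm config print init-defaults > kubeadm-init.yaml && edit_kubeadm_init kubeadm-init.yaml`.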

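7: Initialize the cluster

# Note: the author first ran this on CentOS 7.3, where kubeadm init failed until the systemd package was upgraded

# Worker nodes on the same older release need the upgrade too; on newer 7.x releases it simply installs pending updates

yum -y upgrade systemd

# after the upgrade, initialize the cluster (on kubernetes-master-01 only)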

kubeadm init --config=kubeadm-init.yaml

# point kubectl at the new cluster using its admin credentials

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

yum install -y bash-completion # kubectl completion depends on it

echo "source <(kubectl completion bash)" >> ~/.bashrc

source ~/.bashrc

Check that the CONTAINER-RUNTIME column shows containerd (nodes stay NotReady until the network plugin is deployed):

kubectl get nodes -o wide
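The two workers from the table still need to join the cluster. kubeadm init prints a join command when it finishes; because the bootstrap token in kubeadm-init.yaml has a 24h ttl, a fresh command can be printed on the master later. The wrapper name is hypothetical; the kubeadm subcommand is standard.

```shell
# Run on kubernetes-master-01: print a ready-to-use "kubeadm join ..." command
# with a newly created bootstrap token.
print_join_command() {
    kubeadm token create --print-join-command
}

# Then run the printed command as root on kubernetes-worker-01 and
# kubernetes-worker-02, and check from the master with: kubectl get nodes
```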

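8: Deploy the Calico network plugin (master only)

# -L follows redirects; pick a Calico version whose documentation lists Kubernetes 1.23 as supported

curl -L https://docs.projectcalico.org/manifests/calico.yaml -O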

kubectl apply -f calico.yaml

9: Check the Calico pods

kubectl get pod -n kube-system | grep "calico"
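Instead of repeatedly grepping the pod list, kubectl can block until Calico has rolled out and every node reports Ready. A sketch: the function name is hypothetical, the DaemonSet name calico-node comes from the Calico manifest, and the 300s timeouts are arbitrary.

```shell
# Hypothetical helper: wait for the calico-node DaemonSet, then for all nodes.
wait_calico_and_nodes() {
    kubectl -n kube-system rollout status daemonset/calico-node --timeout=300s &&
        kubectl wait --for=condition=Ready node --all --timeout=300s
}
```

Usage: `wait_calico_and_nodes && kubectl get nodes -o wide`.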

4: Deploy Argo CD

1: What is Argo CD

What is Argo CD: Argo CD is a declarative, GitOps continuous delivery tool for Kubernetes.

Before discussing Argo CD itself, let's first look at what GitOps is.

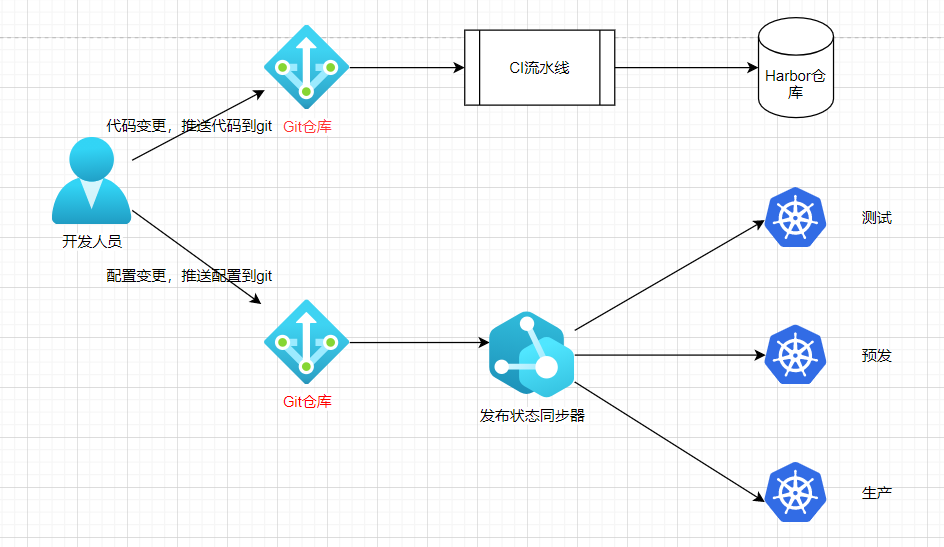

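What is GitOps

GitOps uses Git as its foundation and CI/CD to update applications running in cloud-native environments. It follows the core DevOps idea of "you build it, you ship it".

As the figure above shows:

# when developers push finished code to the Git repository, CI builds an image and pushes it to the image registry

# once CI is done, the application configuration is updated, manually or automatically, and pushed back to the Git repository

# GitOps keeps comparing the target state with the current state; when they differ, it triggers CD to deploy the new configuration to the cluster

Here the target state is the state stored in Git, and the current state is the state of the applications running in the cluster.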

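Can we do without GitOps?

Of course. We can publish configuration directly with tools such as kubectl or helm, but that raises a serious security problem: credential sharing.

For the CI system to deploy applications automatically, it must be given the cluster's access credentials, which is a potential security risk.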

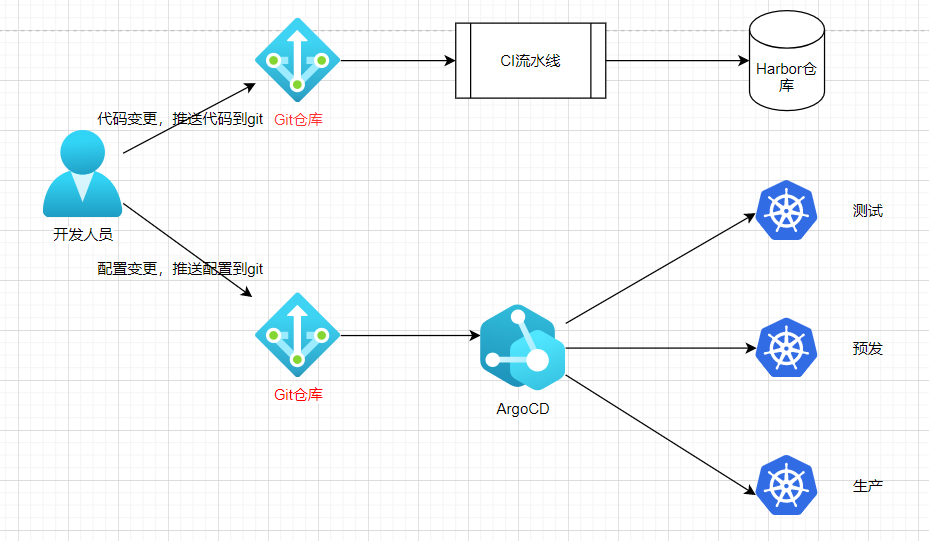
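The difference between the two models can be shown with a deliberately simplified pull-based reconcile step. This is only a toy illustration of the idea, not how Argo CD is implemented: a process running next to the cluster compares the manifests in a local Git checkout (the directory is hypothetical) with the live state via `kubectl diff`, which exits 0 when nothing differs, 1 when differences are found and above 1 on error, and applies the manifests only when they differ. Because it pulls from Git, the CI system never needs the cluster credentials.

```shell
# Toy reconcile step (NOT Argo CD's implementation).
# DIR: hypothetical local checkout of the Git repo holding the desired manifests.
reconcile_once() {
    dir=$1
    kubectl diff -f "$dir" >/dev/null
    rc=$?
    case $rc in
        0) echo "in sync" ;;
        1) echo "out of sync, applying"; kubectl apply -f "$dir" ;;
        *) echo "kubectl diff failed (exit $rc)" >&2; return "$rc" ;;
    esac
}

# A crude controller loop: pull the desired state, reconcile, wait.
#   while true; do
#       git -C /srv/app-config pull -q && reconcile_once /srv/app-config
#       sleep 60
#   done
```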

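2: Argo CD

Argo CD follows the GitOps pattern and uses a Git repository to store the desired configuration of applications.

# Kubernetes manifests can be specified in several ways:

# kustomize applications

# Helm charts

# ksonnet applications (support was removed in Argo CD 2.0)

# jsonnet files

# plain YAML/JSON manifests

# any custom config management tool configured as a config management plugin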

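Argo CD is implemented as a Kubernetes controller that continuously monitors running applications and compares their current, live state with the desired target state (as specified in the Git repo). A deployed application whose live state deviates from the target state is considered OutOfSync. Argo CD reports and visualizes the differences and provides ways to sync the live state back to the desired target state, automatically or manually. Any change to the desired target state in the Git repo can be applied automatically and reflected in the specified target environments.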
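As a concrete example of this declarative model, the sketch below prints an Argo CD Application that tracks the public argocd-example-apps repository and syncs automatically. `prune` removes resources deleted from Git, `selfHeal` reverts changes made directly in the cluster (drift that would otherwise show as OutOfSync), and the `CreateNamespace=true` sync option creates the destination namespace. The function name and the choice of the guestbook app are illustrative assumptions.

```shell
# Hypothetical helper: print an Application manifest with automated sync.
guestbook_app() {
    cat <<'EOF'
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: guestbook
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://github.com/argoproj/argocd-example-apps.git
    targetRevision: HEAD
    path: guestbook
  destination:
    server: https://kubernetes.default.svc
    namespace: guestbook
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
    syncOptions:
    - CreateNamespace=true
EOF
}
```

Usage, once Argo CD is installed (next section): `guestbook_app | kubectl apply -f -`, then `kubectl -n argocd get applications.argoproj.io guestbook`.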

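In the GitOps workflow described above, Argo CD is the component that compares the target state in Git with the current state in the cluster and performs the deployment.

Its advantages can be summarized as follows:

# application definitions, configuration and environment information are declarative and can be version-controlled;

# application deployment and lifecycle management are fully automated, auditable and easy to understand;

# Argo CD is a standalone deployment tool that can deploy and manage applications uniformly across multiple environments and multiple Kubernetes clusters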

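Hands-on

Prerequisite: a working Kubernetes cluster (see the sections above).

1: Install Argo CD

Installation is simple. In real use, keep in mind that Argo CD stores its data (Applications, settings, repository credentials) as Kubernetes objects, so it is persisted through the cluster's etcd; Redis is only used as a cache.

Here I use the install commands from the official documentation: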

kubectl create namespace argocd

kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml
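Before opening the UI, it can help to wait until the components are ready. A sketch with a hypothetical function name and an arbitrary 300s timeout; the StatefulSet name matches the resource list shown below.

```shell
# Hypothetical helper: wait for all Argo CD Deployments and the
# application-controller StatefulSet.
wait_argocd_ready() {
    kubectl -n argocd wait --for=condition=Available deployment --all --timeout=300s &&
        kubectl -n argocd rollout status statefulset/argocd-application-controller --timeout=300s
}
```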

# After the apply succeeds, the following resources are created in the argocd namespace:

[root@kubernetes-master-01 ~]# kubectl get pod,svc,deploy,replicaset,statefulset

NAME READY STATUS RESTARTS AGE

pod/argocd-application-controller-0 1/1 Running 0 5h22m

pod/argocd-dex-server-85fdf8f56b-zk9v2 1/1 Running 0 5h22m

pod/argocd-redis-68864d9cf7-l5dvz 1/1 Running 0 5h22m

pod/argocd-repo-server-6fd9cf7779-t8qdp 1/1 Running 0 5h22m

pod/argocd-server-b597d7df6-865hq 1/1 Running 0 5h22m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/argocd-dex-server ClusterIP 200.104.179.117 <none> 5556/TCP,5557/TCP,5558/TCP 5h22m

service/argocd-metrics ClusterIP 200.101.49.211 <none> 8082/TCP 5h22m

service/argocd-redis ClusterIP 200.103.85.1 <none> 6379/TCP 5h22m

service/argocd-repo-server ClusterIP 200.108.111.137 <none> 8081/TCP,8084/TCP 5h22m

service/argocd-server NodePort 200.97.43.193 <none> 80:32482/TCP,443:32599/TCP 5h22m

service/argocd-server-metrics ClusterIP 200.106.198.205 <none> 8083/TCP 5h22m

service/kubernetes ClusterIP 200.96.0.1 <none> 443/TCP 7h41m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/argocd-dex-server 1/1 1 1 5h22m

deployment.apps/argocd-redis 1/1 1 1 5h22m

deployment.apps/argocd-repo-server 1/1 1 1 5h22m

deployment.apps/argocd-server 1/1 1 1 5h22m

NAME DESIRED CURRENT READY AGE

replicaset.apps/argocd-dex-server-85fdf8f56b 1 1 1 5h22m

replicaset.apps/argocd-redis-68864d9cf7 1 1 1 5h22m

replicaset.apps/argocd-repo-server-6fd9cf7779 1 1 1 5h22m

replicaset.apps/argocd-server-b597d7df6 1 1 1 5h22m

NAME READY AGE

statefulset.apps/argocd-application-controller 1/1 5h22m

# There are two ways to access the Argo CD server:

# through the web UI

# with the argocd command-line client

The web UI is used here.

Run kubectl edit -n argocd svc argocd-server and change the Service type to NodePort, then check the assigned ports with:

[root@k8s-master ~]# kubectl get svc -n argocd

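Then open http://<node-ip>:32482 in a browser (32482 is the NodePort mapped to port 80 in this example; check your own output for the actual port).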
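A non-interactive alternative to kubectl edit is kubectl patch; the jsonpath query then prints the NodePort mapped to service port 443. The function name is hypothetical. Expect a browser certificate warning, because argocd-server uses a self-signed certificate by default.

```shell
# Hypothetical helper: switch argocd-server to NodePort and print the
# node port for HTTPS (service port 443).
expose_argocd_nodeport() {
    kubectl -n argocd patch svc argocd-server -p '{"spec": {"type": "NodePort"}}' &&
        kubectl -n argocd get svc argocd-server \
            -o jsonpath='{.spec.ports[?(@.port==443)].nodePort}'
}
```

Usage: `port=$(expose_argocd_nodeport | tail -n 1)` is fragile because patch also prints a status line, so it is simpler to run the function and then browse to https://<node-ip>:<printed-port>.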

Username: admin

Get the initial password with:

kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d; echo

浙公网安备 33010602011771号
