完整的中英文词频统计

步骤:

1.准备utf-8编码的文本文件file

2.通过文件读取字符串 str

3.对文本进行预处理

4.分解提取单词 list

5.单词计数字典 set , dict

6.按词频排序 list.sort(key=)

7.排除语法型词汇,代词、冠词、连词等无语义词

8.输出TOP(20) 完成:

1、英文词频统计:

输入代码:

1 #通过文件读取字符串 str 2 strThousand = '''Twilight 4Ever 3 Heartbeats fast 4 Colors and promises 5 How to be brave 6 How can I love when I'm afraid to fall 7 But watching you stand alone 8 All of my doubt suddenly goes away somehow 9 One step closer 10 I have died everyday waiting for you 11 Darling don't be afraid I have loved you 12 For a thousand years 13 I love you for a thousand more 14 Time stands still 15 Beauty in all she is 16 I will be brave 17 I will not let anything take away 18 What's standing in front of me 19 Every breath 20 Every hour has come to this 21 One step closer 22 I have died everyday waiting for you 23 Darling don't be afraid I have loved you 24 For a thousand years 25 I love you for a thousand more 26 And all along I believed I would find you 27 Time has brought your heart to me 28 I have loved you for a thousand years 29 I love you for a thousand more 30 One step closer 31 One step closer 32 I have died everyday waiting for you 33 Darling don't be afraid I have loved you 34 For a thousand years 35 I love you for a thousand more 36 And all along I believed I would find you 37 Time has brought your heart to me 38 I have loved you for a thousand years 39 I love you for a thousand more''' 40 41 #准备utf-8编码的文本文件file 42 fo=open('Thousand.txt','r',encoding='utf-8') 43 Thousand = fo.read().lower() 44 fo.close() 45 print(Thousand) 46 47 #字符串预处理: #大小写#标点符号#特殊符号 48 sep = '''.,;:?!-_''' 49 for ch in sep: 50 strThousnad = strThousand.replace(ch,' ') 51 52 #分解提取单词 list 53 strList = strThousand.split() 54 print(len(strList),strList) 55 56 #单词计数字典 set , dict 57 strSet = set(strList) 58 exclude = {'a','the','and','i','you','in'} #排除语法型词汇,代词、冠词、连词等无语义词 59 strSet = strSet-exclude 60 61 print(len(strSet),strSet) 62 63 strDict ={} 64 for word in strSet: 65 strDict[word] = strList.count(word) 66 67 print(len(strDict),strDict) 68 wcList = list(strDict.items()) 69 print(wcList) 70 wcList.sort(key=lambda x:x[1],reverse=True) #按词频排序 list.sort(key=) 71 print(wcList) 72 73 for i in range(20): #输出TOP20 74 print(wcList[i])

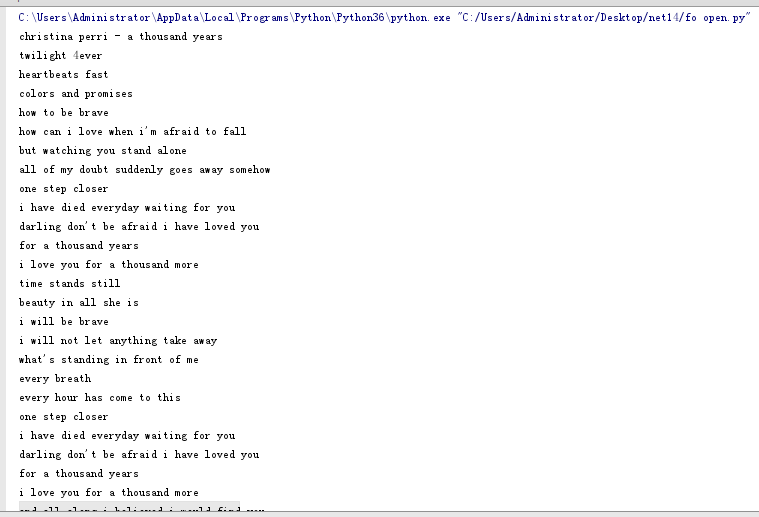

运行代码1:

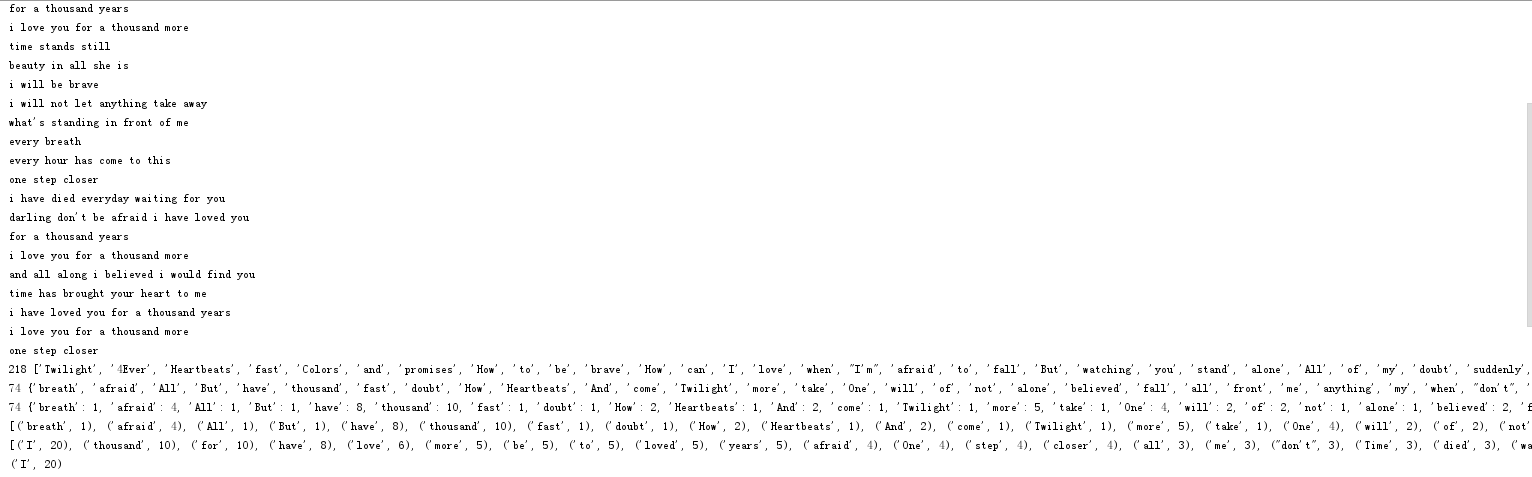

运行代码2:

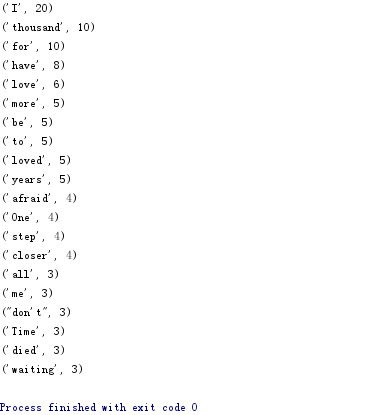

运行代码3:

2、中文词频统计:

1 import jieba; 2 f = open('Beauty and beast.txt','r',encoding='utf-8') 3 fo=f.read() 4 f.close() 5 6 wordsls = jieba.lcut(fo) #用字典形式统计每个词的字数 7 wcdict = {} 8 for word in wordsls: 9 if len(word)==1: 10 continue 11 else: 12 wcdict[word]=wcdict.get(word,0)+1 13 print(wcdict) 14 15 print(list(jieba.cut(fo))) #精确模式,将句子最精确的分开,适合文本分析 16 print(list(jieba.cut(fo,cut_all=True))) #全模式,把句子中所有的可以成词的词语都扫描出来 17 print(list(jieba.cut_for_search(fo))) #搜索引擎模式,在精确模式的基础上,对长词再次切分,提高召回率,适合用于搜索引擎分词 18 19 wcList = list(wcdict.items()) #以列表返回可遍历的(键, 值) 元组数组 20 wcList.sort(key = lambda x:x[1],reverse=True) #出现词汇次数由高到低排序 21 print(wcList) 22 23 for i in range(5): #第一个词循环遍历输出5次 24 print(wcList[1])

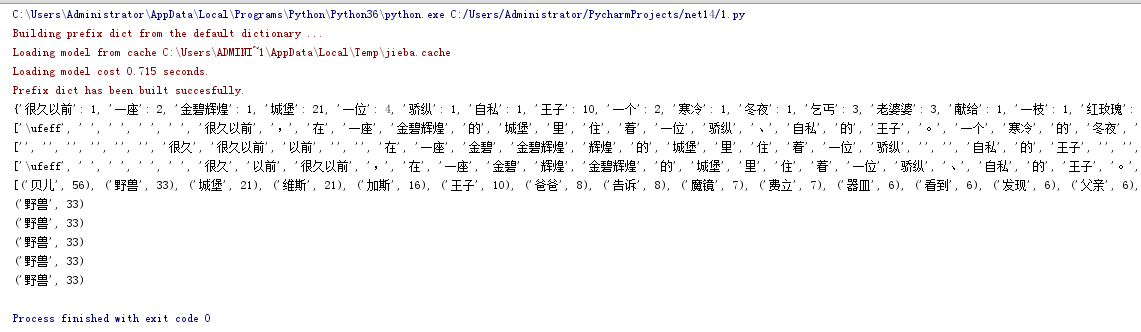

运行结果:

浙公网安备 33010602011771号

浙公网安备 33010602011771号