Selenium & PhantomJS in Practice: Scraping Proxy Data

Scraping the proxy list from the Kuaidaili (快代理) website

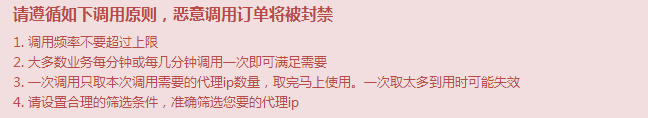
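The scraper finds the data with two XPath expressions. `//tbody/tr` selects each row in the table body, and `./td[N]` selects the N-th cell of a row (1-based). You can see how these map onto a table without launching a browser. The minimal sketch below uses Python's standard `xml.etree.ElementTree`, whose path syntax supports a subset of XPath. The markup is a hypothetical, simplified stand-in for the real page, so the actual HTML will differ. The addresses come from the 192.0.2.0/24 documentation range. The column order follows the fields of the `Item` class.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified markup modeled on a proxy table; all values are
# made up (192.0.2.0/24 is reserved for documentation).
SAMPLE_HTML = """
<table>
  <thead>
    <tr><th>IP</th><th>PORT</th><th>Anonymity</th><th>Type</th>
        <th>Support</th><th>Location</th><th>Speed</th></tr>
  </thead>
  <tbody>
    <tr><td>192.0.2.10</td><td>8080</td><td>high</td><td>HTTP</td>
        <td>GET, POST</td><td>somewhere</td><td>0.5s</td></tr>
    <tr><td>192.0.2.11</td><td>3128</td><td>transparent</td><td>HTTPS</td>
        <td>GET</td><td>elsewhere</td><td>1.2s</td></tr>
  </tbody>
</table>
"""

# Same field order as the Item class in the scraper
FIELDS = ["ip", "port", "anonymous", "type", "support", "local", "speed"]


def parse_rows(markup):
    """Apply the scraper's row/cell paths to a static, well-formed document."""
    root = ET.fromstring(markup.strip())
    rows = []
    # ElementTree does not accept absolute paths on an element, so the
    # scraper's "//tbody/tr" becomes ".//tbody/tr" here. Only body rows
    # match, so the header row in <thead> is skipped.
    for tr in root.findall(".//tbody/tr"):
        # "./td[N]" selects the N-th <td> child (1-based, as in XPath)
        cells = [tr.find("./td[%d]" % n).text for n in range(1, len(FIELDS) + 1)]
        rows.append(dict(zip(FIELDS, cells)))
    return rows


if __name__ == "__main__":
    for row in parse_rows(SAMPLE_HTML):
        print(row["ip"], row["port"], row["type"])
```

ElementTree only accepts well-formed markup. For real pages, an HTML-tolerant parser is the better fit, or the browser itself, as the scraper uses.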

Note:

```python
#!/usr/bin/env python3
# _*_ coding: utf-8 _*_
# __author__ = 'kong'
# Targets Python 3 with Selenium 3.x: webdriver.PhantomJS and the
# find_element(s)_by_xpath helpers were removed in Selenium 4.

# Import the WebDriver module
from selenium import webdriver


# A simple container for one proxy record
class Item(object):
    ip = None
    port = None
    anonymous = None
    type = None
    support = None
    local = None
    speed = None


# Main class: scrape the proxy pages and save the results
class GetProx(object):

    def __init__(self):
        self.startUrl = "http://www.kuaidaili.com/proxylist/"
        self.urls = self.getUrls()
        self.proxList = self.getProxyList(self.urls)
        self.fileName = 'proxy.txt'
        self.saveFile(self.fileName, self.proxList)

    # Build the list of page URLs to visit (pages 1 through 10)
    def getUrls(self):
        return [self.startUrl + str(i) for i in range(1, 11)]

    # Visit each URL and extract the proxy rows from its table
    def getProxyList(self, urls):
        # Create a PhantomJS (headless) browser instance
        browser = webdriver.PhantomJS()
        # Implicit wait: element lookups poll for up to 5 seconds
        # before giving up. It is set once and applies to the whole session.
        browser.implicitly_wait(5)
        proxyList = []
        try:
            for url in urls:
                # Request the page
                browser.get(url)
                # Grab every row of the proxy table
                elements = browser.find_elements_by_xpath("//tbody/tr")
                for element in elements:
                    # Create a fresh Item per row. Reusing one instance would
                    # make every list entry point to the same object.
                    item = Item()
                    item.ip = element.find_element_by_xpath("./td[1]").text
                    item.port = element.find_element_by_xpath("./td[2]").text
                    item.anonymous = element.find_element_by_xpath("./td[3]").text
                    item.type = element.find_element_by_xpath("./td[4]").text
                    item.support = element.find_element_by_xpath("./td[5]").text
                    item.local = element.find_element_by_xpath("./td[6]").text
                    item.speed = element.find_element_by_xpath("./td[7]").text
                    proxyList.append(item)
        finally:
            # Always shut down the browser instance, even if scraping fails
            browser.quit()
        return proxyList

    # Write the proxy data to a tab-separated file, one proxy per line
    def saveFile(self, fileName, proxyList):
        with open(fileName, 'w', encoding='utf-8') as fp:
            for each in proxyList:
                fields = [each.ip, each.port, each.anonymous, each.type,
                          each.support, each.local, each.speed]
                fp.write("\t".join(fields) + "\n")


if __name__ == '__main__':
    gp = GetProx()
```
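A subtle point in `getProxyList` is where the `Item` object is created. Suppose a single `Item()` is created before the loops and only its attributes are reassigned for each row. Then `proxyList` ends up holding many references to one object, and every line written to `proxy.txt` repeats the last row scraped. Creating a fresh `Item` inside the row loop avoids this. The standalone sketch below shows the difference in plain Python, with no Selenium involved. The two-field `Item`, the helper names `collect_shared` / `collect_fresh`, and the sample addresses are illustrative only.

```python
class Item(object):
    """Cut-down version of the scraper's Item (two fields only)."""
    ip = None
    port = None


def collect_shared(rows):
    # Buggy pattern: one Item created before the loop, then mutated
    result = []
    item = Item()
    for ip, port in rows:
        item.ip = ip
        item.port = port
        result.append(item)  # appends the same object every iteration
    return result


def collect_fresh(rows):
    # Fixed pattern: a new Item per row, so each entry is independent
    result = []
    for ip, port in rows:
        item = Item()
        item.ip = ip
        item.port = port
        result.append(item)
    return result


if __name__ == "__main__":
    rows = [("192.0.2.10", "8080"), ("192.0.2.11", "3128")]
    print([i.ip for i in collect_shared(rows)])  # ['192.0.2.11', '192.0.2.11']
    print([i.ip for i in collect_fresh(rows)])   # ['192.0.2.10', '192.0.2.11']
```

Assigning `item.ip` creates an instance attribute that shadows the class-level `ip = None`. The aliasing problem comes purely from appending the same instance repeatedly, not from the class attributes themselves.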

浙公网安备 33010602011771号
