朴素贝叶斯算法实验

【实验目的】

理解朴素贝叶斯算法原理,掌握朴素贝叶斯算法框架。

【实验内容】

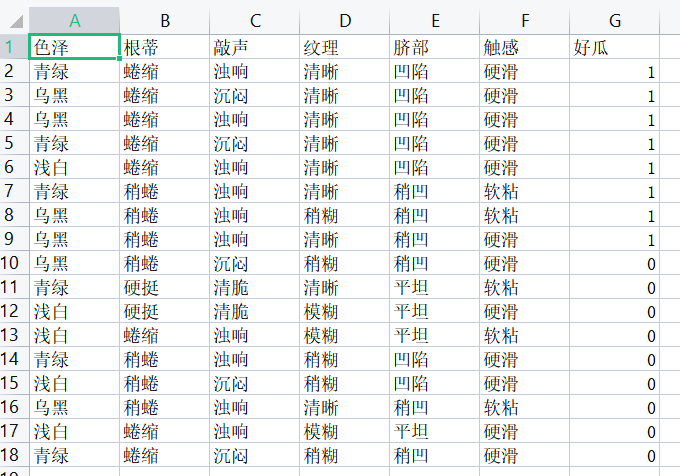

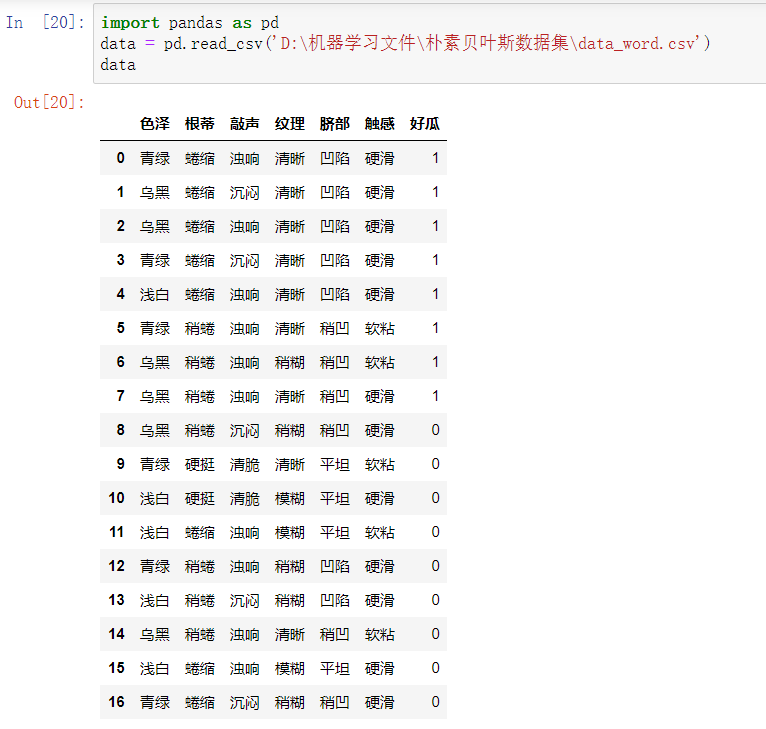

针对下表中的数据,编写python程序实现朴素贝叶斯算法(不使用sklearn包),对输入数据进行预测;

熟悉sklearn库中的朴素贝叶斯算法,使用sklearn包编写朴素贝叶斯算法程序,对输入数据进行预测;

【实验报告要求】

对照实验内容,撰写实验过程、算法及测试结果;

代码规范化:命名规则、注释;

查阅文献,讨论朴素贝叶斯算法的应用场景。

1.针对下表中的数据,编写python程序实现朴素贝叶斯算法(不使用sklearn包),对输入数据进行预测;

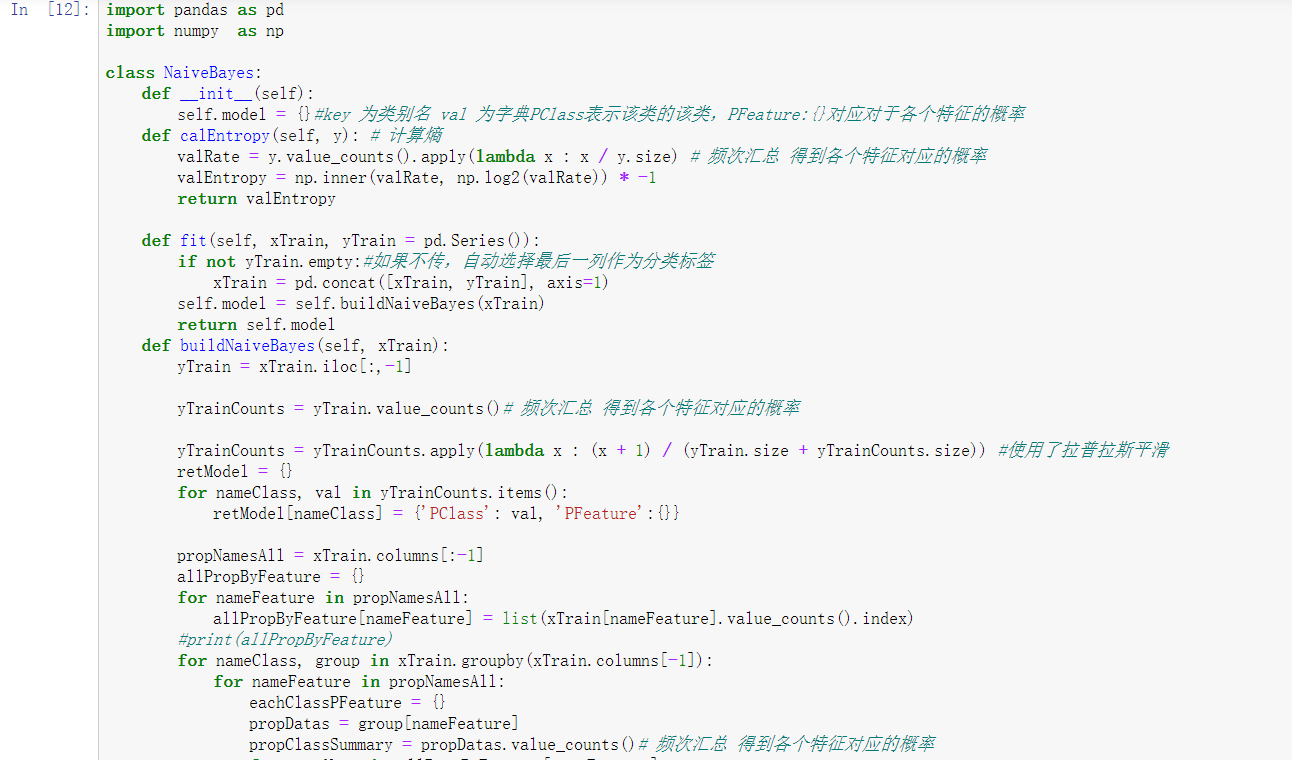

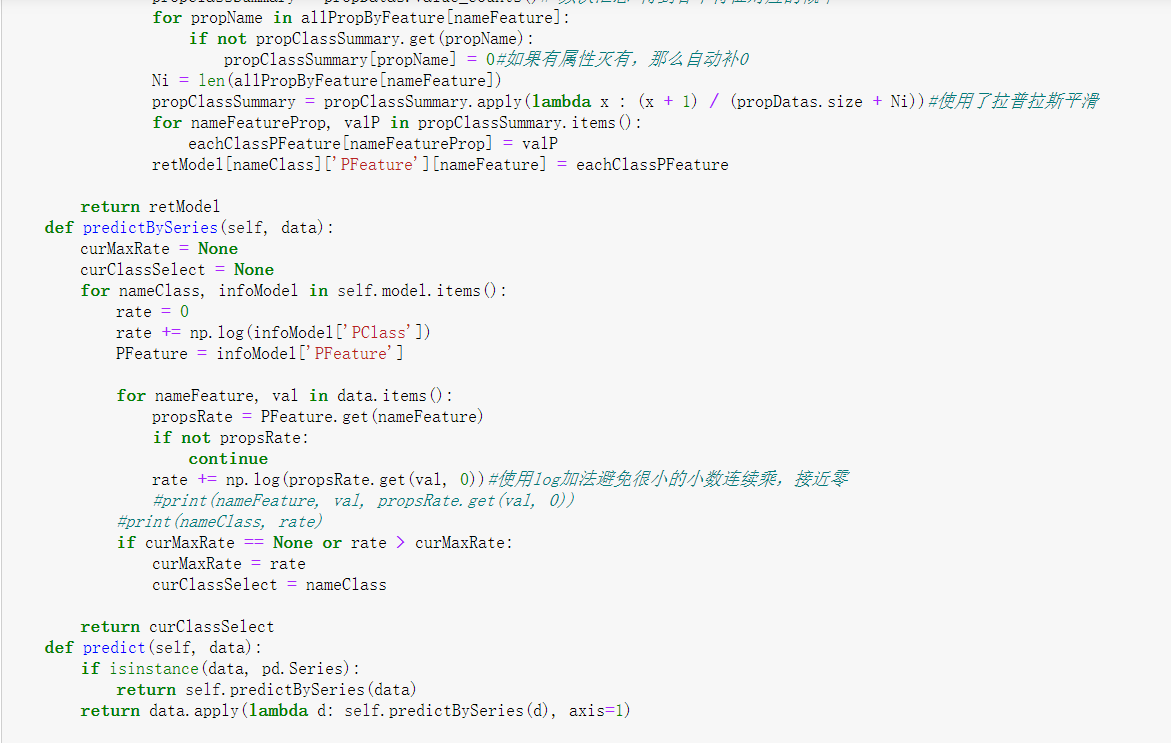

import pandas as pd import numpy as np class NaiveBayes: def __init__(self): self.model = {}#key 为类别名 val 为字典PClass表示该类的该类,PFeature:{}对应对于各个特征的概率 def calEntropy(self, y): # 计算熵 valRate = y.value_counts().apply(lambda x : x / y.size) # 频次汇总 得到各个特征对应的概率 valEntropy = np.inner(valRate, np.log2(valRate)) * -1 return valEntropy def fit(self, xTrain, yTrain = pd.Series()): if not yTrain.empty:#如果不传,自动选择最后一列作为分类标签 xTrain = pd.concat([xTrain, yTrain], axis=1) self.model = self.buildNaiveBayes(xTrain) return self.model def buildNaiveBayes(self, xTrain): yTrain = xTrain.iloc[:,-1] yTrainCounts = yTrain.value_counts()# 频次汇总 得到各个特征对应的概率 yTrainCounts = yTrainCounts.apply(lambda x : (x + 1) / (yTrain.size + yTrainCounts.size)) #使用了拉普拉斯平滑 retModel = {} for nameClass, val in yTrainCounts.items(): retModel[nameClass] = {'PClass': val, 'PFeature':{}} propNamesAll = xTrain.columns[:-1] allPropByFeature = {} for nameFeature in propNamesAll: allPropByFeature[nameFeature] = list(xTrain[nameFeature].value_counts().index) #print(allPropByFeature) for nameClass, group in xTrain.groupby(xTrain.columns[-1]): for nameFeature in propNamesAll: eachClassPFeature = {} propDatas = group[nameFeature] propClassSummary = propDatas.value_counts()# 频次汇总 得到各个特征对应的概率 for propName in allPropByFeature[nameFeature]: if not propClassSummary.get(propName): propClassSummary[propName] = 0#如果有属性灭有,那么自动补0 Ni = len(allPropByFeature[nameFeature]) propClassSummary = propClassSummary.apply(lambda x : (x + 1) / (propDatas.size + Ni))#使用了拉普拉斯平滑 for nameFeatureProp, valP in propClassSummary.items(): eachClassPFeature[nameFeatureProp] = valP retModel[nameClass]['PFeature'][nameFeature] = eachClassPFeature return retModel def predictBySeries(self, data): curMaxRate = None curClassSelect = None for nameClass, infoModel in self.model.items(): rate = 0 rate += np.log(infoModel['PClass']) PFeature = infoModel['PFeature'] for nameFeature, val in data.items(): propsRate = PFeature.get(nameFeature) if not propsRate: continue rate += np.log(propsRate.get(val, 0))#使用log加法避免很小的小数连续乘,接近零 #print(nameFeature, val, propsRate.get(val, 0)) #print(nameClass, rate) if curMaxRate == None or rate > curMaxRate: curMaxRate = rate curClassSelect = nameClass return curClassSelect def predict(self, data): if isinstance(data, pd.Series): return self.predictBySeries(data) return data.apply(lambda d: self.predictBySeries(d), axis=1) dataTrain = pd.read_csv('D:\机器学习文件\朴素贝叶斯数据集\data_word.csv') naiveBayes = NaiveBayes() treeData = naiveBayes.fit(dataTrain) import json print(json.dumps(treeData, ensure_ascii=False)) pd = pd.DataFrame({'预测值':naiveBayes.predict(dataTrain), '正取值':dataTrain.iloc[:,-1]}) print(pd) print('正确率:%f%%'%(pd[pd['预测值'] == pd['正取值']].shape[0] * 100.0 / pd.shape[0]))

2.熟悉sklearn库中的朴素贝叶斯算法,使用sklearn包编写朴素贝叶斯算法程序,对输入数据进行预测;

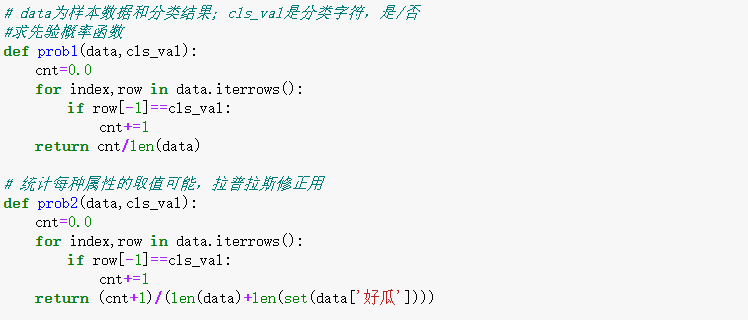

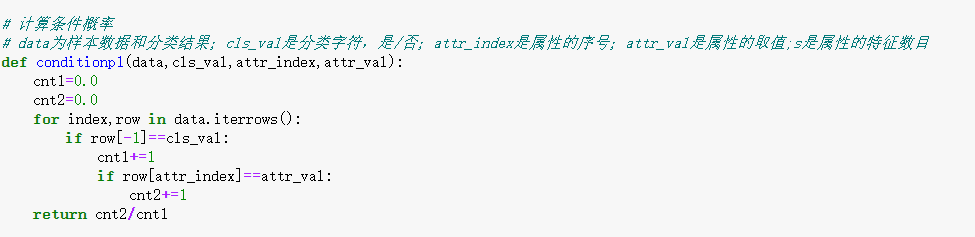

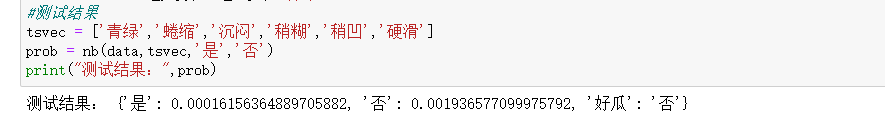

# data为样本数据和分类结果;cls_val是分类字符,是/否 #求先验概率函数 def prob1(data,cls_val): cnt=0.0 for index,row in data.iterrows(): if row[-1]==cls_val: cnt+=1 return cnt/len(data) # 统计每种属性的取值可能,拉普拉斯修正用 def prob2(data,cls_val): cnt=0.0 for index,row in data.iterrows(): if row[-1]==cls_val: cnt+=1 return (cnt+1)/(len(data)+len(set(data['好瓜']))) # 计算条件概率 # data为样本数据和分类结果;cls_val是分类字符,是/否;attr_index是属性的序号;attr_val是属性的取值;s是属性的特征数目 def conditionp1(data,cls_val,attr_index,attr_val): cnt1=0.0 cnt2=0.0 for index,row in data.iterrows(): if row[-1]==cls_val: cnt1+=1 if row[attr_index]==attr_val: cnt2+=1 return cnt2/cnt1 # 统计每种属性的取值可能,拉普拉斯修正用 def conditionp2(data,cls_val,attr_index,attr_val,s): cnt1=0.0 cnt2=0.0 for index,row in data.iterrows(): if row[-1]==cls_val: cnt1+=1 if row[attr_index]==attr_val: cnt2+=1 return (cnt2+1)/(cnt1+s) # 利用后验概率计算先验概率 # data为样本数据和分类结果;testlist是新样本数据列表;cls_y、cls_n是分类字符,是/否;s是属性的特征数目 def nb(data,testlist,cls_y,cls_n): py=prob1(data,cls_y) pn=prob1(data,cls_n) for i,val in enumerate(testlist): py*=conditionp1(data,cls_y,i,val) pn*=conditionp1(data,cls_n,i,val) if (py==0) or (pn==0): py=prob2(data,cls_y) pn=prob2(data,cls_n) for i,val in enumerate(testlist): s=len(set(data[data.columns[i]])) py*=conditionp2(data,cls_y,i,val,s) pn*=conditionp2(data,cls_n,i,val,s) if py>pn: result=cls_y else: result=cls_n return {cls_y:py,cls_n:pn,'好瓜':result} #测试结果 tsvec = ['青绿','蜷缩','沉闷','稍糊','稍凹','硬滑'] prob = nb(data,tsvec,'是','否') print("测试结果:",prob)

【实验总结】

优点:

(1) 算法逻辑简单,易于实现

(2)分类过程中时空开销小

缺点:

理论上,朴素贝叶斯模型与其他分类方法相比具有最小的误差率。但是实际上并非总是如此,这是因为朴素贝叶斯模型假设属性之间相互独立,这个假设在实际应用中往往是不成立的,在属性个数比较多或者属性之间相关性较大时,分类效果不好。

而在属性相关性较小时,朴素贝叶斯性能最为良好。对于这一点,有半朴素贝叶斯之类的算法通过考虑部分关联性适度改进。

浙公网安备 33010602011771号

浙公网安备 33010602011771号