sparksql对oracle时间做业务处理

目前sparksql对oracle的时间支持不是很好,查询日期时间时只会显示日期而时间则不会显示,下面是自己改的两个java解决方案

方案一

ReadOracle.java

package BigDataSQL; import org.apache.spark.SparkConf; import org.apache.spark.api.java.JavaSparkContext; import org.apache.spark.sql.Row; import org.apache.spark.sql.SQLContext; import java.util.HashMap; import java.util.List; import java.util.Map; public class ReadOracle { public static void main(String[] args) { String driver = "oracle.jdbc.driver.OracleDriver"; SparkConf conf = new SparkConf().setAppName("Spark03").setMaster("local"); JavaSparkContext sc = new JavaSparkContext(conf); //其底层就是scala的sparkcontext SQLContext sqlContext = new SQLContext(sc); String url = "jdbc:oracle:thin:@//192.168.1.**8:1521/orcl"; Map<String, String> options = new HashMap<String, String>(); options.put("user", "test"); options.put("password", "test"); options.put("url", url); options.put("dbtable", "TM_***_UPDOWN");//数据表 //一次取多少行数据 options.put("fetchSize", "20"); options.put("driver", driver); org.apache.spark.sql.Dataset jdbcDF = sqlContext.read().format("jdbc").options(options).load(); List<Row> teenagerNames = jdbcDF.collectAsList(); for (Row s : teenagerNames) { System.out.println(s); } } }

方案二

ReadOracle03.java

package BigDataSQL; import org.apache.spark.SparkConf; import org.apache.spark.api.java.JavaSparkContext; import org.apache.spark.sql.SQLContext; import org.apache.spark.sql.jdbc.JdbcDialect; import org.apache.spark.sql.jdbc.JdbcDialects; import org.apache.spark.sql.jdbc.JdbcType; import org.apache.spark.sql.types.DataType; import org.apache.spark.sql.types.DataTypes; import org.apache.spark.sql.types.MetadataBuilder; import scala.Option; import java.sql.Types; import java.util.HashMap; import java.util.Map; public class ReadOracle03 { public static void oracleInit() { JdbcDialect dialect = new JdbcDialect() { //判断是否为oracle库 @Override public boolean canHandle(String url) { return url.startsWith("jdbc:oracle"); } //用于读取Oracle数据库时数据类型的转换 @Override public Option<DataType> getCatalystType(int sqlType, String typeName, int size, MetadataBuilder md) { if (sqlType == Types.DATE && typeName.equals("DATE") && size == 0) return Option.apply(DataTypes.TimestampType); return Option.empty(); } //用于写Oracle数据库时数据类型的转换 @Override public Option<JdbcType> getJDBCType(DataType dt) { if (DataTypes.StringType.sameType(dt)) { return Option.apply( new JdbcType("VARCHAR2(255)", Types.VARCHAR)); } else if (DataTypes.BooleanType.sameType(dt)) { return Option.apply( new JdbcType("NUMBER(1)", Types.NUMERIC)); } else if (DataTypes.IntegerType.sameType(dt)) { return Option.apply( new JdbcType("NUMBER(10)", Types.NUMERIC)); } else if (DataTypes.LongType.sameType(dt)) { return Option.apply( new JdbcType("NUMBER(19)", Types.NUMERIC)); } else if (DataTypes.DoubleType.sameType(dt)) { return Option.apply( new JdbcType("NUMBER(19,4)", Types.NUMERIC)); } else if (DataTypes.FloatType.sameType(dt)) { return Option.apply( new JdbcType("NUMBER(19,4)", Types.NUMERIC)); } else if (DataTypes.ShortType.sameType(dt)) { return Option.apply( new JdbcType("NUMBER(5)", Types.NUMERIC)); } else if (DataTypes.ByteType.sameType(dt)) { return Option.apply( new JdbcType("NUMBER(3)", Types.NUMERIC)); } else if (DataTypes.BinaryType.sameType(dt)) { return Option.apply( new JdbcType("BLOB", Types.BLOB)); } else if (DataTypes.TimestampType.sameType(dt)) { return Option.apply( new JdbcType("DATE", Types.DATE)); } else if (DataTypes.DateType.sameType(dt)) { return Option.apply( new JdbcType("DATE", Types.DATE)); } else if (DataTypes.createDecimalType() .sameType(dt)) { //unlimited /* return DecimalType.Fixed(precision, scale) =>Some(JdbcType("NUMBER(" + precision + "," + scale + ")", java.sql.Types.NUMERIC))*/ return Option.apply( new JdbcType("NUMBER(38,4)", Types.NUMERIC)); } return Option.empty(); } }; //注册此方言 JdbcDialects.registerDialect(dialect); // JdbcDialects.unregisterDialect(dialect); } public static void main(String[] args) { SparkConf conf = new SparkConf(); conf.setAppName("Test"); //设置启动方式为本地方式 conf.setMaster("local[2]"); String driver="oracle.jdbc.driver.OracleDriver"; String url = "jdbc:oracle:thin:@//192.168.1.**8:1521/orcl"; JavaSparkContext sc = new JavaSparkContext(conf); SQLContext sqlContext = new SQLContext(sc); oracleInit(); // System.out.println("================" + sqlContext.getSQLDialect()); Map<String, String> options = new HashMap<String, String>(); options.put("user", "test"); options.put("password", "test"); options.put("url", url); options.put("dbtable", "TM_****UPDOWN");//数据表 //一次取多少行数据 options.put("AAAA", "20"); options.put("driver", driver); org.apache.spark.sql.Dataset jdbcDF = sqlContext.read().format("jdbc").options(options).load(); jdbcDF.createOrReplaceTempView("AAA03"); org.apache.spark.sql.Dataset AAAA03 = sqlContext.sql("select site_time ,line_no,count(*) Num from AAA03 where site_time BETWEEN '2018-02-01 12:30:00' and '2018-02-01 12:40:00' GROUP BY site_time ,line_no"); AAAA03.show(); //jdbcDF.show(false); } }

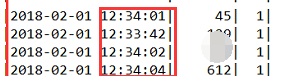

方案二查询的结果,建议使用方案二

浙公网安备 33010602011771号

浙公网安备 33010602011771号