Cloud-Native Study Notes - DAY4

Pod Status and Probes

1 Health Check

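A health check periodically checks the health state of a container.

Periodic checking is like a person's regular medical checkup, and each individual check corresponds to the items examined in one checkup.

Health checks can be configured in a docker-compose YAML file, in a Dockerfile, and in a pod definition. In day-to-day work, the most common approach is for the pod to use probes to check the health of its containers.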

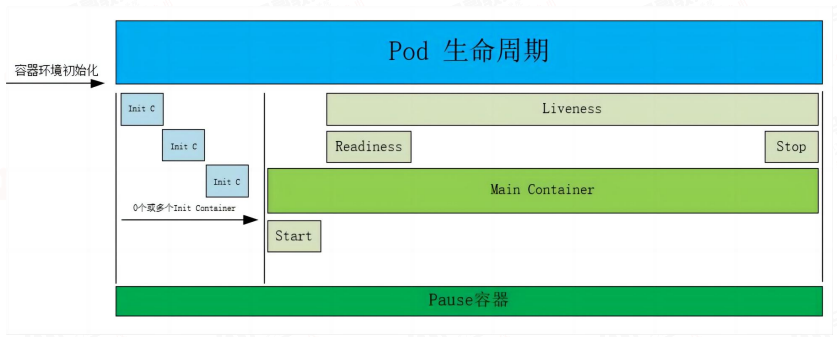

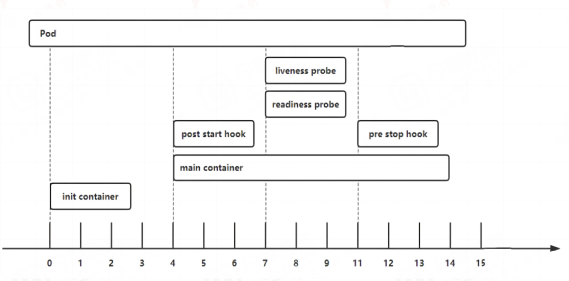

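2 Pod Lifecycle

The pod lifecycle:

When a pod starts, a postStart hook can be configured. While it is running, livenessProbe and readinessProbe checks can be configured. Before it stops, a preStop action can be configured.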

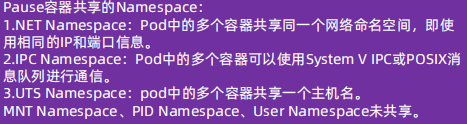

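2.1 Introduction to the pause container

The pause container, also called the Infra container, is the base container of every pod. Its image is only a few hundred KB.

It is specified in the kubelet or container runtime configuration (for containerd, the `sandbox_image` setting). Its main job is to let the containers in a pod communicate over a shared network.

Once the Infra container is created, it initializes the pod's network namespace, and the other containers then share the Infra container's network. For two containers A and B in the same pod, this means:

1. A and B can talk to each other directly over localhost.
2. A and B see the same network interfaces, IP addresses and listening ports.
3. The pod has only one IP address. This is the address of the pod's network namespace, which is initialized and created by the Infra container.
4. In a Kubernetes cluster, each pod gets its own IP address (as long as enough addresses are available), and all containers in the pod share that IP internally.
5. When the pod is deleted, the Infra container is deleted along with it and its IP is released.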
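Point 1 can be illustrated with a minimal Python sketch. The assumption is that two threads in one process stand in for containers A and B. Because they run on the same host, they share one network namespace, just as containers that join the pause container do, so B reaches A's server on 127.0.0.1. This is only an analogy, not how Kubernetes wires pods, and the function names are hypothetical.

```python
import socket
import threading


def start_echo_server() -> tuple:
    """'Container A': listen on loopback; port 0 lets the OS pick a free port."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))
    server.listen(1)
    port = server.getsockname()[1]

    def serve_once() -> None:
        conn, _ = server.accept()
        with conn:
            data = conn.recv(1024)
            conn.sendall(b"A got: " + data)

    threading.Thread(target=serve_once, daemon=True).start()
    return server, port


def call_over_localhost(port: int, message: bytes) -> bytes:
    """'Container B': reach container A through localhost, as in a shared network namespace."""
    with socket.create_connection(("127.0.0.1", port), timeout=5) as client:
        client.sendall(message)
        return client.recv(1024)


server, port = start_echo_server()
reply = call_over_localhost(port, b"hello from B")
server.close()
print(reply)  # b'A got: hello from B'
```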

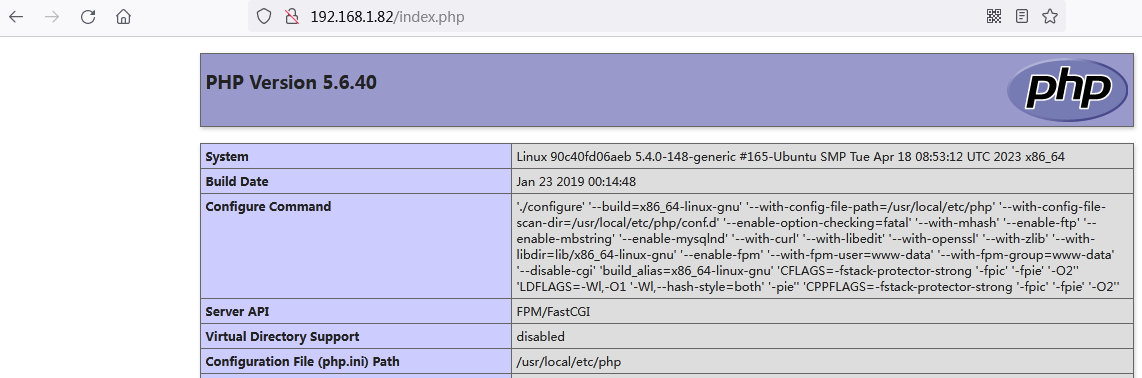

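2.1.1 pause container experiment

1 Pull the image

```bash
nerdctl pull harbor.idockerinaction.info/baseimages/pause:3.9
```

2 Run the pause container. A private registry image is used here; if your network allows, a public image from the internet works as well.

The port mapping (-p 80:80) is set on the pause container. The containers started next join its network namespace and use that namespace's ports instead of publishing their own.

```bash
nerdctl run -d -p 80:80 --name pause-container-test harbor.idockerinaction.info/baseimages/pause:3.9
```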

3 Prepare the test web pages

```
root@ubuntu4:~/html/pause-test-case/html# cat index.html
<h1>pause container web test</h1>
root@ubuntu4:~/html/pause-test-case/html# cat index.php
<?php
phpinfo();
?>
root@ubuntu4:~/html/pause-test-case/html# cd ..
root@ubuntu4:~/html/pause-test-case# cat nginx.conf
error_log stderr;
events { worker_connections 1024; }
http {
    access_log /dev/stdout;
    server {
        listen 80 default_server;
        server_name www.mysite.com;
        location / {
            index index.html index.php;
            root /usr/share/nginx/html;
        }
        location ~ \.php$ {
            root /usr/share/nginx/html;
            fastcgi_pass 127.0.0.1:9000;
            fastcgi_index index.php;
            fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
            include fastcgi_params;
        }
    }
}
```

4 Run the nginx container, joined to the pause container's network

```bash
nerdctl run -d --name nginx-container-test -v `pwd`/nginx.conf:/etc/nginx/nginx.conf -v `pwd`/html:/usr/share/nginx/html --net=container:pause-container-test nginx:1.20.2
```

5 Run the php container, joined to the pause container's network

```bash
nerdctl run -d --name php-container-test --net=container:pause-container-test -v `pwd`/html:/usr/share/nginx/html php:5.6.40-fpm
```

6 Check that the pause, nginx and php containers have all been created

```
root@ubuntu4:~/html/pause-test-case# nerdctl ps
CONTAINER ID    IMAGE                                               COMMAND                   CREATED           STATUS    PORTS                 NAMES
4f862b9831a7    docker.io/library/php:5.6.40-fpm                    "docker-php-entrypoi…"    3 minutes ago     Up                              php-container-test
63507d495af2    docker.io/library/nginx:1.20.2                      "/docker-entrypoint.…"    4 minutes ago     Up                              nginx-container-test
90c40fd06aeb    harbor.idockerinaction.info/baseimages/pause:3.9    "/pause"                  25 minutes ago    Up        0.0.0.0:80->80/tcp    pause-container-test
```

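2.1.2 Verify access

The test shows that the pause container's port 80 is actually served by the nginx container. Requests for PHP pages are passed by nginx over local port 9000 (127.0.0.1:9000) directly to the php container. In other words, the pause, nginx and php containers all share the same network namespace.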

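2.2 Introduction to init containers

2.2.1 What init containers are for

1. Preparing the runtime environment for the application containers in advance. Examples include generating the configuration files the application needs and placing them in the expected location, and checking data permissions or integrity, software versions and other basic runtime requirements.
2. Preparing the business data the application needs before it starts, for example by downloading it from OSS or copying it from another location.
3. Checking whether the services the application depends on are reachable.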
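Purpose 3 is often implemented as an init container that loops until a dependency's port accepts connections. Below is a minimal Python sketch of that loop. It assumes the dependency is identified by a host and a TCP port. `wait_for_tcp` and its parameters are hypothetical names, not part of Kubernetes.

```python
import socket
import time


def wait_for_tcp(host: str, port: int, timeout: float = 30.0, interval: float = 1.0) -> bool:
    """Return True as soon as host:port accepts a TCP connection, or False after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A completed TCP handshake means the dependency is listening.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            pass  # refused, unreachable or timed out: not ready yet
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


# Usage: a local listening socket stands in for the dependency (e.g. a database).
dependency = socket.socket()
dependency.bind(("127.0.0.1", 0))
dependency.listen()
port = dependency.getsockname()[1]
ready = wait_for_tcp("127.0.0.1", port, timeout=2)
dependency.close()
not_ready = wait_for_tcp("127.0.0.1", port, timeout=0.5, interval=0.1)
print(ready, not_ready)  # True False
```

In a real init container, the script would exit with a non-zero status when this returns False. That way the init container fails and the application container is not started.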

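2.2.2 Characteristics of init containers

1. A pod can have multiple application containers as well as multiple init containers. Each init container and each application container runs in its own isolated environment, with its own image and filesystem, although all of them share the pod's network namespace and can share the pod's volumes.
2. Init containers start before the application containers.
3. The application containers run only after the init containers have completed successfully.
4. If a pod has several init containers, they run one at a time, from top to bottom, and all of them must succeed before the application containers run.
5. Init containers do not support probes, because they exit once initialization is finished and never run again.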
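Points 2 to 4 describe a strict ordering. The minimal Python sketch below shows that ordering, assuming each container is modeled as a plain callable that returns an exit code. `start_pod` is a hypothetical name. The container names are borrowed from the example in 2.2.3.

The real kubelet also retries a failed init container according to the pod's restartPolicy, which this sketch leaves out.

```python
from typing import Callable, List, Tuple

Container = Tuple[str, Callable[[], int]]  # (name, function returning an exit code)


def start_pod(init_containers: List[Container], app_containers: List[Container]) -> List[str]:
    """Run init containers one by one; start app containers only if every init container exits 0."""
    events: List[str] = []
    for name, run in init_containers:
        code = run()
        events.append(f"init {name} exited {code}")
        if code != 0:
            # A failed init container blocks every later init container and all app containers.
            events.append("pod stays in Init state; app containers not started")
            return events
    for name, _ in app_containers:
        events.append(f"start {name}")
    return events


ok = start_pod(
    [("init-web-data", lambda: 0), ("change-data-owner", lambda: 0)],
    [("myserver-myapp-container", lambda: 0)],
)
print(ok)
# ['init init-web-data exited 0', 'init change-data-owner exited 0', 'start myserver-myapp-container']

failed = start_pod(
    [("init-web-data", lambda: 1), ("change-data-owner", lambda: 0)],
    [("myserver-myapp-container", lambda: 0)],
)
print(failed)
# ['init init-web-data exited 1', 'pod stays in Init state; app containers not started']
```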

2.2.3 Init container example

1 Apply the YAML to create the pod and service resources

```
root@k8s-master1:~/manifest/4-20230507/2.init-container-case# kubectl apply -f 1-init-container.yaml
```

2 Check the pod status. After a brief Init phase, the pod becomes Running. During the Init phase, the init containers prepare the data files for the main container in advance and set the related permissions. Init:0/2 and Init:1/2 mean that 0 and 1 of the 2 init containers have completed.

```
root@k8s-master1:~/manifest/4-20230507/2.init-container-case# kubectl get pods -n myserver
NAME                                              READY   STATUS     RESTARTS   AGE
myserver-myapp-deployment-name-6965765b9c-jkctx   0/1     Init:0/2   0          7s
root@k8s-master1:~/manifest/4-20230507/2.init-container-case# kubectl get pods -n myserver
NAME                                              READY   STATUS     RESTARTS   AGE
myserver-myapp-deployment-name-6965765b9c-jkctx   0/1     Init:1/2   0          16s
root@k8s-master1:~/manifest/4-20230507/2.init-container-case# kubectl get pods -n myserver
NAME                                              READY   STATUS     RESTARTS   AGE
myserver-myapp-deployment-name-6965765b9c-jkctx   1/1     Running    0          39s
```

3 On the node the pod was scheduled to, the pod has 4 containers in total: the main container, the pause container, and the two init containers. The init containers exited once initialization was finished.

```
root@k8s-node3:~# nerdctl ps -a |grep myserver-myapp
4cb24e119505  docker.io/library/nginx:1.20.0                    "/docker-entrypoint.…"  3 minutes ago  Up       k8s://myserver/myserver-myapp-deployment-name-6965765b9c-jkctx/myserver-myapp-container
9aa36cad5969  harbor.idockerinaction.info/baseimages/pause:3.9  "/pause"                3 minutes ago  Up       k8s://myserver/myserver-myapp-deployment-name-6965765b9c-jkctx
9e50e51a25e7  docker.io/library/centos:7.9.2009                 "/bin/bash -c for i …"  3 minutes ago  Created  k8s://myserver/myserver-myapp-deployment-name-6965765b9c-jkctx/init-web-data
aa99d893bdbf  docker.io/library/busybox:1.28                    "/bin/sh -c /bin/chm…"  3 minutes ago  Created  k8s://myserver/myserver-myapp-deployment-name-6965765b9c-jkctx/change-data-owner
```

4 Verify that the page inside the pod can be accessed normally

```
root@k8s-deploy:~# curl http://192.168.1.113:30080/myserver/index.html
<h1>1 web page at 20230515184850 <h1>
<h1>2 web page at 20230515184851 <h1>
<h1>3 web page at 20230515184852 <h1>
<h1>4 web page at 20230515184853 <h1>
<h1>5 web page at 20230515184854 <h1>
<h1>6 web page at 20230515184855 <h1>
<h1>7 web page at 20230515184856 <h1>
<h1>8 web page at 20230515184857 <h1>
<h1>9 web page at 20230515184858 <h1>
<h1>10 web page at 20230515184859 <h1>
```

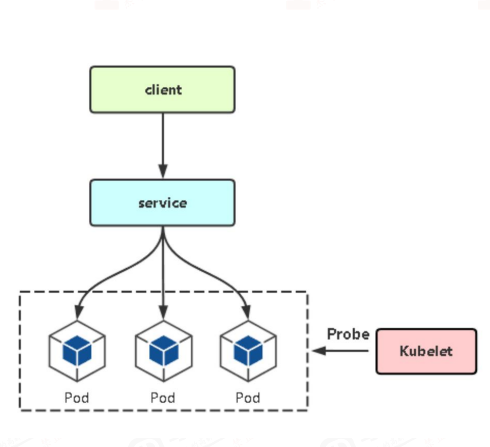

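2.3 Introduction to probes

A probe is a diagnostic that the kubelet performs on a container periodically, to keep the pod in a healthy running state. To perform a diagnostic, the kubelet calls a Handler implemented by the container (also called a Hook). Each probe defines exactly one of the following three handler types:

- ExecAction: runs the specified command inside the container. The diagnostic succeeds if the command exits with return code 0.
- TCPSocketAction: performs a TCP check against the specified port on the container's IP address. The diagnostic succeeds if the port is open.
- HTTPGetAction: sends an HTTP GET request to the specified port and path on the container. The diagnostic succeeds if the response status code is greater than or equal to 200 and less than 400.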
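The success rules of the three handlers can be sketched in Python as follows. The sketch illustrates the rules stated above; it is not kubelet code. It assumes a local process and a local socket stand in for the container, and the function names are hypothetical. The 1-second default timeout mirrors the timeoutSeconds default.

```python
import socket
import subprocess
import sys


def exec_action(command: list, timeout: float = 1.0) -> bool:
    """ExecAction: success if the command exits with return code 0 (a timeout counts as failure)."""
    try:
        return subprocess.run(command, timeout=timeout, capture_output=True).returncode == 0
    except subprocess.TimeoutExpired:
        return False


def tcp_socket_action(host: str, port: int, timeout: float = 1.0) -> bool:
    """TCPSocketAction: success if a TCP connection to the port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def http_get_success(status: int) -> bool:
    """HTTPGetAction rule: success if 200 <= status code < 400."""
    return 200 <= status < 400


# ExecAction demo: run the current Python interpreter with an explicit exit code.
exec_ok = exec_action([sys.executable, "-c", "raise SystemExit(0)"], timeout=10.0)
exec_fail = exec_action([sys.executable, "-c", "raise SystemExit(1)"], timeout=10.0)

# TCPSocketAction demo: an open listener, then the same port after it is closed.
listener = socket.socket()
listener.bind(("127.0.0.1", 0))
listener.listen()
port = listener.getsockname()[1]
tcp_open = tcp_socket_action("127.0.0.1", port)
listener.close()
tcp_closed = tcp_socket_action("127.0.0.1", port)

print(exec_ok, exec_fail, tcp_open, tcp_closed)  # True False True False
print([http_get_success(s) for s in (200, 301, 399, 400, 404, 500)])
# [True, True, True, False, False, False]
```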

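Each probe produces one of three results:

- Success: the container passed the diagnostic.
- Failure: the container failed the diagnostic.
- Unknown: the diagnostic itself failed, so no action is taken.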

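2.3.1 Probe types

- startupProbe: the startup probe, introduced in Kubernetes v1.16. It determines whether the application in the container has finished starting.
  - If a startup probe is configured, all other probes are disabled until it succeeds.
  - If the startup probe fails, the kubelet kills the container, and the container is then handled according to its restart policy.
  - If a container does not provide a startup probe, the default state is Success.
- livenessProbe: the liveness probe, which checks whether the container is running.
  - If the liveness probe fails, the kubelet kills the container, and the container is subject to its restart policy.
  - If a container does not provide a liveness probe, the default state is Success.
  - In short, livenessProbe controls whether the container is restarted.
- readinessProbe: the readiness probe.
  - If it fails, the endpoints controller removes the pod's IP address from the endpoints of all Services that match the pod.
  - The readiness state before the initial delay defaults to Failure.
  - If a container does not provide a readiness probe, the default state is Success.
  - In short, readinessProbe controls whether the pod is added to a Service.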
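The way the three probe types lead to different actions can be summarized as a small decision function. The Python model below is a simplification of the rules above. It assumes a probe counts as "failed" only after failureThreshold consecutive failures. `react` and the returned strings are hypothetical.

```python
from typing import List, Literal

StartupState = Literal["none", "running", "failed", "succeeded"]


def react(startup: StartupState, liveness_ok: bool, readiness_ok: bool) -> List[str]:
    """Map probe outcomes to the actions described above.

    startup: "none" (no startupProbe, treated as Success), "running" (not yet succeeded),
    "failed" (reached failureThreshold) or "succeeded".
    liveness_ok / readiness_ok: whether that probe currently passes (an unconfigured probe passes).
    """
    if startup == "failed":
        return ["kill container", "apply restartPolicy"]
    if startup == "running":
        # Liveness and readiness probes are disabled until the startup probe succeeds,
        # and the container is not ready yet.
        return ["not in Service endpoints"]
    if not liveness_ok:
        return ["kill container", "apply restartPolicy"]
    # Readiness only decides Service membership; it never restarts anything.
    return ["in Service endpoints" if readiness_ok else "not in Service endpoints"]


print(react("none", liveness_ok=True, readiness_ok=False))       # ['not in Service endpoints']
print(react("none", liveness_ok=False, readiness_ok=True))       # ['kill container', 'apply restartPolicy']
print(react("succeeded", liveness_ok=True, readiness_ok=True))   # ['in Service endpoints']
```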

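2.3.2 Probe configuration parameters

Probes have many configuration fields. Combined with the pod's restart policy, these fields give precise control over liveness and readiness checking:

- action: how the probe checks the container, one of exec, httpGet or tcpSocket. The key itself is the action; there is no field literally named action. exec runs a command, httpGet performs an HTTP GET request, and tcpSocket checks a TCP port.
- initialDelaySeconds: 120 # Initial delay: how many seconds the kubelet waits before the first probe. Default 0, minimum 0.
- periodSeconds: 60 # Probe interval: how often, in seconds, the kubelet runs the probe. Default 10, minimum 1.
- timeoutSeconds: 5 # Timeout of a single probe: how many seconds to wait before the probe counts as timed out. Default 1, minimum 1.
- successThreshold: 1 # Number of consecutive successes needed, after a failure, for the probe to be considered successful again. Default 1, minimum 1. Must be 1 for liveness and startup probes.
- failureThreshold: 3 # Number of consecutive failures needed, after success, for the probe to be considered failed. This is how many times Kubernetes retries once the pod has started. For a liveness probe, failure means the container is restarted; for a readiness probe, the pod is marked not ready. Default 3, minimum 1.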
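failureThreshold and successThreshold count consecutive results. Below is a minimal Python sketch of that counting. It assumes one result per periodSeconds tick and that the probe starts in the healthy state (a readiness probe actually starts as Failure). `ProbeState` is a hypothetical class, not a Kubernetes API.

The httpGet example below uses periodSeconds 3 and failureThreshold 3. With those values, a probe that starts failing is acted on after roughly failureThreshold × periodSeconds = 9 seconds. This fits the "a few seconds later" observed in that example.

```python
from dataclasses import dataclass


@dataclass
class ProbeState:
    """Tracks consecutive probe results the way failureThreshold/successThreshold describe."""
    failure_threshold: int = 3  # Kubernetes default
    success_threshold: int = 1  # Kubernetes default; must be 1 for liveness and startup probes
    healthy: bool = True        # assumption: start healthy
    consecutive_failures: int = 0
    consecutive_successes: int = 0

    def record(self, ok: bool) -> bool:
        """Feed one probe result (one per periodSeconds) and return the resulting state."""
        if ok:
            self.consecutive_successes += 1
            self.consecutive_failures = 0
            if not self.healthy and self.consecutive_successes >= self.success_threshold:
                self.healthy = True
        else:
            self.consecutive_failures += 1
            self.consecutive_successes = 0
            if self.healthy and self.consecutive_failures >= self.failure_threshold:
                self.healthy = False
        return self.healthy


def approx_detection_seconds(period_seconds: int, failure_threshold: int) -> int:
    """Rough time from the start of a failure until the probe is declared failed."""
    return period_seconds * failure_threshold


# For a liveness probe, the kubelet would restart the container at the first False.
liveness_history = [ProbeState().record(True)]
liveness = ProbeState()
liveness_history = [liveness.record(ok) for ok in [True, False, False, False, True]]
print(liveness_history)  # [True, True, True, False, True]

readiness = ProbeState(failure_threshold=1, success_threshold=2)
readiness_history = [readiness.record(ok) for ok in [False, True, True]]
print(readiness_history)  # [False, False, True]

print(approx_detection_seconds(period_seconds=3, failure_threshold=3))  # 9
```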

2.3.3 Probe examples

2.3.3.1 httpGet probe example

1 Write the YAML file. The file is shown here in its readinessProbe form, which is used from step 8 onward. For steps 1 to 7, the livenessProbe line was active and readinessProbe was commented out.

```
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# vi my-1-http-probe.yaml
```

```yaml
kind: Deployment
apiVersion: apps/v1
metadata:
  name: myserver-myapp-frontend-deploy
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myserver-myapp-frontend-label
  template:
    metadata:
      labels:
        app: myserver-myapp-frontend-label
    spec:
      containers:
      - name: myserver-myapp-frontend-label
        image: nginx:1.20.2
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          containerPort: 80
        #volumeMounts:
        # Different probe types take the same parameters as long as the action is the same:
        # with httpGet, for example, readinessProbe and livenessProbe are configured identically.
        # What differs is the result of a failure: a failed livenessProbe restarts (recreates)
        # the container in the pod, while a failed readinessProbe removes the pod from the
        # Service's endpoints.
        readinessProbe:
        #livenessProbe:
          httpGet:
            port: 80
            path: /index.html
          initialDelaySeconds: 5  # default 0, minimum 0
          periodSeconds: 3        # default 10, minimum 1
          timeoutSeconds: 1       # default 1, minimum 1
          successThreshold: 1     # default 1, minimum 1; must be 1 for liveness and startup probes
          failureThreshold: 3     # default 3, minimum 1
      #volumes:
      restartPolicy: Always
---
kind: Service
apiVersion: v1
metadata:
  name: myserver-mapp-frontend-label
  namespace: myserver
spec:
  type: NodePort
  selector:
    app: myserver-myapp-frontend-label
  ports:
  - name: http
    port: 81
    targetPort: 80
    nodePort: 30080
    protocol: TCP
```

2 Apply the YAML file to create the resources

```
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# kubectl apply -f my-1-http-probe.yaml
```

3 Check that the resources have been created

```
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# kubectl get pods -n myserver -o wide
NAME                                             READY   STATUS    RESTARTS   AGE     IP               NODE            NOMINATED NODE   READINESS GATES
myserver-myapp-frontend-deploy-cffcb6c65-c982d   1/1     Running   0          5m40s   10.200.182.129   192.168.1.113   <none>
```

4 Verify access

```
root@k8s-deploy:~# curl -I http://192.168.1.113:30080
HTTP/1.1 200 OK
Server: nginx/1.20.2
Date: Mon, 15 May 2023 12:23:12 GMT
Content-Type: text/html
Content-Length: 612
Last-Modified: Tue, 16 Nov 2021 14:44:02 GMT
Connection: keep-alive
ETag: "6193c3b2-264"
Accept-Ranges: bytes
```

5 Exec into the pod and rename index.html to index.htm, so that the httpGet livenessProbe fails

```
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# kubectl exec myserver-myapp-frontend-deploy-cffcb6c65-c982d -it -n myserver -- bash
root@myserver-myapp-frontend-deploy-cffcb6c65-c982d:/usr/share/nginx/html# mv index.html index.htm
```

6 A few seconds later, the container is killed and restarted. The exec session ends with exit code 137 (128 + 9, i.e. terminated by SIGKILL), and RESTARTS goes from 0 to 1.

```
root@myserver-myapp-frontend-deploy-cffcb6c65-c982d:/usr/share/nginx/html# command terminated with exit code 137
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# kubectl get pods -n myserver -o wide
NAME                                             READY   STATUS    RESTARTS       AGE   IP               NODE            NOMINATED NODE   READINESS GATES
myserver-myapp-frontend-deploy-cffcb6c65-c982d   1/1     Running   1 (111s ago)   18m   10.200.182.129   192.168.1.113   <none>           <none>
```

7 Exec into the pod again: index.html is back. This shows that a pod "restart" actually recreates the container, while the pod name and IP stay the same.

```
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# kubectl exec myserver-myapp-frontend-deploy-cffcb6c65-c982d -it -n myserver -- bash
root@myserver-myapp-frontend-deploy-cffcb6c65-c982d:/# ls /usr/share/nginx/html
50x.html  index.html
```

8 Delete the pod above. Then change livenessProbe to readinessProbe in the YAML file, leave everything else unchanged, and apply it again.

```
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# kubectl apply -f my-1-http-probe.yaml
deployment.apps/myserver-myapp-frontend-deploy created
service/myserver-mapp-frontend-label created
```

9 Check that the new resources have been created

```
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# kubectl get pods -n myserver -o wide
NAME                                              READY   STATUS    RESTARTS   AGE   IP               NODE            NOMINATED NODE   READINESS GATES
myserver-myapp-frontend-deploy-859c7c8849-pjdx8   1/1     Running   0          31s   10.200.182.146   192.168.1.113   <none>           <none>
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# kubectl get ep -n myserver
NAME                           ENDPOINTS           AGE
myserver-mapp-frontend-label   10.200.182.146:80   45s
```

10 Exec into the pod and rename index.html to index.htm, so that the httpGet readinessProbe fails

```
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# kubectl exec myserver-myapp-frontend-deploy-859c7c8849-pjdx8 -it -n myserver -- bash
root@myserver-myapp-frontend-deploy-859c7c8849-pjdx8:/# cd /usr/share/nginx/html/
root@myserver-myapp-frontend-deploy-859c7c8849-pjdx8:/usr/share/nginx/html# mv index.html index.htm
root@myserver-myapp-frontend-deploy-859c7c8849-pjdx8:/usr/share/nginx/html# ls
50x.html  index.htm
```

11 The pod has not restarted. It has only been removed from the Service's endpoints: READY is now 0/1 and ENDPOINTS is empty. This shows that readinessProbe controls whether the pod stays in the Service.

```
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# kubectl get pods -n myserver -o wide
NAME                                              READY   STATUS    RESTARTS       AGE    IP               NODE            NOMINATED NODE   READINESS GATES
myserver-myapp-frontend-deploy-859c7c8849-pjdx8   0/1     Running   0              119s   10.200.182.146   192.168.1.113   <none>           <none>
net-test                                          1/1     Running   2 (146m ago)   2d8h   10.200.182.174   192.168.1.113   <none>           <none>
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# kubectl get ep -n myserver
NAME                           ENDPOINTS   AGE
myserver-mapp-frontend-label               2m16s
```
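The rename experiment above can be reproduced locally without a cluster. The minimal Python sketch below makes these assumptions:

- Python's built-in http.server stands in for nginx.
- A temporary directory stands in for /usr/share/nginx/html.
- `http_get_probe` mirrors the httpGet rule (status 200 to 399 is success) but is a hypothetical helper, not kubelet code.

```python
import functools
import http.client
import http.server
import os
import tempfile
import threading


def http_get_probe(host: str, port: int, path: str, timeout: float = 1.0) -> bool:
    """httpGet-style check: success if the status code is in [200, 400)."""
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request("GET", path)
        return 200 <= conn.getresponse().status < 400
    except (OSError, http.client.HTTPException):
        return False
    finally:
        conn.close()


class QuietHandler(http.server.SimpleHTTPRequestHandler):
    def log_message(self, *args):  # keep the demo output clean
        pass


with tempfile.TemporaryDirectory() as html_root:
    with open(os.path.join(html_root, "index.html"), "w") as f:
        f.write("<h1>ok</h1>")
    handler = functools.partial(QuietHandler, directory=html_root)
    server = http.server.ThreadingHTTPServer(("127.0.0.1", 0), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    port = server.server_address[1]

    before = http_get_probe("127.0.0.1", port, "/index.html")
    # Same step as "mv index.html index.htm" inside the pod: the server now answers 404.
    os.rename(os.path.join(html_root, "index.html"), os.path.join(html_root, "index.htm"))
    after = http_get_probe("127.0.0.1", port, "/index.html")

    server.shutdown()
    server.server_close()

print(before, after)  # True False
```

The probe result is the same in both experiments. Only the reaction differs: as a livenessProbe it led to a container restart, and as a readinessProbe it removed the pod from the endpoints.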

2.3.3.2 tcpSocket probe example

1 Edit the YAML configuration file. It is shown in its readinessProbe form; step 6 switches it to livenessProbe.

```
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# vi my-2-tcp-probe.yaml
```

```yaml
kind: Deployment
apiVersion: apps/v1
metadata:
  name: myserver-myapp-frontend-deploy
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myserver-myapp-frontend-label
  template:
    metadata:
      labels:
        app: myserver-myapp-frontend-label
    spec:
      containers:
      - name: myserver-myapp-frontend-label
        image: nginx:1.20.2
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          containerPort: 80
        #volumeMounts:
        # Different probe types take the same parameters as long as the action is the same:
        # with httpGet, for example, readinessProbe and livenessProbe are configured identically.
        # What differs is the result of a failure: a failed livenessProbe restarts (recreates)
        # the container in the pod, while a failed readinessProbe removes the pod from the
        # Service's endpoints.
        readinessProbe:
        #livenessProbe:
          tcpSocket:
            port: 80
          initialDelaySeconds: 5  # default 0, minimum 0
          periodSeconds: 3        # default 10, minimum 1
          timeoutSeconds: 2       # default 1, minimum 1
          successThreshold: 1     # default 1, minimum 1; must be 1 for liveness and startup probes
          failureThreshold: 3     # default 3, minimum 1
      #volumes:
      restartPolicy: Always
---
kind: Service
apiVersion: v1
metadata:
  name: myserver-mapp-frontend-label
  namespace: myserver
spec:
  type: NodePort
  selector:
    app: myserver-myapp-frontend-label
  ports:
  - name: http
    port: 81
    targetPort: 80
    nodePort: 30080
    protocol: TCP
```

2 Apply the YAML configuration file to create the resources

```
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# kubectl apply -f my-2-tcp-probe.yaml
```

3 Check that the resources were created successfully

```
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# kubectl get pods -n myserver -o wide
NAME                                              READY   STATUS    RESTARTS   AGE   IP               NODE            NOMINATED NODE   READINESS GATES
myserver-myapp-frontend-deploy-769fb7974b-9cqbg   1/1     Running   0          46s   10.200.182.145   192.168.1.113   <none>           <none>
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# kubectl get svc -n myserver
NAME                           TYPE       CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
myserver-mapp-frontend-label   NodePort   10.100.198.209   <none>        81:30080/TCP   104s
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# kubectl get ep -n myserver
NAME                           ENDPOINTS           AGE
myserver-mapp-frontend-label   10.200.182.145:80   2m5s
```

4 Exec into the pod and change the nginx port to 81, so that the tcpSocket readinessProbe fails

```
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# kubectl exec -it myserver-myapp-frontend-deploy-769fb7974b-9cqbg -n myserver -- bash
root@myserver-myapp-frontend-deploy-769fb7974b-9cqbg:/etc/nginx# cd /etc/nginx/conf.d/
root@myserver-myapp-frontend-deploy-769fb7974b-9cqbg:/etc/nginx/conf.d# sed -i 's/listen 80;/listen 81;/g' default.conf
root@myserver-myapp-frontend-deploy-769fb7974b-9cqbg:/etc/nginx/conf.d# nginx -s reload
```

5 When the tcpSocket readinessProbe fails, the pod is removed from the endpoints, but it is not restarted

```
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# kubectl get pods -o wide -n myserver
NAME                                              READY   STATUS    RESTARTS        AGE   IP               NODE            NOMINATED NODE   READINESS GATES
myserver-myapp-frontend-deploy-769fb7974b-9cqbg   0/1     Running   0 (6m42s ago)   10m   10.200.182.145   192.168.1.113   <none>           <none>
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# kubectl get ep -n myserver
NAME                           ENDPOINTS   AGE
myserver-mapp-frontend-label               10m
```

6 Delete the resources and edit the YAML file, changing readinessProbe to livenessProbe

```
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# kubectl delete -f my-2-tcp-probe.yaml
deployment.apps "myserver-myapp-frontend-deploy" deleted
service "myserver-mapp-frontend-label" deleted
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# vi my-2-tcp-probe.yaml
```

7 Apply the file again to create the resources

```
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# kubectl apply -f my-2-tcp-probe.yaml
deployment.apps/myserver-myapp-frontend-deploy created
service/myserver-mapp-frontend-label created
```

8 Check that the resources have been created

```
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# kubectl get pods -n myserver -o wide
NAME                                              READY   STATUS    RESTARTS   AGE   IP               NODE            NOMINATED NODE   READINESS GATES
myserver-myapp-frontend-deploy-68bc997f94-h2qcq   1/1     Running   0          15s   10.200.182.140   192.168.1.113   <none>           <none>
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# kubectl get ep -n myserver
NAME                           ENDPOINTS           AGE
myserver-mapp-frontend-label   10.200.182.140:80   25s
```

9 Exec into the pod, change the nginx port to 81 and reload nginx, so that the tcpSocket livenessProbe fails

```
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# kubectl exec myserver-myapp-frontend-deploy-68bc997f94-h2qcq -it -n myserver -- bash
root@myserver-myapp-frontend-deploy-68bc997f94-h2qcq:/# cd /etc/nginx/conf.d/
root@myserver-myapp-frontend-deploy-68bc997f94-h2qcq:/etc/nginx/conf.d# sed -i 's/listen 80;/listen 81;/g' default.conf
root@myserver-myapp-frontend-deploy-68bc997f94-h2qcq:/etc/nginx/conf.d# nginx -s reload
2023/05/15 13:47:30 [notice] 41#41: signal process started
root@myserver-myapp-frontend-deploy-68bc997f94-h2qcq:/etc/nginx/conf.d# command terminated with exit code 137
```

10 After the tcpSocket livenessProbe fails, the container is restarted. The nginx configuration is back to the default, because the new container is created from the original image.

```
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# kubectl get pods -n myserver -o wide
NAME                                              READY   STATUS    RESTARTS        AGE     IP               NODE            NOMINATED NODE   READINESS GATES
myserver-myapp-frontend-deploy-68bc997f94-h2qcq   1/1     Running   1 (2m ago)      4m25s   10.200.182.140   192.168.1.113   <none>           <none>
net-test                                          1/1     Running   2 (3h28m ago)   2d9h    10.200.182.174   192.168.1.113   <none>           <none>
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case3-Probe# kubectl exec myserver-myapp-frontend-deploy-68bc997f94-h2qcq -it -n myserver -- bash
root@myserver-myapp-frontend-deploy-68bc997f94-h2qcq:/# cat /etc/nginx/conf.d/default.conf |grep listen
    listen       80;
```

2.3.3.3 exec probe

The YAML configuration is the same as for the httpGet and tcpSocket probes; only the action changes to exec.
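Kubernetes also accepts manifests written as JSON, so the "only the action changes" point can be shown with Python dicts. The timing values are copied from the httpGet example. The exec command (`test -f` on index.html) is a hypothetical check chosen for illustration: it exits 0 only while the file exists.

```python
import json

# Timing parameters shared by all three probes (values from the httpGet example).
timing = {
    "initialDelaySeconds": 5,
    "periodSeconds": 3,
    "timeoutSeconds": 1,
    "successThreshold": 1,
    "failureThreshold": 3,
}

http_probe = {"httpGet": {"port": 80, "path": "/index.html"}, **timing}
tcp_probe = {"tcpSocket": {"port": 80}, **timing}
# Hypothetical exec check: succeeds (exit code 0) only while index.html exists.
exec_probe = {"exec": {"command": ["/bin/sh", "-c", "test -f /usr/share/nginx/html/index.html"]}, **timing}

# Only the action key differs between the three probes.
for probe in (http_probe, tcp_probe, exec_probe):
    print(sorted(set(probe) - set(timing)))
# ['httpGet']
# ['tcpSocket']
# ['exec']

container_fragment = {"livenessProbe": exec_probe}
print(json.dumps(container_fragment, indent=2))
```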

2.3.3.4 startupProbe

The YAML configuration is the same as for livenessProbe and readinessProbe; only the probe type changes to startupProbe. It can also use any of the three actions: exec, httpGet or tcpSocket.
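A startupProbe gives a slow-starting application a bounded window. If the application has not started within roughly initialDelaySeconds + failureThreshold × periodSeconds, the container is killed. The Python sketch below shows that arithmetic with a startupProbe written as a dict. The values (failureThreshold 30, periodSeconds 10, path /index.html) are illustrative assumptions, not taken from the experiments above.

```python
def startup_window_seconds(initial_delay: int, period: int, failure_threshold: int) -> int:
    """Approximate longest time a container gets to start before the kubelet kills it."""
    return initial_delay + failure_threshold * period


startup_probe = {
    "httpGet": {"port": 80, "path": "/index.html"},  # same action syntax as liveness/readiness
    "failureThreshold": 30,
    "periodSeconds": 10,
}

window = startup_window_seconds(0, startup_probe["periodSeconds"], startup_probe["failureThreshold"])
print(window)  # 300
```

Until this probe succeeds, liveness and readiness probes do not run. A liveness probe can therefore keep a short period without killing an application that is still starting.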

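2.3.3.5 Probe summary

In production, livenessProbe and readinessProbe are usually used together to check containers. startupProbe is used as needed and less often, typically for applications that start slowly.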

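2.4 Introduction to postStart and preStop handlers

Reference: https://kubernetes.io/zh/docs/tasks/configure-pod-container/attach-handler-lifecycle-event/

- postStart: runs the specified action immediately after the container is created.
  - It does not wait for the service inside the container to start, and it is not guaranteed to run before the container's ENTRYPOINT.
  - The container does not reach the Running state until postStart completes.
  - If postStart fails, the kubelet kills the container, which is then handled according to its restart policy.
- preStop: runs before the container is stopped. If preStop never completes, the container is forcibly killed once the grace period (terminationGracePeriodSeconds) expires.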

2.4.1 postStart and preStop handlers example

1 Edit the YAML file

```
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case4-postStart-preStop# vi my-1-myserver-myapp1-postStart-preStop.yaml
```

```yaml
kind: Deployment
apiVersion: apps/v1
metadata:
  name: myserver-myapp-lifecycle
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myserver-myapp-lifecycle-label
  template:
    metadata:
      labels:
        app: myserver-myapp-lifecycle-label
    spec:
      containers:
      - name: myserver-myapp-lifecycle-label
        image: tomcat:7.0.94-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          containerPort: 8080
        #volumeMounts:
        #startupProbe:
        #livenessProbe:
        #readinessProbe:
        lifecycle:
          postStart:
            exec:
              # command: register this instance with the service registry
              command:
              - "/bin/sh"
              - "-c"
              - "echo 'hello from postStart handler' >> /usr/local/tomcat/webapps/ROOT/index.html"
          preStop:
            exec:
              # command: deregister this instance from the service registry
              command: ["/bin/sh","-c","sleep 10000000"]
      #volumes:
      restartPolicy: Always
      #serviceAccountName:
      #imagePullSecrets:
      terminationGracePeriodSeconds: 20  # default 30; adjust to actual needs in production
---
kind: Service
apiVersion: v1
metadata:
  name: myserver-myapp-lifecycle-label
  namespace: myserver
spec:
  type: NodePort
  selector:
    app: myserver-myapp-lifecycle-label
  ports:
  - name: http
    port: 80
    targetPort: 8080
    nodePort: 30012
    protocol: TCP
```

2 Apply the YAML file to create the resources

```
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case4-postStart-preStop# kubectl apply -f my-1-myserver-myapp1-postStart-preStop.yaml
deployment.apps/myserver-myapp-lifecycle created
service/myserver-myapp-lifecycle-label created
```

3 Check that the resources have been created

```
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case4-postStart-preStop# kubectl get pods -n myserver -o wide
NAME                                        READY   STATUS    RESTARTS   AGE   IP               NODE            NOMINATED NODE   READINESS GATES
myserver-myapp-lifecycle-78b977fc94-dbkqm   1/1     Running   0          57s   10.200.182.149   192.168.1.113   <none>           <none>
```

4 Access the pod. The page content is what postStart wrote, which proves that postStart ran successfully.

```
root@k8s-deploy:~# curl http://192.168.1.113:30012
hello from postStart handler
```

5 Delete the resources

```
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case4-postStart-preStop# kubectl delete -f my-1-myserver-myapp1-postStart-preStop.yaml
deployment.apps "myserver-myapp-lifecycle" deleted
service "myserver-myapp-lifecycle-label" deleted
```

6 After the delete command, the pod first enters Terminating. It is removed after 20 seconds (terminationGracePeriodSeconds), because the preStop hook (sleep 10000000) never finishes on its own.

```
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case4-postStart-preStop# kubectl get pods -n myserver -o wide
NAME                                        READY   STATUS        RESTARTS        AGE     IP               NODE            NOMINATED NODE   READINESS GATES
myserver-myapp-lifecycle-78b977fc94-dbkqm   1/1     Terminating   0               2m1s    10.200.182.149   192.168.1.113   <none>           <none>
root@k8s-master1:~/manifest/4-20230507/3.Probe-cases/case4-postStart-preStop# kubectl get pods -n myserver -o wide
NAME                                        READY   STATUS        RESTARTS        AGE     IP               NODE            NOMINATED NODE   READINESS GATES
net-test                                    1/1     Running       2 (5h19m ago)   2d11h   10.200.182.174   192.168.1.113   <none>           <none>
```

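3 Pod creation and deletion flow

Reference: https://cloud.google.com/blog/products/containers-kubernetes/kubernetes-best-practices-terminating-with-grace

3.1 Creating a pod

1 A creation request is submitted to the API server. The API server performs authentication, authorization and admission, then writes the object to etcd.

2 kube-scheduler runs the scheduling process and assigns the pod to a node.

3 The kubelet creates and starts the pod, then runs postStart.

4 livenessProbe runs periodically.

5 The pod enters the Running state.

6 Once readinessProbe passes, the Service associates the pod by adding it to its endpoints.

7 The pod accepts client requests.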

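3.2 Deleting a pod

1 A deletion request is submitted to the API server. The API server performs authentication, authorization and admission, then writes the change to etcd.

2 The pod is set to "Terminating", removed from the Service's Endpoints list, and no longer receives client requests.

3.1 The pod's preStop hook runs. The referenced article describes this as happening almost at the same time as 3.2. Per the Kubernetes documentation, the kubelet sends SIGTERM once the preStop hook has completed.

3.2 The kubelet sends SIGTERM (the normal termination signal) to the containers in the pod to stop their main processes. This signal lets the container know it will be shut down soon.

terminationGracePeriodSeconds: 60 # Optional termination grace period (the pod deletion grace period). If it is set, Kubernetes waits until that period expires; otherwise it waits at most 30 seconds, the default. This wait is called the graceful termination grace period.

Note that the grace-period countdown starts before the preStop hook runs and proceeds in parallel with the preStop hook and the SIGTERM signal. Kubernetes therefore may not wait for the preStop hook to finish: if the main process has still not exited when the grace period (30 seconds by default) ends, the pod is forcibly terminated.

4 SIGKILL is sent to the pod's remaining processes, and the pod is deleted.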
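The interaction between preStop, SIGTERM and the grace period can be sketched as a small timeline calculation. This Python model follows the Kubernetes documentation on three points:

- The grace-period countdown starts when deletion begins.
- SIGTERM is sent when preStop finishes.
- Anything still running when the grace period expires gets SIGKILL.

It deliberately ignores the one-off 2-second extension the kubelet grants to a preStop hook that is still running at the deadline. `termination_timeline` and `Outcome` are hypothetical names.

```python
from typing import NamedTuple


class Outcome(NamedTuple):
    stopped_at: float  # seconds after the delete request
    killed: bool       # True if the grace period ran out and SIGKILL was needed


def termination_timeline(grace: float, prestop: float, shutdown_after_sigterm: float) -> Outcome:
    """Simplified pod termination: preStop, then SIGTERM, all bounded by the grace period."""
    graceful_exit = prestop + shutdown_after_sigterm
    if graceful_exit <= grace:
        return Outcome(stopped_at=graceful_exit, killed=False)
    return Outcome(stopped_at=grace, killed=True)


# Lab in 2.4.1: preStop is "sleep 10000000" and terminationGracePeriodSeconds is 20.
print(termination_timeline(grace=20, prestop=10_000_000, shutdown_after_sigterm=1))
# Outcome(stopped_at=20, killed=True)

# A well-behaved app: 5 s of preStop work, 3 s shutdown after SIGTERM, default 30 s grace period.
print(termination_timeline(grace=30, prestop=5, shutdown_after_sigterm=3))
# Outcome(stopped_at=8, killed=False)
```

The first case matches the roughly 20-second Terminating phase observed in 2.4.1. The second shows why the grace period should cover the preStop work plus the application's own shutdown time.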

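4 Building container images with nerdctl + buildkitd + containerd

4.1 Background

Besides Docker, container technologies include CoreOS's rkt, Google's gVisor, containerd (open-sourced by Docker), Red Hat's Podman, Alibaba's Pouch and others.

To keep the container ecosystem standardized and healthy over the long term, the Linux Foundation, Docker, Microsoft, Red Hat, Google, IBM and other companies jointly founded the Open Container Initiative (OCI) in June 2015. Its goal is to define open, standard container specifications. Its two core specifications are the runtime spec and the image format spec, and OCI also maintains a distribution spec for registries. As long as containers developed by different companies comply with these specifications, they remain portable and interoperable.

- rkt: https://github.com/rkt/rkt (no longer maintained)
- buildkit: an image build toolkit open-sourced by Docker that supports building OCI-standard images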

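4.2 buildkitd

4.2.1 Components of buildkitd

- buildkitd (server): currently supports runc and containerd as the image build environment (worker). runc is the default, and it can be switched to containerd.
- buildctl (client): submits build requests, such as building a Dockerfile, to the buildkitd server.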

4.2.2 Deploy buildkitd

1 Download buildkit

```bash
cd /usr/local/src/
wget https://github.com/moby/buildkit/releases/download/v0.11.6/buildkit-v0.11.6.linux-amd64.tar.gz
```

2 Extract the archive and copy the binaries to /usr/local/bin/

```bash
tar -zxvf buildkit-v0.11.6.linux-amd64.tar.gz
cp bin/* /usr/local/bin/
buildkitd --version
buildctl --help
```

3 Create the /usr/lib/systemd/system/buildkitd.service file

```bash
cat > /usr/lib/systemd/system/buildkitd.service <<EOF
[Unit]
Description=/usr/local/bin/buildkitd
ConditionPathExists=/usr/local/bin/buildkitd
After=containerd.service

[Service]
Type=simple
ExecStart=/usr/local/bin/buildkitd
User=root
Restart=on-failure
RestartSec=1500ms

[Install]
WantedBy=multi-user.target
EOF
```

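4 Enable it to start at boot

```bash
systemctl daemon-reload && systemctl restart buildkitd && systemctl enable buildkitd
```

5 Check the status

```bash
systemctl status buildkitd
```

If git is not installed, the status output shows the warning below. Installing git removes it, but buildkitd works without git; as the message says, only the git source is disabled.

```
May 03 09:15:24 image-build buildkitd[722]: time="2023-05-03T09:15:24+08:00" level=warning msg="git source cannot be enabled: failed to find git binary: exec: \"git\": executable file not found in $PATH"
```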

4.2.3 Test image builds

4.2.3.1 Common nerdctl commands

The transcript below logs in to Harbor, pulls an image, retags it for the private registry, and pushes it.

```
root@image-build:~# nerdctl login harbor.idockerinaction.info
WARN[0000] skipping verifying HTTPS certs for "harbor.idockerinaction.info"
WARNING: Your password will be stored unencrypted in /root/.docker/config.json.
Configure a credential helper to remove this warning. See
https://docs.docker.com/engine/reference/commandline/login/#credentials-store
Login Succeeded
root@image-build:~# nerdctl pull centos:7.9.2009
WARN[0000] skipping verifying HTTPS certs for "docker.io"
docker.io/library/centos:7.9.2009: resolved |++++++++++++++++++++++++++++++++++++++|
index-sha256:be65f488b7764ad3638f236b7b515b3678369a5124c47b8d32916d6487418ea4: done |++++++++++++++++++++++++++++++++++++++|
manifest-sha256:dead07b4d8ed7e29e98de0f4504d87e8880d4347859d839686a31da35a3b532f: done |++++++++++++++++++++++++++++++++++++++|
config-sha256:eeb6ee3f44bd0b5103bb561b4c16bcb82328cfe5809ab675bb17ab3a16c517c9: done |++++++++++++++++++++++++++++++++++++++|
layer-sha256:2d473b07cdd5f0912cd6f1a703352c82b512407db6b05b43f2553732b55df3bc: done |++++++++++++++++++++++++++++++++++++++|
elapsed: 43.2s total: 72.6 M (1.7 MiB/s)
root@image-build:~# nerdctl tag centos:7.9.2009 harbor.idockerinaction.info/baseimages/centos:7.9.2009
root@image-build:~# nerdctl push harbor.idockerinaction.info/baseimages/centos:7.9.2009
INFO[0000] pushing as a reduced-platform image (application/vnd.docker.distribution.manifest.list.v2+json, sha256:48838bdd8842b5b6bf36ef486c5b670b267ed6369e2eccbf00d520824f753ee2)
WARN[0000] skipping verifying HTTPS certs for "harbor.idockerinaction.info"
index-sha256:48838bdd8842b5b6bf36ef486c5b670b267ed6369e2eccbf00d520824f753ee2: done |++++++++++++++++++++++++++++++++++++++|
manifest-sha256:dead07b4d8ed7e29e98de0f4504d87e8880d4347859d839686a31da35a3b532f: done |++++++++++++++++++++++++++++++++++++++|
config-sha256:eeb6ee3f44bd0b5103bb561b4c16bcb82328cfe5809ab675bb17ab3a16c517c9: done |++++++++++++++++++++++++++++++++++++++|
elapsed: 1.2 s total: 3.5 Ki (2.9 KiB/s)
```
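The pull output shows that the short name centos:7.9.2009 was expanded to docker.io/library/centos:7.9.2009. The Python sketch below is a simplified version of that normalization. It follows the usual Docker reference conventions:

- The default registry is docker.io.
- Single-component names on Docker Hub get the library/ prefix.
- The default tag is latest.

It assumes references without digests (@sha256:...) and is not the containerd/nerdctl implementation. `normalize_image` is a hypothetical name.

```python
def normalize_image(ref: str) -> str:
    """Expand a short image reference into registry/repository:tag form (digests not supported)."""
    first, _, rest = ref.partition("/")
    # The first component is a registry only if it looks like a host: contains '.' or ':', or is localhost.
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = "docker.io", ref
    # A ':' after the last '/' separates the tag.
    name, sep, tag = remainder.rpartition(":")
    if not sep or "/" in tag:
        name, tag = remainder, "latest"
    if registry == "docker.io" and "/" not in name:
        name = "library/" + name  # official Docker Hub images live under library/
    return f"{registry}/{name}:{tag}"


print(normalize_image("centos:7.9.2009"))
# docker.io/library/centos:7.9.2009
print(normalize_image("harbor.idockerinaction.info/baseimages/centos:7.9.2009"))
# harbor.idockerinaction.info/baseimages/centos:7.9.2009
print(normalize_image("nginx"))
# docker.io/library/nginx:latest
```

This is why `nerdctl tag` must be given the full Harbor path: without a host-like first component, the name would resolve to Docker Hub.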

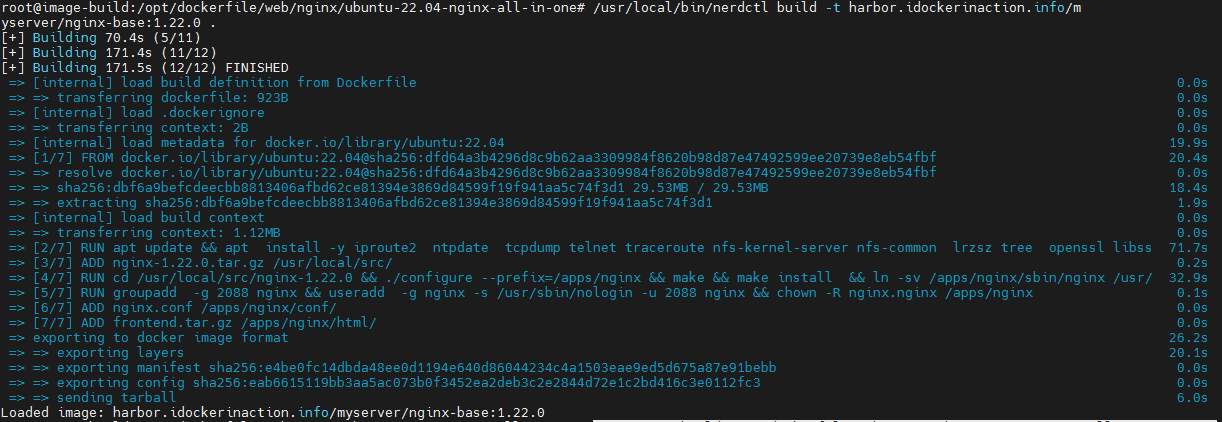

4.2.3.2 Build the image

```
root@image-build:/opt/dockerfile/web/nginx/ubuntu-22.04-nginx-all-in-one# /usr/local/bin/nerdctl build -t harbor.idockerinaction.info/myserver/nginx-base:1.22.0 .
```

4.2.3.3 Push the image and verify the upload

```
root@image-build:/opt/dockerfile/web/nginx/ubuntu-22.04-nginx-all-in-one# nerdctl push harbor.idockerinaction.info/myserver/nginx-base:1.22.0
INFO[0000] pushing as a reduced-platform image (application/vnd.docker.distribution.manifest.v2+json, sha256:e4be0fc14dbda48ee0d1194e640d86044234c4a1503eae9ed5d675a87e91bebb)
WARN[0000] skipping verifying HTTPS certs for "harbor.idockerinaction.info"
manifest-sha256:e4be0fc14dbda48ee0d1194e640d86044234c4a1503eae9ed5d675a87e91bebb: done |++++++++++++++++++++++++++++++++++++++|
config-sha256:eab6615119bb3aa5ac073b0f3452ea2deb3c2e2844d72e1c2bd416c3e0112fc3: done |++++++++++++++++++++++++++++++++++++++|
elapsed: 2.5 s total: 5.6 Ki (2.2 KiB/s)
```

4.2.3.4 Build and push automatically with a script

```
root@image-build:/opt/dockerfile/web/nginx/ubuntu-22.04-nginx-all-in-one# cat build-command.sh
#!/bin/bash
/usr/local/bin/nerdctl build -t harbor.idockerinaction.info/myserver/nginx-base:1.22.0 .
/usr/local/bin/nerdctl push harbor.idockerinaction.info/myserver/nginx-base:1.22.0
root@image-build:/opt/dockerfile/web/nginx/ubuntu-22.04-nginx-all-in-one# bash build-command.sh
[+] Building 7.9s (12/12) FINISHED
=> [internal] load build definition from Dockerfile 0.0s
=> => transferring dockerfile: 923B 0.0s
=> [internal] load .dockerignore 0.0s
=> => transferring context: 2B 0.0s
=> [internal] load metadata for docker.io/library/ubuntu:22.04 1.5s
=> [1/7] FROM docker.io/library/ubuntu:22.04@sha256:dfd64a3b4296d8c9b62aa3309984f8620b98d87e47492599ee20739e8eb54fbf 0.0s
=> => resolve docker.io/library/ubuntu:22.04@sha256:dfd64a3b4296d8c9b62aa3309984f8620b98d87e47492599ee20739e8eb54fbf 0.0s
=> [internal] load build context 0.0s
=> => transferring context: 108B 0.0s
=> CACHED [2/7] RUN apt update && apt install -y iproute2 ntpdate tcpdump telnet traceroute nfs-kernel-server nfs-common lrzsz tree openssl 0.0s
=> CACHED [3/7] ADD nginx-1.22.0.tar.gz /usr/local/src/ 0.0s
=> CACHED [4/7] RUN cd /usr/local/src/nginx-1.22.0 && ./configure --prefix=/apps/nginx && make && make install && ln -sv /apps/nginx/sbin/nginx 0.0s
=> CACHED [5/7] RUN groupadd -g 2088 nginx && useradd -g nginx -s /usr/sbin/nologin -u 2088 nginx && chown -R nginx.nginx /apps/nginx 0.0s
=> CACHED [6/7] ADD nginx.conf /apps/nginx/conf/ 0.0s
=> CACHED [7/7] ADD frontend.tar.gz /apps/nginx/html/ 0.0s
=> exporting to docker image format 6.2s
=> => exporting layers 0.0s
=> => exporting manifest sha256:e4be0fc14dbda48ee0d1194e640d86044234c4a1503eae9ed5d675a87e91bebb 0.0s
=> => exporting config sha256:eab6615119bb3aa5ac073b0f3452ea2deb3c2e2844d72e1c2bd416c3e0112fc3 0.0s
=> => sending tarball 6.2s
unpacking harbor.idockerinaction.info/myserver/nginx-base:1.22.0 (sha256:e4be0fc14dbda48ee0d1194e640d86044234c4a1503eae9ed5d675a87e91bebb)...
Loaded image: harbor.idockerinaction.info/myserver/nginx-base:1.22.0
INFO[0000] pushing as a reduced-platform image (application/vnd.docker.distribution.manifest.v2+json, sha256:e4be0fc14dbda48ee0d1194e640d86044234c4a1503eae9ed5d675a87e91bebb)
WARN[0000] skipping verifying HTTPS certs for "harbor.idockerinaction.info"
manifest-sha256:e4be0fc14dbda48ee0d1194e640d86044234c4a1503eae9ed5d675a87e91bebb: done |++++++++++++++++++++++++++++++++++++++|
config-sha256:eab6615119bb3aa5ac073b0f3452ea2deb3c2e2844d72e1c2bd416c3e0112fc3: done |++++++++++++++++++++++++++++++++++++++|
elapsed: 0.3 s total: 5.6 Ki (18.5 KiB/s)
root@image-build:/opt/dockerfile/web/nginx/ubuntu-22.04-nginx-all-in-one#
```

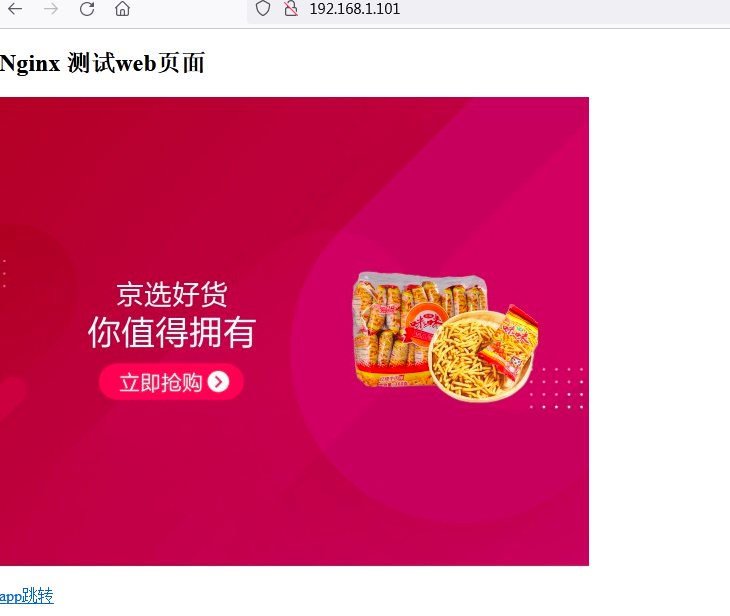
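build-command.sh hard-codes the image name and tag. The Python sketch below runs the same two steps with the tag as a parameter. It assumes nerdctl is at /usr/local/bin/nerdctl as above. `build_and_push` is a hypothetical helper. With dry_run=True (the default), it only returns the commands, so it can be tried without nerdctl installed.

```python
import subprocess
from typing import List

NERDCTL = "/usr/local/bin/nerdctl"


def build_and_push(image: str, tag: str, context: str = ".", dry_run: bool = True) -> List[List[str]]:
    """Build image:tag from `context` with nerdctl, then push it (the same steps as build-command.sh)."""
    ref = f"{image}:{tag}"
    commands = [
        [NERDCTL, "build", "-t", ref, context],
        [NERDCTL, "push", ref],
    ]
    if not dry_run:
        for cmd in commands:
            # check=True raises on the first non-zero exit, so a failed build is never pushed.
            subprocess.run(cmd, check=True)
    return commands


for cmd in build_and_push("harbor.idockerinaction.info/myserver/nginx-base", "1.22.0"):
    print(" ".join(cmd))
# /usr/local/bin/nerdctl build -t harbor.idockerinaction.info/myserver/nginx-base:1.22.0 .
# /usr/local/bin/nerdctl push harbor.idockerinaction.info/myserver/nginx-base:1.22.0
```

The stop-on-first-failure behavior is the main design difference. The original bash script has no `set -e`, so it attempts the push even if the build fails.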

4.2.3.5 Run a test container from the image

```
root@k8s-master1:~# nerdctl run -d -p 80:80 harbor.idockerinaction.info/myserver/nginx-base:1.22.0
root@k8s-master1:~# nerdctl exec -it b57c90036652ba407866cb64f2ce9f50b4a8ddb035acc17b77a0cb6e2d794380 -- bash
```

浙公网安备 33010602011771号
