Data Warehouse Project 01: Preparation

1. Project overview

2. Preparing software and hardware resources

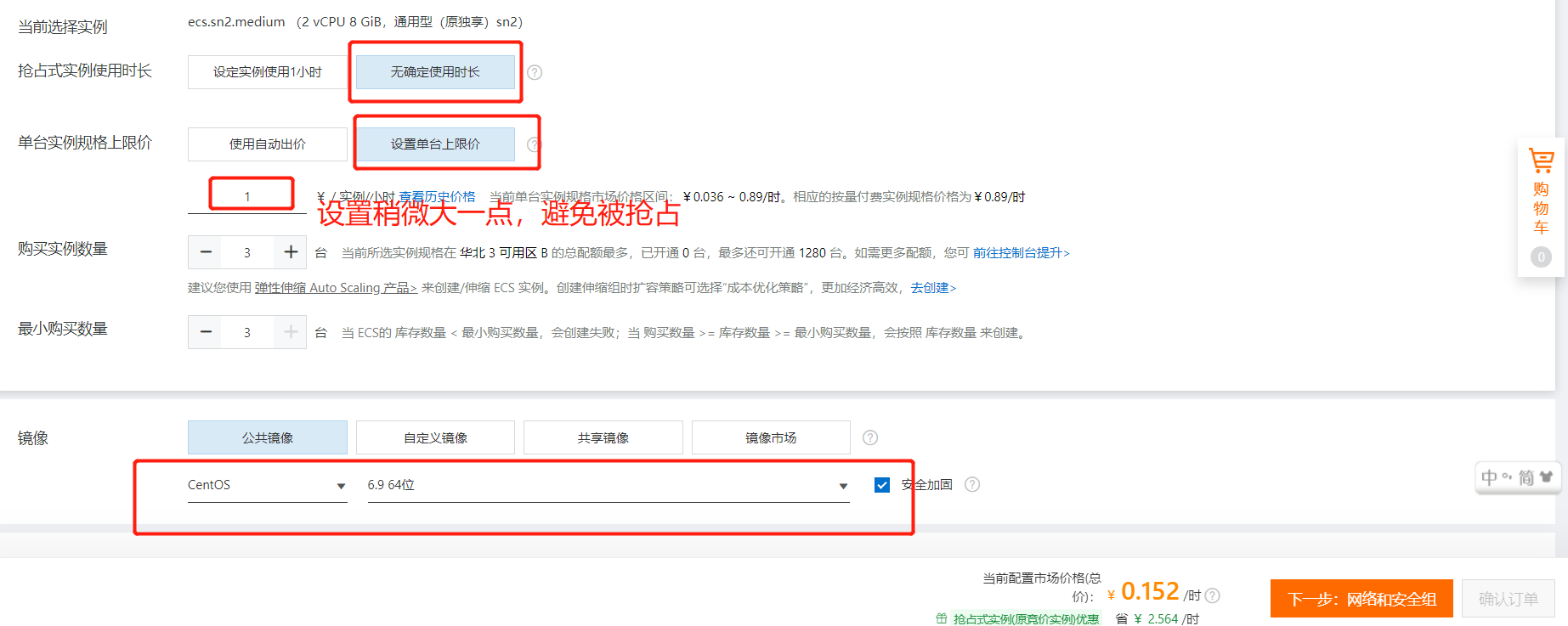

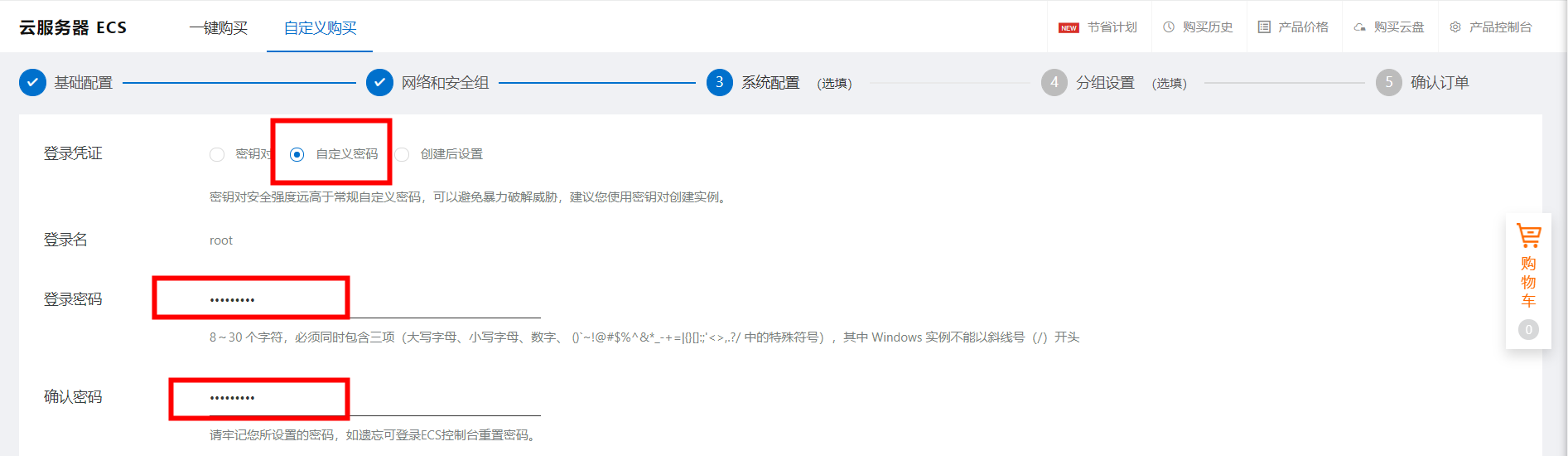

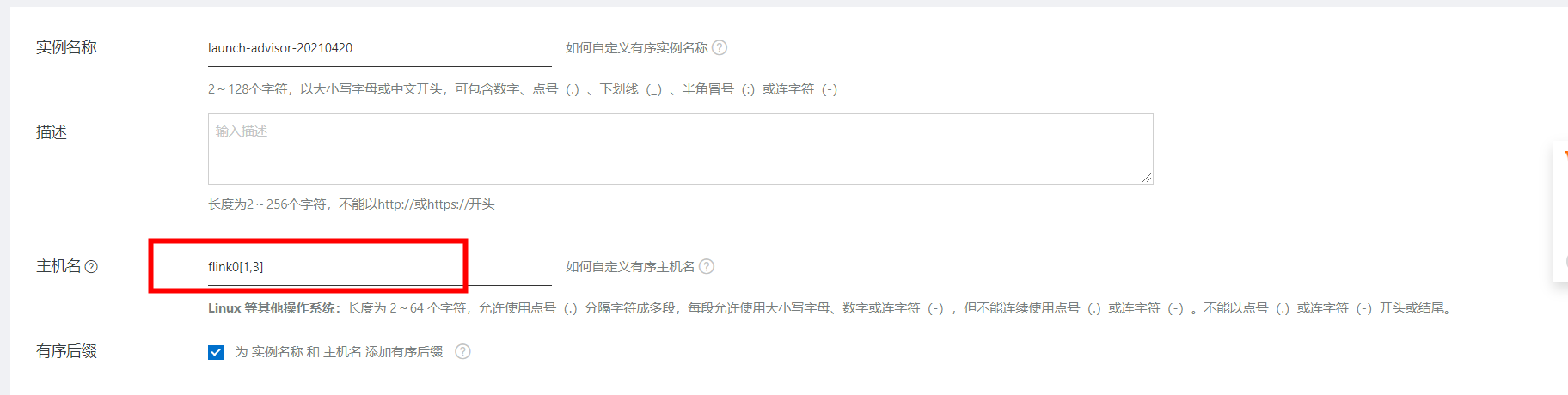

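Hardware: Alibaba Cloud instances. Since this is only for learning and testing, preemptible instances are a reasonable way to save money. Buy three instances for the test cluster.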

3. hostname (run on all three hosts)

IP-to-hostname mapping

172.24.67.125 hadoop102

172.24.67.126 hadoop103

172.24.67.127 hadoop104

3.1 Change the hostname on each of the three hosts

Edit /etc/sysconfig/network

    [hadoop@hadoop102 ~]$ sudo vi /etc/sysconfig/network
    [hadoop@hadoop102 ~]$ cat /etc/sysconfig/network
    NETWORKING=yes
    HOSTNAME=hadoop102

Note: the hostname must not be followed by any trailing space.
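A trailing space after the hostname is invisible in most editors, so a quick check can help. The following is a minimal sketch and not part of the original setup: the helper name `has_trailing_space` and the scratch files are assumptions for illustration. On a real host the argument would be /etc/sysconfig/network.

```shell
#!/bin/bash
# Succeeds (exit 0) when the HOSTNAME= line in the given file ends with whitespace.
# has_trailing_space is a hypothetical helper name, not an existing tool.
has_trailing_space() {
    grep -Eq '^HOSTNAME=.*[[:space:]]$' "$1"
}

# Demonstration on scratch files instead of the real /etc/sysconfig/network.
good_file=$(mktemp)
bad_file=$(mktemp)
printf 'NETWORKING=yes\nHOSTNAME=hadoop102\n' > "$good_file"
printf 'NETWORKING=yes\nHOSTNAME=hadoop102 \n' > "$bad_file"

for f in "$good_file" "$bad_file"; do
    if has_trailing_space "$f"; then
        echo "trailing whitespace found"
    else
        echo "clean"
    fi
done
```

The first file reports clean and the second reports trailing whitespace.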

3.2 Map hostnames to IP addresses (/etc/hosts)

    [root@iZ8vbcuwqycp91wygphyglZ ~]# hostname hadoop104
    [root@iZ8vbcuwqycp91wygphyglZ ~]# cat /etc/hosts
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    172.24.67.125 hadoop102
    172.24.67.126 hadoop103
    172.24.67.127 hadoop104
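Because the same three mappings have to be added on every host, it can be convenient to add them in a way that is safe to repeat. The sketch below is one possible approach, not the method used in the original post: the function name `add_host_entry` and the `HOSTS_FILE` variable are assumptions, and the script writes to a scratch file unless `HOSTS_FILE` is set to /etc/hosts (which requires root).

```shell
#!/bin/bash
# Append "IP hostname" to a hosts file only if the hostname is not mapped yet.
# HOSTS_FILE and add_host_entry are hypothetical names used for illustration.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

add_host_entry() {
    local ip="$1" name="$2"
    # Look for the hostname as a whole field on any non-comment line.
    if ! awk -v n="$name" '
            $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }
        ' "$HOSTS_FILE"; then
        printf '%s %s\n' "$ip" "$name" >> "$HOSTS_FILE"
    fi
}

add_host_entry 172.24.67.125 hadoop102
add_host_entry 172.24.67.126 hadoop103
add_host_entry 172.24.67.127 hadoop104
# A repeated call leaves the file unchanged.
add_host_entry 172.24.67.125 hadoop102

cat "$HOSTS_FILE"
```

Matching on whole fields rather than substrings keeps a name such as hadoop10 from being mistaken for hadoop102.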

4. Create the hadoop user

    [root@hadoop104 ~]# useradd hadoop
    [root@hadoop104 ~]# passwd hadoop
    Changing password for user hadoop.
    New password:
    BAD PASSWORD: it is WAY too short
    BAD PASSWORD: is a palindrome
    Retype new password:
    passwd: all authentication tokens updated successfully.

The password here is a single dot (.), which is why passwd prints the warnings above.

5. Passwordless SSH login (only needs to be run on the master node)

Passwordless login for the root user

    [root@hadoop103 ~]# ssh-keygen -t rsa
    Generating public/private rsa key pair.
    Enter file in which to save the key (/root/.ssh/id_rsa):
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /root/.ssh/id_rsa.
    Your public key has been saved in /root/.ssh/id_rsa.pub.
    The key fingerprint is:
    8e:bb:73:c3:f0:13:c3:f0:d7:d9:38:5f:ff:0c:8f:71 root@hadoop103
    The key's randomart image is:
    +--[ RSA 2048]----+
    | |
    | |
    | |
    | . |
    | +S . + |
    | .o= . = . .|
    | .+.+ oo.E|
    | ..* .B.|
    | o+ o . =|
    +-----------------+
    [root@hadoop103 ~]# ssh-copy-id hadoop102
    The authenticity of host 'hadoop102 (172.24.67.125)' can't be established.
    RSA key fingerprint is 08:7d:5c:25:1f:26:9e:03:29:3a:12:01:1e:f9:a0:6d.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added 'hadoop102,172.24.67.125' (RSA) to the list of known hosts.
    root@hadoop102's password:
    Now try logging into the machine, with "ssh 'hadoop102'", and check in:
    .ssh/authorized_keys
    to make sure we haven't added extra keys that you weren't expecting.
    [root@hadoop103 ~]# ssh-copy-id hadoop103
    The authenticity of host 'hadoop103 (172.24.67.126)' can't be established.
    RSA key fingerprint is 6e:0c:a4:d5:55:33:0b:e3:d0:e7:d9:c2:f8:5a:06:c5.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added 'hadoop103,172.24.67.126' (RSA) to the list of known hosts.
    root@hadoop103's password:
    Now try logging into the machine, with "ssh 'hadoop103'", and check in:
    .ssh/authorized_keys
    to make sure we haven't added extra keys that you weren't expecting.
    [root@hadoop103 ~]# ssh-copy-id hadoop104
    The authenticity of host 'hadoop104 (172.24.67.127)' can't be established.
    RSA key fingerprint is b0:bb:4b:2e:bf:14:22:95:80:c1:7f:f7:cd:c6:5e:03.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added 'hadoop104,172.24.67.127' (RSA) to the list of known hosts.
    root@hadoop104's password:
    Now try logging into the machine, with "ssh 'hadoop104'", and check in:
    .ssh/authorized_keys
    to make sure we haven't added extra keys that you weren't expecting.

Passwordless login for the hadoop user

    [root@hadoop103 ~]# su - hadoop
    [hadoop@hadoop103 ~]$ ssh-keygen -t rsa
    Generating public/private rsa key pair.
    Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):
    Created directory '/home/hadoop/.ssh'.
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /home/hadoop/.ssh/id_rsa.
    Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.
    The key fingerprint is:
    c2:72:b2:ee:40:0e:44:80:e0:9f:ab:a3:82:51:59:14 hadoop@hadoop103
    The key's randomart image is:
    +--[ RSA 2048]----+
    |*. .E. |
    |+ . |
    | o o |
    |. + .. |
    |...oo + S |
    |.+ .= . |
    |..o.. |
    |o..o |
    |+...o |
    +-----------------+
    [hadoop@hadoop103 ~]$ ssh-copy-id hadoop103
    The authenticity of host 'hadoop103 (172.24.67.126)' can't be established.
    RSA key fingerprint is 6e:0c:a4:d5:55:33:0b:e3:d0:e7:d9:c2:f8:5a:06:c5.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added 'hadoop103,172.24.67.126' (RSA) to the list of known hosts.
    hadoop@hadoop103's password:
    Permission denied, please try again.
    hadoop@hadoop103's password:
    Now try logging into the machine, with "ssh 'hadoop103'", and check in:
    .ssh/authorized_keys
    to make sure we haven't added extra keys that you weren't expecting.
    [hadoop@hadoop103 ~]$ ssh-copy-id hadoop102
    The authenticity of host 'hadoop102 (172.24.67.125)' can't be established.
    RSA key fingerprint is 08:7d:5c:25:1f:26:9e:03:29:3a:12:01:1e:f9:a0:6d.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added 'hadoop102,172.24.67.125' (RSA) to the list of known hosts.
    hadoop@hadoop102's password:
    Now try logging into the machine, with "ssh 'hadoop102'", and check in:
    .ssh/authorized_keys
    to make sure we haven't added extra keys that you weren't expecting.
    [hadoop@hadoop103 ~]$ ssh-copy-id hadoop104
    The authenticity of host 'hadoop104 (172.24.67.127)' can't be established.
    RSA key fingerprint is b0:bb:4b:2e:bf:14:22:95:80:c1:7f:f7:cd:c6:5e:03.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added 'hadoop104,172.24.67.127' (RSA) to the list of known hosts.
    hadoop@hadoop104's password:
    Now try logging into the machine, with "ssh 'hadoop104'", and check in:
    .ssh/authorized_keys
    to make sure we haven't added extra keys that you weren't expecting.
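After copying the keys, it is worth confirming that every host really accepts key-based login, for root and for hadoop alike. The sketch below is an illustration rather than a step from the original post: the function name `check_passwordless` is an assumption. It relies on the standard OpenSSH option `BatchMode=yes`, which makes ssh fail instead of prompting for a password.

```shell
#!/bin/bash
# Report, per host, whether a key-based login works without any prompt.
# check_passwordless is a hypothetical helper name.
check_passwordless() {
    local host failed=0
    for host in "$@"; do
        # BatchMode=yes disables password prompts; ConnectTimeout bounds the wait.
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true 2>/dev/null; then
            echo "$host: ok"
        else
            echo "$host: FAILED"
            failed=1
        fi
    done
    return "$failed"
}

# Usage on the master node, once as root and once as hadoop:
#   check_passwordless hadoop102 hadoop103 hadoop104
```

The function returns a non-zero status if any host fails, so it can gate later steps in a setup script.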

6. Writing the distribution script

Write the script

    [root@hadoop103 bin]# cat xsync
    #!/bin/bash
    # Require exactly one argument
    if (($# != 1))
    then
        echo "Received $# arguments; please pass exactly one file"
        exit 1
    fi
    # Resolve the absolute directory and the name of the file to distribute
    dirpath=$(cd -P "$(dirname "$1")" && pwd)
    filename=$(basename "$1")
    echo "File to distribute: $dirpath/$filename"
    # Get the current user
    user=$(whoami)
    # Distribute to hadoop102 through hadoop104
    for ((i = 102; i <= 104; i++))
    do
        echo "----------------hadoop$i-----------------"
        rsync -rvlt "$dirpath/$filename" "$user@hadoop$i:$dirpath"
    done
    [root@hadoop103 bin]# pwd
    /usr/local/bin
    [root@hadoop103 bin]# ll
    total 4
    -rwxrwxrwx 1 root root 454 Nov 9 17:44 xsync

Note: set the mode to 777, or run chmod +x xsync, so that other users can run the script too.
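The part of xsync that is easiest to get wrong is turning whatever path the user typed into an absolute path, so that the file lands in the same directory on every host. The following sketch isolates that step. The function name `resolve_path` and the scratch directory are assumptions for illustration; the `cd -P`, `dirname`, and `basename` calls mirror the script above.

```shell
#!/bin/bash
# Turn a possibly relative path into "<absolute physical directory>/<file name>".
# resolve_path is a hypothetical helper name.
resolve_path() {
    local dirpath filename
    # cd -P follows symlinks, so pwd prints the physical directory.
    dirpath=$(cd -P "$(dirname "$1")" && pwd) || return 1
    filename=$(basename "$1")
    printf '%s/%s\n' "$dirpath" "$filename"
}

# Demonstration in a scratch directory.
workdir=$(mktemp -d)
mkdir -p "$workdir/conf"
touch "$workdir/conf/app.properties"
cd "$workdir/conf" || exit 1

# Both spellings resolve to the same absolute path.
resolve_path app.properties
resolve_path ../conf/app.properties
```

In the rsync call, `-r` recurses into directories, `-v` prints what is transferred, `-l` copies symlinks as symlinks, and `-t` preserves modification times.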

7. Writing the cluster-wide command script

    [root@hadoop103 bin]# pwd
    /usr/local/bin
    [root@hadoop103 bin]# cat xcall
    #!/bin/bash
    # Require at least one argument
    if (($# == 0))
    then
        echo "Please pass a command to run"
        exit 1
    fi
    echo "Command to run: $*"
    # Run the command on hadoop102 through hadoop104
    for ((i = 102; i <= 104; i++))
    do
        echo "----------------hadoop$i-----------------"
        ssh "hadoop$i" "$@"
    done
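How xcall forwards its arguments matters once a command contains spaces. The sketch below shows the local difference between an unquoted `$@` and a quoted `"$@"`; the function names are assumptions made up for the demonstration.

```shell
#!/bin/bash
# Print how many arguments were received.
count_args() { echo "$#"; }

# Hypothetical wrappers that forward their arguments in the two possible ways.
forward_unquoted() { count_args $@; }    # each argument is split again on whitespace
forward_quoted()   { count_args "$@"; }  # each argument is passed through unchanged

forward_unquoted mkdir "/opt/my dir"   # prints 3
forward_quoted   mkdir "/opt/my dir"   # prints 2
```

Note that ssh joins its command arguments with spaces and hands the result to the remote shell, so an argument that contains spaces still needs its own quoting to survive on the remote side, for example `xcall mkdir "'/opt/my dir'"`.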

8. Create directories and upload the required packages

Create the directories

    [root@hadoop103 opt]# xcall mkdir /opt/soft /opt/module
    Command to run: mkdir /opt/soft /opt/module
    ----------------hadoop102-----------------
    ----------------hadoop103-----------------
    ----------------hadoop104-----------------
    [root@hadoop103 opt]# ll
    total 8
    drwxr-xr-x 2 root root 4096 Nov 9 22:04 module
    drwxr-xr-x 2 root root 4096 Nov 9 22:04 soft
    [root@hadoop103 opt]# xcall chown -R hadoop:hadoop /opt/soft/ /opt/module/
    Command to run: chown hadoop:hadoop /opt/soft/ /opt/module/
    ----------------hadoop102-----------------
    ----------------hadoop103-----------------
    ----------------hadoop104-----------------
    [root@hadoop103 opt]# ll
    total 8
    drwxr-xr-x 2 hadoop hadoop 4096 Nov 9 22:04 module
    drwxr-xr-x 2 hadoop hadoop 4096 Nov 9 22:04 soft
    [root@hadoop103 opt]# pwd
    /opt

Upload the files

    [hadoop@hadoop103 opt]$ cd soft/
    [hadoop@hadoop103 soft]$ ls
    [hadoop@hadoop103 soft]$ ll
    total 380340
    -rw-rw-r-- 1 hadoop hadoop 171966464 Nov 9 22:11 hadoop-2.7.2.tar.gz
    -rw-rw-r-- 1 hadoop hadoop 31981568 Nov 9 22:11 hadoop-lzo-master.zip
    -rw-rw-r-- 1 hadoop hadoop 185515842 Nov 9 22:11 jdk-8u144-linux-x64.tar.gz

xcall chown -R hadoop:hadoop /opt/soft/ /opt/module/

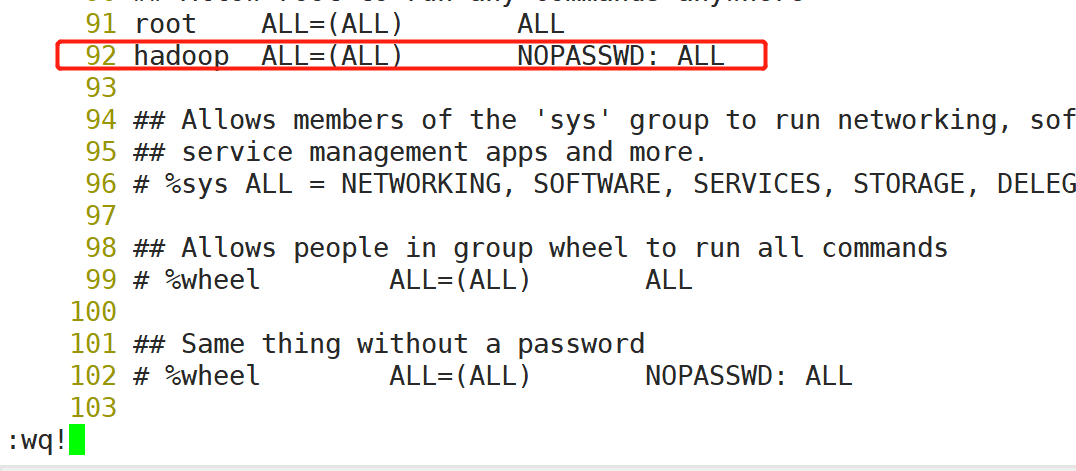

9. sudoers configuration, and .bashrc configuration for non-login shells

vi /etc/sudoers
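The post does not show what was added to /etc/sudoers. Since a broken sudoers file can lock sudo out on every host it is distributed to, a syntax check before distribution is a sensible precaution. The sketch below assumes a typical passwordless entry for the hadoop user on a test cluster; that entry is an assumption, not taken from the original. It uses `visudo -c -f`, which checks the syntax of the named file without opening an editor.

```shell
#!/bin/bash
# ASSUMPTION: this entry is a common choice for a throwaway test cluster;
# the original post does not show the actual line that was added.
candidate=$(mktemp)
printf '%s\n' 'hadoop ALL=(ALL) NOPASSWD: ALL' > "$candidate"

# visudo -c runs a check only; -f points it at the candidate file
# instead of the live /etc/sudoers.
if command -v visudo >/dev/null 2>&1; then
    visudo -c -f "$candidate" || echo "syntax check failed, do not distribute"
else
    echo "visudo not found, skipping syntax check"
fi
```

On a real host, editing through `visudo` itself rather than `vi` gives the same check automatically on save.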

Distribute the file

    [root@hadoop103 ~]# xsync /etc/sudoers
    File to distribute: /etc/sudoers
    ----------------hadoop102-----------------
    sending incremental file list
    sudoers
    sent 825 bytes received 67 bytes 1784.00 bytes/sec
    total size is 3761 speedup is 4.22
    ----------------hadoop103-----------------
    sending incremental file list
    sent 30 bytes received 12 bytes 84.00 bytes/sec
    total size is 3761 speedup is 89.55
    ----------------hadoop104-----------------
    sending incremental file list
    sudoers
    sent 825 bytes received 67 bytes 1784.00 bytes/sec
    total size is 3761 speedup is 4.22

Switch to the hadoop user

.bashrc configuration

    [hadoop@hadoop103 ~]$ pwd
    /home/hadoop
    [hadoop@hadoop103 ~]$ vi .bashrc
    [hadoop@hadoop103 ~]$ tail -1 .bashrc
    source /etc/profile
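The section heading ties this change to non-login shells: a command run as `ssh host command`, which is what xcall does, does not start a login shell, so /etc/profile is not read on its own, and sourcing it from .bashrc is the workaround used here. The sketch below adds the line without duplicating it on repeated runs. The function name `ensure_line` and the scratch file are assumptions; on a real host the target would be ~/.bashrc.

```shell
#!/bin/bash
# Append a line to a file only if that exact line is not already present.
# ensure_line is a hypothetical helper name.
ensure_line() {
    local line="$1" file="$2"
    # -x matches whole lines, -F treats the pattern as a fixed string.
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Demonstration on a scratch file instead of the real ~/.bashrc.
rc_file=$(mktemp)
ensure_line 'source /etc/profile' "$rc_file"
ensure_line 'source /etc/profile' "$rc_file"   # second call changes nothing

cat "$rc_file"
```

Whether a given bash build reads ~/.bashrc for ssh commands depends on how the distribution compiled it, so it is worth confirming with something like `xcall 'echo $PATH'` after distributing the file.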

Distribute the file

    [hadoop@hadoop103 ~]$ xsync .bashrc
    File to distribute: /home/hadoop/.bashrc
    ----------------hadoop102-----------------
    sending incremental file list
    .bashrc
    sent 217 bytes received 37 bytes 508.00 bytes/sec
    total size is 144 speedup is 0.57
    ----------------hadoop103-----------------
    sending incremental file list
    sent 30 bytes received 12 bytes 84.00 bytes/sec
    total size is 144 speedup is 3.43
    ----------------hadoop104-----------------
    sending incremental file list
    .bashrc
    sent 217 bytes received 37 bytes 169.33 bytes/sec
    total size is 144 speedup is 0.57

