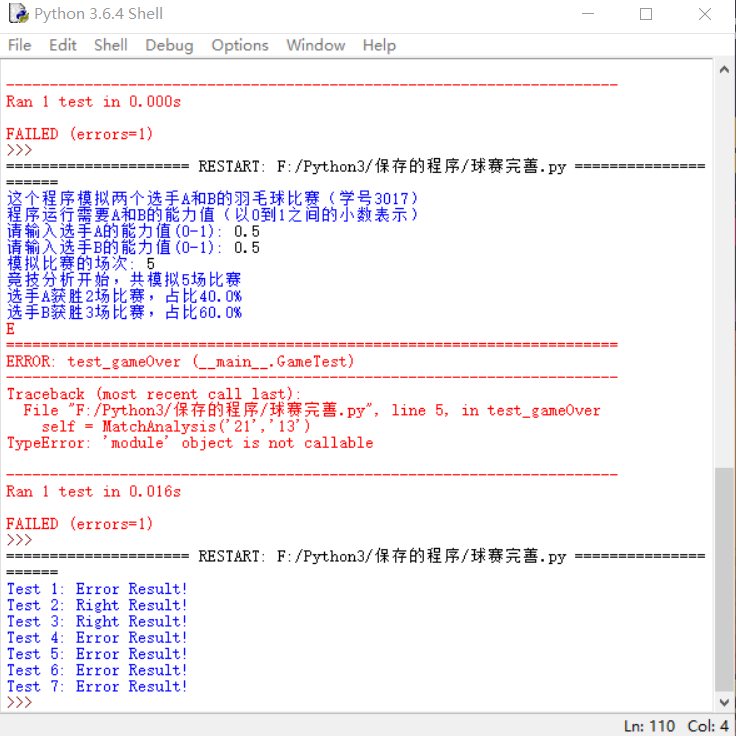

Testing the match program:

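    def gameOver(N, z, y):
        # N is presumably the game number; z and y are the two sides' scores.
        # NOTE: as written, the first two conditions can never hold, because
        # z == 20 and y == 20 forces z - y == 0.
        if z - y == 2 and z == 20 and y == 20:
            return True
        elif y - z == 2 and z == 20 and y == 20:
            return True
        elif z == 29 and y == 29:
            # Also unreachable: at 29-29 the difference is 0, so this branch
            # falls through and the function returns None.
            if z - y == 1 or y - z == 1:
                return True
        else:
            return False

    def Test():
        try:
            N = [1, 2, 3, 4, 5, 5, 5]
            z = [13, 19, 20, 21, 14, 17, 15]
            y = [15, 18, 20, 23, 16, 16, 0]
            result = ["True", "False", "False", "True", "True", "True", "True"]
            for i in range(len(N)):
                # Expected values are stored as strings, so compare against str().
                if str(gameOver(N[i], z[i], y[i])) == result[i]:
                    print("Test {}: Right Result!".format(i + 1))
                else:
                    print("Test {}: Error Result!".format(i + 1))
        except Exception as e:
            print("Error:", e)

    Test()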

Result:

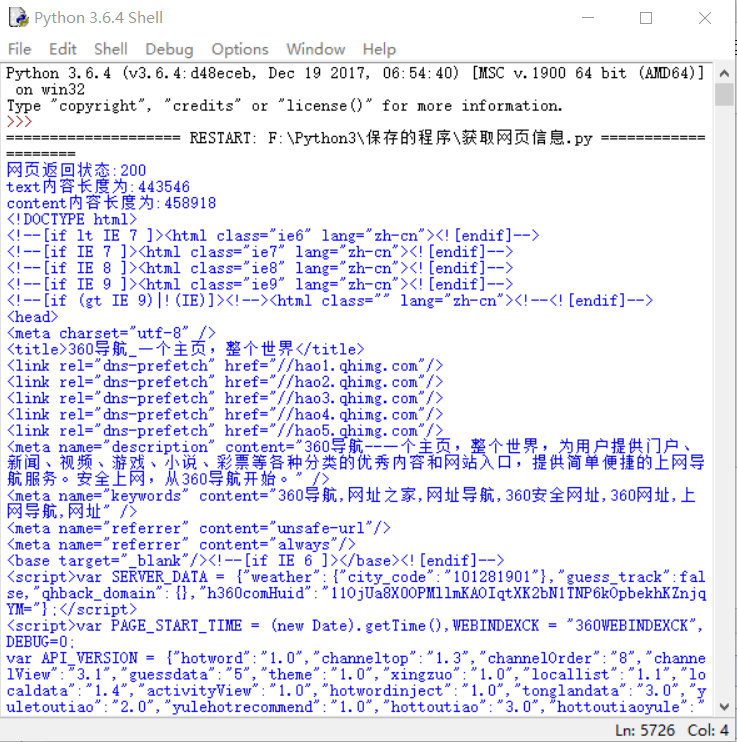
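The test above compares `str(...)` values, so a function that falls through and returns `None` is only reported as a generic error. A minimal table-driven sketch that compares booleans directly might look like the following. Note that `game_over` here is a hypothetical stand-in that assumes a "first to 21, two-point lead, capped at 30" rule; it is not the rule of the program under test, and `run_cases` is likewise an illustrative helper.

```python
def game_over(a, b):
    """Hypothetical rule (an assumption): first to 21 with a two-point lead, capped at 30."""
    hi, lo = max(a, b), min(a, b)
    if hi == 30:
        return True
    return hi >= 21 and hi - lo >= 2


def run_cases(predicate, cases):
    """Run predicate(*args) for each (args, expected) pair and collect mismatches."""
    failures = []
    for idx, (args, expected) in enumerate(cases, start=1):
        actual = predicate(*args)
        # "is not" also catches a None return where False was expected.
        if actual is not expected:
            failures.append((idx, args, expected, actual))
    return failures


cases = [
    ((21, 19), True),
    ((20, 20), False),
    ((22, 20), True),
    ((21, 20), False),
    ((30, 29), True),
]
failures = run_cases(game_over, cases)
print("failures:", failures)
```

Returning the list of mismatches, rather than printing inside the loop, makes it easy to see which inputs failed and what value came back.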

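Use the requests library's get() to access a website 20 times, and report the status code along with the lengths of the page content returned by the text and content attributes.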

Program:

    import requests

    def getHTMLText(url):
        try:
            r = requests.get(url, timeout=30)
            r.raise_for_status()  # raise for non-2xx responses
            r.encoding = 'utf-8'
            print("Status code: {}".format(r.status_code))
            print("Length of text: {}".format(len(r.text)))
            print("Length of content: {}".format(len(r.content)))
            return r.text
        except requests.RequestException:
            # Network errors and bad status codes yield an empty string.
            return ""

    url = "https://hao.360.com"
    print(getHTMLText(url))

Output (only part is shown because the text is too long):

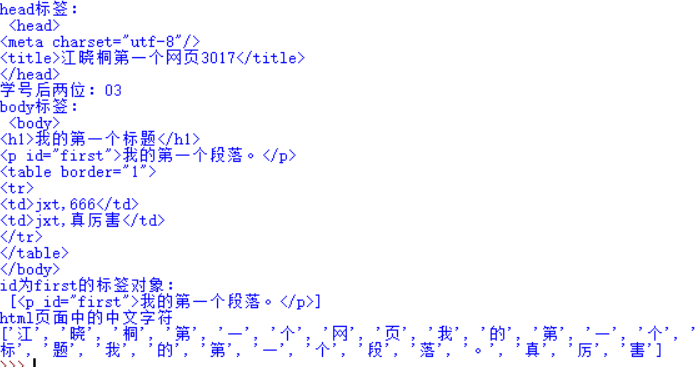
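The task statement asks for 20 requests. A minimal sketch of that loop is shown below. To keep it runnable offline, the HTTP call is passed in as a function; `fetch_stats`, `FakeResponse`, and `fake_fetch` are hypothetical names introduced for illustration only.

```python
from collections import namedtuple


def fetch_stats(fetch, url, times=20):
    """Call fetch(url) `times` times; collect (status, len(text), len(content))."""
    rows = []
    for _ in range(times):
        r = fetch(url)
        rows.append((r.status_code, len(r.text), len(r.content)))
    return rows


# Hypothetical stand-in for a requests.Response, so the sketch needs no network.
FakeResponse = namedtuple("FakeResponse", "status_code text content")


def fake_fetch(url):
    body = "<html>你好</html>"
    # text is a str (counted in characters); content is bytes (counted in bytes).
    return FakeResponse(200, body, body.encode("utf-8"))


rows = fetch_stats(fake_fetch, "https://hao.360.com")
print(len(rows), rows[0])
```

With the real library, the same helper could be called as `fetch_stats(lambda u: requests.get(u, timeout=30), url)`. The two lengths differ whenever the page contains non-ASCII characters, because `text` is decoded text and `content` is the raw bytes.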

Create a simple HTML page

    <!DOCTYPE html>
    <html>
    <head>
    <meta charset="utf-8">
    <title>江晓桐第一个网页3017</title>
    </head>
    <body>

    <h1>我的第一个标题</h1>
    <p id="first">我的第一个段落。</p>
    <table border="1">
    <tr>
    <td>jxt,666</td>
    <td>jxt,真厉害</td>
    </tr>
    </table>

    </body>
    </html>

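Rendered page:

Use the HTML page you created to complete the following tasks:

a. Print the contents of the head tag and the last two digits of your student ID

b. Get the contents of the body tag

c. Get the tag object whose id is first

d. Get and print the Chinese characters in the HTML page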

Code:

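    from bs4 import BeautifulSoup
    import re

    # Name the parser explicitly so the result does not depend on which
    # parsers happen to be installed.
    soup = BeautifulSoup('''<!DOCTYPE html>
    <html>
    <head>
    <meta charset="utf-8">
    <title>江晓桐第一个网页3017</title>
    </head>
    <body>
    <h1>我的第一个标题</h1>
    <p id="first">我的第一个段落。</p>
    <table border="1">
    <tr>
    <td>jxt,666</td>
    <td>jxt,真厉害</td>
    </tr>
    </table>
    </body>
    </html>''', "html.parser")

    print("head tag:\n", soup.head, "\nLast two digits of student ID: 03")
    print("body tag:\n", soup.body)
    # find() returns the single tag object; find_all() would return a list.
    print("Tag object with id first:\n", soup.find(id="first"))
    st = soup.text
    # Match runs of CJK unified ideographs. The original pattern
    # [\u1100-\uFFFDh]+? also matched the letter "h" and other non-Chinese
    # characters, one character at a time.
    pp = re.findall(r'[\u4e00-\u9fff]+', st)
    print("Chinese characters in the HTML page:")
    print(pp)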

Result:

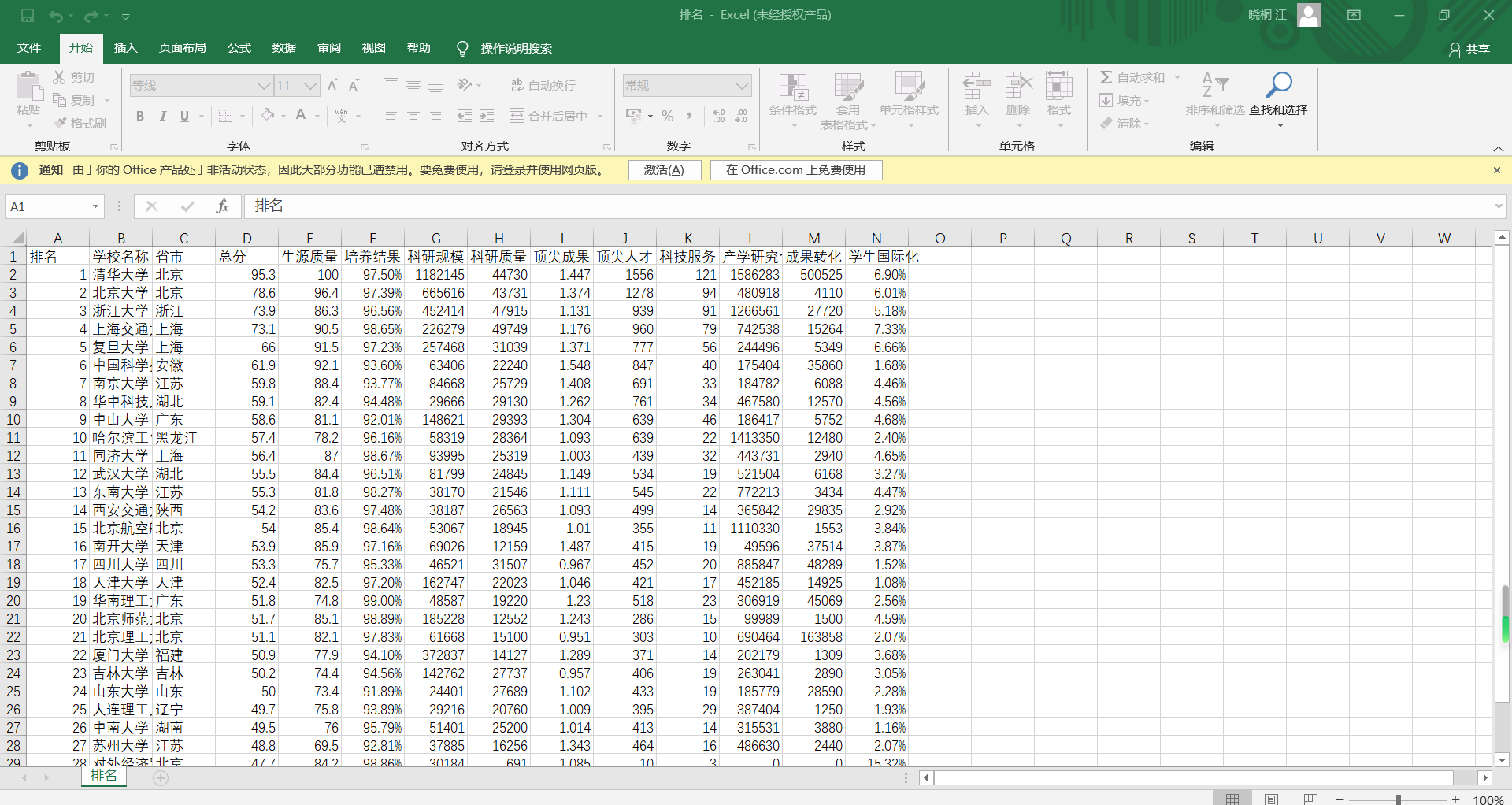
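Task d depends only on the regular expression, so it can be checked without BeautifulSoup. The sketch below assumes that "Chinese characters" means the CJK Unified Ideographs block (U+4E00 to U+9FFF); `chinese_runs` is a hypothetical helper name, and the sample string reuses text from the page above.

```python
import re

# Assumption: "Chinese characters" = CJK Unified Ideographs, U+4E00..U+9FFF.
CJK_RUN = re.compile(r"[\u4e00-\u9fff]+")


def chinese_runs(text):
    """Return each maximal run of consecutive Chinese characters in text."""
    return CJK_RUN.findall(text)


sample = "jxt,真厉害 我的第一个段落。"
print(chinese_runs(sample))  # ['真厉害', '我的第一个段落']
```

The full stop "。" is punctuation outside this block, so it is not included in the second run.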

Scrape the Chinese university ranking site (the 2018 ranking): http://www.zuihaodaxue.com/zuihaodaxuepaiming2018.html

Program:

    import csv
    import os
    import requests
    from bs4 import BeautifulSoup

    allUniv = []

    def getHTMLText(url):
        try:
            r = requests.get(url, timeout=30)
            r.raise_for_status()
            r.encoding = 'utf-8'
            return r.text
        except requests.RequestException:
            return ""

    def fillUnivList(soup):
        # Each <tr> holds one university; rows without <td> cells are skipped.
        for tr in soup.find_all('tr'):
            ltd = tr.find_all('td')
            if len(ltd) == 0:
                continue
            allUniv.append([td.string for td in ltd])

    def writercsv(save_road, num, title):
        # Append if the file exists; otherwise create it and write the header first.
        is_new = not os.path.isfile(save_road)
        with open(save_road, 'w' if is_new else 'a', newline='') as f:
            csv_write = csv.writer(f, dialect='excel')
            if is_new:
                csv_write.writerow(title)
            # Slicing avoids an IndexError when fewer than num rows were scraped.
            for u in allUniv[:num]:
                csv_write.writerow(u)

    # Column headers as they appear on the ranking page.
    title = ["排名", "学校名称", "省市", "总分", "生源质量", "培养结果", "科研规模",
             "科研质量", "顶尖成果", "顶尖人才", "科技服务", "产学研究合作", "成果转化", "学生国际化"]
    save_road = "E:\\排名.csv"

    def main():
        url = 'http://www.zuihaodaxue.com/zuihaodaxuepaiming2018.html'
        html = getHTMLText(url)
        soup = BeautifulSoup(html, "html.parser")
        fillUnivList(soup)
        writercsv(save_road, 30, title)

    main()
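The "write the header only when the file is new" behaviour of the CSV step can be tried in isolation. The following is a minimal sketch under stated assumptions: `append_rows`, the two-column header, and the sample rows are all hypothetical, and a temporary directory stands in for the real output path.

```python
import csv
import os
import tempfile


def append_rows(path, header, rows):
    """Append rows to a CSV file, writing the header only if the file is new."""
    is_new = not os.path.isfile(path)
    with open(path, "a", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        if is_new:
            writer.writerow(header)
        writer.writerows(rows)


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "ranking.csv")
    # Two separate runs: the header should appear only once.
    append_rows(path, ["rank", "name"], [[1, "A"]])
    append_rows(path, ["rank", "name"], [[2, "B"]])
    with open(path, newline="", encoding="utf-8") as f:
        written = list(csv.reader(f))

print(written)
```

Opening in append mode for both cases works because `"a"` creates the file when it does not exist, so only the header check needs the existence test.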

Result:

