Python multithreading + queue + database storage: crawling web pages filtered by keyword

Approach

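A multithreaded crawler starts from a single page, follows every link that page contains, filters the pages by keyword, and stores the matching pages in a database.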

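- Request the home page (the seed page) and get its HTML source;

- Use a regular expression or some other method to collect all absolute links and store them in a list;

- Iterate over the list and add each link to the queue;

- Multiple threads take data from the queue, one absolute link at a time, and repeat the first three steps for that link.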
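The steps above can be sketched without touching the network. This is a minimal sketch under stated assumptions, not the article's implementation: FAKE_LINKS, crawl and get_links are hypothetical names, and the link extraction of the second step is replaced by a dictionary lookup so the example runs offline. It also adds a seen set and sentinel values to stop the workers, which the steps themselves do not describe.

```python
import queue
from threading import Lock, Thread

# Hypothetical in-memory "site": URL -> absolute links found on that page.
# It stands in for fetching the page and extracting its links.
FAKE_LINKS = {
    "http://a.example/": ["http://a.example/1", "http://a.example/2"],
    "http://a.example/1": ["http://a.example/2"],
    "http://a.example/2": ["http://a.example/"],
}


def crawl(seed, get_links, worker_count=4):
    """Visit every URL reachable from seed, using worker threads and a queue."""
    url_queue = queue.Queue()
    seen = {seed}          # URLs that have been queued at least once
    seen_lock = Lock()     # guards the seen set
    url_queue.put(seed)

    def worker():
        while True:
            url = url_queue.get()      # take one URL at a time
            if url is None:            # sentinel: no more work
                url_queue.task_done()
                return
            try:
                for link in get_links(url):
                    with seen_lock:
                        if link in seen:
                            continue
                        seen.add(link)
                    url_queue.put(link)  # new link goes back onto the queue
            except Exception as exc:
                print("failed to process %s: %s" % (url, exc))
            finally:
                url_queue.task_done()

    threads = [Thread(target=worker) for _ in range(worker_count)]
    for t in threads:
        t.start()
    url_queue.join()                   # wait until every queued URL is processed
    for _ in threads:
        url_queue.put(None)            # one sentinel per worker
    for t in threads:
        t.join()
    return seen


visited = crawl("http://a.example/", lambda url: FAKE_LINKS.get(url, []))
print(sorted(visited))
```

Because each worker queues new links before marking its own URL as done, the queue's unfinished-task count cannot reach zero while work remains, so join() returns only when the whole reachable set has been visited.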

Code

Download: https://github.com/juno3550/Crawler

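- MysqlTool class: wraps MySQL connection setup and operations.

- get_page_message(): gets the required information from the current page (title, body text, and all the links it contains).

- get_html(): the thread task function. It stores the current page in the database if the page contains the keyword, puts all the links the page contains onto the queue, and repeats.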
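To make the page-parsing and keyword-filtering steps concrete, here is a small offline sketch that works on a fixed HTML string. The names parse_page, matches_keyword and SAMPLE_HTML are hypothetical, and the regular expressions are illustrative assumptions: they tolerate attributes on p tags and line breaks inside elements, which may differ from what the article's code does.

```python
import re

# Hypothetical sample page used instead of a real HTTP response
SAMPLE_HTML = """<html><head><title>Daily news</title></head>
<body><p>Top <b>stories</b> today.</p>
<a href="http://example.com/a">A</a><a href="/local">B</a></body></html>"""


def parse_page(html):
    """Return (title, text, links) extracted from an HTML string."""
    title_match = re.search(r"<title>(.*?)</title>", html, re.S)
    title = title_match.group(1).strip() if title_match else ""
    # Only absolute links (href values starting with http) are kept
    links = re.findall(r'href="(http[^"]+)"', html)
    # Join the contents of all <p> elements, then strip any nested tags
    paragraphs = re.findall(r"<p(?:\s[^>]*)?>(.*?)</p>", html, re.S)
    text = re.sub(r"<[^>]+>", "", "".join(paragraphs))
    return title, text, links


def matches_keyword(title, text, key_word):
    """A page qualifies if the keyword appears in its title or its text."""
    return key_word in title or key_word in text


title, text, links = parse_page(SAMPLE_HTML)
print(title, text, links, matches_keyword(title, text, "news"))
```

Regular expressions are enough for a demonstration like this one, but they are fragile on real-world markup; an HTML parser would be a sturdier choice if the crawler were extended.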

```python
import queue
import re
import time
import traceback
from threading import Thread, Lock

import pymysql
import requests

# Lock that guards the shared state below
lock = Lock()
url_queue = queue.Queue()
# Number of pages to store
crawl_num = 20
# URLs that have already been stored
result_urls = []
# Number of URLs taken from the queue so far
current_url_count = 0
# Keyword to filter on ("news")
key_word = "新闻"


# Wrapper around the MySQL operations
class MysqlTool():

    def __init__(self, host, port, db, user, passwd, charset="utf8"):
        self.host = host
        self.port = port
        self.db = db
        self.user = user
        self.passwd = passwd
        self.charset = charset

    def connect(self):
        '''Create the database connection and cursor.'''
        try:
            self.conn = pymysql.connect(host=self.host, port=self.port, database=self.db,
                                        user=self.user, password=self.passwd, charset=self.charset)
            self.cursor = self.conn.cursor()
        except Exception as e:
            print(e)

    def close(self):
        '''Close the cursor and the database connection.'''
        try:
            self.cursor.close()
            self.conn.close()
        except Exception as e:
            print(e)

    def __edit(self, sql, args=None):
        '''Private helper: execute a statement and commit.'''
        try:
            execute_count = self.cursor.execute(sql, args)
            self.conn.commit()
        except Exception as e:
            print(e)
        else:
            return execute_count

    def insert(self, sql, args=None):
        '''Insert data.'''
        self.connect()
        self.__edit(sql, args)
        self.close()


# Get the required information for a URL
def get_page_message(url):
    try:
        # Skip images, PDFs and other links that are not HTML pages
        if ".pdf" in url or ".jpg" in url or ".jpeg" in url or ".png" in url or ".apk" in url or "microsoft" in url:
            return
        r = requests.get(url, timeout=5)
        # Source of the page
        page_content = r.text
        # Page title
        page_title = re.search(r"<title>(.+?)</title>", page_content).group(1)
        # All absolute URLs found in the page
        page_url_list = re.findall(r'href="(http.+?)"', page_content)
        # Body text of the page
        page_text = "".join(re.findall(r"<p>(.+?)</p>", page_content))
        # Strip the tags from the body text
        page_text = "".join(re.split(r"<.+?>", page_text))
        return page_url_list, page_title, page_text
    except Exception:
        print("Failed to get page information [%s]" % url)
        traceback.print_exc()


# Task function: process one URL at a time and queue the links it contains
def get_html(url_queue, lock, mysql, key_word, crawl_num, threading_no):
    global current_url_count
    try:
        while True:
            # Stop once the required number of pages has been stored
            with lock:
                if len(result_urls) >= crawl_num:
                    print("[Thread %d] stored total [%d] reached the target, worker exiting" % (threading_no, len(result_urls)))
                    return
            # Wait up to 10 s for a URL: an empty queue may only mean that
            # other threads are still fetching pages
            try:
                url = url_queue.get(timeout=10)
            except queue.Empty:
                break
            with lock:
                current_url_count += 1
                count = current_url_count
            print("[Thread %d] [%d] URLs left in the queue, now crawling URL number [%d]: %s" % (threading_no, url_queue.qsize(), count, url))
            # Skip URLs that were already stored, to avoid duplicate rows
            with lock:
                already_stored = url in result_urls
            if already_stored:
                continue
            page_message = get_page_message(url)
            if not page_message:
                continue
            page_url_list, page_title, page_text = page_message
            # Put every URL found in the page onto the queue
            for link in page_url_list:
                with lock:
                    if link not in result_urls:
                        url_queue.put(link.strip())
            # Store the page only if its title or body text contains the keyword
            if key_word in page_title or key_word in page_text:
                with lock:
                    if len(result_urls) >= crawl_num:
                        print("[Thread %d] stored total [%d] reached the target, worker exiting" % (threading_no, len(result_urls)))
                        return
                    if url in result_urls:
                        continue
                    # Values are bound as parameters, so quotes in the text need no manual escaping
                    sql = "insert into crawl_page(url, title, text) values(%s, %s, %s)"
                    mysql.insert(sql, (url, page_title, page_text))
                    result_urls.append(url)
                    print("[Thread %d] keyword [%s]: [%d] pages stored, [%d] to go, stored URL [%s]" % (threading_no, key_word, len(result_urls), crawl_num - len(result_urls), url))

        print("[Thread %d] queue is empty, worker exiting" % threading_no)

    except Exception:
        print("[Thread %d] task function failed" % threading_no)
        traceback.print_exc()


if __name__ == "__main__":
    # Seed page
    home_page_url = "https://www.163.com"
    url_queue.put(home_page_url)
    mysql = MysqlTool("127.0.0.1", 3306, "test", "root", "admin")
    t_list = []
    for i in range(50):
        t = Thread(target=get_html, args=(url_queue, lock, mysql, key_word, crawl_num, i))
        time.sleep(0.05)
        t.start()
        t_list.append(t)
    for t in t_list:
        t.join()
```
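Page titles and body text routinely contain quote characters, so it helps to let the database driver bind the values instead of assembling the SQL string by hand. The sketch below shows the pattern with the standard-library sqlite3 module as a stand-in, so it runs without a MySQL server. The table and column names crawl_page(url, title, text) come from the code above; the column types and the save_page helper are assumptions. With pymysql the placeholder is %s rather than ?.

```python
import sqlite3


def save_page(conn, url, title, text):
    """Insert one crawled page, letting the driver escape the values."""
    conn.execute(
        "INSERT INTO crawl_page (url, title, text) VALUES (?, ?, ?)",
        (url, title, text),
    )
    conn.commit()


# In-memory database; the TEXT column types are an assumption
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE crawl_page (url TEXT, title TEXT, text TEXT)")

# Both quote styles are stored unchanged
save_page(conn, "http://example.com/a", 'He said "hi"', "it's fine")
rows = conn.execute("SELECT url, title, text FROM crawl_page").fetchall()
print(rows)
```

Binding parameters also protects against SQL injection, which matters here because the stored values come from arbitrary web pages.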

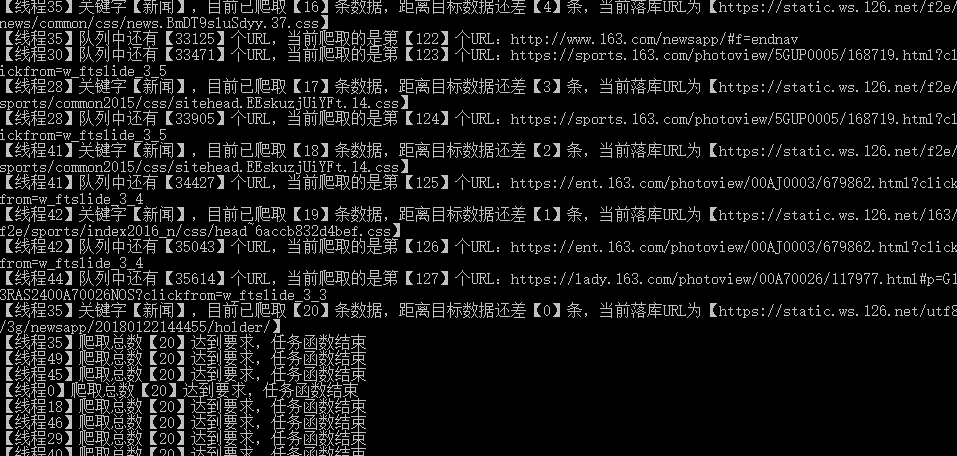

Execution result

