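20. Bilibili 薪享宏福 Notes: Chapter 5, k8s Pod Controllers

5 Pod Controllers

5.1 Controllers

Controllers are what make real self-healing possible.

5.1.1 The controller concept

Kubernetes runs a set of controllers that keep the cluster's current state in line with its desired state; together they act as the cluster's internal management center, its "central brain". For example, the ReplicaSet controller maintains the number of running Pods (if 4 Pods are desired and the node hosting one of them fails, the controller starts a replacement Pod on another node so that 4 are running again). The Node controller monitors node health and, when a node fails, runs an automated recovery process so that the cluster stays in its expected working state.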

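5.1.2 Controller reconciliation

How it works:

1. Desired state: the manifest sets replicas: 8, so 8 Pods are expected to run

2. Actual state: the number of Pods that are really running

Loop: a. check the current state -> b. compare it with the desired state in the manifest -> c. adjust the deployed resources to match the manifest -> d. check the current state again -> ......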
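The loop above can be sketched in a few lines of Python. This is only an illustration of the check / compare / adjust cycle, not how the Kubernetes controllers are actually implemented; the function `reconcile` and the pod names are hypothetical.

```python
from itertools import count


def reconcile(desired, running, new_names):
    """One reconciliation pass: compare desired with actual, then act."""
    pods = list(running)
    actions = []
    diff = desired - len(pods)
    for _ in range(max(diff, 0)):       # too few pods: create the missing ones
        name = next(new_names)
        pods.append(name)
        actions.append(f"create {name}")
    for _ in range(max(-diff, 0)):      # too many pods: remove the newest first
        actions.append(f"delete {pods.pop()}")
    return pods, actions


names = (f"pod-{i}" for i in count(1))      # hypothetical pod names
pods, first_actions = reconcile(8, [], names)
pods.remove("pod-3")                        # simulate losing one pod
pods, repair_actions = reconcile(8, pods, names)
print(len(pods), repair_actions)
```

After one pod is lost, the next pass creates exactly one replacement, which is the behaviour the steps describe.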

5.1.3 Types of Pod controllers

1. ReplicationController and ReplicaSet

2. Deployment

3. DaemonSet

4. StatefulSet

5. Job/CronJob

6. Horizontal Pod Autoscaling

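5.2 Pod Controllers

The guardians of Pods.

5.2.1 ReplicationController and ReplicaSet

(1) ReplicationController

A ReplicationController (RC) keeps the number of replicas of a containerized application at the user-defined count: if a Pod exits abnormally, a new Pod is created automatically to replace it, and if there are more Pods than desired, the extras are removed automatically.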

    [root@k8s-master01 5]# cat 1.rc.yaml
    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: rc-demo
    spec:
      replicas: 3
      selector:
        app: rc-demo
      template:
        metadata:
          labels:
            app: rc-demo
        spec:
          containers:
          - name: rc-demo-container
            image: myapp:v1.0
            env:
            - name: GET_HOSTS_FROM
              value: dns
            - name: zhangsan
              value: "123"
            ports:
            - containerPort: 80

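1. API group/version: core group, v1

2. Kind: ReplicationController

3. Metadata: the name of the ReplicationController

4. Spec: replicas sets the number of Pod replicas; selector is the label selector (a Pod matches when the selector's labels are a subset of the Pod's labels); template is the Pod template: Pod metadata, Pod labels (must satisfy the selector), and the Pod spec with its containers: container name, image and version, env (container environment variables), and container port 80

# Side note: in older versions, a ReplicationController whose selector did not match the Pod template labels was created anyway and then never matched its own Pods. Newer versions check before creation and reject a mismatch with an error (The ReplicationController "rc-demo" is invalid: spec.template.metadata.labels: Invalid value: map[string]string{"app":"rc-demo-1"}: `selector` does not match template `labels`)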

    [root@k8s-master01 5]# kubectl create -f 1.rc.yaml
    replicationcontroller/rc-demo created
    [root@k8s-master01 5]# kubectl get rc
    NAME      DESIRED   CURRENT   READY   AGE
    rc-demo   3         3         3       7s
    [root@k8s-master01 5]# kubectl get pod
    NAME            READY   STATUS    RESTARTS   AGE
    rc-demo-qxf45   1/1     Running   0          11s
    rc-demo-rjj4l   1/1     Running   0          11s
    rc-demo-zssms   1/1     Running   0          11s

    # After a pod is deleted, the rc controller starts a new one, doing its best to satisfy the desired state in the manifest
    [root@k8s-master01 5]# kubectl delete po rc-demo-qxf45
    pod "rc-demo-qxf45" deleted
    [root@k8s-master01 5]# kubectl get pod
    NAME            READY   STATUS    RESTARTS   AGE
    rc-demo-cm6zb   1/1     Running   0          3s
    rc-demo-rjj4l   1/1     Running   0          60s
    rc-demo-zssms   1/1     Running   0          60s

    # "Doing its best" is not a guarantee. For example, if the running pods already use so much node capacity
    # that no node can start another pod while the desired count is still not met, the controller keeps trying:
    # start, kill, start, kill, ... over and over

    # Compare the rc's labels with the pods' labels
    [root@k8s-master01 5]# kubectl get rc --show-labels
    NAME      DESIRED   CURRENT   READY   AGE   LABELS
    rc-demo   3         3         3       12m   app=rc-demo
    [root@k8s-master01 5]# kubectl get pod --show-labels
    NAME            READY   STATUS    RESTARTS   AGE   LABELS
    rc-demo-cm6zb   1/1     Running   0          11m   app=rc-demo
    rc-demo-rjj4l   1/1     Running   0          12m   app=rc-demo
    rc-demo-zssms   1/1     Running   0          12m   app=rc-demo

    # After adding a new label to an existing pod there are still 3 pods running,
    # which shows that the rc selector uses subset matching against pod labels
    [root@k8s-master01 5]# kubectl label pod rc-demo-cm6zb version=v1
    pod/rc-demo-cm6zb labeled
    [root@k8s-master01 5]# kubectl get pod --show-labels
    NAME            READY   STATUS    RESTARTS   AGE   LABELS
    rc-demo-cm6zb   1/1     Running   0          12m   app=rc-demo,version=v1
    rc-demo-rjj4l   1/1     Running   0          13m   app=rc-demo
    rc-demo-zssms   1/1     Running   0          13m   app=rc-demo

    # After a pod's app label is overwritten, a new pod is created,
    # which shows that the rc works to get back to the desired count
    [root@k8s-master01 5]# kubectl label pod rc-demo-rjj4l app=junnan666 --overwrite
    pod/rc-demo-rjj4l labeled
    [root@k8s-master01 5]# kubectl get pod --show-labels
    NAME            READY   STATUS    RESTARTS   AGE   LABELS
    rc-demo-cm6zb   1/1     Running   0          13m   app=rc-demo,version=v1
    rc-demo-pwfkz   1/1     Running   0          2s    app=rc-demo
    rc-demo-rjj4l   1/1     Running   0          14m   app=junnan666
    rc-demo-zssms   1/1     Running   0          14m   app=rc-demo

    # After the label is changed back, the newest matching pod is stopped. By default the older pod is assumed
    # to hold more connections and resources and is therefore more "valuable", so the newer pod is killed
    [root@k8s-master01 5]# kubectl label pod rc-demo-rjj4l app=rc-demo --overwrite
    pod/rc-demo-rjj4l labeled
    [root@k8s-master01 5]# kubectl get pod --show-labels
    NAME            READY   STATUS    RESTARTS   AGE   LABELS
    rc-demo-cm6zb   1/1     Running   0          14m   app=rc-demo,version=v1
    rc-demo-rjj4l   1/1     Running   0          14m   app=rc-demo
    rc-demo-zssms   1/1     Running   0          14m   app=rc-demo

    # Scaling pods up or down through the rc takes a single command
    # (scale-down also goes by age: pods that have been running for less time are killed first)
    [root@k8s-master01 5]# kubectl scale rc rc-demo --replicas=5
    replicationcontroller/rc-demo scaled
    [root@k8s-master01 5]# kubectl get rc
    NAME      DESIRED   CURRENT   READY   AGE
    rc-demo   5         5         5       23m
    [root@k8s-master01 5]# kubectl get pod --show-labels
    NAME            READY   STATUS    RESTARTS   AGE   LABELS
    rc-demo-cm6zb   1/1     Running   0          22m   app=rc-demo,version=v1
    rc-demo-fh89g   1/1     Running   0          2s    app=rc-demo
    rc-demo-rjj4l   1/1     Running   0          23m   app=rc-demo
    rc-demo-spbjg   1/1     Running   0          2s    app=rc-demo
    rc-demo-zssms   1/1     Running   0          23m   app=rc-demo
    [root@k8s-master01 5]# kubectl scale rc rc-demo --replicas=2
    replicationcontroller/rc-demo scaled
    [root@k8s-master01 5]# kubectl get rc
    NAME      DESIRED   CURRENT   READY   AGE
    rc-demo   2         2         2       23m
    [root@k8s-master01 5]# kubectl get pod --show-labels
    NAME            READY   STATUS    RESTARTS   AGE   LABELS
    rc-demo-cm6zb   1/1     Running   0          22m   app=rc-demo,version=v1
    rc-demo-zssms   1/1     Running   0          23m   app=rc-demo
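The subset matching seen in this experiment can be written down as a small Python sketch. It only models the equality-based rule (every key/value pair in the selector must appear among the pod's labels); the function name `selector_matches` is a hypothetical helper, not a Kubernetes API.

```python
def selector_matches(selector, pod_labels):
    """True when every selector pair is present in the pod's labels (subset rule)."""
    return all(pod_labels.get(key) == value for key, value in selector.items())


selector = {"app": "rc-demo"}

# Extra labels on the pod do not matter: the selector is still a subset.
print(selector_matches(selector, {"app": "rc-demo", "version": "v1"}))  # True
# Overwriting the app value breaks the match, so the rc no longer counts this pod.
print(selector_matches(selector, {"app": "junnan666"}))                 # False
```

This mirrors the transcript: adding version=v1 changed nothing, while overwriting app made the rc create a replacement.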

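(2) ReplicaSet

Newer versions of Kubernetes recommend ReplicaSet in place of ReplicationController. A ReplicaSet does essentially the same job as a ReplicationController under a different name; the practical difference is that ReplicaSet supports set-based selectors.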

    [root@k8s-master01 5]# cat 2.rs.yaml
    apiVersion: apps/v1
    kind: ReplicaSet
    metadata:
      name: rs-ml-demo
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: rs-ml-demo
          daemon: junnan666
      template:
        metadata:
          labels:
            app: rs-ml-demo
            daemon: junnan666
            version: v1
        spec:
          containers:
          - name: rs-ml-demo-container
            image: myapp:v1.0
            env:
            - name: GET_HOSTS_FROM
              value: dns
            ports:
            - containerPort: 80

1. API group/version: apps group, v1

2. Kind: ReplicaSet

3. Metadata: the name of the ReplicaSet

4. Spec: replicas sets the number of Pod replicas; selector is the label selector (subset matching against Pod labels), here using matchLabels; template is the Pod template: Pod metadata, Pod labels (must satisfy the selector), and the Pod spec with its containers: container name, image and version, env (container environment variables), and container port 80

    # The selector is where rc and rs differ: the rs selector supports operators
    [root@k8s-master01 5]# kubectl explain rc.spec.selector
    KIND:       ReplicationController
    VERSION:    v1

    FIELD: selector <map[string]string>

    DESCRIPTION:
        Selector is a label query over pods that should match the Replicas count.
        If Selector is empty, it is defaulted to the labels present on the Pod
        template. Label keys and values that must match in order to be controlled
        by this replication controller, if empty defaulted to labels on Pod
        template. More info:
        https://kubernetes.io/docs/concepts/overview/working-with-objects/labels/#label-selectors

    [root@k8s-master01 5]# kubectl explain rs.spec.selector
    GROUP:      apps
    KIND:       ReplicaSet
    VERSION:    v1

    FIELD: selector <LabelSelector>

    DESCRIPTION:
        Selector is a label query over pods that should match the replica count.
        Label keys and values that must match in order to be controlled by this
        replica set. It must match the pod template's labels. More info:
        https://kubernetes.io/docs/concepts/overview/working-with-objects/labels/#label-selectors
        A label selector is a label query over a set of resources. The result of
        matchLabels and matchExpressions are ANDed. An empty label selector matches
        all objects. A null label selector matches no objects.

    FIELDS:
      matchExpressions <[]LabelSelectorRequirement>
        matchExpressions is a list of label selector requirements. The requirements
        are ANDed.

      matchLabels <map[string]string>
        matchLabels is a map of {key,value} pairs. A single {key,value} in the
        matchLabels map is equivalent to an element of matchExpressions, whose key
        field is "key", the operator is "In", and the values array contains only
        "value". The requirements are ANDed.

selector.matchExpressions

Besides plain key/value pairs, the rs label selector also supports the matchExpressions field, which offers several kinds of matching. The operators currently supported are:

In: the label's value is in a given list

NotIn: the label's value is not in a given list

Exists: the label exists

DoesNotExist: the label does not exist
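The four operators can be illustrated with a short Python sketch. It is a simplified model of the documented semantics, not the Kubernetes implementation; `requirement_matches` and `label_selector_matches` are hypothetical helper names, and the selector dictionaries simply mirror the YAML field names.

```python
def requirement_matches(requirement, labels):
    """Evaluate one matchExpressions entry against a pod's labels."""
    key = requirement["key"]
    operator = requirement["operator"]
    values = requirement.get("values", [])
    if operator == "In":
        return key in labels and labels[key] in values
    if operator == "NotIn":
        # A pod that lacks the key altogether also satisfies NotIn.
        return key not in labels or labels[key] not in values
    if operator == "Exists":
        return key in labels
    if operator == "DoesNotExist":
        return key not in labels
    raise ValueError(f"unknown operator: {operator}")


def label_selector_matches(selector, labels):
    """matchLabels and matchExpressions are ANDed together."""
    for key, value in selector.get("matchLabels", {}).items():
        if labels.get(key) != value:
            return False
    return all(requirement_matches(r, labels)
               for r in selector.get("matchExpressions", []))


exists_selector = {"matchExpressions": [{"key": "app", "operator": "Exists"}]}
in_selector = {"matchExpressions": [
    {"key": "app", "operator": "In", "values": ["spring-k8s", "hahahah"]}]}

print(label_selector_matches(exists_selector, {"app": "junnan666"}))  # True
print(label_selector_matches(in_selector, {"app": "sg-k8s"}))         # False
```

With Exists only the key matters, while In also constrains the value.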

    # What is the same: like an rc, when the desired state is not met the rs automatically creates pods up to the desired replica count
    [root@k8s-master01 5]# kubectl create -f 2.rs.yaml
    replicaset.apps/rs-ml-demo created
    [root@k8s-master01 5]# kubectl get rs --show-labels
    NAME         DESIRED   CURRENT   READY   AGE   LABELS
    rs-ml-demo   3         3         3       13s   <none>
    [root@k8s-master01 5]# kubectl get pod --show-labels
    NAME               READY   STATUS    RESTARTS   AGE   LABELS
    rs-ml-demo-52mrc   1/1     Running   0          24s   app=rs-ml-demo,daemon=junnan666,version=v1
    rs-ml-demo-nwkw6   1/1     Running   0          24s   app=rs-ml-demo,daemon=junnan666,version=v1
    rs-ml-demo-vqpdx   1/1     Running   0          24s   app=rs-ml-demo,daemon=junnan666,version=v1
    [root@k8s-master01 5]# kubectl delete po rs-ml-demo-52mrc
    pod "rs-ml-demo-52mrc" deleted
    [root@k8s-master01 5]# kubectl get pod --show-labels
    NAME               READY   STATUS    RESTARTS   AGE    LABELS
    rs-ml-demo-5wdmk   1/1     Running   0          3s     app=rs-ml-demo,daemon=junnan666,version=v1
    rs-ml-demo-nwkw6   1/1     Running   0          102s   app=rs-ml-demo,daemon=junnan666,version=v1
    rs-ml-demo-vqpdx   1/1     Running   0          102s   app=rs-ml-demo,daemon=junnan666,version=v1

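What is new: selector operators (note that a label is key=value; matching on the label key and matching on the label value are two separate things)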

    [root@k8s-master01 5]# cat 3.rs.yaml
    apiVersion: apps/v1
    kind: ReplicaSet
    metadata:
      name: rs-me-exists-demo
    spec:
      selector:
        matchExpressions:
        - key: app
          operator: Exists
      template:
        metadata:
          labels:
            app: spring-k8s
        spec:
          containers:
          - name: rs-me-exists-demo-container
            image: myapp:v1.0
            ports:
            - containerPort: 80

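4. Spec: selector with matchExpressions (key app, operator Exists: a Pod matches as long as it carries a label whose key is app); template is the Pod template: Pod metadata, Pod labels (must satisfy the selector), and the Pod spec with its containers: container name, image and version, and container port 80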

    [root@k8s-master01 5]# kubectl create -f 3.rs.yaml
    replicaset.apps/rs-me-exists-demo created

    # No replica count is defined, so one pod is started by default
    [root@k8s-master01 5]# kubectl get rs --show-labels
    NAME                DESIRED   CURRENT   READY   AGE   LABELS
    rs-me-exists-demo   1         1         1       11s   <none>

    # app=spring-k8s satisfies the "has an app key" requirement. The rs-ml-demo pods created earlier satisfy it too,
    # yet they are not claimed. The rs controller applies two checks when matching:
    # 1. ownership (it looks at who the pod's parent is and only considers pods whose parent is itself),
    # 2. the labels match (so that unrelated pods are not picked up)
    [root@k8s-master01 5]# kubectl get po --show-labels
    NAME                      READY   STATUS    RESTARTS   AGE     LABELS
    rs-me-exists-demo-jhs99   1/1     Running   0          2m45s   app=spring-k8s
    rs-ml-demo-6xx2b          1/1     Running   0          13s     app=rs-ml-demo,daemon=junnan666,version=v1
    rs-ml-demo-hjpdn          1/1     Running   0          13s     app=rs-ml-demo,daemon=junnan666,version=v1
    rs-ml-demo-zg4kb          1/1     Running   0          13s     app=rs-ml-demo,daemon=junnan666,version=v1

    # Change the label's value (the key stays app): no new pod is started, which shows that the rs is still
    # managing this pod, i.e. it matches any pod that carries the app key
    [root@k8s-master01 5]# kubectl label po rs-me-exists-demo-jhs99 app=junnan666 --overwrite
    pod/rs-me-exists-demo-jhs99 labeled
    [root@k8s-master01 5]# kubectl get po --show-labels
    NAME                      READY   STATUS    RESTARTS   AGE   LABELS
    rs-me-exists-demo-jhs99   1/1     Running   0          28m   app=junnan666
    rs-ml-demo-6xx2b          1/1     Running   0          25m   app=rs-ml-demo,daemon=junnan666,version=v1
    rs-ml-demo-hjpdn          1/1     Running   0          25m   app=rs-ml-demo,daemon=junnan666,version=v1
    rs-ml-demo-zg4kb          1/1     Running   0          25m   app=rs-ml-demo,daemon=junnan666,version=v1
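The two checks (ownership first, labels second) can be sketched as follows. This is a simplified model under stated assumptions: the `controller` field is a hypothetical stand-in for the controller entry in a pod's metadata.ownerReferences, and the rule that a pod with no controller at all may be adopted is how I understand ReplicaSet behaviour rather than something shown in the transcript.

```python
def can_manage(rs_name, selector_matches, pod):
    """A ReplicaSet only considers pods it owns, or pods nobody owns, whose labels match."""
    owner = pod.get("controller")       # hypothetical field: owning controller name or None
    if owner not in (None, rs_name):
        return False                    # owned by someone else: leave it alone
    return selector_matches(pod["labels"])


def has_app_key(labels):
    """Stand-in for the selector `key: app, operator: Exists`."""
    return "app" in labels


own_pod = {"controller": "rs-me-exists-demo", "labels": {"app": "spring-k8s"}}
other_pod = {"controller": "rs-ml-demo", "labels": {"app": "rs-ml-demo"}}
orphan_pod = {"controller": None, "labels": {"app": "666"}}

for pod in (own_pod, other_pod, orphan_pod):
    print(can_manage("rs-me-exists-demo", has_app_key, pod))
```

The rs-ml-demo pods carry an app key but belong to another controller, so they are skipped even though the Exists selector would match them.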

    [root@k8s-master01 5]# cat 4.rs.yaml
    apiVersion: apps/v1
    kind: ReplicaSet
    metadata:
      name: rs-me-in-demo
    spec:
      selector:
        matchExpressions:
        - key: app
          operator: In
          values:
          - spring-k8s
          - hahahah
      template:
        metadata:
          labels:
            app: sg-k8s
        spec:
          containers:
          - name: rs-me-in-demo-container
            image: myapp:v1.0
            ports:
            - containerPort: 80

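4. Spec: selector with matchExpressions (key app, operator In: a Pod matches when the value of its app label is one of the listed values); template is the Pod template: Pod metadata, Pod labels (must satisfy the selector), and the Pod spec with its containers: container name, image and version, and container port 80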

    # The template label app: sg-k8s cannot satisfy the selector, so creation is rejected. This confirms the
    # earlier point that newer versions check the selector against the template labels at creation time
    [root@k8s-master01 5]# kubectl create -f 4.rs.yaml
    The ReplicaSet "rs-me-in-demo" is invalid: spec.template.metadata.labels: Invalid value: map[string]string{"app":"sg-k8s"}: `selector` does not match template `labels`

    # The second attempt succeeds; judging by the output below, the template label was changed to app: hahahah in between
    [root@k8s-master01 5]# kubectl create -f 4.rs.yaml
    replicaset.apps/rs-me-in-demo created
    [root@k8s-master01 5]# kubectl get rs --show-labels
    NAME                DESIRED   CURRENT   READY   AGE     LABELS
    rs-me-exists-demo   1         1         1       4h29m   <none>
    rs-me-in-demo       1         1         1       22s     <none>
    [root@k8s-master01 5]# kubectl get po --show-labels
    NAME                      READY   STATUS    RESTARTS      AGE     LABELS
    rs-me-exists-demo-jhs99   1/1     Running   2 (11m ago)   4h29m   app=junnan666
    rs-me-in-demo-z99z9       1/1     Running   0             10s     app=hahahah

    # The operator is In, so any listed value is acceptable: after the label value is changed to spring-k8s
    # the pod is still managed and the rs does not create a new pod
    [root@k8s-master01 5]# kubectl label pod rs-me-in-demo-z99z9 app=spring-k8s --overwrite
    pod/rs-me-in-demo-z99z9 labeled
    [root@k8s-master01 5]# kubectl get pod --show-labels
    NAME                      READY   STATUS    RESTARTS      AGE     LABELS
    rs-me-exists-demo-jhs99   1/1     Running   2 (14m ago)   4h32m   app=junnan666
    rs-me-in-demo-z99z9       1/1     Running   0             3m16s   app=spring-k8s

    # When the pod's app value is changed to something outside the listed values, the rs creates a new pod
    # with a matching label, and the relabelled pod, now an orphan that no longer matches, is deleted.
    # (A plausible explanation for the deletion, not verified here: rs-me-exists-demo, whose selector is
    # "app Exists", adopts the orphan and then removes it as a surplus replica.)
    [root@k8s-master01 5]# kubectl label pod rs-me-in-demo-z99z9 app=666 --overwrite
    pod/rs-me-in-demo-z99z9 labeled
    [root@k8s-master01 5]# kubectl get pod --show-labels
    NAME                      READY   STATUS              RESTARTS      AGE     LABELS
    rs-me-exists-demo-jhs99   1/1     Running             2 (16m ago)   4h34m   app=junnan666
    rs-me-in-demo-r7svv       0/1     ContainerCreating   0             1s      app=hahahah
    rs-me-in-demo-z99z9       1/1     Terminating         0             5m18s   app=666
    [root@k8s-master01 5]# kubectl get pod --show-labels
    NAME                      READY   STATUS              RESTARTS      AGE     LABELS
    rs-me-exists-demo-jhs99   1/1     Running             2 (16m ago)   4h34m   app=junnan666
    rs-me-in-demo-r7svv       0/1     ContainerCreating   0             6s      app=hahahah
    [root@k8s-master01 5]# kubectl get pod --show-labels
    NAME                      READY   STATUS    RESTARTS      AGE     LABELS
    rs-me-exists-demo-jhs99   1/1     Running   2 (16m ago)   4h34m   app=junnan666
    rs-me-in-demo-r7svv       1/1     Running   0             11s     app=hahahah

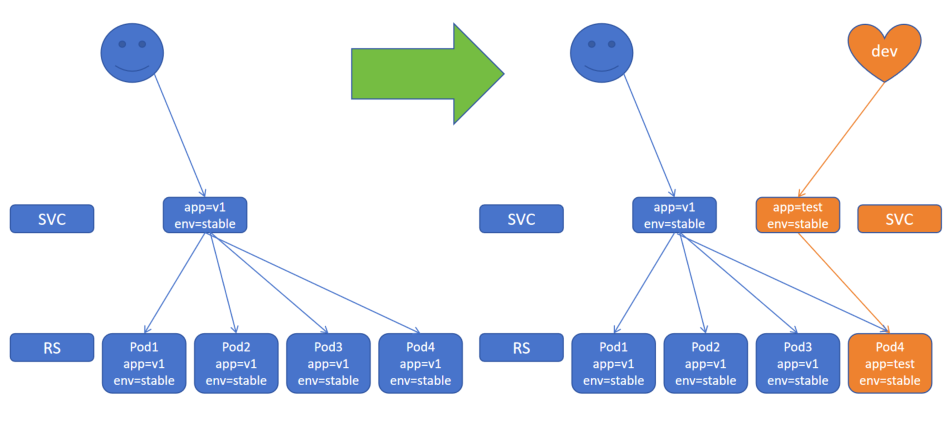

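1. On the left, users reach the svc, which load-balances across the backend pods selected by label

2. On the right, when developers need to test, they could create a new svc and relabel a pod so that the new svc routes to it for code testing. (A tempting idea, but do not do it: 1. if not all of the pod's labels are changed, the original svc still routes to it, and a performance-heavy test would hurt the user experience; 2. if all the labels are changed, the rs controller creates a new Pod.)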

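5.2.2 Deployment

(1) Deployment use cases

A Deployment provides a declarative way to define Pods and ReplicaSets, and replaces the older ReplicationController as a more convenient way to manage applications.

Typical use cases:

1. Define a Deployment to create Pods and a ReplicaSet

2. Rolling upgrades and rollbacks

3. Scaling out and in

4. Pausing and resuming a Deployment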

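(2) Declarative vs. imperative

A declarative statement describes the result: it expresses intent rather than the process for achieving it. In Kubernetes terms: "there should be a ReplicaSet with three Pods".

An imperative statement is a command. Declarative is passive, whereas imperative is active and direct: "create a ReplicaSet with three Pods".

Declarative vs. imperative, using apply and replace as the example

How the resource is replaced:

kubectl replace: replaces the existing resource's configuration completely with the new one. The new configuration overwrites every field and property of the existing resource, including fields the file does not specify, so the whole resource is replaced

kubectl apply: updates the existing resource's configuration partially. Based on the supplied file or arguments, it updates only the parts that differ from the new configuration and does not overwrite the whole resource

Field-level updates:

kubectl replace: because it is a complete replacement, all fields and properties are overwritten, whether or not the new configuration specifies them

kubectl apply: only the fields and properties that differ are updated; unspecified fields are left untouched

Partial updates:

kubectl replace: does not support partial updates; it replaces the whole resource configuration

kubectl apply: supports partial updates; only the parts that changed in the new configuration are updated, and unspecified parts are left untouched

Interaction with other configuration:

kubectl replace: ignores the state of other resource configuration and replaces the resource's configuration directly

kubectl apply: can be combined with -f or -k to read several resource configurations from a file or directory and update them according to the current state of the resources in the cluster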
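The difference can be illustrated with plain dictionaries. This is a deliberately simplified model: real `kubectl apply` performs a three-way merge that also consults the last-applied configuration, and the server fills in many more defaults than the single one modelled here. The names `apply_manifest`, `replace_manifest` and `DEFAULTS` are hypothetical.

```python
import copy

DEFAULTS = {"spec": {"replicas": 1}}    # assumption: the only default modelled here


def deep_merge(base, patch):
    """Return base with patch merged on top, recursing into nested dicts."""
    merged = copy.deepcopy(base)
    for key, value in patch.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


def apply_manifest(live, manifest):
    """Partial update: fields missing from the manifest keep their live values."""
    return deep_merge(live, manifest)


def replace_manifest(live, manifest):
    """Full replacement: the live object is ignored, missing fields fall back to defaults."""
    return deep_merge(DEFAULTS, manifest)


live = {"spec": {"replicas": 5, "image": "myapp:v1.0"}}     # scaled to 5 by hand
after_apply = apply_manifest(live, {"spec": {"image": "myapp:v2.0"}})
after_replace = replace_manifest(after_apply, {"spec": {"image": "myapp:v3.0"}})
print(after_apply)
print(after_replace)
```

A manifest that omits replicas keeps the live replica count under apply, while replace ends up with the default of 1.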

(3) Deployment hands-on demo

    [root@k8s-master01 5]# cat 5.deploy.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: myapp-deploy
      name: myapp-deploy
    spec:
      selector:
        matchLabels:
          app: myapp-deploy
      template:
        metadata:
          labels:
            app: myapp-deploy
        spec:
          containers:
          - image: myapp:v1.0
            name: myapp

1. API group/version: apps group, v1

2. Kind: Deployment

3. Metadata: the Deployment's labels and the Deployment's name

4. Spec: selector with matchLabels; Pod template: Pod metadata, Pod labels (must satisfy the Deployment's selector: subset, exact match, or operators); Pod spec with its containers: image and container name

    # Build the myapp v3.0, v4.0, v5.0 and v6.0 images in advance. With this method only the version in
    # index.html needs to change; then distribute the image to the other nodes and load it there
    [root@k8s-node01 ~]# cat index.html
    Hello MyApp | Version: v3 | <a href="hostname.html">Pod Name</a>
    [root@k8s-node01 ~]# cat Dockerfile
    FROM myapp:v1.0
    COPY ./index.html /usr/share/nginx/html/index.html
    [root@k8s-node01 ~]# docker build -t myapp:v3.0 .
    [root@k8s-node01 ~]# docker images
    REPOSITORY   TAG    IMAGE ID       CREATED         SIZE
    myapp        v3.0   73f1a41f5529   3 minutes ago   15.5MB
    myapp        v2.0   93ddbdcd983a   7 days ago      15.5MB
    myapp        v1.0   d4a5e0eaa84f   7 years ago     15.5MB
    ..........
    [root@k8s-node01 ~]# docker save myapp:v3.0 -o myapp_v3.0.tar.gz
    [root@k8s-node01 ~]# scp myapp_v3.0.tar.gz root@m1:/root
    root@m1's password:
    myapp_v3.0.tar.gz    100%   15MB  87.5MB/s   00:00
    [root@k8s-node01 ~]# scp myapp_v3.0.tar.gz root@n2:/root
    root@n2's password:
    myapp_v3.0.tar.gz    100%   15MB  98.6MB/s   00:00
    [root@k8s-master01 ~]# docker load -i myapp_v3.0.tar.gz
    e4a4a3e6c0f7: Loading layer [==================================================>]  4.096kB/4.096kB
    Loaded image: myapp:v3.0
    [root@k8s-node02 ~]# docker load -i myapp_v3.0.tar.gz
    e4a4a3e6c0f7: Loading layer [==================================================>]  4.096kB/4.096kB
    Loaded image: myapp:v3.0

    [root@k8s-master01 5]# kubectl apply -f 5.deploy.yaml
    deployment.apps/myapp-deploy created
    [root@k8s-master01 5]# kubectl get deployment
    NAME           READY   UP-TO-DATE   AVAILABLE   AGE
    myapp-deploy   1/1     1            1           6s
    [root@k8s-master01 5]# kubectl get pod
    NAME                            READY   STATUS    RESTARTS   AGE
    myapp-deploy-6c95d44959-hwhdr   1/1     Running   0          10s

    # No replica count was defined, so it defaults to 1
    [root@k8s-master01 5]# kubectl get deployment myapp-deploy -o yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      annotations:
        deployment.kubernetes.io/revision: "1"
        kubectl.kubernetes.io/last-applied-configuration: |
          {"apiVersion":"apps/v1","kind":"Deployment","metadata":{"annotations":{},"labels":{"app":"myapp-deploy"},"name":"myapp-deploy","namespace":"default"},"spec":{"selector":{"matchLabels":{"app":"myapp-deploy"}},"template":{"metadata":{"labels":{"app":"myapp-deploy"}},"spec":{"containers":[{"image":"myapp:v1.0","name":"myapp"}]}}}}
      creationTimestamp: "2025-06-06T02:32:42Z"
      generation: 1
      labels:
        app: myapp-deploy
      name: myapp-deploy
      namespace: default
      resourceVersion: "287255"
      uid: d2325957-e6b6-4c9b-82c8-b40050cd65cb
    spec:
      progressDeadlineSeconds: 600
      replicas: 1
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          app: myapp-deploy
      strategy:
        rollingUpdate:
          maxSurge: 25%
          maxUnavailable: 25%
        type: RollingUpdate
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: myapp-deploy
        spec:
          containers:
          - image: myapp:v1.0
            imagePullPolicy: IfNotPresent
            name: myapp
            resources: {}
            terminationMessagePath: /dev/termination-log
            terminationMessagePolicy: File
          dnsPolicy: ClusterFirst
          restartPolicy: Always
          schedulerName: default-scheduler
          securityContext: {}
          terminationGracePeriodSeconds: 30
    status:
      availableReplicas: 1
      conditions:
      - lastTransitionTime: "2025-06-06T02:32:43Z"
        lastUpdateTime: "2025-06-06T02:32:43Z"
        message: Deployment has minimum availability.
        reason: MinimumReplicasAvailable
        status: "True"
        type: Available
      - lastTransitionTime: "2025-06-06T02:32:42Z"
        lastUpdateTime: "2025-06-06T02:32:43Z"
        message: ReplicaSet "myapp-deploy-6c95d44959" has successfully progressed.
        reason: NewReplicaSetAvailable
        status: "True"
        type: Progressing
      observedGeneration: 1
      readyReplicas: 1
      replicas: 1
      updatedReplicas: 1

    # Manually scale up the deployment
    [root@k8s-master01 5]# kubectl scale deployment myapp-deploy --replicas=5
    deployment.apps/myapp-deploy scaled
    [root@k8s-master01 5]# kubectl get deployment
    NAME           READY   UP-TO-DATE   AVAILABLE   AGE
    myapp-deploy   5/5     5            5           42m
    [root@k8s-master01 5]# kubectl get pod -o wide
    NAME                            READY   STATUS    RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES
    myapp-deploy-6c95d44959-6xkrp   1/1     Running   0          96s   10.244.85.227   k8s-node01   <none>           <none>
    myapp-deploy-6c95d44959-gshwj   1/1     Running   0          96s   10.244.85.228   k8s-node01   <none>           <none>
    myapp-deploy-6c95d44959-hwhdr   1/1     Running   0          44m   10.244.58.195   k8s-node02   <none>           <none>
    myapp-deploy-6c95d44959-wrgv7   1/1     Running   0          96s   10.244.58.196   k8s-node02   <none>           <none>
    myapp-deploy-6c95d44959-zcpkr   1/1     Running   0          96s   10.244.58.197   k8s-node02   <none>           <none>
    [root@k8s-master01 5]# curl 10.244.85.227
    Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

    # Change the myapp image version and update with apply: there are still 5 pods,
    # because only the part of the declaration that changed is updated
    [root@k8s-master01 5]# cat 5.deploy.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: myapp-deploy
      name: myapp-deploy
    spec:
      selector:
        matchLabels:
          app: myapp-deploy
      template:
        metadata:
          labels:
            app: myapp-deploy
        spec:
          containers:
          - image: myapp:v2.0
            name: myapp
    [root@k8s-master01 5]# kubectl apply -f 5.deploy.yaml
    deployment.apps/myapp-deploy configured
    [root@k8s-master01 5]# kubectl get deployment
    NAME           READY   UP-TO-DATE   AVAILABLE   AGE
    myapp-deploy   5/5     5            5           47m
    [root@k8s-master01 5]# kubectl get pod -o wide
    NAME                            READY   STATUS    RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES
    myapp-deploy-7d6fccc696-4mp8s   1/1     Running   0          12s   10.244.58.200   k8s-node02   <none>           <none>
    myapp-deploy-7d6fccc696-5kvxs   1/1     Running   0          13s   10.244.85.229   k8s-node01   <none>           <none>
    myapp-deploy-7d6fccc696-f5sfs   1/1     Running   0          14s   10.244.58.198   k8s-node02   <none>           <none>
    myapp-deploy-7d6fccc696-r4lrv   1/1     Running   0          14s   10.244.85.230   k8s-node01   <none>           <none>
    myapp-deploy-7d6fccc696-shsb6   1/1     Running   0          12s   10.244.58.199   k8s-node02   <none>           <none>
    [root@k8s-master01 5]# curl 10.244.58.200
    Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

    # Change the image version and update with replace: the deployment drops to 1 pod, because the imperative
    # replace takes everything from the file (which sets no replicas, so the default of 1 applies)
    [root@k8s-master01 5]# cat 5.deploy.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: myapp-deploy
      name: myapp-deploy
    spec:
      selector:
        matchLabels:
          app: myapp-deploy
      template:
        metadata:
          labels:
            app: myapp-deploy
        spec:
          containers:
          - image: myapp:v3.0
            name: myapp
    [root@k8s-master01 5]# kubectl replace -f 5.deploy.yaml
    deployment.apps/myapp-deploy replaced
    [root@k8s-master01 5]# kubectl get deployment
    NAME           READY   UP-TO-DATE   AVAILABLE   AGE
    myapp-deploy   1/1     1            1           49m
    [root@k8s-master01 5]# kubectl get pod -o wide
    NAME                            READY   STATUS    RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES
    myapp-deploy-84845b77d9-d89dx   1/1     Running   0          13s   10.244.85.231   k8s-node01   <none>           <none>
    [root@k8s-master01 5]# curl 10.244.85.231
    Hello MyApp | Version: v3 | <a href="hostname.html">Pod Name</a>

    # Use diff to see what would change. With the file unchanged there is no output; after the image is changed
    # to myapp:v4.0 the differences are printed, much like diff in vim: the file is compared with the live
    # object and the differing lines are marked
    [root@k8s-master01 5]# kubectl diff -f 5.deploy.yaml
    [root@k8s-master01 5]# cat 5.deploy.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: myapp-deploy
      name: myapp-deploy
    spec:
      selector:
        matchLabels:
          app: myapp-deploy
      template:
        metadata:
          labels:
            app: myapp-deploy
        spec:
          containers:
          - image: myapp:v4.0
            name: myapp
    [root@k8s-master01 5]# kubectl diff -f 5.deploy.yaml
    diff -u -N /tmp/LIVE-3449500486/apps.v1.Deployment.default.myapp-deploy /tmp/MERGED-1296198299/apps.v1.Deployment.default.myapp-deploy
    --- /tmp/LIVE-3449500486/apps.v1.Deployment.default.myapp-deploy 2025-06-06 11:28:41.712616953 +0800
    +++ /tmp/MERGED-1296198299/apps.v1.Deployment.default.myapp-deploy 2025-06-06 11:28:41.712616953 +0800
    @@ -4,7 +4,7 @@
       annotations:
         deployment.kubernetes.io/revision: "3"
       creationTimestamp: "2025-06-06T02:32:42Z"
    -  generation: 4
    +  generation: 5
       labels:
         app: myapp-deploy
       name: myapp-deploy
    @@ -30,7 +30,7 @@
             app: myapp-deploy
         spec:
           containers:
    -      - image: myapp:v3.0
    +      - image: myapp:v4.0
             imagePullPolicy: IfNotPresent
             name: myapp
             resources: {}

(4)Deployment 与 RS 的关联(滚动升级和回滚作用)

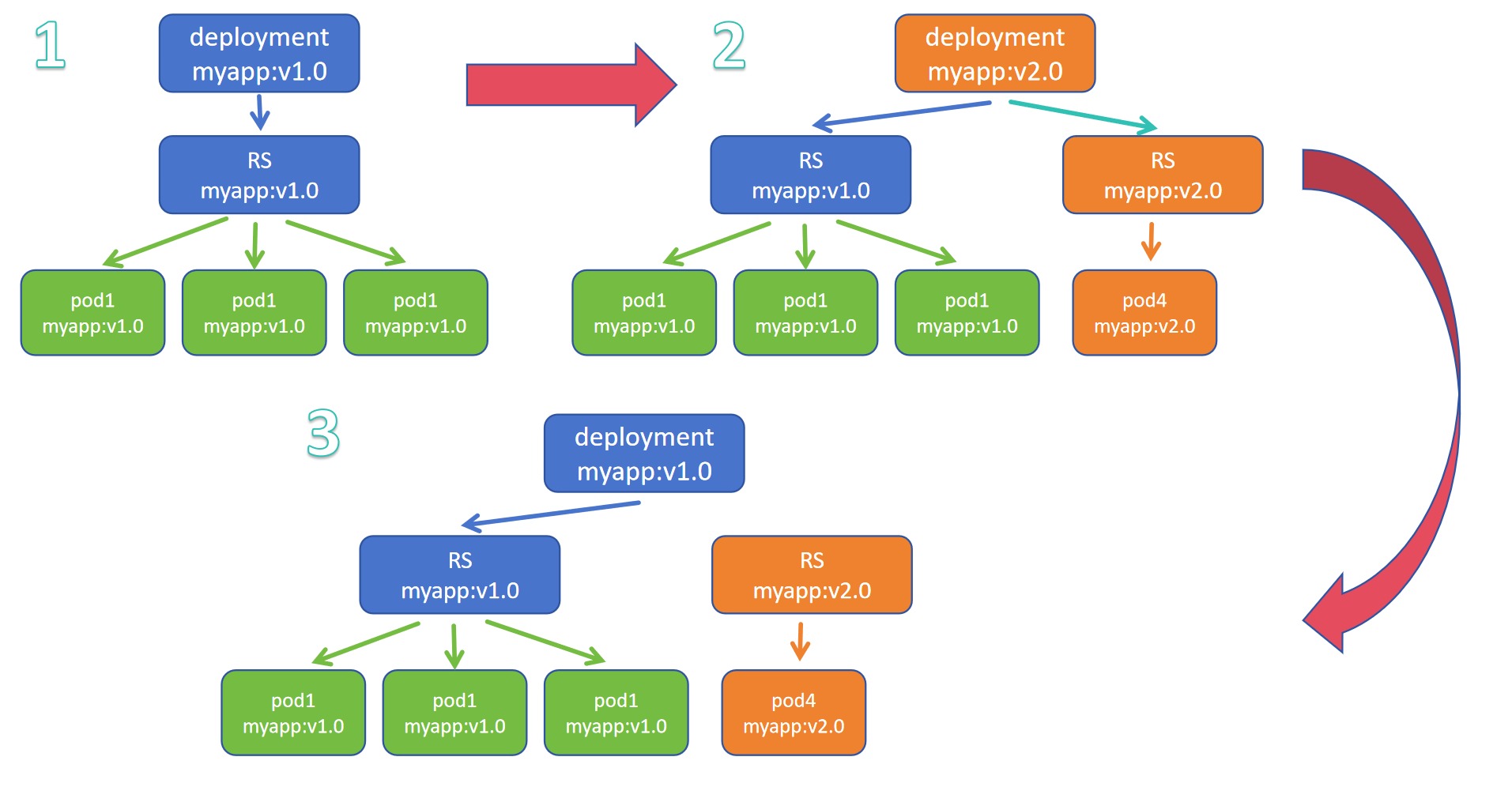

1.Deployment 控制器 副本数3 myapp:v1.0 创建成功后,先创建 ReplicaSet 副本数3 myapp:v1.0,ReplicaSet 再去按照要求创建 myapp:v1.0 的 Pod1、Pod2、Pod3

2.当开发进行升级 myapp:v2.0 时,在 Deployment 中修改 myapp:v2.0 后,Deployment 会创建一个新的 ReplicaSet 副本数1 myapp:v2.0,ReplicaSet 再去按照要求创建 myapp:v2.0 的 Pod1

3.当 myapp:v2.0 的 Pod1 启动成功并就绪后,svc 会将流量负载到其上,此时是 4个 Pod 提供负载(myapp:v1.0 的 Pod1、Pod2、Pod3 和 myapp:v2.0 的 Pod1),然后 ReplicaSet 副本数3 myapp:v1.0 会由副本数3 变为副本数2,myapp:v1.0 的 Pod3 消失,此时仍是 3 个 Pod 提供负载(myapp:v1.0 的 Pod1、Pod2 和 myapp:v2.0 的 Pod1)

4.新的 ReplicaSet 副本数变为2 myapp:v2.0,ReplicaSet 再去按照要求创建 myapp:v2.0 的 Pod2

5.当 myapp:v2.0 的 Pod2 启动成功并就绪后,svc 会将流量负载到其上,此时是 4个 Pod 提供负载(myapp:v1.0 的 Pod1、Pod2 和 myapp:v2.0 的 Pod1、Pod2),然后 ReplicaSet 副本数2 myapp:v1.0 会由副本数2 变为副本数1,myapp:v1.0 的 Pod2 消失,此时仍是 3 个 Pod 提供负载(myapp:v1.0 的 Pod1 和 myapp:v2.0 的 Pod1、Pod2 )

6.新的 ReplicaSet 副本数变为3 myapp:v2.0,ReplicaSet 再去按照要求创建 myapp:v2.0 的 Pod3

7.当 myapp:v2.0 的 Pod3 启动成功并就绪后,svc 会将流量负载到其上,此时是 4个 Pod 提供负载(myapp:v1.0 的 Pod1 和 myapp:v2.0 的 Pod1、Pod2、Pod3 ),然后 ReplicaSet 副本数1 myapp:v1.0 会由副本数1 变为副本数0,myapp:v1.0 的 Pod1 消失,此时仍是 3 个 Pod 提供负载(myapp:v2.0 的 Pod1、Pod2、Pod3 )

8.以上是 Deployment 的滚动发布,在升级过程中 持续保持最少有 3 个 Pod 在提供服务访问,不会造成访问中断,同时 Deployment 创建的 ReplicaSet 副本数3 myapp:v1.0 资源清单不会删除,可以在回滚中使用,回滚也是同样的顺序
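The eight steps can be replayed with a small simulation. It is a sketch under stated assumptions, not the Deployment controller's code: every new Pod is treated as ready immediately, and the limits are fixed at one extra Pod (max_surge=1) and zero unavailable Pods (max_unavailable=0), which is what the walkthrough describes. The function name `rolling_update` is hypothetical.

```python
def rolling_update(replicas, max_surge=1, max_unavailable=0):
    """Return the (old_pods, new_pods) counts after each scaling step."""
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Scale the new ReplicaSet up without exceeding replicas + max_surge in total.
        can_add = min(replicas - new, replicas + max_surge - (old + new))
        if can_add > 0:
            new += can_add
            steps.append((old, new))
        # Scale the old ReplicaSet down while keeping replicas - max_unavailable Pods.
        can_remove = min(old, (old + new) - (replicas - max_unavailable))
        if can_remove > 0:
            old -= can_remove
            steps.append((old, new))
        if can_add <= 0 and can_remove <= 0:
            break                       # no progress possible with these limits
    return steps


for old_count, new_count in rolling_update(3):
    print(f"v1.0 pods: {old_count}, v2.0 pods: {new_count}")
```

For 3 replicas the total alternates between 3 and 4 Pods and never drops below 3, matching steps 1 to 7.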

(5) Common Deployment commands

    [root@k8s-master01 5]# cat 6.deploy.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: deployment-demo
      name: deployment-demo
    spec:
      replicas: 5
      selector:
        matchLabels:
          app: deployment-demo
      template:
        metadata:
          labels:
            app: deployment-demo
        spec:
          containers:
          - image: myapp:v1.0
            name: deployment-demo-container

4. Spec: replica count; Pod selector with matchLabels (must match the Pod labels); Pod template: Pod metadata, Pod labels (must satisfy the Deployment's selector by subset matching); Pod spec with its containers: image and version, and container name

    [root@k8s-master01 5]# kubectl create -f 6.deploy.yaml
    deployment.apps/deployment-demo created
    [root@k8s-master01 5]# kubectl get deployment,pod -o wide
    NAME                              READY   UP-TO-DATE   AVAILABLE   AGE   CONTAINERS                  IMAGES       SELECTOR
    deployment.apps/deployment-demo   5/5     5            5           12s   deployment-demo-container   myapp:v1.0   app=deployment-demo

    NAME                                  READY   STATUS    RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES
    pod/deployment-demo-d97c6c4cf-5hn9t   1/1     Running   0          12s   10.244.85.235   k8s-node01   <none>           <none>
    pod/deployment-demo-d97c6c4cf-c9cft   1/1     Running   0          12s   10.244.58.206   k8s-node02   <none>           <none>
    pod/deployment-demo-d97c6c4cf-fztvw   1/1     Running   0          12s   10.244.58.205   k8s-node02   <none>           <none>
    pod/deployment-demo-d97c6c4cf-qflvn   1/1     Running   0          12s   10.244.85.234   k8s-node01   <none>           <none>
    pod/deployment-demo-d97c6c4cf-vkksg   1/1     Running   0          12s   10.244.58.207   k8s-node02   <none>           <none>
    [root@k8s-master01 5]# curl 10.244.85.235
    Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
    [root@k8s-master01 5]# cat 6.deploy.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: deployment-demo
      name: deployment-demo
    spec:
      replicas: 5
      selector:
        matchLabels:
          app: deployment-demo
      template:
        metadata:
          labels:
            app: deployment-demo
        spec:
          containers:
          - image: myapp:v2.0
            name: deployment-demo-container

    # After the image is bumped, creating again fails: create does not compare the file with the live object,
    # it simply tries to create it, and because the deployment already exists the call returns an error
    [root@k8s-master01 5]# kubectl create -f 6.deploy.yaml
    Error from server (AlreadyExists): error when creating "6.deploy.yaml": deployments.apps "deployment-demo" already exists

    # Delete the resources described by the manifest file
    [root@k8s-master01 5]# kubectl delete -f 6.deploy.yaml
    deployment.apps "deployment-demo" deleted
    [root@k8s-master01 5]# kubectl get deployment,pod
    No resources found in default namespace.

    # Create with apply; after the file is modified apply still works, because it compares the file with
    # the live object and changes only the modified part
    [root@k8s-master01 5]# kubectl apply -f 6.deploy.yaml
    deployment.apps/deployment-demo created
    [root@k8s-master01 5]# kubectl get deployment,pod -o wide
    NAME                              READY   UP-TO-DATE   AVAILABLE   AGE   CONTAINERS                  IMAGES       SELECTOR
    deployment.apps/deployment-demo   5/5     5            5           61s   deployment-demo-container   myapp:v2.0   app=deployment-demo

    NAME                                   READY   STATUS    RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES
    pod/deployment-demo-7bd59969bd-fqsvp   1/1     Running   0          61s   10.244.58.209   k8s-node02   <none>           <none>
    pod/deployment-demo-7bd59969bd-gvltd   1/1     Running   0          61s   10.244.85.237   k8s-node01   <none>           <none>
    pod/deployment-demo-7bd59969bd-l2fdp   1/1     Running   0          61s   10.244.85.236   k8s-node01   <none>           <none>
    pod/deployment-demo-7bd59969bd-vgtfc   1/1     Running   0          61s   10.244.58.210   k8s-node02   <none>           <none>
    pod/deployment-demo-7bd59969bd-x569b   1/1     Running   0          61s   10.244.58.208   k8s-node02   <none>           <none>
    [root@k8s-master01 5]# curl 10.244.58.209
    Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>
    [root@k8s-master01 5]# cat 6.deploy.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: deployment-demo
      name: deployment-demo
    spec:
      replicas: 5
      selector:
        matchLabels:
          app: deployment-demo
      template:
        metadata:
          labels:
            app: deployment-demo
        spec:
          containers:
          - image: myapp:v3.0
            name: deployment-demo-container
    [root@k8s-master01 5]# kubectl apply -f 6.deploy.yaml
    deployment.apps/deployment-demo configured
    [root@k8s-master01 5]# kubectl get deployment,pod -o wide
    NAME                              READY   UP-TO-DATE   AVAILABLE   AGE   CONTAINERS                  IMAGES       SELECTOR
    deployment.apps/deployment-demo   5/5     5            5           92s   deployment-demo-container   myapp:v3.0   app=deployment-demo

    NAME                                   READY   STATUS    RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES
    pod/deployment-demo-6db758d985-5qm58   1/1     Running   0          9s    10.244.85.239   k8s-node01   <none>           <none>
    pod/deployment-demo-6db758d985-9g7qm   1/1     Running   0          9s    10.244.58.211   k8s-node02   <none>           <none>
    pod/deployment-demo-6db758d985-9xjwt   1/1     Running   0          8s    10.244.58.212   k8s-node02   <none>           <none>
    pod/deployment-demo-6db758d985-g8nzw   1/1     Running   0          7s    10.244.58.213   k8s-node02   <none>           <none>
    pod/deployment-demo-6db758d985-m9bgk   1/1     Running   0          9s    10.244.85.238   k8s-node01   <none>           <none>
    [root@k8s-master01 5]# curl 10.244.85.239
    Hello MyApp | Version: v3 | <a href="hostname.html">Pod Name</a>

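kubectl create, apply, replace

1. create: creates a resource object

-f: creates from a file. If the object described by the file already exists, resubmitting the file is rejected (AlreadyExists) and nothing is applied, even if the file's content has changed

2. apply: creates or modifies a resource object

-f: works from a file. If the file differs from the live object, the object's properties are updated one by one as the file specifies (partial update)

3. replace: replaces an existing resource object

-f: works from a file. If the file differs from the live object, the object's configuration is replaced wholesale by the file (replacement)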

    # Scale replicas up or down
    [root@k8s-master01 5]# kubectl scale deployment deployment-demo --replicas=3

    # Let k8s scale automatically according to the current load.
    # Autoscale the deployment named deployment-demo: at least 10 pods, at most 15 pods, scaling out when CPU
    # reaches 80%. (The minimum exists because traffic is low in quiet periods, yet a sudden burst could kill
    # the pods before scale-out catches up and interrupt the service. The maximum exists because a code bug or
    # a malicious attack could otherwise drive endless scale-out and waste a large amount of resources.)
    [root@k8s-master01 5]# kubectl autoscale deployment deployment-demo --min=10 --max=15 --cpu-percent=80

    # A performance-monitoring add-on must be installed; it exposes the measured CPU and memory usage through
    # an API, and HPA scales based on the real usage data it reads from that API
    [root@k8s-master01 5]# kubectl top pod
    error: Metrics API not available

    # Change the image by giving the resource type (deployment), the deployment name and the container name
    [root@k8s-master01 5]# kubectl get deployment,pod -o wide
    NAME                              READY   UP-TO-DATE   AVAILABLE   AGE   CONTAINERS                  IMAGES       SELECTOR
    deployment.apps/deployment-demo   3/3     3            3           25h   deployment-demo-container   myapp:v2.0   app=deployment-demo

    NAME                                   READY   STATUS    RESTARTS   AGE     IP              NODE         NOMINATED NODE   READINESS GATES
    pod/deployment-demo-7bd59969bd-85pcp   1/1     Running   0          8m22s   10.244.85.202   k8s-node01   <none>           <none>
    pod/deployment-demo-7bd59969bd-8xzpm   1/1     Running   0          8m21s   10.244.58.246   k8s-node02   <none>           <none>
    pod/deployment-demo-7bd59969bd-c8j2v   1/1     Running   0          8m20s   10.244.58.250   k8s-node02   <none>           <none>
    [root@k8s-master01 5]# curl 10.244.85.202
    Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>
    [root@k8s-master01 5]# kubectl set image deployment.apps/deployment-demo deployment-demo-container=myapp:v3.0
    deployment.apps/deployment-demo image updated
    [root@k8s-master01 5]# kubectl get deployment,pod -o wide
    NAME                              READY   UP-TO-DATE   AVAILABLE   AGE   CONTAINERS                  IMAGES       SELECTOR
    deployment.apps/deployment-demo   3/3     3            3           25h   deployment-demo-container   myapp:v3.0   app=deployment-demo

    NAME                                   READY   STATUS    RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES
    pod/deployment-demo-6db758d985-9hmrn   1/1     Running   0          6s    10.244.58.251   k8s-node02   <none>           <none>
    pod/deployment-demo-6db758d985-tc8vz   1/1     Running   0          8s    10.244.58.249   k8s-node02   <none>           <none>
    pod/deployment-demo-6db758d985-wnxrm   1/1     Running   0          9s    10.244.85.203   k8s-node01   <none>           <none>
    [root@k8s-master01 5]# curl 10.244.58.251
    Hello MyApp | Version: v3 | <a href="hostname.html">Pod Name</a>

    # Verify the rolling update: v2 responses start to appear, become the majority as the rollout
    # progresses, and in the end every response is v2
    [root@k8s-master01 5]# kubectl create service clusterip deployment-demo --tcp=80:80
    service/deployment-demo created
    [root@k8s-master01 5]# kubectl get svc
    NAME              TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
    deployment-demo   ClusterIP   10.14.252.163   <none>        80/TCP    7s
    kubernetes        ClusterIP   10.0.0.1        <none>        443/TCP   10d
    [root@k8s-master01 5]# curl 10.14.252.163
    Hello MyApp | Version: v3 | <a href="hostname.html">Pod Name</a>
    [root@k8s-master01 5]# kubectl set image deployment deployment-demo deployment-demo-container=myapp:v2.0
    deployment.apps/deployment-demo image updated
    [root@k8s-master01 5]# while true; do curl 10.14.252.163;done
    Hello MyApp | Version: v3 | <a href="hostname.html">Pod Name</a>
    Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>
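How the autoscaler turns a CPU reading into a replica count can be sketched with the scaling formula given in the Kubernetes HPA documentation: desired = ceil(current replicas x current metric / target metric), clamped to the min/max bounds. The sketch below is a simplification; the real controller also applies a tolerance band and stabilization windows, which are left out. The function name `desired_replicas` is hypothetical, and the numbers reuse --min=10 --max=15 --cpu-percent=80 from the command above.

```python
import math


def desired_replicas(current_replicas, current_cpu_percent,
                     target_cpu_percent=80, min_replicas=10, max_replicas=15):
    """Replica count the HPA formula asks for, clamped to the configured bounds."""
    raw = math.ceil(current_replicas * current_cpu_percent / target_cpu_percent)
    return max(min_replicas, min(max_replicas, raw))


print(desired_replicas(10, 100))   # 13: ten pods at 100% CPU need ceil(12.5) pods
print(desired_replicas(10, 200))   # 15: the formula asks for 25, capped by --max
print(desired_replicas(10, 20))    # 10: the formula asks for 3, raised to --min
```

The clamping is what the minimum and maximum in the comment above are for: the floor absorbs sudden bursts, the ceiling bounds runaway scale-out.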

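(6) Deployment update strategy

A Deployment can guarantee that only a limited number of Pods are down during an upgrade. By default, it ensures that at least the desired number of Pods minus one are up (at most one unavailable)

A Deployment can also guarantee that only a limited number of Pods above the desired count are created. By default, it ensures that at most one Pod more than the desired number is up (at most one new-version Pod extra)

In future Kubernetes versions the defaults change from 1-1 to 25%-25% (and that future has already arrived)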

    [root@k8s-master01 5]# kubectl get deployment
    NAME              READY   UP-TO-DATE   AVAILABLE   AGE
    deployment-demo   3/3     3            3           25h
    [root@k8s-master01 5]# kubectl get deployment deployment-demo -o yaml
    ..........
    spec:
      .........
      strategy:
        rollingUpdate:
          maxSurge: 25%
          maxUnavailable: 25%
        type: RollingUpdate
    ..........

spec.strategy.rollingUpdate: the rolling update strategy

spec.strategy.rollingUpdate.maxSurge: 25% means at most 25% more Pods than desired

spec.strategy.rollingUpdate.maxUnavailable: 25% means at most 25% fewer Pods than desired

    [root@k8s-master01 5]# kubectl explain deployment.spec.strategy.type
    GROUP:      apps
    KIND:       Deployment
    VERSION:    v1

    FIELD: type <string>

    DESCRIPTION:
        Type of deployment. Can be "Recreate" or "RollingUpdate". Default is
        RollingUpdate.

        Possible enum values:
         - `"Recreate"` Kill all existing pods before creating new ones.
         - `"RollingUpdate"` Replace the old ReplicaSets by new one using rolling
        update i.e gradually scale down the old ReplicaSets and scale up the new
        one.

Recreate: rebuild everything; for cases where a break in access is acceptable

RollingUpdate: rolling update, the default

maxSurge: how many Pods above the replica count are allowed; it can be given in two ways: 1. an absolute number 2. a percentage

maxUnavailable: the maximum number of Pods that may be unavailable
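A percentage has to be turned into a whole number of Pods before it can be used. The sketch below follows the rounding described in the Kubernetes Deployment documentation: maxSurge rounds up and maxUnavailable rounds down. Setting unavailable to 1 when both resolve to 0 is my understanding of the controller's behaviour and should be read as an assumption. The helper names `resolve` and `rolling_limits` are hypothetical.

```python
def resolve(value, replicas, round_up):
    """Turn an absolute number or a string such as '25%' into a Pod count."""
    if isinstance(value, int):
        return value
    percent = int(value.rstrip("%"))
    scaled = replicas * percent
    # Integer arithmetic avoids floating-point surprises: ceil for surge, floor otherwise.
    return -(-scaled // 100) if round_up else scaled // 100


def rolling_limits(replicas, max_surge="25%", max_unavailable="25%"):
    surge = resolve(max_surge, replicas, round_up=True)
    unavailable = resolve(max_unavailable, replicas, round_up=False)
    if surge == 0 and unavailable == 0:
        unavailable = 1     # assumption: otherwise the rollout could never make progress
    return surge, unavailable


print(rolling_limits(3))    # (1, 0): 25% of 3 is 0.75, rounded up and down
print(rolling_limits(10))   # (3, 2): 25% of 10 is 2.5
```

For the 3-replica deployment-demo the 25%/25% defaults therefore work out to one extra Pod and zero unavailable Pods.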

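(7) Deployment canary release

The name "canary deployment" is said to come from the traditional practice of 17th-century British miners, who used canaries as gas detectors. Canaries are very sensitive to mine gas: even an extremely small amount in the air makes a canary stop singing, and once the concentration passes a certain level the canary dies while humans still notice nothing. With the crude mining equipment of the time, miners took a canary down the shaft on every trip as a "gas indicator" so they could evacuate in an emergency.

The core idea of a canary release is to test the new version of the software on a small share of users or traffic in the real environment while most users or traffic stay on the old version. Testing and monitoring the new version live, within a limited scope, exposes potential problems early and reduces the impact on the whole system.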

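1. The Deployment for myapp:v1.0 creates the ReplicaSet for myapp:v1.0, and that ReplicaSet creates the myapp:v1.0 Pods pod1, pod2 and pod3

2. When the image in the Deployment is changed to myapp:v2.0, the Deployment creates a new ReplicaSet for myapp:v2.0

3. The myapp:v2.0 ReplicaSet creates the myapp:v2.0 Pod pod4

If the update succeeds:

1) pod4 receives traffic as well; this is the canary release. Once pod4 is up and healthy, the myapp:v1.0 Pod pod3 is removed and the myapp:v2.0 Pod pod5 is created, and so on until the update is complete

If the update fails:

1) Traffic to pod4 is stopped (the change is rolled back) and the myapp:v1.0 Pods keep serving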
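
A small sketch of what the canary state means for traffic, assuming the Service spreads requests evenly over all ready Pods (a simplification; the real distribution depends on the proxy mode and on connection reuse). The function name traffic_share is hypothetical.

```python
def traffic_share(pods_by_version):
    """Expected share of requests per version under even load balancing."""
    total = sum(pods_by_version.values())
    return {version: count / total for version, count in pods_by_version.items()}


# pod1..pod3 run myapp:v1.0, pod4 runs myapp:v2.0
print(traffic_share({"myapp:v1.0": 3, "myapp:v2.0": 1}))
# {'myapp:v1.0': 0.75, 'myapp:v2.0': 0.25}
```

With a Deployment the canary share can only move in steps of one Pod, so a smaller share needs a larger replica count.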

# Change the strategy with a patch. The patch is JSON of the form '{"":{}}', written like a dictionary. Here: at most 1 extra Pod during the update, and 0 Pods unavailable
# Note: plain numbers are not quoted; a percentage must be quoted, e.g. "25%"
[root@k8s-master01 5]# kubectl get deployment deployment-demo -o yaml
..........
spec:
  progressDeadlineSeconds: 600
  replicas: 3
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: deployment-demo
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
..........
[root@k8s-master01 5]# kubectl patch deployment deployment-demo -p '{"spec":{"strategy":{"rollingUpdate":{"maxSurge":1,"maxUnavailable":0}}}}'
deployment.apps/deployment-demo patched
[root@k8s-master01 5]# kubectl get deployment deployment-demo -o yaml
..........
spec:
  progressDeadlineSeconds: 600
  replicas: 3
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: deployment-demo
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 0
    type: RollingUpdate
..........

# Patch the image and pause the rollout right away. This is the canary state: three myapp:v1.0 Pods and one myapp:v2.0 Pod serve traffic, because the strategy patched earlier allows at most 1 extra Pod (maxSurge: 1) and 0 Pods below the replica count (maxUnavailable: 0)
[root@k8s-master01 5]# kubectl get deployment,pod
NAME                              READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/deployment-demo   3/3     3            3           28h
NAME                                  READY   STATUS    RESTARTS   AGE
pod/deployment-demo-d97c6c4cf-j76nx   1/1     Running   0          54s
pod/deployment-demo-d97c6c4cf-jwn8k   1/1     Running   0          52s
pod/deployment-demo-d97c6c4cf-rwxr4   1/1     Running   0          53s
[root@k8s-master01 5]# kubectl patch deployment deployment-demo --patch '{"spec":{"template":{"spec":{"containers":[{"name":"deployment-demo-container","image":"myapp:v2.0"}]}}}}' && kubectl rollout pause deployment deployment-demo
deployment.apps/deployment-demo patched
deployment.apps/deployment-demo paused
[root@k8s-master01 5]# kubectl get deployment,pod
NAME                              READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/deployment-demo   4/3     1            4           28h
NAME                                   READY   STATUS    RESTARTS   AGE
pod/deployment-demo-7bd59969bd-xgmdx   1/1     Running   0          6s
pod/deployment-demo-d97c6c4cf-j76nx    1/1     Running   0          109s
pod/deployment-demo-d97c6c4cf-jwn8k    1/1     Running   0          107s
pod/deployment-demo-d97c6c4cf-rwxr4    1/1     Running   0          108s
# The new version is fine: resume the rollout and let the rolling update continue
[root@k8s-master01 5]# kubectl rollout resume deployment deployment-demo
deployment.apps/deployment-demo resumed
[root@k8s-master01 5]# kubectl get deployment,pod
NAME                              READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/deployment-demo   3/3     3            3           28h
NAME                                   READY   STATUS    RESTARTS   AGE
pod/deployment-demo-7bd59969bd-qpbv8   1/1     Running   0          4s
pod/deployment-demo-7bd59969bd-w7vvh   1/1     Running   0          2s
pod/deployment-demo-7bd59969bd-xgmdx   1/1     Running   0          7m13s
# The new version has a problem, roll back the single canary: patch the image back to the original version, then resume the paused rollout
[root@k8s-master01 5]# kubectl patch deployment deployment-demo --patch '{"spec":{"template":{"spec":{"containers":[{"name":"deployment-demo-container","image":"myapp:v1.0"}]}}}}'
deployment.apps/deployment-demo patched
[root@k8s-master01 5]# kubectl rollout resume deployment deployment-demo
# The new version has a problem after the whole rollout: roll everything back
[root@k8s-master01 5]# kubectl rollout undo deployment deployment-demo
deployment.apps/deployment-demo rolled back
[root@k8s-master01 5]# kubectl get po
NAME                               READY   STATUS    RESTARTS   AGE
deployment-demo-6db758d985-b9k9b   1/1     Running   0          29s
deployment-demo-6db758d985-n85nb   1/1     Running   0          28s
deployment-demo-6db758d985-pswnk   1/1     Running   0          26s
# Behind the scenes the rollback works by switching between ReplicaSets
[root@k8s-master01 5]# kubectl get rs
NAME                         DESIRED   CURRENT   READY   AGE
deployment-demo-6db758d985   3         3         3       28h
deployment-demo-7bd59969bd   0         0         0       28h
deployment-demo-d97c6c4cf    0         0         0       4h5m

# Rolling back across several versions: after v1 -> v2 -> v3, the first undo goes v3 -> v2. A second undo does not reach v1: the history is now v1 -> v2 -> v3 -> v2, the version before the current v2 is v3, so it rolls back to v3 again
# Show the status of the current rollout
[root@k8s-master01 5]# kubectl rollout status deployment deployment-demo
deployment "deployment-demo" successfully rolled out
# Show the revision history; the CHANGE-CAUSE column carries no information
[root@k8s-master01 5]# kubectl rollout history deployment deployment-demo
deployment.apps/deployment-demo
REVISION  CHANGE-CAUSE
8         <none>
11        <none>
12        <none>
# Recreate the Deployment with --record so that each revision records the command that caused it
[root@k8s-master01 5]# kubectl delete deployment deployment-demo
deployment.apps "deployment-demo" deleted
[root@k8s-master01 5]# kubectl create -f 6.deploy.yaml --record
Flag --record has been deprecated, --record will be removed in the future
deployment.apps/deployment-demo created
[root@k8s-master01 5]# kubectl set image deployment deployment-demo deployment-demo-container=myapp:v2.0 --record
Flag --record has been deprecated, --record will be removed in the future
deployment.apps/deployment-demo image updated
[root@k8s-master01 5]# kubectl rollout history deployment deployment-demo
deployment.apps/deployment-demo
REVISION  CHANGE-CAUSE
1         kubectl create --filename=6.deploy.yaml --record=true
2         kubectl set image deployment deployment-demo deployment-demo-container=myapp:v2.0 --record=true
# If --record was used earlier and is left out on a later update, the previous command is copied into the new revision, which quickly gets confusing
[root@k8s-master01 5]# kubectl set image deployment deployment-demo deployment-demo-container=myapp:v3.0
deployment.apps/deployment-demo image updated
[root@k8s-master01 5]# kubectl rollout history deployment deployment-demo
deployment.apps/deployment-demo
REVISION  CHANGE-CAUSE
2         kubectl set image deployment deployment-demo deployment-demo-container=myapp:v2.0 --record=true
3         kubectl set image deployment deployment-demo deployment-demo-container=myapp:v2.0 --record=true
# Rolling back to an explicit revision number solves the multi-level rollback problem
[root@k8s-master01 5]# kubectl rollout undo deployment deployment-demo --to-revision=2
deployment.apps/deployment-demo rolled back
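
The back-and-forth behaviour of a plain undo can be modelled in a few lines. This is a toy model, not the real controller: RevisionHistory and its methods are hypothetical names, and a Pod template is reduced to an image tag. It mirrors two things visible in the history output above: a rolled-back revision reappears under a new, higher number, and an undo without a target picks the newest revision other than the current one.

```python
class RevisionHistory:
    """Toy model of a Deployment's revision history."""

    def __init__(self):
        self.revisions = {}   # revision number -> Pod template (here: image tag)
        self.latest = 0       # highest revision number handed out so far

    @property
    def current(self):
        return self.revisions[self.latest]

    def update(self, template):
        if self.revisions and template == self.current:
            return  # nothing changed, no new revision
        # A template already in the history moves to a new, higher number.
        for number, stored in list(self.revisions.items()):
            if stored == template:
                del self.revisions[number]
        self.latest += 1
        self.revisions[self.latest] = template

    def undo(self, to_revision=0):
        if to_revision == 0:
            # Default target: the newest revision other than the current one.
            to_revision = max(n for n in self.revisions if n != self.latest)
        self.update(self.revisions[to_revision])


history = RevisionHistory()
for image in ("myapp:v1.0", "myapp:v2.0", "myapp:v3.0"):
    history.update(image)

history.undo()
print(history.current)             # myapp:v2.0
history.undo()
print(history.current)             # myapp:v3.0 (not v1.0)
history.undo(to_revision=1)
print(history.current)             # myapp:v1.0
print(sorted(history.revisions))   # [4, 5, 6]
```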

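(8) Deployment cleanup policy

spec.revisionHistoryLimit sets how many revisions a Deployment keeps at most. By default 10 revisions are kept; if the field is set to 0, the Deployment can no longer be rolled back.

Upgrades and rollbacks can also be handled with files, e.g. cp v1.yaml 2.v2.yaml, so that every version stays on disk. Compared with spec.revisionHistoryLimit, which stores the ReplicaSet information in etcd, this is more convenient and saves etcd resources.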

[root@k8s-master01 5]# kubectl explain spec.revisionHistoryLimit
the server doesn't have a resource type "spec"
[root@k8s-master01 5]# kubectl explain deployment.spec.revisionHistoryLimit
GROUP:      apps
KIND:       Deployment
VERSION:    v1
FIELD: revisionHistoryLimit <integer>
DESCRIPTION:
    The number of old ReplicaSets to retain to allow rollback. This is a pointer to distinguish between explicit zero and not specified. Defaults to 10.
[root@k8s-master01 5]# kubectl get rs
NAME                         DESIRED   CURRENT   READY   AGE
deployment-demo-6db758d985   0         0         0       53m
deployment-demo-7bd59969bd   5         5         5       53m

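(9) Deployment update strategy (changing the target mid-rollout)

1. Suppose the Deployment first starts version v1 with 4 Pods

2. While upgrading to v2 you notice the version is wrong and want to go straight to v3. At this moment 1 Pod is v2 and 3 Pods are still v1

Question: how does the update proceed from here?

1) The 1 v2 Pod becomes a v3 Pod; the other 3 v1 Pods first become v2 and then v3

2) The 1 v2 Pod becomes a v3 Pod; the other 3 v1 Pods go directly to v3

Kubernetes uses the second strategy. v2 is only an intermediate state; since the final state is v3, the intermediate state is skipped, which saves resources. It is like travelling: you set out for Xinjiang, decide on the way that you would rather go to Yunnan, and head straight for Yunnan instead of going to Xinjiang first.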
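
The second strategy can be sketched as a tiny simulation. It is a simplified model under stated assumptions: every new Pod is ready immediately, old ReplicaSets are scaled down in the order they are listed, and the function name rollover is made up for this note. The point it shows is that the v2 ReplicaSet never grows again once v3 is the target.

```python
def rollover(old_sets, new_name, replicas, max_surge=1, max_unavailable=0):
    """Return the Pod count per version after each step of the rollout."""
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    sets = dict(old_sets)
    sets.setdefault(new_name, 0)
    old_names = [name for name in sets if name != new_name]
    history = [dict(sets)]
    while True:
        total = sum(sets.values())
        # Scale up the new version without exceeding replicas + maxSurge.
        grow = max(min(replicas + max_surge - total, replicas - sets[new_name]), 0)
        sets[new_name] += grow
        total += grow
        # Scale down old versions while keeping replicas - maxUnavailable Pods.
        shrink = max(total - (replicas - max_unavailable), 0)
        removed = 0
        for name in old_names:
            take = min(sets[name], shrink - removed)
            sets[name] -= take
            removed += take
        if grow == 0 and removed == 0:
            return history
        history.append(dict(sets))


for step in rollover({"v1": 3, "v2": 1}, "v3", replicas=4):
    print(step)
# {'v1': 3, 'v2': 1, 'v3': 0}
# {'v1': 2, 'v2': 1, 'v3': 1}
# {'v1': 1, 'v2': 1, 'v3': 2}
# {'v1': 0, 'v2': 1, 'v3': 3}
# {'v1': 0, 'v2': 0, 'v3': 4}
```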

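(10) Generating a YAML file with a dry run

# Arguments: the Deployment name, the image to use, --dry-run (do not actually create anything), -o yaml (print the object as YAML). As the warning in the output says, current kubectl versions expect --dry-run=client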

[root@k8s-master01 5]# kubectl create deployment my_deployment --image=busybox --dry-run -o yaml
W0608 10:11:37.558998   35830 helpers.go:704] --dry-run is deprecated and can be replaced with --dry-run=client.
apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: my_deployment
  name: my_deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: my_deployment
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: my_deployment
    spec:
      containers:
      - image: busybox
        name: busybox
        resources: {}
status: {}
[root@k8s-master01 5]# kubectl create deployment my_deployment --image=busybox --dry-run -o yaml > my_deployment.test
W0608 10:13:25.286964   36840 helpers.go:704] --dry-run is deprecated and can be replaced with --dry-run=client.

5.2.3 DaemonSet

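A DaemonSet ensures that all (or some) Nodes run one copy of a Pod. When a Node joins the cluster, a Pod is added for it; when a Node is removed from the cluster, its Pod is reclaimed. Deleting a DaemonSet deletes all the Pods it created.

Typical uses of a DaemonSet:

Running a cluster storage daemon on every Node, for example `glusterd` or `ceph`

Running a log collection daemon on every Node, for example `fluentd` or `logstash`

Running a monitoring daemon on every Node, for example Prometheus Node Exporter, `collectd`, the Datadog agent, the New Relic agent, or Ganglia `gmond`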

[root@k8s-master01 5]# cat 7.daemonset.yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: daemonset-demo
  labels:
    app: daemonset-demo
spec:
  selector:
    matchLabels:
      name: daemonset-demo
  template:
    metadata:
      labels:
        name: daemonset-demo
    spec:
      containers:
      - name: daemonset-demo-container
        image: myapp:v1.0

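1. API group and version: apps group, v1
2. Kind: DaemonSet
3. Metadata: the DaemonSet name and the DaemonSet's own label (key and value)
4. Spec: label selector (matchLabels with label key and value); template: metadata with the Pod label (key and value); Pod spec: containers, container name, image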

# A DaemonSet has no replica count: by default it starts one Pod on every node, unlike a Deployment, which defaults to 1 replica when none is set. No Pod was started on k8s-master01 because the cluster puts a NoSchedule taint on it by default
[root@k8s-master01 5]# kubectl create -f 7.daemonset.yaml
daemonset.apps/daemonset-demo created
[root@k8s-master01 5]# kubectl get pod -o wide
NAME                   READY   STATUS    RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES
daemonset-demo-btvt6   1/1     Running   0          7s    10.244.58.211   k8s-node02   <none>           <none>
daemonset-demo-vr8qf   1/1     Running   0          7s    10.244.85.224   k8s-node01   <none>           <none>
[root@k8s-master01 5]# kubectl describe node k8s-master01
..........
Taints:             node-role.kubernetes.io/control-plane:NoSchedule
..........
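
Why the master gets no Pod can be expressed as a small filter. This is a deliberately simplified sketch: taints are reduced to (key, effect) pairs, tolerations are matched by key only, and daemonset_nodes is a hypothetical helper, not a Kubernetes API.

```python
def daemonset_nodes(nodes, tolerated_keys=()):
    """Return the nodes on which a DaemonSet Pod would be placed.

    nodes maps a node name to its list of (taint_key, effect) pairs.
    """
    eligible = []
    for name, taints in nodes.items():
        blocking = [
            key for key, effect in taints
            if effect in ("NoSchedule", "NoExecute") and key not in tolerated_keys
        ]
        if not blocking:
            eligible.append(name)
    return eligible


cluster = {
    "k8s-master01": [("node-role.kubernetes.io/control-plane", "NoSchedule")],
    "k8s-node01": [],
    "k8s-node02": [],
}
print(daemonset_nodes(cluster))
# ['k8s-node01', 'k8s-node02']
print(daemonset_nodes(cluster, ["node-role.kubernetes.io/control-plane"]))
# ['k8s-master01', 'k8s-node01', 'k8s-node02']
```

In a real manifest the second case corresponds to adding a matching toleration to the Pod template of the DaemonSet.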

5.2.4 Job

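(1) Job definition

A Job handles batch work, that is, tasks that run once. It guarantees that one or more Pods of the batch task finish successfully (Pod exit code 0).

Notes:

spec.template has the same format as a Pod

restartPolicy only supports Never or OnFailure. Always is not available: with Always a Job would behave like a Deployment, for example a backup would succeed, the Pod would exit, and another Pod would be started to run the backup again

With a single Pod, the Job ends by default as soon as that Pod has run successfully

`.spec.completions` is the number of Pods that must finish successfully for the Job to end, default 1 (only Pods with exit code 0 count; a non-zero exit code is not counted)

`.spec.parallelism` is the number of Pods that run in parallel, default 1 (how many Pods are created at a time)

`.spec.activeDeadlineSeconds` is the maximum time the Job may stay active, retries of failed Pods included; once it is exceeded, nothing is retried any more and the Job is marked failed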
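
How completions and parallelism interact can be sketched with a small simulation. It is a wave-based simplification (the real controller replaces Pods one by one as they finish), the function name run_job is hypothetical, and Pod results are supplied by the caller so that the run is reproducible. One detail worth noticing: the number of active Pods never exceeds the number of completions still missing.

```python
from itertools import cycle


def run_job(completions, parallelism, outcomes):
    """Simulate a Job; outcomes yields True (exit code 0) or False per Pod."""
    outcomes = iter(outcomes)
    succeeded = failed = 0
    waves = []  # (Pods started, Pods succeeded) per wave
    while succeeded < completions:
        active = min(parallelism, completions - succeeded)
        ok = sum(next(outcomes) for _ in range(active))
        succeeded += ok
        failed += active - ok
        waves.append((active, ok))
    return succeeded, failed, waves


# Every third Pod fails in this made-up outcome pattern.
print(run_job(10, 5, cycle([True, True, False])))
# (10, 4, [(5, 4), (5, 3), (3, 2), (1, 1)])
```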

(2) First experiment

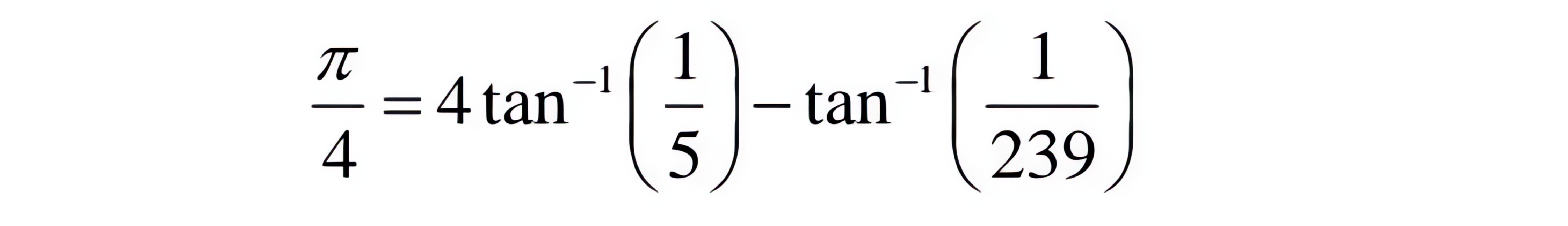

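Computing π with Machin's formula

The formula was discovered in 1706 by John Machin, an English professor of astronomy, who used it to compute π to 100 digits. Each term of Machin's formula yields about 1.4 decimal digits of precision. Because the multiplicands and dividends in the computation never exceed a long integer, the formula is easy to implement on a computer.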

# Pull the base image
[root@k8s-master01 5]# docker pull python:2.7
2.7: Pulling from library/python
7e2b2a5af8f6: Pull complete
09b6f03ffac4: Pull complete
dc3f0c679f0f: Pull complete
fd4b47407fc3: Pull complete
b32f6bf7d96d: Pull complete
6f4489a7e4cf: Pull complete
af4b99ad9ef0: Pull complete
39db0bc48c26: Pull complete
acb4a89489fc: Pull complete
Digest: sha256:cfa62318c459b1fde9e0841c619906d15ada5910d625176e24bf692cf8a2601d
Status: Downloaded newer image for python:2.7
docker.io/library/python:2.7
[root@k8s-master01 5]# docker images
REPOSITORY   TAG   IMAGE ID       CREATED       SIZE
python       2.7   68e7be49c28c   5 years ago   902MB

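# Python 2 code based on Machin's formula
[root@k8s-master01 5]# cat main.py
# -*- coding: utf-8 -*-
from __future__ import division
# Import the time module
import time
# Record the start time
time1=time.time()
# Compute pi with Machin's formula
number = 1000
# Compute 10 extra digits so that rounding of the tail does not affect the result
number1 = number+10
# Work with number1 digits after the decimal point
b = 10**number1
# First term of the series containing 4/5
x1 = b*4//5
# First term of the series containing 1/239
x2 = b // -239
# Sum of the two first terms
he = x1+x2
# Loop bound, i.e. n terms are computed in total
number *= 2
# Loop from 3 up to 2n with step 2
for i in xrange(3,number,2):
    # Next term of the 1/5 series, including its sign
    x1 //= -25
    # Next term of the 1/239 series, including its sign
    x2 //= -57121
    # Sum of the two terms
    x = (x1+x2) // i
    # Running total
    he += x
# Obtain pi
pai = he*4
# Drop the last ten digits
pai //= 10**10
# Print the value of pi
paistring=str(pai)
result=paistring[0]+str('.')+paistring[1:len(paistring)]
print result
time2=time.time()
print u'Total time:' + str(time2 - time1) + 's'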
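
For checking the result locally without the python:2.7 image, here is a Python 3 sketch of the same idea. It uses Machin's identity π/4 = 4·arctan(1/5) − arctan(1/239) with scaled integers and 10 guard digits, like the script above; the function names arctan_inverse and machin_pi are chosen for this note and do not appear in the original script.

```python
def arctan_inverse(x, scale):
    """Return arctan(1/x) * scale using the Taylor series in integer arithmetic."""
    power = scale // x        # scale / x**(2k+1), starting with k = 0
    total = power
    x_squared = x * x
    divisor = 3
    sign = -1
    while power:
        power //= x_squared
        total += sign * (power // divisor)
        sign = -sign
        divisor += 2
    return total


def machin_pi(digits):
    """Return pi as a string with the given number of decimal digits."""
    guard = 10                # extra digits that absorb truncation errors
    scale = 10 ** (digits + guard)
    pi_scaled = 4 * (4 * arctan_inverse(5, scale) - arctan_inverse(239, scale))
    text = str(pi_scaled // 10 ** guard)
    return text[0] + "." + text[1:]


print(machin_pi(20))   # 3.14159265358979323846
```

machin_pi(1000) corresponds to the number = 1000 setting of the original script.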

# Write the Dockerfile, build the image, copy the image to the other nodes, and load it there

[root@k8s-master01 5]# cat Dockerfile
FROM python:2.7
ADD ./main.py /root
CMD /usr/bin/python /root/main.py
[root@k8s-master01 5]# docker build -t maqingpythonv1 .
[+] Building 0.2s (7/7) FINISHED                                                docker:default
 => [internal] load build definition from Dockerfile                                      0.0s
 => => transferring dockerfile: 107B                                                      0.0s
 => WARN: JSONArgsRecommended: JSON arguments recommended for CMD to prevent unintended behavior related to OS signals (line 3)  0.0s
 => [internal] load metadata for docker.io/library/python:2.7                             0.0s
 => [internal] load .dockerignore                                                         0.0s
 => => transferring context: 2B                                                           0.0s
 => [internal] load build context                                                         0.0s
 => => transferring context: 960B                                                         0.0s
 => [1/2] FROM docker.io/library/python:2.7                                               0.1s
 => [2/2] ADD ./main.py /root                                                             0.0s
 => exporting to image                                                                    0.0s
 => => exporting layers                                                                   0.0s
 => => writing image sha256:464ec9dee78fb1e3ee0ad742d60527b217b53f300c5d352555cdbbd9164b534d  0.0s
 => => naming to docker.io/library/maqingpythonv1                                         0.0s
 1 warning found (use docker --debug to expand):
 - JSONArgsRecommended: JSON arguments recommended for CMD to prevent unintended behavior related to OS signals (line 3)
[root@k8s-master01 5]# docker images
REPOSITORY       TAG      IMAGE ID       CREATED          SIZE
maqingpythonv1   latest   464ec9dee78f   10 seconds ago   902MB
[root@k8s-master01 5]# docker save maqingpythonv1:latest -o maqingpythonv1.tar.gz
[root@k8s-master01 5]# scp maqingpythonv1.tar.gz root@n1:/root
root@n1's password:
maqingpythonv1.tar.gz                                100%  882MB  83.8MB/s   00:10
[root@k8s-master01 5]# scp maqingpythonv1.tar.gz root@n2:/root
root@n2's password:
maqingpythonv1.tar.gz                                100%  882MB  81.4MB/s   00:10
[root@k8s-node01 ~]# docker load -i maqingpythonv1.tar.gz
[root@k8s-node02 ~]# docker load -i maqingpythonv1.tar.gz

[root@k8s-master01 5]# cat 8.job.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: job-demo
spec:
  template:
    metadata:
      name: job-demo-pod
    spec:
      containers:
      - name: job-demo-container
        image: maqingpythonv1
        imagePullPolicy: Never
      restartPolicy: Never

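1. API group and version: batch group, v1
2. Kind: Job
3. Metadata: the Job name
4. Spec: template: metadata with the Pod name; Pod spec: containers, container name, image, image pull policy Never (use the local image, do not download), restart policy Never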

# The logs show that the Pod ran successfully and printed the 1000 digits of π
[root@k8s-master01 5]# kubectl create -f 8.job.yaml
job.batch/job-demo created
[root@k8s-master01 5]# kubectl get pod
NAME             READY   STATUS      RESTARTS   AGE
job-demo-t4tfq   0/1     Completed   0          4s
[root@k8s-master01 5]# kubectl logs job-demo-t4tfq
3.1415926535897932384626433832795028841971693993751058209749445923078164062862089986280348253421170679821480865132823066470938446095505822317253594081284811174502841027019385211055596446229489549303819644288109756659334461284756482337867831652712019091456485669234603486104543266482133936072602491412737245870066063155881748815209209628292540917153643678925903600113305305488204665213841469519415116094330572703657595919530921861173819326117931051185480744623799627495673518857527248912279381830119491298336733624406566430860213949463952247371907021798609437027705392171762931767523846748184676694051320005681271452635608277857713427577896091736371787214684409012249534301465495853710507922796892589235420199561121290219608640344181598136297747713099605187072113499999983729780499510597317328160963185950244594553469083026425223082533446850352619311881710100031378387528865875332083814206171776691473035982534904287554687311595628638823537875937519577818577805321712268066130019278766111959092164201989
Total time:0.00243401527405s

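(3) Second experiment

A Job handles batch work, that is, tasks that run only once. It guarantees that one or more Pods of the batch task finish successfully.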

[root@k8s-master01 5]# cat 9.job.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: rand-1
spec:
  template:
    metadata:
      name: rand-1
    spec:
      containers:
      - name: rand-1
        image: randexitv1
        imagePullPolicy: Never
        args: ["--exitcode=1"]
      restartPolicy: Never
[root@k8s-master01 5]# cat 10.job.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: rand-2
spec:
  completions: 10
  parallelism: 5
  template:
    metadata:
      name: rand-2
    spec:
      containers:
      - name: rand-2
        image: randexitv1
        imagePullPolicy: Never
      restartPolicy: Never

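9.job.yaml, 4. Spec: Pod template: metadata with the Pod name; Pod spec: containers, container name, image, image pull policy Never (use the local image, do not download), argument forcing exit code 1, restart policy Never
10.job.yaml, 4. Spec: completions, parallelism; Pod template: metadata with the Pod name; Pod spec: containers, container name, image, image pull policy Never (use the local image, do not download), restart policy Never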

[root@k8s-master01 5]# kubectl create -f 9.job.yaml
job.batch/rand-1 created
[root@k8s-master01 5]# kubectl create -f 10.job.yaml
job.batch/rand-2 created
[root@k8s-master01 5]# kubectl get pod
NAME           READY   STATUS      RESTARTS   AGE
rand-1-2st6f   0/1     Error       0          9s
rand-1-7f7pw   0/1     Error       0          2m17s
rand-1-8f42q   0/1     Error       0          2m42s
rand-1-b586t   0/1     Error       0          2m57s
rand-1-nrb7n   0/1     Error       0          93s
rand-2-5wp4f   0/1     Error       0          2m52s
rand-2-6qwfr   0/1     Completed   0          2m34s
rand-2-6r455   0/1     Completed   0          2m52s
rand-2-84dt7   0/1     Completed   0          2m52s
rand-2-85khp   0/1     Completed   0          2m10s
rand-2-8wggn   0/1     Completed   0          2m52s
rand-2-dp7nx   0/1     Completed   0          2m42s
rand-2-hjk6h   0/1     Completed   0          2m52s
rand-2-hv6xb   0/1     Error       0          2m34s
rand-2-ll6mr   0/1     Completed   0          2m43s
rand-2-nqqkq   0/1     Error       0          2m25s
rand-2-pvgrg   0/1     Error       0          2m43s
rand-2-shrgm   0/1     Completed   0          2m43s
rand-2-twgtg   0/1     Completed   0          2m35s
rand-2-wws7b   0/1     Error       0          2m43s
# After a while rand-1 has still not succeeded. rand-2 has both successes and failures and ends with 10 successful Pods; a rand-2 Pod only succeeds (exit code 0) when its random number is below 50
[root@k8s-master01 5]# kubectl get job
NAME     COMPLETIONS   DURATION   AGE
rand-1   0/1           3m5s       3m5s
rand-2   10/10         50s        3m
# rand-1 always exits with code 1, so it never succeeds. After each failure a new Pod is created to continue the task (note: the Pod is not restarted; the Pod failed, so a replacement Pod is created, up to the Job's backoffLimit, 6 by default)
[root@k8s-master01 5]# kubectl logs rand-1-2st6f
休眠 4 秒,返回码为 1!
# (log text: "slept 4 seconds, exit code is 1!")
# rand-2 is random; it needs 10 completions with a parallelism of 5 (example: the first batch starts 5 Pods and 2 fail, the second batch starts 5 and 3 fail, the third starts 5 and 1 fails, the fourth starts 1; Pods within a batch run in parallel, batches follow one another)
[root@k8s-master01 5]# kubectl logs rand-2-5wp4f
休眠 5 秒,产生的随机数为 79,大于等于 50,返回码为 1!
# (log text: "slept 5 seconds, random number is 79, greater than or equal to 50, exit code is 1!")
[root@k8s-master01 5]# kubectl logs rand-2-6qwfr
休眠 5 秒,产生的随机数为 8,小于 50,返回码为 0!
# (log text: "slept 5 seconds, random number is 8, less than 50, exit code is 0!")

5.2.5 CronJob

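(1) CronJob characteristics

A CronJob manages time-based Jobs, that is:

Run once at a given point in time

Run periodically at given points in time

Requirement: the Kubernetes cluster in use must be version >= 1.8 for CronJob (the batch/v1 CronJob API used in the manifest below has been stable since 1.21)

Typical uses:

Schedule a Job to run at a given point in time

Create Jobs that run periodically, for example database backups or sending e-mail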

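(2) Fields

`.spec.schedule`: the schedule, required. It specifies when the task runs, in the same format as cron

`.spec.jobTemplate`: the Job template, required. It specifies the task to run, in the same format as a Job

`.spec.startingDeadlineSeconds`: deadline (in seconds) for starting a Job, optional. If a Job misses its scheduled time for any reason and cannot be started within this deadline, that run is counted as failed. If the field is not set, there is no deadline

`.spec.concurrencyPolicy`: concurrency policy, also optional. It specifies how concurrent executions of the Jobs created by this CronJob are handled. Exactly one of the following policies can be set (not to be confused with parallel Pods inside a Job: this is about whether the Jobs themselves may run concurrently)

`Allow` (default): Jobs may run concurrently

`Forbid`: concurrent runs are forbidden; if the previous Job has not finished, the next run is skipped (the old one is still running, the new one is silently dropped)

`Replace`: cancel the Job that is currently running and replace it with a new one (the old one is still running, the new one replaces it anyway)

Note: the policy only applies to Jobs created by the same CronJob. If there are several CronJobs, the Jobs they create are always allowed to run concurrently

`.spec.suspend`: suspend, also optional. If set to `true`, all subsequent executions are suspended. It has no effect on Jobs that have already started. The default is `false`

`.spec.successfulJobsHistoryLimit` and `.spec.failedJobsHistoryLimit`: history limits, optional. They specify how many completed and failed Jobs are kept. By default they are set to `3` and `1`. With a limit of `0`, Jobs of that type are not kept after they finish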
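
The three concurrencyPolicy values can be compared with a small simulation. It is a sketch under simple assumptions: the schedule fires once per minute, every Job takes the same number of minutes, and the name simulate_policy is invented for this note.

```python
def simulate_policy(policy, ticks, job_minutes):
    """Return what happens at each scheduled minute: start, skip or replace."""
    running = []   # start minutes of Jobs that are still running
    events = []
    for minute in range(ticks):
        # Drop Jobs that have finished by now.
        running = [start for start in running if start + job_minutes > minute]
        if running and policy == "Forbid":
            events.append((minute, "skip"))
            continue
        if running and policy == "Replace":
            running = []   # the old Job is cancelled
            events.append((minute, "replace"))
        else:
            events.append((minute, "start"))
        running.append(minute)
    return events


for policy in ("Allow", "Forbid", "Replace"):
    print(policy, simulate_policy(policy, ticks=4, job_minutes=2))
# Allow [(0, 'start'), (1, 'start'), (2, 'start'), (3, 'start')]
# Forbid [(0, 'start'), (1, 'skip'), (2, 'start'), (3, 'skip')]
# Replace [(0, 'start'), (1, 'replace'), (2, 'replace'), (3, 'replace')]
```

With Replace and Jobs that outlast the schedule interval, no Job in this model ever finishes, which is worth keeping in mind when choosing the policy.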

(3) Creating a CronJob

[root@k8s-master01 5]# cat 11.cronjob.yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: cronjob-demo
spec:
  schedule: "*/1 * * * *"
  jobTemplate:
    spec:
      completions: 3
      template:
        spec:
          containers:
          - name: cronjob-demo-container
            image: busybox
            args:
            - /bin/sh
            - -c
            - date; echo Hello from the Kubernetes cluster
          restartPolicy: OnFailure

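1. API group and version: batch group, v1
2. Kind: CronJob
3. Metadata: the CronJob name
4. Spec: schedule; Job template: Job spec with the number of completions; Pod template: Pod spec with containers, container name, image, command to run, restart policy OnFailure (no restart on exit code 0, restart on a non-zero exit code)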

# The CronJob creates one Job per minute. Each Job runs 1 Pod at a time (no parallelism is set) until 3 Pods have succeeded
# Notes: 1. A CronJob can only be controlled at minute granularity, not at second granularity. 2. The CronJob creates a Job every minute as requested, but not immediately at creation time; compare the AGE of the Jobs below: 3m7s for the first, 2m7s for the second, 67s for the third.
[root@k8s-master01 5]# kubectl apply -f 11.cronjob.yaml
cronjob.batch/cronjob-demo created
[root@k8s-master01 5]# kubectl get cronjob
NAME           SCHEDULE      SUSPEND   ACTIVE   LAST SCHEDULE   AGE
cronjob-demo   */1 * * * *   False     3        5s              3m42s
[root@k8s-master01 5]# kubectl get job
NAME                    COMPLETIONS   DURATION   AGE
cronjob-demo-29156296   3/3           2m57s      3m7s
cronjob-demo-29156297   1/3           2m7s       2m7s
cronjob-demo-29156298   1/3           67s        67s
cronjob-demo-29156299   0/3           7s         7s
[root@k8s-master01 5]# kubectl get pod
NAME                          READY   STATUS              RESTARTS   AGE
cronjob-demo-29156296-6d8fq   0/1     Completed           0          3m14s
cronjob-demo-29156296-btnbq   0/1     Completed           0          104s
cronjob-demo-29156296-ppwww   0/1     Completed           0          2m31s
cronjob-demo-29156297-5dtr6   0/1     ContainerCreating   0          61s
cronjob-demo-29156297-r2dtw   0/1     Completed           0          2m14s
cronjob-demo-29156298-j4twv   0/1     ContainerCreating   0          16s
cronjob-demo-29156298-sv9rk   0/1     Completed           0          74s
cronjob-demo-29156299-qmd9d   0/1     ContainerCreating   0          14s

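(4) CronJob limitations

The work done by the created Jobs should be idempotent:

Running the operation N times must always give the expected result. No matter how many times the creation of a Job is attempted, in the same state the outcome should be the same, without extra or duplicated effects.

One and the same Job should not do several unrelated things, for example both backing up a database and sending e-mail.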
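
One common way to make a periodic task idempotent is to key its result by the scheduled time, so that a second run for the same slot changes nothing. The sketch below only illustrates the idea: run_backup, the in-memory store and the fake snapshot function are all hypothetical stand-ins for a real backup target.

```python
def run_backup(store, scheduled_for, take_snapshot):
    """Write one backup per scheduled slot; return True if work was done."""
    key = "backup-" + scheduled_for
    if key in store:
        return False          # this slot was already handled, do nothing
    store[key] = take_snapshot()
    return True


store = {}
snapshot_calls = []


def fake_snapshot():
    snapshot_calls.append(1)
    return "snapshot-data"


print(run_backup(store, "2025-06-08T10:00", fake_snapshot))  # True
print(run_backup(store, "2025-06-08T10:00", fake_snapshot))  # False (duplicate run)
print(len(store), len(snapshot_calls))                       # 1 1
```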

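5.2.6 Pod controller summary

(1) Long-running (daemon-type) workloads

RC controller

Keeps the current number of Pods equal to the desired number

RS controller

Similar to the RC controller, with additional ways of evaluating label selectors

Deployment controller

Supports declarative configuration

Supports rolling updates and rollbacks

How it works: the Deployment controls ReplicaSets, and the ReplicaSets control Pods

DaemonSet controller

Ensures that every node runs exactly one Pod, adjusting dynamically

(2) Batch workloads

Job

Runs a batch task until one or more Pods have succeeded

CronJob

Creates Jobs periodically; a typical scenario is a periodic database backup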

———————————————————————————————————————————————————————————————————————————

无敌小马爱学习
