08 Spark SQL Analysis of Student Course Scores

Read the student course score file chapter4-data01.txt and create a DataFrame.

>>> url = "file:///usr/local/spark/mycode/rdd/chapter4-data01.txt"

>>> rdd = spark.sparkContext.textFile(url).map(lambda line:line.split(','))

>>> rdd.take(3)

[['Aaron', 'OperatingSystem', '100'], ['Aaron', 'Python', '50'], ['Aaron', 'ComputerNetwork', '30']]

>>> from pyspark.sql.types import IntegerType,StringType,StructField,StructType

>>> from pyspark.sql import Row

>>> fields = [StructField('name',StringType(),True),StructField('course',StringType(),True),StructField('score',IntegerType(),True)]

>>> schema = StructType(fields)

>>> data = rdd.map(lambda p:Row(p[0],p[1],int(p[2])))

>>> df_scs = spark.createDataFrame(data,schema)

>>> df_scs.printSchema()

>>> df_scs.show()
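The split-then-convert step above can also be factored into one named function, which makes it easy to check outside Spark. This is a minimal sketch: `parse_line` is a hypothetical helper name, and it assumes every line has exactly three comma-separated fields with an integer score.

```python
def parse_line(line):
    """Split 'name,course,score' into a (name, course, score) tuple with an int score."""
    # Assumption: exactly three fields per line; strip() tolerates stray spaces.
    name, course, score = [field.strip() for field in line.split(',')]
    return name, course, int(score)


print(parse_line('Aaron,OperatingSystem,100'))  # ('Aaron', 'OperatingSystem', 100)
```

Under the same assumptions, `spark.sparkContext.textFile(url).map(parse_line)` should give an RDD of tuples that `spark.createDataFrame(..., schema)` accepts with the schema defined above.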

Use DataFrame operations or SQL statements to complete the following data analysis tasks, and compare them with the RDD implementations:

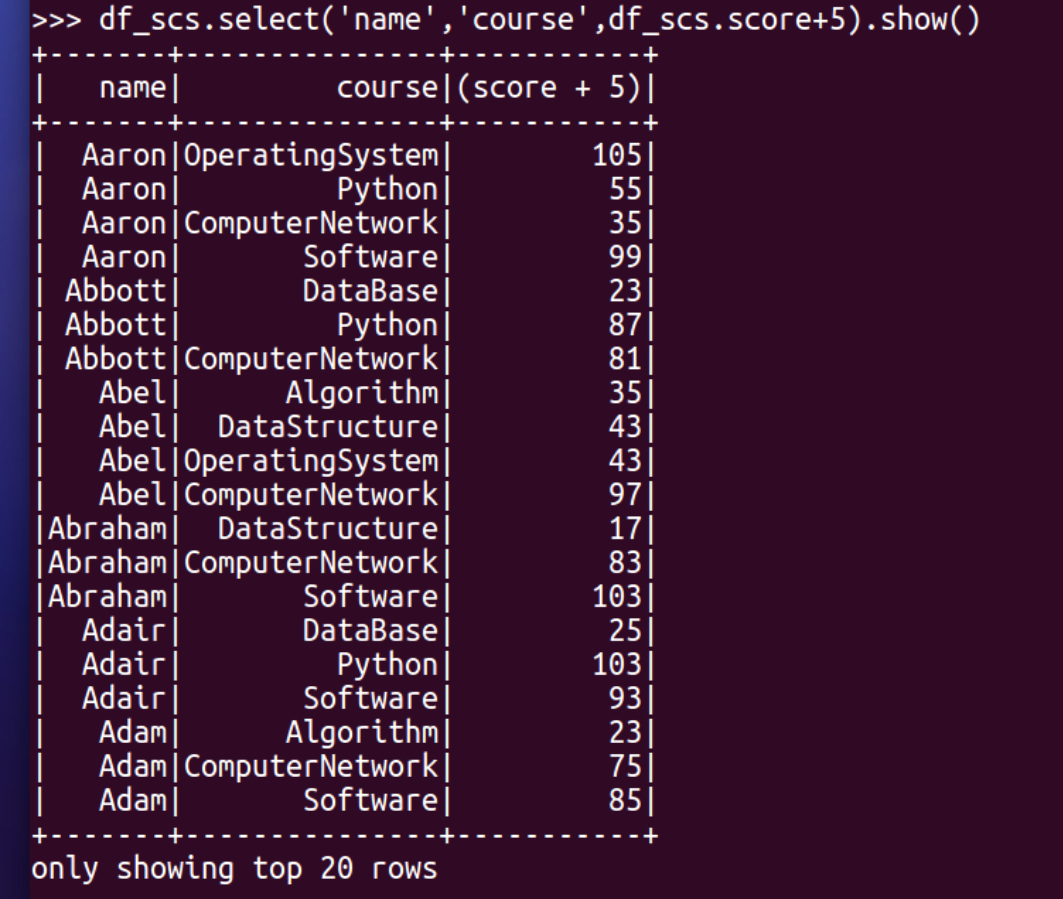

- Add 5 points to every score.

>>> df_scs.select('name','course',df_scs.score+5).show()

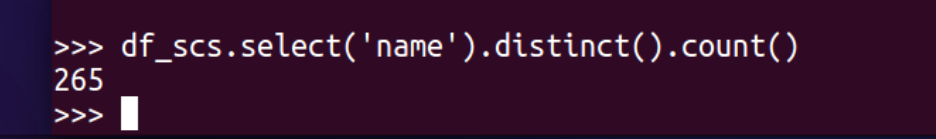

- How many students are there in total?

>>> df_scs.select('name').distinct().count()

- Which courses are offered in total?

>>> df_scs.select('course').distinct().show()

- How many courses did each student take?

>>> df_scs.groupBy('name').count().show()

- How many students took each course?

>>> df_scs.groupBy('course').count().show()

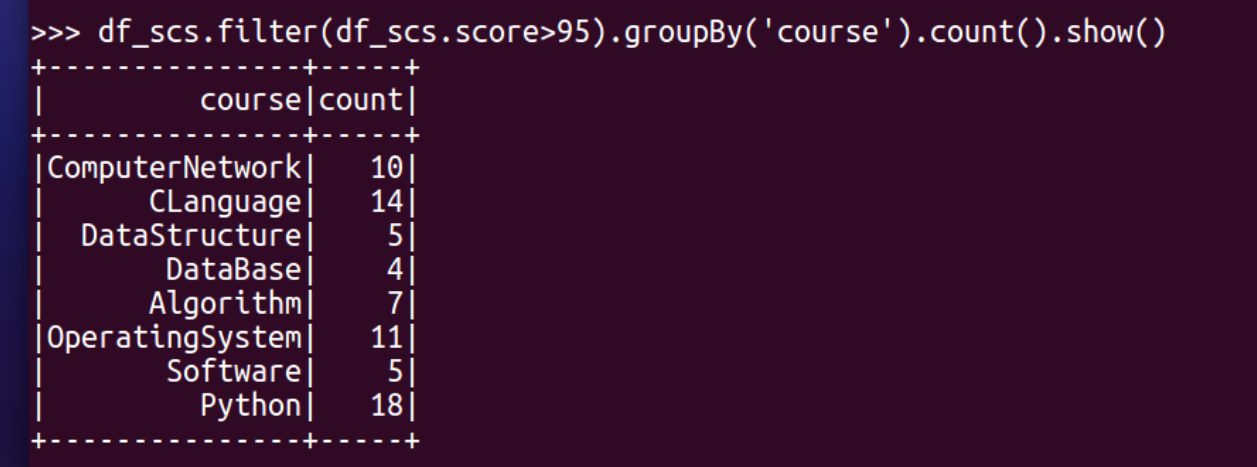

- How many students scored above 95 in each course?

>>> df_scs.filter(df_scs.score>95).groupBy('course').count().show()

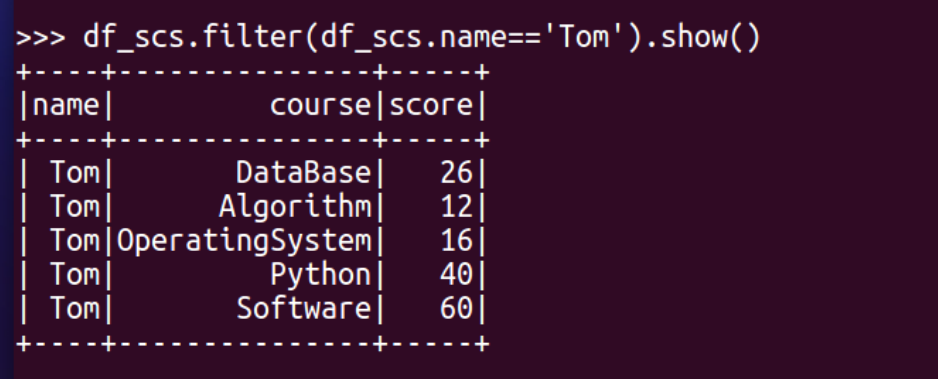

- How many courses did Tom take, and what was his score in each?

>>> df_scs.filter(df_scs.name=='Tom').show()

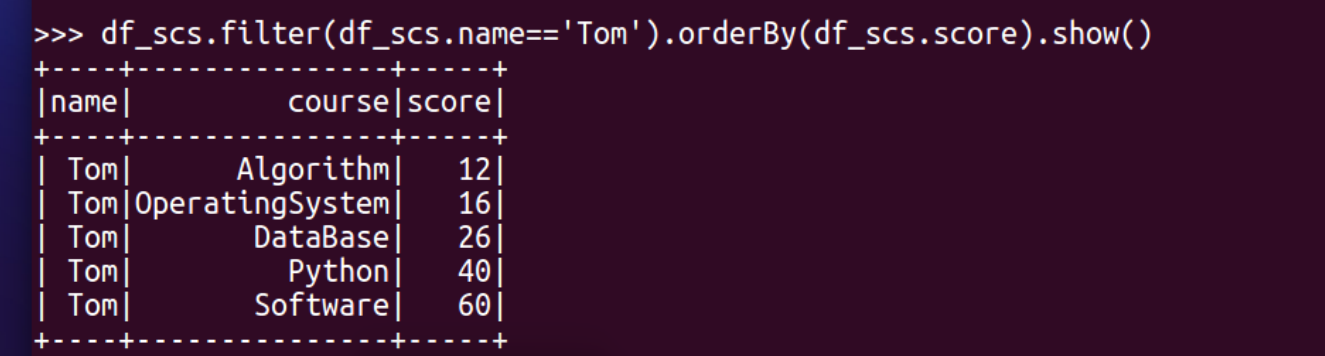

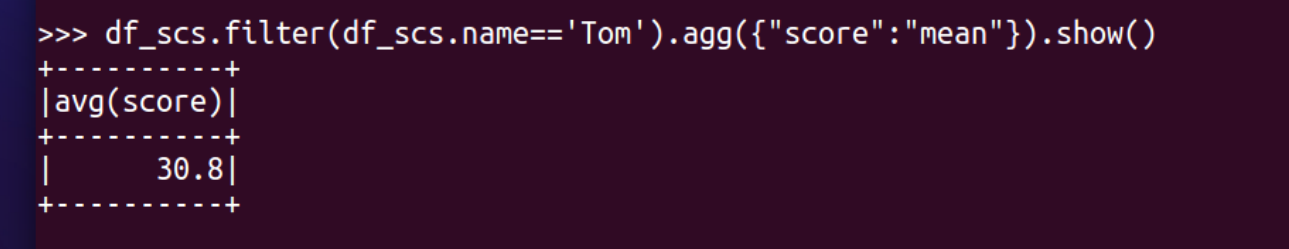

- Sort Tom's results by score.

>>> df_scs.filter(df_scs.name=='Tom').orderBy(df_scs.score).show()

- Tom's average score.

>>> df_scs.filter(df_scs.name=='Tom').agg({"score":"mean"}).show()

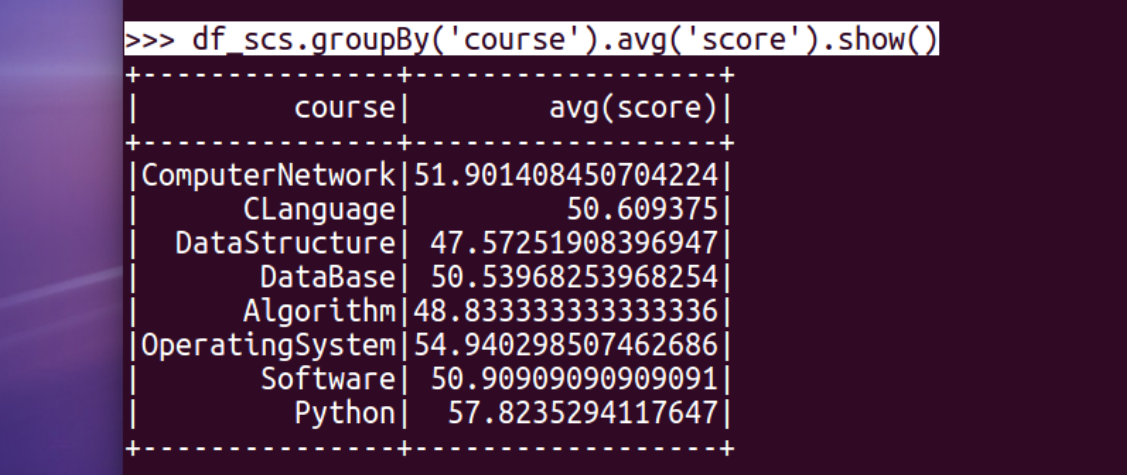

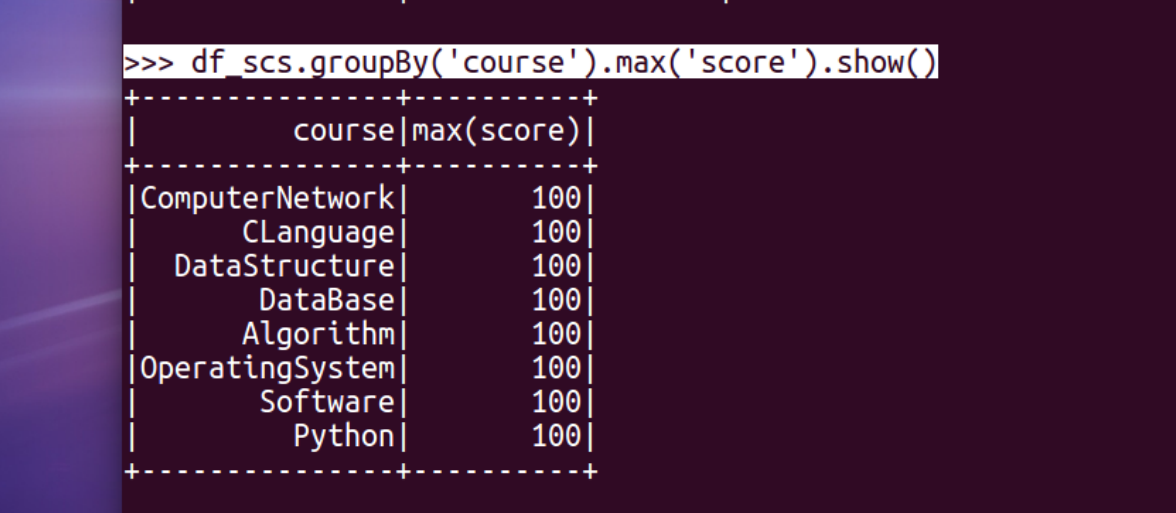

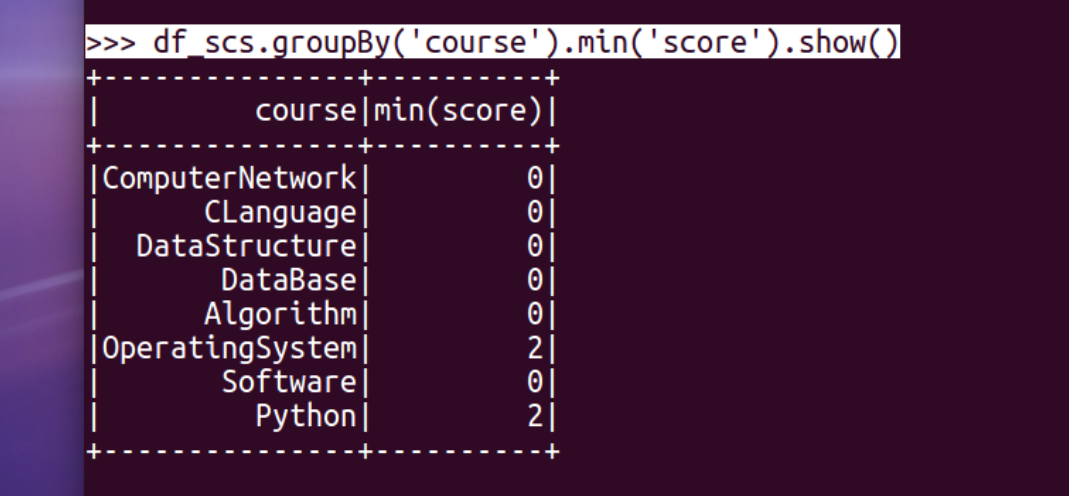

- Find the average, highest, and lowest score for each course.

>>> df_scs.groupBy('course').avg('score').show()

>>> df_scs.groupBy('course').max('score').show()

>>> df_scs.groupBy('course').min('score').show()

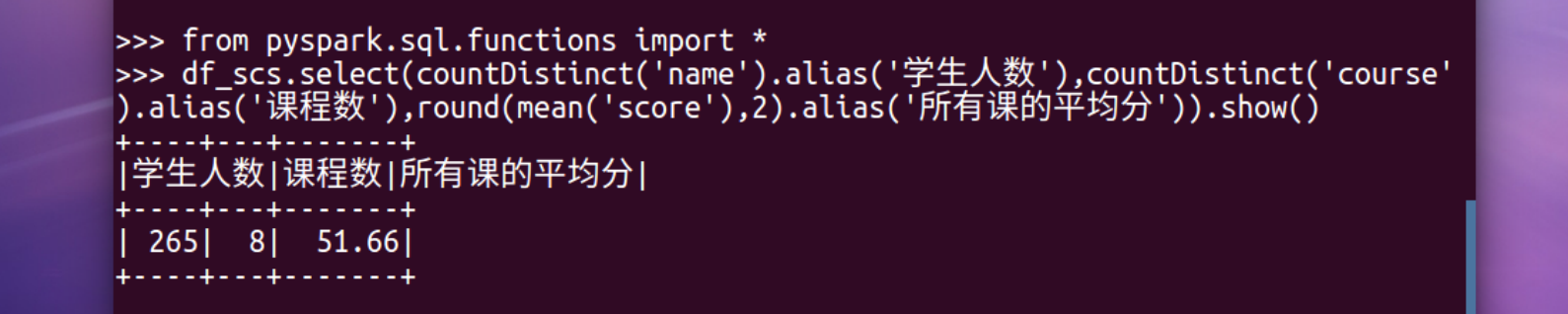

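- Find the number of students and the average score for each course, rounded to 2 decimal places.

>>> from pyspark.sql.functions import *

>>> df_scs.groupBy('course').agg(count('name').alias('n'),round(mean('score'),2).alias('avg')).show()  # per course

>>> df_scs.select(countDistinct('name').alias('students'),countDistinct('course').alias('courses'),round(mean('score'),2).alias('overall_avg')).show()  # overall totals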

- Number of failing students and pass rate for each course.

>>> df_scs.filter(df_scs.score<60).groupBy('course').count().show()
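The filter above gives only the number of failing students. The pass rate also needs the total per course. The sketch below shows one possible way to get both in a single aggregation. `pass_stats` and `pass_stats_df` are hypothetical helper names, the pass mark of 60 is taken from the filter above, and the sample rows other than Aaron's Python score are made up for illustration. The plain-Python version exists only to cross-check the logic on a few rows without Spark.

```python
from collections import defaultdict


def pass_stats(records, pass_mark=60):
    """Return {course: (n, not_pass, pass_rate)} from (name, course, score) tuples."""
    total = defaultdict(int)
    failed = defaultdict(int)
    for _name, course, score in records:
        total[course] += 1
        if score < pass_mark:
            failed[course] += 1
    return {c: (n, failed[c], round((n - failed[c]) / n, 2)) for c, n in total.items()}


def pass_stats_df(df, pass_mark=60):
    """Same statistics on a DataFrame; pyspark is imported only when this is called."""
    from pyspark.sql import functions as F
    # 1 for a failing row, 0 otherwise, so that sum() counts the failures.
    is_fail = F.when(F.col('score') < pass_mark, 1).otherwise(0)
    stats = df.groupBy('course').agg(
        F.count('name').alias('n'),
        F.sum(is_fail).alias('notPass'),
    )
    return stats.withColumn(
        'passRat', F.round((F.col('n') - F.col('notPass')) / F.col('n'), 2)
    )


# Made-up sample rows in the same (name, course, score) layout.
sample = [('Aaron', 'Python', 50), ('Tom', 'Python', 90), ('Tom', 'OperatingSystem', 70)]
print(pass_stats(sample))
```

In the pyspark shell, `pass_stats_df(df_scs).show()` would be the corresponding call. Rounding at exact ties may differ slightly between Python's `round` and Spark's.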

II. Complete the above data analysis tasks with SQL statements

0. Register the DataFrame as a temporary view

>>> df_scs.createOrReplaceTempView("scs")

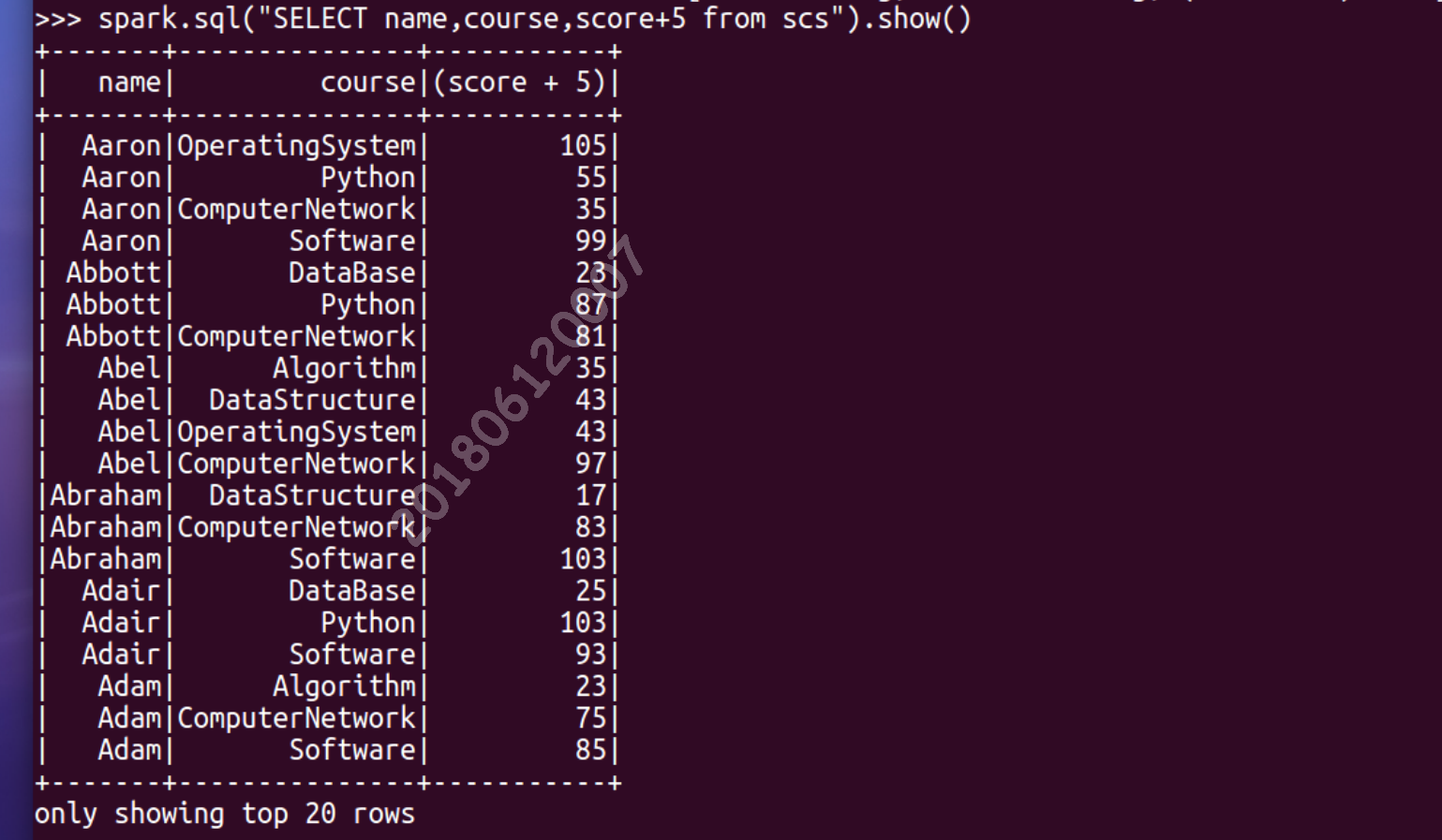

- Add 5 points to every score.

>>> spark.sql("SELECT name,course,score+5 from scs").show()

- How many students are there in total?

>>> spark.sql("SELECT count(distinct name) from scs").show()

- Which courses are offered in total?

>>> spark.sql("SELECT distinct(course) from scs").show()
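When counting students, `count(name)` counts rows (one per enrollment), while `count(DISTINCT name)` counts each student once. The difference can be checked without a Spark session. The sketch below uses Python's built-in `sqlite3` as a stand-in SQL engine (an assumption made only for this illustration) and loads the three rows printed by `rdd.take(3)` earlier.

```python
import sqlite3

# In-memory table with the same columns as the scs view.
conn = sqlite3.connect(':memory:')
conn.execute('CREATE TABLE scs (name TEXT, course TEXT, score INTEGER)')
conn.executemany('INSERT INTO scs VALUES (?, ?, ?)', [
    ('Aaron', 'OperatingSystem', 100),
    ('Aaron', 'Python', 50),
    ('Aaron', 'ComputerNetwork', 30),
])

# count(name) counts enrollment rows; count(DISTINCT name) counts students.
rows_count = conn.execute('SELECT count(name) FROM scs').fetchone()[0]
student_count = conn.execute('SELECT count(DISTINCT name) FROM scs').fetchone()[0]
print(rows_count, student_count)  # 3 1
```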

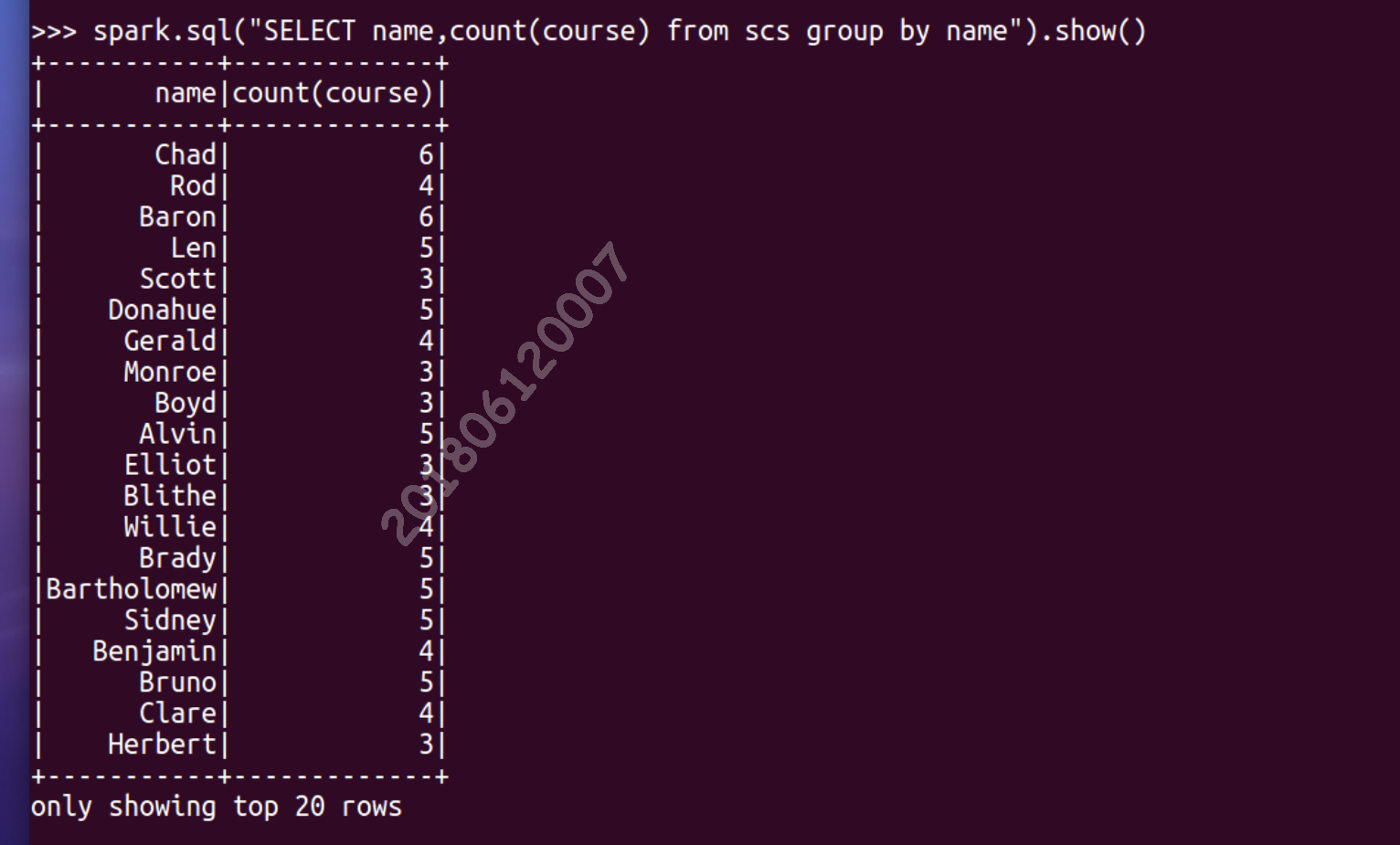

- How many courses did each student take?

>>> spark.sql("SELECT name,count(course) from scs group by name").show()

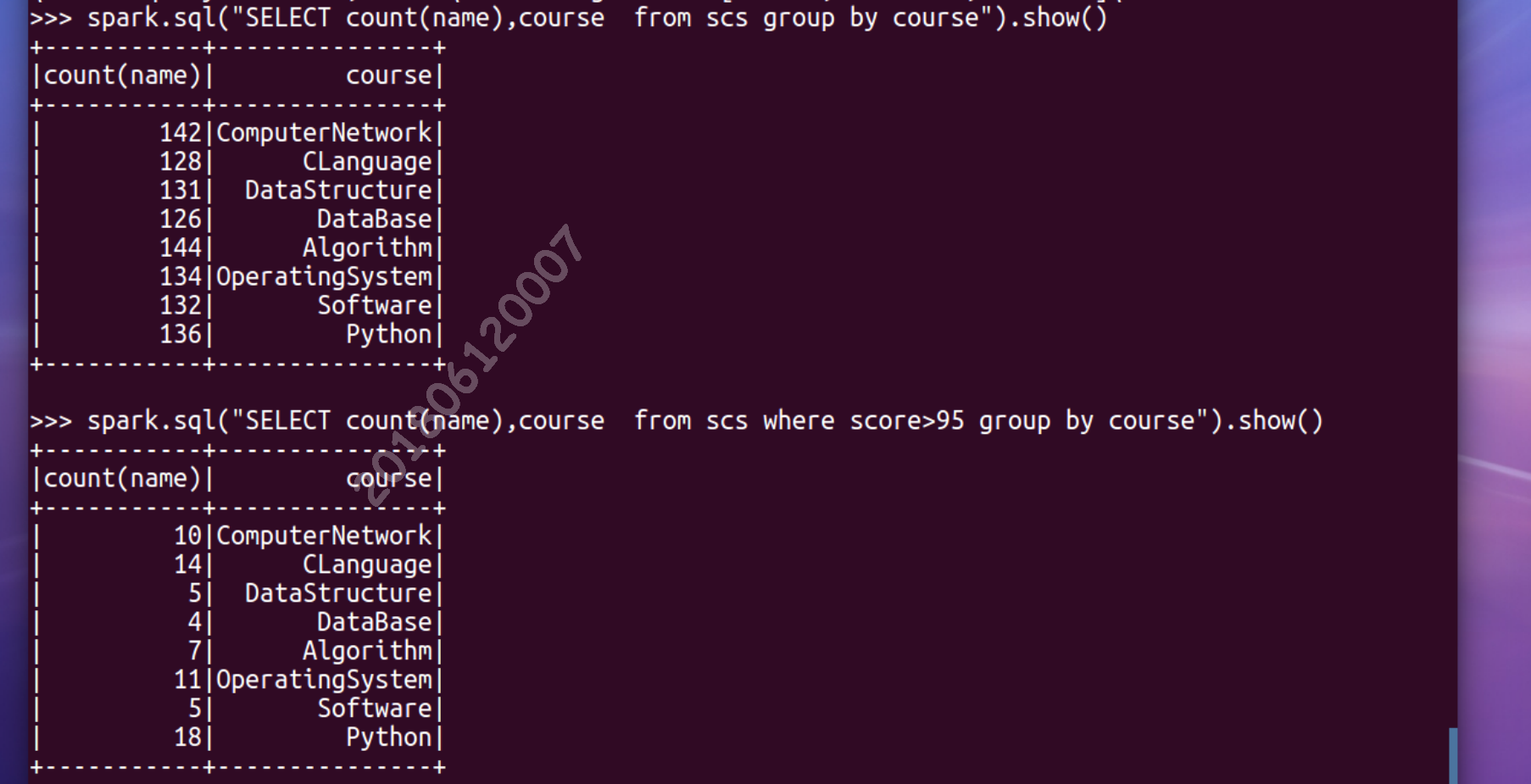

- How many students took each course?

>>> spark.sql("SELECT count(name),course from scs group by course").show()

- How many students scored above 95 in each course?

>>> spark.sql("SELECT count(name),course from scs where score>95 group by course").show()

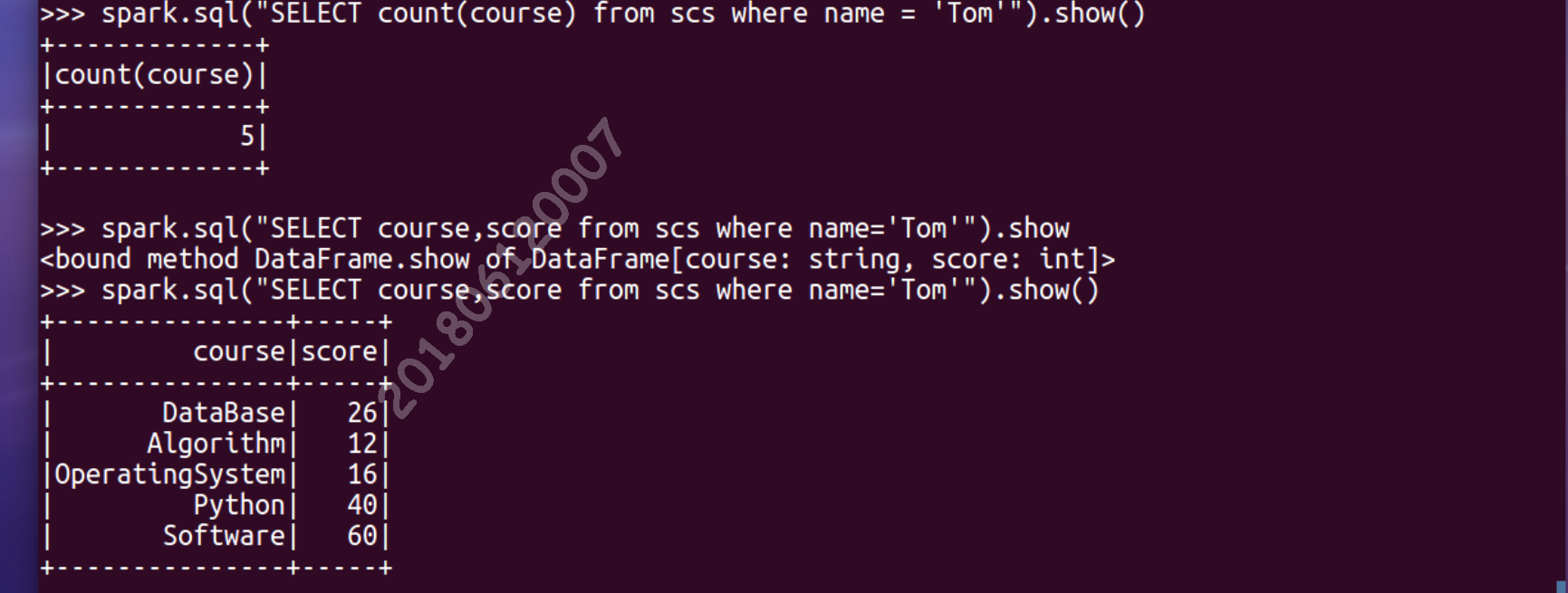

- How many courses did Tom take, and what was his score in each?

>>> spark.sql("SELECT count(course) from scs where name = 'Tom'").show()

>>> spark.sql("SELECT course,score from scs where name='Tom'").show()

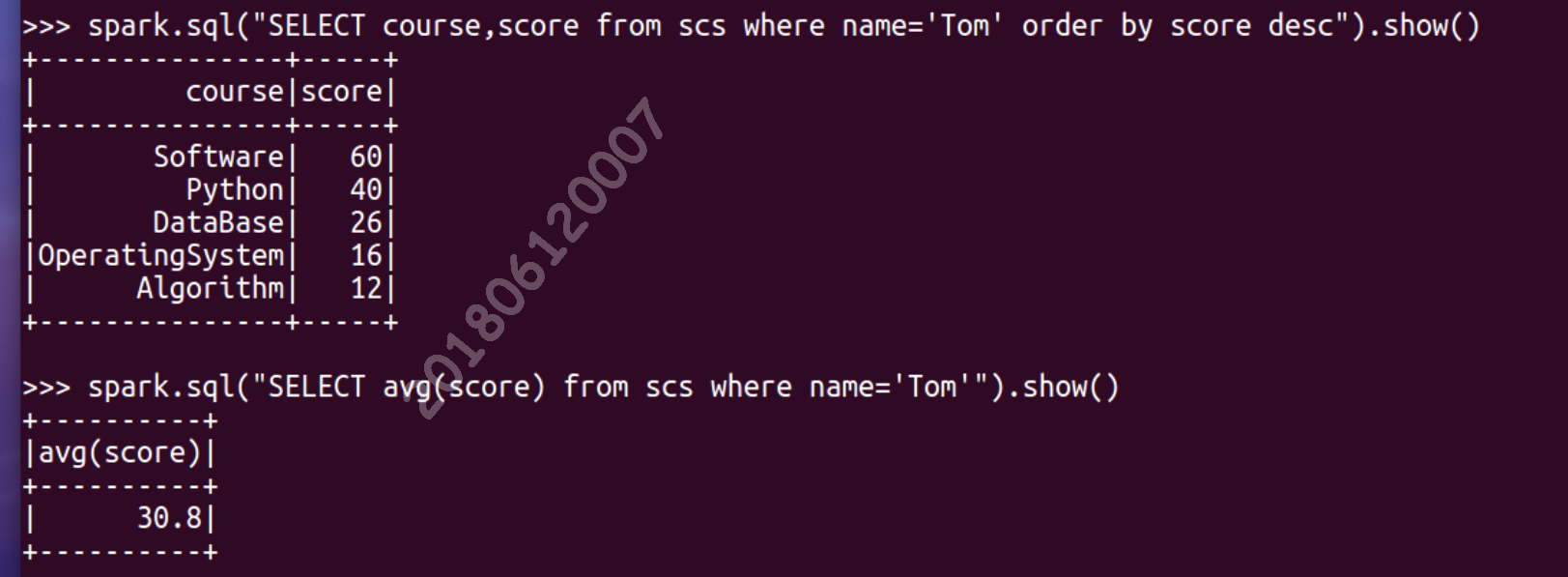

- Sort Tom's results by score.

>>> spark.sql("SELECT course,score from scs where name='Tom' order by score desc").show()

- Tom's average score.

>>> spark.sql("SELECT avg(score) from scs where name='Tom'").show()

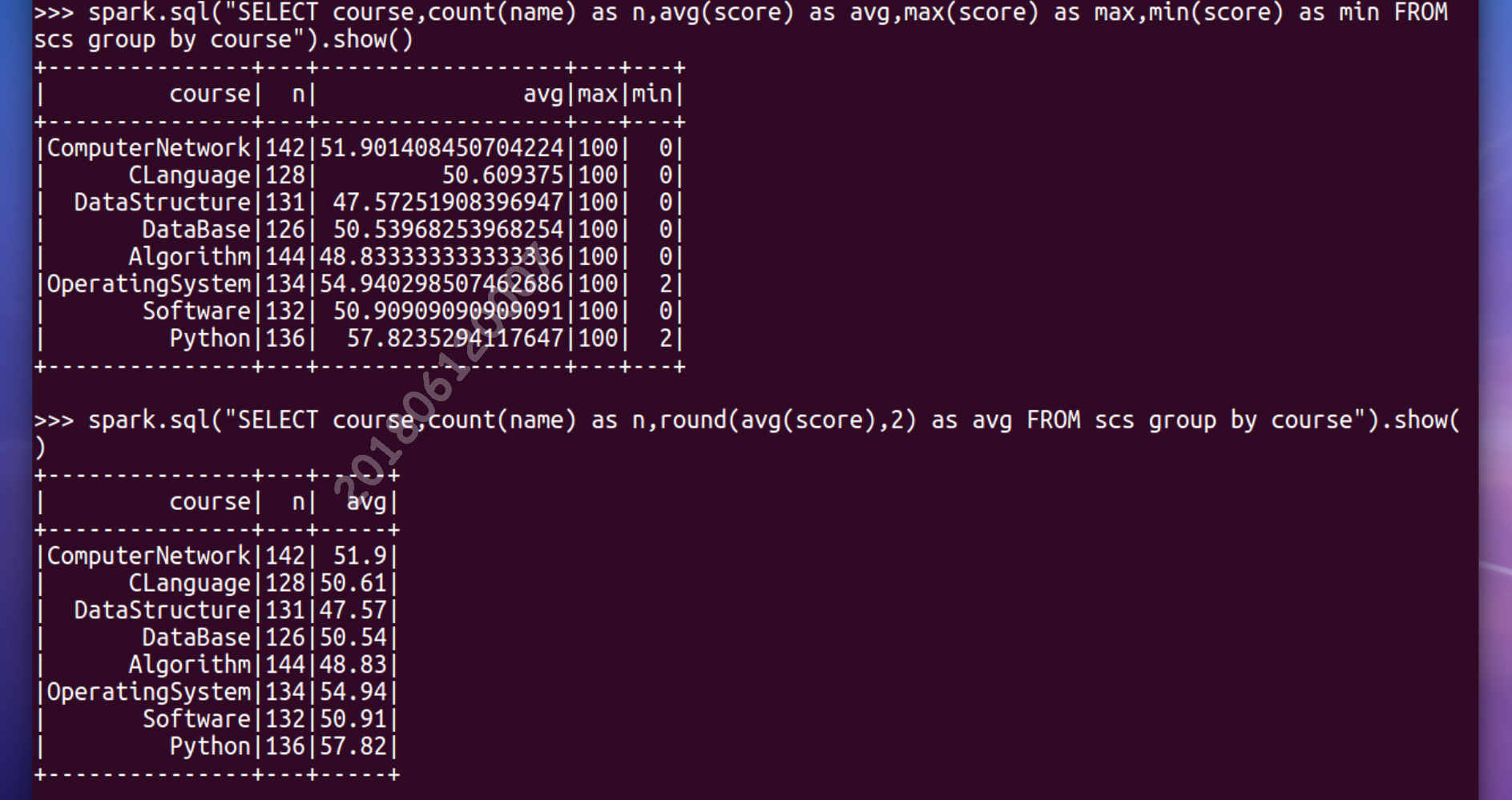

- Find the average, highest, and lowest score for each course.

>>> spark.sql("SELECT course,count(name) as n,avg(score) as avg,max(score) as max,min(score) as min FROM scs group by course").show()

- Find the number of students and the average score for each course, rounded to 2 decimal places.

>>> spark.sql("SELECT course,count(name) as n,round(avg(score),2) as avg FROM scs group by course").show()

- Number of failing students and pass rate for each course.

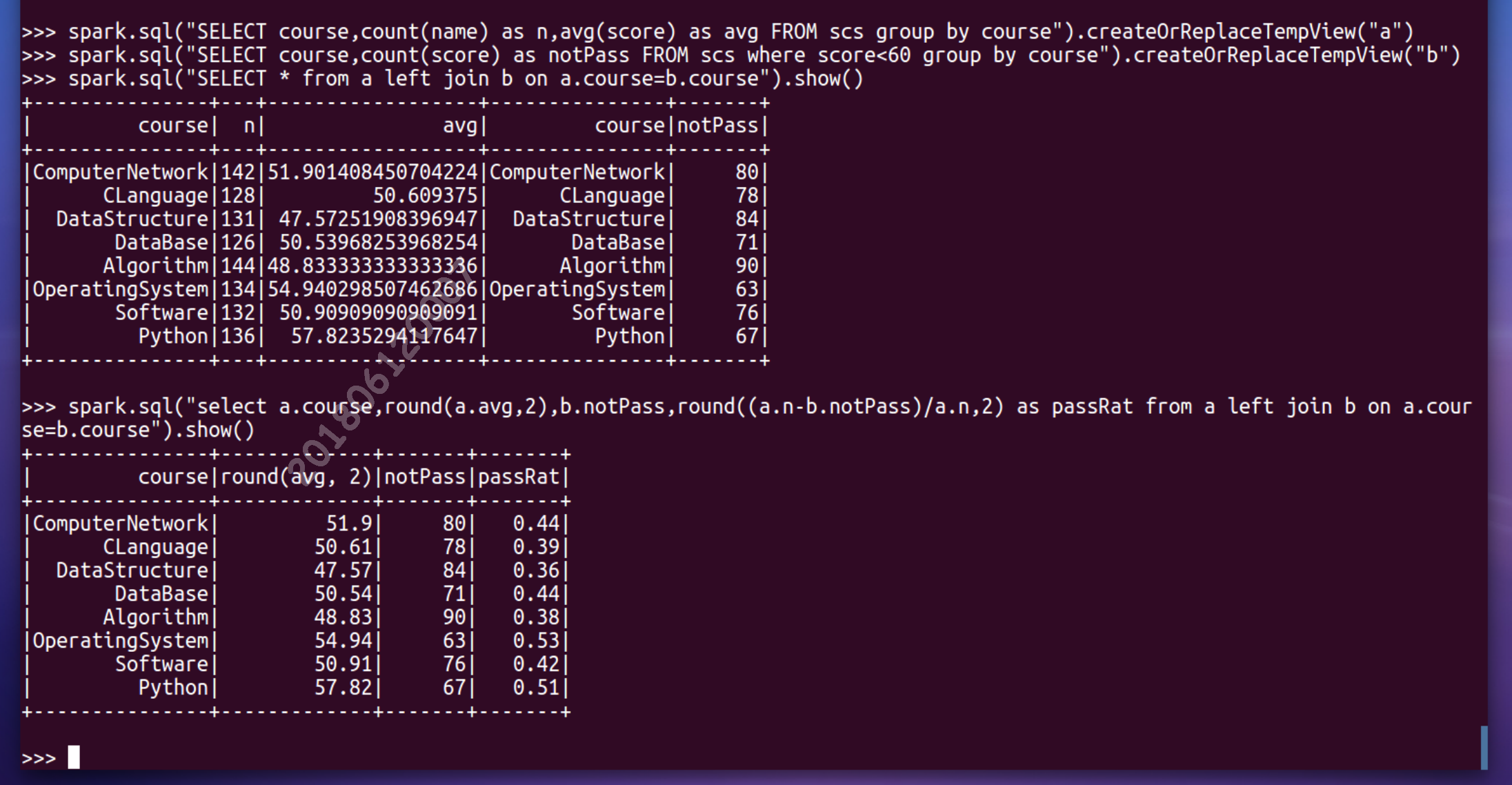

>>> spark.sql("SELECT course,count(name) as n,avg(score) as avg FROM scs group by course").createOrReplaceTempView("a")

>>> spark.sql("SELECT course,count(score) as notPass FROM scs where score<60 group by course").createOrReplaceTempView("b")

>>> spark.sql("SELECT * from a left join b on a.course=b.course").show()

>>> spark.sql("select a.course,round(a.avg,2) as avg,coalesce(b.notPass,0) as notPass,round((a.n-coalesce(b.notPass,0))/a.n,2) as passRat from a left join b on a.course=b.course").show()
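The same figures can also be computed in one pass with conditional aggregation. That needs no join, so courses without failing students need no special handling. The sketch below is an illustration, not the worksheet's method. The query is written in portable SQL and run here against Python's built-in `sqlite3` so it can be checked without Spark. It is intended to be usable with `spark.sql(QUERY)` on the `scs` view as well, but that is not verified here. The rows other than Aaron's Python score are made up.

```python
import sqlite3

# One pass over scs: total, average, failures and pass rate per course.
QUERY = """
SELECT course,
       count(name) AS n,
       round(avg(score), 2) AS avg_score,
       sum(CASE WHEN score < 60 THEN 1 ELSE 0 END) AS notPass,
       round(avg(CASE WHEN score >= 60 THEN 1.0 ELSE 0.0 END), 2) AS passRat
FROM scs
GROUP BY course
ORDER BY course
"""

conn = sqlite3.connect(':memory:')
conn.execute('CREATE TABLE scs (name TEXT, course TEXT, score INTEGER)')
# Made-up sample rows in the same (name, course, score) layout.
conn.executemany('INSERT INTO scs VALUES (?, ?, ?)', [
    ('Aaron', 'Python', 50),
    ('Tom', 'Python', 90),
    ('Tom', 'OperatingSystem', 70),
])

rows = conn.execute(QUERY).fetchall()
for row in rows:
    print(row)
```

Averaging a 1.0/0.0 flag gives the pass rate directly and avoids integer division, which behaves differently across SQL engines.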

III. Compare the RDD, DataFrame, and SQL implementations: what is the same and what differs? (Compare at least two problems.)

1. Add 5 points to every score

(1) SQL implementation

spark.sql("SELECT name,course,score+5 from scs").show()

(2) DataFrame implementation

df_scs.select('name','course',df_scs.score+5).show()

(3) RDD implementation

rdd.map(lambda p:(p[0],p[1],int(p[2])+5)).foreach(print)

2. How many students are there in total?

(1) SQL implementation

spark.sql("SELECT count(distinct name) from scs").show()

(2) DataFrame implementation

df_scs.select('name').distinct().count()

(3) RDD implementation

lines = spark.sparkContext.textFile(url)

lines.map(lambda line : line.split(',')[0]).distinct().count()

3. Find the number of students and the average score for each course, rounded to 2 decimal places.

(1) SQL implementation

spark.sql("SELECT course, count(name) as n,round(avg(score),2) as avg FROM scs group by course").show() # number of students and average score for each course

(2) DataFrame implementation

df_scs.groupBy('course').agg({'name':'count',"score": "mean"}).withColumnRenamed("avg(score)",'avg').withColumnRenamed("count(name)",'n').show()

df_scs.groupBy("course").avg("score").show()

df_scs.groupBy("course").count().show()
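No RDD version is listed for this problem. One possible sketch, not taken from the worksheet, carries a (score sum, count) pair per course through `reduceByKey` and divides at the end. All function names below are hypothetical. `course_stats_local` is a plain-Python stand-in that applies the same three functions, so the logic can be checked without a Spark session.

```python
from functools import reduce
from itertools import groupby


def to_pair(p):
    """[name, course, score] -> (course, (score, 1)) as the seed for summing."""
    return p[1], (int(p[2]), 1)


def merge(a, b):
    """Add two (score_sum, count) pairs element-wise."""
    return a[0] + b[0], a[1] + b[1]


def finish(v):
    """(score_sum, count) -> (count, average rounded to 2 decimal places)."""
    return v[1], round(v[0] / v[1], 2)


def course_stats_rdd(rdd):
    """Apply the three steps to an RDD of [name, course, score] records."""
    return rdd.map(to_pair).reduceByKey(merge).mapValues(finish)


def course_stats_local(records):
    """Plain-Python stand-in for the RDD pipeline above."""
    pairs = sorted(map(to_pair, records), key=lambda kv: kv[0])
    return {
        course: finish(reduce(merge, (value for _, value in group)))
        for course, group in groupby(pairs, key=lambda kv: kv[0])
    }


# The three records printed by rdd.take(3) earlier.
sample = [['Aaron', 'OperatingSystem', '100'], ['Aaron', 'Python', '50'],
          ['Aaron', 'ComputerNetwork', '30']]
print(course_stats_local(sample))
```

With the `rdd` created at the top of these notes, `course_stats_rdd(rdd).foreach(print)` would be the corresponding call. Compared with the SQL and DataFrame versions, the aggregation state has to be managed by hand.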

IV. Result visualization.

from pyspark.sql.types import IntegerType, StringType, StructField, StructType

fields = [StructField(...), ...]

schema = StructType(fields)

Types: http://spark.apache.org/docs/latest/sql-ref-datatypes.html

from pyspark.sql import Row

data = rdd.map(lambda p: Row(...))

Spark SQL DataFrame operations

df.show()

df.printSchema()

df.count()

df.head(3)

df.collect()

df['name']

df.name

df.first().asDict()

df.describe().show()

df.distinct()

df.filter(df['age'] > 21).show()

df.groupBy("age").count().show()

df.select('name', df['age'] + 1).show()

df_scs.groupBy("course").avg('score').show()

df_scs.agg({"score": "mean"}).show()

df_scs.groupBy("course").agg({"score": "mean"}).show()

Functions: http://spark.apache.org/docs/2.2.0/api/python/pyspark.sql.html#module-pyspark.sql.functions