05 RDD Programming

I. Word frequency count:

1. Read the text file to create the RDD lines

lines=sc.textFile("file:///usr/local/spark/mycode/rdd/word.txt")

lines.foreach(print)

2. Split each line of text into words: words, flatMap()

words=lines.flatMap(lambda line:line.split())

words.foreach(print)
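The difference between map() and flatMap() in this step can be sketched in plain Python, with no Spark session required. The sample lines below are made up for illustration; in the exercise they come from word.txt.

```python
from itertools import chain

# Stand-in for the RDD `lines`: each element is one line of text (made-up sample).
sample_lines = ["Hello Spark", "hello RDD programming"]

# map(): one output element per input element, so each line becomes its own list.
mapped = [line.split() for line in sample_lines]

# flatMap(): same function, but the per-line lists are flattened into one sequence.
flat_mapped = list(chain.from_iterable(line.split() for line in sample_lines))

print(mapped)       # [['Hello', 'Spark'], ['hello', 'RDD', 'programming']]
print(flat_mapped)  # ['Hello', 'Spark', 'hello', 'RDD', 'programming']
```

This is why the word count uses flatMap(): the later steps need one element per word, not one list per line.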

3. Convert everything to lowercase: lower()

words1=words.map(lambda word:word.lower())

words1.foreach(print)

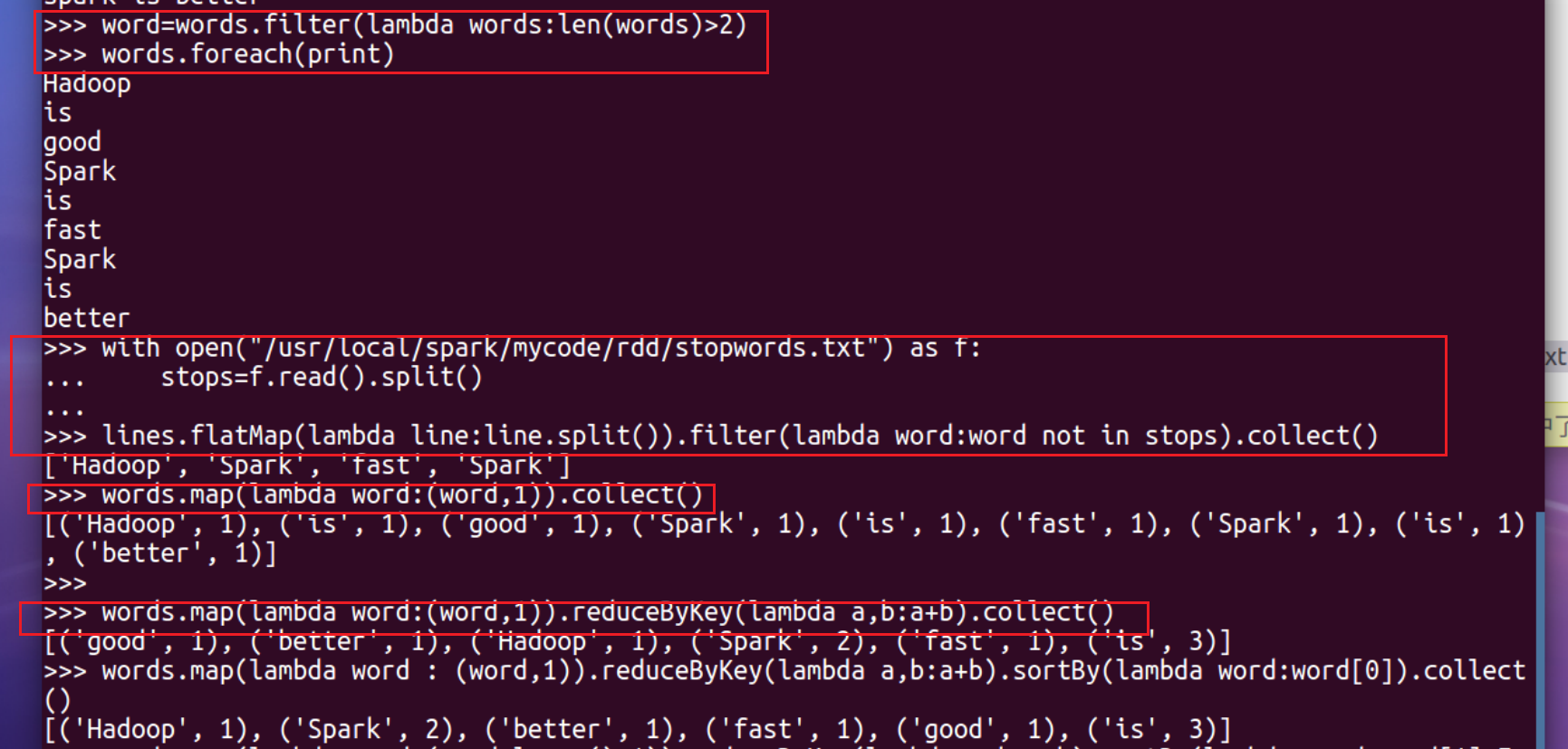

4. Remove words shorter than 3 characters: filter()

word=words.filter(lambda w:len(w)>2)

word.foreach(print)

5. Remove stop words

with open("/usr/local/spark/mycode/rdd/stopwords.txt") as f: stops=f.read().split()

lines.flatMap(lambda line:line.split()).filter(lambda word:word not in stops).collect()
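A possible refinement of the stop-word filter, sketched in plain Python: holding the stop words in a set makes each membership test constant-time, and lower-casing the word before the test also catches capitalised stop words. The stop words, the sample words, and the helper name is_kept are all hypothetical; the real lists come from stopwords.txt and word.txt.

```python
# Hypothetical stop-word list and sample words, for illustration only.
stops = set("the a of and".split())
sample_words = ["The", "history", "of", "Spark", "and", "RDD"]

def is_kept(word, stop_set=stops):
    """Predicate for filter(): keep a word unless its lower-cased form is a stop word."""
    return word.lower() not in stop_set

kept = [w for w in sample_words if is_kept(w)]
print(kept)  # ['history', 'Spark', 'RDD']
```

The same predicate could be passed to the RDD's filter() in place of the lambda.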

6. Convert to key-value pairs: map()

words.map(lambda word:(word,1)).collect()

7. Count word frequencies: reduceByKey()

words.map(lambda word:(word,1)).reduceByKey(lambda a,b:a+b).collect()
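What reduceByKey() computes can be modelled in plain Python: values that share a key are folded together pairwise with the supplied function. The helper reduce_by_key and the sample words are illustrative assumptions, not Spark's implementation, and the model ignores partitioning.

```python
from collections import defaultdict
from functools import reduce

def reduce_by_key(pairs, func):
    """Plain-Python model: group values by key, then fold each group with func."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return {key: reduce(func, values) for key, values in groups.items()}

# Made-up words, already mapped to (word, 1) pairs as in step 6.
pairs = [(w, 1) for w in ["spark", "rdd", "spark", "map", "rdd", "spark"]]
counts = reduce_by_key(pairs, lambda a, b: a + b)
print(counts)  # {'spark': 3, 'rdd': 2, 'map': 1}
```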

8. Sort alphabetically: sortBy(f)

words.map(lambda word : (word,1)).reduceByKey(lambda a,b:a+b).sortBy(lambda word:word[0]).collect()

9. Sort by word frequency: sortByKey() (done here with sortBy() on the count, in descending order)

words.map(lambda word:(word.lower(),1)).reduceByKey(lambda a,b:a+b).sortBy(lambda word:word[1],False).collect()

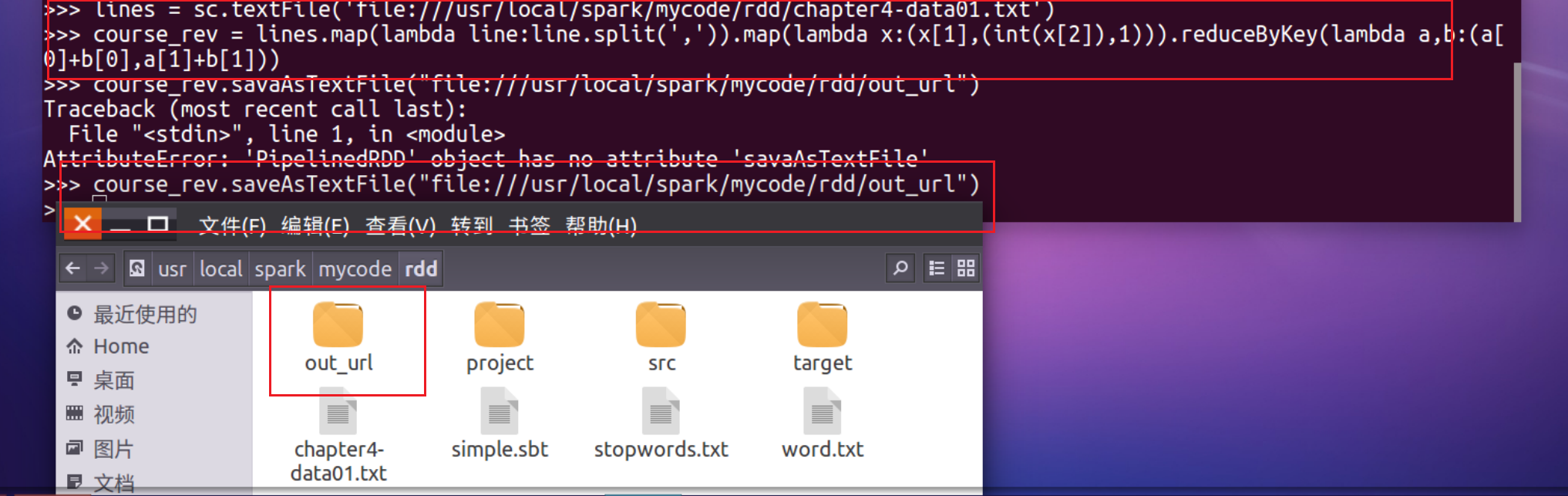
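The two orderings in steps 8 and 9 can be compared in plain Python. The (word, count) pairs below are made up. The second sort key is an optional variation that breaks ties between equal counts alphabetically; a tuple key of this kind should also work as the key function passed to sortBy().

```python
# Made-up (word, count) pairs standing in for the reduceByKey() result.
counts = [("rdd", 2), ("spark", 3), ("map", 2), ("filter", 1)]

# Step 8: alphabetical order, keyed on the word.
by_word = sorted(counts, key=lambda kv: kv[0])

# Step 9: descending frequency; negating the count lets the word break ties in ascending order.
by_freq = sorted(counts, key=lambda kv: (-kv[1], kv[0]))

print(by_word)  # [('filter', 1), ('map', 2), ('rdd', 2), ('spark', 3)]
print(by_freq)  # [('spark', 3), ('map', 2), ('rdd', 2), ('filter', 1)]
```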

10. Save the result to a file: saveAsTextFile(out_url)

lines = sc.textFile('file:///usr/local/spark/mycode/rdd/chapter4-data01.txt')

course_rev = lines.map(lambda line:line.split(',')).map(lambda x:(x[1],(int(x[2]),1))).reduceByKey(lambda a,b:(a[0]+b[0],a[1]+b[1]))

course_rev.saveAsTextFile("file:///usr/local/spark/mycode/rdd/out_url")

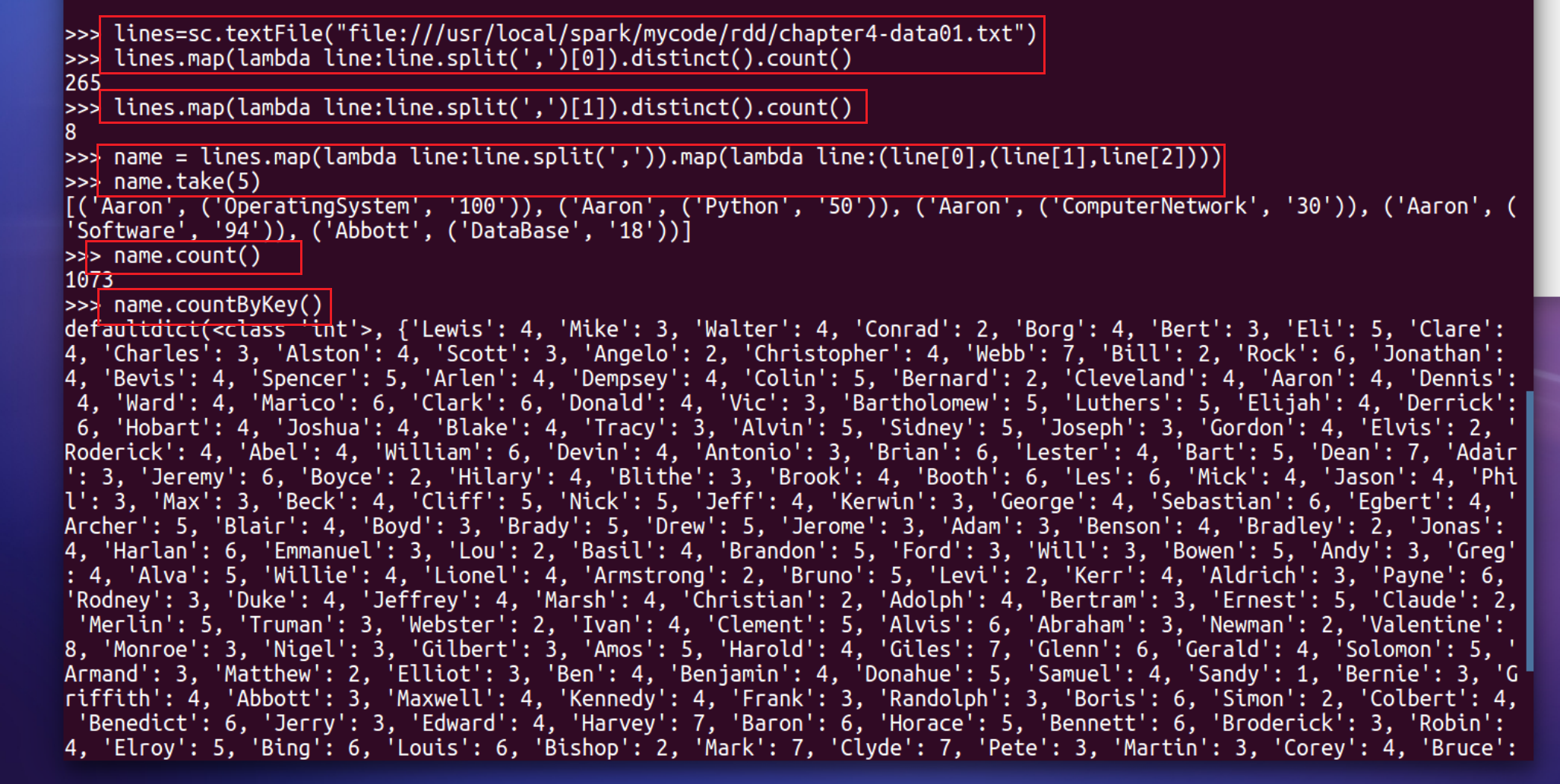

II. Student course score case study

How many students are there in total? map(), distinct(), count()

lines=sc.textFile("file:///usr/local/spark/mycode/rdd/chapter4-data01.txt")

lines.map(lambda line:line.split(',')[0]).distinct().count()

How many courses are offered?

lines.map(lambda line:line.split(',')[1]).distinct().count()

How many courses did each student take? map(), countByKey()

name = lines.map(lambda line:line.split(',')).map(lambda line:(line[0],(line[1],line[2])))

name.take(5)

name.count()

name.countByKey()

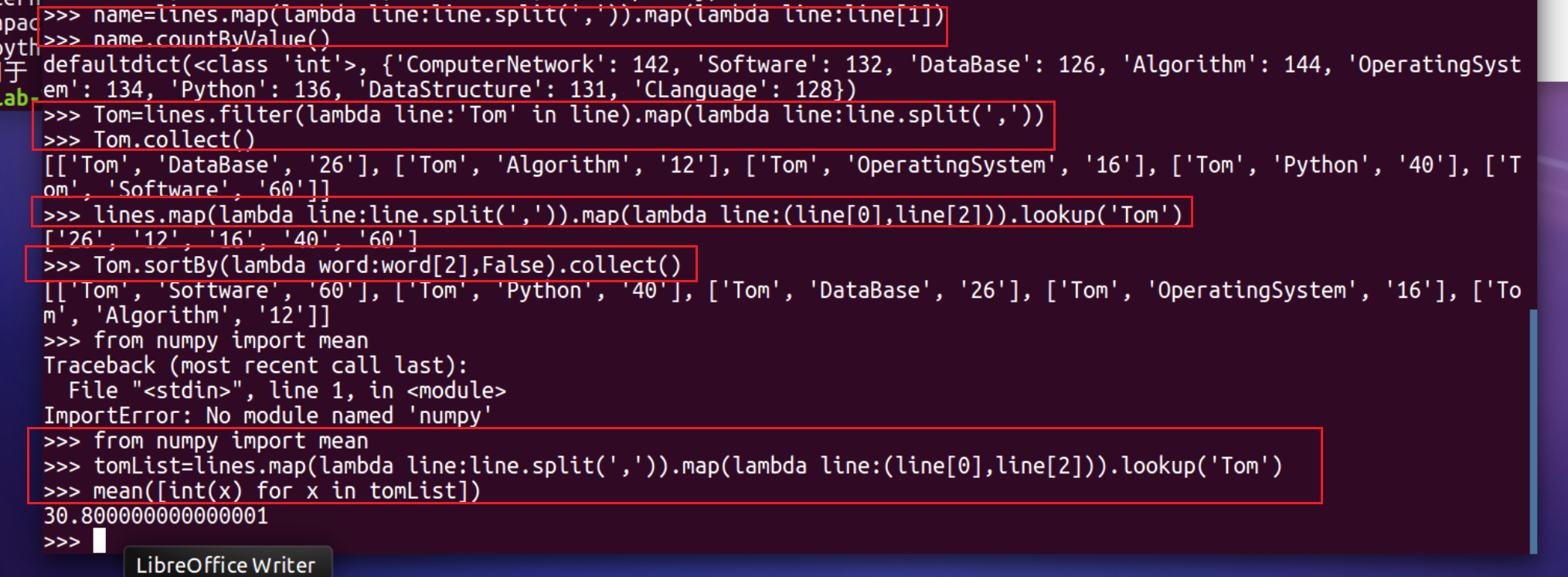

How many students take each course? map(), countByValue()

name=lines.map(lambda line:line.split(',')).map(lambda line:line[1])

name.countByValue()
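The four counts above can be checked against a small plain-Python model. The records are made up; they only assume the name,course,score layout that the split(',') calls imply.

```python
from collections import Counter

# Made-up records in the assumed "name,course,score" layout.
records = [
    "Tom,DataBase,80",
    "Tom,Algorithm,50",
    "Jim,DataBase,90",
    "Jim,Python,62",
    "Tom,Python,71",
]
fields = [r.split(",") for r in records]

num_students = len({f[0] for f in fields})           # map + distinct + count
num_courses = len({f[1] for f in fields})            # same, on the course column
courses_per_student = Counter(f[0] for f in fields)  # countByKey on (name, ...) pairs
students_per_course = Counter(f[1] for f in fields)  # countByValue on course names

print(num_students, num_courses)   # 2 3
print(dict(courses_per_student))   # {'Tom': 3, 'Jim': 2}
print(dict(students_per_course))   # {'DataBase': 2, 'Algorithm': 1, 'Python': 2}
```

Like countByKey() and countByValue(), the Counter results are ordinary local dictionaries rather than distributed collections.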

How many courses did Tom take, and what is the score for each? filter(), map() (result is an RDD)

Tom=lines.filter(lambda line:line.split(',')[0]=='Tom').map(lambda line:line.split(','))

Tom.collect()

How many courses did Tom take, and what is the score for each? map(), lookup() (result is a list)

lines.map(lambda line:line.split(',')).map(lambda line:(line[0],line[2])).lookup('Tom')

Sort Tom's results by score. filter(), map(), sortBy()

Tom.sortBy(lambda x:int(x[2]),False).collect()

Tom's average score. map(), lookup(), mean()

from numpy import mean

tomList=lines.map(lambda line:line.split(',')).map(lambda line:(line[0],line[2])).lookup('Tom')

mean([int(x) for x in tomList])

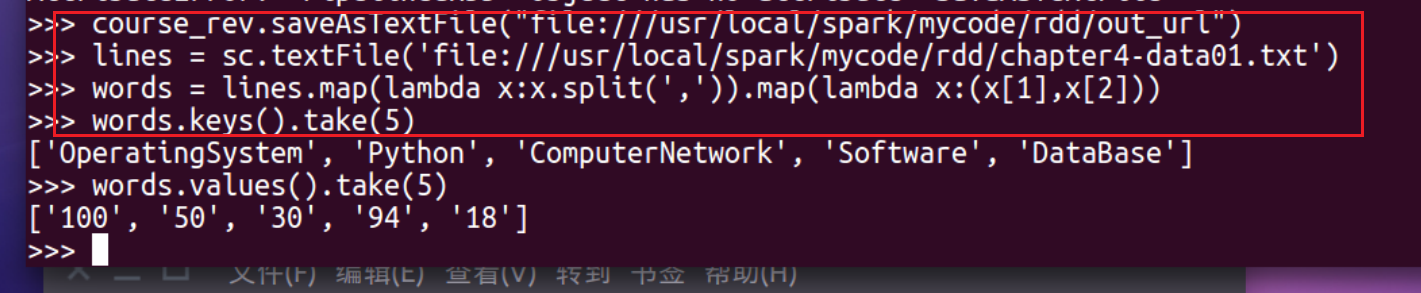
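Scores read from the text file are strings, so both the sort and the average need an int() conversion. A minimal plain-Python sketch with made-up scores shows the difference; it uses the standard library's statistics.mean as a stand-in for numpy's mean.

```python
from statistics import mean

# Made-up scores as they would come out of split(','): strings, not numbers.
tom_scores = ["80", "100", "9"]

# Sorting the raw strings compares character by character, which misorders the numbers.
as_text = sorted(tom_scores, reverse=True)

# Converting to int first gives the intended numeric order and a usable average.
as_numbers = sorted((int(s) for s in tom_scores), reverse=True)
average = mean(int(s) for s in tom_scores)

print(as_text)     # ['9', '80', '100']
print(as_numbers)  # [100, 80, 9]
print(average)     # 63
```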

Generate a (course, score) RDD and inspect keys(), values()


words = lines.map(lambda x:x.split(',')).map(lambda x:(x[1],x[2]))

words.keys().take(5)

words.values().take(5)

Add 5 points to every score. mapValues(func)

words = words.map(lambda x:(x[0],int(x[1])))

words.mapValues(lambda x:x+5).foreach(print)

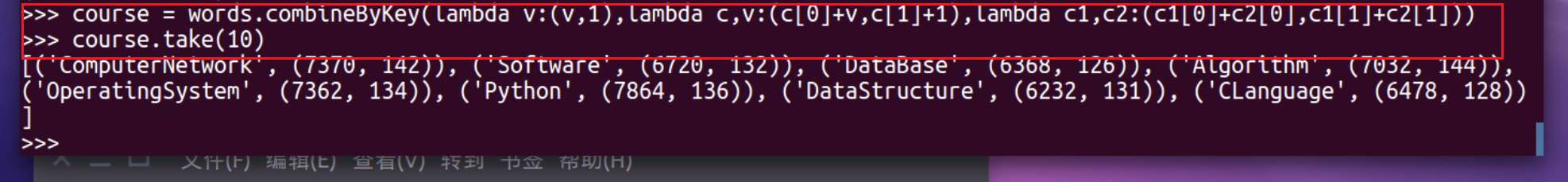

For each course, find the number of students enrolled and the total of all their scores. combineByKey()

course = words.combineByKey(lambda v:(v,1),lambda c,v:(c[0]+v,c[1]+1),lambda c1,c2:(c1[0]+c2[0],c1[1]+c2[1]))

course.take(10)

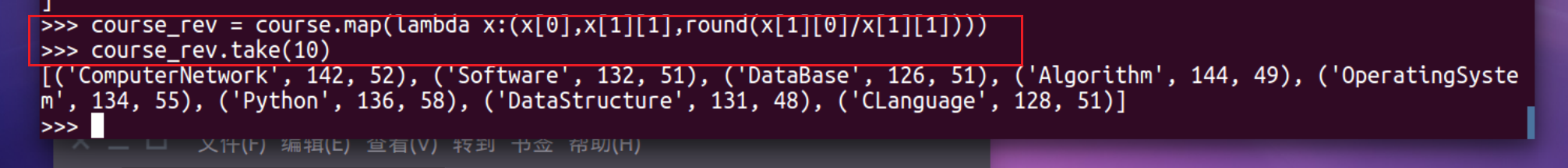
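The roles of the three functions passed to combineByKey() can be traced with a plain-Python model that treats the data as a list of partitions. The helper combine_by_key, the two partitions, and the scores are illustrative assumptions; Spark's real execution is distributed and more involved.

```python
def combine_by_key(partitions, create_combiner, merge_value, merge_combiners):
    """Plain-Python model of combineByKey over a list of partitions."""
    per_partition = []
    for part in partitions:
        acc = {}
        for key, value in part:
            if key in acc:
                # Key already seen in this partition: fold the value into its combiner.
                acc[key] = merge_value(acc[key], value)
            else:
                # First value for this key in this partition: build a combiner.
                acc[key] = create_combiner(value)
        per_partition.append(acc)

    # Merge the per-partition combiners key by key.
    result = {}
    for acc in per_partition:
        for key, comb in acc.items():
            result[key] = merge_combiners(result[key], comb) if key in result else comb
    return result

# Made-up (course, score) pairs split across two partitions.
partitions = [
    [("DataBase", 80), ("Python", 62), ("DataBase", 90)],
    [("Python", 71), ("Algorithm", 50), ("DataBase", 70)],
]
course = combine_by_key(
    partitions,
    lambda v: (v, 1),                         # score -> (total, count)
    lambda c, v: (c[0] + v, c[1] + 1),        # add one more score
    lambda c1, c2: (c1[0] + c2[0], c1[1] + c2[1]),  # merge two (total, count) pairs
)
print(course)  # {'DataBase': (240, 3), 'Python': (133, 2), 'Algorithm': (50, 1)}
```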

For each course, find the number of students enrolled and the average score, rounded to 2 decimal places. map(), round()

course_rev = course.map(lambda x:(x[0],x[1][1],round(x[1][0]/x[1][1],2)))

course_rev.take(10)

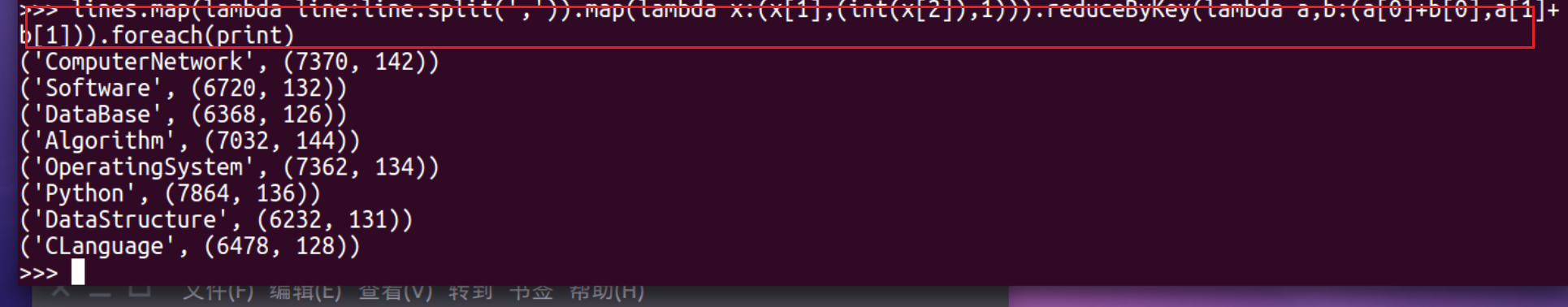
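The rounding step can be checked on made-up (course, (total, count)) pairs in plain Python. Without a second argument, round() returns a whole number; passing 2 keeps two decimal places. The sketch assumes Python 3, where / is true division.

```python
# Made-up (course, (total, count)) pairs standing in for the combineByKey() result.
course = [("DataBase", (240, 3)), ("Python", (200, 3)), ("Algorithm", (50, 1))]

# (course, number of students, average rounded to 2 decimal places)
course_rev = [(name, count, round(total / count, 2)) for name, (total, count) in course]

print(course_rev)         # [('DataBase', 3, 80.0), ('Python', 3, 66.67), ('Algorithm', 1, 50.0)]
print(round(200 / 3))     # 67
print(round(200 / 3, 2))  # 66.67
```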

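For each course, find the number of students enrolled and the average score. Implement it with reduceByKey() and compare it with combineByKey().

lines.map(lambda line:line.split(',')).map(lambda x:(x[1],(int(x[2]),1))).reduceByKey(lambda a,b:(a[0]+b[0],a[1]+b[1])).mapValues(lambda v:(v[1],round(v[0]/v[1],2))).foreach(print)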
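One way to frame the comparison: reduceByKey() needs the values to already have the shape of the result, which is why each score is first mapped to (score, 1), whereas combineByKey() builds that shape itself from the raw score. The plain-Python sketch below models a single partition only, so combineByKey's third function (merging combiners across partitions) is left out; the helper names and scores are illustrative assumptions.

```python
def combine_by_key(pairs, create_combiner, merge_value):
    """Single-partition model of combineByKey (no cross-partition merge)."""
    acc = {}
    for key, value in pairs:
        acc[key] = merge_value(acc[key], value) if key in acc else create_combiner(value)
    return acc

def reduce_by_key(pairs, func):
    """reduceByKey as a special case: the first value is its own combiner."""
    return combine_by_key(pairs, lambda v: v, func)

# Made-up (course, score) pairs.
scores = [("DataBase", 80), ("Python", 62), ("DataBase", 90)]

# combineByKey: raw scores in, (total, count) out.
via_combine = combine_by_key(scores, lambda v: (v, 1), lambda c, v: (c[0] + v, c[1] + 1))

# reduceByKey: values must be pre-mapped to (score, 1) so inputs and output share a type.
pre_mapped = [(k, (v, 1)) for k, v in scores]
via_reduce = reduce_by_key(pre_mapped, lambda a, b: (a[0] + b[0], a[1] + b[1]))

print(via_combine)                # {'DataBase': (170, 2), 'Python': (62, 1)}
print(via_combine == via_reduce)  # True
```

Both routes give the same (total, count) per course; the difference lies in where the conversion from a raw score to a (total, count) pair happens.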