[Keras] Python core API

A good open course, though it uses PyTorch.

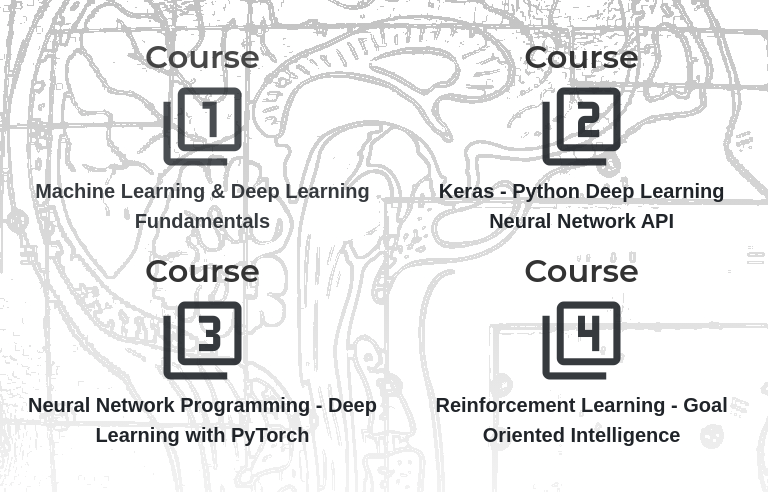

Course Lessons

- Keras Prerequisites

- Change Keras Backend To Theano

- Preprocess Data For Training With Keras

- Create An Artificial Neural Network With Keras

- Train An Artificial Neural Network With Keras

- Build A Validation Set With Keras

- Make Predictions With An Artificial Neural Network Using Keras

- Create Confusion Matrix For Predictions From Keras Model

- Save And Load A Keras Model

- Image Preparation For CNN Image Classifier With Keras

- Create And Train A CNN Image Classifier With Keras

- Make Predictions With A Keras CNN Image Classifier

- Fine-Tune VGG16 Image Classifier With Keras | Part 1: Build

- Fine-Tune VGG16 Image Classifier With Keras | Part 2: Train

- Fine-Tune VGG16 Image Classifier With Keras | Part 3: Predict

- Data Augmentation With Keras

- Mapping Keras Labels To Image Classes

- Reproducible Results With Keras

- Initializing And Accessing Bias With Keras

- Learnable Parameters ("Trainable Params") In A Keras Model

- Learnable Parameters ("Trainable Params") In A Keras Convolutional Neural Network

- Deploy Keras Neural Network To Flask Web Service | Part 1 - Overview

- Deploy Keras Neural Network To Flask Web Service | Part 2 - Build Your First Flask App

- Deploy Keras Neural Network To Flask Web Service | Part 3 - Send And Receive Data With Flask

- Deploy Keras Neural Network To Flask Web Service | Part 4 - Build A Front End Web Application

- Deploy Keras Neural Network To Flask Web Service | Part 5 - Host VGG16 Model With Flask

- Deploy Keras Neural Network To Flask Web Service | Part 6 - Build Web App To Send Images To VGG16

- Deploy Keras Neural Network To Flask Web Service | Part 7 - Visualizations With D3, DC, Crossfilter

- Deploy Keras Neural Network To Flask Web Service | Part 8 - Access Model From Powershell, Curl

- Deploy Keras Neural Network To Flask Web Service | Part 9 - Information Privacy, Data Protection

For the follow-up content, see: [Web CV] Retrain MobileNet for TensorFlow.js

Keras Prediction Models

1. Resources

Ref: keras-boston house-price regression

Ref: [Feature] Preprocessing tutorial

Ref: Keras 101: A simple Neural Network for House Pricing regression [very handy for regression]

2. Keras Basics

- Fundamentals

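```python
from tensorflow.keras.layers import Dense
from tensorflow.keras.models import Sequential

# 1 input feature -> 16 -> 32 -> 2-class softmax output
model = Sequential([
    Dense(units=16, input_shape=(1,), activation='relu'),
    Dense(units=32, activation='relu'),
    Dense(units=2, activation='softmax'),
])
```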

We can verify that the loaded model has the same architecture and weights as the saved model by calling summary() and get_weights() on the model.

```text
Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
dense (Dense)                (None, 16)                32
_________________________________________________________________
dense_1 (Dense)              (None, 32)                544
_________________________________________________________________
dense_2 (Dense)              (None, 2)                 66
=================================================================
Total params: 642
Trainable params: 642
Non-trainable params: 0
_________________________________________________________________
```
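A minimal sketch of that check, assuming TensorFlow 2.x with its bundled Keras and the `.keras` save format; the temporary path and the `dense_params` helper are hypothetical. It rebuilds the model above, saves and reloads it, compares `get_weights()` array by array, and re-derives the 642 parameters from the rule that a `Dense` layer has `inputs * units` weights plus `units` biases (1*16+16 = 32, 16*32+32 = 544, 32*2+2 = 66).

```python
import os
import tempfile

import numpy as np
from tensorflow import keras

# Same architecture as above; keras.Input replaces input_shape=(1,)
model = keras.Sequential([
    keras.Input(shape=(1,)),
    keras.layers.Dense(units=16, activation='relu'),
    keras.layers.Dense(units=32, activation='relu'),
    keras.layers.Dense(units=2, activation='softmax'),
])

# Save to a temporary file (hypothetical path) and load it back
path = os.path.join(tempfile.mkdtemp(), 'model.keras')
model.save(path)
loaded = keras.models.load_model(path)
loaded.summary()  # same layers, output shapes and parameter counts as above

# get_weights() returns one NumPy array per kernel and per bias
same_weights = all(
    np.array_equal(a, b)
    for a, b in zip(model.get_weights(), loaded.get_weights())
)

def dense_params(n_inputs, units):
    # one weight per (input, unit) pair plus one bias per unit
    return n_inputs * units + units

expected = dense_params(1, 16) + dense_params(16, 32) + dense_params(32, 2)
print(same_weights, expected, loaded.count_params())  # True 642 642
```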

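3. Sequential Model Examples

Ref: An in-depth look at the Sequential model and its methods in Keras

Before training a model, we need to configure the learning process. This is done with the compile method, which takes three arguments: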

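- Optimizer `optimizer`: the string identifier of an existing optimizer, such as `rmsprop` or `adagrad`, or an instance of the `Optimizer` class. See: optimizers.

- Loss function `loss`: the objective the model tries to minimize. It can be the string identifier of an existing loss function, such as `categorical_crossentropy` or `mse`, or an objective function itself. See: losses.

- Metrics `metrics`: for any classification problem you will want to set this to `metrics=['accuracy']`. A metric can be the string identifier of an existing metric or a custom metric function.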
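The three arguments above can be passed either as strings or as objects. A small sketch of both styles, assuming TensorFlow 2.x Keras; the 3-class model, the random data and the `mean_max_prob` metric are hypothetical and only illustrate the call signatures.

```python
import numpy as np
import tensorflow as tf
from tensorflow import keras

def build_model():
    # Small illustrative 3-class classifier (hypothetical, not from the course)
    return keras.Sequential([
        keras.Input(shape=(4,)),
        keras.layers.Dense(8, activation='relu'),
        keras.layers.Dense(3, activation='softmax'),
    ])

# 1) optimizer, loss and metric given as string identifiers
model_a = build_model()
model_a.compile(optimizer='rmsprop',
                loss='categorical_crossentropy',
                metrics=['accuracy'])

# 2) an Optimizer instance, a loss object and a custom metric function
def mean_max_prob(y_true, y_pred):
    # hypothetical metric: mean confidence of the top-scoring class
    return tf.reduce_max(y_pred, axis=-1)

model_b = build_model()
model_b.compile(optimizer=keras.optimizers.Adagrad(learning_rate=0.01),
                loss=keras.losses.CategoricalCrossentropy(),
                metrics=['accuracy', mean_max_prob])

# Random one-hot data, only to show that both configurations train
rng = np.random.default_rng(0)
x = rng.normal(size=(64, 4)).astype('float32')
y = keras.utils.to_categorical(rng.integers(0, 3, size=64), num_classes=3)
hist_a = model_a.fit(x, y, epochs=1, verbose=0)
hist_b = model_b.fit(x, y, epochs=1, verbose=0)
print(sorted(hist_b.history))
```

A custom metric function is reported in the training history under its Python function name.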

- Dropout

Goto: http://localhost:8888/notebooks/10_dropout.ipynb

```python
from tensorflow import keras

class CustomModel(keras.Model):
    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        self.input_layer = keras.layers.Flatten(input_shape=(28, 28))
        self.hidden1 = keras.layers.Dense(200, activation='relu')
        self.hidden2 = keras.layers.Dense(100, activation='relu')
        self.hidden3 = keras.layers.Dense(60, activation='relu')
        self.output_layer = keras.layers.Dense(10, activation='softmax')
        # One Dropout layer can be reused everywhere: it has no weights
        self.dropout_layer = keras.layers.Dropout(rate=0.2)

    def call(self, inputs, training=None):
        # Forward `training` to every Dropout call so units are only dropped during fit()
        input_layer = self.input_layer(inputs)
        input_layer = self.dropout_layer(input_layer, training=training)

        hidden1 = self.hidden1(input_layer)
        hidden1 = self.dropout_layer(hidden1, training=training)

        hidden2 = self.hidden2(hidden1)
        hidden2 = self.dropout_layer(hidden2, training=training)

        hidden3 = self.hidden3(hidden2)
        hidden3 = self.dropout_layer(hidden3, training=training)

        output_layer = self.output_layer(hidden3)
        return output_layer
```
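The `training` argument matters because `Dropout` only drops units when `training=True`: `fit()` runs the model in training mode, while `predict()` and `evaluate()` run it in inference mode. A minimal sketch of that behaviour on a single layer, assuming TensorFlow 2.x Keras; the all-ones input is purely illustrative. Keras uses inverted dropout, so surviving values are scaled by 1 / (1 - rate) during training and nothing needs rescaling at inference.

```python
import numpy as np
from tensorflow import keras

dropout = keras.layers.Dropout(rate=0.2)
x = np.ones((1, 1000), dtype='float32')

# Training mode: about 20% of units are zeroed, survivors scaled by 1 / (1 - 0.2) = 1.25
y_train = np.asarray(dropout(x, training=True))
# Inference mode: the layer passes its input through unchanged
y_infer = np.asarray(dropout(x, training=False))

print((y_train == 0).mean())  # roughly 0.2; the exact value is random
```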

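```python
from tensorflow.keras.constraints import MaxNorm
from tensorflow.keras.layers import Dense, Dropout
from tensorflow.keras.models import Sequential
from tensorflow.keras.optimizers import SGD

# dropout in hidden layers with weight constraint
def create_model():
    # create model
    model = Sequential()
    model.add(Dense(60, input_dim=60, activation='relu', kernel_constraint=MaxNorm(3)))
    model.add(Dropout(0.2))
    model.add(Dense(30, activation='relu', kernel_constraint=MaxNorm(3)))
    model.add(Dropout(0.2))
    model.add(Dense(1, activation='sigmoid'))
    # Compile model (`lr` is deprecated in favour of `learning_rate`)
    sgd = SGD(learning_rate=0.1, momentum=0.9)
    model.compile(loss='binary_crossentropy', optimizer=sgd, metrics=['accuracy'])
    return model
```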
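`MaxNorm(3)` caps the L2 norm of each unit's incoming weight vector at 3 after every weight update; with the default `axis=0` that is each column of a `Dense` kernel of shape `(input_dim, units)`. A small sketch applying the constraint directly to a hand-picked 2x2 matrix, assuming TensorFlow 2.x Keras: a column whose norm exceeds the cap is rescaled, the others are left (essentially) unchanged.

```python
import numpy as np
import tensorflow as tf
from tensorflow import keras

constraint = keras.constraints.MaxNorm(max_value=3, axis=0)

# Column norms: sqrt(3^2 + 4^2) = 5 and sqrt(0.6^2 + 0.8^2) = 1
w = tf.constant([[3.0, 0.6],
                 [4.0, 0.8]])
w_new = np.asarray(constraint(w))

# First column rescaled by 3/5 -> [1.8, 2.4]; second column already within the cap
print(np.round(w_new, 4))
```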

- Batch Normalisation

Goto: http://localhost:8888/notebooks/04_batch_normalisation.ipynb

```python
from tensorflow import keras

# Placing batch normalization layers before the activation layers
model = keras.models.Sequential([
    keras.layers.Flatten(input_shape=[28, 28]),
    keras.layers.Dense(300, use_bias=False),
    keras.layers.BatchNormalization(),
    keras.layers.Activation(keras.activations.relu),
    keras.layers.Dense(200, use_bias=False),
    keras.layers.BatchNormalization(),
    keras.layers.Activation(keras.activations.relu),
    keras.layers.Dense(100, use_bias=False),
    keras.layers.BatchNormalization(),
    keras.layers.Activation(keras.activations.relu),
    keras.layers.Dense(10, activation=keras.activations.softmax),
])
```
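In this layout each hidden `Dense` layer drops its bias (`use_bias=False`) because the `beta` offset learned by the following `BatchNormalization` layer plays the same role. A minimal sketch of what one such layer does, assuming TensorFlow 2.x Keras and synthetic data: in training mode it standardises every feature with the current batch's mean and variance, and it holds two trainable weights (`gamma`, `beta`) plus two non-trainable moving statistics used at inference.

```python
import numpy as np
from tensorflow import keras

bn = keras.layers.BatchNormalization()  # defaults: momentum=0.99, epsilon=1e-3
rng = np.random.default_rng(0)
# Synthetic batch: 256 samples, 4 features with mean 5 and std 2
x = rng.normal(loc=5.0, scale=2.0, size=(256, 4)).astype('float32')

# training=True normalises with this batch's statistics
y = np.asarray(bn(x, training=True))

print(y.mean(axis=0).round(3), y.std(axis=0).round(3))
print(len(bn.trainable_weights), len(bn.non_trainable_weights))
```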

/* implement */

浙公网安备 33010602011771号
