【AI Study Notes 9】 MNIST Recognition Using a CNN or MLP in PyTorch


I. Recognizing MNIST with a CNN

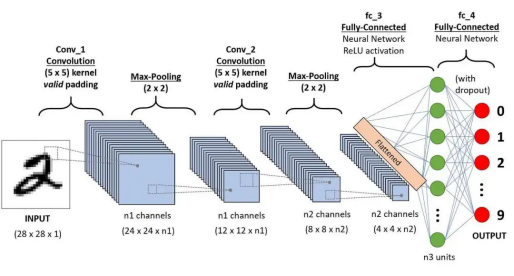

As shown in the figure, the CNN consists of 2 convolutional layers, 2 pooling layers, and 1 fully connected (FC) layer, with a ReLU activation after each convolution.【1】
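The layer sizes follow from the standard output-size formula for convolution and pooling, out = floor((in + 2 × padding − kernel) / stride) + 1. The plain-Python sketch below traces a 28×28 input through both conv/pool stages, assuming the 5×5 kernels (stride 1, no padding) and 2×2 max pools (stride 2) used in the code of the next section; the helper names `out_size` and `trace_cnn` are hypothetical. It shows where the 20 × 4 × 4 = 320 inputs of the fully connected layer come from.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a Conv2d/MaxPool2d layer on a square input."""
    return (size + 2 * padding - kernel) // stride + 1

def trace_cnn(size=28):
    """Spatial size after each layer of the CNN (input, conv1, pool1, conv2, pool2)."""
    sizes = [size]
    size = out_size(size, kernel=5)            # conv1: 5x5 kernel, stride 1, no padding
    sizes.append(size)
    size = out_size(size, kernel=2, stride=2)  # max pool: 2x2 window, stride 2
    sizes.append(size)
    size = out_size(size, kernel=5)            # conv2: 5x5 kernel, stride 1, no padding
    sizes.append(size)
    size = out_size(size, kernel=2, stride=2)  # max pool: 2x2 window, stride 2
    sizes.append(size)
    return sizes

sizes = trace_cnn()
print(sizes)                 # [28, 24, 12, 8, 4]
print(20 * sizes[-1] ** 2)   # 320 features (20 channels x 4 x 4) enter the FC layer
```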

II. Code for Recognizing MNIST with a CNN 【2】【3】【4】【5】

```python
# load lib: load the required libraries
import torch
from torchvision import transforms
from torchvision import datasets
from torch.utils.data import DataLoader
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
import matplotlib.pyplot as plt

# hyperparameter
Batch_Size = 64        # number of samples per batch
Epochs = 100           # number of passes over the training set
Learning_Rate = 0.01   # learning rate

# prepare dataset: convert images to tensors, then normalize with the MNIST mean (0.1307) and std (0.3081)
transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1307,), (0.3081,))])

# read mnist dataset (downloaded to ./dataset/mnist/ on the first run)
train_dataset = datasets.MNIST(root='./dataset/mnist/', train=True, download=True, transform=transform)
train_loader = DataLoader(train_dataset, shuffle=True, batch_size=Batch_Size)   # loader for the MNIST handwritten-digit training set
test_dataset = datasets.MNIST(root='./dataset/mnist/', train=False, download=True, transform=transform)
test_loader = DataLoader(test_dataset, shuffle=False, batch_size=Batch_Size)    # loader for the MNIST handwritten-digit test set
```
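`ToTensor()` scales 8-bit pixel values from 0..255 into [0, 1], and `Normalize((0.1307,), (0.3081,))` then applies (x − mean) / std per channel, where 0.1307 and 0.3081 are the widely used mean and standard deviation of the MNIST training pixels after that scaling. A plain-Python sketch of the arithmetic for a single pixel (the function name `normalize_pixel` is hypothetical; the real transforms operate on whole tensors):

```python
MEAN, STD = 0.1307, 0.3081  # MNIST statistics used in the transform above

def normalize_pixel(raw):
    """Mimic ToTensor() followed by Normalize(MEAN, STD) for one 8-bit pixel."""
    x = raw / 255.0           # ToTensor: uint8 0..255 -> float 0.0..1.0
    return (x - MEAN) / STD   # Normalize: (x - mean) / std

for raw in (0, 128, 255):
    print(raw, round(normalize_pixel(raw), 4))
```

Black background pixels therefore map to a small negative value and white strokes to a value near 2.8, so the inputs are roughly centered around zero.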

```python
# design model using class
class CNN(torch.nn.Module):
    def __init__(self):
        super(CNN, self).__init__()
        self.conv1 = torch.nn.Sequential(   # input: grayscale image (1, 28, 28)
            # Convolution: 1 input channel, 10 output channels, 5x5 kernel, stride 1, no padding -> (10, 24, 24)
            torch.nn.Conv2d(in_channels=1, out_channels=10, kernel_size=5, stride=1, padding=0),
            torch.nn.ReLU(),                # ReLU activation
            torch.nn.MaxPool2d(2),          # max pooling, 2x2 window, stride 2 -> (10, 12, 12)
        )
        self.conv2 = torch.nn.Sequential(
            torch.nn.Conv2d(in_channels=10, out_channels=20, kernel_size=5, stride=1, padding=0),  # -> (20, 8, 8)
            torch.nn.ReLU(),
            torch.nn.MaxPool2d(2),          # -> (20, 4, 4)
        )
        self.fc = torch.nn.Linear(20 * 4 * 4, 10)  # flattened features -> 10 classes (digits 0-9)

    # Forward pass
    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = x.view(x.size(0), -1)   # flatten to (batch, 320)
        output = self.fc(x)
        return output
```
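As a rough size check, the learnable parameters of this CNN can be counted by hand: a `Conv2d` layer has out_channels × in_channels × k × k weights plus out_channels biases, a `Linear` layer has in × out weights plus out biases, and ReLU and max pooling have none. A plain-Python sketch (the helper names are hypothetical); with PyTorch installed, `sum(p.numel() for p in model.parameters())` should report the same total.

```python
def conv2d_params(in_ch, out_ch, k):
    """Weights (out_ch * in_ch * k * k) plus one bias per output channel."""
    return out_ch * in_ch * k * k + out_ch

def linear_params(n_in, n_out):
    """Weight matrix (n_out * n_in) plus one bias per output unit."""
    return n_out * n_in + n_out

cnn_layers = {
    "conv1": conv2d_params(1, 10, 5),     # 260
    "conv2": conv2d_params(10, 20, 5),    # 5020
    "fc": linear_params(20 * 4 * 4, 10),  # 3210
}
total = sum(cnn_layers.values())
print(cnn_layers, total)                  # total is 8490
```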

```python
model = CNN()
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")  # use the GPU if available, otherwise the CPU
print(device)
model.to(device)

# construct loss and optimizer
criterion = torch.nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=Learning_Rate, momentum=0.5)
```
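`CrossEntropyLoss` expects raw, unnormalized scores (logits), which is why `forward` returns the output of `self.fc` without a softmax: the loss applies log-softmax internally, takes the negative log-probability of the target class, and by default (`reduction='mean'`) averages over the batch. The sketch below reproduces that formula in plain Python for illustration only; it is not PyTorch's implementation, and the function names are hypothetical.

```python
import math

def cross_entropy(logits, target):
    """-log(softmax(logits)[target]) for one sample, computed stably."""
    m = max(logits)  # subtract the max before exp() to avoid overflow
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

def batch_cross_entropy(batch_logits, targets):
    """Mean loss over a batch, like the default reduction='mean'."""
    losses = [cross_entropy(row, t) for row, t in zip(batch_logits, targets)]
    return sum(losses) / len(losses)

# Two samples with 3 classes each (toy numbers, not real MNIST outputs)
print(batch_cross_entropy([[2.0, 0.5, -1.0], [0.0, 0.0, 0.0]], [0, 2]))
```

With all-equal logits over 3 classes the loss is log 3, i.e. the model is maximally unsure; a confident correct prediction drives it toward 0.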

```python
# training cycle forward, backward, update
def train(epoch):
    running_loss = 0.0
    for batch_idx, data in enumerate(train_loader, 0):
        inputs, target = data
        inputs, target = inputs.to(device), target.to(device)
        optimizer.zero_grad()

        # Forward pass
        outputs = model(inputs)
        loss = criterion(outputs, target)

        # Backward pass, then parameter update
        loss.backward()
        optimizer.step()

        running_loss += loss.item()
        if batch_idx % 300 == 299:   # print the average loss every 300 batches
            print('[%d, %5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))
            running_loss = 0.0
```
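Inside the loop, `optimizer.zero_grad()` clears the gradients left over from the previous batch (PyTorch accumulates gradients by default), `loss.backward()` computes new ones, and `optimizer.step()` applies the SGD update. With `momentum=0.5` and PyTorch's defaults (`dampening=0`, no Nesterov, no weight decay), SGD keeps a velocity buffer v ← μ·v + g and sets w ← w − lr·v, where the buffer simply starts as g on the first step. The plain-Python sketch below applies that rule to a toy one-parameter loss f(w) = w²; the toy loss and the function name `sgd_momentum` are assumptions for illustration.

```python
def sgd_momentum(w, grad_fn, lr=0.01, momentum=0.5, steps=3):
    """Apply `steps` momentum-SGD updates in the style of torch.optim.SGD."""
    velocity = None
    history = [w]
    for _ in range(steps):
        g = grad_fn(w)                  # the role of loss.backward()
        if velocity is None:
            velocity = g                # first step: buffer starts as the gradient
        else:
            velocity = momentum * velocity + g
        w = w - lr * velocity           # the role of optimizer.step()
        history.append(w)
    return history

# Toy loss f(w) = w**2 has gradient 2*w; its minimum is at w = 0.
print(sgd_momentum(1.0, lambda w: 2 * w))
```

Setting `momentum=0.0` reduces this to plain gradient descent, which makes the effect of the velocity term easy to compare.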

```python
def test():
    correct = 0
    total = 0
    with torch.no_grad():   # gradients are not needed for evaluation
        for data in test_loader:
            images, labels = data
            images, labels = images.to(device), labels.to(device)
            outputs = model(images)
            # torch.max with dim returns (values, indices); the index of the largest logit is the predicted class
            _, predicted = torch.max(outputs.data, dim=1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    print('accuracy on test set: %d %% ' % (100 * correct / total))
    return correct / total
```
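`torch.max(outputs.data, dim=1)` returns a pair `(values, indices)`, so the predicted classes are its second element, e.g. `_, predicted = torch.max(outputs.data, dim=1)`; accuracy is then the fraction of predictions equal to the labels. The same bookkeeping in plain Python, with hypothetical helper names and toy logits:

```python
def predict(batch_logits):
    """Index of the largest score in each row (the `indices` part of torch.max(..., dim=1))."""
    return [max(range(len(row)), key=row.__getitem__) for row in batch_logits]

def accuracy(batch_logits, labels):
    """Fraction of rows whose predicted class matches the label."""
    predicted = predict(batch_logits)
    correct = sum(p == y for p, y in zip(predicted, labels))
    return correct / len(labels)

logits = [[0.1, 2.3, -0.5], [1.5, 0.2, 0.3], [0.0, 0.1, 0.9]]
print(predict(logits), accuracy(logits, [1, 0, 0]))
```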

```python
if __name__ == '__main__':
    epoch_list = []
    acc_list = []
    for epoch in range(Epochs):
        train(epoch)
        acc = test()
        epoch_list.append(epoch)
        acc_list.append(acc)

    plt.plot(epoch_list, acc_list)
    plt.ylabel('accuracy')
    plt.xlabel('epoch')
    plt.show()
```

Output:

```text
cuda:0
[1, 300] loss: 0.588
[1, 600] loss: 0.182
[1, 900] loss: 0.135
accuracy on test set: 97 %
...
...
[100, 300] loss: 0.000
[100, 600] loss: 0.001
[100, 900] loss: 0.001
accuracy on test set: 99 %
```
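The loss lines appear only at batches 300, 600 and 900 because `train()` prints every 300 batches, and one epoch over the 60,000 MNIST training images with `Batch_Size = 64` has 938 batches: 937 full ones plus a final batch of 32 images, since `DataLoader` keeps the incomplete last batch unless `drop_last=True`. A quick check of that arithmetic (the helper name `batches_per_epoch` is hypothetical):

```python
import math

def batches_per_epoch(n_samples, batch_size, drop_last=False):
    """Number of batches a DataLoader yields for one pass over the data."""
    if drop_last:
        return n_samples // batch_size
    return math.ceil(n_samples / batch_size)

n_train = 60000
steps = batches_per_epoch(n_train, 64)
print(steps)                                              # 938
print([b for b in range(1, steps + 1) if b % 300 == 0])   # [300, 600, 900]
print(n_train - (steps - 1) * 64)                         # 32 images in the last batch
```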

III. Code for Recognizing MNIST with an MLP 【6】

To use an MLP instead, replace the `# design model using class` block with the code below, and change `model = CNN()` to `model = FC()`:

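```python
# Define the network structure
class FC(torch.nn.Module):
    def __init__(self):
        super(FC, self).__init__()
        self.l1 = torch.nn.Linear(784, 15)   # 28*28 = 784 input pixels -> 15 hidden units
        self.l2 = torch.nn.Linear(15, 10)    # 15 hidden units -> 10 classes
        self.relu = torch.nn.ReLU()

    # Forward pass
    def forward(self, x):
        x = x.view(-1, 784)   # flatten each image into a 784-dim vector
        x = self.l1(x)
        x = self.relu(x)
        x = self.l2(x)
        return x
```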
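For comparison with the CNN, the MLP's parameters can be counted the same way: each `Linear(n_in, n_out)` layer contributes n_in × n_out weights plus n_out biases. Even with only 15 hidden units, this network has 11,935 parameters, more than the CNN's 8,490, yet it reaches a lower test accuracy in the runs reported in this post (94% vs 99%). Plain-Python sketch; the helper name `linear_params` is hypothetical.

```python
def linear_params(n_in, n_out):
    """Weight matrix (n_out * n_in) plus one bias per output unit."""
    return n_out * n_in + n_out

mlp_layers = {
    "l1": linear_params(784, 15),   # 784 flattened pixels -> 15 hidden units
    "l2": linear_params(15, 10),    # 15 hidden units -> 10 class scores
}
print(mlp_layers, sum(mlp_layers.values()))
```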

Output:

```text
cuda:0
[1, 300] loss: 0.783
[1, 600] loss: 0.385
[1, 900] loss: 0.326
accuracy on test set: 91 %
...
...
[100, 300] loss: 0.087
[100, 600] loss: 0.088
[100, 900] loss: 0.095
accuracy on test set: 94 %
```

References:

【1】 架构师带你玩转AI, "Large Model Development: Understanding How CNNs Work (Convolution and Pooling)"
https://www.53ai.com/news/qianyanjishu/594.html

【2】 山山而川, "Handwritten Digit Recognition in Pytorch | MNIST Dataset (CNN Convolutional Neural Network)"
https://www.cnblogs.com/xinyangblog/p/16326476.html

【3】 月球背面, "Introduction to Deep Learning: CNN Fundamentals and Practice"
https://juejin.cn/post/7238627611265253434

【4】 Tom2Code, "MNIST Handwritten Digit Recognition with a CNN Written from Scratch"
https://cloud.tencent.com/developer/article/2216568

【5】 全栈程序员站长, "Implementing MNIST in Pytorch, Explained in Detail [Easy to Understand]"
https://cloud.tencent.com/developer/article/2055189

【6】 martin-wmx, "MNIST-pytorch"
https://github.com/martin-wmx/MNIST-pytorch/blob/master/FC/model.py

posted on 2025-02-23 18:45 JasonQiuStar

