1. TensorBoard

There are far too many pitfalls on Windows...

I still ran into some problems while starting TensorBoard. Here is a brief summary of the pitfalls I hit.

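1. I can't find the log file?!

Answer: the so-called log file is simply the file saved during training that records the detailed information about your training run.

The file name looks like this: events.out.tfevents.1493741531.DESKTOP-CJI9GBL

You only need to look for a file whose name starts with events.out.tfevents. The part after that is a timestamp followed by an identifier tied to your machine (its hostname), so you can ignore it.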
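If you are not sure where the training script wrote its logs, a small search can help. The following is a minimal sketch that assumes only the file-name prefix described above; the helper name `find_event_files` is made up for this example and is not part of TensorFlow.

```python
import os

# Prefix of TensorBoard event files, as described in the text above.
EVENT_PREFIX = "events.out.tfevents"


def find_event_files(root):
    """Return the sorted paths of all event files found under `root`."""
    matches = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if name.startswith(EVENT_PREFIX):
                matches.append(os.path.join(dirpath, name))
    return sorted(matches)


if __name__ == "__main__":
    # Search the current directory and everything below it.
    for path in find_event_files("."):
        print(path)
```

The directory that contains the printed file (or any parent of it) is what you would pass to `--logdir`.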

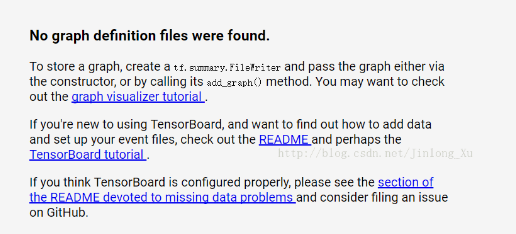

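2. TensorBoard opens, but nothing is displayed, as in the case below:

I have run into this myself. The page clearly opens, so why is the content wrong?

The main causes are:

1. The path is wrong. With a wrong path, following the steps above still opens TensorBoard, but it does not show the information I want.

2. The file is broken. An error occurred during training, so the training information cannot be displayed in TensorBoard.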
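Both causes can be checked roughly before starting TensorBoard. The sketch below is only a heuristic under my own assumptions: the function name `diagnose_logdir` and its return values are invented for this example, and treating a zero-byte event file as a sign of a failed run is a guess. It cannot detect a run that crashed after writing part of its data.

```python
import os


def diagnose_logdir(logdir):
    """Classify a log directory as 'missing', 'no_events', 'empty_events' or 'ok'."""
    # Cause 1: the path itself is wrong.
    if not os.path.isdir(logdir):
        return "missing"
    # Collect the size of every event file below the directory.
    sizes = [
        os.path.getsize(os.path.join(dirpath, name))
        for dirpath, _dirs, files in os.walk(logdir)
        for name in files
        if name.startswith("events.out.tfevents")
    ]
    # Still cause 1: the directory exists but holds no event files.
    if not sizes:
        return "no_events"
    # Possible cause 2: event files exist but nothing was written to them.
    if max(sizes) == 0:
        return "empty_events"
    return "ok"


if __name__ == "__main__":
    print(diagnose_logdir("."))
```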

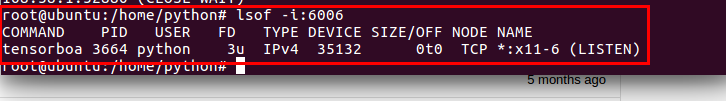

Check a specific port and kill the process using it

You can also use the lsof command:

lsof -i:8888

To shut down the program that is using this port, run kill with the corresponding PID:

kill -9 PID
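If lsof is not available, for example on Windows, a rough check from Python is possible. This is a minimal sketch that only tests whether something accepts TCP connections on the port; it does not report the PID, so it is not a replacement for lsof. The function name `port_in_use` is made up for this example.

```python
import socket


def port_in_use(port, host="127.0.0.1", timeout=0.5):
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an error code otherwise.
        return sock.connect_ex((host, port)) == 0


if __name__ == "__main__":
    # 8888 is the port used in the lsof example above.
    print(port_in_use(8888))
```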

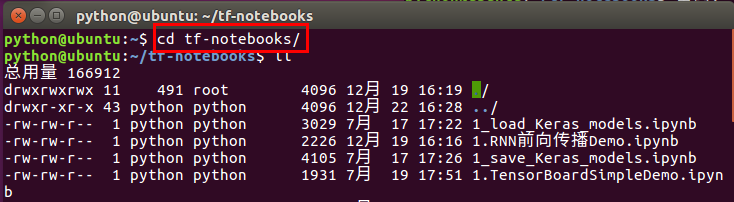

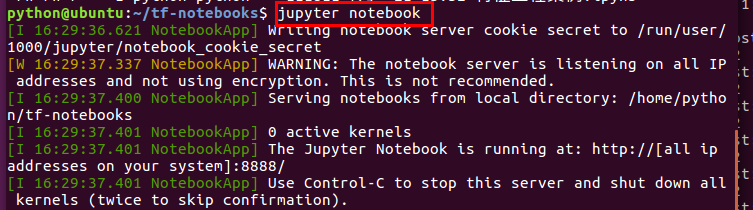

Start Jupyter Notebook

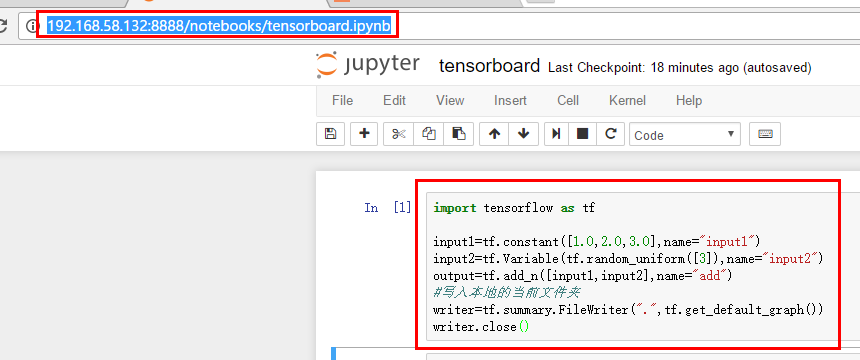

Write a demo by connecting remotely from Windows

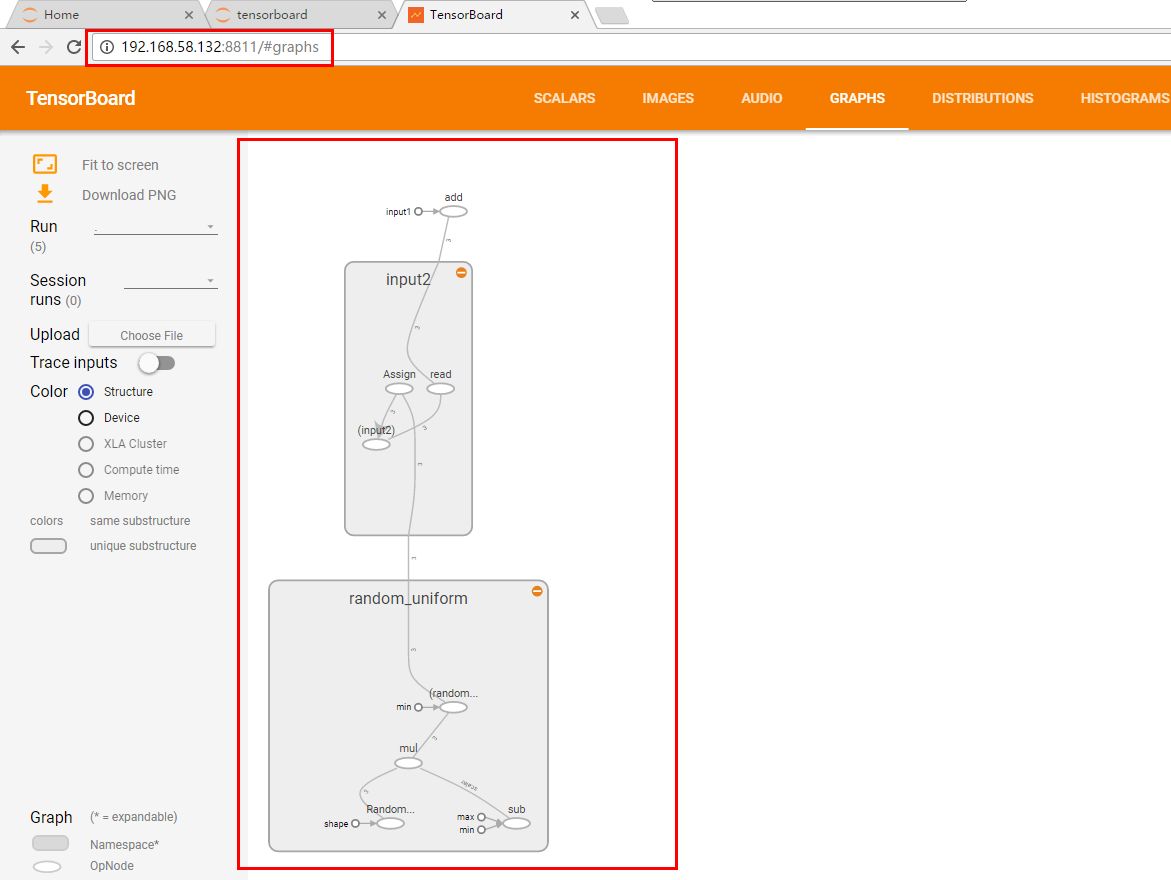

Start TensorBoard and access it through the web:

sudo tensorboard --logdir='.' --port=8811
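The same command can also be started from a Python script, which is handy when the training code and TensorBoard should be launched together. This is a minimal sketch: the helper names `tensorboard_command` and `launch_tensorboard` are invented for this example, and it assumes the `tensorboard` executable is on your PATH. I left out sudo on the assumption that it is not needed here, since ports above 1023 do not require root.

```python
import subprocess


def tensorboard_command(logdir=".", port=8811):
    """Build the argument list for the tensorboard call shown above."""
    return ["tensorboard", "--logdir", str(logdir), "--port", str(port)]


def launch_tensorboard(logdir=".", port=8811):
    """Start TensorBoard as a child process and return the Popen handle."""
    return subprocess.Popen(tensorboard_command(logdir, port))


if __name__ == "__main__":
    proc = launch_tensorboard(".", 8811)
    try:
        proc.wait()  # Keep running until TensorBoard exits.
    except KeyboardInterrupt:
        proc.terminate()  # Stop TensorBoard on Ctrl+C.
```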

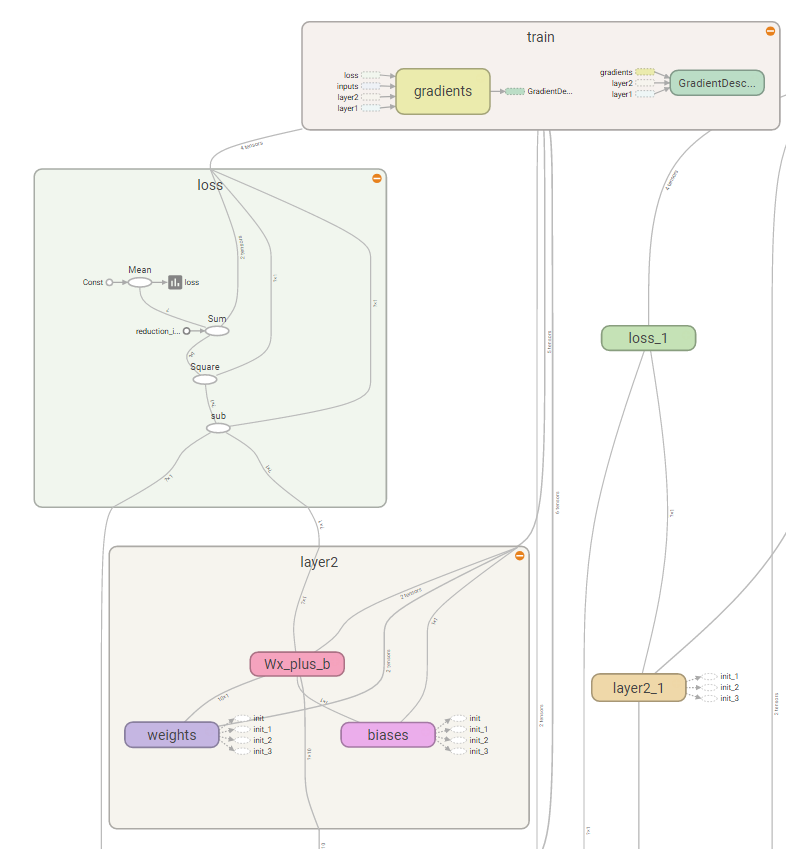

    """
    Please note, this code is only for python 3+. If you are using python 2+, please modify the code accordingly.
    """
    # -*- coding: utf8 -*-
    # Note: this uses the TensorFlow 1.x API (e.g. tf.random_normal);
    # in TensorFlow 2.x these names live under tf.compat.v1.
    import tensorflow as tf
    import numpy as np


    def add_layer(inputs, in_size, out_size, n_layer, activation_function=None):
        # add one more layer and return the output of this layer
        layer_name = 'layer%s' % n_layer
        with tf.name_scope(layer_name):
            with tf.name_scope('weights'):
                Weights = tf.Variable(tf.random_normal([in_size, out_size]), name='W')
                tf.summary.histogram(layer_name + '/weights', Weights)
            with tf.name_scope('biases'):
                biases = tf.Variable(tf.zeros([1, out_size]) + 0.1, name='b')
                tf.summary.histogram(layer_name + '/biases', biases)
            with tf.name_scope('Wx_plus_b'):
                Wx_plus_b = tf.add(tf.matmul(inputs, Weights), biases)
            if activation_function is None:
                outputs = Wx_plus_b
            else:
                outputs = activation_function(Wx_plus_b, )
            tf.summary.histogram(layer_name + '/outputs', outputs)
        return outputs

    # Make up some real data
    x_data = np.linspace(-1, 1, 300)[:, np.newaxis]
    noise = np.random.normal(0, 0.05, x_data.shape)
    y_data = np.square(x_data) - 0.5 + noise

    # define placeholder for inputs to network
    with tf.name_scope('inputs'):
        xs = tf.placeholder(tf.float32, [None, 1], name='x_input')
        ys = tf.placeholder(tf.float32, [None, 1], name='y_input')

    # add hidden layer
    l1 = add_layer(xs, 1, 10, n_layer=1, activation_function=tf.nn.relu)
    # add output layer
    prediction = add_layer(l1, 10, 1, n_layer=2, activation_function=None)

    # the error between prediction and real data
    with tf.name_scope('loss'):
        loss = tf.reduce_mean(tf.reduce_sum(tf.square(ys - prediction),
                                            reduction_indices=[1]))
        tf.summary.scalar('loss', loss)

    with tf.name_scope('train'):
        train_step = tf.train.GradientDescentOptimizer(0.1).minimize(loss)

    sess = tf.Session()
    merged = tf.summary.merge_all()
    writer = tf.summary.FileWriter("./tensorlogs/", sess.graph)
    init = tf.global_variables_initializer()
    sess.run(init)

The code below works as-is in Python 3; in Python 2 it needs to be changed.

    for i in range(1000):
        sess.run(train_step, feed_dict={xs: x_data, ys: y_data})
        if i % 50 == 0:
            result = sess.run(merged, feed_dict={xs: x_data, ys: y_data})
            writer.add_summary(result, i)

    # direct to the local dir and run this in terminal:
    # $ tensorboard --logdir tensorlogs

Result shown in the web page

Blog: http://www.cnblogs.com/jackchen-Net/

