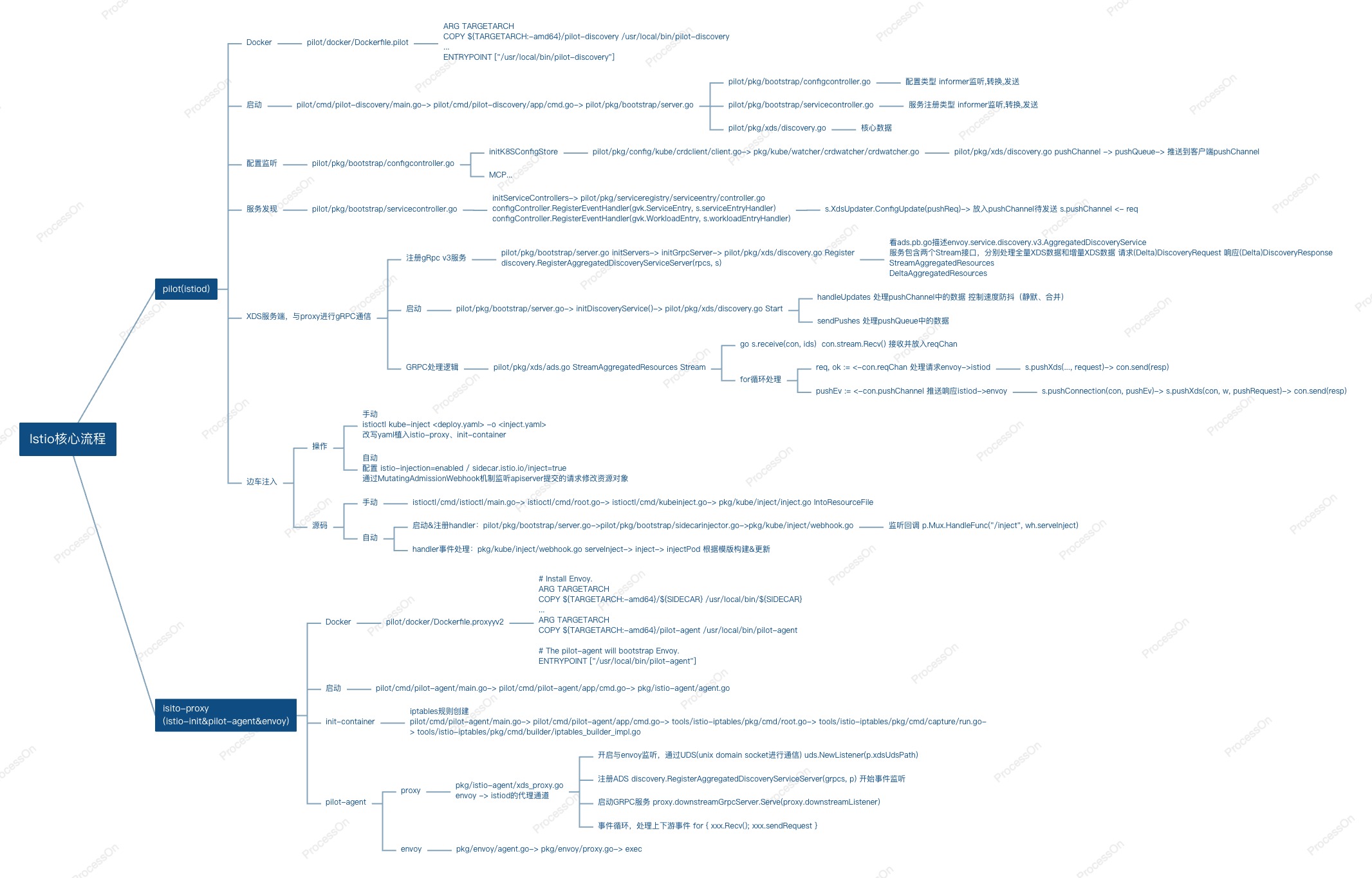

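External view

Physically, installing Istio on Kubernetes adds the istiod control-plane services and a set of new CRDs such as VirtualService, DestinationRule, Gateway, and ServiceEntry. Once sidecar injection is enabled, each pod gains an istio-init init container, which shows as completed after startup, and an istio-proxy container that runs alongside the application container.

Functionally, CRDs let us manage traffic in the cluster, Istio's mesh features let us build multi-cluster environments, EnvoyFilter lets us adjust configuration dynamically, and Envoy extensions can solve cross-cluster redis_cluster access.

In terms of modules, istiod is the control plane: it handles management, watches resources, and pushes configuration. istio-init generates the iptables rules that redirect traffic into Envoy. istio-proxy runs two processes. Envoy, a high-performance network sidecar written in C++, intercepts and processes traffic according to the configuration pushed by the control plane. The other process, istio-agent, maintains the gRPC connection to the control plane, requests and receives configuration over the xDS protocol, manages Envoy's lifecycle and certificates, and talks to Envoy over a Unix domain socket (UDS).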

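Code view

The pilot side mainly starts a gRPC server, watches the relevant resources through the Kubernetes informer mechanism, converts them into internal structures, debounces changes (by time window and by merging) to improve performance, and finally pushes the result to Envoy over gRPC. In addition, a webhook calls back into the injector when a pod is created and mutates the object to inject the sidecar.

The istio-proxy side mainly opens the gRPC channel, receives configuration, manages Envoy's lifecycle, and hot-reloads certificates.

Code detail excerpts

Pilot

Startup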

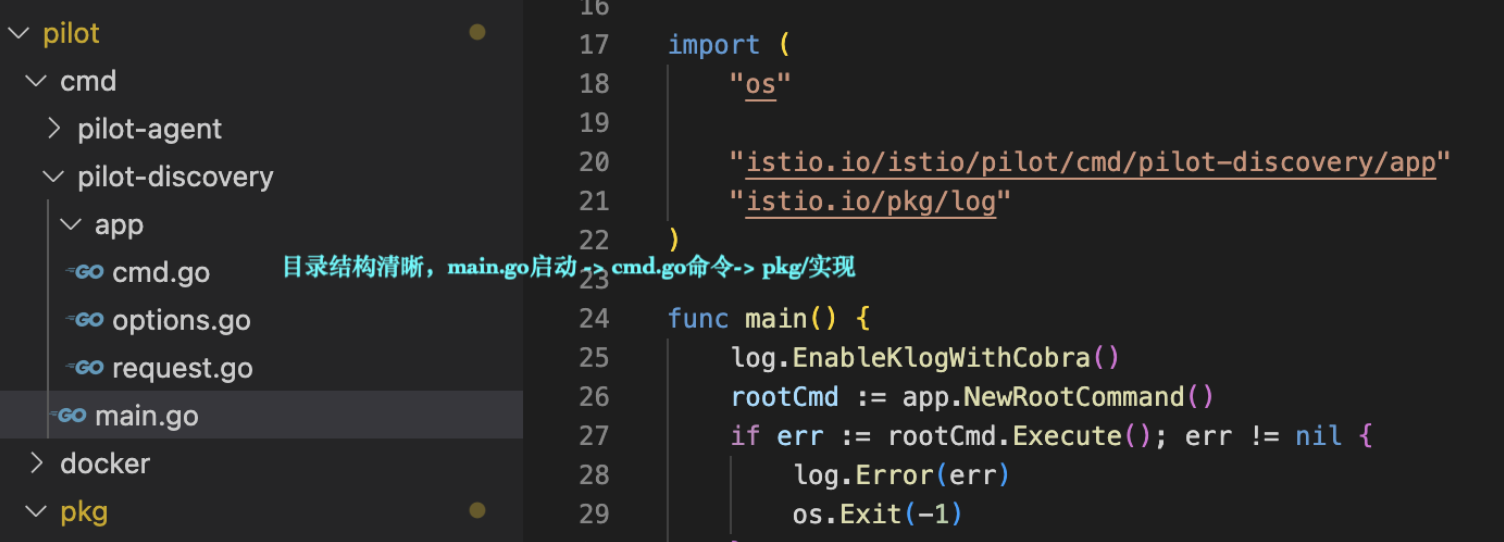

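The usual cloud-native layout: main.go → cmd (built on cobra, the standard command-line component in Kubernetes tooling) → pkg (main logic)

Basic data structures and conversion

There are three kinds of data structures: native Kubernetes objects, Istio CRD objects (both are created from YAML and stored in Kubernetes' etcd), and the xDS protocol data, that is LDS/RDS/CDS/EDS/SDS.

- The three kinds are inherently related, so they have to be converted and merged into one another.

- To speed up computation and pushes, the pipeline also caches and merges data along several dimensions.

- Because configPatches operations are supported, the generated objects also have to be adjusted dynamically.

Configuration center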

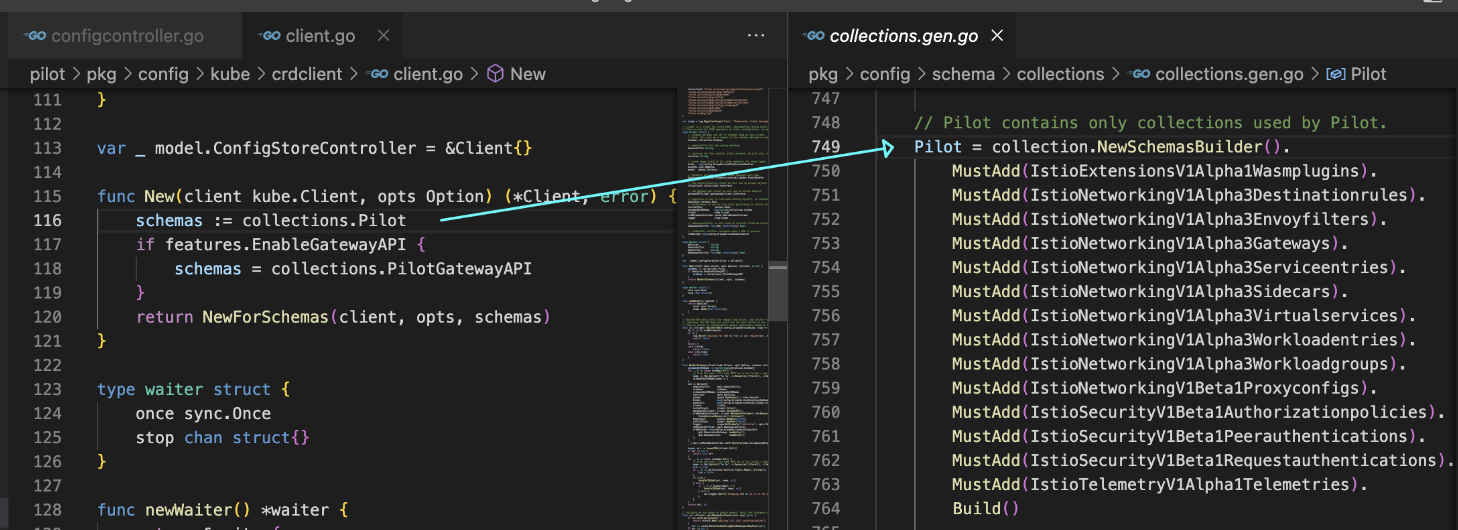

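Initialization of the config controller (the registry). Supported sources are files, Kubernetes, and MCP over xDS; the Nacos implementation of MCP over xDS is an example of integrating a third-party registry.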

s.initConfigController(args) makeKubeConfigController → pilot/pkg/config/kube/client.go

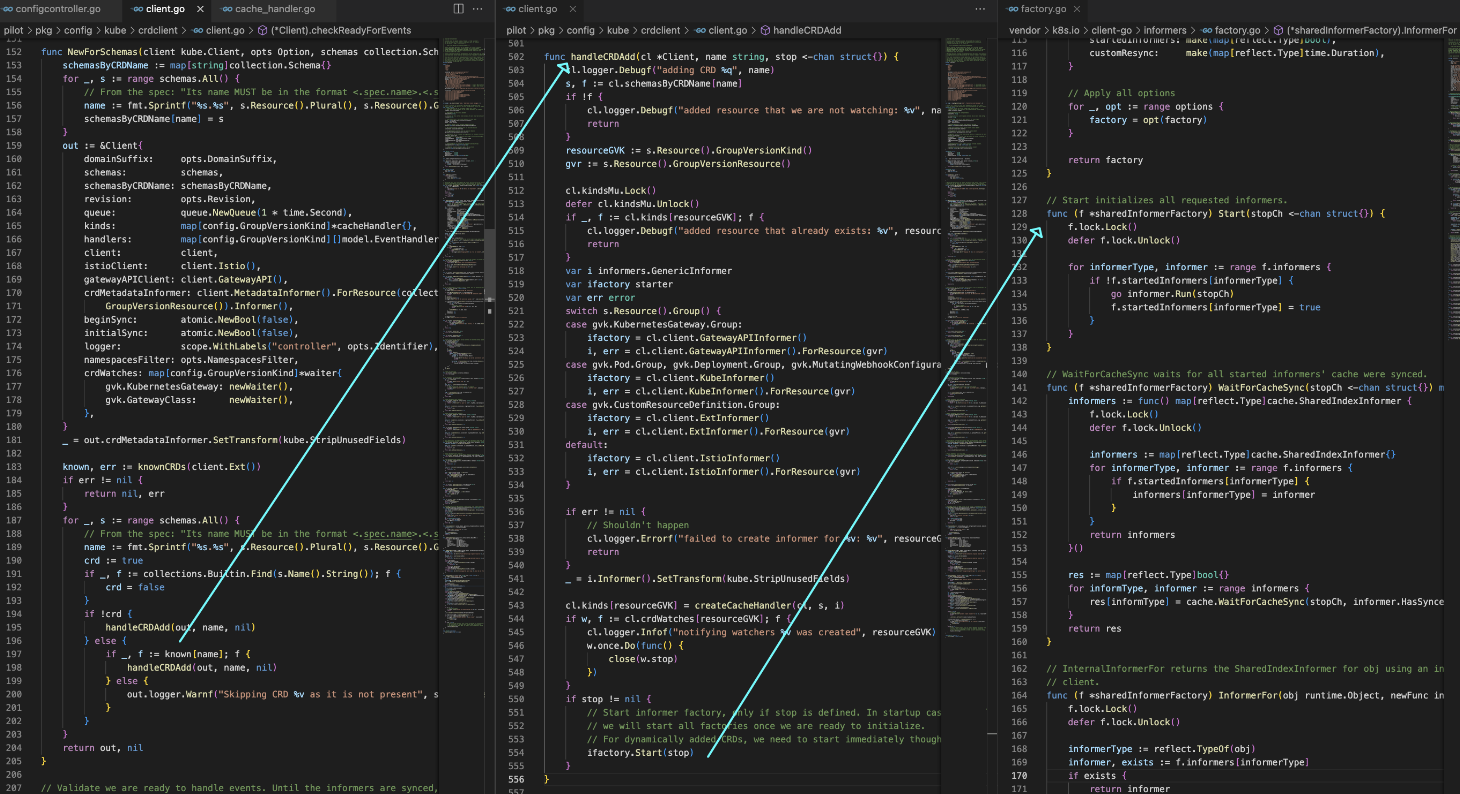

Object changes are watched through the informer mechanism.

The queue in the notification mechanism stores event tasks.

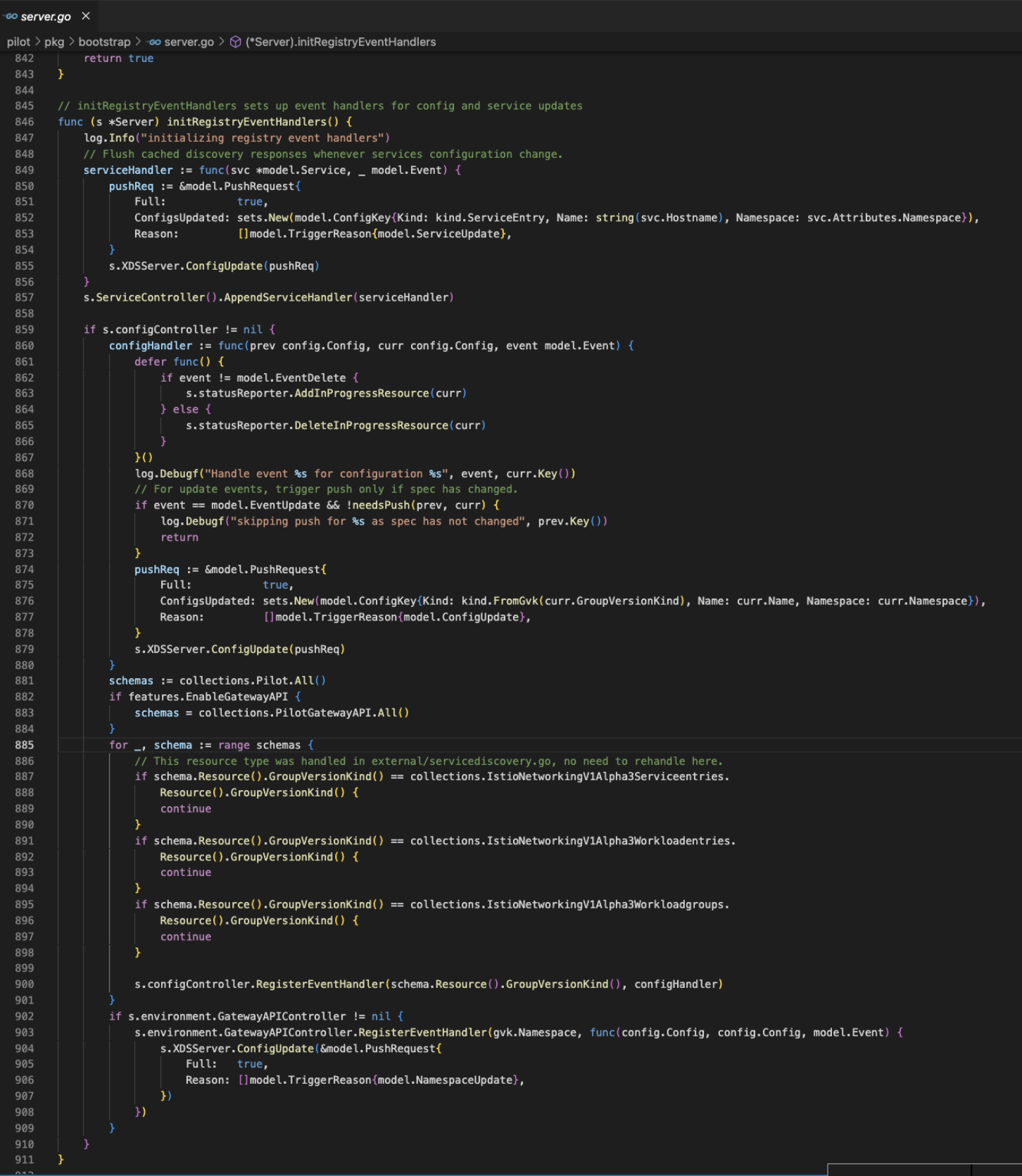

Register a handler; after an object changes, the update is sent to the data plane over xDS: s.XDSServer.ConfigUpdate(pushReq)

Interaction

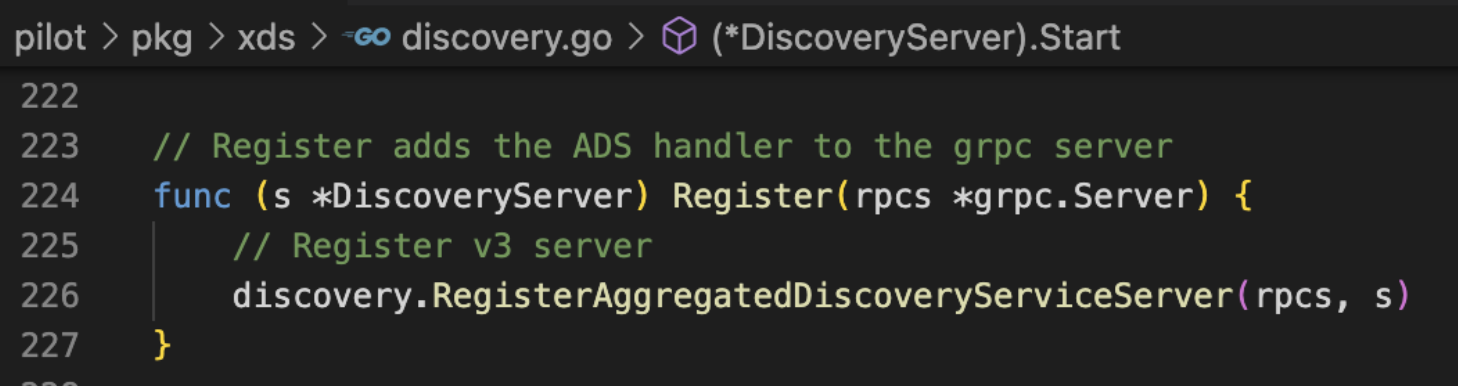

For the xDS protocol, the server implements AggregatedDiscoveryService and talks to Envoy over gRPC.

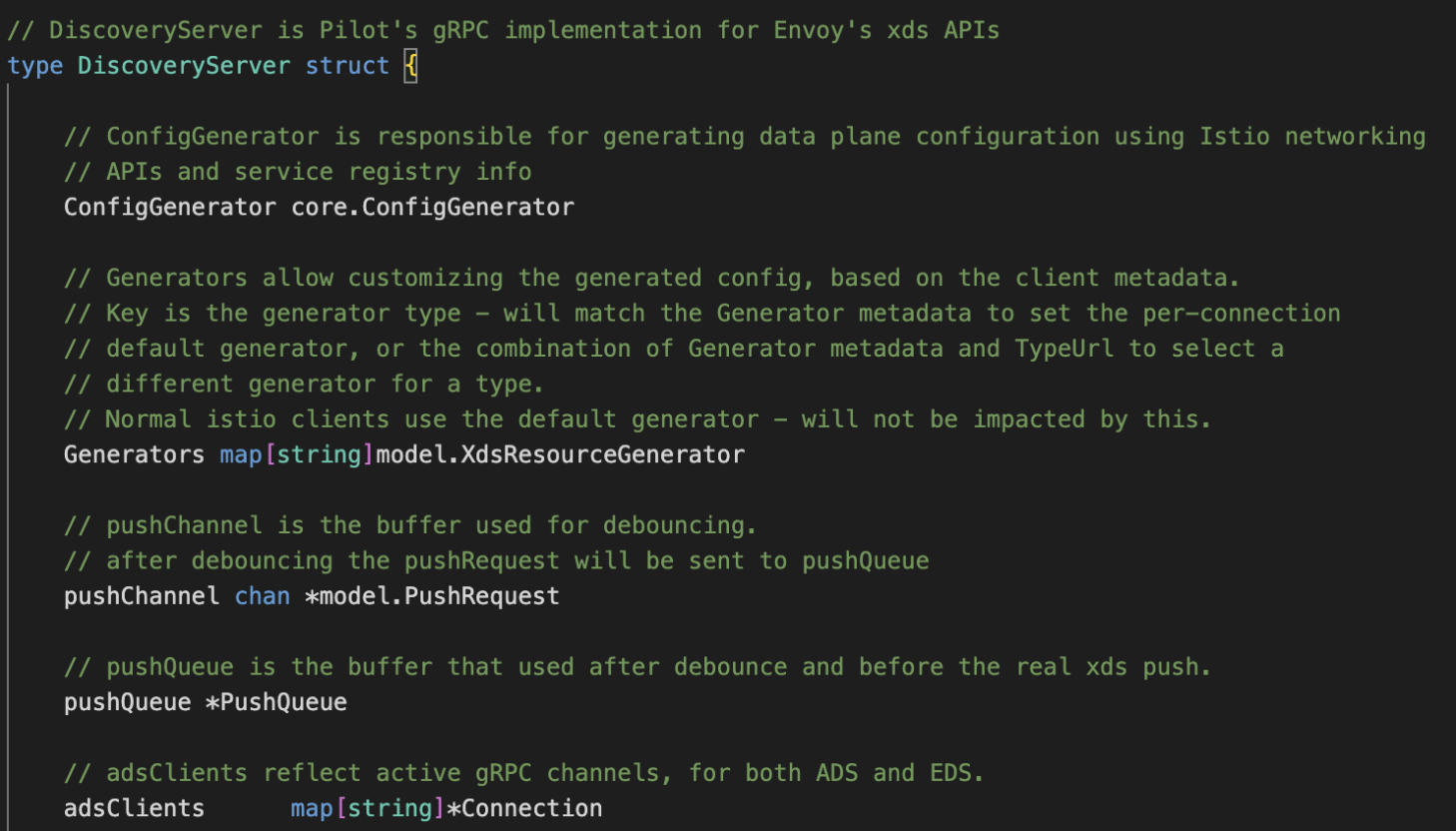

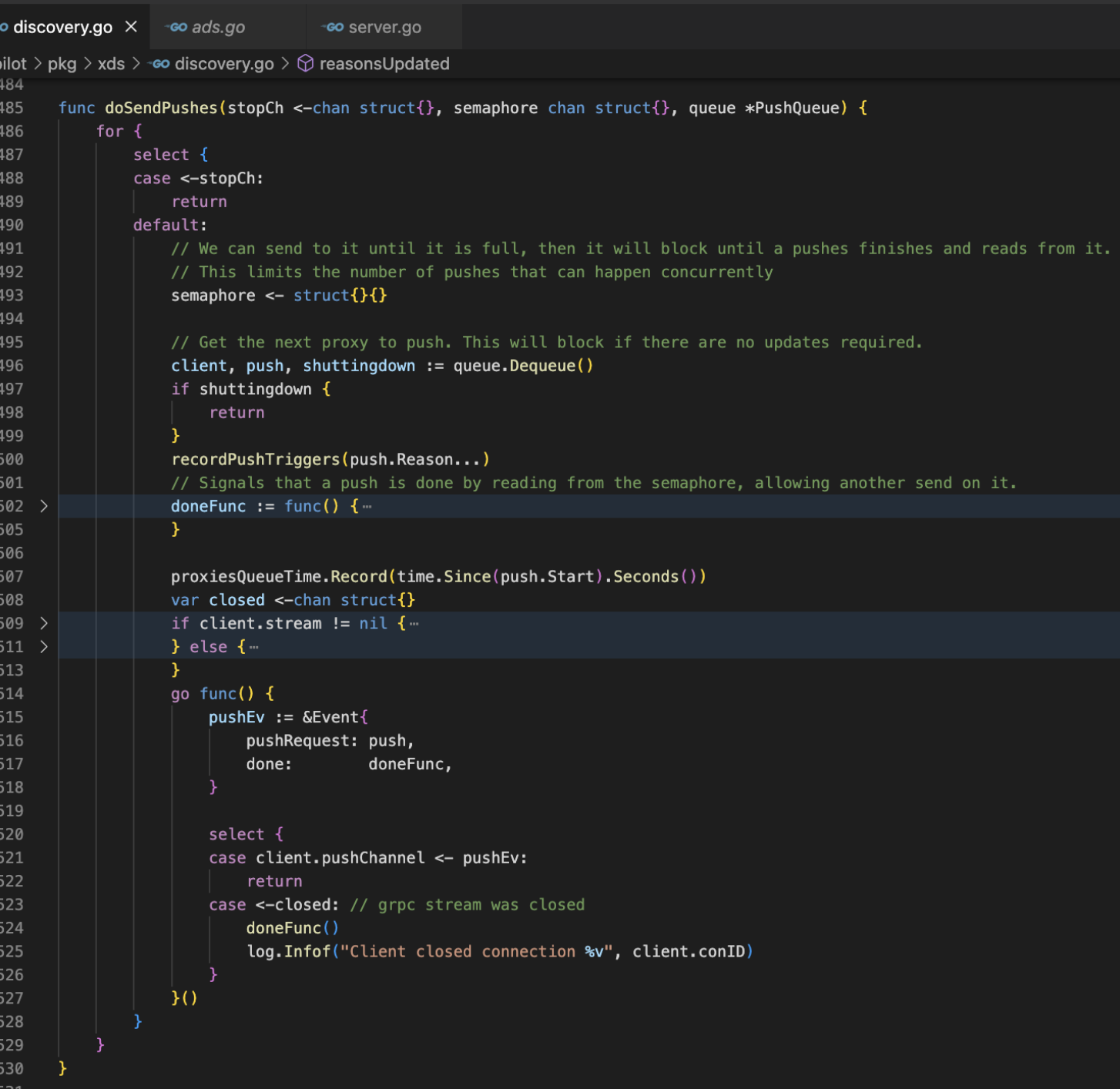

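The core objects, extracted: pushChannel exists for debouncing. A change is first pushed into pushChannel; after debouncing, it moves to pushQueue, where the actual push happens.

Istio protocol implementation and startup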

server.go

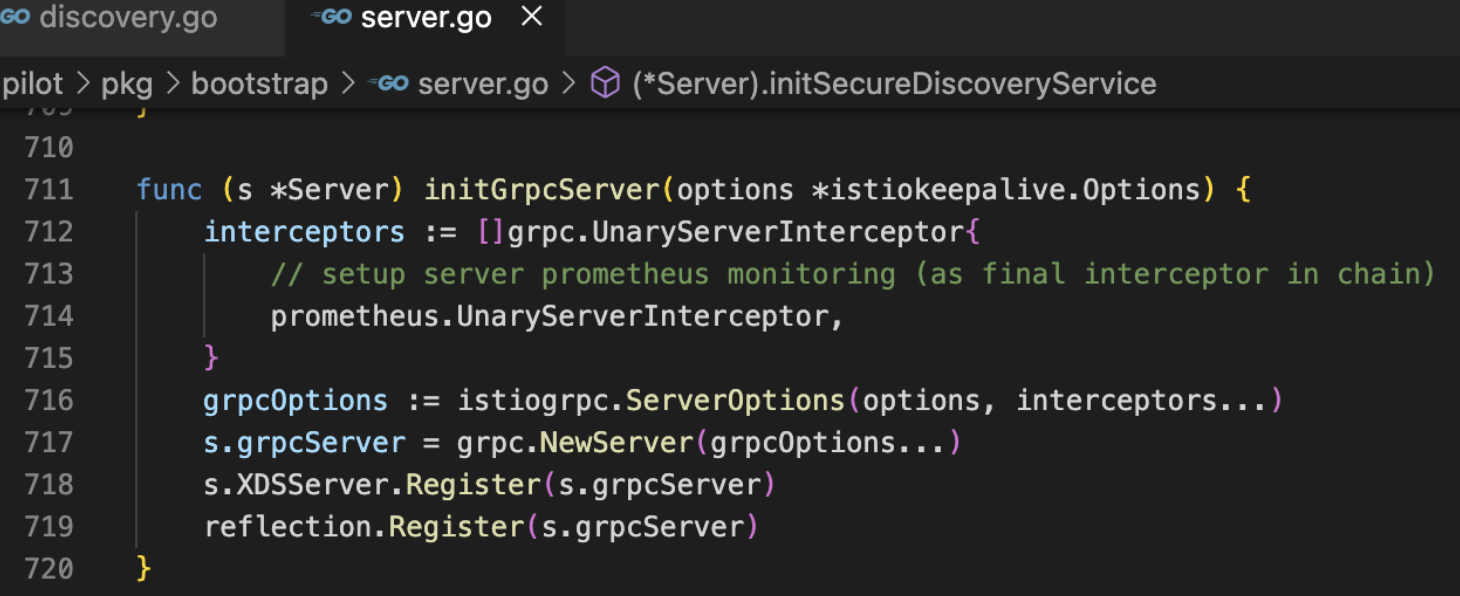

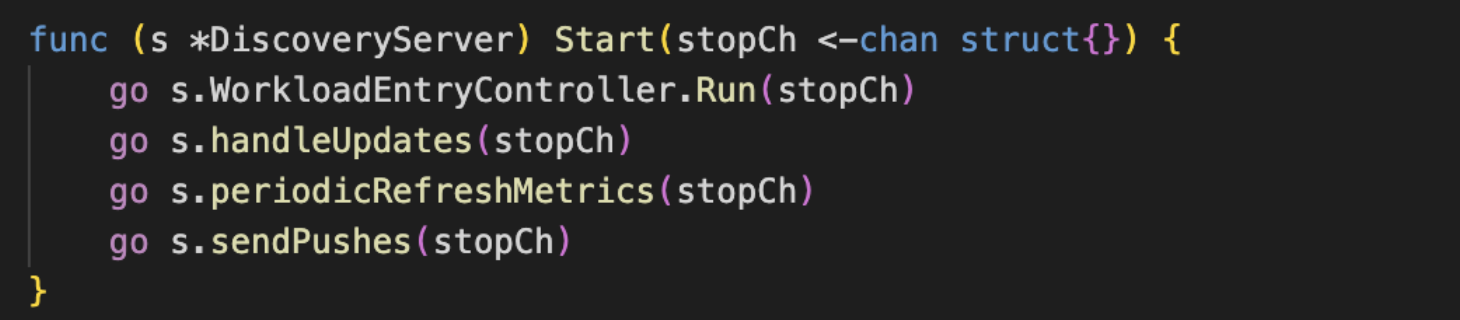

NewServer → s.initServers(args) → s.initGrpcServer(args.KeepaliveOptions) → s.initDiscoveryService() → s.XDSServer.Start(stop)

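Initialize the gRPC configuration and create the gRPC instance

Register the service with the gRPC server

Start listening

Start the processing loop

ads.go: gRPC receives requests through the StreamAggregatedResources method, processes them, and responds

Processing chain

In the end, ConfigGeneratorImpl builds the data in xDS format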

istio-proxy

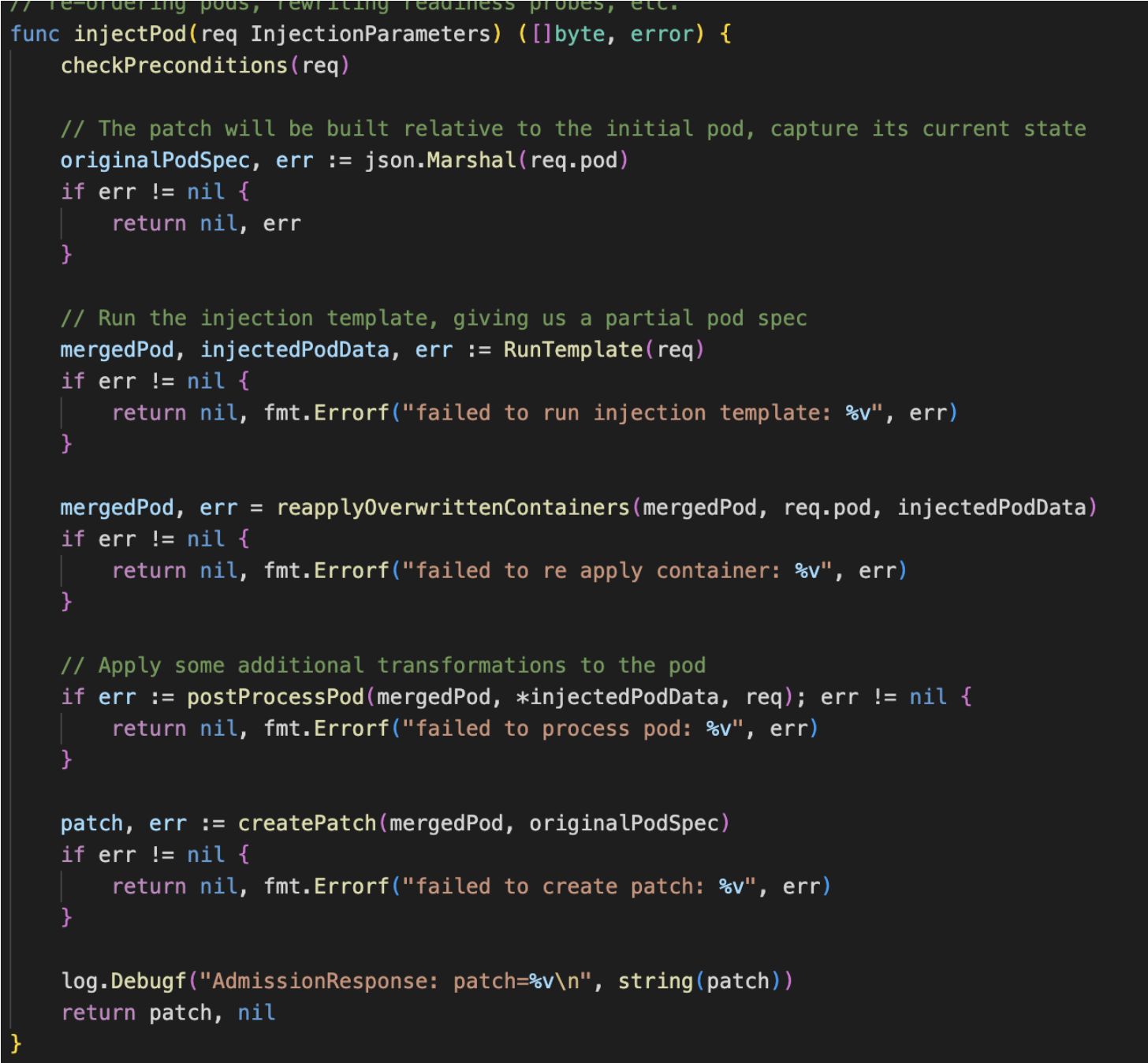

inject: through a webhook callback, the pod configuration is modified before the pod is created, adding the sidecar container definitions

server.go → s.initSidecarInjector(args)

sidecarinjector.go → initSidecarInjector → inject.NewWebhook(parameters) →

pkg/kube/inject/webhook.go → injectPod
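
What the injection amounts to can be sketched on a stripped-down pod spec. This is only an illustration under assumptions: `PodSpec`, `Container`, `injectSidecar`, and the image names are hypothetical and far simpler than the Kubernetes types and the patch that `injectPod` actually produces. The sketch adds an init container and a sidecar container and skips containers that are already present, so applying it twice changes nothing.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Container and PodSpec are hypothetical, minimal stand-ins for the
// Kubernetes types; only the fields needed for the illustration exist.
type Container struct {
	Name  string `json:"name"`
	Image string `json:"image"`
}

type PodSpec struct {
	InitContainers []Container `json:"initContainers,omitempty"`
	Containers     []Container `json:"containers"`
}

func hasContainer(list []Container, name string) bool {
	for _, c := range list {
		if c.Name == name {
			return true
		}
	}
	return false
}

// injectSidecar returns a copy of spec with the init container and the
// sidecar added. Containers that already exist by name are not added again.
func injectSidecar(spec PodSpec, initC, sidecar Container) PodSpec {
	out := PodSpec{
		InitContainers: append([]Container(nil), spec.InitContainers...),
		Containers:     append([]Container(nil), spec.Containers...),
	}
	if !hasContainer(out.InitContainers, initC.Name) {
		out.InitContainers = append(out.InitContainers, initC)
	}
	if !hasContainer(out.Containers, sidecar.Name) {
		out.Containers = append(out.Containers, sidecar)
	}
	return out
}

func main() {
	pod := PodSpec{Containers: []Container{{Name: "app", Image: "example/app:1.0"}}}
	mutated := injectSidecar(pod,
		Container{Name: "istio-init", Image: "example/proxy:tag"},  // image name is made up
		Container{Name: "istio-proxy", Image: "example/proxy:tag"}, // image name is made up
	)
	b, err := json.MarshalIndent(mutated, "", "  ")
	if err != nil {
		fmt.Println("marshal failed:", err)
		return
	}
	fmt.Println(string(b))
}
```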

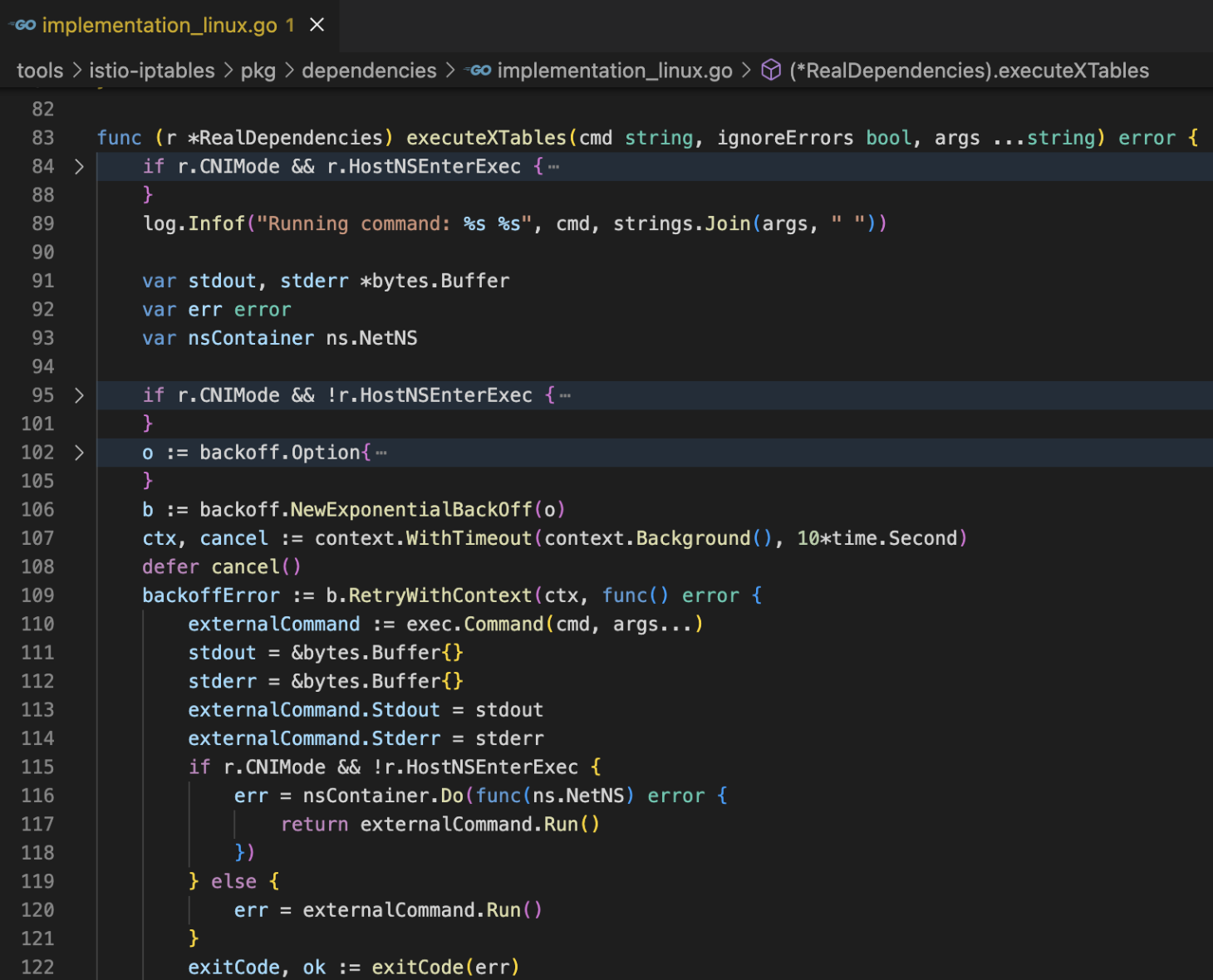

istio-init

Generates and applies the initial routing (iptables) rules

tools/istio-iptables/pkg/cmd/root.go → tools/istio-iptables/pkg/cmd/capture/run.go → tools/istio-iptables/pkg/cmd/builder/iptables_builder_impl.go

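Starting the agent

Connections

As a proxy, the agent must hold gRPC connections to both istiod (upstream) and Envoy (downstream). Because Envoy runs in the same container, that side uses an inter-process mechanism, a Unix domain socket.

Call path: pilot/cmd/pilot-agent/main.go → pilot/cmd/pilot-agent/app/cmd.go → pkg/istio-agent/agent.go → xds_proxy.go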
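
To make the Unix domain socket side concrete, here is a minimal sketch using only the Go standard library. It is an assumption-laden stand-in: the agent serves gRPC on the socket, whereas this example exchanges a single text line, and `serveOnce`, `roundTrip`, and the socket file name are hypothetical.

```go
package main

import (
	"bufio"
	"fmt"
	"net"
	"os"
	"path/filepath"
	"strings"
)

// serveOnce accepts one connection, reads one line, and acknowledges it.
func serveOnce(l net.Listener) {
	conn, err := l.Accept()
	if err != nil {
		return
	}
	defer conn.Close()
	line, err := bufio.NewReader(conn).ReadString('\n')
	if err != nil {
		return
	}
	fmt.Fprintf(conn, "ack:%s", line)
}

// roundTrip starts a listener on a temporary Unix domain socket, sends msg
// from a client in the same process, and returns the server's reply.
func roundTrip(msg string) (string, error) {
	dir, err := os.MkdirTemp("", "uds")
	if err != nil {
		return "", err
	}
	defer os.RemoveAll(dir)

	// The socket is just a path on the local filesystem; no network is involved.
	l, err := net.Listen("unix", filepath.Join(dir, "xds.sock"))
	if err != nil {
		return "", err
	}
	defer l.Close()
	go serveOnce(l)

	conn, err := net.Dial("unix", l.Addr().String())
	if err != nil {
		return "", err
	}
	defer conn.Close()
	if _, err := fmt.Fprintf(conn, "%s\n", msg); err != nil {
		return "", err
	}
	reply, err := bufio.NewReader(conn).ReadString('\n')
	return strings.TrimSpace(reply), err
}

func main() {
	reply, err := roundTrip("discovery-request")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(reply)
}
```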

    func initXdsProxy(ia *Agent) (*XdsProxy, error) {
        ...
        proxy := &XdsProxy{
            istiodAddress:         ia.proxyConfig.DiscoveryAddress,
            istiodSAN:             ia.cfg.IstiodSAN,
            clusterID:             ia.secOpts.ClusterID,
            handlers:              map[string]ResponseHandler{},
            stopChan:              make(chan struct{}),
            healthChecker:         health.NewWorkloadHealthChecker(ia.proxyConfig.ReadinessProbe, envoyProbe, ia.cfg.ProxyIPAddresses, ia.cfg.IsIPv6),
            xdsHeaders:            ia.cfg.XDSHeaders,
            xdsUdsPath:            ia.cfg.XdsUdsPath,
            wasmCache:             cache,
            proxyAddresses:        ia.cfg.ProxyIPAddresses,
            ia:                    ia,
            downstreamGrpcOptions: ia.cfg.DownstreamGrpcOptions,
        }

        // Initialize the downstream server.
        if err = proxy.initDownstreamServer(); err != nil {
            return nil, err
        }

        go func() {
            // Serve gRPC to the downstream Envoy.
            if err := proxy.downstreamGrpcServer.Serve(proxy.downstreamListener); err != nil {
                log.Errorf("failed to accept downstream gRPC connection %v", err)
            }
        }()

        return proxy, nil
    }

First, the downstream listener is initialized and started

    func (p *XdsProxy) initDownstreamServer() error {
        // Listen on a Unix domain socket for inter-process communication with Envoy.
        l, err := uds.NewListener(p.xdsUdsPath)
        if err != nil {
            return err
        }
        // TODO: Expose keepalive options to agent cmd line flags.
        opts := p.downstreamGrpcOptions
        opts = append(opts, istiogrpc.ServerOptions(istiokeepalive.DefaultOption())...)
        // Create the gRPC server instance.
        grpcs := grpc.NewServer(opts...)
        // Register the service.
        discovery.RegisterAggregatedDiscoveryServiceServer(grpcs, p)
        reflection.Register(grpcs)
        p.downstreamGrpcServer = grpcs
        p.downstreamListener = l
        return nil
    }

Receiving requests

    func (p *XdsProxy) StreamAggregatedResources(downstream xds.DiscoveryStream) error {
        proxyLog.Debugf("accepted XDS connection from Envoy, forwarding to upstream XDS server")
        return p.handleStream(downstream)
    }

Once a connection is accepted, it is stored and a connection to the upstream is established

    func (p *XdsProxy) handleStream(downstream adsStream) error {
        con := &ProxyConnection{
            conID:           connectionNumber.Inc(),
            upstreamError:   make(chan error, 2), // can be produced by recv and send
            downstreamError: make(chan error, 2), // can be produced by recv and send
            // Requests channel is unbounded. The Envoy<->XDS Proxy<->Istiod system produces a natural
            // looping of Recv and Send. Due to backpressure introduced by gRPC natively (that is, Send() can
            // only send so much data without being Recv'd before it starts blocking), along with the
            // backpressure provided by our channels, we have a risk of deadlock where both Xdsproxy and
            // Istiod are trying to Send, but both are blocked by gRPC backpressure until Recv() is called.
            // However, Recv can fail to be called by Send being blocked. This can be triggered by the two
            // sources in our system (Envoy request and Istiod pushes) producing more events than we can keep
            // up with.
            // See https://github.com/istio/istio/issues/39209 for more information
            //
            // To prevent these issues, we need to either:
            // 1. Apply backpressure directly to Envoy requests or Istiod pushes
            // 2. Make part of the system unbounded
            //
            // (1) is challenging because we cannot do a conditional Recv (for Envoy requests), and changing
            // the control plane requires substantial changes. Instead, we make the requests channel
            // unbounded. This is the least likely to cause issues as the messages we store here are the
            // smallest relative to other channels.
            requestsChan: channels.NewUnbounded[*discovery.DiscoveryRequest](),
            // Allow a buffer of 1. This ensures we queue up at most 2 (one in process, 1 pending) responses before forwarding.
            responsesChan: make(chan *discovery.DiscoveryResponse, 1),
            stopChan:      make(chan struct{}),
            downstream:    downstream,
        }
        p.registerStream(con)
        defer p.unregisterStream(con)

        ctx, cancel := context.WithTimeout(context.Background(), time.Second*5)
        defer cancel()

        // Connect to the upstream istiod: grpc.DialContext(ctx, p.istiodAddress, opts...)
        upstreamConn, err := p.buildUpstreamConn(ctx)
        if err != nil {
            proxyLog.Errorf("failed to connect to upstream %s: %v", p.istiodAddress, err)
            metrics.IstiodConnectionFailures.Increment()
            return err
        }
        defer upstreamConn.Close()

        // Create the xDS client on that connection.
        xds := discovery.NewAggregatedDiscoveryServiceClient(upstreamConn)
        ...
        // We must propagate upstream termination to Envoy. This ensures that we resume the full XDS sequence on new connection
        return p.handleUpstream(ctx, con, xds)
    }

Data is read from the upstream stream and passed on to responsesChan

    func (p *XdsProxy) handleUpstream(ctx context.Context, con *ProxyConnection, xds discovery.AggregatedDiscoveryServiceClient) error {
        // Obtain the data stream over the gRPC connection.
        upstream, err := xds.StreamAggregatedResources(ctx, grpc.MaxCallRecvMsgSize(defaultClientMaxReceiveMessageSize))
        ...
        con.upstream = upstream

        // Handle upstream xds recv
        go func() {
            for {
                // Receive data from istiod.
                resp, err := con.upstream.Recv()
                if err != nil {
                    select {
                    case con.upstreamError <- err:
                    case <-con.stopChan:
                    }
                    return
                }
                select {
                // Pass the data read from the stream on to responsesChan.
                case con.responsesChan <- resp:
                case <-con.stopChan:
                }
            }
        }()

        go p.handleUpstreamRequest(con)
        go p.handleUpstreamResponse(con)
        ...
    }

Finally, each direction of the stream is handled. Data received from the downstream is forwarded directly to istiod:

    func (p *XdsProxy) handleUpstreamRequest(con *ProxyConnection) {
        initialRequestsSent := atomic.NewBool(false)
        go func() {
            for {
                // recv xds requests from envoy
                req, err := con.downstream.Recv()
                ...
                // forward to istiod
                con.sendRequest(req)
            }
        }()

        for {
            select {
            case req := <-con.requestsChan.Get():
                con.requestsChan.Load()
                ...
                if err := sendUpstream(con.upstream, req); err != nil {
                    err = fmt.Errorf("upstream [%d] send error for type url %s: %v", con.conID, req.TypeUrl, err)
                    con.upstreamError <- err
                    return
                }
            case <-con.stopChan:
                return
            }
        }
    }

Data relayed from istiod through the proxy is received and its type is checked; the main branch finally forwards it to Envoy

    func (p *XdsProxy) handleUpstreamResponse(con *ProxyConnection) {
        forwardEnvoyCh := make(chan *discovery.DiscoveryResponse, 1)
        for {
            select {
            // Receive data relayed from istiod and check its type; the main branch forwards it to Envoy.
            case resp := <-con.responsesChan:
                ...
                switch resp.TypeUrl {
                case v3.ExtensionConfigurationType:
                    if features.WasmRemoteLoadConversion {
                        ...
                    } else {
                        // Otherwise, forward ECDS resource update directly to Envoy.
                        forwardToEnvoy(con, resp)
                    }
                default:
                    if strings.HasPrefix(resp.TypeUrl, v3.DebugType) {
                        p.forwardToTap(resp)
                    } else {
                        forwardToEnvoy(con, resp)
                    }
                }
            case resp := <-forwardEnvoyCh:
                forwardToEnvoy(con, resp)
            case <-con.stopChan:
                return
            }
        }
    }
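
The routing decision in that switch can be isolated into a small, testable function. The sketch below is an illustration only: `response`, `route`, and the returned labels are hypothetical, and the two type URL constants are assumed values standing in for `v3.ExtensionConfigurationType` and `v3.DebugType`.

```go
package main

import (
	"fmt"
	"strings"
)

// Assumed values for illustration; check the v3 package for the real ones.
const (
	ecdsType    = "type.googleapis.com/envoy.config.core.v3.TypedExtensionConfig"
	debugPrefix = "istio.io/debug"
)

// response is a hypothetical stand-in for a discovery response.
type response struct {
	TypeURL string
}

// route returns the destination for a response received from upstream:
// ECDS resources may need a conversion step first, debug responses go to
// the tap, and everything else is forwarded to Envoy unchanged.
func route(resp response, wasmConversion bool) string {
	switch {
	case resp.TypeURL == ecdsType && wasmConversion:
		return "convert-then-envoy"
	case strings.HasPrefix(resp.TypeURL, debugPrefix):
		return "tap"
	default:
		return "envoy"
	}
}

func main() {
	samples := []response{
		{TypeURL: ecdsType},
		{TypeURL: debugPrefix + "/syncz"},
		{TypeURL: "type.googleapis.com/envoy.config.cluster.v3.Cluster"},
	}
	for _, r := range samples {
		fmt.Printf("%s -> %s\n", r.TypeURL, route(r, true))
	}
}
```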

Envoy startup

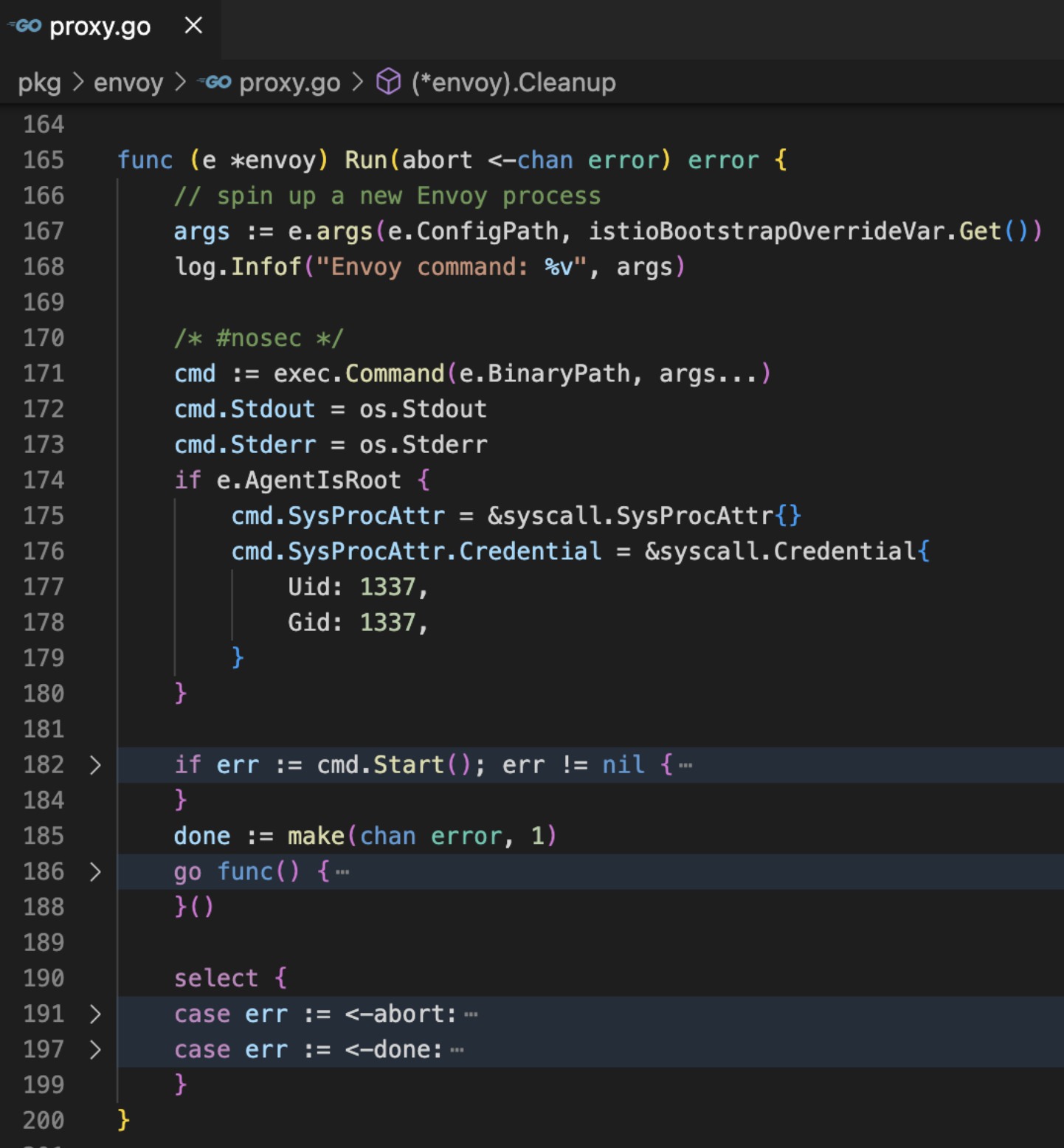

pkg/envoy/agent.go → pkg/envoy/proxy.go → exec: startup arguments are built from the configuration, then the Envoy start command is executed

istioctl

Under the istioctl directory: istioctl/main.go → root.go → ....go

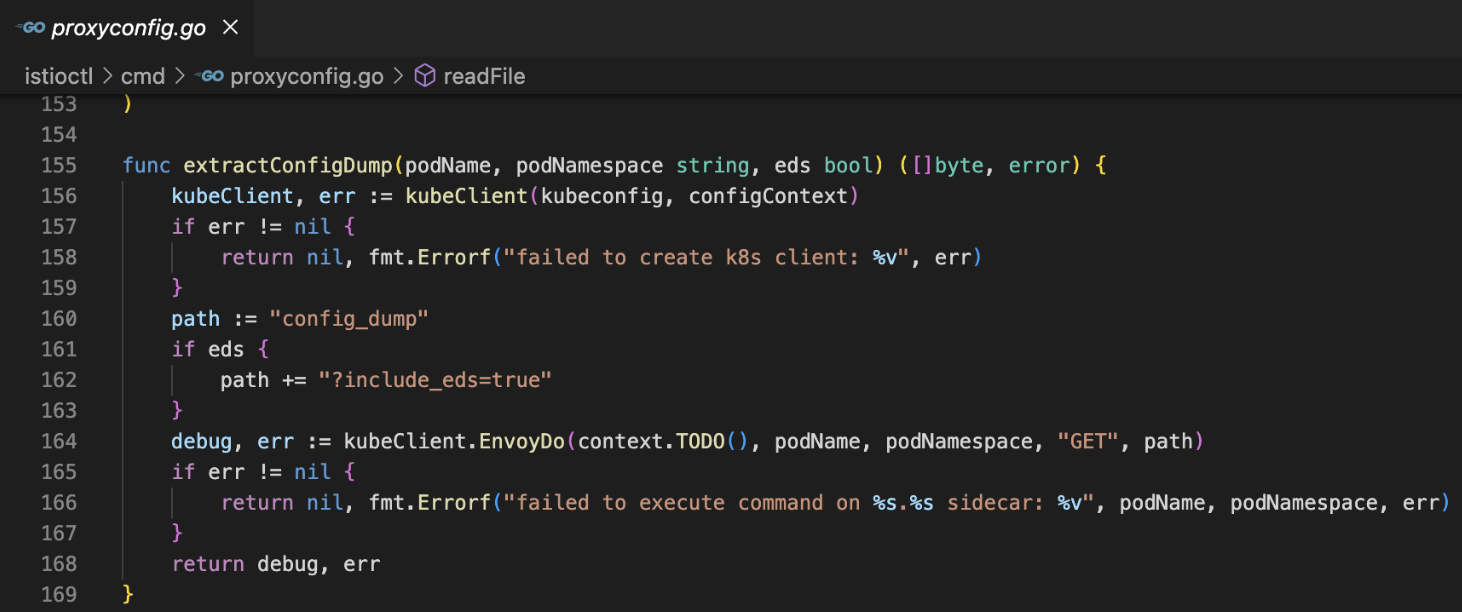

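Taking proxy-config as an example: proxyconfig.go

The configuration is fetched with an HTTP GET request to the corresponding pod; the other commands work similarly

References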
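
The fetch itself is a plain HTTP GET followed by JSON decoding, which can be sketched as below. This is an illustration under assumptions: `fetchJSON` and `fakeAdmin` are hypothetical helpers, and a local `httptest` server stands in for the pod's admin endpoint, so the `/config_dump` path and the response body here are only placeholders for what the real proxy returns.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
)

// fetchJSON issues a GET to baseURL+path and decodes the JSON object returned.
func fetchJSON(baseURL, path string) (map[string]any, error) {
	resp, err := http.Get(baseURL + path)
	if err != nil {
		return nil, err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return nil, fmt.Errorf("unexpected status %s", resp.Status)
	}
	var out map[string]any
	if err := json.NewDecoder(resp.Body).Decode(&out); err != nil {
		return nil, err
	}
	return out, nil
}

// fakeAdmin starts a local server that imitates a proxy admin endpoint.
// The payload is a placeholder, not a real configuration dump.
func fakeAdmin() *httptest.Server {
	mux := http.NewServeMux()
	mux.HandleFunc("/config_dump", func(w http.ResponseWriter, r *http.Request) {
		w.Header().Set("Content-Type", "application/json")
		fmt.Fprint(w, `{"configs": []}`)
	})
	return httptest.NewServer(mux)
}

func main() {
	srv := fakeAdmin()
	defer srv.Close()

	dump, err := fetchJSON(srv.URL, "/config_dump")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	for key := range dump {
		fmt.Println("top-level key:", key)
	}
}
```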

https://www.cnblogs.com/FireworksEasyCool/p/12693749.html
