Experiment Log | BERT Text Sentiment Classification

Hands-on BERT Text Sentiment Classification

Intro

The BERT model is the pretrained bert-base-uncased checkpoint released by Google, downloaded directly from Hugging Face.

The dataset is IMDB (Internet Movie Database), one of the best-known and most widely used datasets in NLP, used mainly for text sentiment analysis.
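A minimal sketch of this setup, assuming the Hugging Face `transformers` and `datasets` libraries (the Trainer-style log in the Results section suggests them, but the original does not show its code). `imdb` and `bert-base-uncased` are the public Hub identifiers; the helper names are hypothetical. In the Hub version of IMDB, label 0 is a negative review and 1 is positive, which matches the predicted classes shown later.

```python
# IMDB label convention on the Hugging Face Hub: 0 = negative, 1 = positive
ID2LABEL = {0: "negative", 1: "positive"}
LABEL2ID = {label: idx for idx, label in ID2LABEL.items()}

MODEL_NAME = "bert-base-uncased"  # Google's uncased BERT-Base checkpoint


def load_imdb():
    """Hypothetical helper: download IMDB (train/test/unsupervised splits)."""
    # Imported lazily so the module loads even without `datasets` installed
    from datasets import load_dataset

    return load_dataset("imdb")


def load_model_and_tokenizer(model_name=MODEL_NAME):
    """Hypothetical helper: tokenizer plus BERT with a new 2-class head."""
    from transformers import AutoModelForSequenceClassification, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForSequenceClassification.from_pretrained(
        model_name,
        num_labels=len(ID2LABEL),  # binary sentiment
        id2label=ID2LABEL,         # stored in config.json, used at inference
        label2id=LABEL2ID,
    )
    return tokenizer, model
```

Calling `load_imdb()` returns a `DatasetDict` whose `train` and `test` splits each hold 25,000 labeled reviews with `text` and `label` columns.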

Experimental Setup

- nproc = 64

- Core(s) per socket = 16

- Intel Xeon Silver 4314 @ 2.40 GHz

- NVIDIA Corporation GA102 [GeForce RTX 3080 Ti]

- CUDA Version = v11.8.89

- Python 3.10.5
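The facts in this list can also be gathered from inside Python, which helps when comparing runs across differently pinned environments. A small sketch with a hypothetical function name; it works without PyTorch installed and only reports what it can find. Note that `torch.version.cuda` is the CUDA version PyTorch was built against (e.g. `11.8`), whereas `nvcc --version` prints the full toolkit build string such as the V11.8.89 above.

```python
import importlib.util
import os
import platform


def environment_report():
    """Hypothetical helper: gather the facts listed under Experimental Setup."""
    try:
        # Linux: CPUs this process may run on, the same count `nproc` prints
        nproc = len(os.sched_getaffinity(0))
    except AttributeError:
        # No sched_getaffinity (macOS/Windows): fall back to all logical CPUs
        nproc = os.cpu_count()

    report = {
        "nproc": nproc,
        "python": platform.python_version(),
        # find_spec checks installation without importing torch_xla
        "torch_xla_installed": importlib.util.find_spec("torch_xla") is not None,
    }

    try:
        import torch
    except ImportError:
        report["torch"] = None
        return report

    report["torch"] = torch.__version__
    report["torch_cuda_build"] = torch.version.cuda  # None for CPU-only builds
    if torch.cuda.is_available():
        report["gpu"] = torch.cuda.get_device_name(0)
    return report


for key, value in environment_report().items():
    print(f"{key}: {value}")
```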

Train

The tutorial reports roughly 6 GB of GPU memory and about 40 min of training on an RTX 3090. On the RTX 3080 Ti used here, training actually took 2 to 3 h.
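The Trainer summary in the Results section (9375 steps over 3 epochs in 9730.7 s) can be cross-checked with a few lines of arithmetic. This sketch assumes the standard 25,000-review IMDB training split and an effective batch of 8 reviews per optimizer step; neither number is stated in the original, and the batch size is inferred from the step count.

```python
import math

# Figures from the Trainer summary in the Results section
TOTAL_STEPS = 9375
EPOCHS = 3
RUNTIME_S = 9730.7196

# Assumptions, not stated in the original: IMDB train split size and batch size
TRAIN_SAMPLES = 25_000
BATCH_SIZE = 8

TUTORIAL_RUNTIME_S = 40 * 60  # the tutorial's ~40 min on an RTX 3090


def total_steps(n_samples, batch_size, epochs):
    """Optimizer steps for a full run, keeping the last partial batch."""
    return math.ceil(n_samples / batch_size) * epochs


steps = total_steps(TRAIN_SAMPLES, BATCH_SIZE, EPOCHS)
samples_per_s = TRAIN_SAMPLES * EPOCHS / RUNTIME_S
steps_per_s = TOTAL_STEPS / RUNTIME_S
hours = RUNTIME_S / 3600
slowdown = RUNTIME_S / TUTORIAL_RUNTIME_S

print(f"steps={steps}, samples/s={samples_per_s:.3f}, steps/s={steps_per_s:.3f}, "
      f"runtime={hours:.2f} h, vs tutorial={slowdown:.2f}x")
# → steps=9375, samples/s=7.708, steps/s=0.963, runtime=2.70 h, vs tutorial=4.05x
```

The computed step count matches the 9375 steps in the progress bar, and both throughput figures reproduce the logged 7.708 samples/s and 0.963 steps/s, so an effective batch of 8 is consistent with the log.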

Debug

Slow first run

1%| | 48/9375 [07:28<24:12:23, 9.34s/it]

Investigation traced the slowdown to pytorch-xla not automatically selecting the GPU as its default PJRT device. The check and the fix:

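import os
# Debug check: prints None when PJRT_DEVICE is unset
# print(os.environ.get('PJRT_DEVICE'))
# Force torch_xla onto the GPU; set this at the top of the script, before torch_xla is imported
os.environ['PJRT_DEVICE'] = 'GPU'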
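This setting most likely matters here because, when `torch_xla` is installed, Hugging Face's `Trainer` (whose log format appears in the Results section) places the model on an XLA device instead of plain CUDA. A small guard, sketched with a hypothetical helper name, sets the variable only when `torch_xla` is present and leaves any value already exported in the shell untouched.

```python
import importlib.util
import os


def configure_pjrt_device(device="GPU"):
    """Hypothetical helper: choose torch_xla's PJRT device before XLA starts.

    Returns the PJRT_DEVICE value in effect, or None if torch_xla is absent.
    """
    if importlib.util.find_spec("torch_xla") is None:
        return None  # no torch_xla installed: nothing to configure
    # setdefault keeps a PJRT_DEVICE the user already exported in the shell
    return os.environ.setdefault("PJRT_DEVICE", device)


# Run this before importing torch_xla or transformers
configure_pjrt_device()
```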

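After fixing this, I ran training twice more. The first run crashed at model.save_pretrained; calling model.to('cpu') before saving is recommended. The charts below come from the second run.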
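The save workaround as a minimal sketch, assuming a Hugging Face model and tokenizer (both expose `save_pretrained`); the helper name `save_on_cpu` is hypothetical. It only encodes the "move to CPU, then save" order suggested above.

```python
def save_on_cpu(model, tokenizer, output_dir):
    """Hypothetical helper: move the model off the accelerator, then save.

    Saving from CPU sidesteps device-specific tensors (e.g. XLA ones)
    during serialization, following the workaround suggested above.
    """
    model = model.to("cpu")                # nn.Module.to returns the module itself
    model.save_pretrained(output_dir)      # writes config + weights
    tokenizer.save_pretrained(output_dir)  # keep the tokenizer next to the weights
    return output_dir
```

Saving the tokenizer into the same directory lets the checkpoint be reloaded later with a single path for both `from_pretrained` calls.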

Results

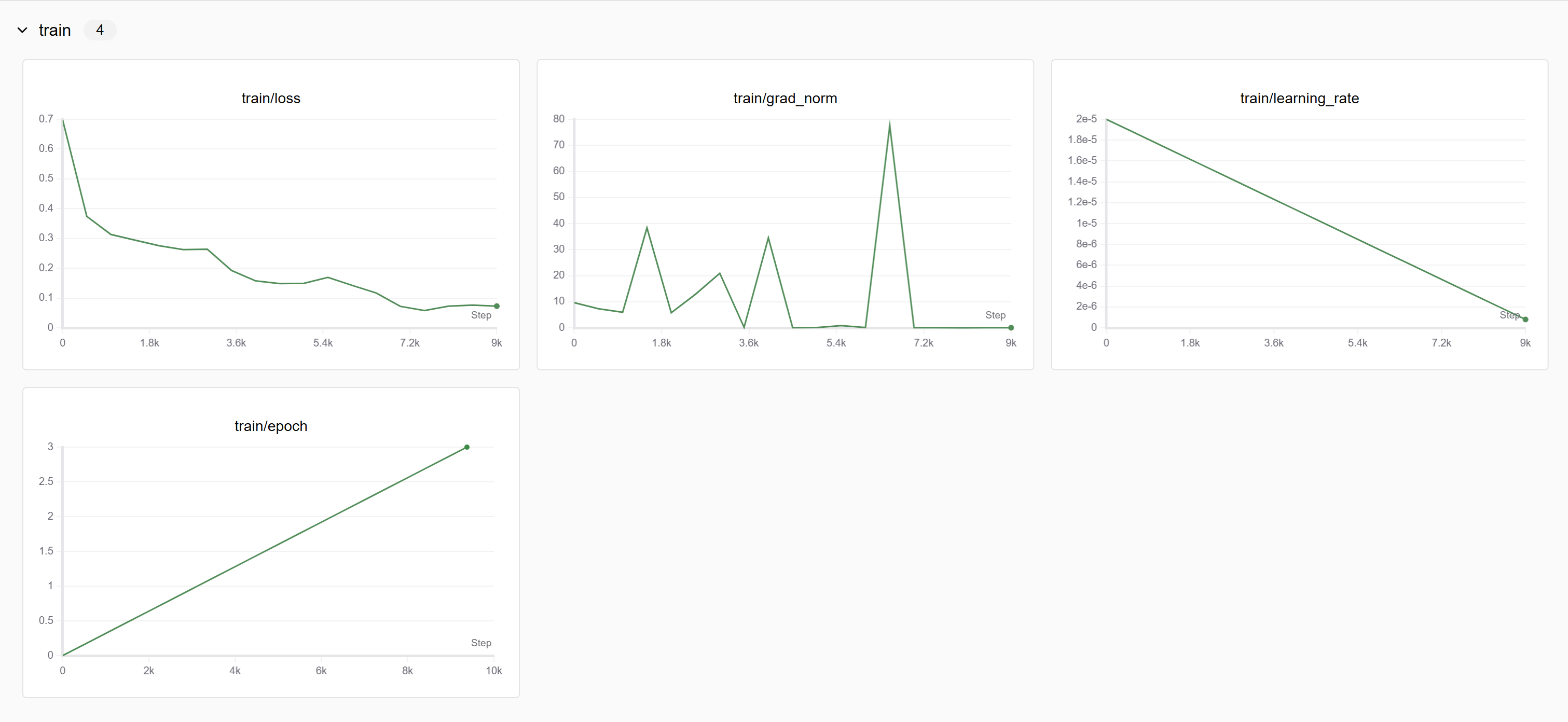

Train

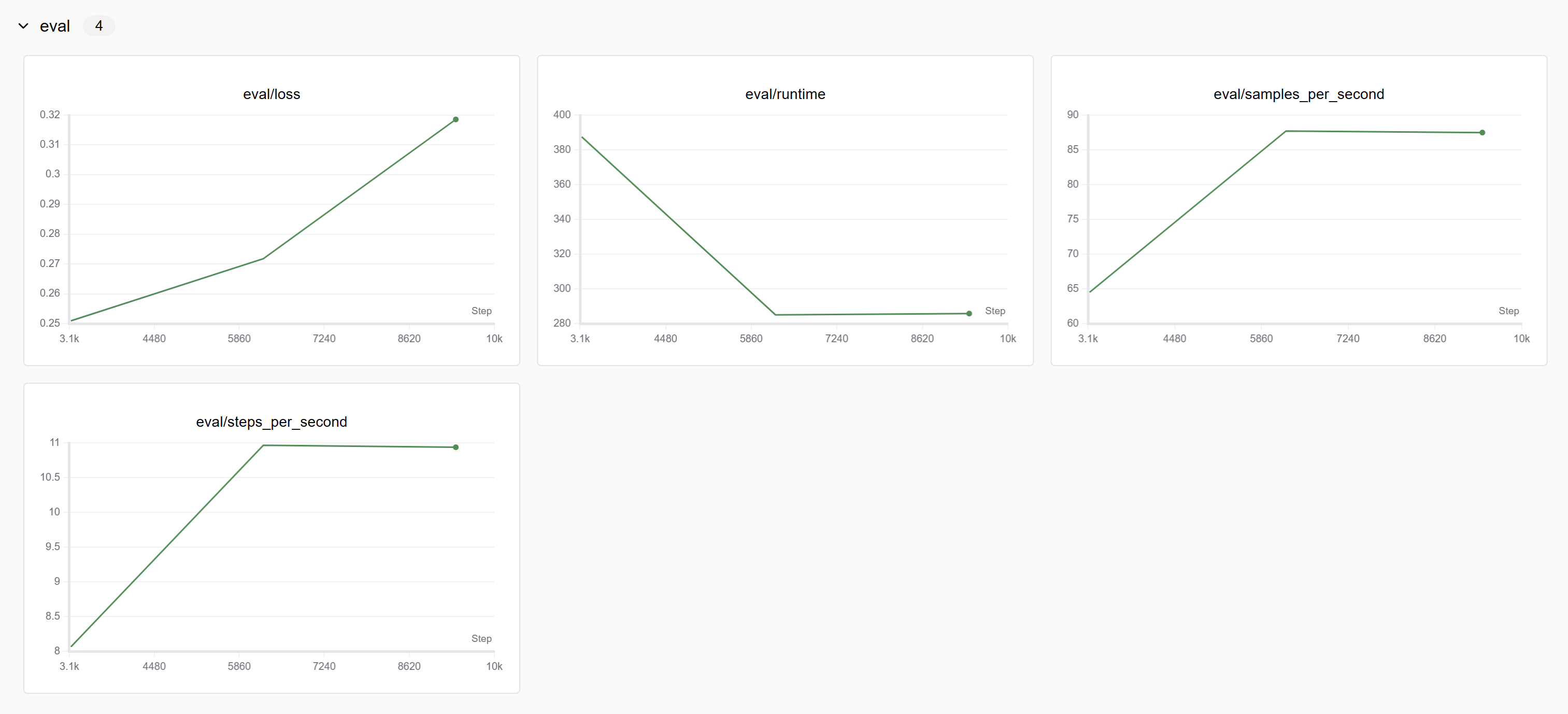

Eval

Predict

{'train_runtime': 9730.7196, 'train_samples_per_second': 7.708, 'train_steps_per_second': 0.963, 'train_loss': 0.17324476619084675, 'epoch': 3.0}

100%|██████████| 9375/9375 [2:42:06<00:00, 1.04s/it]

sft finished, saving pre_model...

Input Text: I absolutely loved this movie! The storyline was captivating and the acting was top-notch. A must-watch for everyone.

Predicted class: 1 positive

Input Text: This movie was a complete waste of time. The plot was predictable and the characters were poorly developed.

Predicted class: 0 negative

Input Text: An excellent film with a heartwarming story. The performances were outstanding, especially the lead actor.

Predicted class: 1 positive

Input Text: I found the movie to be quite boring. It dragged on and didn't really go anywhere. Not recommended.

Predicted class: 0 negative

Input Text: A masterpiece! The director did an amazing job bringing this story to life. The visuals were stunning.

Predicted class: 1 positive

Input Text: Terrible movie. The script was awful and the acting was even worse. I can't believe I sat through the whole thing.

Predicted class: 0 negative

Input Text: A delightful film with a perfect mix of humor and drama. The cast was great and the dialogue was witty.

Predicted class: 1 positive

Input Text: I was very disappointed with this movie. It had so much potential, but it just fell flat. The ending was particularly bad.

Predicted class: 0 negative

Input Text: One of the best movies I've seen this year. The story was original and the performances were incredibly moving.

Predicted class: 1 positive

Input Text: I didn't enjoy this movie at all. It was confusing and the pacing was off. Definitely not worth watching.

Predicted class: 0 negative
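Each "Predicted class" line above comes from turning the classifier's two logits into a label. A minimal sketch of that step, assuming the Hugging Face `transformers` API and a fine-tuned checkpoint saved with `save_pretrained` to `model_dir` (a hypothetical argument; the original does not give its path). The function names are illustrative, not the author's code.

```python
import math

ID2LABEL = {0: "negative", 1: "positive"}  # IMDB convention, matches the log above


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def logits_to_prediction(logits):
    """Map one row of logits to (class id, label name, probability)."""
    probs = softmax(logits)
    class_id = max(range(len(probs)), key=probs.__getitem__)  # argmax
    return class_id, ID2LABEL[class_id], probs[class_id]


def predict(texts, model_dir):
    """Classify raw review texts with a fine-tuned checkpoint in model_dir."""
    import torch
    from transformers import AutoModelForSequenceClassification, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_dir)
    model = AutoModelForSequenceClassification.from_pretrained(model_dir)
    model.eval()  # disable dropout for inference
    inputs = tokenizer(texts, padding=True, truncation=True, return_tensors="pt")
    with torch.no_grad():
        logits = model(**inputs).logits  # shape: (batch, 2)
    return [logits_to_prediction(row) for row in logits.tolist()]
```

For example, `predict(["Terrible movie. The script was awful."], model_dir)` returns a list with one `(class_id, label, probability)` tuple per input text.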

Summary

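I suspect the errors encountered were again caused by the pytorch-xla version. The server used for this experiment is also being used to reproduce MAGIS, which requires comparison experiments across multiple backends. To keep everything compatible, all packages are pinned to fairly old versions, so the code for this BERT experiment could not run as-is.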

浙公网安备 33010602011771号
