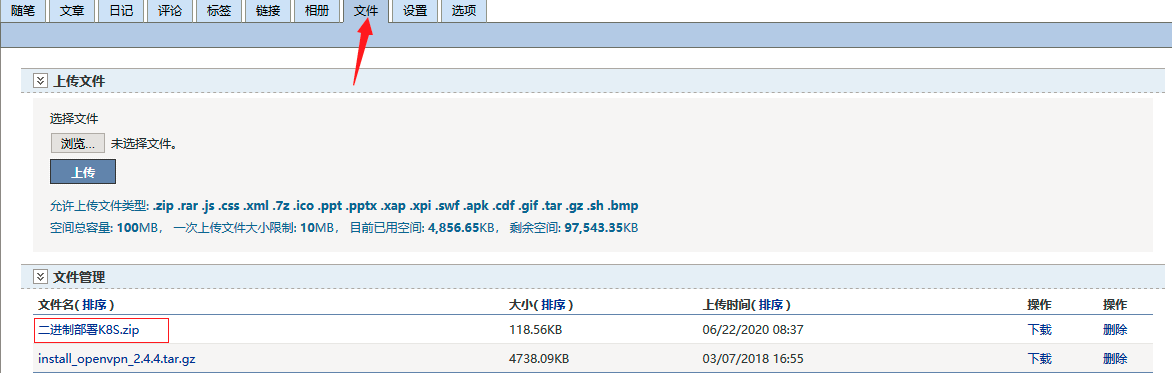

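Deploying Kubernetes (K8S) from Binaries

The source files have been uploaded here; if you run into problems, download them and compare!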

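Chapter 1 Deployment Environment

Master 192.168.0.118

Components installed:

etcd, kube-apiserver, kube-controller-manager, kube-scheduler

========================

Node1 192.168.0.117

Components installed:

docker, kubelet, kube-proxy

Node2 192.168.0.116

Components installed: same as Node1 (docker, kubelet, kube-proxy)

=========================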

Chapter 2 System Pre-configuration

Add the hostname mappings to /etc/hosts on each machine:

```
[root@master ~]# cat /etc/hosts
192.168.0.118 master
192.168.0.117 node1
192.168.0.116 node2

[root@node1 ~]# cat /etc/hosts
192.168.0.118 master
192.168.0.117 node1
192.168.0.116 node2

[root@node2 ~]# cat /etc/hosts
192.168.0.118 master
192.168.0.117 node1
192.168.0.116 node2
```
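The same three entries must exist on every machine, so the edit can be scripted in a way that is safe to re-run without creating duplicate lines. A minimal POSIX sh sketch; the function names `add_hosts_entry` and `add_cluster_hosts` and the optional file argument (defaulting to /etc/hosts) are illustrative assumptions:

```shell
#!/bin/sh
# add_hosts_entry IP NAME [FILE]
# Appends "IP NAME" to FILE (default: /etc/hosts) unless a line already maps
# NAME to IP. The body runs in a subshell "( ... )" so its variables stay local.
add_hosts_entry() (
    ip=$1
    name=$2
    file=${3:-/etc/hosts}
    # awk exits 0 only when some line has IP in field 1 and NAME in a later field.
    if ! awk -v ip="$ip" -v name="$name" '
        $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit(found ? 0 : 1) }
    ' "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
)

# add_cluster_hosts [FILE]
# Adds the three machines of this cluster, as listed in Chapter 1.
add_cluster_hosts() (
    file=${1:-/etc/hosts}
    add_hosts_entry 192.168.0.118 master "$file"
    add_hosts_entry 192.168.0.117 node1 "$file"
    add_hosts_entry 192.168.0.116 node2 "$file"
)
```

Running `add_cluster_hosts` as root on master, node1 and node2 produces the file contents shown above; a second run leaves the file unchanged.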

Disable firewalld at boot and stop the running service:

```
[root@master ~]# systemctl disable firewalld.service && systemctl stop firewalld.service
[root@node1 ~]# systemctl disable firewalld.service && systemctl stop firewalld.service
[root@node2 ~]# systemctl disable firewalld.service && systemctl stop firewalld.service
```

Note: all "System Pre-configuration" steps in this chapter must be performed on every machine.

Disable SELinux now and keep it disabled after reboot:

```
[root@master ~]# setenforce 0 && sed -i 's/enforcing/disabled/g' /etc/selinux/config
[root@node1 ~]# setenforce 0 && sed -i 's/enforcing/disabled/g' /etc/selinux/config
[root@node2 ~]# setenforce 0 && sed -i 's/enforcing/disabled/g' /etc/selinux/config
```

Turn off swap and keep it off after reboot:

```
[root@master ~]# swapoff -a && sed -i 's/.*swap.*/#&/' /etc/fstab
[root@node1 ~]# swapoff -a && sed -i 's/.*swap.*/#&/' /etc/fstab
[root@node2 ~]# swapoff -a && sed -i 's/.*swap.*/#&/' /etc/fstab
```
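The sed expression above comments out every /etc/fstab line that mentions swap. A small check that no active swap entry remains; the function name `fstab_has_active_swap` is illustrative, and it only inspects the filesystem-type field (the third column):

```shell
#!/bin/sh
# fstab_has_active_swap [FILE]
# Succeeds (exit 0) if FILE (default: /etc/fstab) still has an uncommented
# entry whose third field, the filesystem type, is "swap"; fails otherwise.
fstab_has_active_swap() {
    awk '
        /^[ \t]*#/ { next }                    # skip commented-out lines
        NF >= 3 && $3 == "swap" { found = 1 }
        END { exit(found ? 0 : 1) }
    ' "${1:-/etc/fstab}"
}
```

For example, `if fstab_has_active_swap; then echo "swap is still configured"; fi`. At runtime, `free -m` should report 0 for Swap after `swapoff -a`.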

Chapter 3 Package Downloads

Kubernetes binaries:

https://github.com/kubernetes/kubernetes/releases

etcd:

https://github.com/coreos/etcd/releases/

Chapter 4 Master Deployment and Configuration

4.1 Deploying the etcd Database

Extract the downloaded package and copy etcd and etcdctl to /usr/bin:

```
[root@master ~]# tar zxvf etcd-v3.4.7-linux-amd64.tar.gz
[root@master ~]# cd etcd-v3.4.7-linux-amd64
[root@master etcd-v3.4.7-linux-amd64]# cp etcd etcdctl /usr/bin/
```

Create etcd.service under /usr/lib/systemd/system/ with the following content:

```
[root@master ~]# vi /usr/lib/systemd/system/etcd.service
[Unit]
Description=Etcd Server

[Service]
Type=notify
TimeoutStartSec=0
Restart=always
WorkingDirectory=/var/lib/etcd/
EnvironmentFile=-/etc/etcd/etcd.conf
ExecStart=/usr/bin/etcd

[Install]
WantedBy=multi-user.target
```

Then create the directories it refers to:

```
[root@master ~]# mkdir -p /var/lib/etcd/
[root@master ~]# mkdir -p /etc/etcd
```

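Create /etc/etcd/etcd.conf with the following content, where 192.168.0.118 is the Master IP (replace it with your own). Do not append notes to the value lines: the systemd documentation for EnvironmentFile= only describes whole-line comments (lines starting with # or ;).

```
[root@master ~]# vi /etc/etcd/etcd.conf
ETCD_NAME=ETCD Server
ETCD_DATA_DIR="/var/lib/etcd/"
ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"
ETCD_ADVERTISE_CLIENT_URLS="http://192.168.0.118:2379"
```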

Reload systemd, enable etcd at boot, and start it:

```
[root@master ~]# systemctl daemon-reload
[root@master ~]# systemctl enable etcd.service
[root@master ~]# systemctl start etcd.service
```

4.2 Deploying kube-apiserver

Extract the server package and copy kube-apiserver to /usr/bin:

```
[root@master ~]# tar zxvf kubernetes-server-linux-amd64.tar.gz
[root@master ~]# cp /root/kubernetes/server/bin/kube-apiserver /usr/bin/
```

Create kube-apiserver.service under /usr/lib/systemd/system/ with the following content:

```
[root@master ~]# vi /usr/lib/systemd/system/kube-apiserver.service
[Unit]
Description=Kubernetes API Server
After=etcd.service
Wants=etcd.service

[Service]
EnvironmentFile=/etc/kubernetes/apiserver
ExecStart=/usr/bin/kube-apiserver \
    $KUBE_ETCD_SERVERS \
    $KUBE_API_ADDRESS \
    $KUBE_API_PORT \
    $KUBE_SERVICE_ADDRESSES \
    $KUBE_ADMISSION_CONTROL \
    $KUBE_API_LOG \
    $KUBE_API_ARGS
Restart=on-failure
Type=notify
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
```
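In ExecStart=, systemd replaces an unquoted `$VAR` that stands as its own word with the variable's value split at whitespace, which is how a single variable such as KUBE_API_LOG can carry several flags. The sketch below approximates that splitting with POSIX sh field splitting and prints one argument per line; it is an illustration only (the function name `show_exec_args` is hypothetical), not systemd's own parser:

```shell
#!/bin/sh
# show_exec_args VALUE...
# Approximates how systemd expands an unquoted "$VAR" word in ExecStart=:
# each VALUE is split at whitespace, and every resulting word becomes a
# separate argument. Prints one argument per line.
show_exec_args() (
    set -f                       # disable globbing so characters like * stay literal
    for value in "$@"; do
        # Intentionally unquoted: rely on the shell's field splitting (IFS).
        for arg in $value; do
            printf '%s\n' "$arg"
        done
    done
)
```

For example, `show_exec_args "--logtostderr=false --log-dir=/var/log/kubernetes/apiserver --v=2"` prints three arguments. It also shows why a note such as `##MasterIP` left at the end of a value would be passed to kube-apiserver as an extra argument.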

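Create the configuration directory and the log directory used below. With --logtostderr=false, kube-apiserver writes its log files into --log-dir, so that directory should exist beforehand:

```
[root@master ~]# mkdir -p /etc/kubernetes /var/log/kubernetes/apiserver
```

Create /etc/kubernetes/apiserver with the following content. 192.168.0.118 is the Master IP. The service cluster IP range must not overlap the host network (192.168.0.0/24 here), so a separate range, 10.0.0.0/24, is used:

```
[root@master ~]# vi /etc/kubernetes/apiserver
KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"
KUBE_API_PORT="--insecure-port=8080"
KUBE_ETCD_SERVERS="--etcd-servers=http://192.168.0.118:2379"
KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.0.0.0/24"
KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"
KUBE_API_LOG="--logtostderr=false --log-dir=/var/log/kubernetes/apiserver --v=2"
KUBE_API_ARGS=" "
```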

4.3 Deploying kube-controller-manager

Copy kube-controller-manager to /usr/bin:

```
[root@master ~]# cp /root/kubernetes/server/bin/kube-controller-manager /usr/bin/
```

Create kube-controller-manager.service under /usr/lib/systemd/system/ with the following content:

```
[root@master ~]# vi /usr/lib/systemd/system/kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
After=kube-apiserver.service
Requires=kube-apiserver.service

[Service]
EnvironmentFile=-/etc/kubernetes/controller-manager
ExecStart=/usr/bin/kube-controller-manager \
    $KUBE_MASTER \
    $KUBE_CONTROLLER_MANAGER_ARGS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
```

Create /etc/kubernetes/controller-manager with the following content (192.168.0.118 is the Master IP):

```
[root@master ~]# vi /etc/kubernetes/controller-manager
KUBE_MASTER="--master=http://192.168.0.118:8080"
KUBE_CONTROLLER_MANAGER_ARGS=" "
```

4.4 Deploying kube-scheduler

Copy kube-scheduler to /usr/bin:

```
[root@master ~]# cp /root/kubernetes/server/bin/kube-scheduler /usr/bin/
```

Create kube-scheduler.service under /usr/lib/systemd/system/ with the following content:

```
[root@master ~]# vi /usr/lib/systemd/system/kube-scheduler.service
[Unit]
Description=Kubernetes Scheduler
After=kube-apiserver.service
Requires=kube-apiserver.service

[Service]
User=root
EnvironmentFile=-/etc/kubernetes/scheduler
ExecStart=/usr/bin/kube-scheduler \
    $KUBE_MASTER \
    $KUBE_SCHEDULER_ARGS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
```

Create /etc/kubernetes/scheduler with the following content (192.168.0.118 is the Master IP):

```
[root@master ~]# vi /etc/kubernetes/scheduler
KUBE_MASTER="--master=http://192.168.0.118:8080"
KUBE_SCHEDULER_ARGS="--logtostderr=true --log-dir=/var/log/kubernetes/scheduler --v=2"
```

4.5 Key Configuration

Copy kubectl to /usr/bin:

```
[root@master ~]# cp /root/kubernetes/server/bin/kubectl /usr/bin
```

Download the cfssl certificate tools, make them executable, and move them into place:

```
[root@master ~]# wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
[root@master ~]# chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64
[root@master ~]# mv cfssl_linux-amd64 /usr/local/bin/cfssl
[root@master ~]# mv cfssljson_linux-amd64 /usr/local/bin/cfssljson
[root@master ~]# mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo
```

Create the following ca-config.json, ca-csr.json and server-csr.json files by hand:

```
[root@master ~]# vi ca-config.json
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "www": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
```

```
[root@master ~]# vi ca-csr.json
{
  "CN": "etcd CA",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing"
    }
  ]
}
```

```
[root@master ~]# vi server-csr.json
{
  "CN": "etcd",
  "hosts": [
    "192.168.0.118",
    "192.168.0.116",
    "192.168.0.117"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing"
    }
  ]
}
```

Generate the key and certificate files:

```
[root@master ~]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
[root@master ~]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server
```
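`cfssljson -bare PREFIX` writes PREFIX.pem (certificate), PREFIX-key.pem (private key) and PREFIX.csr, so the two commands should leave six files in the current directory. A sketch that checks for them; the function name `check_cfssl_outputs` is illustrative:

```shell
#!/bin/sh
# check_cfssl_outputs [DIR]
# Verifies that DIR (default: current directory) contains non-empty
# PREFIX.pem, PREFIX-key.pem and PREFIX.csr for the "ca" and "server"
# prefixes used above. Prints each missing file to stderr; exits 1 if any.
check_cfssl_outputs() (
    dir=${1:-.}
    missing=0
    for prefix in ca server; do
        for f in "$prefix.pem" "$prefix-key.pem" "$prefix.csr"; do
            if [ ! -s "$dir/$f" ]; then
                echo "missing or empty: $dir/$f" >&2
                missing=1
            fi
        done
    done
    exit "$missing"
)
```

To confirm that server.pem carries all three IPs from server-csr.json, `openssl x509 -in server.pem -noout -text` prints the certificate's Subject Alternative Name extension.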

Create a .kube directory under /root, change into it, and generate the kubeconfig file:

```
[root@master ~]# mkdir -p /root/.kube
[root@master ~]# cd /root/.kube/
[root@master .kube]# kubectl config set-cluster kubernetes --certificate-authority=/root/ca.pem --embed-certs=true --server=http://192.168.0.118:8080 --kubeconfig=config
[root@master .kube]# kubectl config set-credentials admin --client-certificate=/root/ca.pem --client-key=/root/ca-key.pem --embed-certs=true --kubeconfig=config
[root@master .kube]# kubectl config set-context kubernetes --cluster=kubernetes --user=admin --kubeconfig=config
[root@master .kube]# kubectl config use-context kubernetes --kubeconfig=config
[root@master .kube]# cp config /etc/kubernetes/admin.conf
```

```
[root@master .kube]# kubectl config get-contexts
CURRENT   NAME         CLUSTER      AUTHINFO   NAMESPACE
*         kubernetes   kubernetes   admin

[root@master .kube]# kubectl config view
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: http://192.168.0.118:8080
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: admin
  name: kubernetes
current-context: kubernetes
kind: Config
preferences: {}
users:
- name: admin
  user:
    client-certificate-data: REDACTED
    client-key-data: REDACTED

[root@master .kube]# kubectl config current-context
kubernetes
```

Add the KUBECONFIG environment variable to /etc/profile and reload it with source:

```
[root@master ~]# vi /etc/profile
export KUBECONFIG=/etc/kubernetes/admin.conf

[root@master ~]# source /etc/profile
```

Reload systemd, enable kube-apiserver.service, kube-controller-manager.service and kube-scheduler.service at boot, and start them:

```
[root@master ~]# systemctl daemon-reload
[root@master ~]# systemctl enable kube-apiserver.service
[root@master ~]# systemctl start kube-apiserver.service
[root@master ~]# systemctl enable kube-controller-manager.service
[root@master ~]# systemctl start kube-controller-manager.service
[root@master ~]# systemctl enable kube-scheduler.service
[root@master ~]# systemctl start kube-scheduler.service
```
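The same sequence can be written as one loop that keeps the start order: etcd, then kube-apiserver, then the two components that depend on it. Re-enabling and re-starting etcd, which is already running from 4.1, does no harm. A sketch; the function name `start_master_services` and the SYSTEMCTL override used for dry runs are assumptions of this example:

```shell
#!/bin/sh
# start_master_services
# Reloads systemd, then enables and starts the Master components in order:
# etcd, kube-apiserver, kube-controller-manager, kube-scheduler.
# SYSTEMCTL (hypothetical, default "systemctl") may be set to "echo" for a dry run.
start_master_services() (
    systemctl_cmd=${SYSTEMCTL:-systemctl}
    # $systemctl_cmd is unquoted on purpose so that a value like "echo" runs as a command.
    $systemctl_cmd daemon-reload || exit 1
    for svc in etcd kube-apiserver kube-controller-manager kube-scheduler; do
        $systemctl_cmd enable "$svc.service" || exit 1
        $systemctl_cmd start "$svc.service" || exit 1
    done
)
```

Running `SYSTEMCTL=echo start_master_services` prints the commands without executing them; plain `start_master_services` (as root) performs them and stops at the first failure.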

Check the status of the Master components:

```
[root@master ~]# kubectl get cs
NAME                 STATUS    MESSAGE             ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-0               Healthy   {"health":"true"}
```
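For scripted checks, the STATUS column can be tested instead of read by eye. A sketch, assuming the hypothetical function name `unhealthy_components`. It reads `kubectl get cs --no-headers` output from standard input and prints every component that is not Healthy. It also fails on empty input, which happens for example when kubectl cannot reach the API server:

```shell
#!/bin/sh
# unhealthy_components
# Reads "kubectl get cs --no-headers" output on stdin (NAME STATUS MESSAGE ERROR),
# prints the NAME of every row whose STATUS is not "Healthy", and exits 1 if
# such a row exists or if there was no input at all.
unhealthy_components() {
    awk '
        NF == 0 { next }                             # ignore blank lines
        { rows++ }
        $2 != "Healthy" { print $1; bad = 1 }
        END { exit((rows == 0 || bad) ? 1 : 0) }
    '
}
```

Typical use: `kubectl get cs --no-headers | unhealthy_components && echo "all Master components healthy"`.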

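Chapter 5 Node Deployment and Configuration

All "Node Deployment and Configuration" steps must be performed on every Node machine.

Configure the yum repository and install a Docker package compatible with the Kubernetes version on the Master:

```
[root@node1 ~]# yum install -y yum-utils device-mapper-persistent-data lvm2
[root@node1 ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
[root@node1 ~]# yum install -y docker-ce docker-ce-cli
```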

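Copy kubernetes-server-linux-amd64.tar.gz to the Node (for example with scp from the Master), extract it, and copy kubelet and kube-proxy to /usr/bin on the Node:

```
[root@node1 ~]# tar zxvf kubernetes-server-linux-amd64.tar.gz
[root@node1 ~]# cp /root/kubernetes/server/bin/kubelet /usr/bin/
[root@node1 ~]# cp /root/kubernetes/server/bin/kube-proxy /usr/bin/
```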

Create kube-proxy.service under /usr/lib/systemd/system/ with the following content, then create the directory it refers to:

```
[root@node1 ~]# vi /usr/lib/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
EnvironmentFile=/etc/kubernetes/config
EnvironmentFile=/etc/kubernetes/proxy
ExecStart=/usr/bin/kube-proxy \
    $KUBE_LOGTOSTDERR \
    $KUBE_LOG_LEVEL \
    $KUBE_MASTER \
    $KUBE_PROXY_ARGS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
```

```
[root@node1 ~]# mkdir -p /etc/kubernetes
```

Create /etc/kubernetes/proxy with the following content:

```
[root@node1 ~]# vi /etc/kubernetes/proxy
KUBE_PROXY_ARGS=""
```

Create /etc/kubernetes/config with the following content (192.168.0.118 is the Master IP):

```
[root@node1 ~]# vi /etc/kubernetes/config
KUBE_LOGTOSTDERR="--logtostderr=true"
KUBE_LOG_LEVEL="--v=0"
KUBE_ALLOW_PRIV="--allow_privileged=false"
KUBE_MASTER="--master=http://192.168.0.118:8080"
```

Reload systemd, enable kube-proxy at boot, and start it:

```
[root@node1 ~]# systemctl daemon-reload
[root@node1 ~]# systemctl enable kube-proxy
[root@node1 ~]# systemctl start kube-proxy
```

Create kubelet.service under /usr/lib/systemd/system/ with the following content, then create its working directory:

```
[root@node1 ~]# vi /usr/lib/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=docker.service
Requires=docker.service

[Service]
WorkingDirectory=/var/lib/kubelet
EnvironmentFile=/etc/kubernetes/kubelet
ExecStart=/usr/bin/kubelet $KUBELET_ARGS
Restart=on-failure
KillMode=process

[Install]
WantedBy=multi-user.target
```

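```
[root@node1 ~]# mkdir -p /var/lib/kubelet
```

Create /etc/kubernetes/kubelet with the following content. 192.168.0.118 is the Master IP; for --hostname-override, use the IP of the node being configured (192.168.0.117 on node1). Note that kubelet.service passes only $KUBELET_ARGS to kubelet, so the other variables in this file take effect only if their flags are moved into KUBELET_ARGS. Recent kubelet releases no longer accept --api-servers; the API server address comes from the file given by --kubeconfig.

```
[root@node1 ~]# vi /etc/kubernetes/kubelet
KUBELET_ADDRESS="--address=0.0.0.0"
KUBELET_HOSTNAME="--hostname-override=192.168.0.117"
KUBELET_API_SERVER="--api-servers=http://192.168.0.118:8080"
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=reg.docker.tb/harbor/pod-infrastructure:latest"
KUBELET_ARGS="--enable-server=true --enable-debugging-handlers=true --fail-swap-on=false --kubeconfig=/var/lib/kubelet/kubeconfig"
```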

Create /var/lib/kubelet/kubeconfig with the following content (192.168.0.118 is the Master IP):

```
[root@node1 ~]# vi /var/lib/kubelet/kubeconfig
apiVersion: v1
kind: Config
users:
- name: kubelet
clusters:
- name: kubernetes
  cluster:
    server: http://192.168.0.118:8080
contexts:
- context:
    cluster: kubernetes
    user: kubelet
  name: service-account-context
current-context: service-account-context
```
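Every Node needs this same file, and only the Master address can differ, so it can be written by a small function instead of by hand in vi. A sketch, assuming the hypothetical function name `write_kubelet_kubeconfig`. The YAML it writes mirrors the file above:

```shell
#!/bin/sh
# write_kubelet_kubeconfig MASTER_IP [FILE]
# Writes the kubelet kubeconfig shown above, pointing at the Master's
# insecure port 8080. FILE defaults to /var/lib/kubelet/kubeconfig.
write_kubelet_kubeconfig() (
    master_ip=$1
    file=${2:-/var/lib/kubelet/kubeconfig}
    if [ -z "$master_ip" ]; then
        echo "usage: write_kubelet_kubeconfig MASTER_IP [FILE]" >&2
        exit 2
    fi
    mkdir -p "$(dirname "$file")" || exit 1
    # Unquoted EOF: ${master_ip} is expanded; everything else is literal YAML.
    cat > "$file" <<EOF
apiVersion: v1
kind: Config
users:
- name: kubelet
clusters:
- name: kubernetes
  cluster:
    server: http://${master_ip}:8080
contexts:
- context:
    cluster: kubernetes
    user: kubelet
  name: service-account-context
current-context: service-account-context
EOF
)
```

On each Node: `write_kubelet_kubeconfig 192.168.0.118`.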

Reload systemd, enable kubelet.service at boot, and start it:

```
[root@node1 ~]# systemctl daemon-reload
[root@node1 ~]# systemctl enable kubelet.service
[root@node1 ~]# systemctl start kubelet.service
```

Return to the Master and verify the deployment:

```
[root@master ~]# kubectl get node
NAME    STATUS   ROLES    AGE   VERSION
node1   Ready    <none>   57s   v1.18.1
```
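Right after kubelet starts, a node may be missing from this list or show NotReady for a short time. A polling sketch that waits until the named nodes report exactly Ready. The function name `wait_for_nodes` and the KUBECTL, INTERVAL and TIMEOUT variables (defaults: kubectl, 5 seconds, 300 seconds) are assumptions for illustration:

```shell
#!/bin/sh
# wait_for_nodes NODE...
# Polls "kubectl get nodes --no-headers" until every named node has STATUS
# exactly "Ready", or until TIMEOUT seconds have been spent sleeping.
# KUBECTL, INTERVAL and TIMEOUT are hypothetical knobs of this sketch.
# Exits 0 on success, 1 on timeout.
wait_for_nodes() (
    kubectl_cmd=${KUBECTL:-kubectl}
    interval=${INTERVAL:-5}
    timeout=${TIMEOUT:-300}
    waited=0
    while :; do
        # Unquoted on purpose so KUBECTL may name any command or shell function.
        listing=$($kubectl_cmd get nodes --no-headers 2>/dev/null)
        pending=0
        for node in "$@"; do
            # STATUS (column 2) of the row whose NAME matches exactly.
            status=$(printf '%s\n' "$listing" | awk -v n="$node" '$1 == n { print $2 }')
            [ "$status" = "Ready" ] || pending=1
        done
        if [ "$pending" -eq 0 ]; then exit 0; fi
        if [ "$waited" -ge "$timeout" ]; then exit 1; fi
        sleep "$interval"
        waited=$((waited + interval))
    done
)
```

For example, `wait_for_nodes node1 node2 && kubectl get node` on the Master.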

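Creating a Pod fails with:

```
[root@master ~]# kubectl create -f dapi-test-pod.yaml
Error from server (ServerTimeout): error when creating "dapi-test-pod.yaml": No API token found for service account "default", retry after the token is automatically created and added to the service account
```

The message indicates that no API token exists for the "default" service account. The ServiceAccount admission plugin (enabled in KUBE_ADMISSION_CONTROL) rejects the Pod until such a token exists, and the token controller in kube-controller-manager does not create tokens until it is given a signing key.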

Solution: configure the ServiceAccount signing key.

1. Generate a private key:

```
[root@master ~]# openssl genrsa -out /etc/kubernetes/serviceaccount.key 2048
Generating RSA private key, 2048 bit long modulus
.............+++
............................+++
e is 65537 (0x10001)
```

2. Edit /etc/kubernetes/apiserver and set KUBE_API_ARGS (replacing the existing empty value):

```
KUBE_API_ARGS="--service-account-key-file=/etc/kubernetes/serviceaccount.key"
```

3. Edit /etc/kubernetes/controller-manager and set KUBE_CONTROLLER_MANAGER_ARGS (replacing the existing empty value):

```
KUBE_CONTROLLER_MANAGER_ARGS="--service-account-private-key-file=/etc/kubernetes/serviceaccount.key"
```

4. Restart the Kubernetes services:

```
systemctl restart etcd kube-apiserver kube-controller-manager kube-scheduler
```

kubelet reports a DNS error:

```
kubelet does not have ClusterDNS IP configured and cannot create Pod using "ClusterFirst" policy. Fail
```

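Solution:

Add the cluster DNS flags to KUBELET_ARGS in /etc/kubernetes/kubelet. kubelet.service passes only $KUBELET_ARGS to kubelet, so a separate variable such as KUBE_ARGS would be ignored. The DNS IP (10.0.0.110 here) must lie inside the --service-cluster-ip-range configured for kube-apiserver. Then reload systemd and restart kubelet:

```
KUBELET_ARGS="--enable-server=true --enable-debugging-handlers=true --fail-swap-on=false --kubeconfig=/var/lib/kubelet/kubeconfig --cluster-dns=10.0.0.110 --cluster-domain=cluster.local"

systemctl daemon-reload; systemctl restart kubelet
```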
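On several Nodes the edit can be made with sed rather than by hand. A sketch, assuming the hypothetical function name `add_kubelet_dns_args` and optional CLUSTER_DNS / CLUSTER_DOMAIN overrides. It appends the two flags inside the quoted KUBELET_ARGS value and does nothing if that line already contains --cluster-dns:

```shell
#!/bin/sh
# add_kubelet_dns_args [FILE]
# Appends --cluster-dns/--cluster-domain to the KUBELET_ARGS="..." line in
# FILE (default: /etc/kubernetes/kubelet), unless that line already has
# --cluster-dns. Defaults are the values used above (10.0.0.110, cluster.local).
add_kubelet_dns_args() (
    file=${1:-/etc/kubernetes/kubelet}
    dns=${CLUSTER_DNS:-10.0.0.110}
    domain=${CLUSTER_DOMAIN:-cluster.local}
    # Already configured: leave the file untouched.
    if grep -q '^KUBELET_ARGS=.*--cluster-dns=' "$file"; then exit 0; fi
    # Insert the flags just before the closing quote of KUBELET_ARGS="...".
    sed -i "s|^\(KUBELET_ARGS=\".*\)\"$|\1 --cluster-dns=$dns --cluster-domain=$domain\"|" "$file"
)
```

Run `add_kubelet_dns_args` as root on each Node, then `systemctl daemon-reload; systemctl restart kubelet`. `sed -i` as used here is the GNU sed form found on CentOS.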

Starting a Pod fails with a CreatePodSandbox or RunPodSandbox error. The events show:

```
Warning FailedCreatePodSandBox 40s (x11 over 8m41s) kubelet, server01 Failed to create pod sandbox: rpc error: code = Unknown desc = failed pulling image "k8s.gcr.io/pause:3.1": Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)
```

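Solution:

Pull the image from another registry and re-tag it with the name kubelet expects, on each Node that runs Pods:

```
docker pull mirrorgooglecontainers/pause:3.1
docker tag mirrorgooglecontainers/pause:3.1 k8s.gcr.io/pause:3.1
```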
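When several Nodes need the same image, the pull-and-tag pair can be wrapped in a function and run on each of them. A sketch, assuming the hypothetical name `pull_via_mirror` and a DOCKER variable that replaces the docker command (for example with echo for a dry run):

```shell
#!/bin/sh
# pull_via_mirror MIRROR_IMAGE TARGET_IMAGE
# Pulls MIRROR_IMAGE from a reachable registry and tags it as TARGET_IMAGE,
# the name kubelet tries to use. DOCKER (hypothetical, default "docker")
# may be set to "echo" to print the commands instead of running them.
pull_via_mirror() (
    docker_cmd=${DOCKER:-docker}
    if [ $# -ne 2 ]; then
        echo "usage: pull_via_mirror MIRROR_IMAGE TARGET_IMAGE" >&2
        exit 2
    fi
    $docker_cmd pull "$1" || exit 1
    $docker_cmd tag "$1" "$2" || exit 1
)
```

For the error above: `pull_via_mirror mirrorgooglecontainers/pause:3.1 k8s.gcr.io/pause:3.1`.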