Powered by HtmlUnit: a super evolution for our little web spider

Preface

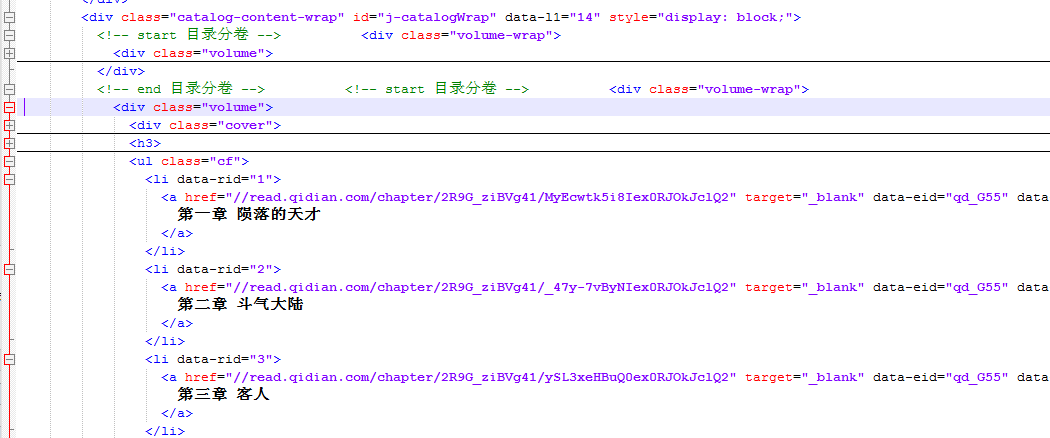

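Some time ago I wrote an online novel scraper and reader (click here: https://www.cnblogs.com/huanzi-qch/p/9817831.html). When we tried to scrape a novel's table of contents from Qidian (起点中文网), we found that the catalog data was not in the HTML. It is fetched by an Ajax request while the page loads, and the corresponding div stays hidden until you click "目录" (Catalog). After some digging we did eventually find the API URL and got the data by building a POST request with HttpClient, but that approach is tedious and costly. Is there another way?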

A brief introduction to HtmlUnit

While searching around I found a great tool: HtmlUnit. Official site, click here: http://htmlunit.sourceforge.net

The following introduction is quoted from the official website:

HtmlUnit is a "GUI-Less browser for Java programs". It models HTML documents and provides an API that allows you to invoke pages, fill out forms, click links, etc... just like you do in your "normal" browser.

It has fairly good JavaScript support (which is constantly improving) and is able to work even with quite complex AJAX libraries, simulating Chrome, Firefox or Internet Explorer depending on the configuration used.

It is typically used for testing purposes or to retrieve information from web sites.

HtmlUnit is not a generic unit testing framework. It is specifically a way to simulate a browser for testing purposes and is intended to be used within another testing framework such as JUnit or TestNG. Refer to the document "Getting Started with HtmlUnit" for an introduction.

HtmlUnit is used as the underlying "browser" by different Open Source tools like Canoo WebTest, JWebUnit, WebDriver, JSFUnit, WETATOR, Celerity, Spring MVC Test HtmlUnit, ...

HtmlUnit was originally written by Mike Bowler of Gargoyle Software and is released under the Apache 2 license. Since then, it has received many contributions from other developers, and would not be where it is today without their assistance.

HtmlUnit provides excellent JavaScript support, simulating the behavior of the configured browser (Firefox or Internet Explorer). It uses the Rhino JavaScript engine for the core language (plus workarounds for some Rhino bugs) and provides the implementation for the objects specific to execution in a browser.


Writing the code

Quick start, click here: http://htmlunit.sourceforge.net/gettingStarted.html

Maven dependency:

```xml
<dependency>
    <groupId>net.sourceforge.htmlunit</groupId>
    <artifactId>htmlunit</artifactId>
    <version>2.32</version>
</dependency>
```

To get the catalog we were after earlier, we can do this:

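```java
import com.gargoylesoftware.htmlunit.BrowserVersion;
import com.gargoylesoftware.htmlunit.NicelyResynchronizingAjaxController;
import com.gargoylesoftware.htmlunit.WebClient;
import com.gargoylesoftware.htmlunit.html.HtmlPage;

import java.io.IOException;

public class QidianCatalogDemo {
    public static void main(String[] args) {
        // Create a WebClient that emulates a specific browser; try-with-resources closes it afterwards
        try (WebClient webClient = new WebClient(BrowserVersion.FIREFOX_52)) {
            // A few important settings
            webClient.getOptions().setJavaScriptEnabled(true);                      // enable JavaScript
            webClient.setAjaxController(new NicelyResynchronizingAjaxController()); // re-synchronize asynchronous Ajax calls
            webClient.getOptions().setThrowExceptionOnFailingStatusCode(true);      // throw on failing HTTP status codes
            webClient.getOptions().setThrowExceptionOnScriptError(true);            // throw on JavaScript errors
            webClient.getOptions().setCssEnabled(false);                            // disable CSS: there is no UI, so nothing to render
            webClient.getOptions().setTimeout(10000);                               // connection timeout in milliseconds

            // Load the Qidian book detail / catalog page
            HtmlPage page = webClient.getPage("https://book.qidian.com/info/1209977");
            // Give background JavaScript up to 5 seconds to finish
            webClient.waitForBackgroundJavaScript(5000);
            // Simulate a click on the "目录" (Catalog) tab
            page = page.getHtmlElementById("j_catalogPage").click();
            // Print the page source
            System.out.println(page.asXml());
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```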

Results

Before the JavaScript runs

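Once the JavaScript has run, requested the data and rendered it, we fetch the page source again, and this time we get HTML that contains the catalog data.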

Closing words

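These few lines of code already show how powerful HtmlUnit is: in principle, it can simulate anything a browser can do. I'm noting it down here for now and will add it to the online novel scraper and reader when I have time (click here: https://www.cnblogs.com/huanzi-qch/p/9817831.html).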

Update

Common HtmlPage methods for selecting the elements we want

```java
HtmlPage page; // an HtmlPage obtained from webClient.getPage(...)

// Find an element by id
HtmlElement id = page.getHtmlElementById("id");
// Find elements by tag name
DomNodeList<DomElement> input = page.getElementsByTagName("input");
// Find elements by attribute, e.g. class
List<HtmlElement> elements = page.getDocumentElement().getElementsByAttribute("div", "class", "list-content");
```

Update 2020-12-03

We had been using HtmlUnit 2.32 all along. Recently, while scraping a Vue page, the program kept throwing errors and could not get past the page.

After upgrading to 2.45.0 (the latest version at the time of writing), the program could scrape the page again:

```xml
<dependency>
    <groupId>net.sourceforge.htmlunit</groupId>
    <artifactId>htmlunit</artifactId>
    <version>2.45.0</version>
</dependency>
```

I'm also noting down how to wait for the page's JavaScript to finish rendering the page.

First we write a simple Vue page for testing:

```html
<template>
  <div class="main">
    <p v-if="isShow">Loading data...</p>
    <div v-else>
      <span class="item" v-for="(item, index) in items" :key="index">{{item}}</span>
    </div>
  </div>
</template>

<script>
// Import the project's own Axios wrapper
import AxiosUtil from "@/utils/axiosUtil.js"

var vue;
export default {
  data() {
    return {
      isShow: true,
      items: []
    }
  },
  created() {
    vue = this;
    console.log("Calling the backend to query data in 5 seconds!");
    setTimeout(function () {
      console.log("Calling the backend API!");
      // Request the backend service to get the data
      AxiosUtil.post("http://localhost:8081/test", {}, function (response) {
        vue.items = response;
        vue.isShow = false;
        console.log(response);
      })
    }, 5000);
  }
}
</script>

<style scoped>
</style>
```

Scraping with HtmlUnit

This is our wrapped utility class:

```java
import com.gargoylesoftware.htmlunit.BrowserVersion;
import com.gargoylesoftware.htmlunit.HttpMethod;
import com.gargoylesoftware.htmlunit.NicelyResynchronizingAjaxController;
import com.gargoylesoftware.htmlunit.Page;
import com.gargoylesoftware.htmlunit.WebClient;
import com.gargoylesoftware.htmlunit.WebRequest;
import com.gargoylesoftware.htmlunit.util.Cookie;
import com.gargoylesoftware.htmlunit.util.NameValuePair;
import lombok.extern.slf4j.Slf4j;

import java.io.IOException;
import java.net.URL;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/**
 * WebClient utility class
 */
@Slf4j
public class WebClientUtil {

    /**
     * Get a WebClient
     */
    public static WebClient getWebClient() {
        // Create a WebClient that emulates a randomly chosen browser
        BrowserVersion[] versions = {BrowserVersion.INTERNET_EXPLORER, BrowserVersion.CHROME, BrowserVersion.EDGE};
        WebClient webClient = new WebClient(versions[(int) (versions.length * Math.random())]);
        // A few important settings
        webClient.getCookieManager().setCookiesEnabled(true);                   // enable cookies
        webClient.getOptions().setThrowExceptionOnFailingStatusCode(true);      // throw on failing HTTP status codes
        webClient.getOptions().setThrowExceptionOnScriptError(true);            // throw on JavaScript errors
        webClient.getOptions().setUseInsecureSSL(true);                         // skip SSL certificate validation
        webClient.getOptions().setJavaScriptEnabled(true);                      // enable JavaScript
        webClient.getOptions().setRedirectEnabled(true);                        // follow redirects
        webClient.getOptions().setCssEnabled(true);                             // enable CSS
        webClient.getOptions().setTimeout(10000);                               // connection timeout in milliseconds
        webClient.setAjaxController(new NicelyResynchronizingAjaxController()); // re-synchronize asynchronous Ajax calls
        webClient.getOptions().setAppletEnabled(true);                          // enable applets
        webClient.getOptions().setGeolocationEnabled(true);                     // enable geolocation
        // waitForBackgroundJavaScript(...) and waitForBackgroundJavaScriptStartingBefore(...) block when called
        // instead of storing a setting, so call them after getPage(), not here
        return webClient;
    }

    /**
     * Set cookies from a raw "k1=v1; k2=v2" string
     */
    public static void setCookie(WebClient webClient, String domain, String cookieString) {
        for (String value : cookieString.split(";")) {
            // limit 2 keeps any '=' that appears inside the cookie value
            String[] split = value.trim().split("=", 2);
            // skip segments that are not name=value pairs
            if (split.length < 2) {
                continue;
            }
            // domain, name, value
            Cookie cookie = new Cookie(domain, split[0], split[1]);
            webClient.getCookieManager().addCookie(cookie);
        }
    }

    /**
     * Send a GET request to a URL
     */
    public static Page gatherForGet(WebClient webClient, String url, String refererUrl, Map<String, String> headers) throws IOException {
        // Referer, e.g. Baidu: https://www.baidu.com
        webClient.removeRequestHeader("Referer");
        webClient.addRequestHeader("Referer", refererUrl);
        // Extra headers, if any; set cookies via webClient.getCookieManager().addCookie(cookie) (see setCookie)
        if (headers != null && !headers.isEmpty()) {
            headers.forEach((key, value) -> {
                webClient.removeRequestHeader(key);
                webClient.addRequestHeader(key, value);
            });
        }
        // GET request
        WebRequest request = new WebRequest(new URL(url), HttpMethod.GET);
        request.setProxyHost(webClient.getOptions().getProxyConfig().getProxyHost());
        request.setProxyPort(webClient.getOptions().getProxyConfig().getProxyPort());
        return webClient.getPage(request);
    }

    /**
     * Send a POST request to a URL
     */
    public static Page gatherForPost(WebClient webClient, String url, String refererUrl, Map<String, String> headers, Map<String, Object> paramMap) throws IOException {
        // Referer, e.g. Baidu: https://www.baidu.com
        webClient.removeRequestHeader("Referer");
        webClient.addRequestHeader("Referer", refererUrl);
        // Extra headers, if any; set cookies via webClient.getCookieManager().addCookie(cookie) (see setCookie)
        if (headers != null && !headers.isEmpty()) {
            headers.forEach((key, value) -> {
                webClient.removeRequestHeader(key);
                webClient.addRequestHeader(key, value);
            });
        }
        // POST request
        WebRequest request = new WebRequest(new URL(url), HttpMethod.POST);
        request.setProxyHost(webClient.getOptions().getProxyConfig().getProxyHost());
        request.setProxyPort(webClient.getOptions().getProxyConfig().getProxyPort());

        /* Server method uses @RequestBody: send Content-Type application/json; charset=UTF-8 and put the parameters in the body as JSON */
        // request.setRequestBody(JSONObject.fromObject(paramMap).toString());
        // webClient.removeRequestHeader("Content-Type");
        // webClient.addRequestHeader("Content-Type", "application/json;charset=UTF-8");

        /* No @RequestBody: send Content-Type application/x-www-form-urlencoded; charset=UTF-8 and pass the parameters as form data */
        List<NameValuePair> list = new ArrayList<>();
        for (Map.Entry<String, Object> entry : paramMap.entrySet()) {
            list.add(new NameValuePair(entry.getKey(), String.valueOf(entry.getValue())));
        }
        request.setRequestParameters(list);
        webClient.removeRequestHeader("Content-Type");
        webClient.addRequestHeader("Content-Type", "application/x-www-form-urlencoded;charset=UTF-8");
        webClient.removeRequestHeader("Accept");
        webClient.addRequestHeader("Accept", "*/*");
        return webClient.getPage(request);
    }
}
```
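To make the string handling in `WebClientUtil` more concrete, here is a small stdlib-only sketch. `parseCookieString` mirrors the splitting done in `setCookie`. `toFormBody` shows roughly what an `application/x-www-form-urlencoded` POST body looks like. As far as we understand, HtmlUnit does this encoding itself when you call `setRequestParameters`, so this is only an illustration. The class and method names (`RequestEncodingSketch`, `parseCookieString`, `toFormBody`) are hypothetical and are not part of HtmlUnit.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical helper class, stdlib only; not part of HtmlUnit
public class RequestEncodingSketch {

    // Splits "k1=v1; k2=v2" into an ordered map, like the loop in setCookie;
    // segments without '=' are skipped, and '=' inside a value is kept (split limit 2)
    public static Map<String, String> parseCookieString(String cookieString) {
        Map<String, String> cookies = new LinkedHashMap<>();
        for (String segment : cookieString.split(";")) {
            String[] kv = segment.trim().split("=", 2);
            if (kv.length < 2 || kv[0].isEmpty()) {
                continue;
            }
            cookies.put(kv[0], kv[1]);
        }
        return cookies;
    }

    // Builds an application/x-www-form-urlencoded string "k1=v1&k2=v2",
    // URL-encoding each name and value as UTF-8 (spaces become '+')
    public static String toFormBody(Map<String, ?> params) {
        StringJoiner body = new StringJoiner("&");
        for (Map.Entry<String, ?> entry : params.entrySet()) {
            body.add(encode(entry.getKey()) + "=" + encode(String.valueOf(entry.getValue())));
        }
        return body.toString();
    }

    private static String encode(String s) {
        try {
            return URLEncoder.encode(s, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            // UTF-8 is always available on the JVM
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        // prints {uid=42, token=abc==}
        System.out.println(parseCookieString("uid=42; token=abc==; flag"));

        Map<String, Object> params = new LinkedHashMap<>();
        params.put("name", "a b&c");
        params.put("page", 1);
        // prints name=a+b%26c&page=1
        System.out.println(toFormBody(params));
    }
}
```

Splitting with a limit of 2 matters for values such as Base64 tokens, which often end in `=`. `String.valueOf` avoids a `ClassCastException` when a parameter value is not a `String`.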

Testing with main

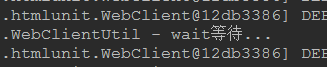

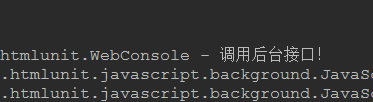

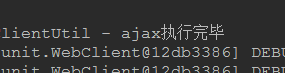

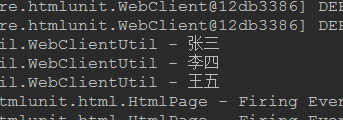

```java
/**
 * main test: assumes it sits in a class annotated with @Slf4j (e.g. WebClientUtil) and additionally imports
 * com.gargoylesoftware.htmlunit.html.HtmlElement and com.gargoylesoftware.htmlunit.html.HtmlPage
 */
public static void main(String[] args) {
    try (WebClient webClient = WebClientUtil.getWebClient()) {
        Page page = WebClientUtil.gatherForGet(webClient, "http://localhost:8080/#/test", "", null);
        // An HTML response comes back as an HtmlPage, so cast it
        HtmlPage htmlPage = (HtmlPage) page;
        // Query elements by attribute, e.g. class
        List<HtmlElement> elements = htmlPage.getDocumentElement().getElementsByAttribute("span", "class", "item");
        // Check up to 20 times, 500 ms apart, until the Ajax data has been rendered
        for (int i = 0; i < 20; i++) {
            if (elements.size() > 0) {
                log.info("Ajax finished");
                break;
            }
            synchronized (htmlPage) {
                log.info("Waiting...");
                // Keep waiting
                htmlPage.wait(500);
                // Re-query the DOM
                elements = htmlPage.getDocumentElement().getElementsByAttribute("span", "class", "item");
            }
        }
        elements.forEach(htmlElement -> log.info(htmlElement.asText()));
    } catch (Exception e) {
        e.printStackTrace();
    }
}
```
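The `for`/`wait` loop in `main` is a bounded polling pattern: check, wait a little, check again, and give up after a fixed number of attempts. Here is a minimal stdlib-only sketch of the same idea. It assumes a hypothetical helper `pollUntil` and uses `Thread.sleep` in place of `htmlPage.wait(500)`.

```java
import java.util.function.BooleanSupplier;

// Hypothetical helper, stdlib only: bounded "check, wait, re-check" polling
public class PollUntil {

    // Evaluates check up to maxAttempts times, sleeping intervalMillis between attempts.
    // Returns true as soon as check passes; false if all attempts fail or the thread is interrupted.
    public static boolean pollUntil(BooleanSupplier check, int maxAttempts, long intervalMillis) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            if (check.getAsBoolean()) {
                return true;
            }
            if (attempt < maxAttempts) {
                try {
                    Thread.sleep(intervalMillis);
                } catch (InterruptedException e) {
                    // restore the interrupt flag and give up
                    Thread.currentThread().interrupt();
                    return false;
                }
            }
        }
        return false;
    }

    public static void main(String[] args) {
        int[] calls = {0};
        // Simulates content that "appears" on the third check
        boolean ready = pollUntil(() -> ++calls[0] >= 3, 20, 100);
        // prints "true after 3 checks"
        System.out.println(ready + " after " + calls[0] + " checks");
    }
}
```

In the HtmlUnit test, the check could be something like `() -> !htmlPage.getDocumentElement().getElementsByAttribute("span", "class", "item").isEmpty()` with 20 attempts of 500 ms. HtmlUnit's own `webClient.waitForBackgroundJavaScript(...)`, used in the first example, is another option.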

Results

Visiting the Vue page directly in a browser

Scraped with HtmlUnit

Open-source code

The code is open source and hosted on my GitHub and Gitee (码云):


浙公网安备 33010602011771号
