Face Detection and Recognition with Keras and OpenCV

I. Dataset Selection and Approach

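1. Dataset: the dataset comes from the public datasets on Baidu's AI Studio platform. It is an experimental dataset, and because it is small, it limits the accuracy the deep network can reach after training. Dataset link: https://aistudio.baidu.com/aistudio/datasetdetail/8325

2. Usage: after unzipping, the dataset contains four classes of labeled images. This post uses only two of them, "jiangwen" and "zhangziyi", for a simple binary classification task; if you need other classes, adjust the training code accordingly.

As shown in the figure:

(Note that the face dataset folder is placed inside the project folder, which is named cascadeFace.)

3. Approach: use the Haar cascade classifier provided by OpenCV to detect faces, crop the face regions it finds, and feed each crop into a trained AlexNet convolutional model to get the recognition result (we will, of course, build and train the AlexNet ourselves). How to use the Haar classifier is explained in the test code section later; for the theory behind Haar features, see https://www.cnblogs.com/zyly/p/9410563.html.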
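The preprocessing code in the next section labels images by folder name, with 0 for a folder ending in `jiangwen` and 1 for everything else, so it only supports two classes. If you want to use more of the four classes, one option is to map each person's folder to an integer. The helper `build_label_map` below is hypothetical (it is not part of the original code) and assumes one sub-folder per person directly under the dataset root:

```python
import os


def build_label_map(root, class_names=None):
    """Map each class folder under `root` to an integer label.

    Hypothetical helper: assumes one sub-folder per person directly under root.
    If class_names is given, only those folders are used, in that order.
    """
    if class_names is None:
        # Sort so that the mapping is the same on every run and machine
        class_names = sorted(
            d for d in os.listdir(root) if os.path.isdir(os.path.join(root, d))
        )
    return {name: index for index, name in enumerate(class_names)}


# The two classes used in this post (no disk access when class_names is given)
print(build_label_map('./face', ['jiangwen', 'zhangziyi']))  # {'jiangwen': 0, 'zhangziyi': 1}

# In load_dataset(), the folder paths collected by read_path() could then be
# turned into labels with, for example:
# labels = np.array([label_map[os.path.basename(p)] for p in labels])
```

If you go this way, `nb_classes` in the training code must equal `len(label_map)`.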

II. Data Preprocessing

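The code is as follows (the training script later imports it as load_data, so save it as load_data.py):

```python
"""Preprocessing: load the face images and their labels."""
import os
import sys

import cv2
import numpy as np

IMAGE_SIZE = 64


def resize_image(image, height=IMAGE_SIZE, width=IMAGE_SIZE):
    """Pad the image to a square with black borders, then resize it to height x width."""
    top, bottom, left, right = (0, 0, 0, 0)

    h, w, _ = image.shape

    # Find the longest edge (for images whose height and width differ)
    longest_edge = max(h, w)

    # Compute how many pixels the shorter edge needs to match the longest edge
    if h < longest_edge:
        dh = longest_edge - h
        top = dh // 2
        bottom = dh - top
    elif w < longest_edge:
        dw = longest_edge - w
        left = dw // 2
        right = dw - left

    # Border color (OpenCV uses BGR order; black is the same either way)
    BLACK = [0, 0, 0]

    # Add a border so that height == width; with cv2.BORDER_CONSTANT the color comes from value
    constant = cv2.copyMakeBorder(image, top, bottom, left, right, cv2.BORDER_CONSTANT, value=BLACK)

    # cv2.resize expects the target size as (width, height)
    return cv2.resize(constant, (width, height))


# Training data collected by read_path()
images = []
labels = []


def read_path(path_name):
    for dir_item in os.listdir(path_name):
        # Join the current directory and the entry into an absolute path
        full_path = os.path.abspath(os.path.join(path_name, dir_item))

        if os.path.isdir(full_path):
            # Recurse into sub-folders (one folder per person)
            read_path(full_path)
        elif dir_item.lower().endswith(('.jpg', '.png')):
            image = cv2.imread(full_path)
            if image is None:
                # Skip files that OpenCV cannot decode
                continue
            image = resize_image(image, IMAGE_SIZE, IMAGE_SIZE)
            images.append(image)
            # The label is the folder the image lives in
            labels.append(path_name)

    return images, labels


# Read the training data from the given path
def load_dataset(path_name):
    images, labels = read_path(path_name)

    # Convert the images to a 4-D array: number of images * IMAGE_SIZE * IMAGE_SIZE * 3
    # (each image is 64 x 64 pixels with 3 color values per pixel)
    images = np.array(images)
    print(images.shape)

    # Labels: images in the 'jiangwen' folder are class 0, all others class 1
    labels = np.array([0 if label.endswith('jiangwen') else 1 for label in labels])

    return images, labels


if __name__ == '__main__':
    if len(sys.argv) > 2:
        print('Usage: %s [path_name]\r\n' % (sys.argv[0]))
    else:
        images, labels = load_dataset(sys.argv[1] if len(sys.argv) == 2 else './face')
```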

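Note: resize_image() first checks whether the image's height and width are equal. If they are not, it pads the shorter side with black borders until the image is square, and only then calls cv2.resize(). Because the padded image is square, resizing scales both axes by the same factor, so the face keeps its proportions and is not distorted.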
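To see the padding arithmetic in isolation, here is a NumPy-only sketch of the pad-to-square step. `np.pad` with `mode='constant'` stands in for `cv2.copyMakeBorder` with `cv2.BORDER_CONSTANT`, and the final `cv2.resize` is left out so the example runs without OpenCV; the function name `pad_to_square` is our own.

```python
import numpy as np


def pad_to_square(image, value=0):
    """Pad an H x W x C image with a constant border so that H == W.

    The extra rows/columns are split between the two sides, with the odd
    pixel (if any) going to the bottom/right, as in resize_image().
    """
    h, w = image.shape[:2]
    longest_edge = max(h, w)
    dh, dw = longest_edge - h, longest_edge - w
    top, left = dh // 2, dw // 2
    bottom, right = dh - top, dw - left
    return np.pad(image, ((top, bottom), (left, right), (0, 0)),
                  mode='constant', constant_values=value)


tall = np.ones((100, 61, 3), dtype=np.uint8)
square = pad_to_square(tall)
print(square.shape)  # (100, 100, 3): 19 black columns on the left, 20 on the right
```

Resizing this 100 × 100 result to 64 × 64 shrinks both axes by the same factor, which is exactly why the padding step prevents distortion.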

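III. Model Construction and Training

1. Model architecture

Note: the AlexNet reference diagram illustrates the architecture well. Although AlexNet is by today's standards a relatively simple, basic convolutional model, it still has a very large number of parameters: when it is used for classification, its fully connected layers alone hold tens of millions of parameters (see the Param # column in the table below), which makes the network costly to train, so we add Dropout layers that randomly drop half of the units. Also note that the input size in this experiment (64 × 64 × 3, set by IMAGE_SIZE) differs from the one shown in the diagram; see the model construction and training code below for details.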
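`Dropout(0.5)` zeroes each activation with probability 0.5 during training. Keras uses the "inverted" variant: the surviving units are scaled by 1 / (1 - rate) during training, so the layer can simply pass values through at inference time. The NumPy sketch below illustrates that idea; it is not Keras's own code, and the function name `dropout` is ours.

```python
import numpy as np


def dropout(x, rate=0.5, training=True, rng=None):
    """Inverted dropout: zero each unit with probability `rate` while training
    and scale the survivors by 1 / (1 - rate); identity at inference time."""
    if not training or rate == 0.0:
        return x
    rng = np.random.default_rng() if rng is None else rng
    keep_mask = rng.random(x.shape) >= rate
    return x * keep_mask / (1.0 - rate)


activations = np.ones(4096)  # e.g. the output of one Dense(4096) layer
dropped = dropout(activations, rate=0.5, rng=np.random.default_rng(0))
# About half of the units are zero and the survivors become 2.0,
# so the mean activation stays close to 1.0
print((dropped == 0).mean(), dropped.mean())
```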

2. Model parameter table

(alexnet)

```text
Layer (type)                      Output Shape            Param #
=================================================================
conv2d_31 (Conv2D)                (None, 55, 55, 96)      28896
activation_47 (Activation)        (None, 55, 55, 96)      0
max_pooling2d_19 (MaxPooling2D)   (None, 27, 27, 96)      0
conv2d_32 (Conv2D)                (None, 27, 27, 256)     614656
activation_48 (Activation)        (None, 27, 27, 256)     0
max_pooling2d_20 (MaxPooling2D)   (None, 13, 13, 256)     0
conv2d_33 (Conv2D)                (None, 13, 13, 384)     885120
activation_49 (Activation)        (None, 13, 13, 384)     0
conv2d_34 (Conv2D)                (None, 13, 13, 384)     1327488
activation_50 (Activation)        (None, 13, 13, 384)     0
conv2d_35 (Conv2D)                (None, 13, 13, 256)     884992
activation_51 (Activation)        (None, 13, 13, 256)     0
max_pooling2d_21 (MaxPooling2D)   (None, 6, 6, 256)       0
flatten_5 (Flatten)               (None, 9216)            0
dense_17 (Dense)                  (None, 4096)            37752832
activation_52 (Activation)        (None, 4096)            0
dropout_15 (Dropout)              (None, 4096)            0
dense_18 (Dense)                  (None, 4096)            16781312
activation_53 (Activation)        (None, 4096)            0
dropout_16 (Dropout)              (None, 4096)            0
dense_19 (Dense)                  (None, 2)               8194
```
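The shapes and parameter counts in the table follow from two formulas. For a "valid" convolution or pooling, out = (in - k) // s + 1, while "same" padding with stride 1 keeps the size; and a layer's parameters are its weights plus biases, k·k·C_in·C_out + C_out for Conv2D and n_in·n_out + n_out for Dense. The short script below recomputes the table from the 64 × 64 × 3 input used in this post; the layer list simply mirrors build_model().

```python
def conv_out(size, kernel, stride=1, padding='valid'):
    """Spatial output size of a Conv2D / MaxPooling2D layer."""
    if padding == 'same':
        return -(-size // stride)  # ceil(size / stride)
    return (size - kernel) // stride + 1


def conv_params(kernel, c_in, c_out):
    return kernel * kernel * c_in * c_out + c_out  # weights + biases


def dense_params(n_in, n_out):
    return n_in * n_out + n_out  # weights + biases


# (layer type, kernel, stride, padding, filters) in the order of build_model()
layers = [
    ('conv', 10, 1, 'valid', 96), ('pool', 3, 2, 'valid', None),
    ('conv', 5, 1, 'same', 256), ('pool', 3, 2, 'valid', None),
    ('conv', 3, 1, 'same', 384), ('conv', 3, 1, 'same', 384),
    ('conv', 3, 1, 'same', 256), ('pool', 3, 2, 'valid', None),
]

size, channels, params = 64, 3, []
for kind, k, s, pad, filters in layers:
    size = conv_out(size, k, s, pad)
    if kind == 'conv':
        params.append(conv_params(k, channels, filters))
        channels = filters
    print(kind, (size, size, channels))

flat = size * size * channels  # 6 * 6 * 256 = 9216
params += [dense_params(flat, 4096), dense_params(4096, 4096), dense_params(4096, 2)]
print(params)
print('total:', sum(params))  # total: 58283490
```

The three Dense layers account for about 54.5 million of the roughly 58.3 million parameters (about 94%), which is why the Dropout layers sit between them.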

3. Model construction and training code

```python
# Written against the TensorFlow 2.x tf.keras API (Keras 2 style);
# ImageDataGenerator is deprecated in recent releases.
import random

import numpy as np
from sklearn.model_selection import train_test_split
from tensorflow.keras import backend
from tensorflow.keras.layers import Activation, Conv2D, Dense, Dropout, Flatten, MaxPooling2D
from tensorflow.keras.models import Sequential, load_model
from tensorflow.keras.optimizers import SGD
from tensorflow.keras.optimizers.schedules import InverseTimeDecay
from tensorflow.keras.preprocessing.image import ImageDataGenerator
from tensorflow.keras.utils import to_categorical

from load_data import load_dataset, resize_image, IMAGE_SIZE  # load_data is the preprocessing code above

MODEL_PATH = './cascadeface.model.h5'


class Dataset:
    def __init__(self, path_name):
        # Training set
        self.train_images = None
        self.train_labels = None
        # Validation set
        self.valid_images = None
        self.valid_labels = None
        # Test set
        self.test_images = None
        self.test_labels = None
        # Path the dataset is loaded from
        self.path_name = path_name
        # Input shape in the dimension order used by the current backend
        self.input_shape = None

    # Load the dataset, split it into training/validation/test sets, then preprocess it
    def load(self, img_rows=IMAGE_SIZE, img_cols=IMAGE_SIZE, img_channels=3, nb_classes=2):
        images, labels = load_dataset(self.path_name)
        seed = random.randint(0, 100)

        # Hold out the test set first so that it never overlaps the training data,
        # then split the remaining images into training and validation sets
        train_val_images, test_images, train_val_labels, test_labels = train_test_split(
            images, labels, test_size=0.2, random_state=seed)
        train_images, valid_images, train_labels, valid_labels = train_test_split(
            train_val_images, train_val_labels, test_size=0.2, random_state=seed)

        # 'channels_first' expects (channels, rows, cols); 'channels_last' expects (rows, cols, channels).
        # The images are loaded as (rows, cols, channels), so channels_first needs a transpose, not a reshape
        if backend.image_data_format() == 'channels_first':
            train_images = train_images.transpose(0, 3, 1, 2)
            valid_images = valid_images.transpose(0, 3, 1, 2)
            test_images = test_images.transpose(0, 3, 1, 2)
            self.input_shape = (img_channels, img_rows, img_cols)
        else:
            self.input_shape = (img_rows, img_cols, img_channels)

        # Print the number of training, validation and test samples
        print(train_images.shape[0], 'train samples')
        print(valid_images.shape[0], 'valid samples')
        print(test_images.shape[0], 'test samples')

        # categorical_crossentropy needs one-hot labels; with nb_classes=2 each label becomes a 2-element vector
        self.train_labels = to_categorical(train_labels, nb_classes)
        self.valid_labels = to_categorical(valid_labels, nb_classes)
        self.test_labels = to_categorical(test_labels, nb_classes)

        # Convert the pixels to float and normalize them to [0, 1]
        self.train_images = train_images.astype('float32') / 255
        self.valid_images = valid_images.astype('float32') / 255
        self.test_images = test_images.astype('float32') / 255


class CNNModel:
    """AlexNet-style CNN."""

    def __init__(self):
        self.model = None

    # Build the model
    def build_model(self, dataset, nb_classes=2):
        # An empty sequential model (a linear stack of layers)
        self.model = Sequential()

        self.model.add(Conv2D(96, (10, 10), input_shape=dataset.input_shape))
        self.model.add(Activation('relu'))
        self.model.add(MaxPooling2D(pool_size=(3, 3), strides=2))

        self.model.add(Conv2D(256, (5, 5), padding='same'))
        self.model.add(Activation('relu'))
        self.model.add(MaxPooling2D(pool_size=(3, 3), strides=2))

        self.model.add(Conv2D(384, (3, 3), padding='same'))
        self.model.add(Activation('relu'))
        self.model.add(Conv2D(384, (3, 3), padding='same'))
        self.model.add(Activation('relu'))
        self.model.add(Conv2D(256, (3, 3), padding='same'))
        self.model.add(Activation('relu'))
        self.model.add(MaxPooling2D(pool_size=(3, 3), strides=2))

        self.model.add(Flatten())
        self.model.add(Dense(4096))
        self.model.add(Activation('relu'))
        self.model.add(Dropout(0.5))
        self.model.add(Dense(4096))
        self.model.add(Activation('relu'))
        self.model.add(Dropout(0.5))
        self.model.add(Dense(nb_classes))
        self.model.add(Activation('softmax'))

        # Print a summary of the model
        self.model.summary()

    def train(self, dataset, batch_size=10, nb_epoch=5, data_augmentation=True):
        # SGD with Nesterov momentum; InverseTimeDecay reproduces the legacy decay=1e-6 argument
        lr_schedule = InverseTimeDecay(0.01, decay_steps=1, decay_rate=1e-6)
        sgd = SGD(learning_rate=lr_schedule, momentum=0.7, nesterov=True)
        self.model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])

        if not data_augmentation:
            # Train directly on the data, without augmentation
            self.model.fit(dataset.train_images, dataset.train_labels,
                           batch_size=batch_size,
                           epochs=nb_epoch,
                           validation_data=(dataset.valid_images, dataset.valid_labels),
                           shuffle=True)
        else:
            # Real-time data augmentation: datagen.flow() returns an iterator that yields a freshly
            # augmented batch on every step, so the augmented images are never all held in memory
            datagen = ImageDataGenerator(
                featurewise_center=False,             # subtract the dataset mean from the inputs
                samplewise_center=False,              # make each sample's mean 0
                featurewise_std_normalization=False,  # divide the inputs by the dataset std
                samplewise_std_normalization=False,   # divide each sample by its own std
                zca_whitening=False,                  # apply ZCA whitening
                rotation_range=20,                    # random rotation range in degrees (0 to 180)
                width_shift_range=0.2,                # random horizontal shift, as a fraction of the width
                height_shift_range=0.2,               # random vertical shift, as a fraction of the height
                horizontal_flip=True,                 # random horizontal flips
                vertical_flip=False)                  # random vertical flips

            self.model.fit(datagen.flow(dataset.train_images, dataset.train_labels,
                                        batch_size=batch_size),
                           steps_per_epoch=int(np.ceil(dataset.train_images.shape[0] / batch_size)),
                           epochs=nb_epoch,
                           validation_data=(dataset.valid_images, dataset.valid_labels))

    def save_model(self, file_path=MODEL_PATH):
        self.model.save(file_path)

    def load_model(self, file_path=MODEL_PATH):
        self.model = load_model(file_path)

    def evaluate(self, dataset):
        loss, accuracy = self.model.evaluate(dataset.test_images, dataset.test_labels, verbose=1)
        print('accuracy: %.2f%%' % (accuracy * 100))

    # Recognize a face
    def face_predict(self, image):
        # The input must match the training data: IMAGE_SIZE x IMAGE_SIZE x 3
        if image.shape != (IMAGE_SIZE, IMAGE_SIZE, 3):
            image = resize_image(image)
        # Unlike training, we predict a single image, so add a batch dimension
        image = image.reshape((1, IMAGE_SIZE, IMAGE_SIZE, 3))
        # Match the backend's dimension order
        if backend.image_data_format() == 'channels_first':
            image = image.transpose(0, 3, 1, 2)

        # Normalize
        image = image.astype('float32') / 255

        # Probability of each class for the input
        result = self.model.predict(image)
        print('result:', result)

        # Return the index of the most likely class
        return int(np.argmax(result[0]))


if __name__ == '__main__':
    dataset = Dataset('./face/')
    dataset.load()

    # Build and train the model, then save it
    model = CNNModel()
    model.build_model(dataset)
    model.train(dataset)
    model.save_model()

    # Evaluate the saved model on the held-out test set
    model = CNNModel()
    model.load_model()
    model.evaluate(dataset)
```
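A note on the dimension-ordering branch: turning an image stored as rows × cols × channels into channels × rows × cols requires a transpose. A plain `reshape` keeps the values in the same memory order and only reinterprets the dimensions, which silently scrambles pixels across channels. This small NumPy check makes the difference visible:

```python
import numpy as np

# A tiny 2 x 2 "image" with 3 channels in rows x cols x channels order
# (OpenCV / channels_last). Channel c of every pixel holds the value c.
hwc = np.tile(np.arange(3), (2, 2, 1))          # shape (2, 2, 3)

# Correct: move the channel axis to the front
chw_transposed = np.transpose(hwc, (2, 0, 1))   # shape (3, 2, 2)

# Wrong: reshape only reinterprets the same memory with new dimensions
chw_reshaped = hwc.reshape(3, 2, 2)

print(chw_transposed[0])  # all zeros: channel 0 of every pixel
print(chw_reshaped[0])    # mixes values taken from different channels
```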

Note: for the hyperparameters, see the comments in the code, and pay attention to the data augmentation used during training.
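To make two of the augmentation settings concrete, here is a simplified NumPy sketch of a random horizontal flip (`horizontal_flip=True`) and a random horizontal shift of up to 20% of the width (`width_shift_range=0.2`). It is only an illustration: ImageDataGenerator applies these as affine transforms and, by default, fills the uncovered pixels with `fill_mode='nearest'`, whereas this sketch fills them with zeros. The function name `augment` is hypothetical.

```python
import numpy as np


def augment(image, rng, max_shift=0.2, flip=True):
    """Randomly mirror an H x W x C image left-right and shift it horizontally.

    Simplified sketch: pixels shifted in from outside the image are zero.
    """
    out = image
    if flip and rng.random() < 0.5:
        out = out[:, ::-1]  # mirror left-right
    w = out.shape[1]
    shift = int(rng.uniform(-max_shift, max_shift) * w)
    shifted = np.zeros_like(out)
    if shift >= 0:
        shifted[:, shift:] = out[:, :w - shift]
    else:
        shifted[:, :w + shift] = out[:, -shift:]
    return shifted


rng = np.random.default_rng(0)
face = np.arange(64 * 64 * 3, dtype=np.float32).reshape(64, 64, 3)
batch = np.stack([augment(face, rng) for _ in range(10)])  # 10 random variants
print(batch.shape)  # (10, 64, 64, 3)
```

Generating new variants on the fly like this is what lets a small dataset look larger to the network, since each epoch sees slightly different images.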

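4. Training results

Note: because the dataset is small, it is no surprise that the final accuracy is unsatisfying; if you can get a better dataset, try retraining the model.

The saved model file:

IV. Model Testing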

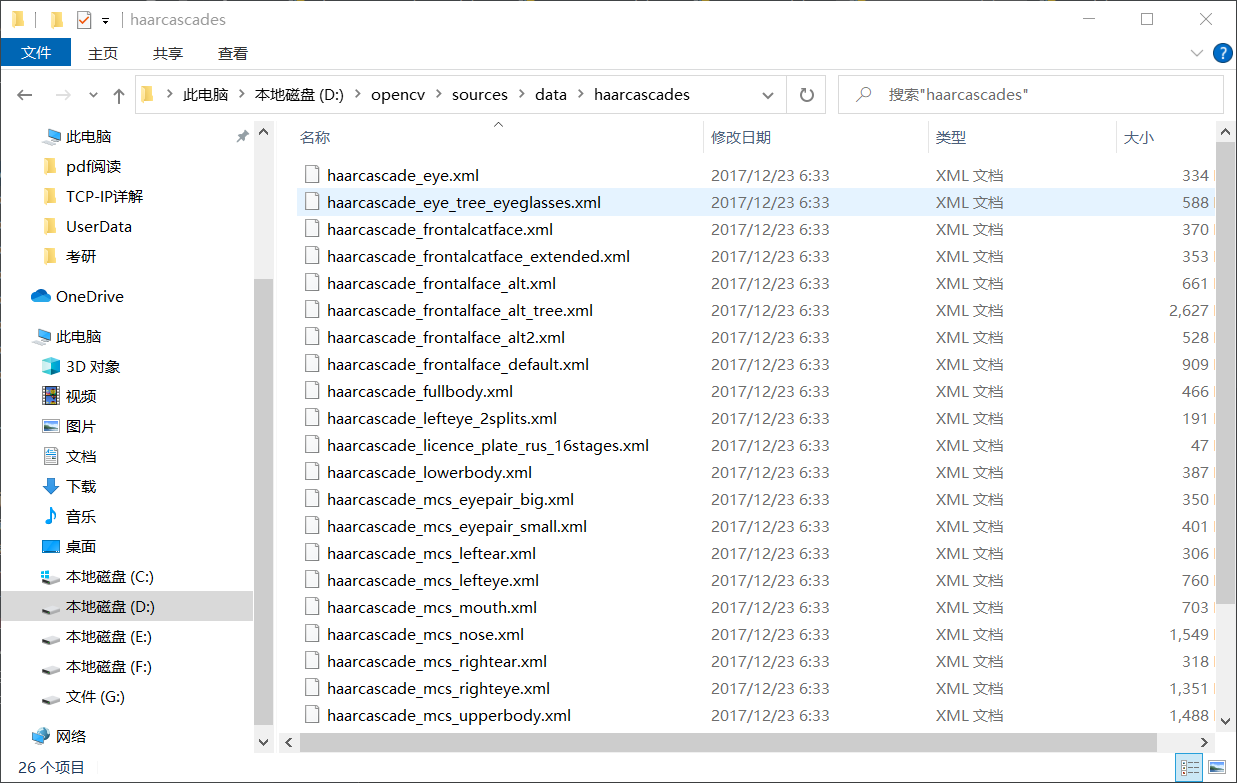

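1. Haar face detection: let's look at how to use the Haar classifier. OpenCV's source code ships .xml files for face detection; each one stores a cascade classifier already trained on Haar-like features of faces. They are located under opencv/sources/data/haarcascades; the location on my machine is shown in the figure. (If OpenCV was installed with pip as opencv-python, the same files are bundled with the package, and cv2.data.haarcascades gives their directory.)

Since we are detecting faces in a still image, copy haarcascade_frontalface_default.xml from that folder into the project folder; we will use it below for face detection. For details, see the test code that follows.

2. Face detection and recognition test code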
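The cascade stored in haarcascade_frontalface_default.xml is a sequence of stages, each a boosted set of weak classifiers built on Haar-like features (differences between the pixel sums of adjacent rectangles). What makes evaluating thousands of them fast is the integral image, which gives the sum of any rectangle in four lookups. The NumPy sketch below computes one two-rectangle feature that way; it illustrates the idea only, is not OpenCV's implementation, and the function names are our own.

```python
import numpy as np


def integral_image(gray):
    """Integral image with a zero row/column prepended: ii[r, c] = gray[:r, :c].sum()."""
    ii = np.zeros((gray.shape[0] + 1, gray.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = gray.cumsum(axis=0).cumsum(axis=1)
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w x h rectangle whose top-left corner is (x, y), in four lookups."""
    return ii[y + h, x + w] - ii[y, x + w] - ii[y + h, x] + ii[y, x]


def two_rect_feature(ii, x, y, w, h):
    """Horizontal two-rectangle Haar-like feature: left half minus right half."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


# Toy 4 x 4 image: bright left half, dark right half
gray = np.array([[9, 9, 1, 1]] * 4)
ii = integral_image(gray)
print(rect_sum(ii, 0, 0, 4, 4))          # 80
print(two_rect_feature(ii, 0, 0, 4, 4))  # 64 = 72 - 8, a strong vertical edge
```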

```python
import cv2

from 人脸检测与识别 import CNNModel  # the model class from the training script above (人脸检测与识别.py)

if __name__ == '__main__':
    # Load the trained model
    model = CNNModel()
    model.load_model(file_path='./cascadeface.model.h5')

    # Color of the face bounding box (BGR: green)
    color = (0, 255, 0)
    # Class index -> name, in the same order as the training labels
    names = ['jiangwen', 'zhangziyi']

    # Path to the Haar cascade file used for face detection
    cascade_path = './haarcascade_frontalface_default.xml'

    image = cv2.imread('jiangwen.jpg')
    if image is None:
        raise FileNotFoundError('jiangwen.jpg could not be read')
    image_gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

    # Load the classifier
    cascade = cv2.CascadeClassifier(cascade_path)
    if cascade.empty():
        raise IOError('Failed to load ' + cascade_path)

    # Detect face regions with the classifier
    faceRects = cascade.detectMultiScale(image_gray, scaleFactor=1.2, minNeighbors=5, minSize=(32, 32))

    # Draw on a copy so that boxes and text do not end up inside later face crops
    output = image.copy()
    img_h, img_w = image.shape[:2]
    for (x, y, w, h) in faceRects:
        # Crop the face with a 10-pixel margin (clamped to the image) and pass the crop to the model
        top, bottom = max(0, y - 10), min(img_h, y + h + 10)
        left, right = max(0, x - 10), min(img_w, x + w + 10)
        face = image[top:bottom, left:right]
        faceID = model.face_predict(face)

        if 0 <= faceID < len(names):
            cv2.rectangle(output, (left, top), (right, bottom), color, thickness=2)
            cv2.putText(output,
                        names[faceID],
                        (x + 30, y + 30),          # position
                        cv2.FONT_HERSHEY_SIMPLEX,  # font
                        1,                         # font scale
                        (0, 0, 255),               # color (BGR: red)
                        1)                         # line thickness

    cv2.imshow('image', output)
    cv2.waitKey(0)
    cv2.destroyAllWindows()
```
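The margin added around each detected box can push the crop outside the image: near the top or left border, `y - 10` or `x - 10` becomes negative, and NumPy slicing treats a negative start as counting from the end, which yields an empty or wrong crop. Clamping can be factored into a small helper; `crop_face` and its `margin` parameter are names chosen for this sketch.

```python
import numpy as np


def crop_face(image, x, y, w, h, margin=10):
    """Crop the box (x, y, w, h) from an H x W x C image, enlarged by `margin`.

    The enlarged box is clamped to the image, so a face touching the border
    never produces negative slice indices (which NumPy would wrap around).
    """
    img_h, img_w = image.shape[:2]
    top = max(0, y - margin)
    bottom = min(img_h, y + h + margin)
    left = max(0, x - margin)
    right = min(img_w, x + w + margin)
    return image[top:bottom, left:right]


frame = np.zeros((100, 120, 3), dtype=np.uint8)
print(crop_face(frame, 5, 3, 40, 50).shape)  # (63, 55, 3): clipped at the top-left corner
```

In the detection loop, `face = crop_face(image, x, y, w, h)` would then replace the manual slicing.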

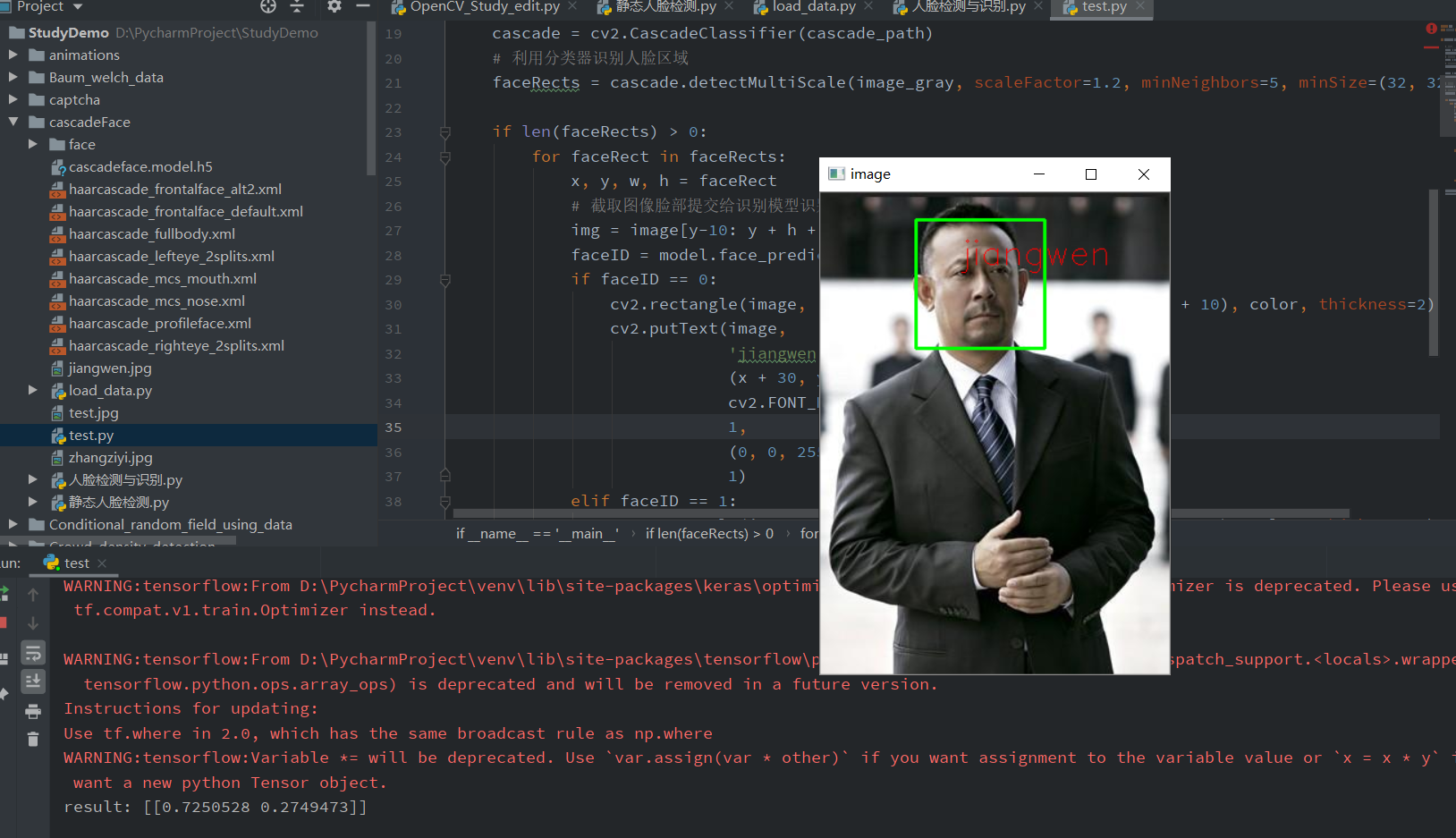

The result of running the script:

浙公网安备 33010602011771号
