Prometheus+Alertmanager+Webhook-dingtalk

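I. Download Alertmanager and webhook-dingtalk

Search www.github.com for alertmanager and webhook-dingtalk (the prometheus/alertmanager and timonwong/prometheus-webhook-dingtalk repositories) and download the linux-amd64 release tarballs.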

1. Extract and install webhook-dingtalk

    tar -zxvf prometheus-webhook-dingtalk-2.1.0.linux-amd64.tar.gz
    mv prometheus-webhook-dingtalk-2.1.0.linux-amd64 /usr/local/webhook-dingtalk
    cp /usr/local/webhook-dingtalk/config.example.yml /usr/local/webhook-dingtalk/config.yml

2. Create the systemd unit, enable it at boot, and start the service

    vim /usr/lib/systemd/system/webhook.service

File content:

    [Unit]
    Description=Prometheus-Webhook-Dingtalk
    After=network.target

    [Service]
    ExecStart=/usr/local/webhook-dingtalk/prometheus-webhook-dingtalk --config.file=/usr/local/webhook-dingtalk/config.yml --web.enable-ui
    User=root

    [Install]
    WantedBy=multi-user.target

Reload systemd, then enable and start the service:

    systemctl daemon-reload
    systemctl enable webhook.service --now

3. Extract and install Alertmanager

    tar zxf alertmanager-0.25.0-rc.2.linux-amd64.tar.gz
    mv alertmanager-0.25.0-rc.2.linux-amd64 /usr/local/alertmanager

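4. Create the systemd unit, enable it at boot, and start the service

    vim /usr/lib/systemd/system/alertmanager.service

File content (replace AlertManagerIP with the address of the Alertmanager host):

    [Unit]
    Description=Alertmanager
    After=network.target

    [Service]
    ExecStart=/usr/local/alertmanager/alertmanager --cluster.advertise-address=0.0.0.0:9093 --config.file=/usr/local/alertmanager/alertmanager.yml --web.external-url=http://AlertManagerIP:9093
    User=root

    [Install]
    WantedBy=multi-user.target

Reload systemd, then enable and start the service:

    systemctl daemon-reload
    systemctl enable alertmanager.service --now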

5. Verify that Alertmanager (9093) and webhook-dingtalk (8060) are listening on their ports

    ss -ant | grep -E "9093|8060"
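The same check can be scripted, for example in a post-install step. The sketch below is only an illustration: `port_open` and `report_ports` are hypothetical helper names, and it assumes bash (for the built-in `/dev/tcp` redirection) and that both services run on the local host with their default ports.

```shell
#!/usr/bin/env bash
# port_open HOST PORT: succeed if a TCP connection to HOST:PORT can be opened.
# Uses bash's built-in /dev/tcp redirection, so no extra tools are required.
port_open() {
    local host=$1 port=$2
    # The socket is opened inside a subshell and closed again when it exits.
    (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null
}

# report_ports HOST PORT...: print one status line per port.
report_ports() {
    local host=$1 port
    shift
    for port in "$@"; do
        if port_open "$host" "$port"; then
            echo "${port} open"
        else
            echo "${port} closed"
        fi
    done
}
```

Calling `report_ports 127.0.0.1 9093 8060` prints one line per port, marked either `open` or `closed`.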

II. Configuration and testing

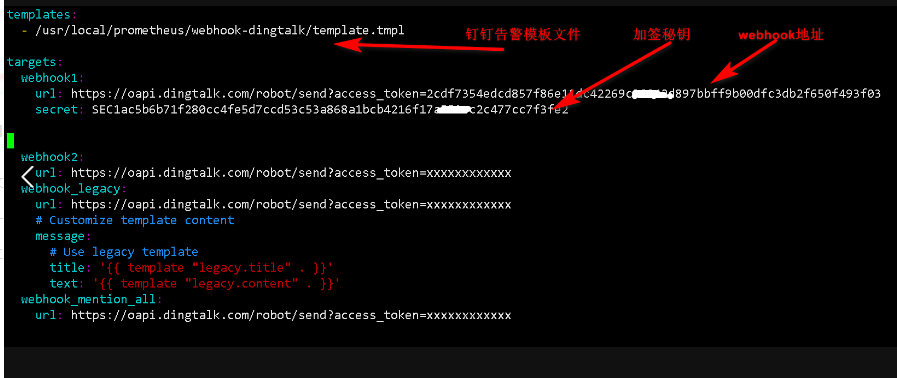

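1. The webhook-dingtalk configuration is fairly simple; only the following places need to be changed:

    vim /usr/local/webhook-dingtalk/config.yml

File content to change:

    templates:
      - /etc/prometheus-webhook-dingtalk/template.tmpl

    targets:
      webhook_vmware:   # target name; it is the path segment in the URL that Alertmanager calls
        url: https://oapi.dingtalk.com/robot/send?access_token=xxxxxxxxxxxxxxxxxxxxxxx
        secret: xxxxxxxxxxxxxxxxxxxxxxxxxxxx
        mention:
          # all: true              # @ everyone
          mobiles: ['1391234567']  # @ one person; the rule's labels must also carry users: "@1391234567"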

2. Add the DingTalk alert template (1)

    mkdir -p /etc/prometheus-webhook-dingtalk
    vim /etc/prometheus-webhook-dingtalk/template.tmpl

{{ define "__subject" }}[{{ .Status | toUpper }}{{ if eq .Status "firing" }}:{{ .Alerts.Firing | len }}{{ end }}] {{ .GroupLabels.SortedPairs.Values | join " " }} {{ if gt (len .CommonLabels) (len .GroupLabels) }}({{ with .CommonLabels.Remove .GroupLabels.Names }}{{ .Values | join " " }}{{ end }}){{ end }}{{ end }}

{{ define "__alertmanagerURL" }}{{ .ExternalURL }}/#/alerts?receiver={{ .Receiver }}{{ end }}

{{ define "__text_alert_list" }}{{ range . }}

**Labels**

{{ range .Labels.SortedPairs }}> - {{ .Name }}: {{ .Value | markdown | html }}

{{ end }}

**Annotations**

{{ range .Annotations.SortedPairs }}> - {{ .Name }}: {{ .Value | markdown | html }}

{{ end }}

**Source:** [{{ .GeneratorURL }}]({{ .GeneratorURL }})

{{ end }}{{ end }}

{{/* Firing */}}

{{ define "default.__text_alert_list" }}{{ range . }}

**Alert name:** {{ .Annotations.summary }}

**Description:** {{ .Annotations.description }}

**Graph:** [📈 ]({{ .GeneratorURL }})

**Alert time:** {{ dateInZone "2006.01.02 15:04:05" (.StartsAt) "Asia/Shanghai" }}

**Details:**

{{ range .Labels.SortedPairs }}{{ if and (ne (.Name) "severity") (ne (.Name) "summary") }}> - {{ .Name }}: {{ .Value | markdown | html }}

{{ end }}{{ end }}

{{ end }}{{ end }}

{{/* Resolved */}}

{{ define "default.__text_resolved_list" }}{{ range . }}

**Alert name:** {{ .Annotations.summary }}

**Graph:** [📈 ]({{ .GeneratorURL }})

**Alert time:** {{ dateInZone "2006.01.02 15:04:05" (.StartsAt) "Asia/Shanghai" }}

**Recovery time:** {{ dateInZone "2006.01.02 15:04:05" (.EndsAt) "Asia/Shanghai" }}

**Details:**

{{ range .Labels.SortedPairs }}{{ if and (ne (.Name) "severity") (ne (.Name) "summary") }}> - {{ .Name }}: {{ .Value | markdown | html }}

{{ end }}{{ end }}

{{ end }}{{ end }}

{{/* Default */}}

{{ define "default.title" }}{{ template "__subject" . }}{{ end }}

{{ define "default.content" }}#### \[{{ .Status | toUpper }}{{ if eq .Status "firing" }}:{{ .Alerts.Firing | len }}{{ end }}\] **[{{ index .GroupLabels "alertname" }}]({{ template "__alertmanagerURL" . }})**

{{ if gt (len .Alerts.Firing) 0 -}}

{{ template "default.__text_alert_list" .Alerts.Firing }}

{{- end }}

{{ if gt (len .Alerts.Resolved) 0 -}}

{{ template "default.__text_resolved_list" .Alerts.Resolved }}

{{- end }}

{{- end }}

{{/* Legacy */}}

{{ define "legacy.title" }}{{ template "__subject" . }}{{ end }}

{{ define "legacy.content" }}#### \[{{ .Status | toUpper }}{{ if eq .Status "firing" }}:{{ .Alerts.Firing | len }}{{ end }}\] **[{{ index .GroupLabels "alertname" }}]({{ template "__alertmanagerURL" . }})**

{{ template "__text_alert_list" .Alerts.Firing }}

{{- end }}

{{/* Following names for compatibility */}}

{{ define "ding.link.title" }}{{ template "default.title" . }}{{ end }}

{{ define "ding.link.content" }}{{ template "default.content" . }}{{ end }}

3. Restart webhook-dingtalk

systemctl restart webhook.service

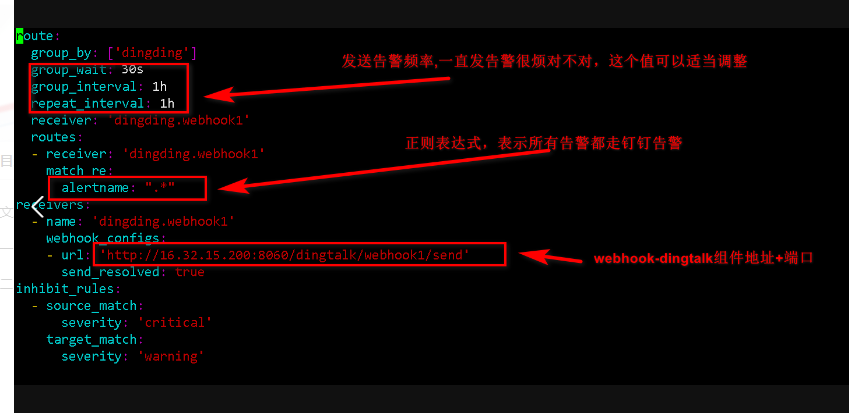

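4. Configure DingTalk alerting in Alertmanager

    vim /usr/local/alertmanager/alertmanager.yml

File content:

    global:
      resolve_timeout: 1m
    templates:
      - '/etc/prometheus-webhook-dingtalk/template.tmpl'
    route:
      group_by: ['VMWare']
      group_wait: 1s
      group_interval: 10s
      repeat_interval: 10m
      receiver: 'dingding.webhook_vmware'
    receivers:
      - name: 'dingding.webhook_vmware'
        webhook_configs:
          - url: 'http://webhookIP:8060/dingtalk/webhook_vmware/send'
            send_resolved: true

The parts to adapt are mainly the receiver and its webhook url: replace webhookIP with the address of the webhook-dingtalk host, and make sure the path segment after /dingtalk/ (webhook_vmware here) matches a target name defined under targets: in webhook-dingtalk's config.yml.

Restart Alertmanager:

    systemctl restart alertmanager.service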
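Before wiring in Prometheus, the Alertmanager to webhook-dingtalk to DingTalk chain can be exercised by posting a hand-made alert to Alertmanager's `/api/v2/alerts` endpoint. The following is a minimal sketch: the function names, the `ManualTest` alert name, and the label values are placeholders invented for this test, and it assumes `curl` is installed and Alertmanager listens on localhost:9093.

```shell
#!/usr/bin/env bash
# build_test_alert NAME INSTANCE: print a one-element JSON array in the shape
# accepted by Alertmanager's POST /api/v2/alerts endpoint.
# The label and annotation values are placeholders for this test only.
build_test_alert() {
    local name=$1 instance=$2
    printf '[{"labels":{"alertname":"%s","instance":"%s","severity":"test"},' "$name" "$instance"
    printf '"annotations":{"summary":"%s test alert","description":"Sent by hand to check the DingTalk route"}}]\n' "$instance"
}

# send_test_alert [ALERTMANAGER_URL]: post the test alert; assumes curl is installed.
send_test_alert() {
    local am_url=${1:-http://localhost:9093}
    build_test_alert ManualTest test-host |
        curl -sS -X POST -H 'Content-Type: application/json' \
             --data-binary @- "${am_url}/api/v2/alerts"
}
```

After running `send_test_alert`, a message should show up in the DingTalk group shortly. Because the payload carries no `endsAt`, Alertmanager should treat the alert as resolved once `resolve_timeout` has passed without it being sent again, which also exercises `send_resolved: true`.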

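5. Integrate Prometheus with Alertmanager and configure the alert rule file

    vim /usr/local/prometheus/prometheus.yml

File content:

    global:
      scrape_interval: 15s
      evaluation_interval: 15s
    alerting:
      alertmanagers:
        - static_configs:
            - targets:
                - IP:9093
    rule_files:
      - "/usr/local/prometheus/rule/node_exporter.yml"
    scrape_configs:
      - job_name: "VMware"
        static_configs:
          - targets: ["IP:9100"]

The key parts to change are the alerting and rule_files sections; replace IP with the address of the Alertmanager host (port 9093) and of the node_exporter host (port 9100).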

6. Add the node_exporter alert rules

    mkdir -p /usr/local/prometheus/rule
    vim /usr/local/prometheus/rule/node_exporter.yml

File content:

    groups:
      - name: Server resource monitoring
        rules:
          - alert: MemoryUsage
            expr: 100 - round((node_memory_MemAvailable_bytes / node_memory_MemTotal_bytes) * 100) > 80
            for: 3m
            labels:
              severity: critical
              users: "@1391234567"
            annotations:
              summary: "{{ $labels.instance }} memory usage is too high!"
              description: "Current memory usage: {{ $value }}%."
          - alert: ServerDown
            expr: up == 0
            for: 3s
            labels:
              severity: fatal
              users: "@1391234567"
            annotations:
              summary: "{{ $labels.instance }} server is down!"
              description: "Host is unreachable, current state: {{ $value }}."
          - alert: HighCPULoad
            expr: 100 - round((avg by (instance,job)(irate(node_cpu_seconds_total{mode="idle"}[5m])) * 100)) > 80
            for: 5m
            labels:
              severity: critical
              users: "@1391234567"
            annotations:
              summary: "{{ $labels.instance }} CPU usage is too high!"
              description: "CPU usage is above 80%, current usage: {{ $value }}%."
          - alert: DiskIOPerformance
            # irate of io_time seconds is the busy fraction (0..1); * 100 gives percent,
            # and round(v, 0.01) keeps two decimal places.
            expr: round(avg(irate(node_disk_io_time_seconds_total[1m])) by (instance,job) * 100, 0.01) > 30
            for: 5m
            labels:
              severity: warning
              users: "@1391234567"
            annotations:
              summary: "{{ $labels.instance }} disk IO utilization is too high!"
              description: "Disk IO utilization is above 30%, current utilization: {{ $value }}%."
          - alert: NetworkInbound
            expr: ((sum(rate(node_network_receive_bytes_total{device!~'tap.*|veth.*|br.*|docker.*|virbr.*|lo'}[5m])) by (instance,job)) / 100) > 102400
            for: 5m
            labels:
              severity: warning
              users: "@1391234567"
            annotations:
              summary: "{{ $labels.instance }} inbound network bandwidth is too high!"
              description: "Inbound network bandwidth has been above 100M for 5 minutes. Bandwidth usage: {{ $value }}."
          - alert: NetworkOutbound
            expr: ((sum(rate(node_network_transmit_bytes_total{device!~'tap.*|veth.*|br.*|docker.*|virbr.*|lo'}[5m])) by (instance,job)) / 100) > 102400
            for: 5m
            labels:
              severity: critical
              users: "@1391234567"
            annotations:
              summary: "{{ $labels.instance }} outbound network bandwidth is too high!"
              description: "Outbound network bandwidth has been above 100M for 5 minutes. Bandwidth usage: {{ $value }}."
          - alert: TCPConnections
            expr: node_netstat_Tcp_CurrEstab > 1000
            for: 2m
            labels:
              severity: critical
              users: "@1391234567"
            annotations:
              summary: "TCP_ESTABLISHED connections exceed 1000!"
              description: "TCP_ESTABLISHED is above 1000, current connections: {{ $value }}."
          - alert: DiskCapacity
            expr: round(100 - (node_filesystem_free_bytes{fstype=~"ext4|xfs"} / node_filesystem_size_bytes{fstype=~"ext4|xfs"} * 100), 0.01) > 80
            for: 1m
            labels:
              severity: critical
              users: "@1391234567"
            annotations:
              summary: "{{ $labels.mountpoint }} disk partition usage is too high!"
              description: "Disk partition usage is above 80%, current usage: {{ $value }}%."
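Indentation mistakes in these YAML files are easy to make, so it can help to validate them before reloading anything. The sketch below wraps `promtool check config`, `promtool check rules`, and `amtool check-config`, which ship in the Prometheus and Alertmanager tarballs. The helper names `run_check` and `check_monitoring_configs` are hypothetical, and the binary and file paths are assumptions based on the install locations used in this guide; adjust them if yours differ.

```shell
#!/usr/bin/env bash
# run_check TOOL ARGS...: run a checker binary if it can be found, otherwise
# report that it was skipped. TOOL may be a bare name or an absolute path.
run_check() {
    local tool=$1
    shift
    if ! command -v "$tool" >/dev/null 2>&1; then
        echo "skipped: ${tool} not found"
        return 0
    fi
    "$tool" "$@"
}

# check_monitoring_configs: validate the files edited in this guide.
# All paths below are assumptions taken from the tarball layout used above.
check_monitoring_configs() {
    run_check /usr/local/prometheus/promtool check config /usr/local/prometheus/prometheus.yml &&
    run_check /usr/local/prometheus/promtool check rules /usr/local/prometheus/rule/node_exporter.yml &&
    run_check /usr/local/alertmanager/amtool check-config /usr/local/alertmanager/alertmanager.yml
}
```

Running `check_monitoring_configs` stops at the first file that fails validation, so a non-zero exit status means something still needs fixing before the reload.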

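7. Hot-reload the Prometheus configuration (the reload endpoint only works when Prometheus was started with --web.enable-lifecycle)

    curl -X POST http://localhost:9090/-/reload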
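To confirm that the reload actually picked up the rule file, the `/api/v1/rules` endpoint can be queried. The sketch below counts alerting rules with plain text matching rather than a JSON parser; the function names are hypothetical, and it assumes `curl` is available and that Prometheus returns its usual compact JSON.

```shell
#!/usr/bin/env bash
# count_alerting_rules: read a /api/v1/rules JSON response on stdin and print
# how many alerting rules it contains, by counting "type":"alerting" fields.
# This is plain text matching, not a JSON parser; it assumes the compact
# encoding that Prometheus emits.
count_alerting_rules() {
    grep -o '"type":"alerting"' | wc -l | tr -d ' '
}

# loaded_rule_count [PROMETHEUS_URL]: query a running Prometheus; assumes curl.
loaded_rule_count() {
    local prom_url=${1:-http://localhost:9090}
    curl -sS "${prom_url}/api/v1/rules" | count_alerting_rules
}
```

If the number printed by `loaded_rule_count` is lower than the number of rules in node_exporter.yml, the reload most likely failed and the Prometheus log should say why.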

