Elasticsearch中关于transform的一个问题分析

背景:现在有一个业务,派件业务,业务员今天去派件(扫描产生一条派件记录),派件可能会有重复派件的情况,第二天再派送(记录被更新,以最新的派件操作为准)。现在需要分业务员按天统计每天的派件数量。

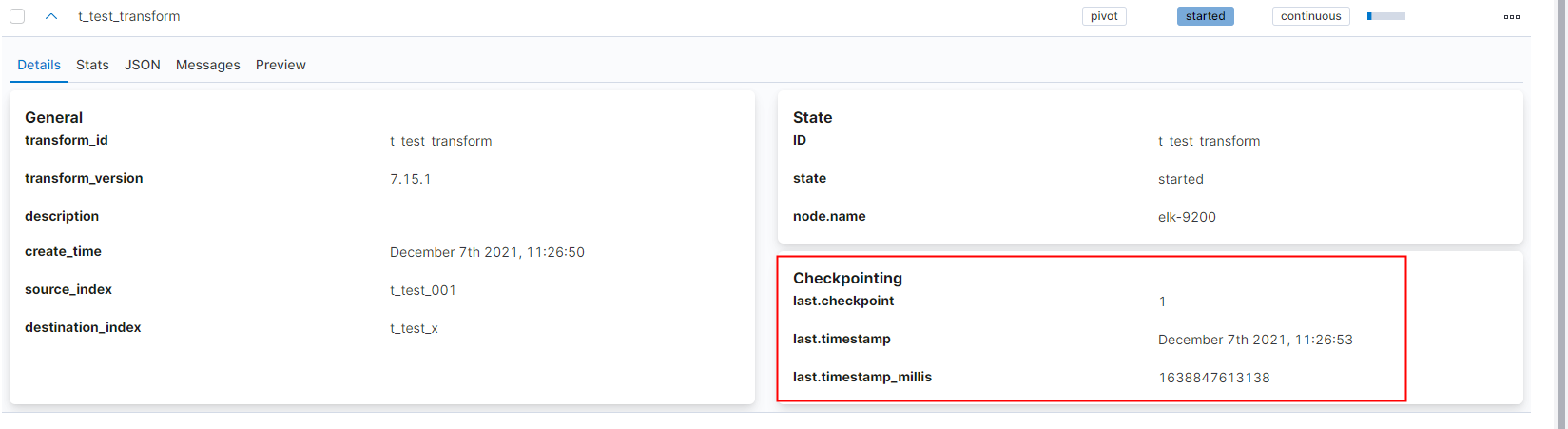

es版本:7.15.1

1、创建索引:

PUT t_test_001 { "settings": { "number_of_shards": 1, "number_of_replicas": 1 }, "mappings": { "properties": { "city_id": { "type": "long" }, "city_name": { "type": "keyword" }, "create_time": { "type": "date" }, "push_date": { "type": "date" }, "update_time": { "type": "date" } } } }

2、插入测试数据

POST /t_test_001/_bulk { "index": {}} { "order_no" : 1,"employee":"张三", "create_time" : "2021-12-06T08:00:00.000Z", "push_date" : "2021-12-06T08:00:00.000Z", "update_time" : "2021-12-06T08:00:00.000Z"} { "index": {}} { "order_no" : 2,"employee":"张三", "create_time" : "2021-12-06T08:00:00.000Z", "push_date" : "2021-12-06T08:00:00.000Z", "update_time" : "2021-12-06T08:00:00.000Z"} { "index": {}} { "order_no" : 3,"employee":"张三", "create_time" : "2021-12-07T00:00:00.000Z", "push_date" : "2021-12-07T00:00:00.000Z", "update_time" : "2021-12-07T00:00:00.000Z"} { "index": {}} { "order_no" : 4,"employee":"张三", "create_time" : "2021-12-07T00:00:00.000Z", "push_date" : "2021-12-07T00:00:00.000Z", "update_time" : "2021-12-07T00:00:00.000Z"} { "index": {}} { "order_no" : 5,"employee":"王五", "create_time" : "2021-12-06T08:00:00.000Z", "push_date" : "2021-12-06T08:00:00.000Z", "update_time" : "2021-12-06T08:00:00.000Z"} { "index": {}} { "order_no" : 6,"employee":"王五", "create_time" : "2021-12-06T08:00:00.000Z", "push_date" : "2021-12-06T08:00:00.000Z", "update_time" : "2021-12-06T08:00:00.000Z"} { "index": {}} { "order_no" : 7,"employee":"王五", "create_time" : "2021-12-07T00:00:00.000Z", "push_date" : "2021-12-07T00:00:00.000Z", "update_time" : "2021-12-07T00:00:00.000Z"} { "index": {}} { "order_no" : 8,"employee":"王五", "create_time" : "2021-12-07T00:00:00.000Z", "push_date" : "2021-12-07T00:00:00.000Z", "update_time" : "2021-12-07T00:00:00.000Z"}

3、查询一下看看

GET /t_test_001/_search { "size": 10 }

结果:

{ "took" : 0, "timed_out" : false, "_shards" : { "total" : 1, "successful" : 1, "skipped" : 0, "failed" : 0 }, "hits" : { "total" : { "value" : 8, "relation" : "eq" }, "max_score" : 1.0, "hits" : [ { "_index" : "t_test_001", "_type" : "_doc", "_id" : "GLztkn0BDKE3xmcewwIG", "_score" : 1.0, "_source" : { "order_no" : 1, "employee" : "张三", "create_time" : "2021-12-06T08:00:00.000Z", "push_date" : "2021-12-06T08:00:00.000Z", "update_time" : "2021-12-06T08:00:00.000Z" } }, { "_index" : "t_test_001", "_type" : "_doc", "_id" : "Gbztkn0BDKE3xmcewwIG", "_score" : 1.0, "_source" : { "order_no" : 2, "employee" : "张三", "create_time" : "2021-12-06T08:00:00.000Z", "push_date" : "2021-12-06T08:00:00.000Z", "update_time" : "2021-12-06T08:00:00.000Z" } }, { "_index" : "t_test_001", "_type" : "_doc", "_id" : "Grztkn0BDKE3xmcewwIG", "_score" : 1.0, "_source" : { "order_no" : 3, "employee" : "张三", "create_time" : "2021-12-07T00:00:00.000Z", "push_date" : "2021-12-07T00:00:00.000Z", "update_time" : "2021-12-07T00:00:00.000Z" } }, { "_index" : "t_test_001", "_type" : "_doc", "_id" : "G7ztkn0BDKE3xmcewwIG", "_score" : 1.0, "_source" : { "order_no" : 4, "employee" : "张三", "create_time" : "2021-12-07T00:00:00.000Z", "push_date" : "2021-12-07T00:00:00.000Z", "update_time" : "2021-12-07T00:00:00.000Z" } }, { "_index" : "t_test_001", "_type" : "_doc", "_id" : "HLztkn0BDKE3xmcewwIG", "_score" : 1.0, "_source" : { "order_no" : 5, "employee" : "王五", "create_time" : "2021-12-06T08:00:00.000Z", "push_date" : "2021-12-06T08:00:00.000Z", "update_time" : "2021-12-06T08:00:00.000Z" } }, { "_index" : "t_test_001", "_type" : "_doc", "_id" : "Hbztkn0BDKE3xmcewwIG", "_score" : 1.0, "_source" : { "order_no" : 6, "employee" : "王五", "create_time" : "2021-12-06T08:00:00.000Z", "push_date" : "2021-12-06T08:00:00.000Z", "update_time" : "2021-12-06T08:00:00.000Z" } }, { "_index" : "t_test_001", "_type" : "_doc", "_id" : "Hrztkn0BDKE3xmcewwIG", "_score" : 1.0, "_source" : { "order_no" : 7, "employee" : "王五", "create_time" : "2021-12-07T00:00:00.000Z", "push_date" : "2021-12-07T00:00:00.000Z", "update_time" : "2021-12-07T00:00:00.000Z" } }, { "_index" : "t_test_001", "_type" : "_doc", "_id" : "H7ztkn0BDKE3xmcewwIG", "_score" : 1.0, "_source" : { "order_no" : 8, "employee" : "王五", "create_time" : "2021-12-07T00:00:00.000Z", "push_date" : "2021-12-07T00:00:00.000Z", "update_time" : "2021-12-07T00:00:00.000Z" } } ] } }

4、创建一个transform,将数据按天、业务员 聚合

PUT _transform/t_test_transform { "id": "t_test_transform", "source": { "index": [ "t_test_001" ] }, "dest": { "index": "t_test_x" }, "frequency": "60s", "sync": { "time": { "field": "update_time", "delay": "60s" } }, "pivot": { "group_by": { "employee": { "terms": { "field": "employee" } }, "push_date": { "date_histogram": { "field": "push_date", "calendar_interval": "1d" } } }, "aggregations": { "sum_all": { "value_count": { "field": "_id" } } } } }

5、开启transform

POST _transform/t_test_transform/_start

6、查看transform转换的索引结果

GET /t_test_x/_search {}

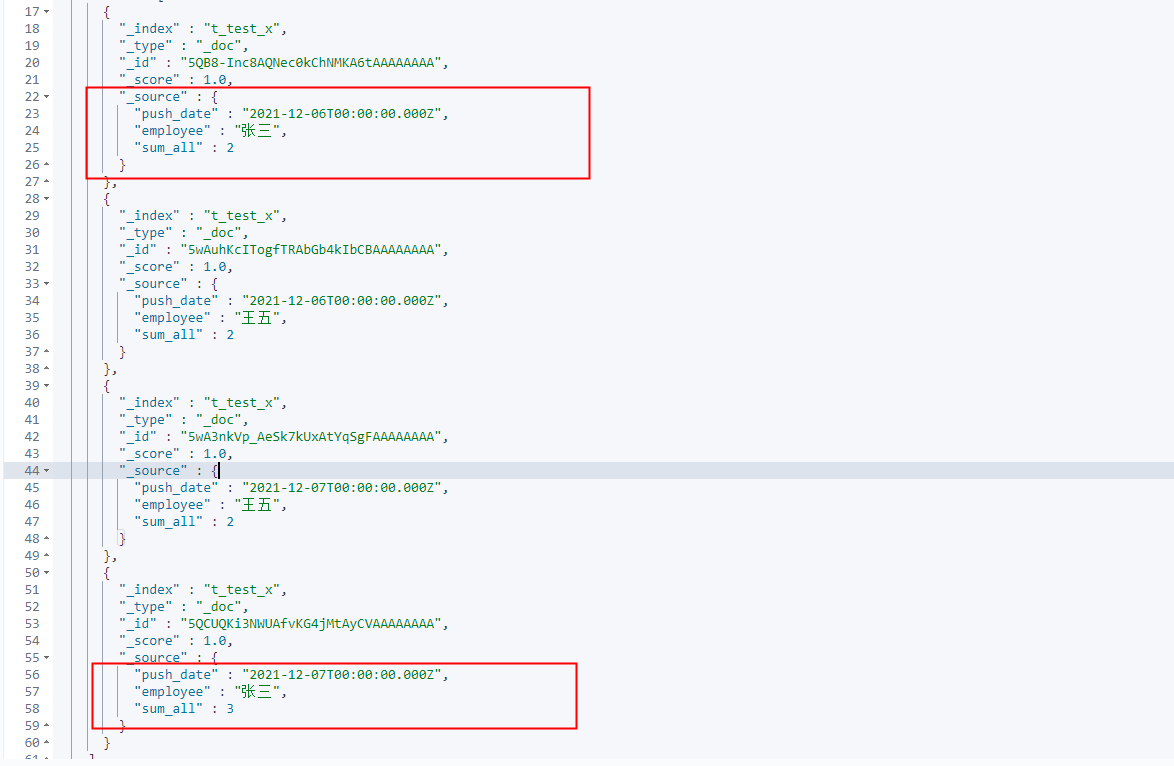

结果:如图,张三2021-12-06和07号各派送两单:

7、12月7号,订单order_no = 1的单子再次被张三派送;数据被更新

POST /t_test_001/_update/GLztkn0BDKE3xmcewwIG { "doc": { "push_date": "2021-12-07T03:27:12.000Z", "update_time": "2021-12-07T03:27:12.000Z" } }

注意模拟操作数据的真实性,更新时间在上一个检查点之后!【截图中的检查点时间是北京时间】

8、预期transfrom转换的结果是:张三12-6号的派单统计数据由2减少为1;12-7号的派单数据从2增加到3。

9、查询transform转换的索引结果

GET /t_test_x/_search {}

结果:张三12-6号的派单统计数据为2没有减少,不符合预期;12-7号的派单数据为3,符合预期。

10,再查询一下原始数据:

GET /t_test_001/_search {}

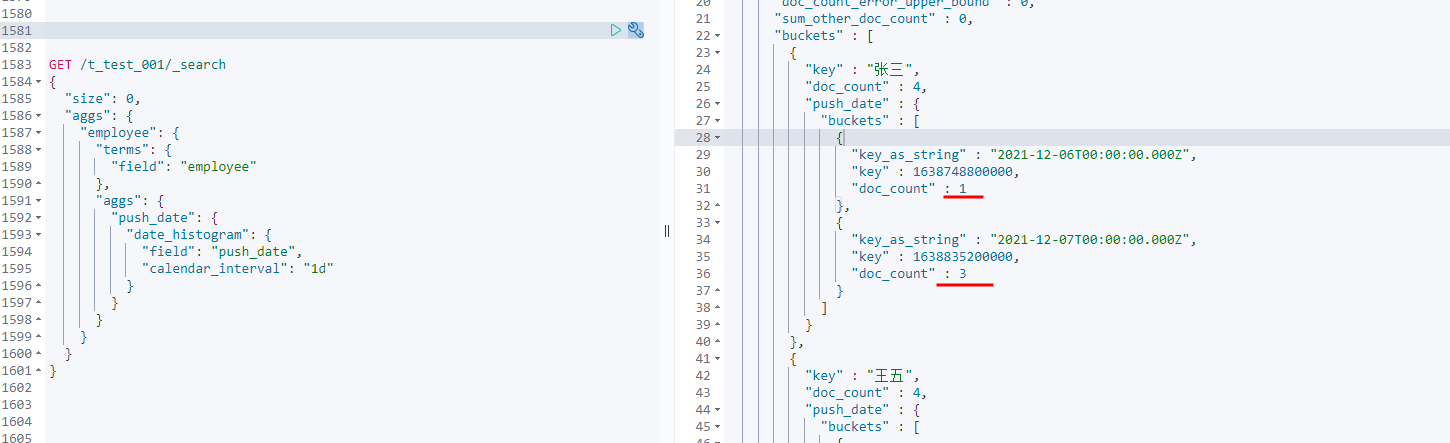

11、再统计一下数据:

GET /t_test_001/_search { "size": 0, "aggs": { "employee": { "terms": { "field": "employee" }, "aggs": { "push_date": { "date_histogram": { "field": "push_date", "calendar_interval": "1d" } } } } } }

结果很显然:张三 12-06号派送量为1,12-07号派送量为3!!!而transform统计的结果,此时就错了!!!这个怎么理解呢?是他es的transform不支持这种场景数据变化的聚合,还是说这是一个bug呢?我理解,可能是因为考虑到性能的原因,es的transform在这种场景下是有这种问题的。

若有错误之处,望大家指正。谢谢。

【要解决这个问题,可以增加一个状态字段,将之前的派送记录状态修改为已失效,然后通过条件过滤即可解决问题】

-----------------------2022-07-29------------------------

1、经过测试,上述问题无法解决。

2、原因是:当你加一个is_delete 状态,并添加到transform的query查询语句中。看似可以解决,实际并不能解决。

我们可以看到当你生成transform并start启动后,目标索引中的数据确实如你所预期的一样。但是当派件员第二天再次派件后,新纪录生成,昨天的记录的is_delete被修改,我们从transform的preview结果中可以看到数据确实变了,如我们预期的一样;但是,实际在目标索引中已经生成的数据并没有改变。

3、换一个思路,我们直接删除历史数据,相当于mysql的delete,通过debezium我们可以将墓碑事件处理,mysql删除的数据同步到es的同样可以删除。这样,我们继续观察transfrom,发现preview中的数据和我们期望的一致;但是,已经生成的目标索引中的数据并没有和preview中看到的一样,而是之前已经生成的旧值。

4、通过上述2、3的探索,发现。这个问题,暂时没法通过transform解决。就需要从业务方面考虑了:比如重复派件的数据经过筛选新增到一个新的索引中处理。

5、在此,重新查看了官方文档,发现一个 转换保留策略retention_policy,他可以将符合定义标准的数据将从目标索引中删除。他可以应用在:比如一年前的订单无需统计,就可以用这个实现。但,依旧无法解决上述问题。

----------------------

最近使用transfrom实现一个新功能又遇到了如上所述问题:

1、业务:订单销售额和销量的统计折线图,按天、月、年这种,但是注意,需要排除售后订单。售后订单有两种情况,一种是订单可以部分售后(退款),另一种是整单售后(退款)。为了简化,这里统一成整单退款。

2、订单表同步数据到es的mapping

PUT xxx_order { "mappings": { "properties": { "create_time": { "type": "date" }, "create_user": { "type": "long" }, "update_time": { "type": "date" }, "update_user": { "type": "long" }, "id": { "type": "long" }, "is_deleted": { "type": "long" }, "is_pay": { "type": "long" }, "order_amount": { "type": "long" }, "order_code": { "type": "text", "fields": { "keyword": { "type": "keyword", "ignore_above": 256 } } }, "order_status": { "type": "long" }, "pay_amount": { "type": "long" }, "pay_time": { "type": "date" }, "pay_type": { "type": "long" } } } }

3、假如业务是:销售额是含售后订单的统计,那就比较简单-----》订单销量统计(含售后订单):

说明:is_pay :1 表示已支付

order_status :9 表示已退款

DELETE xxx_order_paid_summary #xx商城-每日订单汇总 PUT _transform/xxx_order_daily_paid_summary_transform { "source": { "index": [ "xxx_order" ], "query": { "term": { "is_pay": { "value": "1" } } } }, "dest": { "index": "xxx_daily_order_paid_summary" }, "frequency": "1m", "sync": { "time": { "field": "update_time", "delay": "1m" } }, "pivot": { "group_by": { "org_code": { "terms": { "field": "org_code.keyword" } }, "statistic_time": { "date_histogram": { "field": "pay_time", "calendar_interval": "1d", "time_zone": "+08:00" } } }, "aggregations": { "pay_amount_sum": { "sum": { "field": "pay_amount" } }, "order_count": { "value_count": { "field": "order_code.keyword" } } } }, "description": "xx商城-每日订单汇总" } POST _transform/xxx_order_daily_paid_summary_transform/_start POST _transform/xxx_order_daily_paid_summary_transform/_stop DELETE _transform/xxx_order_daily_paid_summary_transform

其中sync的field可以使用update_time,也可以使用pay_time,都一样。

"sync": { "time": { "field": "update_time", "delay": "1m" } }

关于delay参数的解释:当前时间与最近输入数据时间之间的时间延迟。默认值为60s。

4、但是实际业务是统计不包含售后订单---》订单销量统计(不含售后订单):

DELETE xxx_order_paid_summary #xx商城-每日订单汇总 PUT _transform/xxx_order_daily_paid_summary_transform { "source": { "index": [ "xxx_order" ], "query": { "bool": { "filter": [ { "term": { "is_pay": 1 } } ], "must_not": [ { "term": { "order_status": { "value": "9" } } } ] } } }, "dest": { "index": "xxx_daily_order_paid_summary" }, "frequency": "1m", "sync": { "time": { "field": "update_time", "delay": "1m" } }, "pivot": { "group_by": { "org_code": { "terms": { "field": "org_code.keyword" } }, "statistic_time": { "date_histogram": { "field": "pay_time", "calendar_interval": "1d", "time_zone": "+08:00" } } }, "aggregations": { "pay_amount_sum": { "sum": { "field": "pay_amount" } }, "order_count": { "value_count": { "field": "order_code.keyword" } } } }, "description": "xx商城-每日订单汇总" } POST _transform/xxx_order_daily_paid_summary_transform/_start POST _transform/xxx_order_daily_paid_summary_transform/_stop DELETE _transform/xxx_order_daily_paid_summary_transform

5、第二天有发生退款:

发现transform的preview中的结果,确实发生了变化:

但是,实际查看目标索引,发现数据并没有变化(测试数据插入,模拟退款数据,我就不详细写了。我在本地搭建的mysql-kafka connect-debezium同步程序,直接在DBeaver插入修改数据的)

POST /xxx_daily_order_paid_summary/_search

{"size": 120}

6、那说了这么多,怎么解决这个问题呢,经过我的实践和思考,总结了两种方法:

第一种:直接从order表进行aggs聚合统计。亦可以考虑将统计结果在redis缓存5分钟。这个就需要根据实际情况来看了,一般情况,不加redis完全可以。也不会造成太大压力。除非数据量特别巨大,聚合对es集群在成较大压力,就不能使用直接聚合这种方式了。

第二种:业务中除了order表,还有一张after_sales_order表(售后订单表),此时就可以吧两张表的数据都同步到es,然后分别给两张表各自创建一个transform分别统计,然后在查询的时候,将两个结果再在循环中相减即可得到想要的结果。

浙公网安备 33010602011771号

浙公网安备 33010602011771号