WritableComparable

WritableComparable extends both Writable and java.lang.Comparable. An implementation is therefore a Writable and a Comparable at the same time: it can be serialized, and it can be compared.
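To make "serializable and comparable" concrete without Hadoop on the classpath, here is a minimal sketch. It declares two hypothetical stand-in interfaces with the same shape as Hadoop's: `write(DataOutput)` and `readFields(DataInput)`, plus `Comparable<T>`. Hadoop's own declaration is `WritableComparable<T> extends Writable, Comparable<T>`. The names `SketchWritable`, `SketchWritableComparable` and `IntKey` are illustrative only and are not Hadoop classes.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

// Hypothetical stand-ins with the same shape as Hadoop's Writable and
// WritableComparable, so this sketch compiles on the plain JDK.
interface SketchWritable {
    void write(DataOutput out) throws IOException;    // serialize fields
    void readFields(DataInput in) throws IOException; // deserialize fields
}

interface SketchWritableComparable<T> extends SketchWritable, Comparable<T> {}

// A hypothetical one-field key that can be both serialized and compared.
class IntKey implements SketchWritableComparable<IntKey> {
    int value;

    IntKey() {}                               // no-arg constructor, for reflective creation
    IntKey(int value) { this.value = value; }

    @Override public void write(DataOutput out) throws IOException { out.writeInt(value); }
    @Override public void readFields(DataInput in) throws IOException { value = in.readInt(); }
    @Override public int compareTo(IntKey o) { return Integer.compare(value, o.value); }

    // Serialize to bytes, then rebuild a fresh object from those bytes.
    static IntKey roundTrip(IntKey key) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            key.write(new DataOutputStream(bytes));
            IntKey copy = new IntKey();
            copy.readFields(new DataInputStream(new ByteArrayInputStream(bytes.toByteArray())));
            return copy;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

The no-arg constructor matters in real Hadoop code as well, because the framework creates key objects reflectively and then fills them with `readFields`.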

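Implementations of WritableComparable are compared with one another. In MapReduce, any class used as a key should implement WritableComparable, because the framework sorts map output by key before handing it to the reducers.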
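The reason keys need an ordering can be sketched in plain Java. The following is a hypothetical in-memory imitation of the shuffle's "sort, then group" step, not Hadoop's implementation. The type bound `K extends Comparable<K>` is the point: without it, the records could not be sorted, and equal keys would not end up next to each other.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory imitation of the shuffle's "sort, then group" step.
class ShuffleSketch {
    static <K extends Comparable<K>, V> List<Map.Entry<K, List<V>>> sortAndGroup(List<Map.Entry<K, V>> mapOutput) {
        List<Map.Entry<K, V>> sorted = new ArrayList<>(mapOutput);
        sorted.sort(Map.Entry.comparingByKey());     // order records by key.compareTo (stable sort)

        List<Map.Entry<K, List<V>>> groups = new ArrayList<>();
        for (Map.Entry<K, V> record : sorted) {
            Map.Entry<K, List<V>> last = groups.isEmpty() ? null : groups.get(groups.size() - 1);
            // Start a new group whenever the key differs from the previous record's key
            if (last == null || last.getKey().compareTo(record.getKey()) != 0) {
                last = new SimpleEntry<K, List<V>>(record.getKey(), new ArrayList<V>());
                groups.add(last);
            }
            last.getValue().add(record.getValue());
        }
        return groups; // one entry per reduce() call: key -> all values for that key
    }
}
```

For example, map output `("b",1), ("a",2), ("b",3)` becomes two groups: `a -> [2]` and `b -> [1, 3]`.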

```
/*
Input:
order01,pro01,220.8
order01,pro02,220.1
order01,pro03,220.3
order02,pro04,221.8
order02,pro05,222.8
order03,pro06,220.2
order04,pro07,220.8

Output (maximum price per order):
orderId='order01', productId='pro01', price=220.8
orderId='order02', productId='pro05', price=222.8
orderId='order03', productId='pro06', price=220.2
orderId='order04', productId='pro07', price=220.8
*/
```
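To cross-check the expected output, the same per-order maximum can be computed in memory with a `LinkedHashMap`. `MaxPriceReference` is a hypothetical helper, not part of the job. It only works for small inputs, because it holds every order in one JVM, which is exactly what MapReduce is designed to avoid.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Plain-JDK reference computation (no Hadoop): for each orderId, keep the
// line with the highest price. On a tie, the first line seen wins.
class MaxPriceReference {
    static Map<String, String> maxPerOrder(List<String> lines) {
        Map<String, String[]> best = new LinkedHashMap<>(); // orderId -> fields of best line so far
        for (String line : lines) {
            String[] f = line.split(",");                    // orderId, productId, price
            String[] current = best.get(f[0]);
            if (current == null || Double.parseDouble(f[2]) > Double.parseDouble(current[2])) {
                best.put(f[0], f);
            }
        }
        Map<String, String> result = new LinkedHashMap<>(); // orderId -> "productId,price"
        for (Map.Entry<String, String[]> e : best.entrySet()) {
            result.put(e.getKey(), e.getValue()[1] + "," + e.getValue()[2]);
        }
        return result;
    }
}
```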

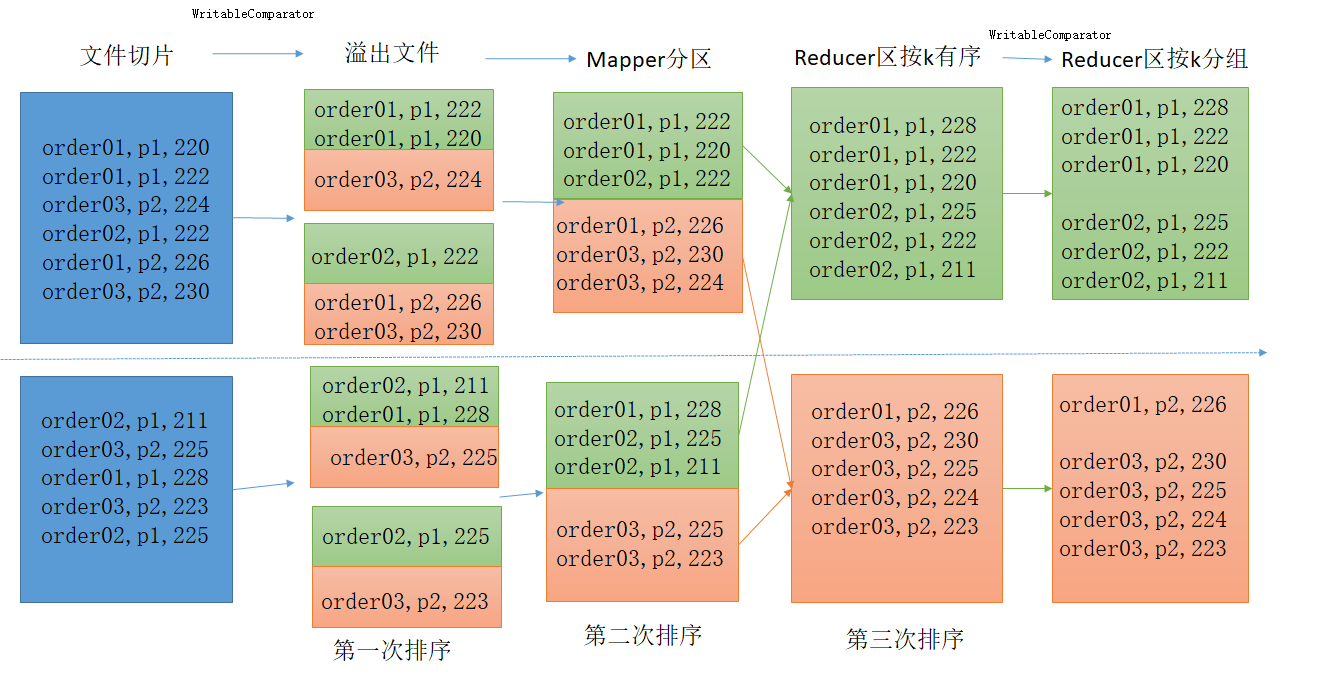

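Goal: compute the maximum price within each order.

(1) Sort by price (OrderBean.compareTo, a secondary sort: by order ID first, then by price in descending order)

(2) Group by order (grouping comparator, OrderComparator)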
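Steps (1) and (2) can be simulated without Hadoop. The sketch uses two `java.util.Comparator`s: one sort rule matching OrderBean.compareTo, and one grouping rule matching OrderComparator. It then takes the first record of each group. `SecondarySortSketch` and its nested `Order` are hypothetical stand-ins, not the article's classes.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Plain-JDK simulation of steps (1) and (2); no Hadoop classes involved.
class SecondarySortSketch {
    // Hypothetical stand-in for OrderBean
    static final class Order {
        final String orderId;
        final String productId;
        final double price;

        Order(String orderId, String productId, double price) {
            this.orderId = orderId;
            this.productId = productId;
            this.price = price;
        }
    }

    // (1) Sort rule, same as OrderBean.compareTo: orderId ascending, then price descending
    static final Comparator<Order> SORT = Comparator.comparing((Order o) -> o.orderId)
            .thenComparing(Comparator.comparingDouble((Order o) -> o.price).reversed());

    // (2) Grouping rule, same as OrderComparator: orderId only
    static final Comparator<Order> GROUP = Comparator.comparing((Order o) -> o.orderId);

    // Parse "orderId,productId,price" lines, as OrderMapper does
    static List<Order> parse(List<String> lines) {
        List<Order> orders = new ArrayList<>();
        for (String line : lines) {
            String[] f = line.split(",");
            orders.add(new Order(f[0], f[1], Double.parseDouble(f[2])));
        }
        return orders;
    }

    // Sort with SORT, then let GROUP decide where one reduce() call ends and the
    // next begins; the first record of each group carries the highest price.
    static List<Order> firstOfEachGroup(List<Order> records) {
        List<Order> sorted = new ArrayList<>(records);
        sorted.sort(SORT);
        List<Order> firsts = new ArrayList<>();
        Order previous = null;
        for (Order o : sorted) {
            if (previous == null || GROUP.compare(previous, o) != 0) {
                firsts.add(o);
            }
            previous = o;
        }
        return firsts;
    }
}
```

On the sample input, this picks pro01, pro05, pro06 and pro07, which matches the expected output.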

```java
package com.atguigu.groupingComparator;

import org.apache.hadoop.io.WritableComparable;

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

public class OrderBean implements WritableComparable<OrderBean> {
    private String orderId;
    private String productId;
    private double price;

    @Override
    public String toString() {
        return "orderId='" + orderId + '\'' +
                ", productId='" + productId + '\'' +
                ", price=" + price;
    }

    public void setOrderId(String orderId) { this.orderId = orderId; }
    public void setProductId(String productId) { this.productId = productId; }
    public void setPrice(double price) { this.price = price; }

    public String getOrderId() { return orderId; }
    public String getProductId() { return productId; }
    public double getPrice() { return price; }

    @Override
    public int compareTo(OrderBean o) {
        // Secondary sort: compare order IDs first
        int compare = this.orderId.compareTo(o.orderId);
        if (compare == 0) {
            // Same order: sort by price in descending order (o.price is deliberately the first argument)
            return Double.compare(o.price, this.price);
        } else {
            return compare;
        }
    }

    // Used by the default HashPartitioner: deriving it from orderId keeps every record
    // of one order in the same partition when the job runs several reduce tasks
    @Override
    public int hashCode() {
        return orderId.hashCode();
    }

    @Override
    public void write(DataOutput out) throws IOException {
        // Serialization: the field order must match readFields
        out.writeUTF(orderId);
        out.writeUTF(productId);
        out.writeDouble(price);
    }

    @Override
    public void readFields(DataInput in) throws IOException {
        // Deserialization: same field order as write
        orderId = in.readUTF();
        productId = in.readUTF();
        price = in.readDouble();
    }
}
```
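`write` and `readFields` must handle the fields in the same order, because the serialized bytes carry no field names. The sketch below replays OrderBean's exact calls (`writeUTF`, `writeUTF`, `writeDouble`) against the JDK's `DataOutputStream`/`DataInputStream`, so the byte layout can be inspected without Hadoop. `OrderBeanRoundTrip` and its helpers are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

class OrderBeanRoundTrip {
    // Same calls, in the same order, as OrderBean.write(...)
    static byte[] write(String orderId, String productId, double price) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            DataOutputStream out = new DataOutputStream(bytes);
            out.writeUTF(orderId);    // 2-byte length, then modified UTF-8 bytes
            out.writeUTF(productId);
            out.writeDouble(price);   // 8 bytes
            out.flush();
            return bytes.toByteArray();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Same calls, in the same order, as OrderBean.readFields(...); formatted like toString()
    static String read(byte[] data) {
        try {
            DataInputStream in = new DataInputStream(new ByteArrayInputStream(data));
            String orderId = in.readUTF();
            String productId = in.readUTF();
            double price = in.readDouble();
            return "orderId='" + orderId + "', productId='" + productId + "', price=" + price;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

For `order01,pro01,220.8`, the record takes 24 bytes: 2 + 7 for the order ID, 2 + 5 for the product ID, and 8 for the price.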

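```java
package com.atguigu.groupingComparator;

import org.apache.hadoop.io.WritableComparable;
import org.apache.hadoop.io.WritableComparator;

// Grouping comparator: keys with the same orderId go to the same reduce() call,
// even though their prices differ
public class OrderComparator extends WritableComparator {
    protected OrderComparator() {
        // true: let WritableComparator create key instances, so compare() receives deserialized OrderBeans
        super(OrderBean.class, true);
    }

    @Override
    public int compare(WritableComparable a, WritableComparable b) {
        OrderBean oa = (OrderBean) a;
        OrderBean ob = (OrderBean) b;
        // Compare order IDs only; price is ignored when grouping
        return oa.getOrderId().compareTo(ob.getOrderId());
    }
}
```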
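A grouping comparator only compares each key with the one just before it in the sorted stream. It therefore relies on the sort order already placing all records of one order next to each other. The hypothetical sketch below counts how many reduce() calls the same orderId-only grouping rule would produce under two sort rules. The first matches OrderBean.compareTo. The second is a deliberately wrong "price only" rule, assumed here for contrast.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Records are {orderId, price} string pairs to keep the sketch short.
class AdjacentGroupingPitfall {
    // Same idea as OrderBean.compareTo: orderId ascending, then price descending
    static final Comparator<String[]> BY_ORDER_THEN_PRICE =
            Comparator.comparing((String[] r) -> r[0])
                    .thenComparing(Comparator.comparingDouble((String[] r) -> Double.parseDouble(r[1])).reversed());

    // Hypothetical "wrong" sort rule: price descending only
    static final Comparator<String[]> BY_PRICE_ONLY =
            Comparator.comparingDouble((String[] r) -> Double.parseDouble(r[1])).reversed();

    // Count reduce() calls: like a grouping comparator, compare each record's
    // orderId only with its immediate predecessor in the sorted sequence
    static int countGroups(List<String[]> records, Comparator<String[]> sortRule) {
        List<String[]> sorted = new ArrayList<>(records);
        sorted.sort(sortRule);
        int groups = 0;
        String previousOrder = null;
        for (String[] r : sorted) {
            if (previousOrder == null || !previousOrder.equals(r[0])) {
                groups++;
            }
            previousOrder = r[0];
        }
        return groups;
    }
}
```

On the sample data, the first rule yields 4 groups, one per order. The price-only rule interleaves the orders and yields 6 groups, which would split order01 across three reduce() calls.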

```java
package com.atguigu.groupingComparator;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class OrderDriver {
    public static void main(String[] args) throws Exception {
        Job job = Job.getInstance(new Configuration());
        job.setJarByClass(OrderDriver.class);

        job.setMapperClass(OrderMapper.class);
        job.setReducerClass(OrderReducer.class);

        job.setMapOutputKeyClass(OrderBean.class);
        job.setMapOutputValueClass(NullWritable.class);
        job.setOutputKeyClass(OrderBean.class);
        job.setOutputValueClass(NullWritable.class);

        // Register the grouping comparator (group by orderId only)
        job.setGroupingComparatorClass(OrderComparator.class);

        // Input file and output directory (the output directory must not exist yet)
        FileInputFormat.setInputPaths(job, new Path("E:\\order.txt"));
        FileOutputFormat.setOutputPath(job, new Path("E:\\out4"));

        boolean b = job.waitForCompletion(true);
        System.exit(b ? 0 : 1);
    }
}
```
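The driver leaves the number of reduce tasks at its default of 1, so every order reaches the same reducer. If it is raised with `job.setNumReduceTasks(n)`, the default HashPartitioner picks a partition from the key's `hashCode()`. The grouping comparator can only merge records that land in the same partition. The sketch reproduces the partitioner's arithmetic in plain Java and assumes, as a precondition, that the key's hash is derived from orderId alone. Object's default identity hash does not give that guarantee. `PartitionSketch` is a hypothetical name.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Set;

class PartitionSketch {
    // Same arithmetic as Hadoop's default HashPartitioner
    static int partition(int keyHashCode, int numReduceTasks) {
        return (keyHashCode & Integer.MAX_VALUE) % numReduceTasks; // mask keeps the result non-negative
    }

    // Partitions used by one order's records, ASSUMING the key's hashCode()
    // is orderId.hashCode() (productId and price play no part)
    static Set<Integer> partitionsForOrder(List<String> lines, String orderId, int numReduceTasks) {
        Set<Integer> used = new HashSet<>();
        for (String line : lines) {
            String[] f = line.split(",");
            if (f[0].equals(orderId)) {
                used.add(partition(f[0].hashCode(), numReduceTasks));
            }
        }
        return used;
    }
}
```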

```java
package com.atguigu.groupingComparator;

import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

public class OrderMapper extends Mapper<LongWritable, Text, OrderBean, NullWritable> {
    // One key object reused for every line; context.write serializes it, so reuse is safe
    private final OrderBean orderBean = new OrderBean();

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        // Each line looks like: orderId,productId,price
        String[] fields = value.toString().split(",");
        orderBean.setOrderId(fields[0]);
        orderBean.setProductId(fields[1]);
        orderBean.setPrice(Double.parseDouble(fields[2]));
        context.write(orderBean, NullWritable.get());
    }
}
```
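OrderMapper reuses a single OrderBean for every input line. This is safe because the map output collector serializes the key as soon as `context.write` is called, so later mutations cannot change records already written. The hypothetical sketch contrasts a collector that keeps object references, where reuse would be a bug, with one that captures the object's state at write time, standing in for serialization.

```java
import java.util.ArrayList;
import java.util.List;

class ObjectReuseSketch {
    // Hypothetical mutable record, reused across lines like OrderMapper's orderBean
    static final class MutableBean {
        String orderId;
        double price;
    }

    // Keeps references: every stored entry is the same object,
    // so all of them end up showing the last line parsed
    static List<String> collectByReference(List<String> lines) {
        MutableBean bean = new MutableBean();
        List<MutableBean> stored = new ArrayList<>();
        for (String line : lines) {
            String[] f = line.split(",");
            bean.orderId = f[0];
            bean.price = Double.parseDouble(f[2]);
            stored.add(bean);
        }
        List<String> result = new ArrayList<>();
        for (MutableBean b : stored) {
            result.add(b.orderId + "," + b.price);
        }
        return result;
    }

    // Captures the object's state at write time (standing in for serialization),
    // so reusing the object is harmless
    static List<String> collectBySnapshot(List<String> lines) {
        MutableBean bean = new MutableBean();
        List<String> stored = new ArrayList<>();
        for (String line : lines) {
            String[] f = line.split(",");
            bean.orderId = f[0];
            bean.price = Double.parseDouble(f[2]);
            stored.add(bean.orderId + "," + bean.price);
        }
        return stored;
    }
}
```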

```java
package com.atguigu.groupingComparator;

import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;
import java.util.Iterator;

public class OrderReducer extends Reducer<OrderBean, NullWritable, OrderBean, NullWritable> {
    // Take the maximum: the first key of each group already has the highest price
    // @Override
    // protected void reduce(OrderBean key, Iterable<NullWritable> values, Context context) throws IOException, InterruptedException {
    //     context.write(key, NullWritable.get());
    // }

    // Take the top two
    @Override
    protected void reduce(OrderBean key, Iterable<NullWritable> values, Context context) throws IOException, InterruptedException {
        Iterator<NullWritable> iterator = values.iterator();
        for (int i = 0; i < 2; i++) {
            if (iterator.hasNext()) {
                // next() also refills the reused key object with the current record,
                // so each write emits a different product of the same order
                context.write(key, iterator.next());
            }
        }
    }
}
```
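The top-two reducer works because Hadoop passes one key object to reduce() and refills it from the underlying record as the values iterator advances. After each `next()`, `key` describes the record behind the value just returned. The hypothetical sketch imitates that behavior with a shared mutable key and a custom iterator. It is not Hadoop's actual iterator.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.NoSuchElementException;

class ReuseKeyIteratorSketch {
    // Hypothetical mutable key, shared for the whole group like the reduce key
    static final class Key {
        String productId;
        double price;
    }

    // Hypothetical values iterator: each next() loads the record behind the
    // returned value into the shared key object
    static final class GroupIterator implements Iterator<Void> {
        private final List<String[]> group; // {productId, price}, already sorted by price descending
        private final Key key;
        private int position = 0;

        GroupIterator(List<String[]> group, Key key) {
            this.group = group;
            this.key = key;
        }

        @Override
        public boolean hasNext() {
            return position < group.size();
        }

        @Override
        public Void next() {
            if (!hasNext()) {
                throw new NoSuchElementException();
            }
            String[] record = group.get(position++);
            key.productId = record[0];
            key.price = Double.parseDouble(record[1]);
            return null; // stands in for NullWritable.get()
        }
    }

    // Mirrors OrderReducer's loop: write the shared key after each of at most two next() calls
    static List<String> topTwo(List<String[]> sortedGroup) {
        Key key = new Key();
        Iterator<Void> iterator = new GroupIterator(sortedGroup, key);
        List<String> written = new ArrayList<>();
        for (int i = 0; i < 2; i++) {
            if (iterator.hasNext()) {
                iterator.next();
                written.add(key.productId + "," + key.price); // like context.write(key, ...)
            }
        }
        return written;
    }
}
```

On the sample input, the top-two reducer therefore emits two lines each for order01 (pro01 220.8, pro03 220.3) and order02 (pro05 222.8, pro04 221.8), and one line each for order03 and order04.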

posted on 2020-11-17 16:44 happygril3
