Hadoop cluster setup

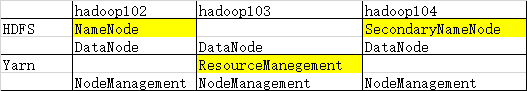

HDFS: NameNode, DataNode, SecondaryNameNode

YARN: ResourceManager, NodeManager

(1) Edit the configuration files

1.core-site.xml

<configuration>

<!-- Address of the HDFS NameNode -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop102:9000</value>

</property>

<!-- Directory for files that Hadoop generates at runtime -->

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/module/hadoop-2.7.6/data/tmp</value>

</property>

</configuration>
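To confirm that an edit landed, the value of a property can be read back from the file. On a working installation `hdfs getconf -confKey fs.defaultFS` does this; the sketch below is a plain-shell alternative that needs no Hadoop. The function name `get_prop` and the temporary demo file are illustrative assumptions, and the parser only handles the one-tag-per-line layout used in the listing above.

```shell
# Print the <value> that follows a given <name> in a Hadoop *-site.xml file.
# Assumes <name> and <value> sit on separate lines, as in the listing above.
get_prop() {
  awk -v want="$2" '
    /<name>/  { gsub(/.*<name>|<\/name>.*/, "");   name = $0; next }
    /<value>/ { gsub(/.*<value>|<\/value>.*/, ""); if (name == want) print }
  ' "$1"
}

# Demo against a throwaway copy of the two properties configured above.
demo=$(mktemp)
cat > "$demo" <<'EOF'
<configuration>
<property>
<name>fs.defaultFS</name>
<value>hdfs://hadoop102:9000</value>
</property>
<property>
<name>hadoop.tmp.dir</name>
<value>/opt/module/hadoop-2.7.6/data/tmp</value>
</property>
</configuration>
EOF
get_prop "$demo" fs.defaultFS    # prints hdfs://hadoop102:9000
```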

2.hdfs-site.xml

<configuration>

<!-- Number of HDFS replicas -->

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<!-- SecondaryNameNode HTTP address -->

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>hadoop104:50090</value>

</property>

</configuration>

3.mapred-site.xml

<configuration>

<!-- Run MapReduce on YARN -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<!-- Address of the JobHistory server -->

<property>

<name>mapreduce.jobhistory.address</name>

<value>hadoop104:10020</value>

</property>

<!-- Host and port of the JobHistory server web UI -->

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>hadoop104:19888</value>

</property>

</configuration>

4. yarn-site.xml

<configuration>

<!-- How reducers fetch map output (shuffle service) -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<!-- Hostname of the ResourceManager -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>hadoop103</value>

</property>

<!-- Enable log aggregation -->

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<!-- Keep aggregated logs for 7 days -->

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>604800</value>

</property>

</configuration>
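The retention value is given in seconds, so 604800 is simply 7 days converted. A small sketch of the arithmetic, useful when choosing a different retention period (the variable names are illustrative):

```shell
# 7 days expressed in seconds, the unit yarn.log-aggregation.retain-seconds expects.
days=7
retain_seconds=$(( days * 24 * 60 * 60 ))
echo "$retain_seconds"   # prints 604800
```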

5. Set JAVA_HOME in each of these files:

hadoop-env.sh

mapred-env.sh

yarn-env.sh
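The post does not show the line that goes into these files. A minimal sketch, assuming each script should carry an explicit `export JAVA_HOME=...` line; the function name `set_java_home` is hypothetical, and the JDK path is passed in by the caller because the source does not give one.

```shell
# Append an explicit JAVA_HOME export to each of the three Hadoop env scripts.
# conf_dir and java_home come from the caller; no JDK location is assumed here.
set_java_home() {
  local conf_dir=$1 java_home=$2 f
  for f in hadoop-env.sh mapred-env.sh yarn-env.sh; do
    # Skip the file if this exact export line is already present.
    grep -qxF "export JAVA_HOME=${java_home}" "$conf_dir/$f" 2>/dev/null \
      || echo "export JAVA_HOME=${java_home}" >> "$conf_dir/$f"
  done
}
```

With the layout used in this post, a call would look like `set_java_home /opt/module/hadoop-2.7.6/etc/hadoop "$JAVA_HOME"`, run from a shell where `JAVA_HOME` already points at the JDK.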

6. Distribute the configuration to the other servers

[atguigu@hadoop101 hadoop-2.7.6]$ xsync etc
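`xsync` is not a Hadoop command; it looks like a user-written distribution script, and its contents are not shown in this post. The sketch below is one way such a helper could be built on `rsync`, not the author's script. `HOSTS`, `DRY_RUN`, and `xsync_sketch` are hypothetical names, and the real copy assumes passwordless SSH and the same directory layout on every host.

```shell
# Hypothetical stand-in for the xsync helper used above: copy one path to the
# same absolute location on every other host with rsync.
HOSTS="hadoop102 hadoop103 hadoop104"

xsync_sketch() {
  local target dir name host
  target=$1
  dir=$(cd -P "$(dirname "$target")" && pwd)   # absolute parent directory
  name=$(basename "$target")
  for host in $HOSTS; do
    [ "$host" = "$(uname -n)" ] && continue    # do not copy onto ourselves
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "rsync -av $dir/$name $host:$dir"   # only show the command
    else
      rsync -av "$dir/$name" "$host:$dir"
    fi
  done
}
```

Running `DRY_RUN=1 xsync_sketch etc` from the Hadoop directory prints the `rsync` commands without copying anything, which is a cheap way to check the paths first.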

7. Format the NameNode on the NameNode server (hadoop102)

hdfs namenode -format

8. Start the cluster one daemon at a time

(1) Start HDFS

hadoop102:

hadoop-daemon.sh start namenode

hadoop-daemon.sh start datanode

hadoop103:

hadoop-daemon.sh start datanode

hadoop104:

hadoop-daemon.sh start datanode

hadoop-daemon.sh start secondarynamenode

(2) Start YARN

hadoop102:

yarn-daemon.sh start nodemanager

hadoop103:

yarn-daemon.sh start nodemanager

yarn-daemon.sh start resourcemanager

hadoop104:

yarn-daemon.sh start nodemanager

(3) Start the history server

hadoop104:

mr-jobhistory-daemon.sh start historyserver
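The post only shows the start side of the per-daemon scripts. Each of them also accepts `stop`, so the matching shutdown commands can be derived from the start list in steps (1) to (3). The sketch below prints them and runs nothing; stopping in reverse order is a conventional choice here, not something the source states, and the function names are illustrative.

```shell
# Start commands from steps (1) to (3), one "host command" pair per line.
start_plan() {
  cat <<'EOF'
hadoop102 hadoop-daemon.sh start namenode
hadoop102 hadoop-daemon.sh start datanode
hadoop103 hadoop-daemon.sh start datanode
hadoop104 hadoop-daemon.sh start datanode
hadoop104 hadoop-daemon.sh start secondarynamenode
hadoop102 yarn-daemon.sh start nodemanager
hadoop103 yarn-daemon.sh start nodemanager
hadoop103 yarn-daemon.sh start resourcemanager
hadoop104 yarn-daemon.sh start nodemanager
hadoop104 mr-jobhistory-daemon.sh start historyserver
EOF
}

# Same scripts with "stop" instead of "start", listed in reverse order.
stop_plan() {
  start_plan \
    | sed 's/ start / stop /' \
    | awk '{ line[NR] = $0 } END { for (i = NR; i >= 1; i--) print line[i] }'
}

stop_plan
```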

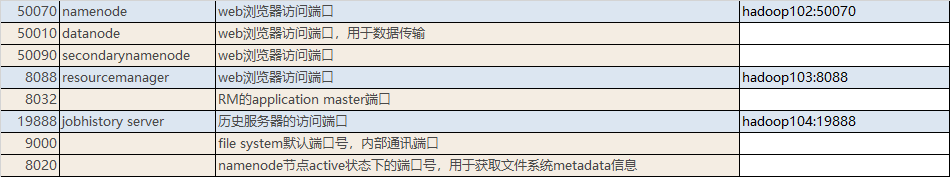

Common Hadoop ports:

9. Check the running processes with jps

hadoop102:

3990 NodeManager

3016 NameNode

3163 DataNode

4107 Jps

hadoop103:

4274 ResourceManager

4130 NodeManager

2967 DataNode

4397 Jps

hadoop104:

3921 JobHistoryServer

2999 SecondaryNameNode

3961 Jps

3305 NodeManager

2926 DataNode
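Comparing these listings by eye gets tedious as the cluster grows. A minimal sketch that reads `jps` output on standard input and prints any expected daemon that is missing; `missing_daemons` is a hypothetical helper, and the sample lines are the hadoop102 output shown above.

```shell
# Report which expected daemons are absent from jps output read on stdin.
missing_daemons() {
  local running d
  running=$(awk '{ print $2 }')      # second column of jps is the process name
  for d in "$@"; do
    # -x matches whole lines, so NameNode does not match SecondaryNameNode.
    echo "$running" | grep -qx "$d" || echo "$d"
  done
}

# hadoop102 as listed above: every expected daemon is present, so nothing is printed.
printf '%s\n' '3990 NodeManager' '3016 NameNode' '3163 DataNode' '4107 Jps' \
  | missing_daemons NameNode DataNode NodeManager
```

On a live node the input would come from the command itself, for example `jps | missing_daemons NameNode DataNode NodeManager`.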

10. Start the cluster, method 2 (cluster-wide scripts)

1. Configure the DataNode hosts

vim slaves

hadoop102

hadoop103

hadoop104

2. Sync the configuration files

xsync etc

3. Start HDFS on hadoop102

start-dfs.sh

4. Check the result with jps

5. Start YARN on hadoop103

start-yarn.sh

6. Stop HDFS

stop-dfs.sh

7. Stop YARN

stop-yarn.sh
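Step 1 above edits `slaves` interactively with vim. The same file can be written from a script, which is easier to repeat. A minimal sketch, assuming the file belongs in the Hadoop configuration directory passed as the first argument; `write_slaves` is a hypothetical name.

```shell
# Write one worker hostname per line into <conf_dir>/slaves.
write_slaves() {
  local conf_dir=$1
  shift
  printf '%s\n' "$@" > "$conf_dir/slaves"
}
```

For this cluster the call would be `write_slaves /opt/module/hadoop-2.7.6/etc/hadoop hadoop102 hadoop103 hadoop104`, followed by the sync in step 2.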

Hadoop example:

hadoop jar /opt/module/hadoop-2.7.6/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.6.jar wordcount /README.txt /out
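The job reads `/README.txt` from HDFS and writes its result under `/out`, so the input must be uploaded first and `/out` must not already exist. To get a feel for the result format without a cluster, the count can be approximated locally. The sketch below is a plain-shell analogue, not the MapReduce job itself: it splits on whitespace and prints one `word<TAB>count` line per distinct word, sorted by word. `wordcount_local` is an illustrative name.

```shell
# Local approximation of wordcount: split on whitespace, count each token,
# and print "word<TAB>count" sorted by word in byte order.
wordcount_local() {
  awk '{ for (i = 1; i <= NF; i++) count[$i]++ }
       END { for (w in count) printf "%s\t%d\n", w, count[w] }' "$@" \
    | LC_ALL=C sort
}

printf 'hadoop yarn\nhadoop hdfs\n' | wordcount_local
# prints:
# hadoop	2
# hdfs	1
# yarn	1
```

On the cluster, the job output is read back with `hadoop fs -cat` on the part files under `/out`.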

posted on 2020-11-08 17:33 happygril3
