Building a Graph

Method 1

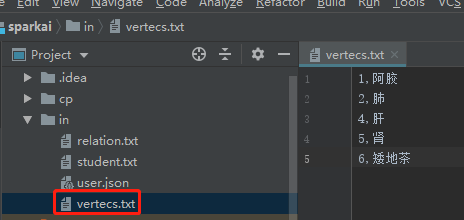

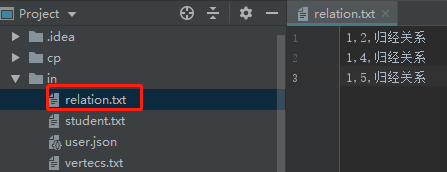

    package graphx

    import org.apache.log4j.{Level, Logger}
    import org.apache.spark.{SparkConf, SparkContext}
    import org.apache.spark.graphx._
    import org.apache.spark.rdd.RDD

    object test09 {
      def main(args: Array[String]): Unit = {
        // Suppress verbose logging
        Logger.getLogger("org.apache.spark").setLevel(Level.WARN)
        Logger.getLogger("org.eclipse.jetty.server").setLevel(Level.OFF)

        val conf: SparkConf = new SparkConf().setAppName("my scala").setMaster("local")
        val sc = new SparkContext(conf)

        // Vertex file: one "id,name" record per line
        val verticsLines: RDD[String] = sc.textFile("in/vertecs.txt")
        println("verticsLines")
        verticsLines.collect().foreach(println)

        // Each vertex carries a single attribute (the name)
        val vertextRDD: RDD[(VertexId, String)] = verticsLines.map { line =>
          val strings: Array[String] = line.split(",")
          val id: VertexId = strings(0).toLong
          val name: String = strings(1)
          (id, name)
        }
        println("verticsRDD")
        vertextRDD.collect().foreach(println)

        // Edge file: one "src,dst,attr" record per line
        val edgeLines: RDD[String] = sc.textFile("in/relation.txt")
        println("edgeLines")
        edgeLines.collect().foreach(println)

        val edgeRDD: RDD[Edge[String]] = edgeLines.map { line =>
          val strings: Array[String] = line.split(",")
          val src: VertexId = strings(0).toLong
          val dst: VertexId = strings(1).toLong
          val attr: String = strings(2)
          Edge(src, dst, attr)
        }
        println("edgeRDD")
        edgeRDD.collect().foreach(println)

        // Build the graph from the vertex and edge RDDs
        val graph = Graph(vertextRDD, edgeRDD)
        println("graph")
        graph.vertices.collect().foreach(println)
        graph.edges.collect().foreach(println)
        graph.triplets.collect().foreach(println)

        sc.stop()
      }
    }
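The listing above indexes into `line.split(",")` directly, so a blank or malformed line would throw at runtime. A minimal sketch of a more defensive parser follows, assuming the same `id,name` and `src,dst,attr` line formats. The object name `LineParsing` and both helper names are hypothetical, not part of the original code.

```scala
import scala.util.Try

// Hypothetical helpers: parse one text line, returning None instead of throwing.
object LineParsing {
  // Parses an "id,name" line; None for blank, short, or non-numeric input.
  def parseVertex(line: String): Option[(Long, String)] =
    line.split(",", -1).map(_.trim) match {
      case Array(id, name) if name.nonEmpty =>
        Try(id.toLong).toOption.map(v => (v, name))
      case _ => None
    }

  // Parses a "src,dst,attr" line; None if either endpoint is not a number.
  def parseEdge(line: String): Option[(Long, Long, String)] =
    line.split(",", -1).map(_.trim) match {
      case Array(src, dst, attr) =>
        for {
          s <- Try(src.toLong).toOption
          d <- Try(dst.toLong).toOption
        } yield (s, d, attr)
      case _ => None
    }
}
```

In the Spark job these could be applied with `flatMap` instead of `map`, so that lines which fail to parse are dropped rather than aborting the job.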

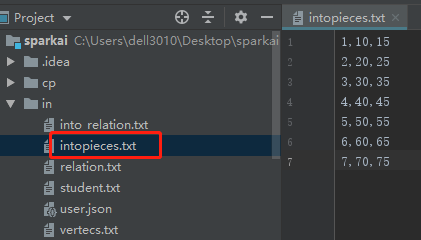

    package graphx

    import org.apache.log4j.{Level, Logger}
    import org.apache.spark.rdd.RDD
    import org.apache.spark.{SparkConf, SparkContext}
    import org.apache.spark.graphx._

    object intopiece {
      def main(args: Array[String]): Unit = {
        // Suppress verbose logging
        Logger.getLogger("org.apache.spark").setLevel(Level.WARN)
        Logger.getLogger("org.eclipse.jetty.server").setLevel(Level.OFF)

        val conf: SparkConf = new SparkConf().setAppName("my intopiece").setMaster("local")
        val sc = new SparkContext(conf)

        // Vertices: one "id,black,grey" record per line
        val intopiece: RDD[String] = sc.textFile("in/intopieces.txt")
        val intopieceRDD: RDD[(VertexId, Long, Long)] = intopiece.map { line =>
          val strings: Array[String] = line.split(",")
          val id: VertexId = strings(0).toLong
          val black: Long = strings(1).toLong
          val grey: Long = strings(2).toLong
          (id, black, grey)
        }
        println("intopieceRDD")
        intopieceRDD.collect().foreach(println)

        // Each vertex carries two attributes, packed into a tuple
        val intopieceRDD2: RDD[(VertexId, (Long, Long))] = intopieceRDD.map {
          case (id, black, grey) => (id, (black, grey))
        }

        // Edges: one "src,dst,attr" record per line
        val relation: RDD[String] = sc.textFile("in/into_relation.txt")
        val relationRDD: RDD[Edge[String]] = relation.map { line =>
          val strings: Array[String] = line.split(",")
          val src: VertexId = strings(0).toLong
          val dst: VertexId = strings(1).toLong
          val attr: String = strings(2)
          Edge(src, dst, attr)
        }
        println("relationRDD")
        relationRDD.collect().foreach(println)

        val graph = Graph(intopieceRDD2, relationRDD)
        println("graph:")
        graph.vertices.collect().foreach(println)
        graph.edges.collect().foreach(println)

        sc.stop()
      }
    }
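When a vertex has more than one attribute, a tuple such as `(black, grey)` works but hides which field is which. One alternative is a small case class as the vertex attribute type. The sketch below assumes the same `id,black,grey` line format; `PieceAttr` and `parsePiece` are hypothetical names introduced only for illustration.

```scala
// Hypothetical attribute type: named fields instead of an anonymous tuple.
final case class PieceAttr(black: Long, grey: Long)

object PieceParsing {
  // Turns an "id,black,grey" line into (vertexId, attribute).
  // Assumes a well-formed line with three numeric fields.
  def parsePiece(line: String): (Long, PieceAttr) = {
    val fields = line.split(",").map(_.trim)
    (fields(0).toLong, PieceAttr(fields(1).toLong, fields(2).toLong))
  }
}
```

An `RDD[(VertexId, PieceAttr)]` built this way can be passed to `Graph(...)` in place of the tuple-based RDD, and downstream code can then read `attr.black` and `attr.grey` rather than `_1` and `_2`.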

Method 2

    package graphx

    import org.apache.log4j.{Level, Logger}
    import org.apache.spark.{SparkConf, SparkContext}
    import org.apache.spark.graphx._
    import org.apache.spark.rdd.RDD

    import scala.collection.mutable.ArrayBuffer
    import scala.io.{BufferedSource, Source}

    object test10 {
      def main(args: Array[String]): Unit = {
        // Suppress verbose logging
        Logger.getLogger("org.apache.spark").setLevel(Level.WARN)
        Logger.getLogger("org.eclipse.jetty.server").setLevel(Level.OFF)

        val conf = new SparkConf().setAppName("Simple Application").setMaster("local")
        val sc = new SparkContext(conf)

        val vertexArr = new ArrayBuffer[(Long, String)]()
        val edgeArr = new ArrayBuffer[Edge[String]]()

        // Read the vertex file on the driver, specifying the encoding explicitly
        val sourceV: BufferedSource = Source.fromFile("in/vertecs.txt", "UTF-8")
        for (line <- sourceV.getLines()) {
          val pp = line.split(",")
          vertexArr += ((pp(0).toLong, pp(1)))
        }
        sourceV.close()
        println(vertexArr.length)

        // Read the edge file the same way
        val sourceE: BufferedSource = Source.fromFile("in/relation.txt", "UTF-8")
        for (line <- sourceE.getLines()) {
          val ee = line.split(",")
          edgeArr += Edge(ee(0).toLong, ee(1).toLong, ee(2))
        }
        sourceE.close()

        // Create the vertex RDD
        val users: RDD[(VertexId, String)] = sc.parallelize(vertexArr.toSeq)
        // Create the edge RDD
        val relationships: RDD[Edge[String]] = sc.parallelize(edgeArr.toSeq)

        // No default vertex attribute is passed here, so a vertex that appears
        // only in an edge gets a null attribute. Pass a third argument to
        // Graph(...) to supply a default for such vertices.
        val graph = Graph(users, relationships)

        // Print the graph's vertices
        graph.vertices.collect().foreach(println(_))

        sc.stop()
      }
    }
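Because GraphX fills in a default attribute for any vertex that is mentioned in an edge but absent from the vertex collection, it can help to check for such IDs on the driver before building the graph. This is a minimal sketch in plain Scala, with edges as `(src, dst, attr)` tuples. `DanglingCheck` and `missingVertexIds` are hypothetical names, and the sample data in the usage note is made up.

```scala
// Hypothetical helper: find edge endpoints that have no vertex record.
object DanglingCheck {
  def missingVertexIds(
      vertexIds: Set[Long],
      edges: Seq[(Long, Long, String)]
  ): Set[Long] = {
    // Collect every source and destination ID referenced by an edge
    val referenced = edges.flatMap { case (src, dst, _) => Seq(src, dst) }.toSet
    // Keep only those IDs that are not in the known vertex set
    referenced.diff(vertexIds)
  }
}
```

For example, with vertex IDs `Set(1L, 2L)` and edges `Seq((1L, 2L, "a"), (2L, 3L, "b"))`, the result is `Set(3L)`. In that case vertex 3 would receive the default attribute when the graph is constructed.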

posted on 2020-10-28 15:28 happygril3
