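HDP 2.4 Setup

1. Cluster installation

2. Configure Standby NameNode and Standby HBase Master

3. Scale out the cluster: add nodes, ZooKeeper servers, and JournalNodes

I. Cluster installation

Directory on the virtual machine: /data/www/html/hbase

In the httpd configuration file, change the paths on the following three lines: vi /etc/httpd/conf/httpd.conf (the default is /var/www/html/)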

DocumentRoot "/data/www/html"

<Directory "/data/www">

<Directory "/data/www/html">

Restart httpd: /bin/systemctl restart httpd.service (to start it instead: /bin/systemctl start httpd.service)
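The three path changes above can also be scripted. The following is a minimal sketch, assuming the stock CentOS 7 httpd.conf in which the three lines start at column 0 and read `DocumentRoot "/var/www/html"`, `<Directory "/var/www">` and `<Directory "/var/www/html">`. The function name `relocate_docroot` is made up for this illustration.

```shell
# relocate_docroot CONF OLD_BASE NEW_BASE  (hypothetical helper)
# Rewrites only the DocumentRoot line and the two <Directory> lines for
# OLD_BASE and OLD_BASE/html; other <Directory> blocks are left untouched.
# A backup of the original file is kept as CONF.bak.
relocate_docroot() {
  local conf=$1 old=$2 new=$3
  sed -i.bak -E \
    -e "s|^(DocumentRoot \")${old}(/html\")|\1${new}\2|" \
    -e "s|^(<Directory \")${old}((/html)?\">)|\1${new}\2|" \
    "$conf"
}

# Example (not executed here):
#   relocate_docroot /etc/httpd/conf/httpd.conf /var/www /data/www
#   /bin/systemctl restart httpd.service
```

The paths are matched exactly rather than by prefix so that entries such as the cgi-bin directory keep their original location.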

Open http://192.168.159.11/hbase/ in a browser

Disable transparent huge pages (THP)

[root@hdp11 ~]# cat /sys/kernel/mm/transparent_hugepage/enabled

[always] madvise never

[root@hdp11 ~]# echo never > /sys/kernel/mm/transparent_hugepage/enabled

[root@hdp11 ~]# cat /sys/kernel/mm/transparent_hugepage/enabled

always madvise [never]
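The kernel marks the active THP mode with square brackets, as the two `cat` outputs above show. A small sketch for checking this in a script follows; the function name `thp_active` is an assumption made for this example. Note that a value written with `echo` into sysfs is lost on reboot, so the check is worth repeating after a restart.

```shell
# thp_active LINE  (hypothetical helper)
# Prints the word enclosed in [...] from a line such as
# "always madvise [never]".
thp_active() {
  printf '%s\n' "$1" | sed -n 's/.*\[\([a-z]*\)].*/\1/p'
}

# Example (not executed here):
#   mode=$(thp_active "$(cat /sys/kernel/mm/transparent_hugepage/enabled)")
#   [ "$mode" = never ] || echo "THP is still set to: $mode"
```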

yum repo file

[root@hdp1 hbase]# cat /etc/yum.repos.d/hdp.repo

[AMBARI-2.2.1.0]

name=AMBARI-2.2.1.0

baseurl=http://hdp1/hbase/AMBARI-2.2.1.0/centos7/2.2.1.0-161/

enabled=1

gpgcheck=0

[HDP-2.2.1.0]

name=HDP-2.2.1.0

baseurl=http://hdp1/hbase/HDP/centos7/2.x/updates/2.4.0.0/

enabled=1

gpgcheck=0

[HDP-uti]

name=HDP-uti

baseurl=http://hdp1/hbase/HDP-UTILS-1.1.0.20/repos/centos7/

enabled=1

gpgcheck=0

On all nodes

yum -y install ambari-agent

ambari-agent start

chkconfig --add ambari-agent

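Set hostname in the configuration file /etc/ambari-agent/conf/ambari-agent.ini (the hostname entry under [server] must point to the Ambari server host), then restart the agent: service ambari-agent restart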
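Editing the agent configuration by hand on every node is error-prone, so the change can be scripted. This is a sketch only: it assumes the default ambari-agent.ini layout with a `hostname=` key inside the `[server]` section, and the function name `set_agent_server` is hypothetical.

```shell
# set_agent_server INI SERVER_HOST  (hypothetical helper)
# Replaces the hostname key inside the [server] section only, so that
# a hostname key in any other section is left alone.
# A backup of the original file is kept as INI.bak.
set_agent_server() {
  local ini=$1 server=$2
  sed -i.bak "/^\[server\]/,/^\[/ s|^hostname *=.*|hostname=${server}|" "$ini"
}

# Example (not executed here), using the Ambari server host from this post:
#   set_agent_server /etc/ambari-agent/conf/ambari-agent.ini hdp9.kzx.com
#   service ambari-agent restart
```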

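On the master node: yum install ambari-server -y

Initialize ambari-server: ambari-server setup

Accept the defaults throughout (for the JDK choose [3] Custom JDK and enter the JAVA_HOME path; for the database choose [1] - PostgreSQL (Embedded))

The Ambari username and password are both admin (or ambari)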

Database name (ambari):

Postgres schema (ambari):

Username (ambari):

Enter Database Password (bigdata):

[root@hdp9 ~]# ambari-server setup

Using python /usr/bin/python2

Setup ambari-server

Checking SELinux...

SELinux status is 'disabled'

Customize user account for ambari-server daemon [y/n] (n)?

Adjusting ambari-server permissions and ownership...

Checking firewall status...

Redirecting to /bin/systemctl status iptables.service

Checking JDK...

Do you want to change Oracle JDK [y/n] (n)? y

[1] Oracle JDK 1.8 + Java Cryptography Extension (JCE) Policy Files 8

[2] Oracle JDK 1.7 + Java Cryptography Extension (JCE) Policy Files 7

[3] Custom JDK

==============================================================================

Enter choice (1): 3

WARNING: JDK must be installed on all hosts and JAVA_HOME must be valid on all hosts.

WARNING: JCE Policy files are required for configuring Kerberos security. If you plan to use Kerberos,please make sure JCE Unlimited Strength Jurisdiction Policy Files are valid on all hosts.

Path to JAVA_HOME: /var/java/jdk1.8.0_151/

Validating JDK on Ambari Server...done.

Completing setup...

Configuring database...

Enter advanced database configuration [y/n] (n)? y

Configuring database...

==============================================================================

Choose one of the following options:

[1] - PostgreSQL (Embedded)

[2] - Oracle

[3] - MySQL

[4] - PostgreSQL

[5] - Microsoft SQL Server (Tech Preview)

[6] - SQL Anywhere

==============================================================================

Enter choice (1):

Database name (ambari):

Postgres schema (ambari):

Username (ambari): admin

Enter Database Password (ambari):

Re-enter password:

Default properties detected. Using built-in database.

Configuring ambari database...

Checking PostgreSQL...

Configuring local database...

Connecting to local database...done.

Configuring PostgreSQL...

Restarting PostgreSQL

Extracting system views...

.......

Adjusting ambari-server permissions and ownership...

Ambari Server 'setup' completed successfully.

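Start the server: service ambari-server start. Then open http://192.168.159.19:8080/ and log in with username admin and password admin.

ambari-server start (start the server)

ambari-server stop (stop the server)

ambari-server restart (restart the server)

ambari-agent start (start the agent)

ambari-agent stop (stop the agent)

ambari-agent status (show the agent status)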

Name your cluster kzx_pay_hf

HDP 2.4

Advanced Repository Options (see /etc/yum.repos.d/hdp.repo)

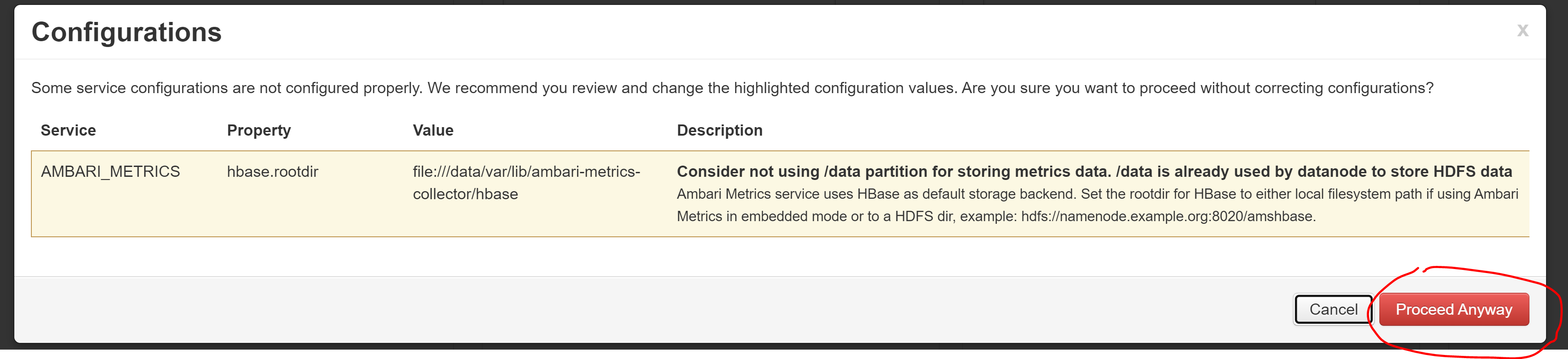

Choose Services

HDFS HBase ZooKeeper Ambari Metrics

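Pay attention to the failed disk tolerance setting here: in a test environment with only one disk, setting it to 1 makes the DataNode fail to start

with the error org.apache.hadoop.util.DiskChecker$DiskErrorException: Invalid volume failure config value: 1

[HDFS issue] DataNode fails to start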
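The error arises because the tolerated number of failed volumes must be smaller than the number of configured data directories. The sketch below checks that rule before a value is saved. It assumes the two values correspond to the HDFS properties `dfs.datanode.data.dir` (comma-separated) and `dfs.datanode.failed.volumes.tolerated`; the function name `check_volume_tolerance` and the example paths are illustrative only.

```shell
# check_volume_tolerance DATA_DIRS TOLERATED  (hypothetical helper)
# DATA_DIRS is a comma-separated list of DataNode data directories.
# Fails when TOLERATED is negative or not smaller than the volume count.
check_volume_tolerance() {
  local data_dirs=$1 tolerated=$2 count
  count=$(printf '%s\n' "$data_dirs" | awk -F',' '{ print NF }')
  if [ "$tolerated" -lt 0 ] || [ "$tolerated" -ge "$count" ]; then
    echo "invalid: tolerated=${tolerated}, volumes=${count}"
    return 1
  fi
  echo "ok: tolerated=${tolerated}, volumes=${count}"
}

# Example (not executed here): one disk, tolerance 1 is rejected
#   check_volume_tolerance /hadoop/hdfs/data 1
```

With a single data directory the only accepted value is 0.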

Cluster installation complete!

II. Configure Standby NameNode and Standby HBase Master

Configure a Standby NameNode and a Standby HBase Master for the cluster

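- HBase --> Service Actions --> Add HBase Master: adds a Standby HBase Master (after adding it you must start it; it first shows as HBase Master and becomes Standby HBase Master once started)

- HDFS --> Service Actions --> Enable NameNode HA (HBase must be stopped first), then fill in the wizard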


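Standby NameNode and Standby HBase Master configuration complete!

III. Scale out the cluster: add nodes, ZooKeeper servers, and JournalNodes

Scale out the cluster: add 2 nodes, then add ZooKeeper servers and JournalNodes

The existing cluster consists of hdp1, hdp2 and hdp3. Prepare and initialize 2 new nodes, bjc-hdp4 and bjc-hdp5 (with /etc/ambari-agent/conf/ambari-agent.ini already edited)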

192.168.159.11 hdp1.kzx.com hdp1

192.168.159.12 hdp2.kzx.com hdp2

192.168.159.13 hdp3.kzx.com hdp3

192.168.159.14 bjc-hdp4.kzx.com bjc-hdp4

192.168.159.15 bjc-hdp5.kzx.com bjc-hdp5

192.168.159.16 hdp6.kzx.com hdp6

192.168.159.17 hdp7.kzx.com hdp7

192.168.159.18 hdp8.kzx.com hdp8

192.168.159.19 hdp9.kzx.com hdp9

192.168.159.20 hdp10.kzx.com hdp10

192.168.159.21 hdp11.kzx.com hdp11

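In the Ambari UI: Hosts --> Actions --> Add New Hosts

If Status shows a failure, check the ambari-agent configuration, restart the agent and try the recheck again: systemctl restart ambari-agent.service (or service ambari-agent restart)

Wait for the red alerts to clear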

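Install ZooKeeper

Add the 2 new ZooKeeper servers

Do not start the two newly added ZooKeeper servers yet

No ZooKeeper restart is required at this step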

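Do not use Restart All; restart the affected components one node at a time instead.

After restarting the RegionServers and HMasters one by one, deal with the ZooKeeper restart

Restart all ZooKeeper servers (stop 1 non-leader server at a time and keep 2 of the old servers running). The restart completes within about 10 s and does not affect the cluster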

while :;do echo -n " ";date; for i in hdp1 hdp2 hdp3 bjc-hdp4 bjc-hdp5 ;do echo -n "$i:"; echo mntr|nc $i 2181|grep zk_server_state;done;sleep 2;done
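The loop above prints each server's role every two seconds. To turn that into a pass/fail check between restarts, the roles can be evaluated as sketched below. ZooKeeper needs a majority of the ensemble to be up and exactly one leader; the function names `zk_role` and `quorum_ok` and the placeholder role `down` are assumptions made for this example.

```shell
# zk_role: reads `mntr` output on stdin and prints the server role
# (leader, follower or standalone). Prints nothing if the key is absent.
zk_role() {
  awk '$1 == "zk_server_state" { print $2 }'
}

# quorum_ok ROLE...  (hypothetical helper)
# One argument per ensemble member; pass "down" for servers that did
# not answer. Succeeds when exactly one leader is reported and more
# than half of the members are leader or follower.
quorum_ok() {
  local total=$# leaders=0 up=0 role
  for role in "$@"; do
    case $role in
      leader) leaders=$((leaders + 1)); up=$((up + 1)) ;;
      follower) up=$((up + 1)) ;;
    esac
  done
  [ "$leaders" -eq 1 ] && [ "$up" -gt $((total / 2)) ]
}

# Example (not executed here):
#   set --
#   for h in hdp1 hdp2 hdp3 bjc-hdp4 bjc-hdp5; do
#     r=$(echo mntr | nc "$h" 2181 | zk_role)
#     set -- "$@" "${r:-down}"
#   done
#   quorum_ok "$@" && echo "quorum healthy"
```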

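Install JournalNode

Use the following two commands to add a JournalNode (the parts marked in red in the original post must be adapted to your cluster):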

curl -u admin:admin -H 'X-Requested-By: ambari' -X POST http://hdp1:8080/api/v1/clusters/kzx_pay/hosts/bjc-hdp4.kzx.com/host_components/JOURNALNODE

curl -u admin:admin -H 'X-Requested-By: ambari' -X PUT -d '{"RequestInfo":{"context":"Install JournalNode"},"Body":{"HostRoles":{"state":"INSTALLED"}}}' http://hdp1:8080/api/v1/clusters/kzx_pay/hosts/bjc-hdp4.kzx.com/host_components/JOURNALNODE
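Both commands have to be repeated for every new JournalNode host, so wrapping them in a function avoids copy-and-paste mistakes in the URL. This is a sketch that reuses exactly the two requests shown above; the function names `jn_url` and `add_journalnode` are made up, and the credentials and port are the ones used in this post.

```shell
# jn_url AMBARI_HOST CLUSTER TARGET_HOST  (hypothetical helper)
# Builds the host-component URL used by both requests.
jn_url() {
  printf 'http://%s:8080/api/v1/clusters/%s/hosts/%s/host_components/JOURNALNODE\n' \
    "$1" "$2" "$3"
}

# add_journalnode AMBARI_HOST CLUSTER TARGET_HOST  (hypothetical helper)
# Registers the JOURNALNODE component on the host, then asks Ambari
# to install it. The second request runs only if the first succeeds.
add_journalnode() {
  local url
  url=$(jn_url "$1" "$2" "$3")
  curl -u admin:admin -H 'X-Requested-By: ambari' -X POST "$url" &&
    curl -u admin:admin -H 'X-Requested-By: ambari' -X PUT \
      -d '{"RequestInfo":{"context":"Install JournalNode"},"Body":{"HostRoles":{"state":"INSTALLED"}}}' \
      "$url"
}

# Example (not executed here):
#   for h in bjc-hdp4.kzx.com bjc-hdp5.kzx.com; do
#     add_journalnode hdp1 kzx_pay "$h"
#   done
```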

HDFS --> Configs --> Advanced --> Custom hdfs-site --> dfs.namenode.shared.edits.dir

qjournal://hdp1.kzx.com:8485;hdp2.kzx.com:8485;hdp3.kzx.com:8485;bjc-hdp4:8485;bjc-hdp5:8485/kzxpay

qjournal://bjc-hdp4.kzx.com:8485;bjc-hdp5.kzx.com:8485;hdp1.kzx.com:8485;hdp2.kzx.com:8485;hdp3.kzx.com:8485/kzxpay
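The value is a semicolon-separated list of `host:port` pairs followed by the journal ID, so it is easy to mistype when hosts are added. A small sketch that assembles it from a host list follows; the function name `qjournal_uri` is hypothetical, and port 8485 is taken from the values above.

```shell
# qjournal_uri JOURNAL_ID HOST...  (hypothetical helper)
# Joins the hosts as host:8485 pairs separated by ";" and appends
# the journal ID, in the form shown in the values above.
qjournal_uri() {
  local journal_id=$1 uri="qjournal://" sep="" host
  shift
  for host in "$@"; do
    uri="${uri}${sep}${host}:8485"
    sep=";"
  done
  printf '%s/%s\n' "$uri" "$journal_id"
}

# Example (not executed here):
#   qjournal_uri kzxpay bjc-hdp4.kzx.com bjc-hdp5.kzx.com \
#     hdp1.kzx.com hdp2.kzx.com hdp3.kzx.com
```

Passing fully qualified names for every host keeps the list consistent.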

save

Restart Required: 17 Components on 5 Hosts


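Restart the components one by one, but do not restart the JournalNodes yet (3 JournalNodes are running and 2 are stopped, so restarting one would leave only 2 running)

Then start the two new JournalNodes first, and finally restart the 3 old JournalNodes one by one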
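The ordering follows from quorum arithmetic: the NameNode must be able to write to a majority of the configured JournalNodes. With 5 configured, at least 3 must stay up at all times. The sketch below expresses that check; the function names `jn_majority` and `can_restart_one` are assumptions made for this example.

```shell
# jn_majority CONFIGURED: smallest number of JournalNodes that forms
# a majority of the configured set.
jn_majority() {
  echo $(( $1 / 2 + 1 ))
}

# can_restart_one CONFIGURED RUNNING  (hypothetical helper)
# Succeeds when taking one running JournalNode down still leaves
# a majority available.
can_restart_one() {
  local configured=$1 running=$2
  [ $((running - 1)) -ge "$(jn_majority "$configured")" ]
}

# Example (not executed here):
#   can_restart_one 5 3 || echo "start the new JournalNodes first"
#   can_restart_one 5 5 && echo "safe to restart one JournalNode"
```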

On hdp1: ll /hadoop/hdfs/journal

On the new JournalNode hosts: mkdir -pv /hadoop/hdfs/journal/kzxpay

On hdp1: scp -r /hadoop/hdfs/journal/kzxpay/* hdp5:/hadoop/hdfs/journal/kzxpay/

chown hdfs:hadoop -R /hadoop/hdfs/journal/

Start the JournalNode from the UI, then delete the lock file and check whether a fresh lock file is generated: rm -f /hadoop/hdfs/journal/kzxpay/in_use.lock

cat /hadoop/hdfs/journal/kzxpay/in_use.lock

ls -lrt /hadoop/hdfs/journal/kzxpay/current/*inprogress*

Restart nn1 and nn2
