Linux-06thrift

Implementing a matching system with Thrift

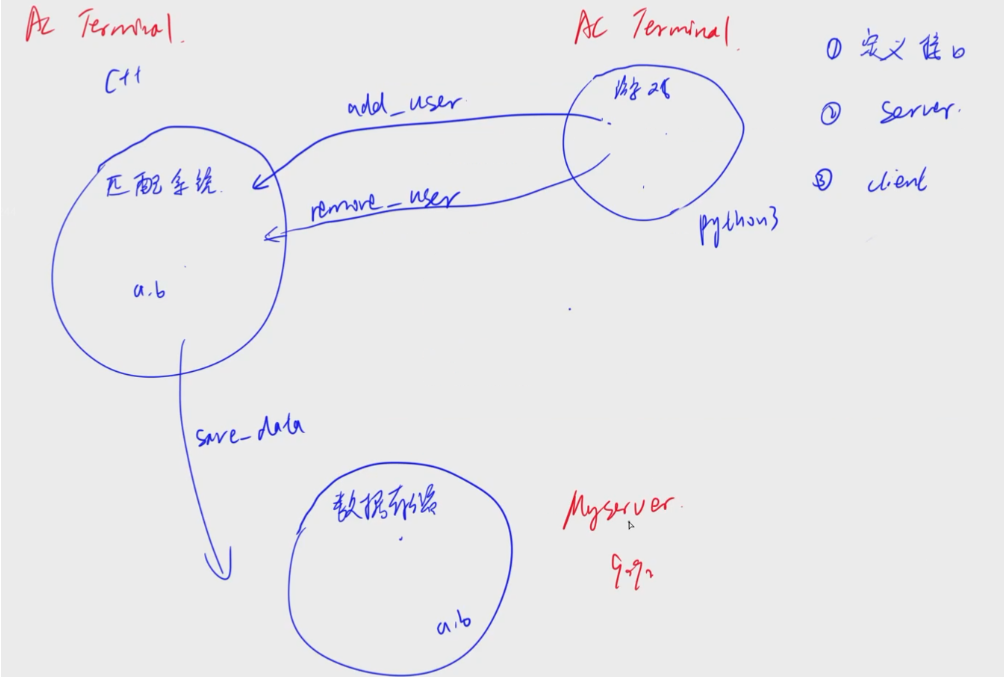

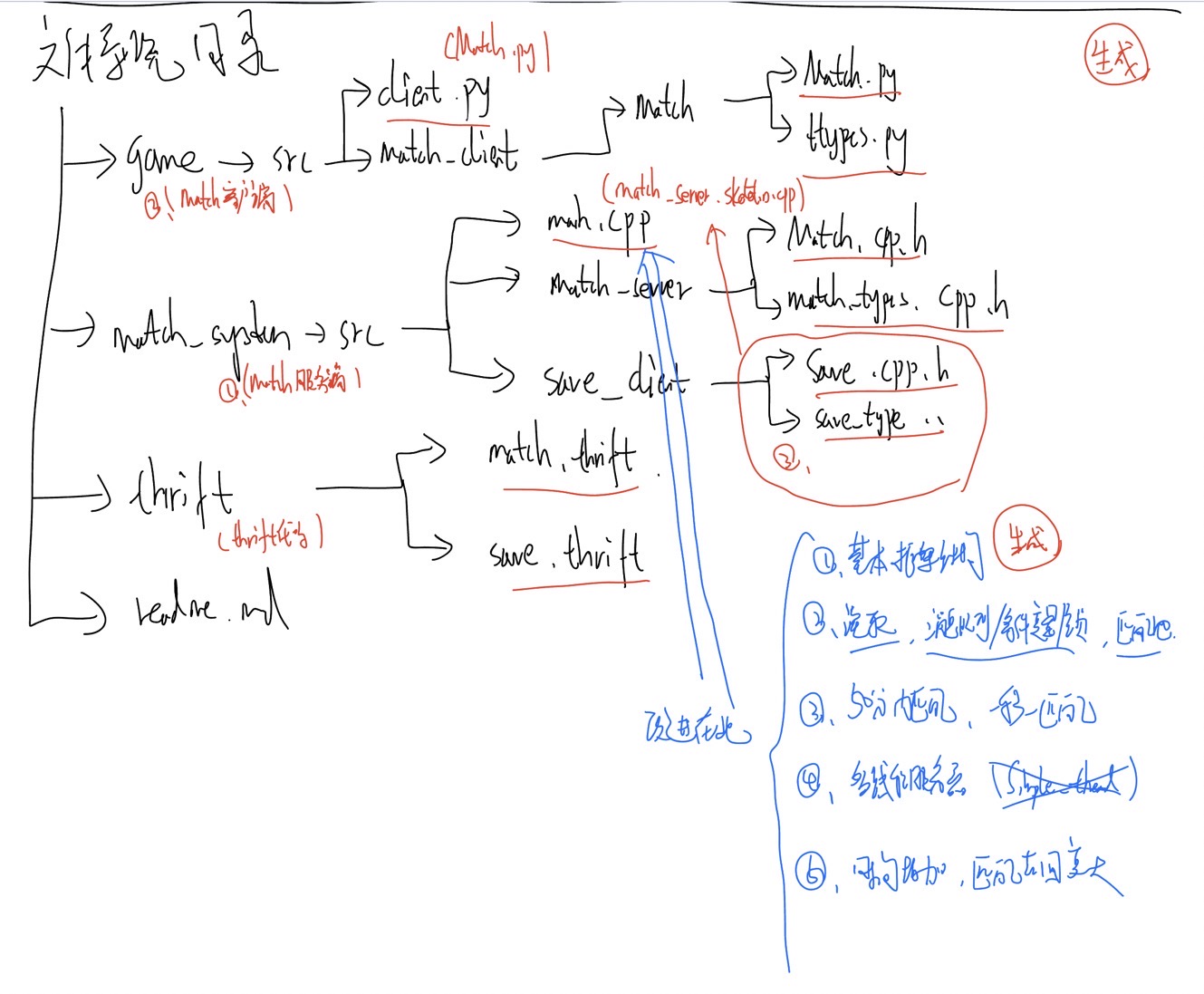

Basic architecture

Implementing the match_system server

Defining the interface

- Define the interface in a .thrift file

- Then generate the code skeleton for the interface automatically with a command

# Write the interface file

# Each method takes an extra info string for additional information

vim thrift/match.thrift

--

namespace cpp match_service

struct User {

1: i32 id,

2: string name,

3: i32 score

}

service Match {

i32 add_user(1: User user, 2: string info),

i32 remove_user(1: User user, 2: string info),

}

--

# Generate the code under the src directory

mkdir match_system/src -p

cd match_system/src

# thrift -r --gen <language> <Thrift filename>

thrift -r --gen cpp ../../thrift/match.thrift

# This creates a gen-cpp folder; rename it to match_server

mv gen-cpp match_server

# Move the server skeleton out of it and rename it to main.cpp

mv match_server/Match_server.skeleton.cpp main.cpp

A first version of match_system

vim main.cpp

---

// This autogenerated skeleton file illustrates how to build a server.

// You should copy it to another filename to avoid overwriting it.

// 1. Fix the header path

#include "match_server/Match.h"

#include <thrift/protocol/TBinaryProtocol.h>

#include <thrift/server/TSimpleServer.h>

#include <thrift/transport/TServerSocket.h>

#include <thrift/transport/TBufferTransports.h>

#include <iostream>

using namespace ::apache::thrift;

using namespace ::apache::thrift::protocol;

using namespace ::apache::thrift::transport;

using namespace ::apache::thrift::server;

using namespace ::match_service;

using namespace std;

class MatchHandler : virtual public MatchIf {

public:

MatchHandler() {

// Your initialization goes here

}

// Add return 0; to every non-void function

int32_t add_user(const User& user, const std::string& info) {

// Your implementation goes here

printf("add_user\n");

return 0;

}

int32_t remove_user(const User& user, const std::string& info) {

// Your implementation goes here

printf("remove_user\n");

return 0;

}

};

int main(int argc, char **argv) {

int port = 9090;

::std::shared_ptr<MatchHandler> handler(new MatchHandler());

::std::shared_ptr<TProcessor> processor(new MatchProcessor(handler));

::std::shared_ptr<TServerTransport> serverTransport(new TServerSocket(port));

::std::shared_ptr<TTransportFactory> transportFactory(new TBufferedTransportFactory());

::std::shared_ptr<TProtocolFactory> protocolFactory(new TBinaryProtocolFactory());

TSimpleServer server(processor, serverTransport, transportFactory, protocolFactory);

cout << "Start Match Server" << endl;

server.serve();

return 0;

}

---

Compiling, linking, and pushing to git

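# Compile. Header (.h) files are not compiled; compile main.cpp together with the .cpp files in match_server

g++ -c main.cpp ./match_server/*.cpp

# Linking needs the thrift shared library

g++ *.o -o main -lthrift

# Run it; if it prints Start Match Server, it works

./main

# After editing main.cpp, recompile only main.cpp, then relink and run again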

g++ -c main.cpp

g++ *.o -o main -lthrift

./main

# Push to git

# Don't upload .o files or the compiled executable

git add .

git restore --staged *.o

git restore --staged main

git commit -m "<message>"

git push

Implementing the match_client client

Defining the interface

# The interface file is already written:

./thrift/match.thrift

# Create src in the target directory

mkdir game/src -p

cd game/src

# Generate the code

thrift -r --gen py ../../thrift/match.thrift

# Rename the generated folder

mv gen-py match_client

# Delete Match-remote, a generated command-line script that the client does not need

rm match_client/match/Match-remote

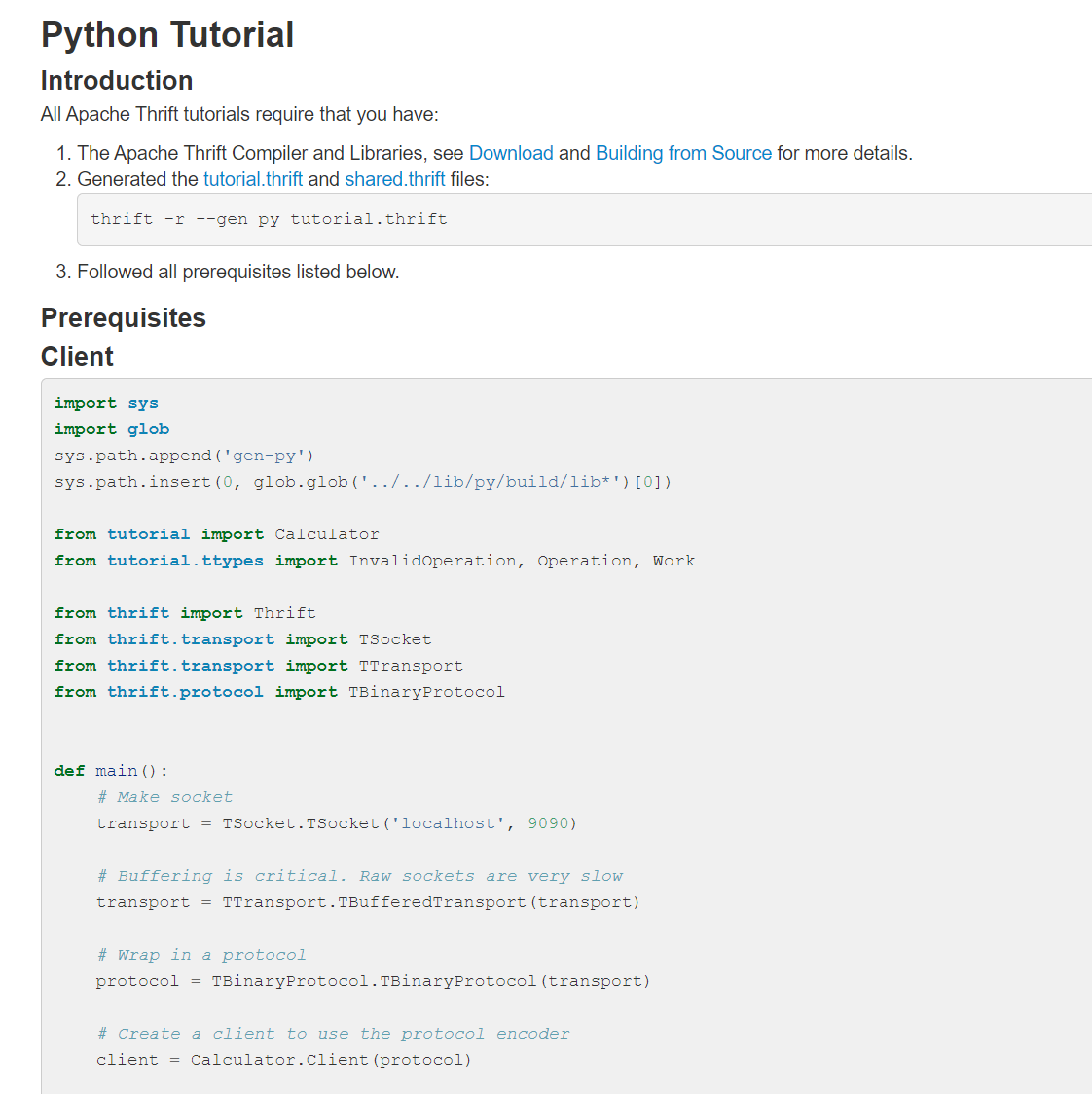

A first version of match_client

# Copy the client example code from the official Thrift Python tutorial

vim client.py

---

# The four lines starting with import sys add the tutorial's paths to sys.path; delete them

import sys
import glob
sys.path.append('gen-py')
sys.path.insert(0, glob.glob('../../lib/py/build/lib*')[0])

from tutorial import Calculator
from tutorial.ttypes import InvalidOperation, Operation, Work

from thrift import Thrift
from thrift.transport import TSocket
from thrift.transport import TTransport
from thrift.protocol import TBinaryProtocol


def main():
    # Make socket
    transport = TSocket.TSocket('localhost', 9090)

    # Buffering is critical. Raw sockets are very slow
    transport = TTransport.TBufferedTransport(transport)

    # Wrap in a protocol
    protocol = TBinaryProtocol.TBinaryProtocol(transport)

    # Create a client to use the protocol encoder
    client = Calculator.Client(protocol)

    # Connect!
    transport.open()

    ################# Start of the tutorial's calculator demo: delete #################
    client.ping()
    print('ping()')

    sum_ = client.add(1, 1)
    print('1+1=%d' % sum_)

    work = Work()

    work.op = Operation.DIVIDE
    work.num1 = 1
    work.num2 = 0

    try:
        quotient = client.calculate(1, work)
        print('Whoa? You know how to divide by zero?')
        print('FYI the answer is %d' % quotient)
    except InvalidOperation as e:
        print('InvalidOperation: %r' % e)

    work.op = Operation.SUBTRACT
    work.num1 = 15
    work.num2 = 10

    diff = client.calculate(1, work)
    print('15-10=%d' % diff)

    log = client.getStruct(1)
    print('Check log: %s' % log.value)
    ################# End of the tutorial demo #################

    # Close!
    transport.close()

---

---

# client.py after the deletions

# 1. Change the imports
#from tutorial import Calculator
#from tutorial.ttypes import InvalidOperation, Operation, Work
from match_client.match import Match
from match_client.match.ttypes import User

from thrift import Thrift
from thrift.transport import TSocket
from thrift.transport import TTransport
from thrift.protocol import TBinaryProtocol


def main():
    # Make socket
    transport = TSocket.TSocket('localhost', 9090)

    # Buffering is critical. Raw sockets are very slow
    transport = TTransport.TBufferedTransport(transport)

    # Wrap in a protocol
    protocol = TBinaryProtocol.TBinaryProtocol(transport)

    # Create a client to use the protocol encoder (the Match service now, not Calculator)
    client = Match.Client(protocol)

    # Connect!
    transport.open()

    # Add a minimal call first, just to check that everything runs
    user = User(1, 'yxc', 1500)
    client.add_user(user, "")

    # Close!
    transport.close()


if __name__ == "__main__":
    main()

---

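# Test it

# First start the server (it keeps running in this terminal)

cd match_system/src

./main

# Then run the client from game/src in another terminal

python3 client.py

# Push to git

git add .

git restore --staged *.pyc

git restore --staged *.swp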

git commit -m "add match client"

git push

Reading user information from the command line

cd game/src

vim client.py

---

from match_client.match import Match
from match_client.match.ttypes import User

from thrift import Thrift
from thrift.transport import TSocket
from thrift.transport import TTransport
from thrift.protocol import TBinaryProtocol

from sys import stdin


def operate(op, user_id, username, score):
    # Make socket
    transport = TSocket.TSocket('127.0.0.1', 9090)

    # Buffering is critical. Raw sockets are very slow
    transport = TTransport.TBufferedTransport(transport)

    # Wrap in a protocol
    protocol = TBinaryProtocol.TBinaryProtocol(transport)

    # Create a client to use the protocol encoder
    client = Match.Client(protocol)

    # Connect!
    transport.open()

    user = User(user_id, username, score)
    if op == "add":
        client.add_user(user, "")
    elif op == "remove":
        client.remove_user(user, "")

    # Close!
    transport.close()


def main():
    # Each input line looks like: add 1 yxc 1500
    for line in stdin:
        op, user_id, username, score = line.split()
        operate(op, int(user_id), username, int(score))


# Ending with these two lines is good practice
if __name__ == "__main__":
    main()

---

# match_client is done. localhost was changed to 127.0.0.1 here; keeping localhost also works

Testing and pushing to git

# Test it

# First start the server

cd match_system/src

./main

# Then run the client from game/src in another terminal

python3 client.py

# Push to git

git add client.py

git commit -m "match client create user"

git push

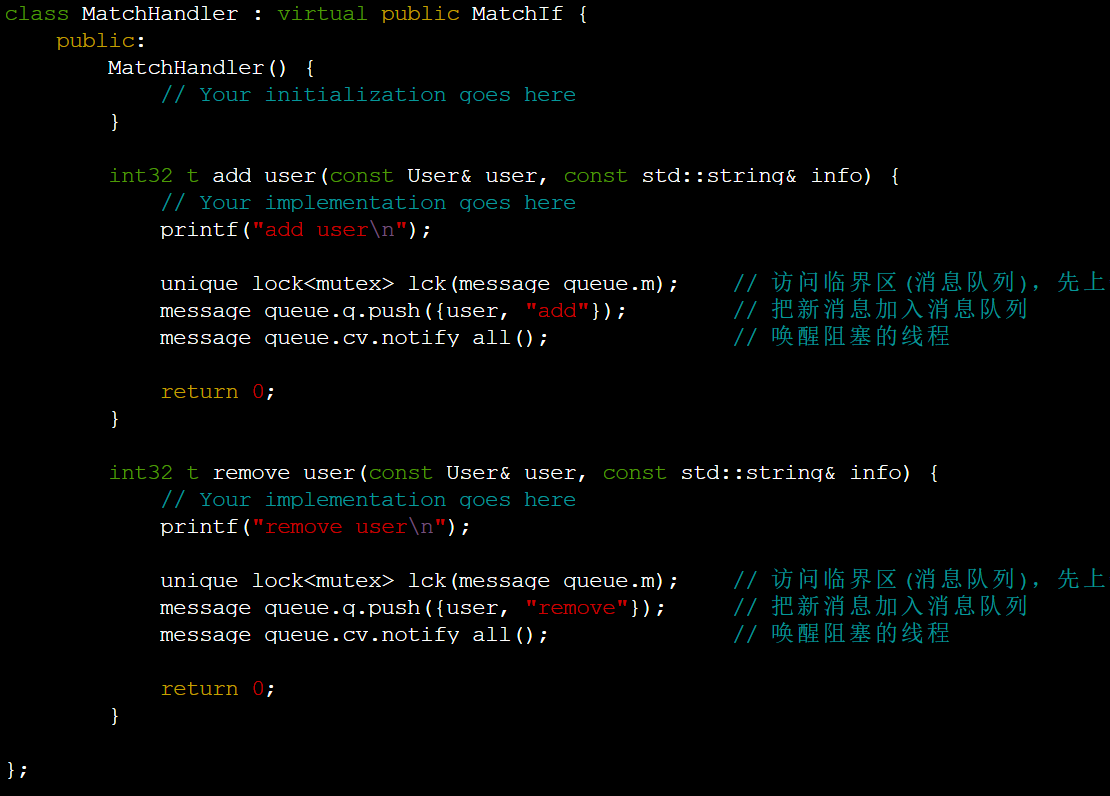

Improving the match_system server

Improvements:

- Mutual exclusion and synchronization on the message queue

- Defining a match pool

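// Adding/removing users and matching users run in parallel

// The two sides communicate through a message queue, using a mutex for mutual exclusion and a condition variable for synchronization

// Mutual exclusion and synchronization on a message queue is the classic producer-consumer model

// 1. Define the message queue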

struct Task

{

User user;

string type;

};

struct MessageQueue

{

queue<Task> q; // the queue

mutex m; // mutex (lock)

condition_variable cv; // condition variable

}message_queue;

// 2. Define the match pool that holds the users

class Pool

{

public:

// Record a match result

void save_result(int a, int b)

{

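// Keep the trailing \n: stdout is line-buffered on a terminal, so without it this text stays in the buffer and only appears later, mixed with the next output (e.g. when the third user is added)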

printf("Match Result: %d %d\n", a, b);

}

// Matching: take two eligible users out of the pool

void match()

{

while(users.size()>1){

auto a = users[0],b = users[1];

users.erase(users.begin());

users.erase(users.begin());

save_result(a.id, b.id);

}

}

// Add a user

void add(User user)

{

users.push_back(user);

}

// Remove a user

void remove(User user)

{

for(uint32_t i = 0; i < users.size(); i++ ){

if(users[i].id == user.id){

users.erase(users.begin() + i);

break; // stop after removing the user, as the later versions do

}

}

}

private:

vector<User> users; // the users in the pool

}pool;

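// 3. Add a consumer thread, and lock every place that accesses the message queue

// Lock the queue before every push or pop, so that only one thread operates on it at a time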

class MatchHandler : virtual public MatchIf {

public:

MatchHandler() {

// Your initialization goes here

}

int32_t add_user(const User& user, const string& info){

// Your implementation goes here

printf("add_user\n");

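// Only one thread can hold the lock at a time: any other thread blocks here until the holder releases it, which happens when lck is destroyed at the end of the function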

unique_lock<mutex> lck(message_queue.m);

message_queue.q.push({user, "add"});

// Wake the waiting consumer; since only one thread waits on cv, message_queue.cv.notify_one() would work as well

message_queue.cv.notify_all();

return 0;

}

int32_t remove_user(const User& user, const string& info){

// Your implementation goes here

printf("remove_user\n");

unique_lock<mutex> lck(message_queue.m);

message_queue.q.push({user, "remove"});

message_queue.cv.notify_all();

return 0;

}

};

void consume_task()

{

while (true)

{

unique_lock<mutex> lck(message_queue.m);

if (message_queue.q.empty())

{

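// If the queue is empty, block the thread; otherwise the consumer would spin in a loop and waste CPU until new players arrive

// wait() releases the lock while blocked, so add_user/remove_user can still push (otherwise they would deadlock on the mutex), and their notify wakes the consumer up again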

message_queue.cv.wait(lck);

}

else

{

// The queue is not empty: take a task and run it (add or remove), then match

auto task = message_queue.q.front();

message_queue.q.pop();

// Unlock before working on the pool, so handlers are not blocked during matching

lck.unlock();

if (task.type == "add") pool.add(task.user); // put the player into the pool

else if (task.type == "remove") pool.remove(task.user); // take the player out of the pool

pool.match();

}

}

}
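For reference, a minimal standalone sketch of the same producer-consumer pattern. Assumptions: `SimpleTask` (an int id instead of a `User`) and the `stop` flag are hypothetical additions so the loop can end and be tested; the consumer in the notes runs forever. It waits with a predicate, `cv.wait(lck, pred)`, which rechecks the condition after every wakeup, including spurious ones.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <queue>
#include <thread>
#include <vector>

// Hypothetical simplified task: the notes' Task carries a User and a type string.
struct SimpleTask {
    int id;
};

struct SimpleQueue {
    std::queue<SimpleTask> q;
    std::mutex m;
    std::condition_variable cv;
    bool stop = false;  // hypothetical shutdown flag, not in the notes
};

// Producer side, like add_user/remove_user: lock, push, notify.
void produce(SimpleQueue& mq, int id) {
    {
        std::lock_guard<std::mutex> lck(mq.m);
        mq.q.push(SimpleTask{id});
    }
    mq.cv.notify_one();
}

// Consumer side, like consume_task: wait for work, pop under the lock,
// then process outside the lock. Returns the ids it consumed, in order.
std::vector<int> consume_all(SimpleQueue& mq) {
    std::vector<int> seen;
    while (true) {
        std::unique_lock<std::mutex> lck(mq.m);
        // The predicate is rechecked after every wakeup, so spurious wakeups are harmless.
        mq.cv.wait(lck, [&] { return !mq.q.empty() || mq.stop; });
        if (mq.q.empty()) break;  // stop was requested and nothing is left
        SimpleTask task = mq.q.front();
        mq.q.pop();
        lck.unlock();  // do the "work" without holding the lock
        seen.push_back(task.id);
    }
    return seen;
}

// Starts one consumer, produces ids 0..n-1, then asks the consumer to stop.
std::vector<int> run_demo(int n) {
    SimpleQueue mq;
    std::vector<int> result;
    std::thread consumer([&] { result = consume_all(mq); });
    for (int i = 0; i < n; ++i) produce(mq, i);
    {
        std::lock_guard<std::mutex> lck(mq.m);
        mq.stop = true;
    }
    mq.cv.notify_all();
    consumer.join();
    return result;
}
```

Because the consumer only exits when the queue is empty and `stop` is set, every produced task is consumed, in FIFO order.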

Complete code

// main.cpp, complete

// This autogenerated skeleton file illustrates how to build a server.

// You should copy it to another filename to avoid overwriting it.

#include "match_server/Match.h"

#include <thrift/protocol/TBinaryProtocol.h>

#include <thrift/server/TSimpleServer.h>

#include <thrift/transport/TServerSocket.h>

#include <thrift/transport/TBufferTransports.h>

#include <iostream>

#include <thread>

#include <mutex>

#include <condition_variable>

#include <queue>

#include <vector>

using namespace ::apache::thrift;

using namespace ::apache::thrift::protocol;

using namespace ::apache::thrift::transport;

using namespace ::apache::thrift::server;

using namespace ::match_service;

using namespace std;

struct Task

{

User user;

string type;

};

struct MessageQueue

{

queue<Task> q;

mutex m;

condition_variable cv;

}message_queue;

class Pool

{

public:

void save_result(int a, int b)

{

printf("Match Result: %d %d\n", a, b);

}

void match()

{

while (users.size() > 1)

{

auto a = users[0], b = users[1];

users.erase(users.begin());

users.erase(users.begin());

save_result(a.id, b.id);

}

}

void add(User user)

{

users.push_back(user);

}

void remove(User user)

{

for (uint32_t i = 0; i < users.size(); i ++ )

if (users[i].id == user.id)

{

users.erase(users.begin() + i);

break;

}

}

private:

vector<User> users;

}pool;

class MatchHandler : virtual public MatchIf {

public:

MatchHandler() {

// Your initialization goes here

}

int32_t add_user(const User& user, const string& info){

// Your implementation goes here

printf("add_user\n");

unique_lock<mutex> lck(message_queue.m);

message_queue.q.push({user, "add"});

message_queue.cv.notify_all();

return 0;

}

int32_t remove_user(const User& user, const string& info){

// Your implementation goes here

printf("remove_user\n");

unique_lock<mutex> lck(message_queue.m);

message_queue.q.push({user, "remove"});

message_queue.cv.notify_all();

return 0;

}

};

void consume_task()

{

while (true)

{

unique_lock<mutex> lck(message_queue.m);

if (message_queue.q.empty())

{

message_queue.cv.wait(lck);

}

else

{

auto task = message_queue.q.front();

message_queue.q.pop();

lck.unlock();

if (task.type == "add") pool.add(task.user);

else if (task.type == "remove") pool.remove(task.user);

pool.match();

}

}

}

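int main(int argc, char **argv) {

int port = 9090;

::std::shared_ptr<MatchHandler> handler(new MatchHandler());

::std::shared_ptr<TProcessor> processor(new MatchProcessor(handler));

::std::shared_ptr<TServerTransport> serverTransport(new TServerSocket(port));

::std::shared_ptr<TTransportFactory> transportFactory(new TBufferedTransportFactory());

::std::shared_ptr<TProtocolFactory> protocolFactory(new TBinaryProtocolFactory());

TSimpleServer server(processor, serverTransport, transportFactory, protocolFactory);

cout << "Start Match Server" << endl;

// Run the consumer (matching) on its own thread; server.serve() blocks the main thread

thread matching_thread(consume_task);

server.serve();

return 0;

}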

# Compile

g++ -c main.cpp

# Link; -pthread is needed for std::thread

g++ *.o -o main -lthrift -pthread

# Run

./main

# Push to git

# Don't upload .o files or the compiled executable

git add .

git restore --staged *.o

git restore --staged main

git commit -m "<message>"

git push

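Implementing save_client

Data storage

Defining the interface

# The interface file is already written:

./thrift/save.thrift

# src already exists in the target directory, so this step can be skipped:

# mkdir match_system/src -p

cd match_system/src

# Generate the code

thrift -r --gen cpp ../../thrift/save.thrift

# Rename the generated folder

mv gen-cpp/ save_client

# Delete the server skeleton: we only need the client, and its main() would clash with ours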

rm save_client/Save_server.skeleton.cpp

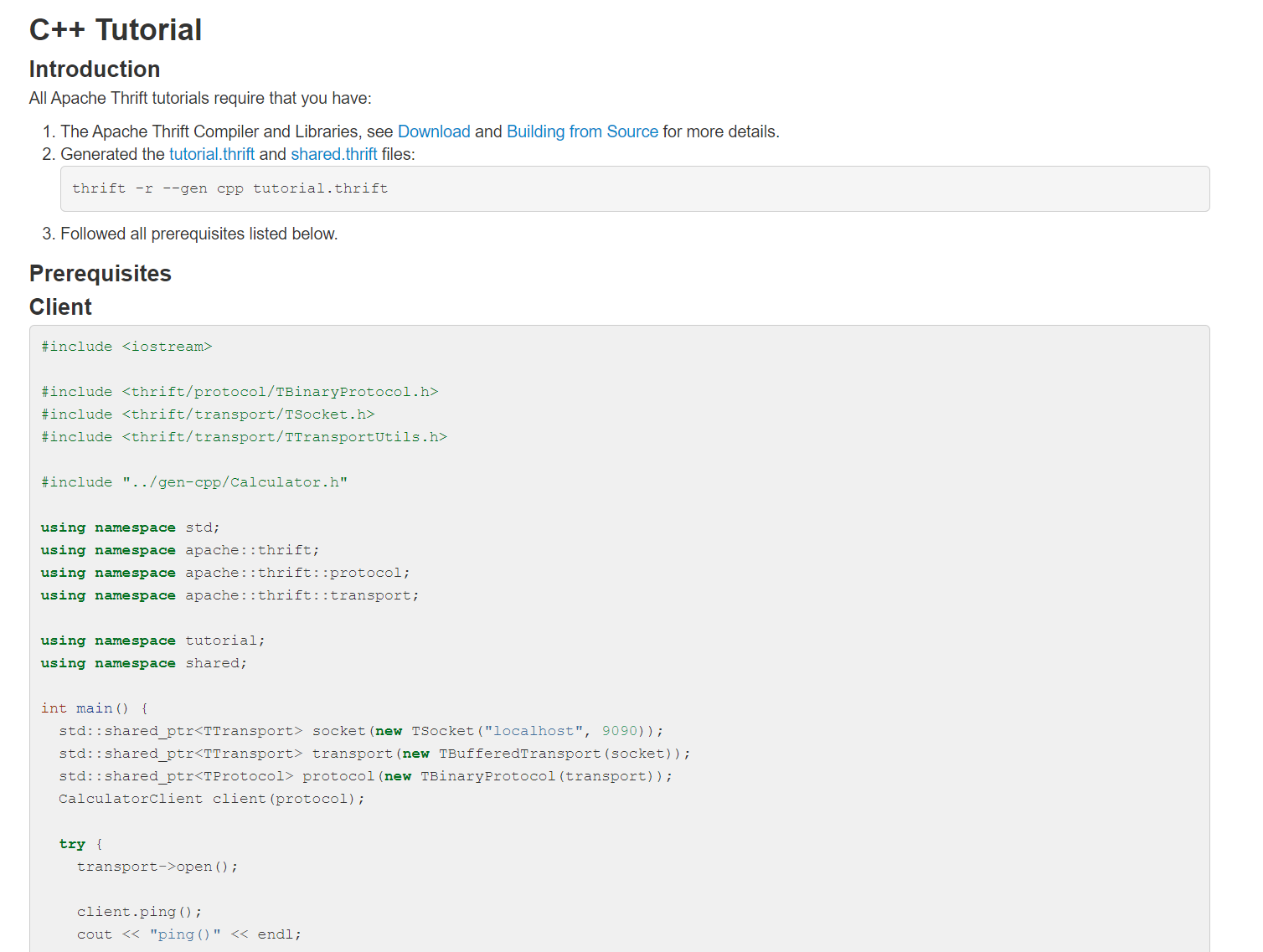

Integrating save_client into the match_system server

// Copy out the C++ client example code from the Thrift tutorial

---

#include <iostream>

#include <thrift/protocol/TBinaryProtocol.h>

#include <thrift/transport/TSocket.h>

#include <thrift/transport/TTransportUtils.h>

#include "../gen-cpp/Calculator.h"

using namespace std;

using namespace apache::thrift;

using namespace apache::thrift::protocol;

using namespace apache::thrift::transport;

using namespace tutorial;

using namespace shared;

int main() {

std::shared_ptr<TTransport> socket(new TSocket("localhost", 9090));

std::shared_ptr<TTransport> transport(new TBufferedTransport(socket));

std::shared_ptr<TProtocol> protocol(new TBinaryProtocol(transport));

CalculatorClient client(protocol);

try {

transport->open();

//-------------------------------------- tutorial code, delete --------------------

client.ping();

cout << "ping()" << endl;

cout << "1 + 1 = " << client.add(1, 1) << endl;

Work work;

work.op = Operation::DIVIDE;

work.num1 = 1;

work.num2 = 0;

try {

client.calculate(1, work);

cout << "Whoa? We can divide by zero!" << endl;

} catch (InvalidOperation& io) {

cout << "InvalidOperation: " << io.why << endl;

// or using generated operator<<: cout << io << endl;

// or by using std::exception native method what(): cout << io.what() << endl;

}

work.op = Operation::SUBTRACT;

work.num1 = 15;

work.num2 = 10;

int32_t diff = client.calculate(1, work);

cout << "15 - 10 = " << diff << endl;

// Note that C++ uses return by reference for complex types to avoid

// costly copy construction

SharedStruct ss;

client.getStruct(ss, 1);

cout << "Received log: " << ss << endl;

//-------------------------------------- end of tutorial code to delete --------------------

transport->close();

} catch (TException& tx) {

cout << "ERROR: " << tx.what() << endl;

}

}

---

// Paste the rest of it into main.cpp

// Put the actual save call into save_result

// Add the Save header and the save_service namespace

---

// This autogenerated skeleton file illustrates how to build a server.

// You should copy it to another filename to avoid overwriting it.

#include "match_server/Match.h"

#include "save_client/Save.h"

#include <thrift/protocol/TBinaryProtocol.h>

#include <thrift/server/TSimpleServer.h>

#include <thrift/transport/TServerSocket.h>

#include <thrift/transport/TBufferTransports.h>

#include <thrift/transport/TTransportUtils.h>

#include <thrift/transport/TSocket.h>

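#include <iostream>

#include <thread>

#include <mutex>

#include <condition_variable>

#include <queue>

#include <vector>

using namespace ::apache::thrift;

using namespace ::apache::thrift::protocol;

using namespace ::apache::thrift::transport;

using namespace ::apache::thrift::server;

using namespace ::match_service;

using namespace ::save_service;

using namespace std;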

struct Task

{

User user;

string type;

};

struct MessageQueue

{

queue<Task> q;

mutex m;

condition_variable cv;

}message_queue;

class Pool

{

public:

void save_result(int a, int b)

{

printf("Match Result: %d %d\n", a, b);

std::shared_ptr<TTransport> socket(new TSocket("123.57.47.211", 9090));

std::shared_ptr<TTransport> transport(new TBufferedTransport(socket));

std::shared_ptr<TProtocol> protocol(new TBinaryProtocol(transport));

SaveClient client(protocol);

try {

transport->open();

int res = client.save_data("<myserver username>", "<first 8 characters of your password's md5>", a, b);

if (!res) puts("success");

else puts("failed");

transport->close();

} catch (TException& tx) {

cout << "ERROR: " << tx.what() << endl;

}

}

void match()

{

while (users.size() > 1)

{

auto a = users[0], b = users[1];

users.erase(users.begin());

users.erase(users.begin());

save_result(a.id, b.id);

}

}

void add(User user)

{

users.push_back(user);

}

void remove(User user)

{

for (uint32_t i = 0; i < users.size(); i ++ )

if (users[i].id == user.id)

{

users.erase(users.begin() + i);

break;

}

}

private:

vector<User> users;

}pool;

---

# Compile

g++ -c save_client/*.cpp

g++ -c main.cpp

# Link

g++ *.o -o main -lthrift -pthread

# Run

./main

# Push to git

# Don't upload .o files or the compiled executable

git add .

git restore --staged *.o

git restore --staged main

git commit -m "<message>"

git push

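Feature improvements (server main.cpp)

Simple matching

When the message queue is empty, run matching once per second, and only pair players whose scores differ by at most 50.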
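One possible alternative to `sleep(1)` (a swapped-in technique, not what these notes use) is `std::condition_variable::wait_for` with a timeout: the consumer wakes as soon as a task is pushed, or after the timeout if nothing arrives. A sketch, assuming a hypothetical `TimedQueue` holding ints in place of `Task`:

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <mutex>
#include <queue>

// Hypothetical queue: ints stand in for Task.
struct TimedQueue {
    std::queue<int> q;
    std::mutex m;
    std::condition_variable cv;
};

// Blocks until the queue is non-empty or `timeout` expires.
// Returns true and pops the front into `out` if a task arrived,
// false on timeout (the caller would then run its periodic pool.match()).
bool pop_or_timeout(TimedQueue& tq, std::chrono::milliseconds timeout, int& out) {
    std::unique_lock<std::mutex> lck(tq.m);
    // The predicate overload returns the predicate's value when it stops waiting.
    if (!tq.cv.wait_for(lck, timeout, [&] { return !tq.q.empty(); })) {
        return false;  // timed out with an empty queue
    }
    out = tq.q.front();
    tq.q.pop();
    return true;
}
```

In a loop like consume_task, a `false` return would trigger the periodic `pool.match()` and a `true` return would handle the popped task. Note that rounds then no longer last exactly one second, which matters if you later count waiting rounds with `wt`.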

// Methods changed in the match_system server

// Original consume_task

void consume_task()

{

while (true)

{

unique_lock<mutex> lck(message_queue.m);

if (message_queue.q.empty())

{

message_queue.cv.wait(lck);

}

else

{

auto task = message_queue.q.front();

message_queue.q.pop();

lck.unlock();

if (task.type == "add") pool.add(task.user);

else if (task.type == "remove") pool.remove(task.user);

pool.match();

}

}

}

// New consume_task: when the message queue is empty, match once per second (sleep() comes from <unistd.h>)

void consume_task()

{

while (true)

{

unique_lock<mutex> lck(message_queue.m);

if (message_queue.q.empty())

{

// Match once per second instead of only when woken up

// message_queue.cv.wait(lck);

lck.unlock();

pool.match(); // check whether any players are within 50 points of each other

sleep(1);

}

else

{

// Only runs when there is a task: a new user to add or a user to remove

auto task = message_queue.q.front();

message_queue.q.pop();

lck.unlock();

if (task.type == "add") pool.add(task.user);

else if (task.type == "remove") pool.remove(task.user);

pool.match();

}

}

}

// Original match

void match()

{

while (users.size() > 1)

{

auto a = users[0], b = users[1];

users.erase(users.begin());

users.erase(users.begin());

save_result(a.id, b.id);

}

}

// New match: only pair players within 50 points (sort() comes from <algorithm>)

void match()

{

// Keep going while at least two players are waiting

while (users.size() > 1)

{

// Sort by score, ascending

sort(users.begin(), users.end(), [&](const User& a, const User& b){

return a.score < b.score;

});

bool flag = true; // stays true if no pair is found, which ends the loop (avoids an infinite loop)

for (uint32_t i = 1; i < users.size(); i++ )

{

auto a = users[i - 1], b = users[i];

if (b.score - a.score <= 50)

{

// Erase the half-open range [i - 1, i + 1), i.e. both matched players

users.erase(users.begin() + i - 1, users.begin() + i + 1);

save_result(a.id, b.id);

flag = false; // one pair matched

break;

}

}

if (flag) break; // no pair found: stop

}

}
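A self-contained sketch of the new match() logic, assuming a hypothetical `Player` struct in place of the Thrift-generated `User` and returning the matched pairs instead of calling `save_result`. Unlike the notes, it sorts once before the loop: erasing two adjacent elements keeps the rest sorted, so re-sorting on every pass is not needed.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical stand-in for the generated User (only the fields used here).
struct Player {
    int id;
    int score;
};

// Pairs adjacent players (by score) whose scores differ by at most max_diff.
// Returns the matched (id, id) pairs; unmatched players stay in `players`.
std::vector<std::pair<int, int>> match_within(std::vector<Player>& players, int max_diff) {
    std::vector<std::pair<int, int>> result;
    std::sort(players.begin(), players.end(),
              [](const Player& a, const Player& b) { return a.score < b.score; });
    bool matched = true;
    while (matched && players.size() > 1) {
        matched = false;
        for (std::size_t i = 1; i < players.size(); ++i) {
            if (players[i].score - players[i - 1].score <= max_diff) {
                result.emplace_back(players[i - 1].id, players[i].id);
                // Erase the half-open range [i - 1, i + 1): both matched players.
                players.erase(players.begin() + (i - 1), players.begin() + (i + 1));
                matched = true;
                break;
            }
        }
    }
    return result;
}
```

With scores 1500, 1600, 1530 and 2000, only the 1500/1530 pair is within 50, so the other two players stay in the pool for a later round.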

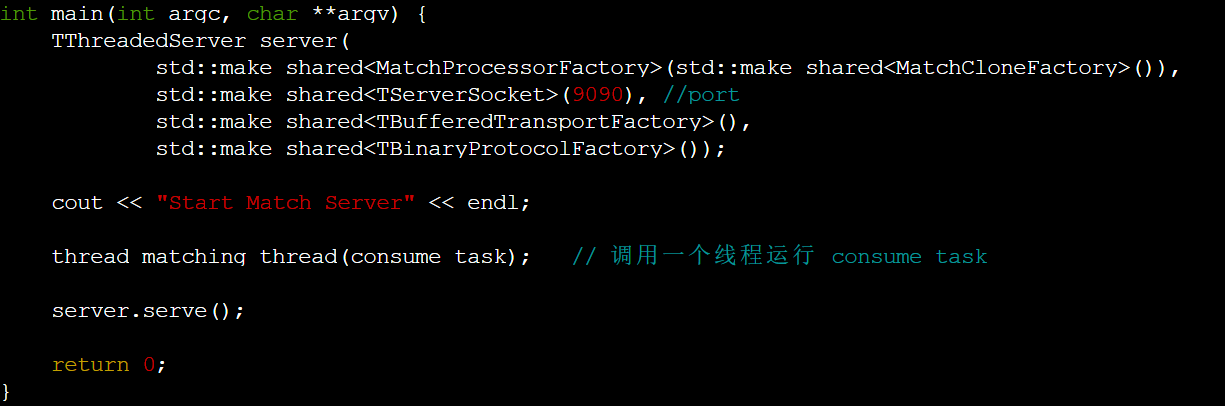

Multithreaded server

Use a multithreaded server to handle more concurrent requests; see the C++ tutorial:

https://thrift.apache.org/tutorial/cpp.html

// Original code

#include "match_server/Match.h"

#include "save_client/Save.h"

#include <thrift/protocol/TBinaryProtocol.h>

#include <thrift/server/TSimpleServer.h>

#include <thrift/transport/TServerSocket.h>

#include <thrift/transport/TBufferTransports.h>

#include <thrift/transport/TTransportUtils.h>

#include <thrift/transport/TSocket.h>

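#include <iostream>

#include <thread>

#include <mutex>

#include <condition_variable>

#include <queue>

#include <vector>

#include <algorithm>

#include <unistd.h>

using namespace ::apache::thrift;

using namespace ::apache::thrift::protocol;

using namespace ::apache::thrift::transport;

using namespace ::apache::thrift::server;

using namespace ::match_service;

using namespace ::save_service;

using namespace std;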

struct Task

{

User user;

string type;

};

struct MessageQueue

{

queue<Task> q;

mutex m;

condition_variable cv;

}message_queue;

class Pool

{

public:

void save_result(int a, int b)

{

printf("Match Result: %d %d\n", a, b);

std::shared_ptr<TTransport> socket(new TSocket("123.57.47.211", 9090));

std::shared_ptr<TTransport> transport(new TBufferedTransport(socket));

std::shared_ptr<TProtocol> protocol(new TBinaryProtocol(transport));

SaveClient client(protocol);

try {

transport->open();

int res = client.save_data("<myserver username>", "<first 8 characters of your password's md5>", a, b);

if (!res) puts("success");

else puts("failed");

transport->close();

} catch (TException& tx) {

cout << "ERROR: " << tx.what() << endl;

}

}

void match()

{

// Keep going while at least two players are waiting

while (users.size() > 1)

{

// Sort by score, ascending

sort(users.begin(), users.end(), [&](const User& a, const User& b){

return a.score < b.score;

});

bool flag = true; // stays true if no pair is found, which ends the loop (avoids an infinite loop)

for (uint32_t i = 1; i < users.size(); i++ )

{

auto a = users[i - 1], b = users[i];

if (b.score - a.score <= 50)

{

// Erase the half-open range [i - 1, i + 1), i.e. both matched players

users.erase(users.begin() + i - 1, users.begin() + i + 1);

save_result(a.id, b.id);

flag = false; // one pair matched

break;

}

}

if (flag) break; // no pair found: stop

}

}

void add(User user)

{

users.push_back(user);

}

void remove(User user)

{

for (uint32_t i = 0; i < users.size(); i ++ )

if (users[i].id == user.id)

{

users.erase(users.begin() + i);

break;

}

}

private:

vector<User> users;

}pool;

void consume_task()

{

while (true)

{

unique_lock<mutex> lck(message_queue.m);

if (message_queue.q.empty())

{

// Match once per second instead of only when woken up

// message_queue.cv.wait(lck);

lck.unlock();

pool.match(); // check whether any players are within 50 points of each other

sleep(1);

}

else

{

// Only runs when there is a task: a new user to add or a user to remove

auto task = message_queue.q.front();

message_queue.q.pop();

lck.unlock();

if (task.type == "add") pool.add(task.user);

else if (task.type == "remove") pool.remove(task.user);

pool.match();

}

}

}

class MatchHandler : virtual public MatchIf {

public:

MatchHandler() {

// Your initialization goes here

}

int32_t add_user(const User& user, const string& info){

// Your implementation goes here

printf("add_user\n");

unique_lock<mutex> lck(message_queue.m);

message_queue.q.push({user, "add"});

message_queue.cv.notify_all();

return 0;

}

int32_t remove_user(const User& user, const string& info){

// Your implementation goes here

printf("remove_user\n");

unique_lock<mutex> lck(message_queue.m);

message_queue.q.push({user, "remove"});

message_queue.cv.notify_all();

return 0;

}

};

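int main(int argc, char **argv) {

int port = 9090;

::std::shared_ptr<MatchHandler> handler(new MatchHandler());

::std::shared_ptr<TProcessor> processor(new MatchProcessor(handler));

::std::shared_ptr<TServerTransport> serverTransport(new TServerSocket(port));

::std::shared_ptr<TTransportFactory> transportFactory(new TBufferedTransportFactory());

::std::shared_ptr<TProtocolFactory> protocolFactory(new TBinaryProtocolFactory());

TSimpleServer server(processor, serverTransport, transportFactory, protocolFactory);

cout << "Start Match Server" << endl;

thread matching_thread(consume_task);

server.serve();

return 0;

}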

// Tutorial code

#include <thrift/concurrency/ThreadManager.h>

#include <thrift/concurrency/ThreadFactory.h>

#include <thrift/protocol/TBinaryProtocol.h>

#include <thrift/server/TSimpleServer.h>

#include <thrift/server/TThreadPoolServer.h>

#include <thrift/server/TThreadedServer.h>

#include <thrift/transport/TServerSocket.h>

#include <thrift/transport/TSocket.h>

#include <thrift/transport/TTransportUtils.h>

#include <thrift/TToString.h>

#include <iostream>

#include <stdexcept>

#include <sstream>

class CalculatorCloneFactory : virtual public CalculatorIfFactory {

public:

~CalculatorCloneFactory() override = default;

CalculatorIf* getHandler(const ::apache::thrift::TConnectionInfo& connInfo) override

{

std::shared_ptr<TSocket> sock = std::dynamic_pointer_cast<TSocket>(connInfo.transport);

cout << "Incoming connection\n";

cout << "\tSocketInfo: " << sock->getSocketInfo() << "\n";

cout << "\tPeerHost: " << sock->getPeerHost() << "\n";

cout << "\tPeerAddress: " << sock->getPeerAddress() << "\n";

cout << "\tPeerPort: " << sock->getPeerPort() << "\n";

return new CalculatorHandler;

}

void releaseHandler( ::shared::SharedServiceIf* handler) override {

delete handler;

}

};

int main() {

TThreadedServer server(

std::make_shared<CalculatorProcessorFactory>(std::make_shared<CalculatorCloneFactory>()),

std::make_shared<TServerSocket>(9090), //port

std::make_shared<TBufferedTransportFactory>(),

std::make_shared<TBinaryProtocolFactory>());

}

// Replace Calculator with Match (e.g. with the vim command below), copy over the headers we don't have yet, and fill in main

// :1,$s/Calculator/Match/g

#include <thrift/concurrency/ThreadManager.h>

#include <thrift/concurrency/ThreadFactory.h>

#include <thrift/server/TThreadPoolServer.h>

#include <thrift/server/TThreadedServer.h>

#include <thrift/TToString.h>

#include <sstream>

class MatchCloneFactory : virtual public MatchIfFactory {

public:

~MatchCloneFactory() override = default;

MatchIf* getHandler(const ::apache::thrift::TConnectionInfo& connInfo) override

{

std::shared_ptr<TSocket> sock = std::dynamic_pointer_cast<TSocket>(connInfo.transport);

cout << "Incoming connection\n";

cout << "\tSocketInfo: " << sock->getSocketInfo() << "\n";

cout << "\tPeerHost: " << sock->getPeerHost() << "\n";

cout << "\tPeerAddress: " << sock->getPeerAddress() << "\n";

cout << "\tPeerPort: " << sock->getPeerPort() << "\n";

return new MatchHandler;

}

// Change ::shared::SharedServiceIf* to MatchIf*

void releaseHandler(MatchIf* handler) override {

delete handler;

}

};

int main() {

TThreadedServer server(

std::make_shared<MatchProcessorFactory>(std::make_shared<MatchCloneFactory>()),

std::make_shared<TServerSocket>(9090), //port

std::make_shared<TBufferedTransportFactory>(),

std::make_shared<TBinaryProtocolFactory>());

cout << "Start Match Server" << endl;

thread matching_thread(consume_task);

server.serve();

return 0;

}
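With `TThreadedServer`, each client connection is served on its own thread, so several `add_user`/`remove_user` calls can push to `message_queue` at the same time. The sketch below simulates that with plain `std::thread` instead of Thrift (all names are hypothetical): several threads push into one queue under a mutex, and no push is lost.

```cpp
#include <cassert>
#include <cstddef>
#include <mutex>
#include <queue>
#include <thread>
#include <vector>

// Hypothetical stand-in for message_queue (no condition variable needed here).
struct SharedQueue {
    std::queue<int> q;
    std::mutex m;
};

// Simulates `threads` connections, each calling a handler `per_thread` times.
// Every push takes the mutex, as add_user/remove_user do in main.cpp.
std::size_t simulate_handlers(int threads, int per_thread) {
    SharedQueue sq;
    std::vector<std::thread> workers;
    for (int t = 0; t < threads; ++t) {
        workers.emplace_back([&sq, t, per_thread] {
            for (int k = 0; k < per_thread; ++k) {
                std::lock_guard<std::mutex> lck(sq.m);
                sq.q.push(t * per_thread + k);
            }
        });
    }
    for (auto& w : workers) w.join();  // wait for every "connection" to finish
    return sq.q.size();
}
```

Without the `lock_guard`, concurrent `push` calls on a `std::queue` would be a data race (undefined behavior), and the final count could come out wrong.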

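Classic matching

The matching range widens the longer a player waits: every second of waiting adds 50 points to the score gap that player accepts.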
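The rule in check_match() below can be written as a small pure function. A sketch with hypothetical parameter names: both players must accept the gap, which is the same as requiring the gap to be at most 50 × min(wt[i], wt[j]).

```cpp
#include <cassert>
#include <cstdlib>

// Mirrors check_match(): each waiting round widens a player's tolerance by
// `step` points, and both players must accept the score gap.
// wait_a / wait_b are the rounds each player has waited (wt[i], wt[j]).
bool within_window(int score_a, int wait_a, int score_b, int wait_b, int step = 50) {
    int gap = std::abs(score_a - score_b);
    return gap <= wait_a * step && gap <= wait_b * step;
}
```

For example, players at 1500 and 1580 (gap 80) cannot match after one round (limit 50) but can after two (limit 100), provided both have waited that long.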

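// Methods changed in the match_system server

// 1. Store each player's waiting time in the pool, and update add and remove

// 2. Change match, and make consume_task() match (and sleep) only when the message queue is empty

// 3. Add a check_match method that widens the matching range with waiting time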

class Pool

{

public:

// 2. Modified match

void match()

{

// Every match() call is followed by sleep(1), i.e. one more second of waiting

// Because of the sleep, counting rounds is enough: waiting time = rounds (wt) × sleep time

for (uint32_t i = 0; i < wt.size(); i ++ )

wt[i]++ ; // one more second waited

// Look for a match

while (users.size() > 1)

{

bool flag = true; // avoids an infinite loop

for (uint32_t i = 0; i < users.size(); i ++ )

{

// Don't sort here: sorting users would break their alignment with wt

// Compare every pair instead

for (uint32_t j = i + 1; j < users.size(); j ++ )

{

// 3. check_match decides whether the two scores are close enough

if (check_match(i, j))

{

auto a = users[i], b = users[j];

// Erase the later index first: erasing i first would shift the element at j down by one

users.erase(users.begin() + j);

users.erase(users.begin() + i);

wt.erase(wt.begin() + j);

wt.erase(wt.begin() + i);

save_result(a.id, b.id);

flag = false;

break;

}

}

if (!flag) break; // already matched: leave the outer loop

}

if (flag) break; // no match this round

}

}

// 3. New check_match: the allowed score gap grows with waiting time

bool check_match(uint32_t i, uint32_t j)

{

auto a = users[i], b = users[j];

int dt = abs(a.score - b.score);

int a_max_dif = wt[i] * 50;

int b_max_dif = wt[j] * 50;

return dt <= a_max_dif && dt <= b_max_dif;

}

void add(User user)

{

users.push_back(user);

wt.push_back(0); // new players have not waited yet

}

void remove(User user)

{

for (uint32_t i = 0; i < users.size(); i ++ )

if (users[i].id == user.id)

{

users.erase(users.begin() + i);

wt.erase(wt.begin() + i); // remove the waiting time as well

break;

}

}

private:

vector<User> users;

// 1. Waiting time of each player in the pool, in seconds

vector<int> wt;

}pool;

class MatchHandler : virtual public MatchIf {

public:

MatchHandler() {

// Your initialization goes here

}

int32_t add_user(const User& user, const string& info){

// Your implementation goes here

printf("add_user\n");

unique_lock<mutex> lck(message_queue.m);

message_queue.q.push({user, "add"});

message_queue.cv.notify_all();

return 0;

}

int32_t remove_user(const User& user, const string& info){

// Your implementation goes here

printf("remove_user\n");

unique_lock<mutex> lck(message_queue.m);

message_queue.q.push({user, "remove"});

message_queue.cv.notify_all();

return 0;

}

};

// 2. consume_task() matches (and sleeps) only when the message queue is empty, so each round of waiting is 1 s

void consume_task()

{

while (true)

{

unique_lock<mutex> lck(message_queue.m);

if (message_queue.q.empty())

{

// Match once per second instead of only when woken up

// message_queue.cv.wait(lck);

lck.unlock();

pool.match();

sleep(1);

}

else

{

// Only runs when there is a task: a new user to add or a user to remove

auto task = message_queue.q.front();

message_queue.q.pop();

lck.unlock();

if (task.type == "add") pool.add(task.user);

else if (task.type == "remove") pool.remove(task.user);

// pool.match();

}

}

}

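int main(int argc, char **argv) {

TThreadedServer server(

std::make_shared<MatchProcessorFactory>(std::make_shared<MatchCloneFactory>()),

std::make_shared<TServerSocket>(9090), //port

std::make_shared<TBufferedTransportFactory>(),

std::make_shared<TBinaryProtocolFactory>());

cout << "Start Match Server" << endl;

thread matching_thread(consume_task);

server.serve();

return 0;

}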
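The "erase the later index first" rule in match() can be checked in isolation. A small sketch with a hypothetical helper `erase_pair`; in the pool it would be applied to both `users` and `wt` in the same order so the two vectors stay aligned.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Removes the elements at indices i and j (requires i < j < v.size()).
// Erasing j first keeps index i valid; erasing i first would shift
// the element originally at j down to j - 1.
template <typename T>
void erase_pair(std::vector<T>& v, std::size_t i, std::size_t j) {
    assert(i < j && j < v.size());
    v.erase(v.begin() + j);
    v.erase(v.begin() + i);
}
```

For `{10, 20, 30, 40}`, `erase_pair(v, 0, 2)` removes 30 and then 10, leaving `{20, 40}`.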

Complete code

main.cpp

Contains the match server and the save client

// This autogenerated skeleton file illustrates how to build a server.

// You should copy it to another filename to avoid overwriting it.

#include "match_server/Match.h"

#include "save_client/Save.h"

#include <thrift/concurrency/ThreadManager.h>

#include <thrift/concurrency/ThreadFactory.h>

#include <thrift/protocol/TBinaryProtocol.h>

#include <thrift/server/TSimpleServer.h>

#include <thrift/server/TThreadedServer.h>

#include <thrift/transport/TServerSocket.h>

#include <thrift/transport/TBufferTransports.h>

#include <thrift/transport/TTransportUtils.h>

#include <thrift/transport/TSocket.h>

#include <thrift/TToString.h>

#include <iostream>

#include <thread>

#include <mutex>

#include <condition_variable>

#include <queue>

#include <vector>

#include <unistd.h>

using namespace ::apache::thrift;

using namespace ::apache::thrift::protocol;

using namespace ::apache::thrift::transport;

using namespace ::apache::thrift::server;

using namespace ::match_service;

using namespace ::save_service;

using namespace std;

struct Task

{

User user;

string type;

};

struct MessageQueue

{

queue<Task> q;

mutex m;

condition_variable cv;

}message_queue;

class Pool

{

public:

void save_result(int a, int b)

{

printf("Match Result: %d %d\n", a, b);

std::shared_ptr<TTransport> socket(new TSocket("123.57.47.211", 9090));

std::shared_ptr<TTransport> transport(new TBufferedTransport(socket));

std::shared_ptr<TProtocol> protocol(new TBinaryProtocol(transport));

SaveClient client(protocol);

try {

transport->open();

int res = client.save_data("acs_0", "6e822f5b", a, b);

if (!res) puts("success");

else puts("failed");

transport->close();

} catch (TException& tx) {

cout << "ERROR: " << tx.what() << endl;

}

}

bool check_match(uint32_t i, uint32_t j)

{

auto a = users[i], b = users[j];

int dt = abs(a.score - b.score);

int a_max_dif = wt[i] * 50;

int b_max_dif = wt[j] * 50;

return dt <= a_max_dif && dt <= b_max_dif;

}

void match()

{

for (uint32_t i = 0; i < wt.size(); i ++ )

wt[i] ++ ; // one more second waited

while (users.size() > 1)

{

bool flag = true;

for (uint32_t i = 0; i < users.size(); i ++ )

{

for (uint32_t j = i + 1; j < users.size(); j ++ )

{

if (check_match(i, j))

{

auto a = users[i], b = users[j];

users.erase(users.begin() + j);

users.erase(users.begin() + i);

wt.erase(wt.begin() + j);

wt.erase(wt.begin() + i);

save_result(a.id, b.id);

flag = false;

break;

}

}

if (!flag) break;

}

if (flag) break;

}

}

void add(User user)

{

users.push_back(user);

wt.push_back(0);

}

void remove(User user)

{

for (uint32_t i = 0; i < users.size(); i ++ )

if (users[i].id == user.id)

{

users.erase(users.begin() + i);

wt.erase(wt.begin() + i);

break;

}

}

private:

vector<User> users;

vector<int> wt; // waiting time, in seconds

}pool;

class MatchHandler : virtual public MatchIf {

public:

MatchHandler() {

// Your initialization goes here

}

int32_t add_user(const User& user, const std::string& info) {

// Your implementation goes here

printf("add_user\n");

unique_lock<mutex> lck(message_queue.m);

message_queue.q.push({user, "add"});

message_queue.cv.notify_all();

return 0;

}

int32_t remove_user(const User& user, const std::string& info) {

// Your implementation goes here

printf("remove_user\n");

unique_lock<mutex> lck(message_queue.m);

message_queue.q.push({user, "remove"});

message_queue.cv.notify_all();

return 0;

}

};

class MatchCloneFactory : virtual public MatchIfFactory {

public:

~MatchCloneFactory() override = default;

MatchIf* getHandler(const ::apache::thrift::TConnectionInfo& connInfo) override

{

std::shared_ptr<TSocket> sock = std::dynamic_pointer_cast<TSocket>(connInfo.transport);

/*cout << "Incoming connection\n";

cout << "\tSocketInfo: " << sock->getSocketInfo() << "\n";

cout << "\tPeerHost: " << sock->getPeerHost() << "\n";

cout << "\tPeerAddress: " << sock->getPeerAddress() << "\n";

cout << "\tPeerPort: " << sock->getPeerPort() << "\n";*/

return new MatchHandler;

}

void releaseHandler(MatchIf* handler) override {

delete handler;

}

};

void consume_task()

{

while (true)

{

unique_lock<mutex> lck(message_queue.m);

if (message_queue.q.empty())

{

// message_queue.cv.wait(lck);

lck.unlock();

pool.match();

sleep(1);

}

else

{

auto task = message_queue.q.front();

message_queue.q.pop();

lck.unlock();

if (task.type == "add") pool.add(task.user);

else if (task.type == "remove") pool.remove(task.user);

}

}

}

int main(int argc, char **argv) {

TThreadedServer server(

std::make_shared<MatchProcessorFactory>(std::make_shared<MatchCloneFactory>()),

std::make_shared<TServerSocket>(9090), //port

std::make_shared<TBufferedTransportFactory>(),

std::make_shared<TBinaryProtocolFactory>());

cout << "Start Match Server" << endl;

thread matching_thread(consume_task);

server.serve();

return 0;

}

client.py

Contains the match client

from match_client.match import Match

from match_client.match.ttypes import User

from thrift import Thrift

from thrift.transport import TSocket

from thrift.transport import TTransport

from thrift.protocol import TBinaryProtocol

from sys import stdin

def operate(op, user_id, username, score):

# Make socket

transport = TSocket.TSocket('127.0.0.1', 9090)

# Buffering is critical. Raw sockets are very slow

transport = TTransport.TBufferedTransport(transport)

# Wrap in a protocol

protocol = TBinaryProtocol.TBinaryProtocol(transport)

# Create a client to use the protocol encoder

client = Match.Client(protocol)

# Connect!

transport.open()

user = User(user_id, username, score)

if op == "add":

client.add_user(user, "")

elif op == "remove":

client.remove_user(user, "")

# Close!

transport.close()

def main():

for line in stdin:

op, user_id, username, score = line.split()  # split on any whitespace, dropping the trailing newline

operate(op, int(user_id), username, int(score))

if __name__ == "__main__":

main()

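Thrift: related operating-system concepts

Relationship between threads and processes (roughly):

- A running program is a process, and the program can start multiple threads.

- As shown in the figure below, running main.cpp is one process. Inside it, matching_thread(consume_task) and server.serve() each correspond to a thread (server.serve() runs on the main thread).

How server.serve() works: TThreadedServer starts a new thread for each client connection and calls the MatchHandler functions on that thread. Because client.py opens a new connection for every operation, in practice each request is handled on its own thread.

- A program is a process, and a process has at least one thread. With only one thread, a second task must wait until the first one finishes; with multiple threads, other tasks can run while the main thread is busy, without waiting. Creating a thread is relatively cheap and can noticeably improve CPU utilization. In this project, entering user information and matching users must happen at the same time: matching should not start only after all input is finished, and input should not be blocked while matching runs. That is why we need multithreading.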

#include <thread>

// How to start a thread in C++:

thread matching_thread(consume_task); // start a new thread that runs consume_task
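Here is a minimal sketch, separate from the project, of what `std::thread` does. The worker thread starts running as soon as it is constructed, and the calling thread keeps working in parallel. `join()` then waits for the worker to finish. The names `sum_range` and `parallel_sum` are hypothetical.

```cpp
#include <cstddef>
#include <functional>
#include <thread>
#include <vector>

// Sums data[begin, end) into `out`; can run on any thread.
void sum_range(const std::vector<int>& data, std::size_t begin,
               std::size_t end, long long& out) {
    long long total = 0;
    for (std::size_t i = begin; i < end; ++i) total += data[i];
    out = total;
}

// Splits the work between the calling thread and one extra std::thread.
long long parallel_sum(const std::vector<int>& data) {
    std::size_t mid = data.size() / 2;
    long long left = 0, right = 0;
    // The new thread starts summing the first half immediately.
    // std::cref/std::ref pass references; std::thread copies arguments otherwise.
    std::thread worker(sum_range, std::cref(data), std::size_t{0}, mid, std::ref(left));
    // Meanwhile the calling thread sums the second half itself.
    sum_range(data, mid, data.size(), right);
    worker.join();  // wait for the worker before reading `left`
    return left + right;
}
```

In main.cpp the matching thread is never joined because `server.serve()` blocks for the lifetime of the process. A short-lived thread like the one above must always be joined (or detached): destroying a still-joinable `std::thread` calls `std::terminate`.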

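Producer-consumer model:

- Suppose there are two threads, A and B. A produces data and puts it into a buffer (in this project, user information entered at the terminal). B takes data out of the buffer and processes it (in this project, taking users out and matching them). A is the producer and B is the consumer.

- In multithreaded programs, producers and consumers rarely run at the same speed. If the producer is much faster than the consumer, it must eventually wait for the consumer, because producing too much data would exhaust storage. If the consumer is faster, it spends much of its time waiting. A buffer placed between them absorbs the difference by storing what the producer has made, and this arrangement is the producer-consumer model.

- When the buffer is full, the producer goes to sleep and is woken once the consumer takes data out of the buffer. When the buffer is empty, the consumer goes to sleep until the producer adds data.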
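The following is a minimal sketch of the bounded buffer described above, assuming C++11 `std::mutex` and `std::condition_variable`. The names `BoundedBuffer` and `run_pipeline` are hypothetical and not part of the project. Two condition variables cover the two sleeping roles: producers wait on `not_full_`, and consumers wait on `not_empty_`.

```cpp
#include <condition_variable>
#include <cstddef>
#include <mutex>
#include <queue>
#include <thread>
#include <vector>

// Fixed-capacity buffer (capacity must be at least 1): producers sleep
// while it is full, consumers sleep while it is empty.
class BoundedBuffer {
public:
    explicit BoundedBuffer(std::size_t capacity) : capacity_(capacity) {}

    void put(int item) {
        std::unique_lock<std::mutex> lck(m_);
        // Producer sleeps until there is free space.
        not_full_.wait(lck, [this] { return q_.size() < capacity_; });
        q_.push(item);
        not_empty_.notify_one();  // wake one sleeping consumer
    }

    int take() {
        std::unique_lock<std::mutex> lck(m_);
        // Consumer sleeps until there is data.
        not_empty_.wait(lck, [this] { return !q_.empty(); });
        int item = q_.front();
        q_.pop();
        not_full_.notify_one();  // wake one sleeping producer
        return item;
    }

private:
    std::size_t capacity_;
    std::queue<int> q_;
    std::mutex m_;
    std::condition_variable not_full_, not_empty_;
};

// Runs one producer and one consumer over the buffer and returns the
// items in the order the consumer received them.
std::vector<int> run_pipeline(int n, std::size_t capacity) {
    BoundedBuffer buf(capacity);
    std::vector<int> received;  // touched only by the consumer until join()
    std::thread producer([&] { for (int i = 0; i < n; ++i) buf.put(i); });
    std::thread consumer([&] { for (int i = 0; i < n; ++i) received.push_back(buf.take()); });
    producer.join();
    consumer.join();
    return received;
}
```

The project's MessageQueue has no capacity limit, so its producers (the Thrift handler threads) never wait for space. The capacity check is what adds the "producer sleeps when the buffer is full" half of the model.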

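Message queue:

The buffer in the producer-consumer model is implemented with a queue. The producer enqueues each message it creates, and the consumer dequeues messages as it processes them. A message queue provides asynchronous communication: the sender and the receiver do not need to interact with the queue at the same time, because a message stays in the queue until the receiver takes it.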

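#include <queue>

#include <mutex>

#include <condition_variable>

#include <string>

struct Task

{

User user;

string type; // "add" or "remove"

};

// Message queue

struct MessageQueue

{

queue<Task> q; // the queue itself

mutex m; // mutex (a binary semaphore) protecting q

condition_variable cv; // condition variable used to block and wake threads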

}message_queue;
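The asynchronous behavior can be checked in isolation: messages pushed while no consumer is reading simply stay in the queue. This sketch reuses the Task/MessageQueue layout above. It adds a hypothetical stand-in `User` struct (the real one is generated by Thrift) and two hypothetical helpers, `submit` and `drain`, which mirror what `add_user` and `consume_task` do with the queue.

```cpp
#include <condition_variable>
#include <mutex>
#include <queue>
#include <string>
#include <vector>

// Hypothetical stand-in for the Thrift-generated User type.
struct User {
    int id;
    std::string name;
    int score;
};

struct Task {
    User user;
    std::string type;  // "add" or "remove"
};

struct MessageQueue {
    std::queue<Task> q;
    std::mutex m;
    std::condition_variable cv;
};

// Producer side: enqueue under the lock, then wake any waiting consumer.
void submit(MessageQueue& mq, const User& user, const std::string& type) {
    std::lock_guard<std::mutex> lck(mq.m);
    mq.q.push({user, type});
    mq.cv.notify_all();
}

// Consumer side: take every queued message, in FIFO order.
std::vector<Task> drain(MessageQueue& mq) {
    std::vector<Task> out;
    std::lock_guard<std::mutex> lck(mq.m);
    while (!mq.q.empty()) {
        out.push_back(mq.q.front());
        mq.q.pop();
    }
    return out;
}
```

The three messages submitted in the test below are still there when `drain` runs later, in the order they were sent.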

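Condition variable:

condition_variable: a condition variable is normally used together with a mutex. It lets threads in a multithreaded program wait until a particular event occurs.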
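Here is a minimal sketch of waiting for an event with `std::condition_variable`. The `Event` class and `wait_for_message` function are hypothetical. It uses the predicate overload `cv.wait(lock, pred)`, which behaves like `while (!pred()) cv.wait(lock);`. Because the condition is rechecked on every wakeup, this overload also handles spurious wakeups, where wait() returns without any notify.

```cpp
#include <condition_variable>
#include <mutex>
#include <string>
#include <thread>

// One-shot event: a waiting thread blocks until another thread signals it.
class Event {
public:
    void signal() {
        {
            std::lock_guard<std::mutex> lck(m_);
            ready_ = true;  // change the shared condition under the lock
        }
        cv_.notify_all();   // wake every thread blocked in wait()
    }

    void wait() {
        std::unique_lock<std::mutex> lck(m_);
        // Rechecks ready_ after every wakeup, so spurious wakeups are harmless.
        cv_.wait(lck, [this] { return ready_; });
    }

private:
    std::mutex m_;
    std::condition_variable cv_;
    bool ready_ = false;
};

// The worker waits for the event, then uses data written before signal().
std::string wait_for_message() {
    Event ev;
    std::string message;
    std::thread worker([&] {
        ev.wait();
        message += " received";
    });
    message = "hello";  // written before signal(), so visible to the worker
    ev.signal();
    worker.join();
    return message;
}
```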

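Locks:

A lock protects the integrity of operations on shared data by ensuring that only one thread can access the protected object at any moment.

Conceptually, a lock behaves like a semaphore: locking corresponds to the semaphore's P operation, and unlocking corresponds to its V operation.

Condition variables are built on top of locks: a condition variable is always used together with a mutex.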

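- mutex (a semaphore with S=1): one P operation (lock) and one V operation (unlock)

- Special case: S=1 makes the semaphore a mutex, meaning that at any moment it can be granted to only one thread.

- P and V are both atomic operations: while one of them is executing, no other thread can cut in. This is what makes operations on the shared variable atomic.

- S=10 means the semaphore can be handed out to 10 users at once. If 20 users want it, only 10 get it and the other 10 have to wait.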

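- The P operation does the following:

- Decrement S by 1;

- If S is still greater than or equal to 0 after the decrement, the process continues;

- If S is less than 0 after the decrement, the process is blocked and placed in the semaphore's waiting queue, and the scheduler switches to another process.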

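- The V operation does the following:

- Increment S by 1;

- If the result is greater than 0, the process continues;

- If the result is less than or equal to 0, one waiting process is released from the semaphore's waiting queue. The original process then either continues or the scheduler switches processes.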

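- Why do multithreaded programs need locks? Threads of the same process share one memory space, so two threads can read and modify the same variable concurrently and overwrite each other's updates. For example, if two threads both read a counter value of 5 and both write back 6, one increment is lost.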
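A counting semaphore can be sketched from a mutex and a condition variable. This is a simplified variant, not the textbook P/V above: `s_` never drops below 0. A thread whose P would make it negative waits on the condition variable instead, and the condition variable stands in for the semaphore's waiting queue. `Semaphore` and `max_concurrent` are hypothetical names. For real code, C++20 provides `std::counting_semaphore` with `acquire()`/`release()`.

```cpp
#include <atomic>
#include <chrono>
#include <condition_variable>
#include <mutex>
#include <thread>
#include <vector>

// Simplified counting semaphore: P waits while s_ == 0 instead of going negative.
class Semaphore {
public:
    explicit Semaphore(int s) : s_(s) {}

    void P() {
        std::unique_lock<std::mutex> lck(m_);
        cv_.wait(lck, [this] { return s_ > 0; });  // block while nothing is free
        --s_;
    }

    void V() {
        {
            std::lock_guard<std::mutex> lck(m_);
            ++s_;
        }
        cv_.notify_one();  // release one waiting thread, if any
    }

private:
    int s_;
    std::mutex m_;
    std::condition_variable cv_;
};

// Starts `users` threads competing for a semaphore initialised to `s` and
// returns the largest number that were between P() and V() at the same time.
int max_concurrent(int s, int users) {
    Semaphore sem(s);
    std::atomic<int> inside{0};
    std::atomic<int> peak{0};
    std::vector<std::thread> threads;
    for (int i = 0; i < users; ++i) {
        threads.emplace_back([&] {
            sem.P();
            int now = ++inside;
            int prev = peak.load();
            // Raise peak to `now` if it is larger (lock-free max update).
            while (now > prev && !peak.compare_exchange_weak(prev, now)) {}
            std::this_thread::sleep_for(std::chrono::milliseconds(5));
            --inside;
            sem.V();
        });
    }
    for (auto& t : threads) t.join();
    return peak.load();
}
```

With S=1, only one thread is ever inside, so the semaphore acts as a mutex. With S=10 and 20 threads, at most 10 are inside at once while the rest wait.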

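// Common operations

1. unique_lock<mutex> lck(message_queue.m); // lock before entering the critical section

// unique_lock<mutex>: declares a lock object that owns a mutex.

// lck(message_queue.m): the constructor takes the mutex message_queue.m and locks it, so constructing the object is itself the locking step.

// If the mutex is not held by another thread, execution continues. If another thread holds it, the current thread blocks here until the mutex is released.

2. message_queue.q.push({user, "add"}); // add a new message to the queue (use "remove" for removals)

3. message_queue.cv.notify_all(); // wake all threads blocked on cv

// notify_all() does not release the lock itself. lck is released when it goes out of scope (or on an explicit unlock()), and each woken thread must reacquire the mutex before its wait() returns.

4. message_queue.cv.wait(lck); // block the current thread (consume_task) on the condition variable

5. lck.unlock(); // release the mutex: other threads can now acquire it, but threads blocked in cv.wait() are not woken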

// Practical use

// Lock the message queue every time a user and operation are added, so that at any moment only one thread operates on the queue

class MatchHandler : virtual public MatchIf {

public:

MatchHandler() {

// Your initialization goes here

}

int32_t add_user(const User& user, const string& info){

// Your implementation goes here

printf("add_user\n");

unique_lock<mutex> lck(message_queue.m); // lock

message_queue.q.push({user, "add"});

message_queue.cv.notify_all(); // wake the consumer; lck unlocks when add_user returns

return 0;

}

int32_t remove_user(const User& user, const string& info){

// Your implementation goes here

printf("remove_user\n");

unique_lock<mutex> lck(message_queue.m); // lock

message_queue.q.push({user, "remove"});

message_queue.cv.notify_all(); // wake the consumer; lck unlocks when remove_user returns

return 0;

}

};

void consume_task()

{

while (true)

{

unique_lock<mutex> lck(message_queue.m); // accessing the critical section (the message queue): lock first

if (message_queue.q.empty())

{

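// Do not match until woken up

message_queue.cv.wait(lck);

// When this line executes, it releases lck (a V operation on the semaphore message_queue.m) and blocks the thread here until another thread wakes it with notify. In other words, wait() suspends a thread and releases the lock it holds, and notify wakes the suspended threads. Before wait() returns, the lock is reacquired, and the enclosing while (true) loop then checks again whether the queue is empty.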

}

else

{

auto task = message_queue.q.front();

message_queue.q.pop();

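lck.unlock(); // done with the critical section, unlock right away

// so that work which no longer needs the queue does not keep the lock held for a long time

if (task.type == "add") pool.add(task.user); // put the player into the pool

else if (task.type == "remove") pool.remove(task.user); // remove the player from the pool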

pool.match();

}

}

}

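- Summary of how wait() works

- wait() on a condition_variable blocks the current thread and unlocks the mutex. This is why the mutex is normally locked before wait is called, e.g. by constructing a unique_lock.

- Note that blocking and unlocking must be a single atomic step; otherwise a notify arriving between the two could be missed.

- The condition_variable then adds the current thread to its wait queue.

- The current thread now waits…

- Another thread calls notify on this condition_variable.

- The waiting thread is unblocked, relocks the mutex, and only then returns from wait().

- Finally the mutex is unlocked (when the lock goes out of scope or when unlock() is called explicitly).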

- Summary: whenever you access a shared variable (a critical resource), lock before accessing it and unlock afterwards!
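A shared counter shows why this rule matters. In this sketch (the function `locked_count` is hypothetical), several threads increment one variable. Each `++counter` happens while the mutex is held, so no update is lost and the result is exactly threads × iterations. Removing the lock would create a data race, which is undefined behavior in C++ and in practice typically loses increments, so that version is not demonstrated here.

```cpp
#include <mutex>
#include <thread>
#include <vector>

// `threads` threads each add 1 to a shared counter `iterations` times.
long long locked_count(int threads, int iterations) {
    long long counter = 0;  // shared variable (critical resource)
    std::mutex m;           // protects counter
    std::vector<std::thread> workers;
    for (int t = 0; t < threads; ++t) {
        workers.emplace_back([&] {
            for (int i = 0; i < iterations; ++i) {
                std::lock_guard<std::mutex> lck(m);  // lock before touching counter
                ++counter;                           // read-modify-write is now safe
            }                                        // lck unlocks at end of each iteration
        });
    }
    for (auto& w : workers) w.join();
    return counter;
}
```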