2018-2019-1 20189221 "Linux Kernel Principles and Analysis" Week 7 Assignment

Lab 6: Analyzing how the Linux kernel creates a new process

Code analysis

task_struct:

struct task_struct {
    volatile long state;         /* process state: -1 unrunnable, 0 runnable, >0 stopped */
    void *stack;                 /* the process's kernel stack */
    pid_t pid;                   /* process identifier */
    unsigned int rt_priority;    /* real-time priority */
    unsigned int policy;         /* scheduling policy */
    struct files_struct *files;  /* open files */
    …
};

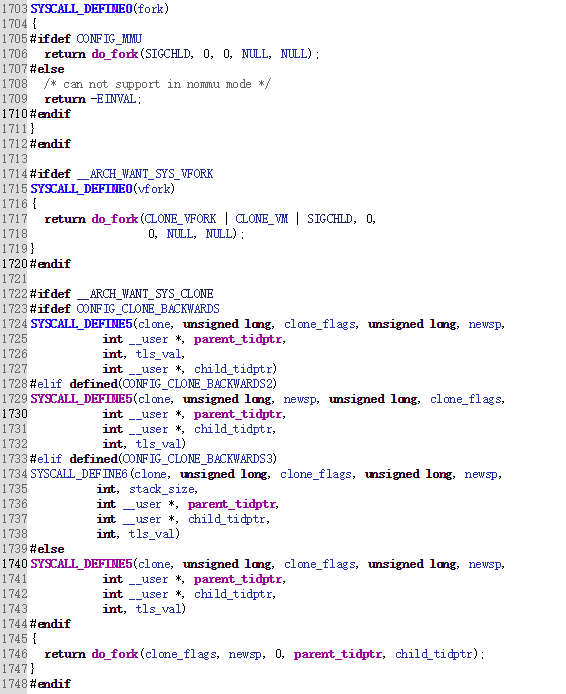

fork, vfork, and clone can all create a new process, and all three do so by calling do_fork.
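To make the role of the flags passed down to do_fork more concrete, here is a minimal user-space sketch (Linux only) that uses the glibc clone() wrapper. It creates a child either with or without CLONE_VM and reports whether a write made by the child is visible to the parent. The names shared_counter, child_fn, and counter_after_clone are illustrative and do not come from the kernel source.

```c
#ifndef _GNU_SOURCE
#define _GNU_SOURCE             /* needed for clone() and CLONE_VM in <sched.h> */
#endif
#include <sched.h>
#include <signal.h>
#include <stdlib.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Written by the child; read by the parent after the child has exited. */
static volatile int shared_counter;

/* Entry point of the child: modify the variable and exit with status 0. */
static int child_fn(void *arg)
{
    (void)arg;
    shared_counter = 1;
    return 0;
}

/*
 * Create one child with clone(). If share_vm is non-zero the child shares the
 * parent's address space (CLONE_VM); otherwise it gets its own copy, as with
 * fork(). Returns the value of shared_counter seen by the parent afterwards,
 * or -1 on error.
 */
int counter_after_clone(int share_vm)
{
    const size_t stack_size = 64 * 1024;
    char *stack = malloc(stack_size);
    if (stack == NULL)
        return -1;

    shared_counter = 0;

    /* SIGCHLD as the exit signal lets the parent use waitpid() normally. */
    int flags = SIGCHLD | (share_vm ? CLONE_VM : 0);

    /* The stack grows downward on x86, so pass the top of the buffer. */
    pid_t pid = clone(child_fn, stack + stack_size, flags, NULL);
    if (pid < 0) {
        free(stack);
        return -1;
    }

    int status;
    if (waitpid(pid, &status, 0) != pid) {
        free(stack);
        return -1;
    }

    free(stack);                /* safe: the child has already exited */
    return shared_counter;
}
```

With CLONE_VM the parent should observe the child's write; without it the child modifies only its private copy, which is the behaviour fork() gives.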

The do_fork path:

Creating a new process:

/*
 * Create a kernel thread.
 */
pid_t kernel_thread(int (*fn)(void *), void *arg, unsigned long flags)
{
    return do_fork(flags|CLONE_VM|CLONE_UNTRACED, (unsigned long)fn,
        (unsigned long)arg, NULL, NULL);
}

#ifdef __ARCH_WANT_SYS_FORK
SYSCALL_DEFINE0(fork)
{
#ifdef CONFIG_MMU
    return do_fork(SIGCHLD, 0, 0, NULL, NULL);
#else
    /* can not support in nommu mode */
    return -EINVAL;
#endif
}
#endif

#ifdef __ARCH_WANT_SYS_VFORK
SYSCALL_DEFINE0(vfork)
{
    return do_fork(CLONE_VFORK | CLONE_VM | SIGCHLD, 0,
            0, NULL, NULL);
}
#endif

#ifdef __ARCH_WANT_SYS_CLONE
#ifdef CONFIG_CLONE_BACKWARDS
SYSCALL_DEFINE5(clone, unsigned long, clone_flags, unsigned long, newsp,
         int __user *, parent_tidptr,
         int, tls_val,
         int __user *, child_tidptr)
#elif defined(CONFIG_CLONE_BACKWARDS2)
SYSCALL_DEFINE5(clone, unsigned long, newsp, unsigned long, clone_flags,
         int __user *, parent_tidptr,
         int __user *, child_tidptr,
         int, tls_val)
#elif defined(CONFIG_CLONE_BACKWARDS3)
SYSCALL_DEFINE6(clone, unsigned long, clone_flags, unsigned long, newsp,
        int, stack_size,
        int __user *, parent_tidptr,
        int __user *, child_tidptr,
        int, tls_val)
#else
SYSCALL_DEFINE5(clone, unsigned long, clone_flags, unsigned long, newsp,
         int __user *, parent_tidptr,
         int __user *, child_tidptr,
         int, tls_val)
#endif
{
    return do_fork(clone_flags, newsp, 0, parent_tidptr, child_tidptr);
}
#endif

#ifndef ARCH_MIN_MMSTRUCT_ALIGN
#define ARCH_MIN_MMSTRUCT_ALIGN 0
#endif

static void sighand_ctor(void *data)
{
    struct sighand_struct *sighand = data;

    spin_lock_init(&sighand->siglock);
    init_waitqueue_head(&sighand->signalfd_wqh);
}

void __init proc_caches_init(void)
{
    sighand_cachep = kmem_cache_create("sighand_cache",
            sizeof(struct sighand_struct), 0,
            SLAB_HWCACHE_ALIGN|SLAB_PANIC|SLAB_DESTROY_BY_RCU|
            SLAB_NOTRACK, sighand_ctor);
    signal_cachep = kmem_cache_create("signal_cache",
            sizeof(struct signal_struct), 0,
            SLAB_HWCACHE_ALIGN|SLAB_PANIC|SLAB_NOTRACK, NULL);
    files_cachep = kmem_cache_create("files_cache",
            sizeof(struct files_struct), 0,
            SLAB_HWCACHE_ALIGN|SLAB_PANIC|SLAB_NOTRACK, NULL);
    fs_cachep = kmem_cache_create("fs_cache",
            sizeof(struct fs_struct), 0,
            SLAB_HWCACHE_ALIGN|SLAB_PANIC|SLAB_NOTRACK, NULL);
    /*
     * FIXME! The "sizeof(struct mm_struct)" currently includes the
     * whole struct cpumask for the OFFSTACK case. We could change
     * this to *only* allocate as much of it as required by the
     * maximum number of CPU's we can ever have. The cpumask_allocation
     * is at the end of the structure, exactly for that reason.
     */
    mm_cachep = kmem_cache_create("mm_struct",
            sizeof(struct mm_struct), ARCH_MIN_MMSTRUCT_ALIGN,
            SLAB_HWCACHE_ALIGN|SLAB_PANIC|SLAB_NOTRACK, NULL);
    vm_area_cachep = KMEM_CACHE(vm_area_struct, SLAB_PANIC);
    mmap_init();
    nsproxy_cache_init();
}

/*
 * Check constraints on flags passed to the unshare system call.
 */
static int check_unshare_flags(unsigned long unshare_flags)
{
    if (unshare_flags & ~(CLONE_THREAD|CLONE_FS|CLONE_NEWNS|CLONE_SIGHAND|
                CLONE_VM|CLONE_FILES|CLONE_SYSVSEM|
                CLONE_NEWUTS|CLONE_NEWIPC|CLONE_NEWNET|
                CLONE_NEWUSER|CLONE_NEWPID))
        return -EINVAL;
    /*
     * Not implemented, but pretend it works if there is nothing to
     * unshare. Note that unsharing CLONE_THREAD or CLONE_SIGHAND
     * needs to unshare vm.
     */
    if (unshare_flags & (CLONE_THREAD | CLONE_SIGHAND | CLONE_VM)) {
        /* FIXME: get_task_mm() increments ->mm_users */
        if (atomic_read(&current->mm->mm_users) > 1)
            return -EINVAL;
    }

    return 0;
}
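In the listing above, kernel_thread, fork, and vfork differ only in the flag word they hand to do_fork. The following user-space sketch restates that mapping as a small function, so the differences can be compared side by side. The enum creator and the helpers do_fork_flags and exit_signal are hypothetical names introduced for illustration; the CLONE_* and CSIGNAL constants come from the kernel's exported linux/sched.h header, and the sketch assumes that header is installed.

```c
#include <linux/sched.h>   /* CLONE_VM, CLONE_VFORK, CLONE_UNTRACED, CSIGNAL */
#include <signal.h>        /* SIGCHLD */

/* Which entry point is asking do_fork for a new task (illustrative only). */
enum creator { VIA_FORK, VIA_VFORK, VIA_KERNEL_THREAD };

/*
 * Return the first argument each entry point passes to do_fork.
 * 'extra' models the caller-supplied flags of kernel_thread and is
 * ignored for fork and vfork.
 */
unsigned long do_fork_flags(enum creator how, unsigned long extra)
{
    switch (how) {
    case VIA_FORK:
        return SIGCHLD;                              /* full copy, notify parent */
    case VIA_VFORK:
        return CLONE_VFORK | CLONE_VM | SIGCHLD;     /* share VM, parent waits */
    case VIA_KERNEL_THREAD:
        return extra | CLONE_VM | CLONE_UNTRACED;    /* share VM, never traced */
    }
    return 0;
}

/* The low byte of the flag word is the signal sent to the parent on exit. */
int exit_signal(unsigned long flags)
{
    return (int)(flags & CSIGNAL);
}
```

The clone system call is not modelled here because it simply forwards the caller's clone_flags unchanged.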

ret_from_fork:

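*childregs = *current_pt_regs(); // copy the kernel stack (only the pt_regs, i.e. the part that SAVE_ALL pushed on system-call entry)
childregs->ax = 0; // fork returns 0 in the child
p->thread.sp = (unsigned long) childregs; // top of the kernel stack when the child is scheduled
p->thread.ip = (unsigned long) ret_from_fork; // address of the first instruction when the child is scheduled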

The kernel handler behind the fork function: sys_clone

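- fork, vfork, and clone are three system calls that can each create a new process, and all of them do so by calling do_fork

- Creating a new process starts with copying a PCB, the task_struct

- The new process is then given a new kernel stack

ti = alloc_thread_info_node(tsk, node);
tsk->stack = ti;
setup_thread_stack(tsk, orig); // this copies only thread_info, not the kernel stack

- Next, the copied process data is modified (the pid, the process lists, and so on); see the body of copy_process

- Seen from user-space code, fork() returns twice: once in the parent and once in the child

*childregs = *current_pt_regs(); // copy the kernel stack
childregs->ax = 0; // this is why fork returns 0 in the child
p->thread.sp = (unsigned long) childregs; // top of the kernel stack when the child is scheduled
p->thread.ip = (unsigned long) ret_from_fork; // address of the first instruction when the child is scheduled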
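The "returns twice" behaviour can be observed directly from user space. The sketch below is a minimal illustration: the child recognises itself by the return value 0 and exits with a marker status, and the parent, which received the child's pid, reaps it and returns that status. The function name fork_once_and_reap and the CHILD_MARK value are illustrative choices.

```c
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

#define CHILD_MARK 42   /* arbitrary exit status used to identify the child */

/*
 * Fork once. In the child, fork() returns 0 and the child exits with
 * CHILD_MARK. In the parent, fork() returns the child's pid; the parent
 * waits for the child and returns its exit status, or -1 on error.
 */
int fork_once_and_reap(void)
{
    pid_t pid = fork();

    if (pid < 0)
        return -1;              /* fork failed, no child was created */

    if (pid == 0)
        _exit(CHILD_MARK);      /* child: the saved ax register was set to 0 */

    /* parent: pid is the child's process id */
    int status;
    if (waitpid(pid, &status, 0) != pid)
        return -1;

    return WIFEXITED(status) ? WEXITSTATUS(status) : -1;
}
```

The exit status that reaches the parent can only have been produced on the pid == 0 branch, which shows that the same call site returned in both processes.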

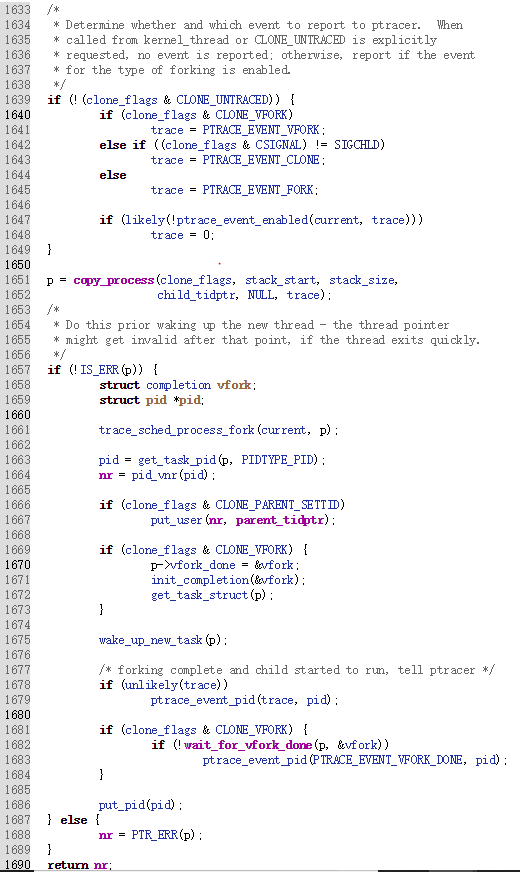

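do_fork does most of the work of process creation: it calls copy_process() and then lets the new process start running. copy_process() works as follows:

1. It calls dup_task_struct() to create a kernel stack, a thread_info structure, and a task_struct for the new process; their contents are identical to those of the current process.

2. It performs checks (for instance, that the new child does not push the current user over its process-count limit).

3. The child starts to differentiate itself from the parent: many members of the process descriptor are cleared or set to initial values.

4. The child's state is set to TASK_UNINTERRUPTIBLE to ensure that it does not run yet.

5. copy_process() calls copy_flags() to update the flags member of task_struct. The PF_SUPERPRIV flag, which indicates whether the process has superuser privileges, is cleared. The PF_FORKNOEXEC flag, which indicates that the process has not yet called exec(), is set.

6. It calls alloc_pid() to assign a valid PID to the new process.

7. Depending on the flags passed to clone(), copy_process() either copies or shares the open files, filesystem information, signal handlers, process address space, and namespaces.

8. Finally, copy_process() cleans up and returns a pointer to the child.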
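The steps above can be mimicked with a toy user-space model. This is only a sketch of the copy-then-differentiate pattern, not kernel code: struct toy_task, struct toy_files, TOY_CLONE_FILES, toy_alloc_pid, and toy_copy_process are all hypothetical, and only steps 1, 3, 4, 6, 7, and 8 are represented.

```c
#include <stdlib.h>

#define TOY_CLONE_FILES 0x1u    /* hypothetical stand-in for a CLONE_* sharing flag */

enum { TOY_RUNNING = 0, TOY_UNINTERRUPTIBLE = 2 };

struct toy_files {
    int refcount;               /* how many tasks use this table */
    int open_fds;               /* stand-in for the real descriptor table */
};

struct toy_task {
    int pid;
    int state;
    unsigned long utime;        /* example of a per-task statistic */
    struct toy_files *files;
};

static int toy_next_pid = 100;

/* Hand out a fresh identifier (step 6). */
static int toy_alloc_pid(void)
{
    return toy_next_pid++;
}

/* Returns a newly allocated child task, or NULL if allocation fails. */
struct toy_task *toy_copy_process(const struct toy_task *parent, unsigned flags)
{
    struct toy_task *child = malloc(sizeof *child);
    if (child == NULL)
        return NULL;

    *child = *parent;                       /* step 1: start as an exact copy */
    child->utime = 0;                       /* step 3: reset per-task fields  */
    child->state = TOY_UNINTERRUPTIBLE;     /* step 4: not runnable yet       */
    child->pid = toy_alloc_pid();           /* step 6: new identifier         */

    if (flags & TOY_CLONE_FILES) {          /* step 7: share the resource ... */
        parent->files->refcount++;
    } else {                                /* ... or give the child a copy   */
        struct toy_files *copy = malloc(sizeof *copy);
        if (copy == NULL) {
            free(child);
            return NULL;
        }
        *copy = *parent->files;
        copy->refcount = 1;
        child->files = copy;
    }

    return child;                           /* step 8: hand back the child    */
}
```

Sharing is modelled as a reference-count increment on the parent's table, while copying allocates a private table with the same contents.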

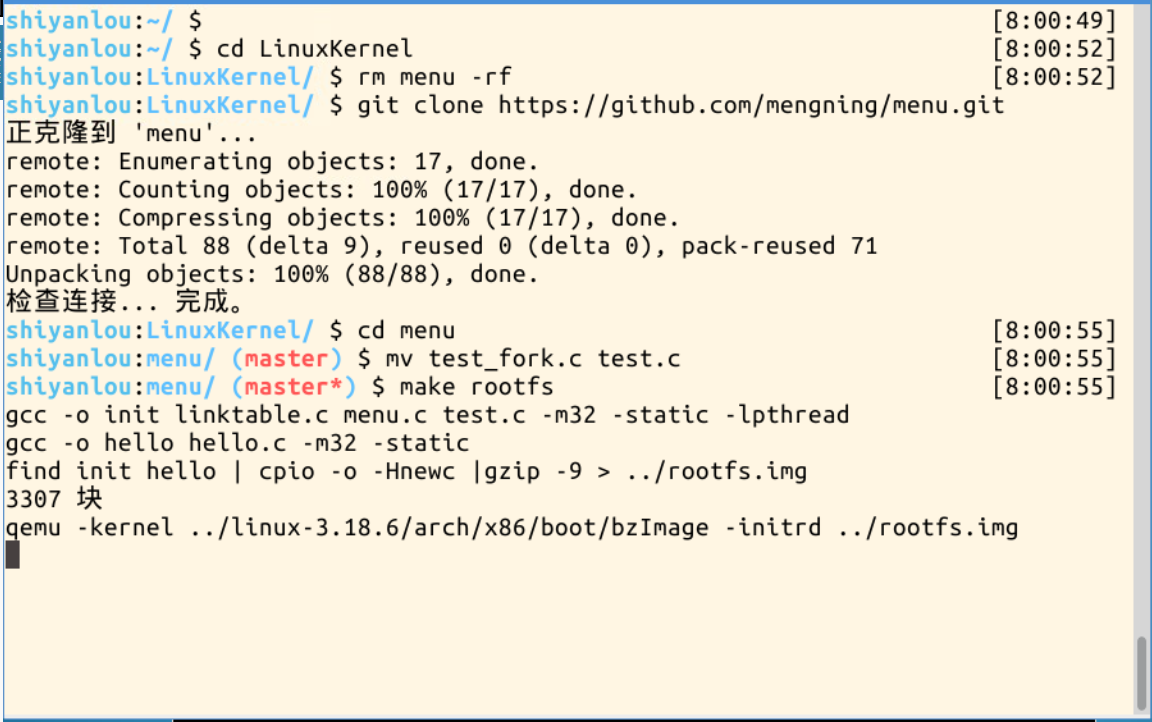

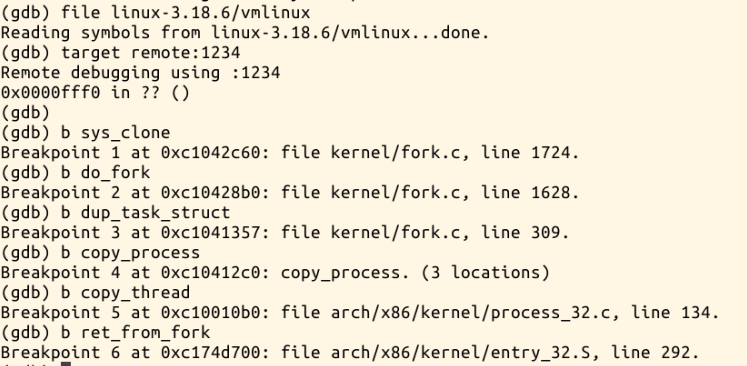

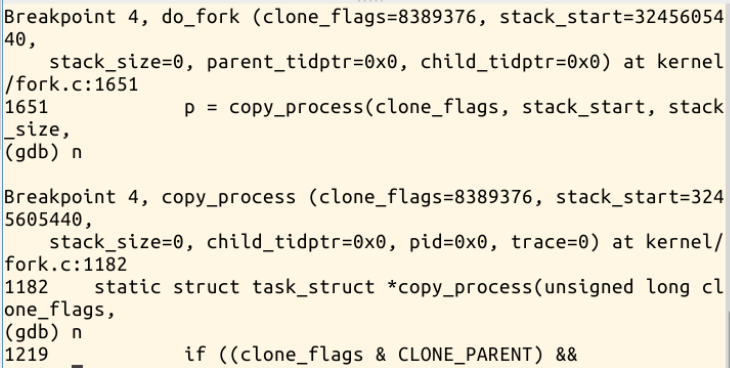

Tracing with gdb

Verifying that fork works:

Set breakpoints on the key functions involved in process creation:

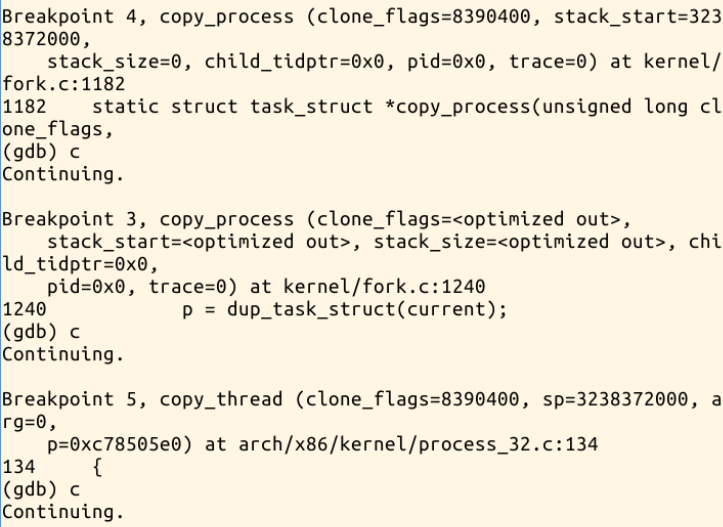

The tracing and debugging session:

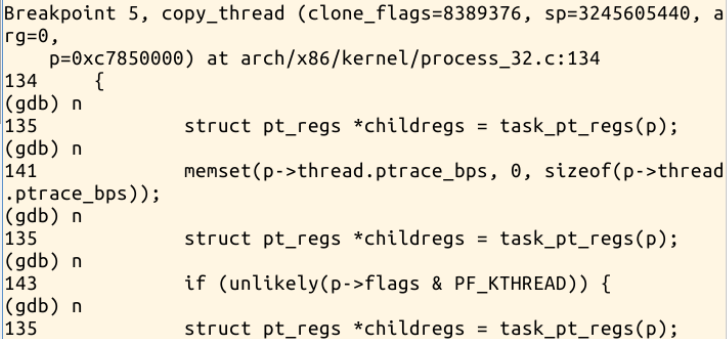

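copy_thread prepares the context and stack information for the child. It works as follows:

- Locate where the child's register information is stored.

- Assign the child's thread.sp, i.e. the value its esp register will take.

- If a kernel thread is being created, execution starts at ret_from_kernel_thread: the address of that code is assigned to thread.ip, the remaining register information is prepared, and the function returns.

- Copy the parent's register information to the child.

- Set the child's eax register to 0.

- The child starts executing at ret_from_fork, so that address is assigned to thread.ip, which will become its eip register.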
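The copy_thread workflow can be sketched as a small user-space model. Everything here is a simplification under stated assumptions: struct toy_pt_regs holds only three registers, the entry points are represented by an enum instead of code addresses, and the extra register setup for kernel threads is omitted. All toy_ names are hypothetical.

```c
#include <string.h>

/* A drastically reduced register frame. */
struct toy_pt_regs {
    unsigned long ax;
    unsigned long bx;
    unsigned long sp;
};

/* Stand-ins for the addresses of the two possible entry points. */
enum toy_entry { TOY_RET_FROM_FORK, TOY_RET_FROM_KERNEL_THREAD };

struct toy_thread {
    struct toy_pt_regs *sp;     /* kernel stack top used at the first switch */
    enum toy_entry ip;          /* where the child starts executing          */
};

struct toy_child {
    struct toy_pt_regs saved;   /* register frame on the child's kernel stack */
    struct toy_thread thread;
};

void toy_copy_thread(struct toy_child *child,
                     const struct toy_pt_regs *parent_regs,
                     int is_kernel_thread)
{
    /* Locate the child's register frame and point thread.sp at it. */
    struct toy_pt_regs *childregs = &child->saved;
    child->thread.sp = childregs;

    if (is_kernel_thread) {
        /* Kernel threads start from a clean frame at their own entry point. */
        memset(childregs, 0, sizeof *childregs);
        child->thread.ip = TOY_RET_FROM_KERNEL_THREAD;
        return;
    }

    *childregs = *parent_regs;              /* inherit the parent's registers */
    childregs->ax = 0;                      /* the child's fork() returns 0   */
    child->thread.ip = TOY_RET_FROM_FORK;   /* first code the child runs      */
}
```

Only the child's copy of ax is changed, so the parent's own frame still carries the value that becomes its return value from fork.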

Continuing the debugging session:

Problems encountered

Background on PIDs:

The pid structure:

struct pid {
    struct hlist_head tasks;      // points back to the node in pid_link
    int nr;                       // the PID
    struct hlist_node pid_chain;  // node in the pid hash table
};

pid_vnr:

pid_t pid_vnr(struct pid *pid)
{
    return pid_nr_ns(pid, current->nsproxy->pid_ns); // current->nsproxy->pid_ns is the current pid_namespace
}

Once the pid instance has been obtained, the local PID is looked up from the upid entries in the pid's numbers array.

pid_t pid_nr_ns(struct pid *pid, struct pid_namespace *ns)
{
    struct upid *upid;
    pid_t nr = 0;

    if (pid && ns->level <= pid->level) {
        upid = &pid->numbers[ns->level];
        if (upid->ns == ns)
            nr = upid->nr;
    }
    return nr;
}
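To see how the same process can carry a different number in each namespace, the lookup above can be reproduced in user space with simplified types. The toy_ structures below are hypothetical reductions that keep only the fields the lookup reads, and TOY_MAX_LEVEL is an arbitrary bound chosen for the sketch.

```c
#include <stddef.h>

#define TOY_MAX_LEVEL 3   /* arbitrary nesting limit for this sketch */

struct toy_pid_namespace {
    unsigned int level;                     /* 0 for the root namespace */
    struct toy_pid_namespace *parent;
};

struct toy_upid {
    int nr;                                 /* PID value inside 'ns' */
    struct toy_pid_namespace *ns;
};

struct toy_pid {
    unsigned int level;                     /* deepest namespace the pid lives in */
    struct toy_upid numbers[TOY_MAX_LEVEL]; /* one entry per level, 0..level */
};

/* Same logic as pid_nr_ns: 0 means "not visible from this namespace". */
int toy_pid_nr_ns(const struct toy_pid *pid, const struct toy_pid_namespace *ns)
{
    int nr = 0;

    if (pid && ns->level <= pid->level) {
        const struct toy_upid *upid = &pid->numbers[ns->level];
        if (upid->ns == ns)
            nr = upid->nr;
    }
    return nr;
}
```

A pid created one level below the root has two entries in numbers, so querying it from the root namespace and from its own namespace yields two different values, while an unrelated namespace at the same level yields 0.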
