Setting up a highly available k8s cluster

Official k8s website: https://kubernetes.io/

Course notes used as reference: https://blog.csdn.net/qq_26900081/article/details/109291999

1. Differences between the two installation methods

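- kubeadm is generally used to install test environments; restarts are relatively slow

- A binary installation suits production environments; restarts are fast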

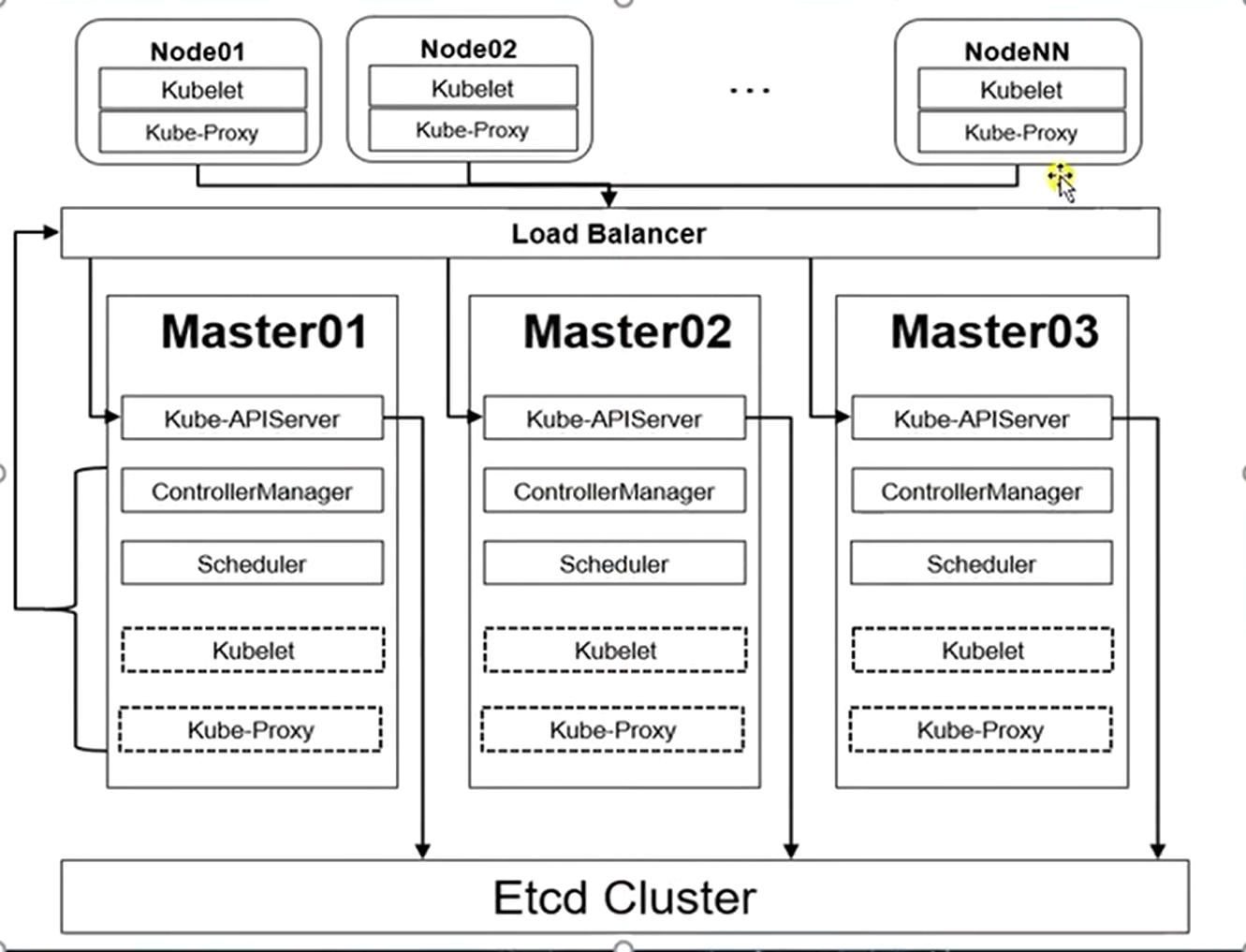

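High-availability architecture

The diagram above shows the HA architecture. High availability here concerns the masters: several master nodes together form a highly available control plane.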

2. Installing with kubeadm

2.1 Basic environment setup

2.1.1 Node plan

| Hostname | IP address | Description |
|---|---|---|
| master1-master3 | 192.168.181.101-192.168.181.103 | master nodes |
| lb | 192.168.181.200 | keepalived virtual IP (VIP) |
| node1-node2 | 192.168.181.104, 192.168.181.105 | worker nodes |

On every node, add the following entries to /etc/hosts (vim /etc/hosts):

```
192.168.181.101 master1
192.168.181.102 master2
192.168.181.103 master3
192.168.181.104 node1
192.168.181.105 node2
```

Then set the hostname on each node:

hostnamectl set-hostname <node-name>
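The hosts step can be scripted so that running it again does not add duplicate lines. This is a minimal sketch that assumes a POSIX shell and the node list from the table above. The function name add_hosts_entries and its file argument are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append the cluster's host entries to a hosts file
# (default /etc/hosts), skipping any entry whose exact line already exists.
add_hosts_entries() {
  local hosts_file="${1:-/etc/hosts}" entry
  while read -r entry; do
    # -x: match the whole line, -F: treat the entry as a fixed string
    grep -qxF "$entry" "$hosts_file" 2>/dev/null || echo "$entry" >> "$hosts_file"
  done <<'EOF'
192.168.181.101 master1
192.168.181.102 master2
192.168.181.103 master3
192.168.181.104 node1
192.168.181.105 node2
EOF
}

# Usage on each node (as root):
#   add_hosts_entries /etc/hosts
#   hostnamectl set-hostname master1   # use the node's own name
```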

2.1.2 Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

2.1.3 Disable SELinux

setenforce 0

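Then edit /etc/sysconfig/selinux (vi /etc/sysconfig/selinux) and set SELINUX=disabled. This keeps SELinux off after a reboot, because setenforce 0 only switches it to permissive mode until then.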
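On CentOS 7, /etc/sysconfig/selinux is a symbolic link to /etc/selinux/config. A plain sed -i on the link replaces it with a regular file and leaves the real config unchanged. This is a minimal sketch that edits through the link and assumes GNU sed (--follow-symlinks). The name set_selinux_disabled is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled in the SELinux config file.
# --follow-symlinks (GNU sed) edits the file a symlink points to instead of
# replacing the symlink itself with a regular file.
set_selinux_disabled() {
  local conf="${1:-/etc/sysconfig/selinux}"
  # Only the SELINUX= line matches; SELINUXTYPE= is left untouched.
  sed -i --follow-symlinks 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}

# Usage (as root): setenforce 0 && set_selinux_disabled
```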

2.1.4 Disable swap

Swap hurts performance, and by default kubelet refuses to start while swap is enabled, so turn it off:

swapoff -a && sysctl -w vm.swappiness=0

To keep swap off after a reboot, comment out the swap line in /etc/fstab (vi /etc/fstab).
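The fstab edit can also be scripted. This is a minimal sketch that assumes GNU sed. The function name disable_swap_in_fstab is hypothetical. It only touches uncommented lines whose filesystem type (the third field) is swap, and it keeps a .bak copy of the original file.

```bash
#!/usr/bin/env bash
# Hypothetical helper: comment out every active swap entry in an fstab file
# (default /etc/fstab). Lines that are already commented are left alone.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  # On non-comment lines, match "device mountpoint swap ..." and prefix "#".
  sed -i.bak -E '/^[[:space:]]*#/!s/^([^[:space:]]+[[:space:]]+[^[:space:]]+[[:space:]]+swap([[:space:]].*)?)$/#\1/' "$fstab"
}

# Usage (as root): swapoff -a && disable_swap_in_fstab
```

After that, free -h should report 0B of swap.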

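2.1.5 Synchronize time

If the clocks are not synchronized, data across the nodes can get out of sync as well.

#1. Set the client time zone (it must match the time server's)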

timedatectl set-timezone Asia/Shanghai

#2. Install ntp

yum install ntp

#3. Sync the local clock with Aliyun's NTP server; if that fails, try ntp2.aliyun.com, ntp3.aliyun.com and so on

ntpdate ntp1.aliyun.com

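#4. Also add a cron job (crontab -e). Use the full path, because cron runs jobs with a minimal PATH.

*/5 * * * * /usr/sbin/ntpdate ntp1.aliyun.com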
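The cron entry can also be installed without opening an editor. This is a minimal sketch that assumes a POSIX shell and targets the current user's crontab (root here). with_ntp_cron is a hypothetical filter: it drops any earlier ntpdate line and appends a single entry, so repeated runs still leave exactly one.

```bash
#!/usr/bin/env bash
# Hypothetical filter: read a crontab on stdin and print it with exactly one
# ntpdate entry, dropping any older ntpdate lines so reruns stay idempotent.
with_ntp_cron() {
  local server="${1:-ntp1.aliyun.com}"
  # Keep every line that does not mention ntpdate (grep exits 1 on no output).
  grep -v 'ntpdate' || true
  # Append the entry with the full path, since cron's PATH is minimal.
  echo "*/5 * * * * /usr/sbin/ntpdate $server"
}

# Usage (installs the result as the current user's crontab):
#   (crontab -l 2>/dev/null || true) | with_ntp_cron | crontab -
```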

2.1.6 Raise the resource limits (ulimit)

ulimit sets the resources a user may consume; see: https://blog.csdn.net/nixiang_888/article/details/122256229

#The ulimit command only affects the current session

ulimit -SHn 65535

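To make the limits permanent, add the following lines to /etc/security/limits.conf (vi /etc/security/limits.conf):

```
* soft nproc 65535
* hard nproc 65535
* soft nofile 65535
* hard nofile 65535
```

Here * applies the limits to all users, nproc is the maximum number of processes and nofile the maximum number of open files. A soft value must not exceed the corresponding hard value.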
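This is a minimal sketch that writes the same four lines idempotently, assuming a POSIX shell. set_user_limits is a hypothetical name, and the file path and value are parameters.

```bash
#!/usr/bin/env bash
# Hypothetical helper: add nproc/nofile limits for all users to a
# limits.conf-style file (default /etc/security/limits.conf), skipping
# lines that are already present.
set_user_limits() {
  local file="${1:-/etc/security/limits.conf}" value="${2:-65535}"
  local type item line
  for type in soft hard; do
    for item in nproc nofile; do
      line="* $type $item $value"
      grep -qxF -- "$line" "$file" 2>/dev/null || echo "$line" >> "$file"
    done
  done
}

# Usage (as root): set_user_limits
```

limits.conf is applied by pam_limits at login. After logging in again, ulimit -n (open files) and ulimit -u (processes) should report 65535.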

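2.1.7 Passwordless SSH login

Set up master1 so that it can log in to the other nodes without a password. All configuration files and certificates are generated on master1 during the installation, and the cluster is managed from master1 as well. On master1:

#1. Generate a key pair on master1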

ssh-keygen -t rsa

#2. Copy the public key to every machine in a loop

for i in master1 master2 master3 node1 node2;do ssh-copy-id -i ~/.ssh/id_rsa.pub $i;done

#3. Once the key has been copied, ssh node1 logs in to node1 directly

2.1.8 Configure the Aliyun yum repositories for k8s

CentOS 7

#Configure the CentOS base repo and add the Docker CE repo

curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

#Rebuild the metadata cache

yum makecache fast

```bash
# The k8s repo (both gpgkey URLs on one line; a continuation line must be indented)
cat > /etc/yum.repos.d/kubernetes.repo <<EOF
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
```

CentOS 8

#Three commands

curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-8.repo

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

#Rebuild the metadata cache

yum makecache

```bash
#Copy and run everything below (adds the k8s repo)
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
#Remove the Aliyun intranet mirrors, which are only reachable from Aliyun ECS instances
sed -i -e '/mirrors.cloud.aliyuncs.com/d' -e '/mirrors.aliyuncs.com/d' /etc/yum.repos.d/CentOS-Base.repo
```

Install common tools

yum install wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 -y

#CentOS 7 needs a system update (CentOS 8 does not); if it fails, retry a few times

yum update -y --exclude=kernel* && reboot

2.1.9 Upgrade the kernel

Check the kernel version with cat /proc/version. CentOS 7 usually ships kernel 3.10, which needs to be upgraded to 4.18 or later.

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm

#List the latest available kernels: yum --disablerepo="*" --enablerepo="elrepo-kernel" list available

#Install the latest mainline kernel:

yum --enablerepo=elrepo-kernel install kernel-ml kernel-ml-devel -y

#List the kernels currently available in the boot menu:

awk -F\' '$1=="menuentry " {print i++ " : " $2}' /etc/grub2.cfg

#Make the newest kernel the default; 0 is the index shown on the left of the list printed above

grub2-set-default 0

#Regenerate the grub configuration

grub2-mkconfig -o /boot/grub2/grub.cfg

#Reboot

reboot

After the reboot, cat /proc/version should report the new kernel version.
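To check the kernel requirement from a script, before or after the upgrade, here is a minimal sketch that uses GNU sort -V for version comparison. kernel_at_least is a hypothetical name. It only looks at the part of the release string before the first hyphen.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if kernel release $1 is at least version $2.
# GNU sort -V orders dotted version strings numerically.
kernel_at_least() {
  local current="${1%%-*}" required="$2"   # "3.10.0-1160.el7.x86_64" -> "3.10.0"
  [ "$(printf '%s\n%s\n' "$required" "$current" | sort -V | head -n1)" = "$required" ]
}

# Usage on a node:
#   kernel_at_least "$(uname -r)" 4.18 || echo "kernel too old, upgrade it"
```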

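2.1.10 Install ipvsadm

For larger production clusters IPVS is preferable to iptables. ipvsadm is the management tool for LVS (IPVS), and IPVS is the mode that kube-proxy is switched to later in this guide (step 7 of 2.7).

#1. Install the packages

yum install ipvsadm ipset sysstat conntrack libseccomp -y

#2. Load the modules for the current boot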

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

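modprobe -- nf_conntrack  # on kernels older than 4.19: modprobe -- nf_conntrack_ipv4

#3. Load the modules automatically at every boot

Create /etc/modules-load.d/ipvs.conf (vim /etc/modules-load.d/ipvs.conf) with the following content:

```
ip_vs
ip_vs_rr
ip_vs_wrr
ip_vs_sh
nf_conntrack
ip_tables
ip_set
xt_set
ipt_set
ipt_rpfilter
ipt_REJECT
ipip
```

Then run systemctl enable --now systemd-modules-load.service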

Check that the modules are loaded:

```
[root@master1 ~]# lsmod|grep -e ip_vs
ip_vs_sh 16384 0
ip_vs_wrr 16384 0
ip_vs_rr 16384 0
ip_vs 163840 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr
nf_conntrack 163840 1 ip_vs
nf_defrag_ipv6 24576 2 nf_conntrack,ip_vs
libcrc32c 16384 3 nf_conntrack,xfs,ip_vs
```
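The conntrack module name depends on the kernel, so the ipvs.conf list can be generated to match it. This is a minimal sketch that assumes GNU sort -V. write_ipvs_modules is a hypothetical name. It writes the module list from this section and uses nf_conntrack_ipv4 only on kernels older than 4.19.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write the IPVS module list to a modules-load.d file,
# picking the conntrack module name that matches the kernel release.
write_ipvs_modules() {
  local conf="${1:-/etc/modules-load.d/ipvs.conf}" release="${2:-$(uname -r)}"
  local version="${release%%-*}" conntrack=nf_conntrack
  # Kernels older than 4.19 ship the module as nf_conntrack_ipv4.
  if [ "$(printf '%s\n%s\n' 4.19 "$version" | sort -V | head -n1)" != 4.19 ]; then
    conntrack=nf_conntrack_ipv4
  fi
  printf '%s\n' ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh "$conntrack" \
    ip_tables ip_set xt_set ipt_set ipt_rpfilter ipt_REJECT ipip > "$conf"
}

# Usage (as root), then load the modules immediately:
#   write_ipvs_modules && systemctl restart systemd-modules-load.service
```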

2.1.11 Set the kernel parameters that k8s needs

```bash
cat <<EOF > /etc/sysctl.d/k8s.conf
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
fs.may_detach_mounts = 1
vm.overcommit_memory=1
vm.panic_on_oom=0
fs.inotify.max_user_watches=89100
fs.file-max=52706963
fs.nr_open=52706963
net.netfilter.nf_conntrack_max=2310720
net.ipv4.tcp_keepalive_time = 600
net.ipv4.tcp_keepalive_probes = 3
net.ipv4.tcp_keepalive_intvl = 15
net.ipv4.tcp_max_tw_buckets = 36000
net.ipv4.tcp_tw_reuse = 1
net.ipv4.tcp_max_orphans = 327680
net.ipv4.tcp_orphan_retries = 3
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_max_syn_backlog = 16384
net.ipv4.ip_conntrack_max = 65536
net.ipv4.tcp_timestamps = 0
net.core.somaxconn = 16384
EOF
```

Apply the settings and check the output with sysctl --system
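Every sysctl key corresponds to a file under /proc/sys, with dots turned into slashes, so you can confirm that sysctl --system actually applied each value. The net.bridge.* keys only exist after the br_netfilter module is loaded (modprobe br_netfilter), so before that they show up as missing. This is a minimal sketch. sysctl_path and check_sysctl_file are hypothetical names, and the optional second argument (an alternative root for /proc/sys) exists only to make the functions testable.

```bash
#!/usr/bin/env bash
# Hypothetical helper: map a sysctl key to its file below a proc root,
# e.g. net.ipv4.ip_forward -> /proc/sys/net/ipv4/ip_forward
sysctl_path() {
  printf '%s/%s\n' "${2:-/proc/sys}" "$(printf '%s' "$1" | tr . /)"
}

# Hypothetical helper: read "key = value" lines from a sysctl.d file and report
# keys whose file does not exist or whose live value differs from the request.
check_sysctl_file() {
  local file="$1" root="${2:-/proc/sys}" key value path live
  while IFS='=' read -r key value; do
    key="$(printf '%s' "$key" | tr -d '[:space:]')"
    value="$(printf '%s' "$value" | xargs)"        # trim surrounding blanks
    case "$key" in ''|\#*|\;*) continue ;; esac     # skip blanks and comments
    path="$(sysctl_path "$key" "$root")"
    if [ ! -e "$path" ]; then
      echo "missing: $key"
      continue
    fi
    live="$(xargs < "$path")"                        # squeeze tabs and spaces
    [ "$live" = "$value" ] || echo "differs: $key (want $value, have $live)"
  done < "$file"
}

# Usage on a node: check_sysctl_file /etc/sysctl.d/k8s.conf
```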

2.2 Install the base components

2.2.1 Install Docker

List the available docker-ce versions:

yum list docker-ce.x86_64 --showduplicates |sort -r

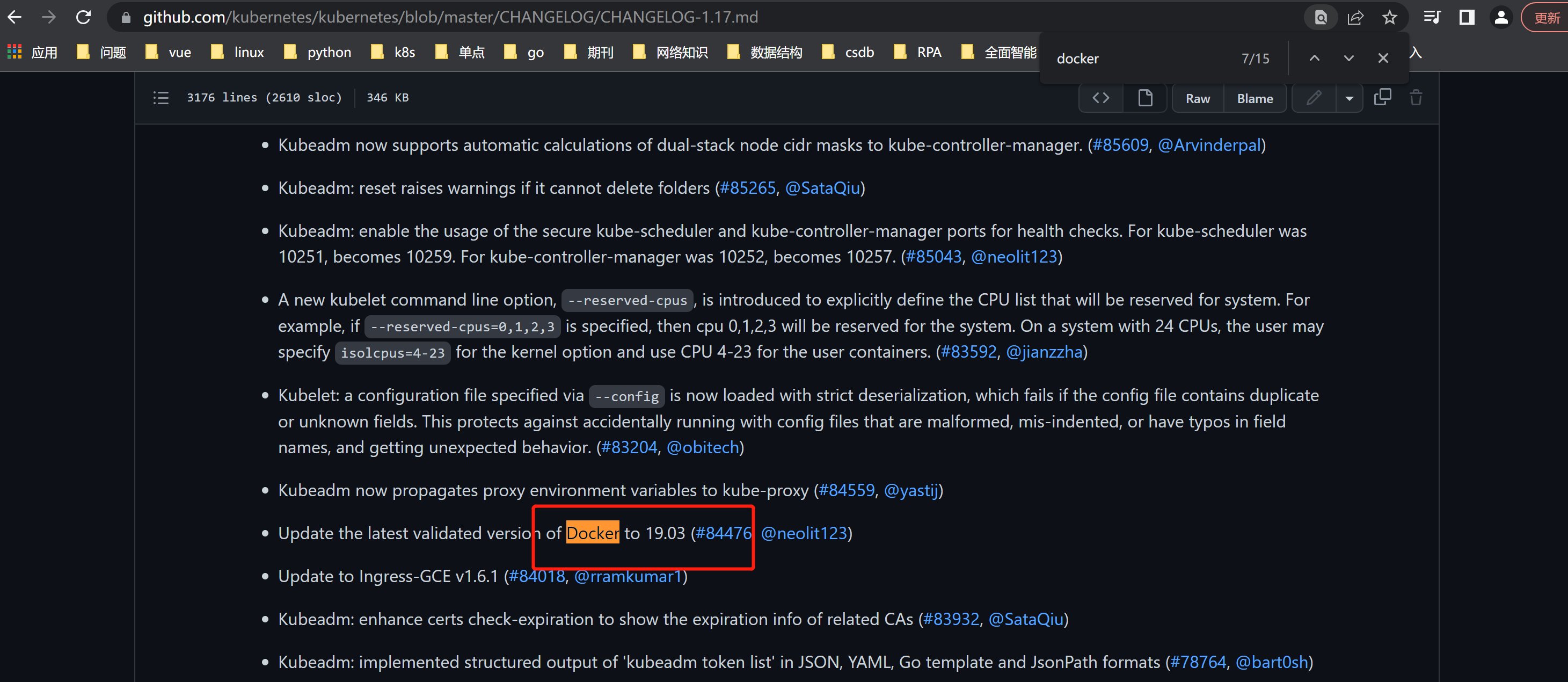

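Check which Docker versions your k8s version supports in the CHANGELOG of the k8s repository: https://github.com/kubernetes/kubernetes/tree/master/CHANGELOG. The 1.18 changelog does not list a Docker version, so look in the 1.17 one instead.

On CentOS 7, install directly:

#Install a specific Docker version

yum install docker-ce-17.03.2.ce-1.el7.centos

#Install the latest Docker

yum install docker-ce -y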

On CentOS 8 (CentOS 8 does not install containerd by default):

#Download and install containerd

wget https://download.docker.com/linux/centos/7/x86_64/edge/Packages/containerd.io-1.2.13-3.2.el7.x86_64.rpm

yum install containerd.io-1.2.13-3.2.el7.x86_64.rpm -y

#Install the latest Docker

yum install docker-ce -y

#Install a specific Docker version

yum -y install docker-ce-17.09.1.ce-1.el7.centos

Start Docker: systemctl start docker

Enable it at boot: systemctl enable docker

Check the installation with docker info and fix any warnings it reports.

2.2.2 Install kubeadm

List the available kubeadm versions:

yum list kubeadm.x86_64 --showduplicates | sort -r

Install:

#Install specific versions of the k8s components

yum install -y kubeadm-1.19.3-0.x86_64 kubectl-1.19.3-0.x86_64 kubelet-1.19.3-0.x86_64

#Alternatively, install the latest kubeadm, which also pulls in its dependencies such as kubectl and kubelet

yum install kubeadm -y

On all nodes, enable Docker at boot: systemctl daemon-reload && systemctl enable --now docker

2.2.3 Change the kubelet image source

```bash
DOCKER_CGROUPS=$(docker info --format '{{.CgroupDriver}}')
#Afterwards, run echo $DOCKER_CGROUPS to check that the result is cgroupfs
cat >/etc/sysconfig/kubelet<<EOF
KUBELET_EXTRA_ARGS="--cgroup-driver=$DOCKER_CGROUPS --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1"
EOF
```

systemctl daemon-reload && systemctl enable --now kubelet

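At this point kubelet cannot start properly. It keeps restarting until the cluster is initialized later.

Note: systemctl daemon-reload loads new or changed systemd unit files.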
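Parsing docker info text by column position is fragile, because newer Docker releases print both a "Cgroup Driver" and a "Cgroup Version" line. This is a minimal sketch that matches on the label instead, assuming awk. cgroup_driver_from_info is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical filter: extract the cgroup driver from `docker info` output.
# Matching the "Cgroup Driver:" label skips the "Cgroup Version:" line.
cgroup_driver_from_info() {
  awk -F': ' '/^[[:space:]]*Cgroup Driver:/ {print $2; exit}'
}

# Usage on a node:
#   DOCKER_CGROUPS=$(docker info 2>/dev/null | cgroup_driver_from_info)
```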

2.2.4 Install the high-availability components

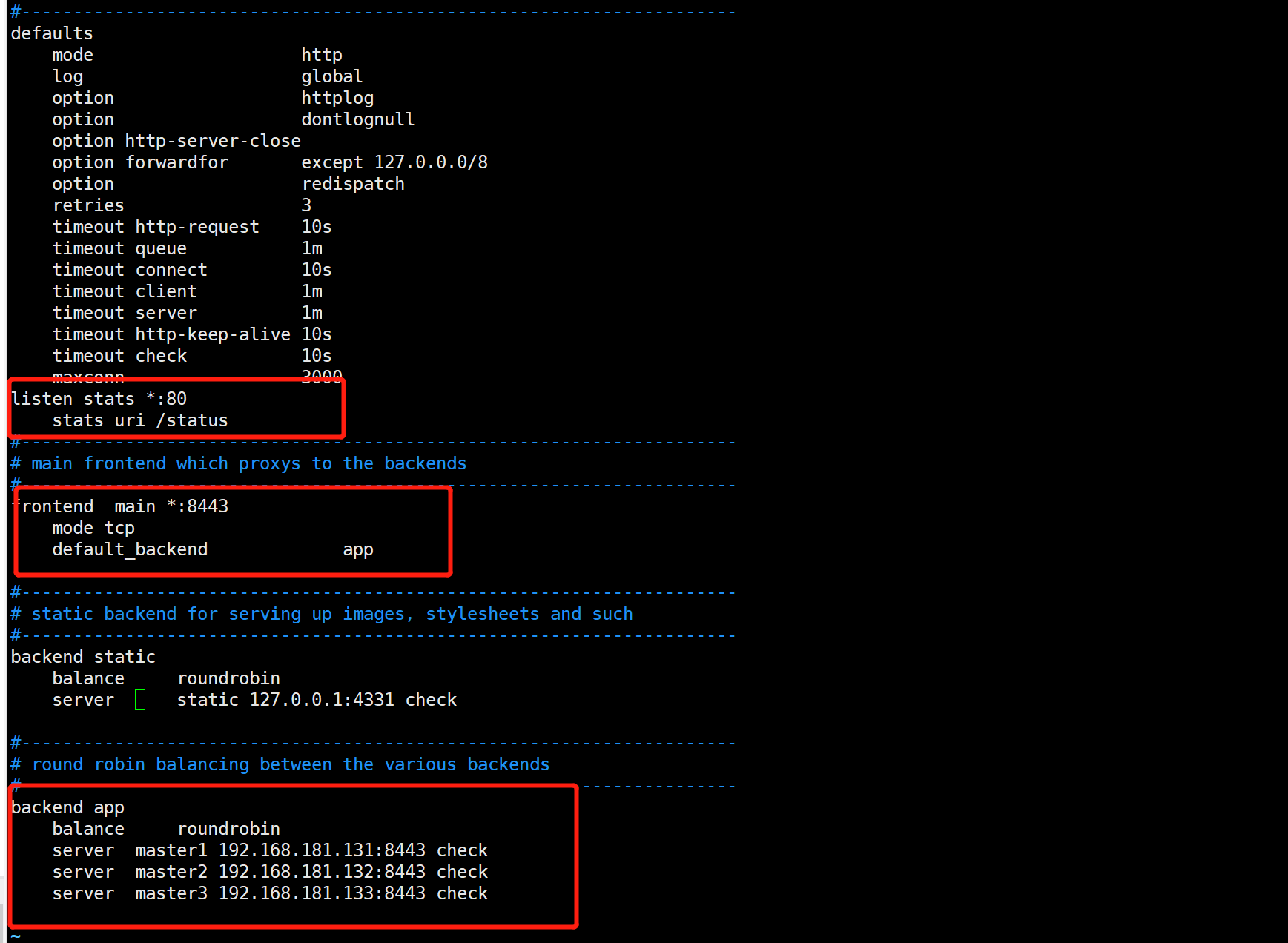

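HAProxy plays a role similar to nginx or LVS (ipvsadm is the management tool for LVS).

HAProxy configuration reference: https://blog.csdn.net/qq_40764171/article/details/119517282. 6443 is the port on which the apiserver is accessed.

Deployment architecture:

1. The load-balancing layer provides a VIP, and traffic to the VIP goes to the keepalived MASTER node.

2. When that keepalived node fails, the VIP automatically floats to another available node.

3. HAProxy load-balances the traffic across the apiserver nodes.

4. All three apiservers work at the same time. Note that only one controller-manager and one scheduler in k8s are active at any time (chosen by leader election); the others stay on standby. My guess is that the apiserver mainly reads from and writes to the database, whose consistency is guaranteed by the database (etcd) itself. The apiserver is also the busiest component in k8s, so running several at once spreads the load. The controller-manager and scheduler mainly execute control logic, and several "brains" acting at once could cause chaos.

Installation steps: see http://jianshu.com/p/7cd9fab92fa1 (installation with Docker).

Install HAProxy and keepalived on all master nodes (nginx + keepalived also works). The 8443 port used here is reserved for the dashboard that will be installed later.

1. yum install keepalived haproxy -y

2. vim /etc/haproxy/haproxy.cfg (see the configuration in the screenshot below)

3. systemctl start haproxy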

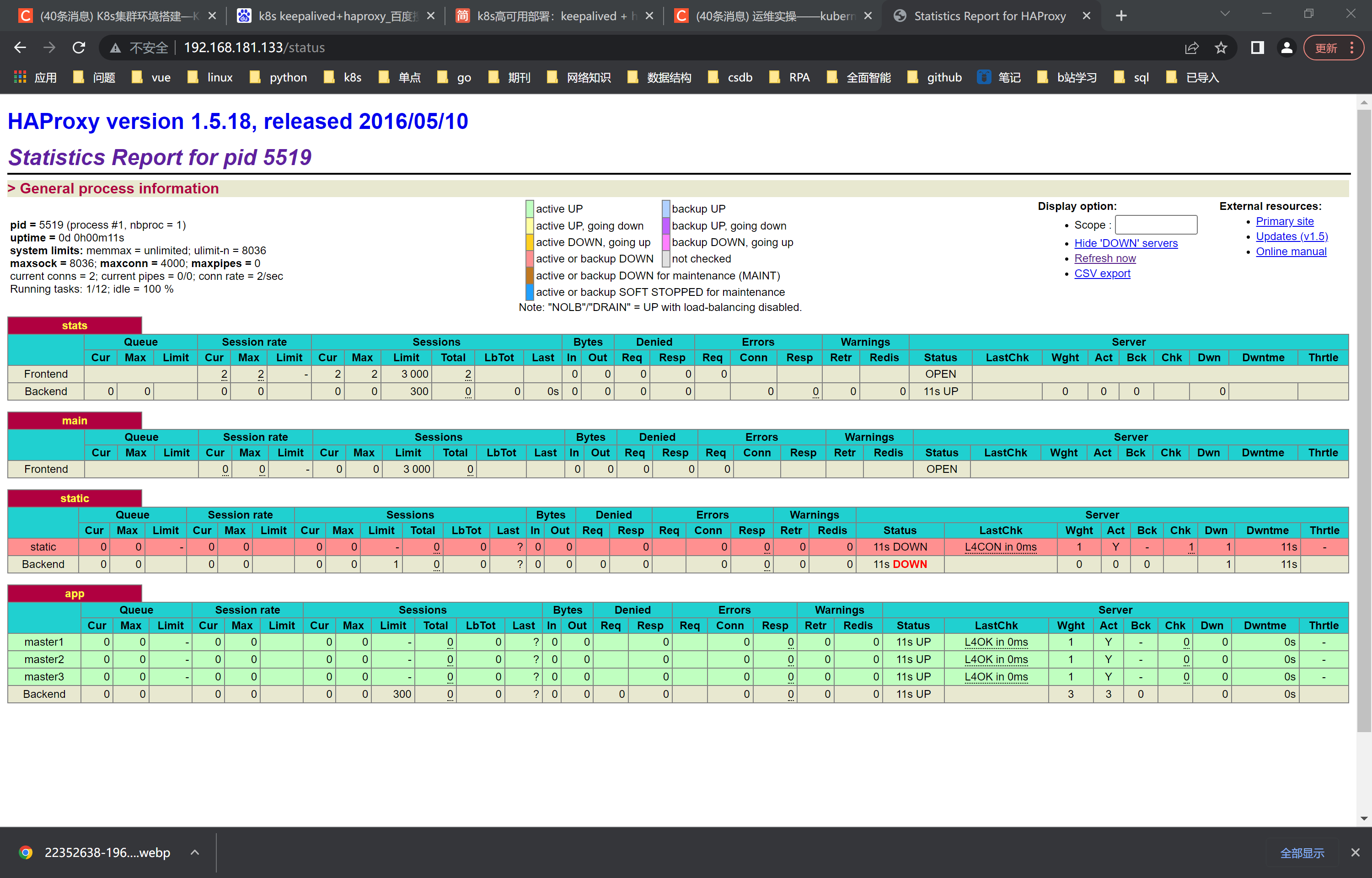

After the service starts, opening 192.168.181.101/status (master1) shows the page below, which confirms that HAProxy is running.

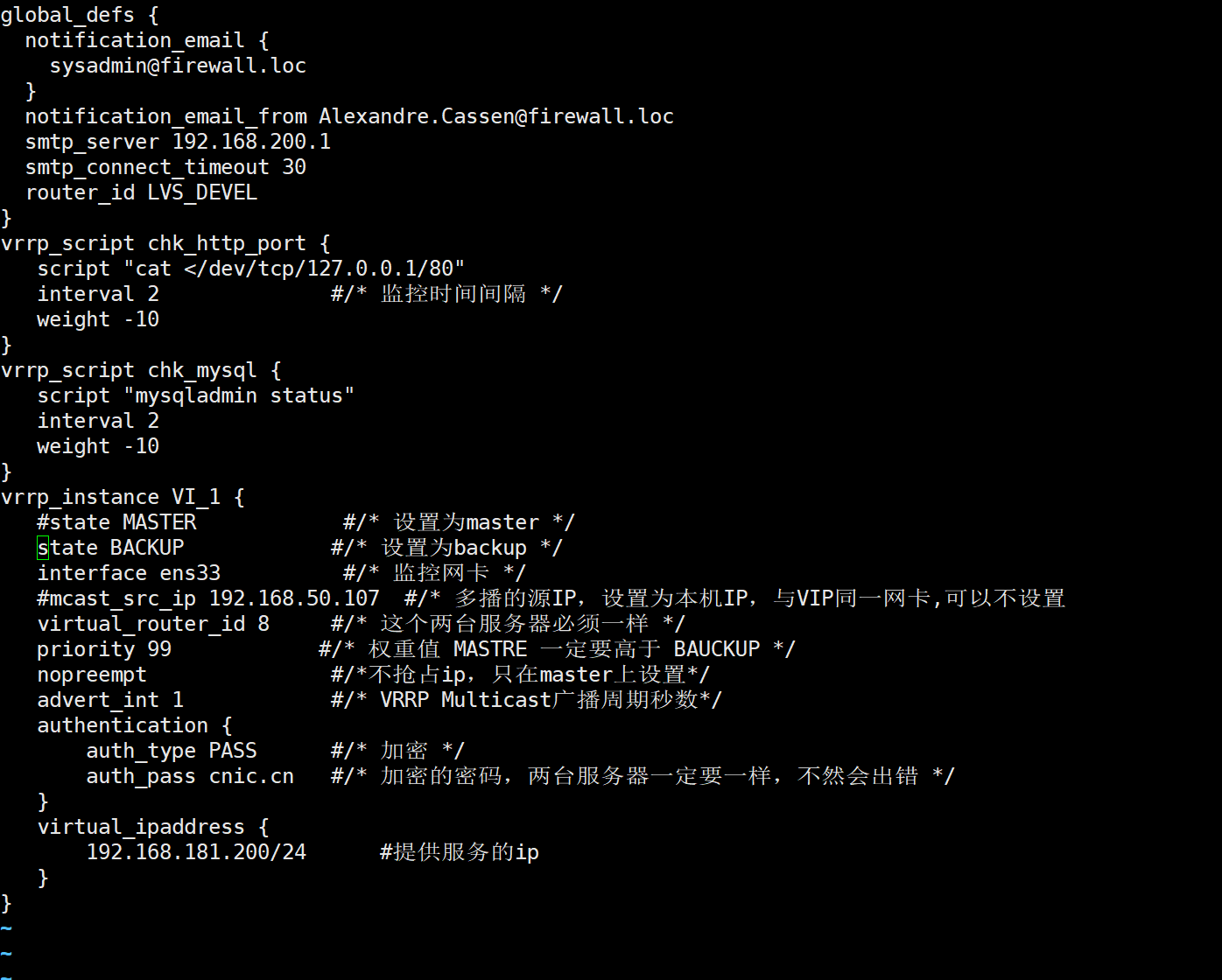
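The haproxy.cfg itself is only shown as a screenshot. The sketch below writes a comparable file, but every value in it is an assumption rather than the author's exact configuration. HAProxy runs on the masters next to an apiserver that already holds 6443, so its frontend cannot bind 6443 as well. The frontend port defaults to 8443 here, and clients reach the load balancer through the VIP on whichever port you choose. The stats listener on 8080 with the /status page and the timeouts are also assumptions. write_haproxy_cfg is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical generator for /etc/haproxy/haproxy.cfg: a TCP frontend that
# spreads apiserver traffic over the three masters, plus a stats page.
# Frontend port (default 8443) and stats port 8080 are assumptions.
write_haproxy_cfg() {
  local out="${1:-/etc/haproxy/haproxy.cfg}" frontend_port="${2:-8443}"
  cat > "$out" <<EOF
global
    log /dev/log local0
    maxconn 4000
    daemon

defaults
    mode tcp
    log global
    option tcplog
    timeout connect 5s
    timeout client 1m
    timeout server 1m

frontend k8s-apiserver
    bind *:${frontend_port}
    default_backend k8s-masters

backend k8s-masters
    balance roundrobin
    option tcp-check
    server master1 192.168.181.101:6443 check
    server master2 192.168.181.102:6443 check
    server master3 192.168.181.103:6443 check

listen stats
    bind *:8080
    mode http
    stats enable
    stats uri /status
EOF
}

# Usage (as root), validating the file before restarting the service:
#   write_haproxy_cfg && haproxy -c -f /etc/haproxy/haproxy.cfg && systemctl restart haproxy
```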

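keepalived: in /etc/keepalived/keepalived.conf, set state and priority on each of the three masters (one MASTER, two BACKUP). The VIP 192.168.181.200 does not have to be added with ip addr add. Once keepalived is started with systemctl start keepalived, the VIP is reachable directly.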
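A keepalived.conf along these lines might be generated as follows. Only the MASTER/BACKUP states, the differing priorities and the VIP 192.168.181.200 come from this setup. The interface name (ens33), virtual_router_id 51, the password and the haproxy health check are assumptions. The check uses killall from psmisc, which was installed in 2.1.8. With weight -20, a MASTER at priority 100 drops to 80 when haproxy stops, so a BACKUP at 90 takes over the VIP. write_keepalived_conf is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical generator for /etc/keepalived/keepalived.conf.
# Arguments: state (MASTER or BACKUP), priority, interface, output file.
# Interface ens33, router id 51 and the password are assumptions.
write_keepalived_conf() {
  local state="${1:-MASTER}" priority="${2:-100}" iface="${3:-ens33}"
  local out="${4:-/etc/keepalived/keepalived.conf}"
  cat > "$out" <<EOF
vrrp_script chk_haproxy {
    script "/usr/bin/killall -0 haproxy"
    interval 2
    weight -20
}

vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass k8s-ha
    }
    virtual_ipaddress {
        192.168.181.200
    }
    track_script {
        chk_haproxy
    }
}
EOF
}

# Usage: master1 MASTER/100, master2 BACKUP/90, master3 BACKUP/80, e.g.
#   write_keepalived_conf BACKUP 90 ens33 && systemctl enable --now keepalived
```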

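2.4 Initialize the cluster

Reference: https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/high-availability/

2.4.1 The kubeadm-config.yaml file for the master nodes

For a detailed explanation of the options, see: https://blog.51cto.com/foxhound/2517491?source=dra

Every master node needs this file, but the other masters only use it for the images; on those nodes the IP can be replaced with the node's own IP. The file is used to pull the apiserver, controller-manager and other images.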

```yaml
apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: 7t2weq.bjbawausm0jaxury
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.181.101 # master1 node IP
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: master1
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  certSANs:
  - 192.168.181.200 # VIP
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: 192.168.181.200:6443 # VIP
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.19.3
networking:
  dnsDomain: cluster.local
  podSubnet: 172.168.0.0/16
  serviceSubnet: 10.96.0.0/12
scheduler: {}
```

The configuration above is written for k8s v1.19.3 (kubeadm API v1beta2). If a later release makes it outdated, convert it with:

kubeadm config migrate --old-config kubeadm-config.yaml --new-config new.yaml

2.4.2 Pull the images

The configuration file above is used to pull the apiserver, controller-manager and other images:

kubeadm config images pull --config ./kubeadm-config.yaml

Enable kubelet at boot on all nodes:

systemctl enable --now kubelet

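2.4.3 Initialize the Master01 node

Initialize only on Master01. Initialization generates the corresponding certificates and configuration files under /etc/kubernetes; the other master nodes then simply join Master01.

kubeadm init --config ./kubeadm-config.yaml --upload-certs

To initialize without a configuration file (skipping the file from 2.4.1 and the commands above), run instead: kubeadm init --control-plane-endpoint "LOAD_BALANCER_DNS:LOAD_BALANCER_PORT" --upload-certs

If initialization fails, reset and then initialize again. The reset command is kubeadm reset

A successful initialization prints output like the screenshot below. The first join command adds the other master nodes to the cluster, and the second one adds worker nodes.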

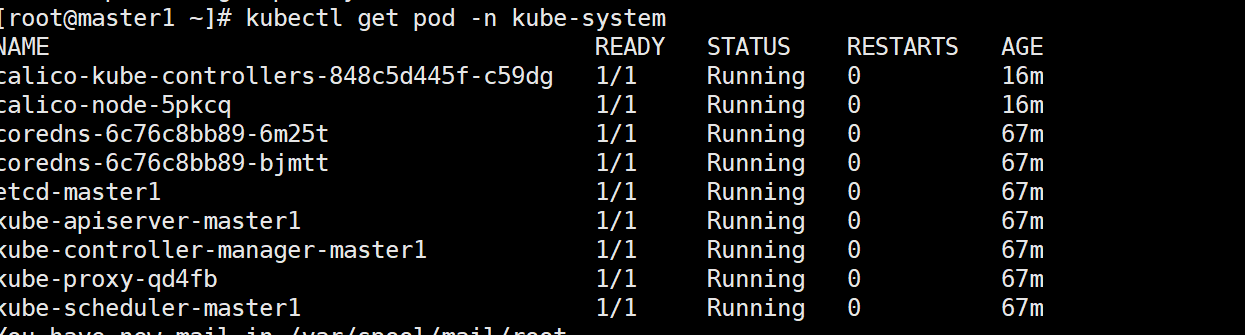
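kubectl on master1 needs a kubeconfig before the kubectl commands that follow will work. kubeadm init prints the steps for this in its success output; as root, export KUBECONFIG=/etc/kubernetes/admin.conf is an alternative. Below they are wrapped into a function with the paths as parameters. setup_kubeconfig is a hypothetical name.

```bash
#!/usr/bin/env bash
# Copy the admin kubeconfig written by kubeadm init so that kubectl works
# for the current user (the same three steps kubeadm init prints).
setup_kubeconfig() {
  local admin_conf="${1:-/etc/kubernetes/admin.conf}" home="${2:-$HOME}"
  mkdir -p "$home/.kube"
  cp -i "$admin_conf" "$home/.kube/config"
  chown "$(id -u):$(id -g)" "$home/.kube/config"
}

# Usage on master1: setup_kubeconfig && kubectl get nodes
```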

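After initialization, kubectl get pod -n kube-system lists the system pods.

Pods stuck in Pending, such as CoreDNS, are waiting for a network plugin, which is not installed yet (this example uses Calico).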

2.4.4 Inspect the certs generated by initialization

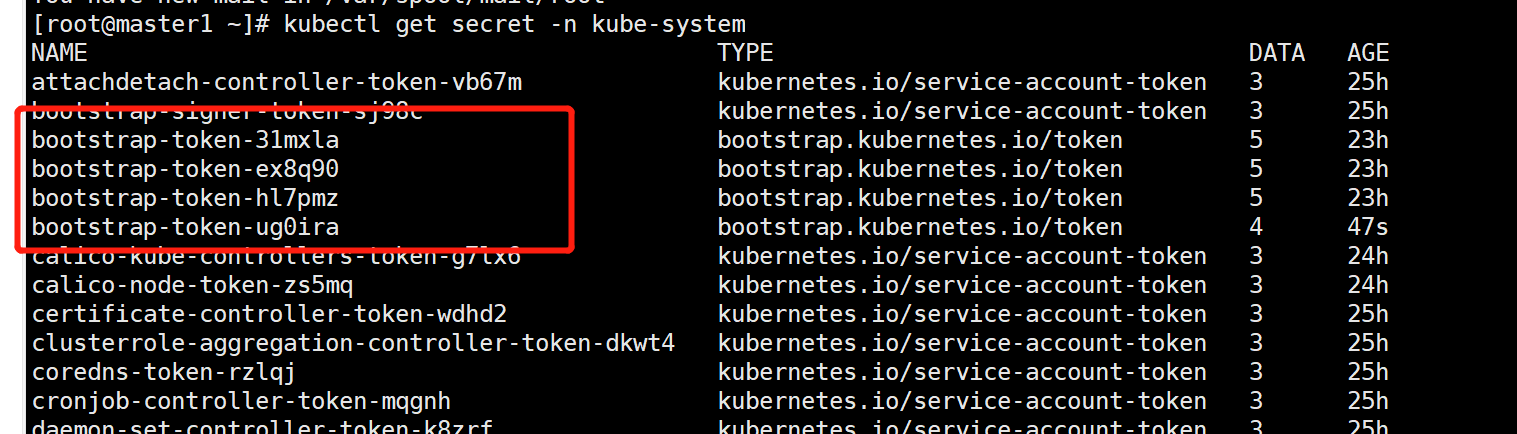

命令kubectl get secret -n kube-system查看系统上的secret

命令kubectl get secret -n kube-system bootstrap-token-ug0ira -oyaml以yaml文件展开

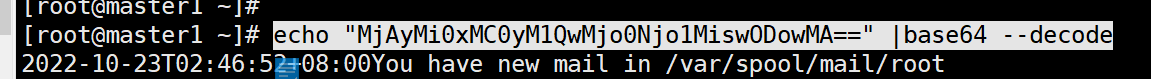

命令echo "MjAyMi0xMC0yM1QwMjo0Njo1MiswODowMA==" |base64 --decode以base64解码查看其过期时间

如果过期,使用kubeadm init phase upload-certs --upload-certs命令重新生成
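The decode-and-compare step can be scripted. This is a minimal sketch that assumes GNU coreutils (base64 --decode, and date -d, which parses this ISO 8601 timestamp). decode_expiration and token_expired are hypothetical names.

```bash
#!/usr/bin/env bash
# Hypothetical helper: decode the base64 "expiration" value of a
# bootstrap-token secret.
decode_expiration() {
  printf '%s' "$1" | base64 --decode
}

# Hypothetical helper: succeed (exit 0) if the encoded expiration lies at or
# before "now" (epoch seconds, default: the current time).
token_expired() {
  local encoded="$1" now="${2:-$(date +%s)}" exp
  exp="$(date -d "$(decode_expiration "$encoded")" +%s)" || return 2
  [ "$exp" -le "$now" ]
}

# Usage on master1 (token name from the example above):
#   enc=$(kubectl -n kube-system get secret bootstrap-token-ug0ira -o jsonpath='{.data.expiration}')
#   token_expired "$enc" && echo "expired: run kubeadm token create"
```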

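2.4.5 Join the other master nodes

Run the following on master01, then run the resulting join command on each of the other master nodes. Master nodes also need the certificate key from --upload-certs. The first command prints it, and it is passed to the join command together with --control-plane, as in the last line below.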

$ kubeadm init phase upload-certs --upload-certs

$ kubeadm token generate

ex8q90.863kcm1pqznic4fd

$ kubeadm token create ex8q90.863kcm1pqznic4fd --print-join-command --ttl=0

kubeadm join 192.168.181.200:6443 --token 31mxla.v069bj1bisrky1e0 --discovery-token-ca-cert-hash sha256:f398b1b4dcd0387129b9b13574575e5f65dc752fc3631e08fc28e4ab822d4d3f --control-plane --certificate-key d662b7913124da37aabdf1b3f51158a36555fa9a79466c997aa6b4b8bac110a0
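kubeadm token create --print-join-command prints a worker join command. For a master node it has to be extended with --control-plane and the certificate key from kubeadm init phase upload-certs --upload-certs. This is a minimal sketch of that string manipulation. control_plane_join is a hypothetical name, and the usage line assumes the key is the last line printed by upload-certs.

```bash
#!/usr/bin/env bash
# Hypothetical helper: turn a worker join command into a control-plane join
# by appending --control-plane and the certificate key.
control_plane_join() {
  local join_cmd="$1" cert_key="$2"
  # The key printed by upload-certs is 32 bytes, i.e. 64 lowercase hex digits.
  if ! printf '%s' "$cert_key" | grep -Eq '^[0-9a-f]{64}$'; then
    echo "control_plane_join: invalid certificate key" >&2
    return 1
  fi
  # Strip trailing whitespace (the printed command may end with a space).
  join_cmd="${join_cmd%"${join_cmd##*[![:space:]]}"}"
  printf '%s --control-plane --certificate-key %s\n' "$join_cmd" "$cert_key"
}

# Usage on master01:
#   key=$(kubeadm init phase upload-certs --upload-certs | tail -n1)
#   control_plane_join "$(kubeadm token create --print-join-command)" "$key"
```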

2.4.6 Join the worker nodes

$ kubeadm token generate

ex8q90.863kcm1pqznic4fd

$ kubeadm token create ex8q90.863kcm1pqznic4fd --print-join-command --ttl=0

kubeadm join 192.168.181.200:6443 --token 31mxla.v069bj1bisrky1e0 --discovery-token-ca-cert-hash sha256:f398b1b4dcd0387129b9b13574575e5f65dc752fc3631e08fc28e4ab822d4d3f

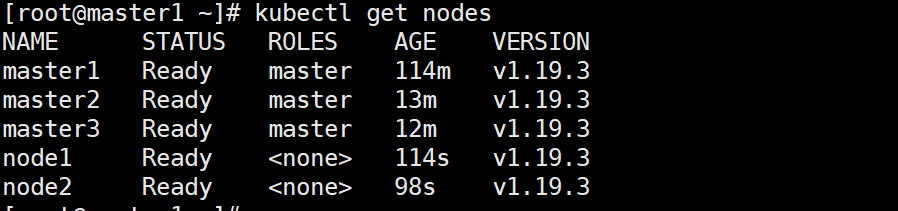

After the nodes have joined, run kubectl get nodes on master1.

To add a new node to the cluster later, see: https://blog.csdn.net/qq_26129413/article/details/115179285
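If the join command is lost, you can recompute the --discovery-token-ca-cert-hash value on master1 from the cluster CA. It is the SHA-256 of the CA certificate's public key. The pipeline below follows the one in the kubeadm join documentation, wrapped in a function. ca_cert_hash is a hypothetical name, and it assumes an RSA CA key, which is what kubeadm generates by default.

```bash
#!/usr/bin/env bash
# Compute the --discovery-token-ca-cert-hash value: the SHA-256 of the CA's
# DER-encoded public key (assumes an RSA CA key, the kubeadm default).
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Usage on master1 (prefix the result with "sha256:" in the join command):
#   echo "sha256:$(ca_cert_hash)"
```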

2.5Calico安装

master1上操作所有加入集群的节点都会有这个pod

1.下载配置文件(会在root目录下生成calico.yaml)

curl https://docs.projectcalico.org/manifests/calico.yaml -O

2.vim calico.yaml然后输入/image可查看对应calico.yaml版本

修改url中指定版本进入网址查看支持的k8s版本:https://projectcalico.docs.tigera.io/archive/v3.20/getting-started/kubernetes/requirements

3.重新下载当前机器k8s支持的calico版本配置文件:curl https://docs.projectcalico.org/v3.19/manifests/calico.yaml -O

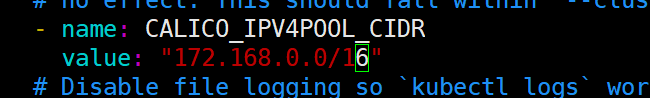

4.找到kubeadm-config.yaml中的podSubnet对应的ip,放在calico.yaml的name/value处

在vi里面搜一下“/192.168”就可以找到这个地方,并取消注释如下图

5.创建calico:kubectl apply -f calico.yaml

6.再次查看K8s组件,就可以看到全部起来了:kubectl get pod -n kube-system
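Step 4 can also be done without an editor. This is a minimal sketch that assumes GNU sed and the stock commented CALICO_IPV4POOL_CIDR lines of the v3.19 manifest. Check the exact text in your calico.yaml first, since other versions may differ. set_calico_pool_cidr is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: uncomment CALICO_IPV4POOL_CIDR in calico.yaml and set
# it to the podSubnet. Assumes the stock commented lines
#   "# - name: CALICO_IPV4POOL_CIDR" and "#   value: "192.168.0.0/16""
set_calico_pool_cidr() {
  local manifest="$1" cidr="${2:-172.168.0.0/16}"
  sed -i \
    -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
    -e 's|#   value: "192.168.0.0/16"|  value: "'"$cidr"'"|' \
    "$manifest"
}

# Usage before applying the manifest:
#   set_calico_pool_cidr calico.yaml 172.168.0.0/16 && grep -A1 CALICO_IPV4POOL_CIDR calico.yaml
```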

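2.6 Deploy metrics-server

This only needs to run on master1. It creates one pod, and that pod must be able to reach the other nodes over the network.

metrics-server is a cluster-wide aggregator of resource usage data. It only exposes the data and does not store it. Its focus is implementing the resource metrics API, which provides CPU and memory usage. The data it collects is consumed inside the cluster, for example by kubectl top and the Horizontal Pod Autoscaler (HPA).

Download the latest manifest from the official releases: https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml. The manifest used here (v0.6.1, with local changes marked in comments) is: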

```yaml
apiVersion: v1
kind: ServiceAccount # identity used by metrics-server to access k8s resources
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole # cluster role defining which permissions are granted
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - nodes/metrics
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding # binds the service account to the role
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      hostNetwork: true # needed on 1.19+ here, otherwise top cannot show all nodes/pods; with it the pod uses the host network to reach every node
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,Hostname,InternalDNS,ExternalDNS,ExternalIP
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        - --kubelet-insecure-tls # added line
        # image switched to the Aliyun mirror
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server:v0.6.1
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 4443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          initialDelaySeconds: 20
          periodSeconds: 10
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
        securityContext:
          allowPrivilegeEscalation: false
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100
```

Create the resources with kubectl apply -f metrics.yaml (the manifest above, saved as metrics.yaml).

Check that the pod is running with kubectl get pods -n kube-system.

2.7Dashboard部署

master1安装

官方GitHub,可以查看最新的版本:https://github.com/kubernetes/dashboard

1 安装

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.4/aio/deploy/recommended.yaml

如果报错的话就要先下载配置文件,上传到Master1服务器再安装:

a、在浏览器打开链接地址,复制内容:

https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.4/aio/deploy/recommended.yaml

b、也可以直接用gitbub里面的:https://github.com/kubernetes/dashboard/tree/master/aio/deploy

再执行命令来安装:kubectl apply -f ./recommended.yaml

2 查看安装结果

输入命令:kubectl get po -n kubernetes-dashboard

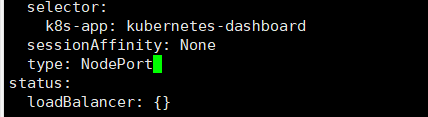

3、先更改dashboard的svc为"type: NodePort",这样可以外界先访问:kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

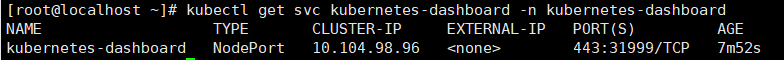

4、查看dashboard端口号:kubectl get svc kubernetes-dashboard -n kubernetes-dashboard

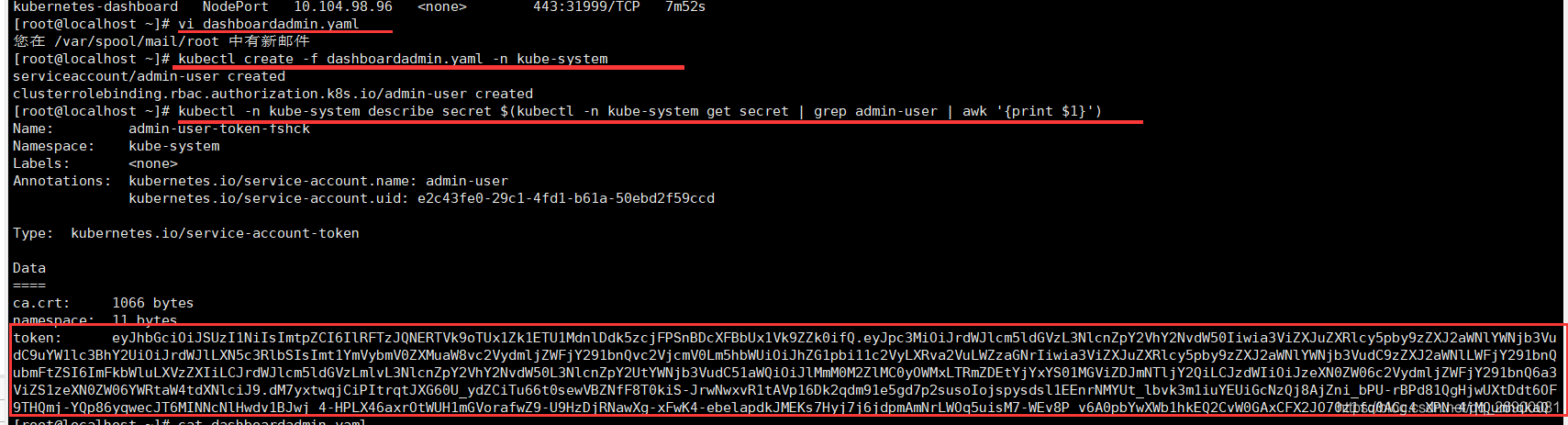

5、生成访问Dashboard的Token(创建超级管理员用户,用来访问dashboard): vi dashboardadmin.yaml

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system
```

Then run:

kubectl create -f dashboardadmin.yaml -n kube-system

kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

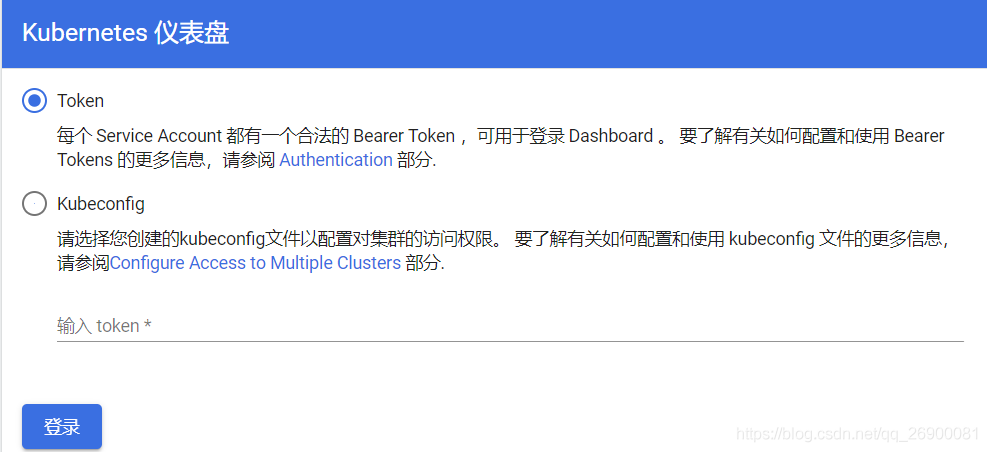

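6. Open the dashboard in a browser through the VIP: https://192.168.181.200:31999/. In my setup the page could not be reached through the keepalived VIP, but it could through a node's real IP (I currently suspect an HTTP vs. HTTPS issue).

Log in with the Token option and enter the token obtained above.

7. Switch kube-proxy to IPVS mode. The IPVS setting was commented out when the cluster was initialized, so change it by hand and set mode: "ipvs":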

kubectl edit cm kube-proxy -n kube-system

Update the kube-proxy pods so that they pick up the change:

kubectl patch daemonset kube-proxy -p "{\"spec\":{\"template\":{\"metadata\":{\"annotations\":{\"date\":\"`date +'%s'`\"}}}}}" -n kube-system

Verify the kube-proxy mode (it should print ipvs): curl 127.0.0.1:10249/proxyMode
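The mode change can also be scripted instead of edited interactively. kubeadm leaves the setting as mode: "", which kube-proxy treats as iptables on Linux. This is a minimal sketch that assumes GNU sed. set_ipvs_mode is a hypothetical filter that rewrites only the line consisting of mode: "..." inside the ConfigMap's config.conf data. kubectl rollout restart is an alternative to the annotation patch above.

```bash
#!/usr/bin/env bash
# Hypothetical filter: read the kube-proxy ConfigMap YAML on stdin and set the
# proxy mode to ipvs, keeping the line's indentation. Keys such as
# detectLocalMode are untouched because the line must start with "mode:".
set_ipvs_mode() {
  sed -E 's/^([[:space:]]*)mode: "[^"]*"[[:space:]]*$/\1mode: "ipvs"/'
}

# Usage on master1:
#   kubectl -n kube-system get cm kube-proxy -o yaml | set_ipvs_mode | kubectl replace -f -
#   kubectl -n kube-system rollout restart daemonset kube-proxy
```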


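2.8 Verify the cluster

1. List the components in all namespaces

kubectl get pods --all-namespaces

2. Check CPU and memory usage (kubectl top relies on the metrics-server deployed in 2.6):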

kubectl top po -n kube-system

浙公网安备 33010602011771号
